Hyperspatial and Multi-Source Water Body Mapping: A Framework to Handle Heterogeneities from Observations and Targets over Large Areas

Abstract

1. Introduction

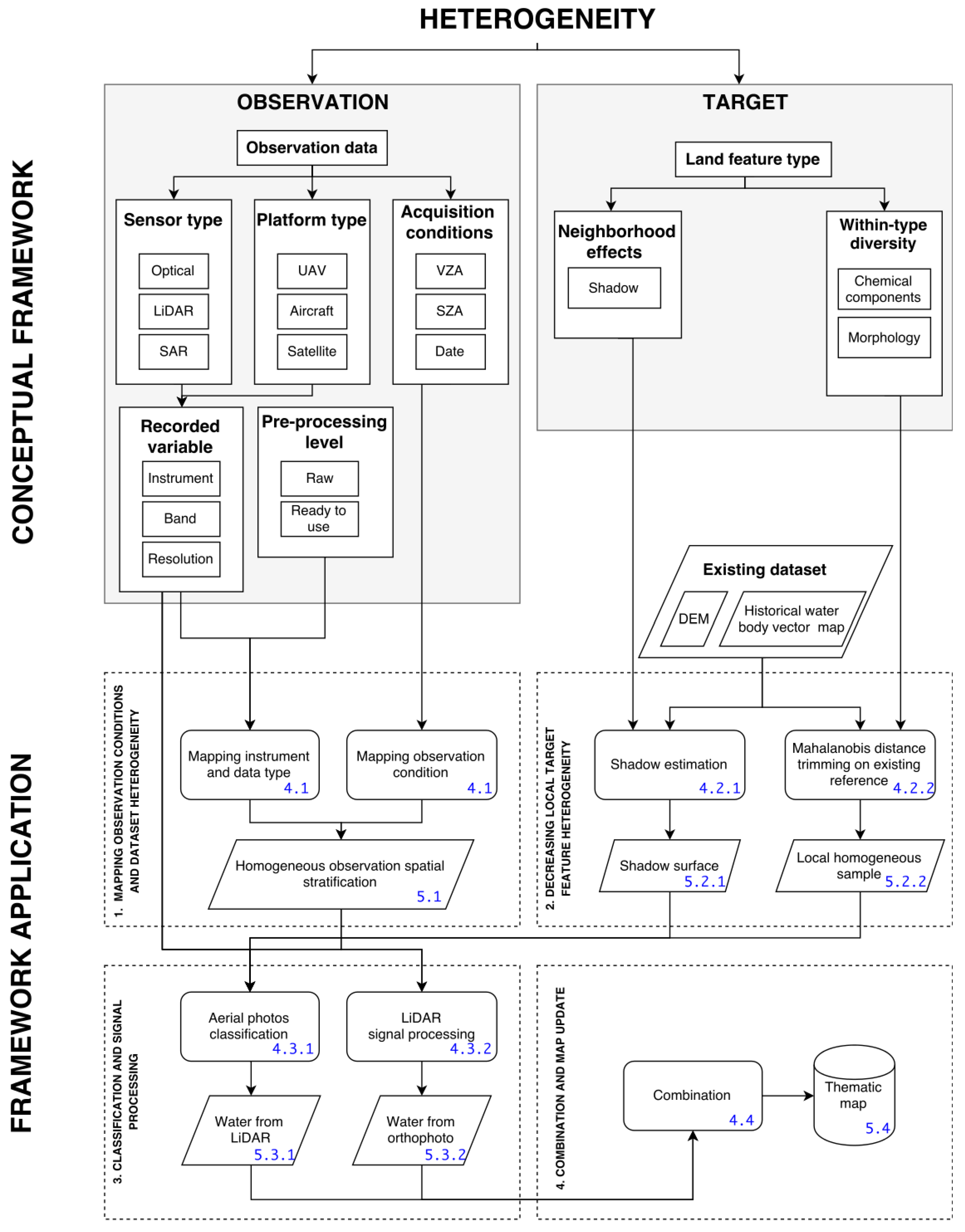

2. Conceptual Framework

3. Study Area

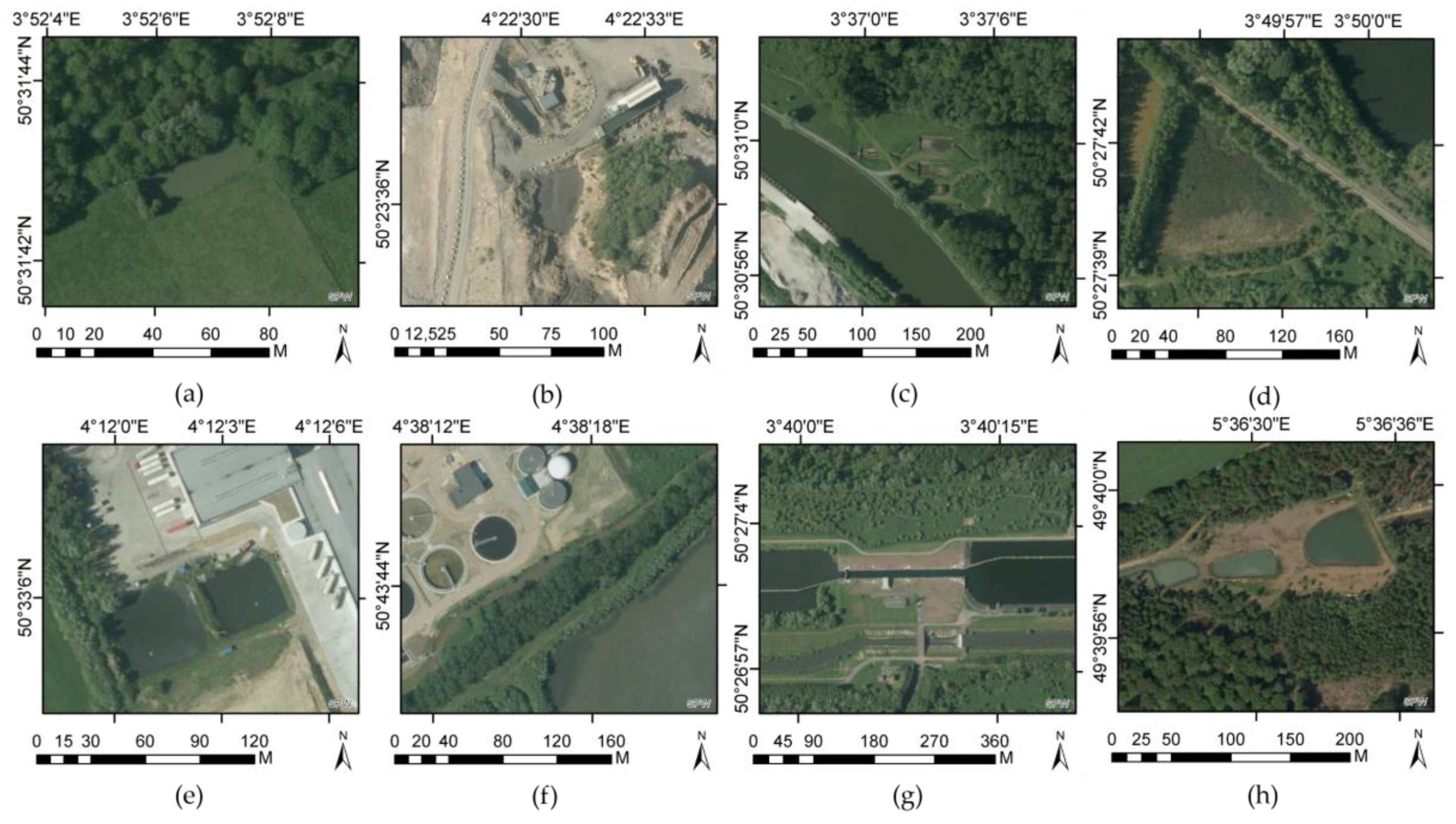

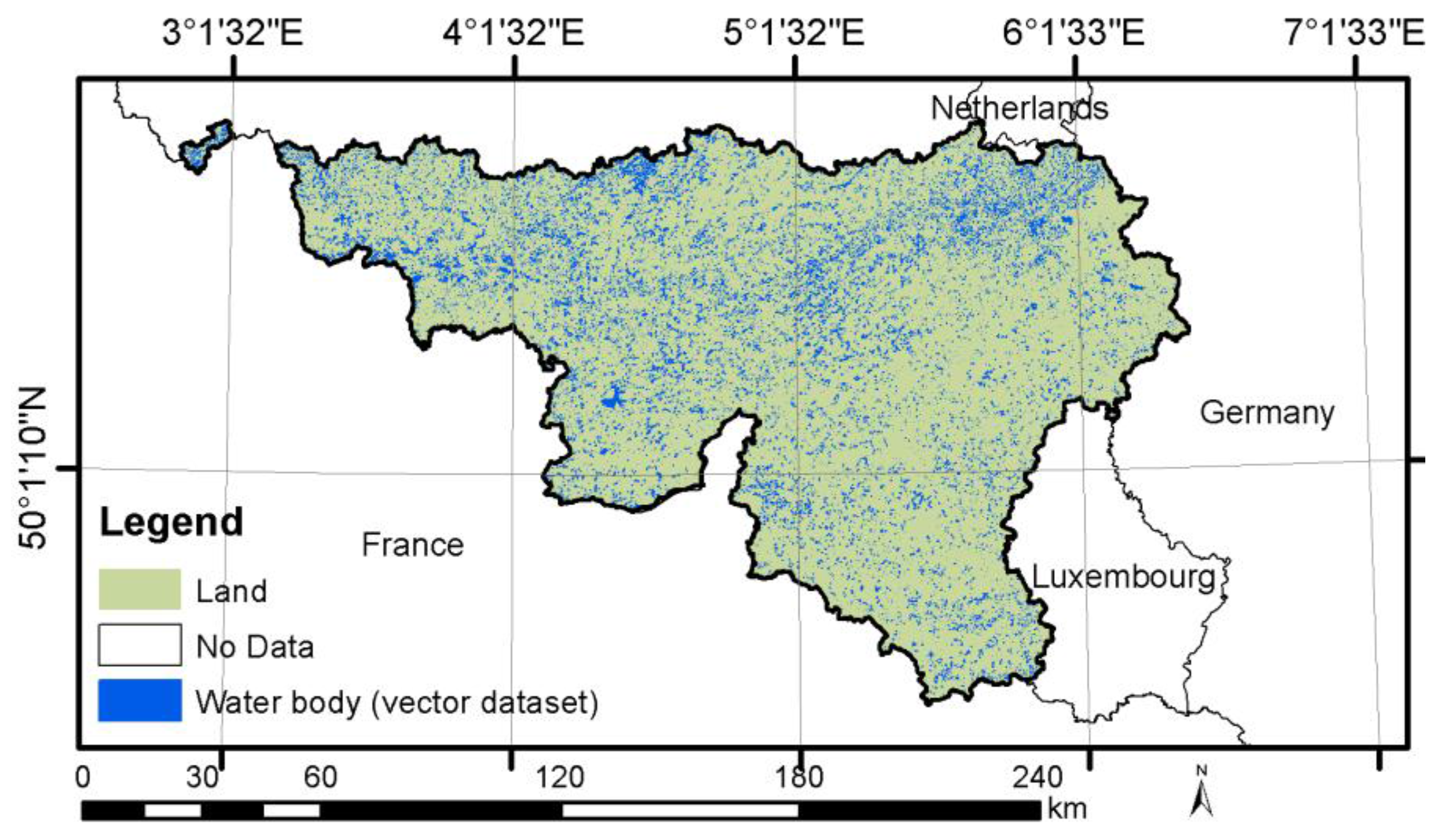

3.1. Study Region

3.2. Historical Water Body Vector Map

4. Framework Application

4.1. Mapping Observation Conditions and Dataset Heterogeneity

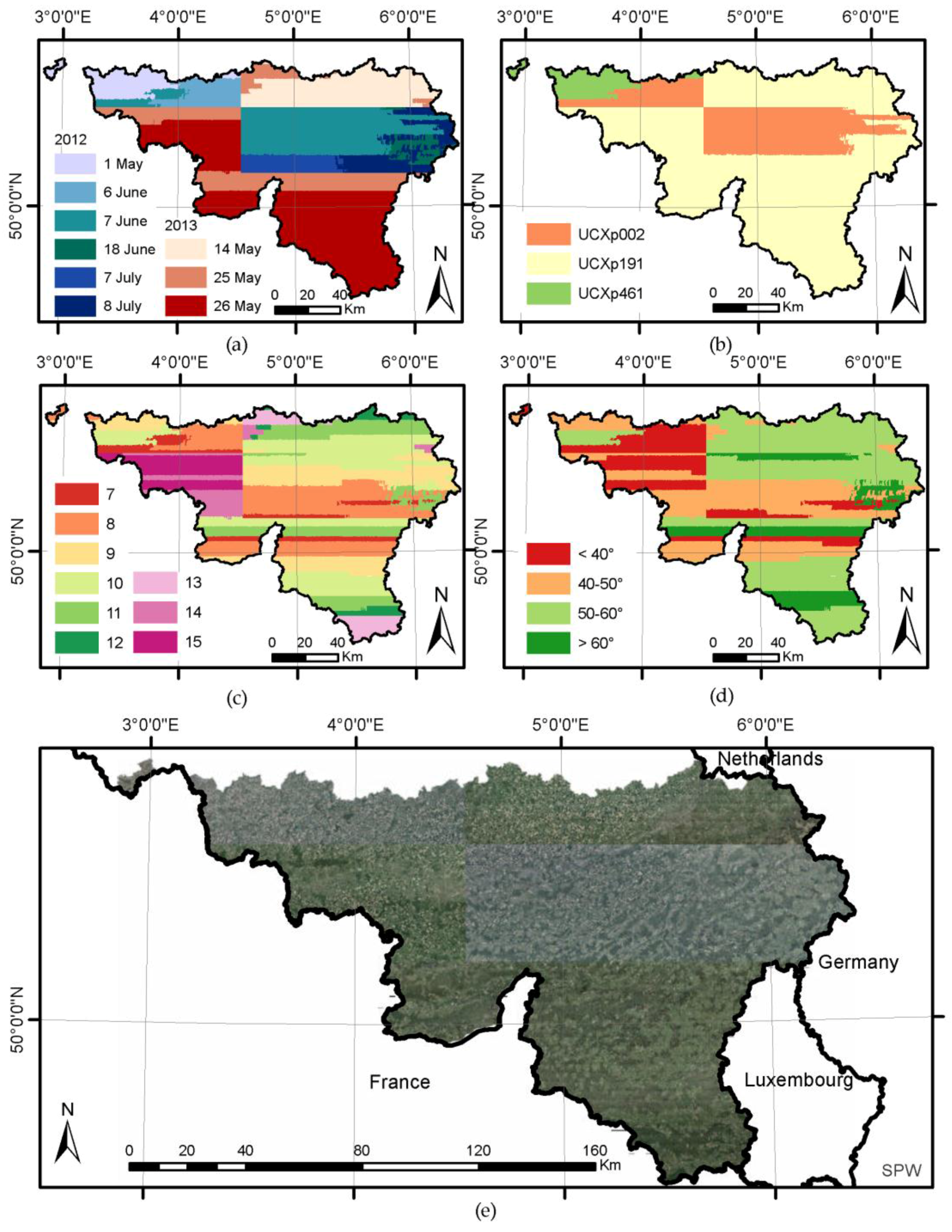

4.1.1. Aerial Photos

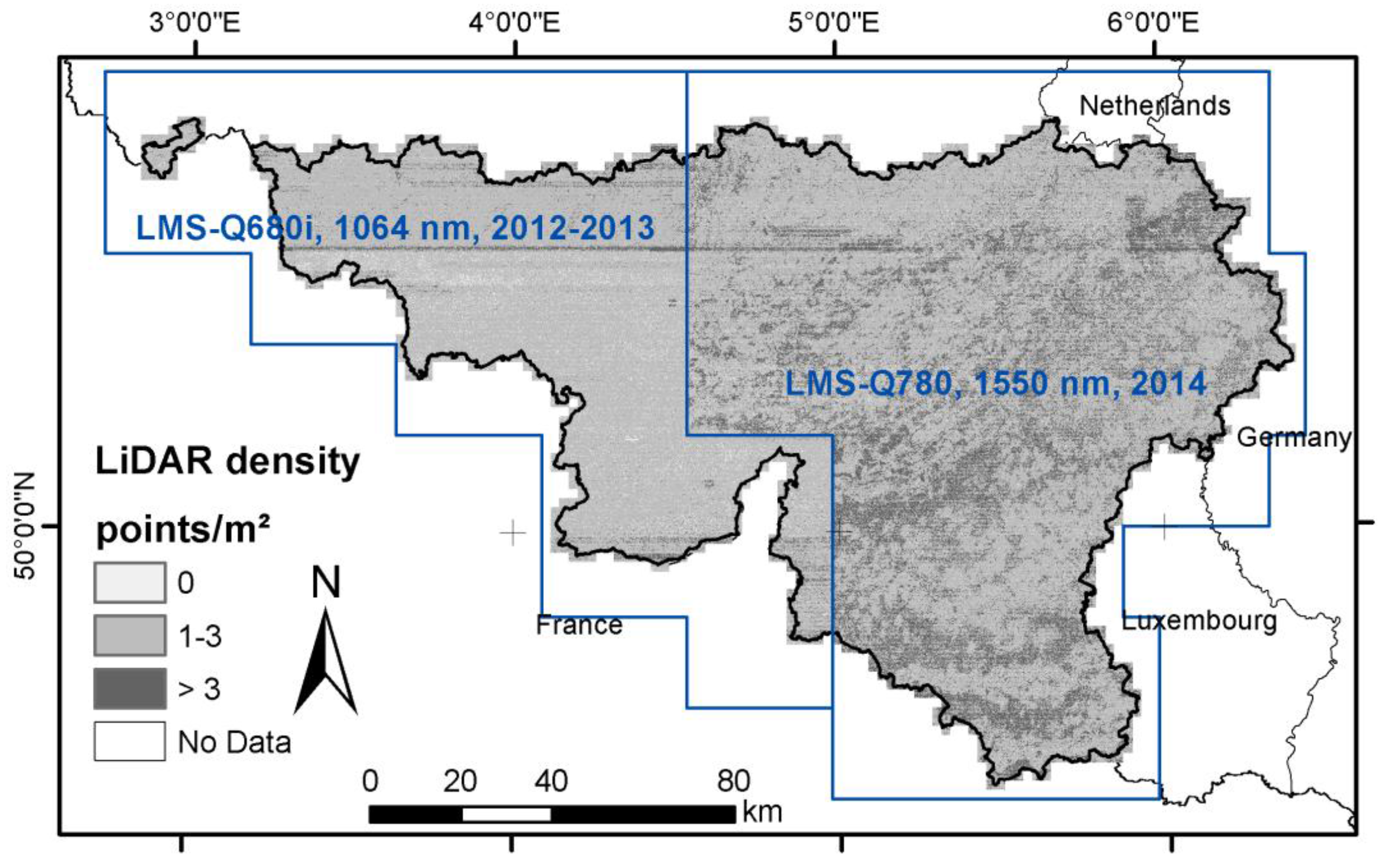

4.1.2. LiDAR

4.2. Decreasing Local Target Feature Heterogeneity

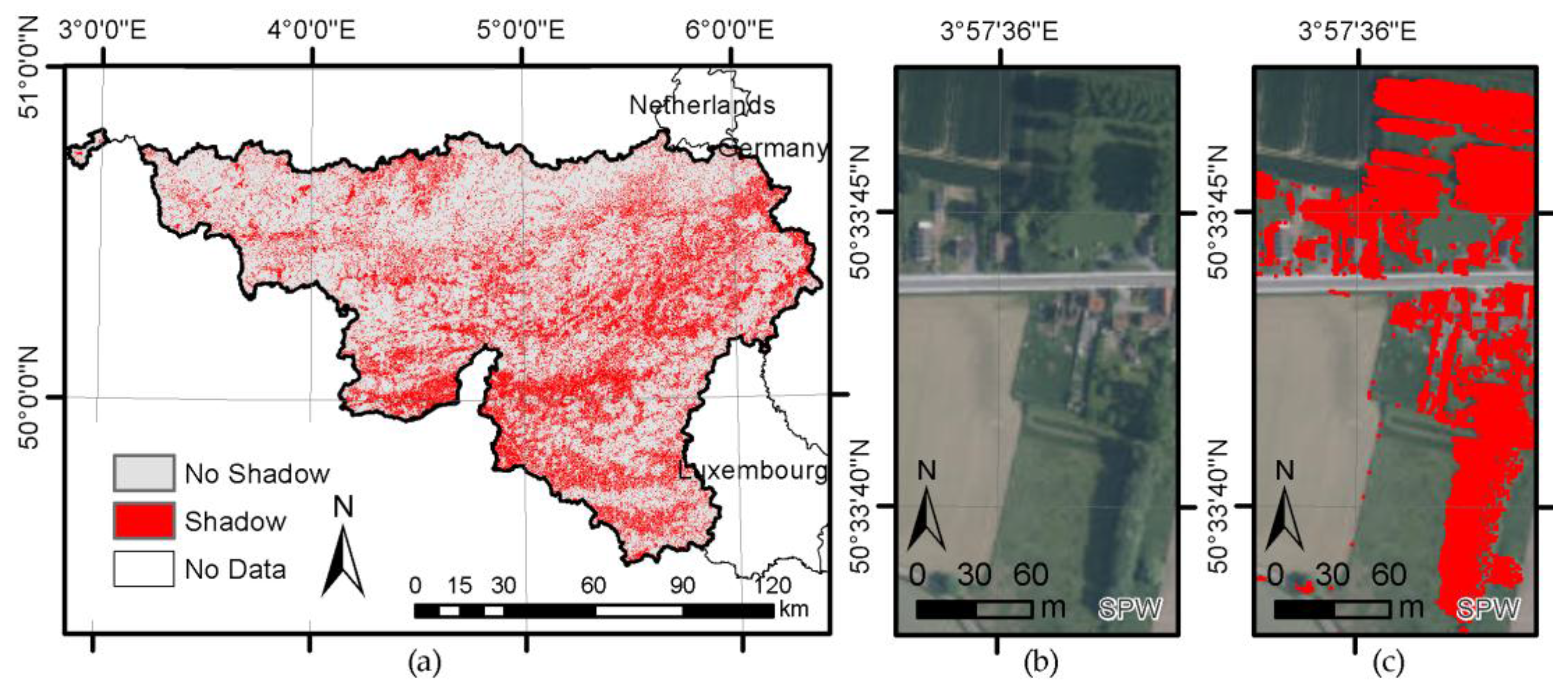

4.2.1. Neighborhood Effects: Shadow Estimation

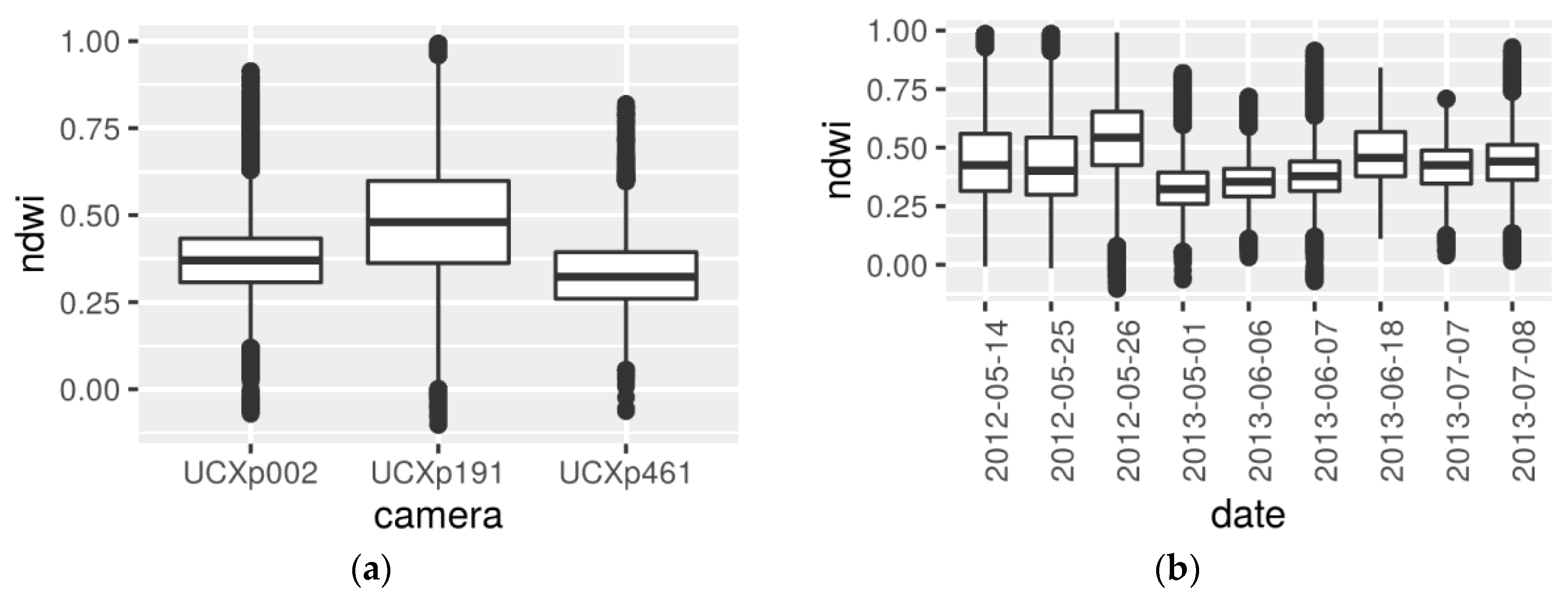

4.2.2. Within-Type Diversity

4.3. Classification and Signal Processing

4.3.1. Aerial Photo Classification

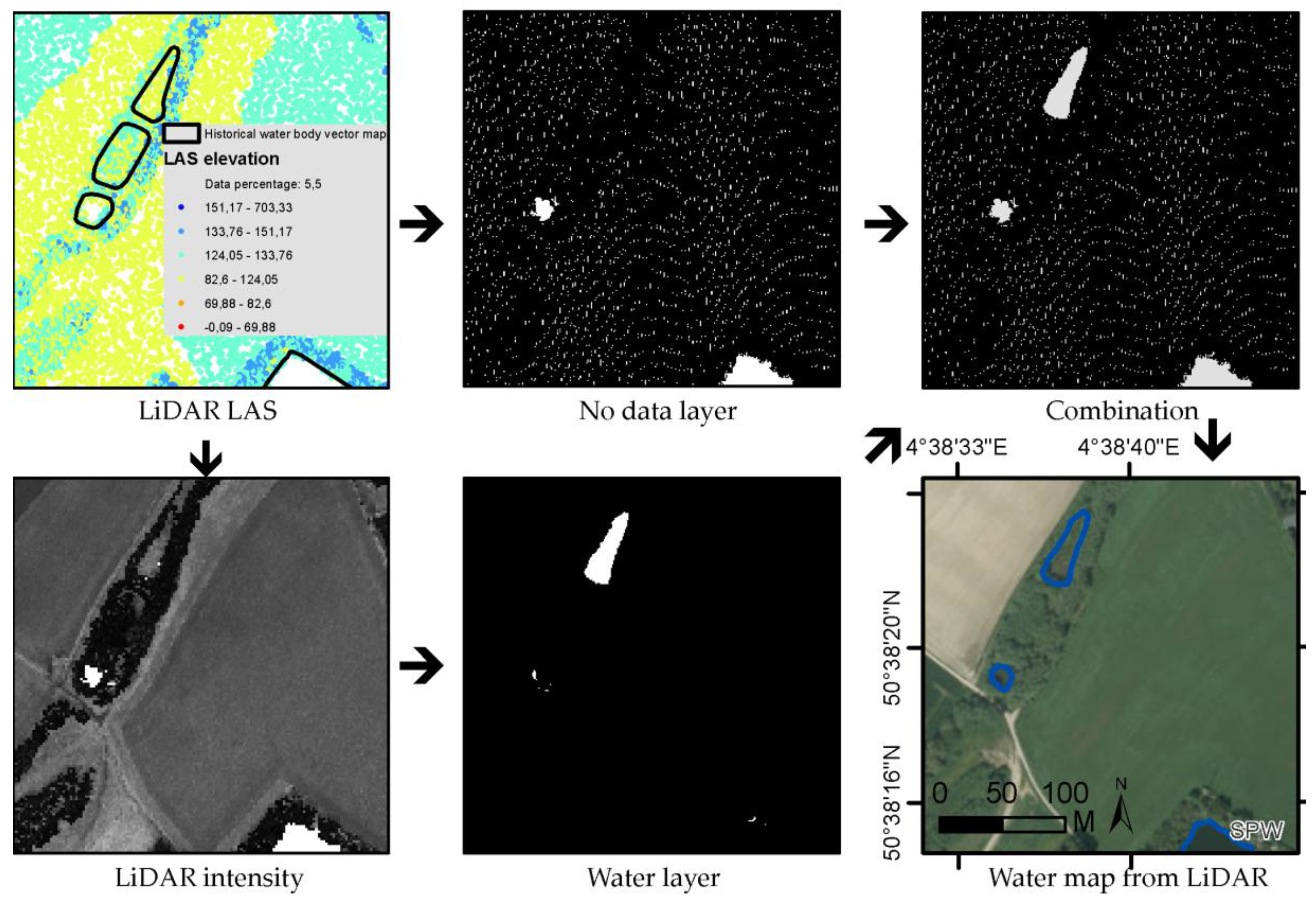

4.3.2. LiDAR Signal Processing

- The binary union of the water layer and the no-data layer is eroded (3 m) and then dilated (3 m). This removes most of the artifacts but also removes small water bodies.

- Recombining the resulting layer with the water layer (a union) recovers the small water bodies lost in the previous steps. The combined layer is then dilated (2 m), eroded (3 m) and finally dilated (1 m).

- Finally, the remaining buildings are removed using ancillary building data [68]. Because these ancillary data are not up to date, some buildings may remain.
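The cleaning sequence above can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes binary rasters stored as nested lists and a 1 m pixel size. It also assumes a square structuring element whose half-width is the distance divided by the pixel size, with pixels outside the raster ignored. The function names (`clean_lidar_water`, `erode`, `dilate`, `union`, `difference`) and the toy scene are hypothetical.

```python
from typing import Iterator, List

# Binary raster stored row by row: 1 = class present, 0 = absent (assumption).
Grid = List[List[int]]


def _radius(distance_m: float, pixel_size_m: float) -> int:
    """Convert a distance in metres into a window half-width in pixels."""
    return max(0, round(distance_m / pixel_size_m))


def _window(grid: Grid, i: int, j: int, r: int) -> Iterator[int]:
    """Yield pixels of the (2r+1) x (2r+1) square centred on (i, j).

    The window is clipped at the raster edges, i.e. outside pixels are ignored.
    """
    rows, cols = len(grid), len(grid[0])
    for y in range(max(0, i - r), min(rows, i + r + 1)):
        for x in range(max(0, j - r), min(cols, j + r + 1)):
            yield grid[y][x]


def dilate(grid: Grid, distance_m: float, pixel_size_m: float = 1.0) -> Grid:
    """Binary dilation: a pixel is set if any pixel of its window is set."""
    r = _radius(distance_m, pixel_size_m)
    return [[int(any(_window(grid, i, j, r))) for j in range(len(row))]
            for i, row in enumerate(grid)]


def erode(grid: Grid, distance_m: float, pixel_size_m: float = 1.0) -> Grid:
    """Binary erosion: a pixel is kept only if every pixel of its window is set."""
    r = _radius(distance_m, pixel_size_m)
    return [[int(all(_window(grid, i, j, r))) for j in range(len(row))]
            for i, row in enumerate(grid)]


def union(a: Grid, b: Grid) -> Grid:
    return [[int(x or y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def difference(a: Grid, b: Grid) -> Grid:
    """Pixels set in a but not in b."""
    return [[int(x and not y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def clean_lidar_water(water: Grid, nodata: Grid, buildings: Grid,
                      pixel_size_m: float = 1.0) -> Grid:
    """Hypothetical helper chaining the seven steps, then the building mask."""
    step = union(water, nodata)           # 1. union of water and no-data layers
    step = erode(step, 3, pixel_size_m)   # 2. erosion (3 m) removes small/thin blobs
    step = dilate(step, 3, pixel_size_m)  # 3. dilation (3 m) restores survivors
    step = union(step, water)             # 4. union with water layer recovers small ponds
    step = dilate(step, 2, pixel_size_m)  # 5. dilation (2 m)
    step = erode(step, 3, pixel_size_m)   # 6. erosion (3 m)
    step = dilate(step, 1, pixel_size_m)  # 7. dilation (1 m)
    return difference(step, buildings)    # drop footprints from ancillary data


def blank(n: int = 30) -> Grid:
    """Empty n x n raster (toy scene at 1 m pixels)."""
    return [[0] * n for _ in range(n)]


water, nodata, buildings = blank(), blank(), blank()
for i in range(4, 12):
    for j in range(4, 12):
        water[i][j] = 1  # 8 x 8 m pond
for i in range(22, 25):
    for j in range(22, 25):
        water[i][j] = 1  # 3 x 3 m pond
nodata[4][25] = 1        # isolated no-data artifact
buildings[6][6] = 1      # hypothetical building footprint
result = clean_lidar_water(water, nodata, buildings)
```

In this toy scene, steps 2 and 3 remove the isolated no-data pixel. The 3 m erosion also deletes the 3 × 3 m pond, but the union in step 4 restores it and the pond survives steps 5 to 7. With NumPy rasters, `scipy.ndimage.binary_erosion` and `scipy.ndimage.binary_dilation` would replace the nested loops. Their default edge handling differs from the clipped windows used here.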

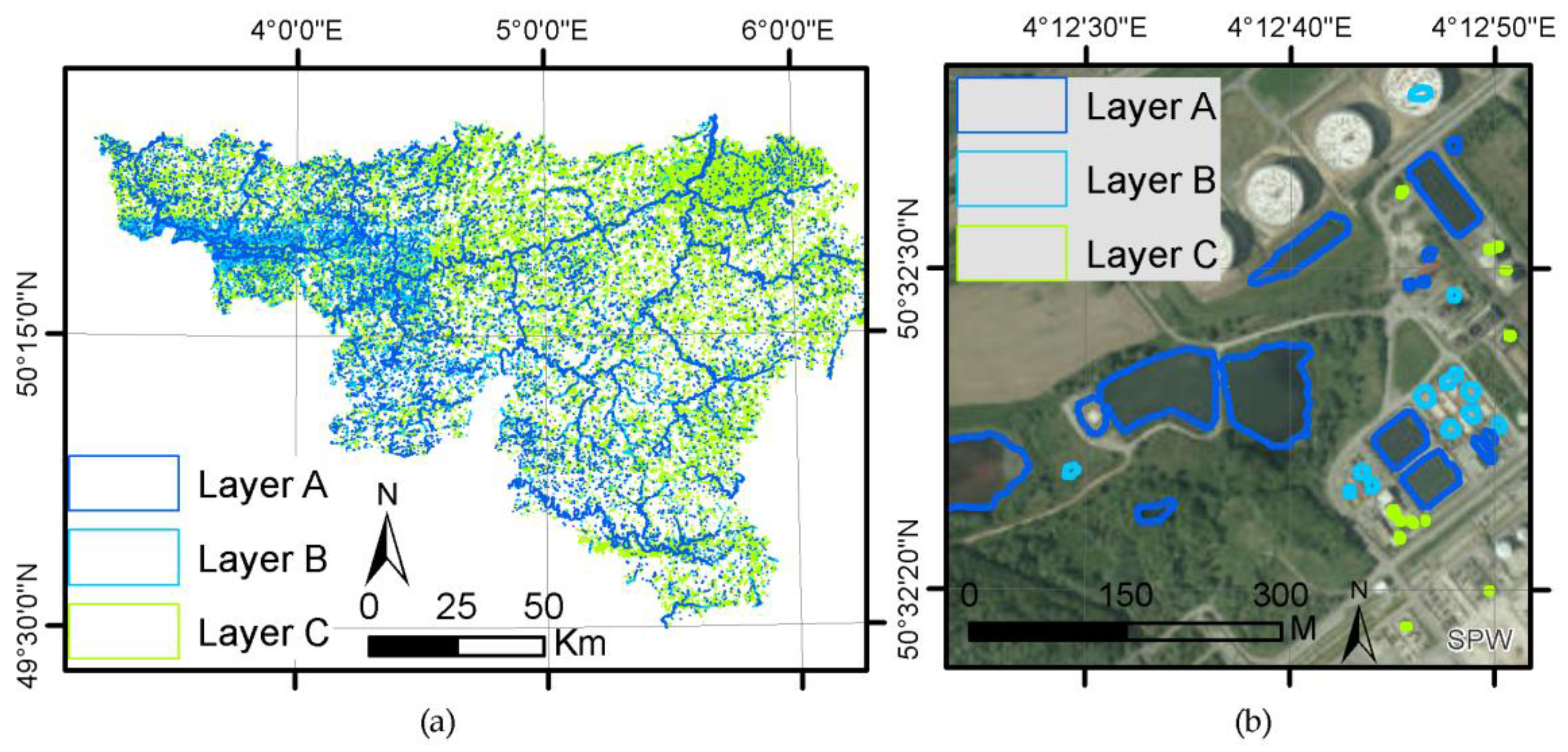

4.4. Combination and Map Update

4.5. Validation

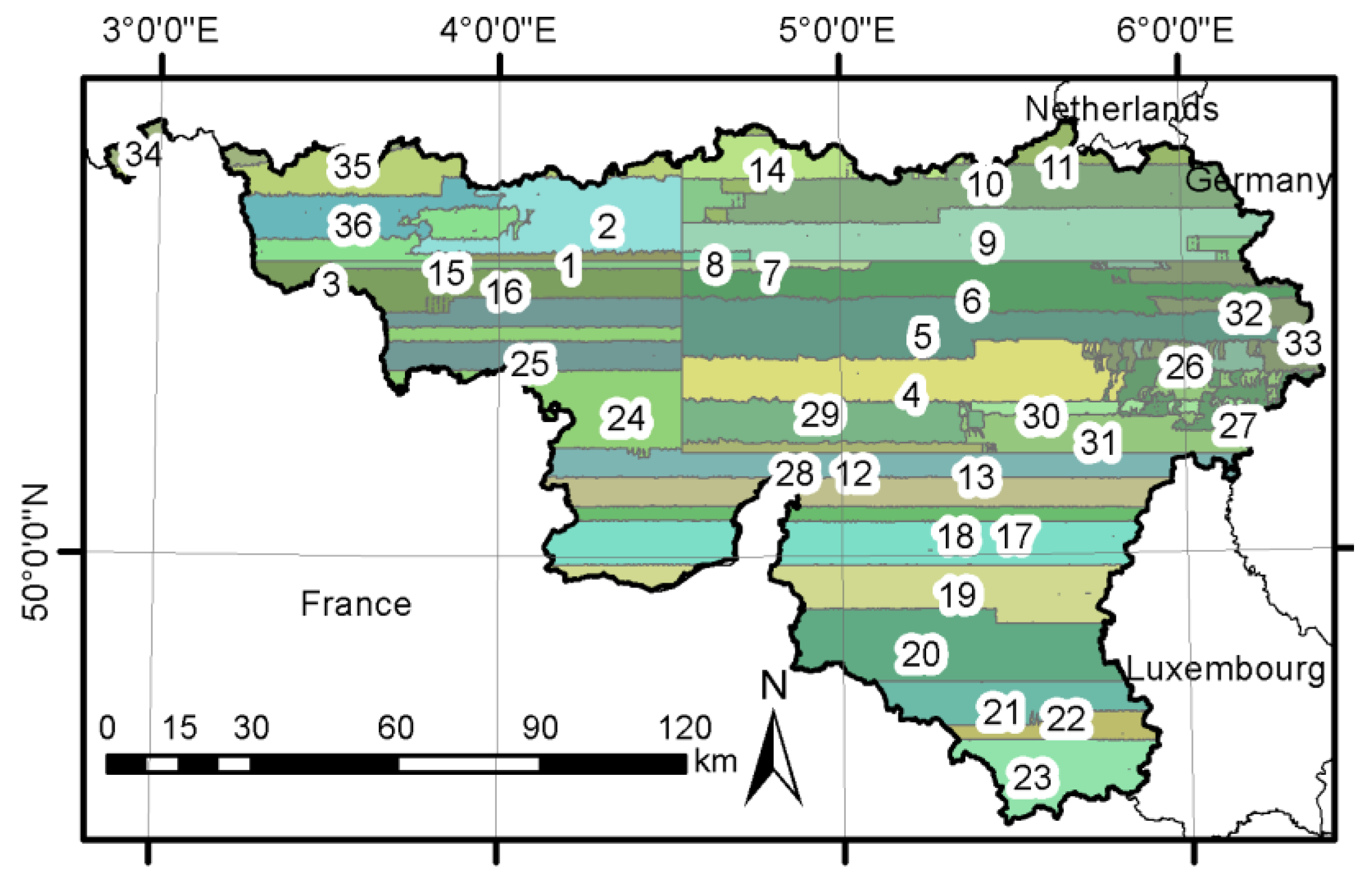

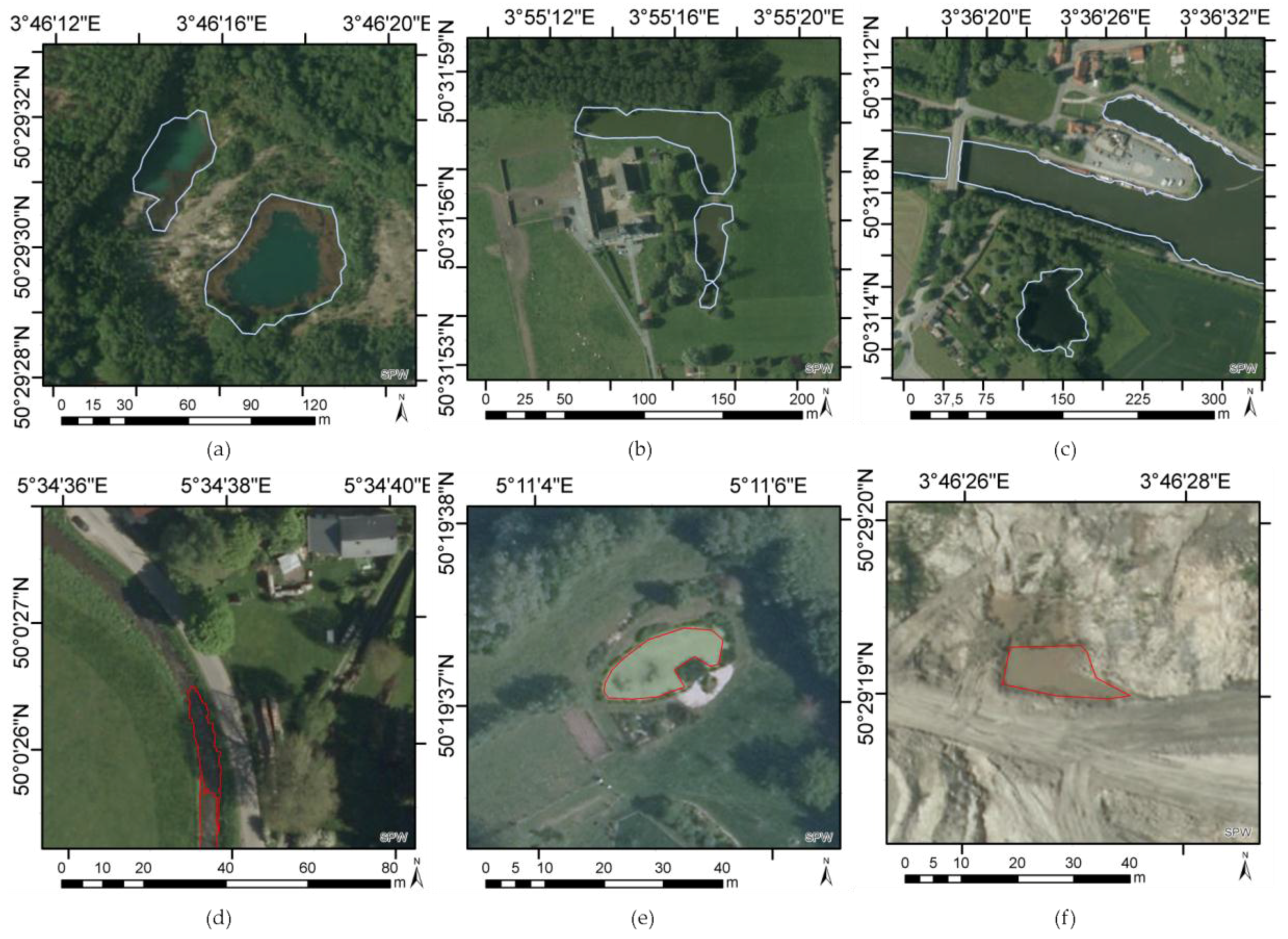

4.5.1. Validation Dataset

4.5.2. Quality Indices

5. Results

5.1. Mapping Observation Conditions: Homogeneous Groupings of Data Acquisition

5.2. Local Heterogeneity Removal

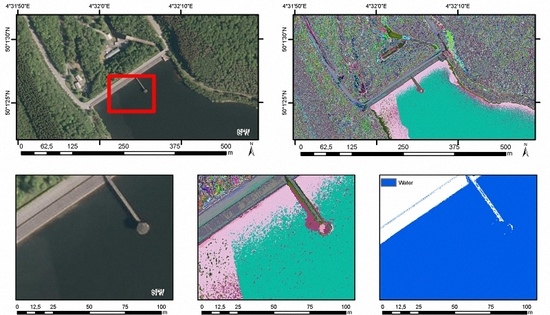

5.2.1. Shadow

5.2.2. Random Sampling and Iterative Trimming to Remove Surface Affected by the Context

5.3. Water Body Classification and Signal Processing: Handling Intrinsic Variability of the Target

5.3.1. Aerial Photo Classification

5.3.2. LiDAR Signal Processing

5.4. Combinations and Update of the Water Body Map

5.5. Quality Evaluation

6. Discussion

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CAP | Common Agricultural Policy |
| DSM | Digital Surface Model |
| GSD | Ground Sampling Distance |
| LiDAR | Light Detection and Ranging |
| NDWI | Normalized Difference Water Index |
| OTB | Orfeo ToolBox |
| SZA | Sun zenith angle |
| UAV | Unmanned Aerial Vehicle |
| VRT | Virtual GDAL dataset |
| VZA | View zenith angle |

References

- Greenberg, J.; Dobrowski, S.; Ustin, S. Shadow allometry: Estimating tree structural parameters using hyperspatial image analysis. Remote Sens. Environ. 2005, 97, 15–25.

- Rocchini, D.; Balkenhol, N.; Carter, G.A.; Foody, G.M.; Gillespie, T.W.; He, K.S.; Kark, S.; Levin, N.; Lucas, K.; Luoto, M.; et al. Remotely sensed spectral heterogeneity as a proxy of species diversity: Recent advances and open challenges. Ecol. Inform. 2010, 5, 318–329.

- Davis, T. The Ramsar Convention Manual: A Guide to the Convention on Wetlands of International Importance Especially as Waterfowl Habitat; Ramsar Convention Bureau: Gland, Switzerland, 1994.

- Verhoeven, J.T.A. Wetlands in Europe: Perspectives for restoration of a lost paradise. Ecol. Eng. 2014, 66, 6–9.

- Holgerson, M.A.; Raymond, P.A. Large contribution to inland water CO2 and CH4 emissions from very small ponds. Nat. Geosci. 2016, 9, 222–226.

- Marton, J.M.; Creed, I.F.; Lewis, D.B.; Lane, C.R.; Basu, N.B.; Cohen, M.J.; Craft, C.B. Geographically Isolated Wetlands are Important Biogeochemical Reactors on the Landscape. Bioscience 2015, 65, 408–418.

- Hefting, M.M.; van den Heuvel, R.N.; Verhoeven, J.T.A. Wetlands in agricultural landscapes for nitrogen attenuation and biodiversity enhancement: Opportunities and limitations. Ecol. Eng. 2013, 56, 5–13.

- Dudgeon, D.; Arthington, A.H.; Gessner, M.O.; Kawabata, Z.-I.; Knowler, D.J.; Lévêque, C.; Naiman, R.J.; Prieur-Richard, A.-H.; Soto, D.; Stiassny, M.L.J.; et al. Freshwater biodiversity: Importance, threats, status and conservation challenges. Biol. Rev. 2006, 81, 163–182.

- Sala, O.E.; Chapin, F.S.; Armesto, J.J.; Berlow, E.; Bloomfield, J.; Dirzo, R.; Huber-Sanwald, E.; Huenneke, L.F.; Jackson, R.B.; Kinzig, A.; et al. Global biodiversity scenarios for the year 2100. Science 2000, 287, 1770–1774.

- Collen, B.; Whitton, F.; Dyer, E.E.; Baillie, J.E.M.; Cumberlidge, N.; Darwall, W.R.T.; Pollock, C.; Richman, N.I.; Soulsby, A.-M.; Böhm, M. Global patterns of freshwater species diversity, threat and endemism. Glob. Ecol. Biogeogr. 2014, 23, 40–51.

- Brönmark, C.; Hansson, L.-A. Environmental issues in lakes and ponds: Current state and perspectives. Environ. Conserv. 2002, 29, 290–306.

- Wu, Q.; Lane, C.; Liu, H. An Effective Method for Detecting Potential Woodland Vernal Pools Using High-Resolution LiDAR Data and Aerial Imagery. Remote Sens. 2014, 6, 11444–11467.

- Alsdorf, D.E.; Rodriguez, E.; Lettenmaier, D.P. Measuring surface water from space. Rev. Geophys. 2007, 45.

- Alsdorf, D.E.; Lettenmaier, D.P. Tracking Fresh Water from Space. Science 2003, 301, 1491–1494.

- Famiglietti, J.; Cazenave, A.; Eicker, A.; Reager, J. Satellites provide the big picture. Science 2015, 349, 684–685.

- Palmer, S.; Kutser, T.; Hunter, P. Remote sensing of inland waters: Challenges, progress and future directions. Remote Sens. Environ. 2015, 157, 1–8.

- Marcus, W.A.; Fonstad, M.A. Optical remote mapping of rivers at sub-meter resolutions and watershed extents. Earth Surf. Process. Landf. 2008, 33, 4–24.

- Dörnhöfer, K.; Oppelt, N. Remote sensing for lake research and monitoring—Recent advances. Ecol. Indic. 2016, 64, 105–122.

- Marcus, W.; Legleiter, C.; Aspinall, R.; Boardman, J. High spatial resolution hyperspectral mapping of in-stream habitats, depths, and woody debris in mountain streams. Geomorphology 2003, 55, 363–380.

- Lejot, J.; Delacourt, C.; Piégay, H.; Fournier, T.; Trémélo, M.-L.; Allemand, P. Very high spatial resolution imagery for channel bathymetry and topography from an unmanned mapping controlled platform. Earth Surf. Process. Landf. 2007, 32, 1705–1725.

- Goovaerts, P. Geostatistical incorporation of spatial coordinates into supervised classification of hyperspectral data. J. Geogr. Syst. 2002, 4, 99–111.

- Carbonneau, P.; Lane, S.; Bergeron, N. Feature based image processing methods applied to bathymetric measurements from airborne remote sensing in fluvial environments. Earth Surf. Process. Landf. 2006, 31, 1413–1423.

- Carbonneau, P.E.; Lane, S.N.; Bergeron, N.E. Catchment-scale mapping of surface grain size in gravel bed rivers using airborne digital imagery. Water Resour. Res. 2004, 40.

- Marcus, W.A.; Crabtree, R.; Aspinall, R.J.; Boardman, J.W.; Despain, D.; Minshall, W.; Peel, J. Validation of High-Resolution Hyperspectral Data for Stream and Riparian Habitat Analysis; Annual Report (Phase 2) to NASA EOCAP Program; Stennis Space Flight Center: Hancock County, MS, USA, 2000.

- Li, L.; Chen, Y.; Yu, X.; Liu, R.; Huang, C. Sub-pixel flood inundation mapping from multispectral remotely sensed images based on discrete particle swarm optimization. ISPRS J. Photogramm. Remote Sens. 2015, 101, 10–21.

- Manakos, I.; Chatzopoulos-Vouzoglanis, K.; Petrou, Z.; Filchev, L.; Apostolakis, A. Globalland30 Mapping Capacity of Land Surface Water in Thessaly, Greece. Land 2014, 4, 1–18.

- Sakthivel, S.P.; Genitha C., H.; Sivalingam, V.J.; Shanmugam, S. Super-resolution mapping of hyperspectral images for estimating the water-spread area of Peechi reservoir, southern India. J. Appl. Remote Sens. 2014, 8, 83510.

- Foody, G.M.; Muslim, A.M.; Atkinson, P.M. Super-resolution mapping of the waterline from remotely sensed data. Int. J. Remote Sens. 2005, 26, 5381–5392.

- Muster, S.; Heim, B.; Abnizova, A.; Boike, J. Water Body Distributions Across Scales: A Remote Sensing Based Comparison of Three Arctic Tundra Wetlands. Remote Sens. 2013, 5, 1498–1523.

- Kay, S.; Hedley, J.; Lavender, S. Sun glint correction of high and low spatial resolution images of aquatic scenes: A review of methods for visible and near-infrared wavelengths. Remote Sens. 2009.

- Gallant, A. The Challenges of Remote Monitoring of Wetlands. Remote Sens. 2015, 7, 10938–10950.

- Campbell, J.B.; Wynne, R.H. Introduction to Remote Sensing; Guilford Press: New York, NY, USA, 2011.

- Rautiainen, M.; Nilson, T.; Lang, M.; Kuusk, A.; Mo, M. Canopy gap fraction estimation from digital hemispherical images using sky radiance models and a linear conversion method. Agric. For. Meteorol. 2010, 150, 20–29.

- Lichvar, R.; Finnegan, D.; Newman, S.; Ochs, W. Delineating and Evaluating Vegetation Conditions of Vernal Pools Using Spaceborne and Airborne Remote Sensing Techniques; Beale Air Force Base: Marysville, CA, USA, 2006.

- Brzank, A.; Heipke, C. Classification of Lidar Data into water and land points in coastal areas. Int. Arch. Photogramm. 2006, 9, 197–202.

- Höfle, B.; Vetter, M.; Pfeifer, N.; Mandlburger, G.; Stötter, J. Water surface mapping from airborne laser scanning using signal intensity and elevation data. Earth Surf. Process. Landf. 2009, 34, 1635–1649.

- Hodgson, M.E.; Jensen, J.R.; Tullis, J.A.; Riordan, K.D.; Archer, C.M. Synergistic Use of Lidar and Color Aerial Photography for Mapping Urban Parcel Imperviousness. Photogramm. Eng. Remote Sens. 2003, 69, 973–980.

- Chen, Y.; Su, W.; Li, J.; Sun, Z. Hierarchical object oriented classification using very high resolution imagery and LIDAR data over urban areas. Adv. Space Res. 2009, 43, 1101–1110.

- Bork, E.W.; Su, J.G. Integrating LIDAR data and multispectral imagery for enhanced classification of rangeland vegetation: A meta analysis. Remote Sens. Environ. 2007, 111, 11–24.

- Rodríguez-Cuenca, B.; Alonso, M. Semi-Automatic Detection of Swimming Pools from Aerial High-Resolution Images and LIDAR Data. Remote Sens. 2014, 6, 2628–2646.

- Maxa, M.; Bolstad, P. Mapping northern wetlands with high resolution satellite images and LiDAR. Wetlands 2009, 29, 248–260.

- Lang, M.W.; McCarty, G.W. Lidar intensity for improved detection of inundation below the forest canopy. Wetlands 2009, 29, 1166–1178.

- Elaksher, A.F. Fusion of hyperspectral images and lidar-based dems for coastal mapping. Opt. Lasers Eng. 2008, 46, 493–498.

- Bigdeli, B.; Samadzadegan, F.; Reinartz, P. A decision fusion method based on multiple support vector machine system for fusion of hyperspectral and LIDAR data. Int. J. Image Data Fusion 2014, 5, 196–209.

- Zhu, X.; Chen, J.; Gao, F.; Chen, X.; Masek, J.G. An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions. Remote Sens. Environ. 2010, 114, 2610–2623.

- Zhang, J. Multi-source remote sensing data fusion: Status and trends. Int. J. Image Data Fusion 2010.

- Graitson, E. Elaboration d’un Référentiel et de Documents de Vulgarisation sur les Mares Agricoles en Région Wallonne; Rapport Final—Partie 2; ORBi: Liège, Belgium, 2009.

- Kristensen, P.; Globevnik, L. European Small Water Bodies. Biol. Environ. Proc. R. Irish Acad. 2014, 114B, 281–287.

- Ernst, J.; Dewals, B.J.; Archambeau, P.; Detrembleur, S.; Erpicum, S.P.M. Integration of accurate 2D inundation modelling, vector land use database and economic damage evaluation. Flood Risk Manag. Res. Pract. 2008, 1643–1653.

- Gouvernement Wallon. Direction Générale de l’Agriculture des Ressources Naturelles et de l’Environnement (Service Public Wallonie) PICEA; Projet du Gouvernement Wallon; Gouvernement Wallon: Namur, Belgium, 2006; Volume GW VIII.

- Triggs, B.; McLauchlan, P.; Hartley, R.; Fitzgibbon, A. Bundle Adjustment—A Modern Synthesis. Lect. Notes Comput. Sci. 2000, 1883, 298–372.

- Service Public de Wallonie (SPW). Orthophotos 2012–2013 Rapport de Production Orthophotos Couleur Numériques d’une Résolution de 25 cm; Service Public de Wallonie (SPW): Jambes, Belgium, 2013.

- Roy, D.P.; Ju, J.; Kline, K.; Scaramuzza, P.L.; Kovalskyy, V.; Hansen, M.; Loveland, T.R.; Vermote, E.; Zhang, C. Web-enabled Landsat Data (WELD): Landsat ETM+ composited mosaics of the conterminous United States. Remote Sens. Environ. 2010, 114, 35–49.

- Open Geospatial Consortium. G.D.T. GDAL—Geospatial Data Abstraction Library; Open Source Geospatial Foundation: Chicago, IL, USA, 2015.

- Shahtahmassebi, A.; Yang, N.; Wang, K.; Moore, N.; Shen, Z. Review of shadow detection and de-shadowing methods in remote sensing. Chin. Geogr. Sci. 2013, 23, 403–420.

- Nakajima, T.; Tao, G.; Yasuoka, Y. Simulated recovery of information in shadow areas on IKONOS image by combing ALS data. In Proceedings of the Asian Conference on Remote Sensing (ACRS), Kathmandu, Nepal, 25–29 November 2002; Available online: http://a-a-r-s.org/aars/proceeding/ACRS2002/Papers/VHR02-2.pdf (accessed on 23 February 2017).

- Zhan, Q.; Shi, W.; Xiao, Y. Quantitative analysis of shadow effects in high-resolution images of urban areas. Sūgaku 2012, 11, 97–110.

- Dare, P.M. Shadow Analysis in High-Resolution Satellite Imagery of Urban Areas. Photogramm. Eng. Remote Sens. 2005, 71, 169–177.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Environmental Systems Research Institute. ESRI ArcGIS Desktop—Version 13; Environmental Systems Research Institute: Redlands, CA, USA, 2016.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Mahalanobis, P. On the generalized distance in statistics. Proc. Natl. Inst. Sci. 1936, 2, 49–55.

- Richards, J.A. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 2013.

- Radoux, J.; Defourny, P. Automated image-to-map discrepancy detection using iterative trimming. Photogramm. Eng. Remote Sens. 2010, 76, 173–181.

- Desclée, B.; Bogaert, P.; Defourny, P. Forest change detection by statistical object-based method. Remote Sens. Environ. 2006, 102, 1–11.

- Matton, N.; Canto, G.; Waldner, F.; Valero, S.; Morin, D. An automated method for annual cropland mapping along the season for various globally-distributed agrosystems using high spatial and temporal resolution. Remote Sens. 2015, 7, 13208–13232.

- Inglada, J.; Christophe, E. The Orfeo Toolbox Remote Sensing Image Processing Software. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium, Cape Town, South Africa, 12–17 July 2009; pp. 733–736.

- Hallot, P.; Billen, R. Rapport Final Reengineering PICC S0. 04.01-11PNSP-01; ORBi: Liège, Belgium, 2013.

- Nath, R.K.; Deb, S.K. Water-Body Area Extraction From High Resolution Satellite Images-An Introduction, Review, and Comparison. Int. J. Image Process. 2010, 3, 353–372.

- Xie, C.; Huang, X.; Zeng, W.; Fang, X. A novel water index for urban high-resolution eight-band WorldView-2 imagery. Int. J. Digit. Earth 2016, 9, 925–941.

- Shahbazi, M.; Théau, J.; Ménard, P. Recent applications of unmanned aerial imagery in natural resource management. Gisci. Remote Sens. 2014, 51, 339–365.

| Statistic | Stratum Area (km²) | Historical Map Sampling: Water Bodies (Number) | Historical Map Sampling: Water Body Area (m²) | Classification: Labeling Points (Number) | Classification: Water Clusters (Number) |
|---|---|---|---|---|---|
| Minimum | 16 | 2 | 434.5 | 116 | 1 |
| 1st Quartile | 190 | 77 | 1851.6 | 5465 | 8 |
| Median | 407.5 | 126 | 2662.4 | 9928 | 11 |
| Mean | 471.3 | 169 | 3198.7 | 13,171 | 11 |
| 3rd Quartile | 701.5 | 243 | 4184.2 | 18,508 | 14 |
| Maximum | 1127 | 518 | 10,817.7 | 39,953 | 26 |

| Order | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Operation | Union of water and no-data layers | Eroded (3 m) | Dilated (3 m) | Union with water layer | Dilated (2 m) | Eroded (3 m) | Dilated (1 m) |
| % surface water | 100.00 | 87.75 | 88.07 | 88.10 | 88.40 | 88.00 | 82.04 |

| Layer | <50 m²: Detection Efficiency | <50 m²: Detection Cost | 50–100 m²: Detection Efficiency | 50–100 m²: Detection Cost | >100 m²: Detection Efficiency | >100 m²: Detection Cost | Total: Detection Efficiency | Total: Detection Cost |
|---|---|---|---|---|---|---|---|---|
| Layer A | 6/330 (1.82%) | 43/6 (7.17) | 27/162 (16.67%) | 73/27 (2.70) | 540/843 (64.06%) | 524/540 (0.97) | 573/1334 (42.95%) | 638/573 (1.11) |
| Layer B | 16/330 (4.85%) | 270/16 (16.88) | 23/162 (14.20%) | 334/23 (14.52) | 132/843 (15.66%) | 419/132 (3.17) | 171/1334 (12.82%) | 984/171 (5.75) |
| Layer C | 42/330 (12.73%) | 1878/42 (44.71) | 28/162 (17.28%) | 626/28 (22.36) | 159/843 (18.86%) | 671/159 (4.22) | 229/1334 (17.17%) | 2148/229 (9.38) |
| Total | 64/330 (19.39%) | 2191/64 (34.23) | 78/162 (48.15%) | 1033/78 (13.24) | 831/843 (98.58%) | 1614/831 (1.94) | 973/1334 (72.94%) | 3770/973 (3.87) |
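Both quality indices in the table are plain ratios of counts. Following the table's own notation, detection efficiency reads as detected water bodies over reference water bodies in a size class (e.g. 6/330). Detection cost divides the count in the numerator of the cost column by that same number of detections (e.g. 43/6). The minimal Python sketch below reproduces two cells of the table. The function names are hypothetical, and the cost numerator is treated as an opaque count without assuming what it enumerates.

```python
def detection_efficiency(correct: int, reference: int) -> float:
    """Share (%) of reference water bodies that were detected."""
    return 100.0 * correct / reference


def detection_cost(cost_count: int, correct: int) -> float:
    """Ratio of the table's cost numerator to the number of detections."""
    return cost_count / correct


# Layer A, water bodies > 100 m² (counts taken from the table).
efficiency = detection_efficiency(540, 843)
cost = detection_cost(524, 540)
print(f"{efficiency:.2f}% {cost:.2f}")  # 64.06% 0.97

# The per-layer detection counts add up to the Total row, so the combined
# efficiency for the > 100 m² class is computed from the summed counts.
combined = detection_efficiency(540 + 132 + 159, 843)
print(f"{combined:.2f}%")  # 98.58%
```

Because efficiency shares a single denominator per size class, per-layer counts can be summed before dividing. The cost ratios, by contrast, do not add up across size classes in the table, so they should not be aggregated this way.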

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


D’Andrimont, R.; Marlier, C.; Defourny, P. Hyperspatial and Multi-Source Water Body Mapping: A Framework to Handle Heterogeneities from Observations and Targets over Large Areas. Remote Sens. 2017, 9, 211. https://doi.org/10.3390/rs9030211
