Combining Spectral Data and a DSM from UAS-Images for Improved Classification of Non-Submerged Aquatic Vegetation

Abstract

1. Introduction

2. Materials and Methods

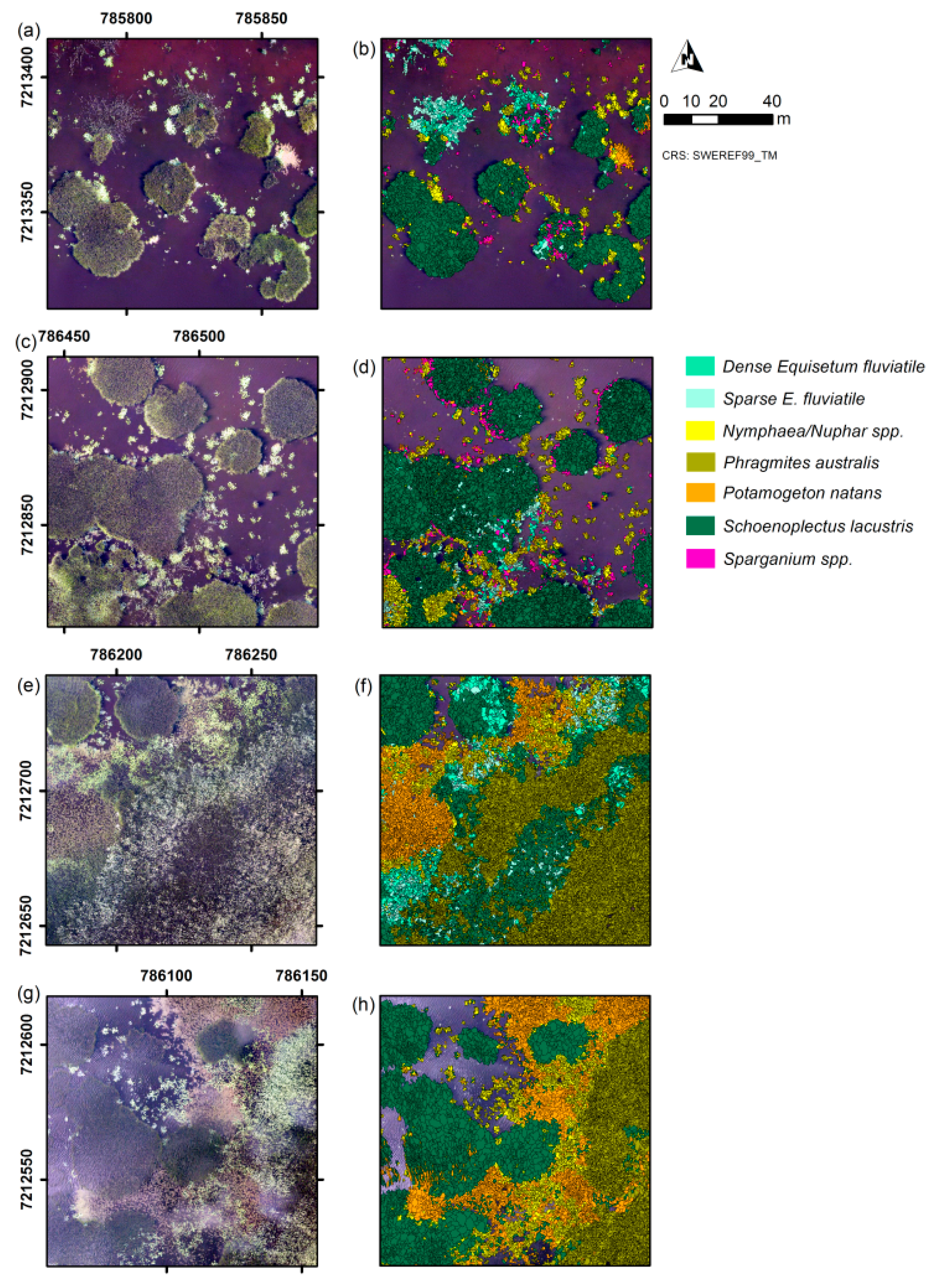

2.1. Study Area, Image Acquisition, Test Sites, and Aquatic Plant Taxa

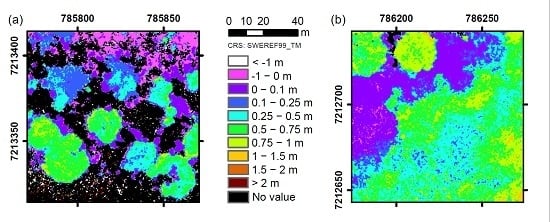

2.2. Height Data

2.3. Object-Based Image Analysis and Accuracy Assessment

2.4. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DSM | Digital surface model |
| LiDAR | Light detection and ranging |
| OBIA | Object-based image analysis |
| PAMS | Personal Aerial Mapping System |
| RGB | Red green blue |
| 3D | Three-dimensional |
| UAS | Unmanned aircraft system |


| Site | Taxa |
|---|---|
| I | Schoenoplectus lacustris, Nymphaea/Nuphar spp., Equisetum fluviatile (dense), E. fluviatile (sparse), Potamogeton natans, Sparganium spp. |
| II | S. lacustris, Nymphaea/Nuphar spp., P. natans, Sparganium spp., E. fluviatile (sparse), E. fluviatile (dense) |
| III | Nymphaea/Nuphar spp., E. fluviatile (sparse), E. fluviatile (dense), P. natans, Sparganium spp. |
| IV | Phragmites australis, S. lacustris, P. natans, Nymphaea/Nuphar spp., E. fluviatile (dense), E. fluviatile (sparse) |
| V | S. lacustris, P. natans, P. australis, Nymphaea/Nuphar spp. |

| Level of Thematic Detail | Growth Forms | | | | | Dominant Taxa | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Site | I | II | III | IV | V | I | II | III | IV | V |
| Overall accuracy (segment-based) | | | | | | | | | | |
| Height, spectral, and textural features | 84.6 | 87.1 | 78.9 | 77.7 | 78.0 | 79.1 | 81.1 | 66.4 | 81.9 | 85.4 |
| Spectral and textural features | 80.3 | 83.4 | 74.3 | 56.3 | 63.4 | 68.6 | 64.7 | 63.0 | 52.2 | 65.4 |
| Difference | 4.3 | 3.7 | 4.6 | 21.4 | 14.6 | 10.5 | 16.4 | 3.4 | 29.7 | 20.0 |
| Overall accuracy (area-based) | | | | | | | | | | |
| Height, spectral, and textural features | 94.7 | 92.9 | 82.3 | 76.6 | 74.3 | 82.7 | 85.3 | 63.2 | 82.1 | 86.2 |
| Spectral and textural features | 93.6 | 91.6 | 77.8 | 56.4 | 64.8 | 72.7 | 70.3 | 61.0 | 51.8 | 74.6 |
| Difference | 1.0 | 1.2 | 4.5 | 20.2 | 9.5 | 9.9 | 15.0 | 2.3 | 30.2 | 11.7 |
| Cohen’s Kappa coefficient | | | | | | | | | | |
| Height, spectral, and textural features | 0.75 | 0.76 | 0.58 | 0.54 | 0.64 | 0.68 | 0.67 | 0.38 | 0.74 | 0.81 |
| Spectral and textural features | 0.69 | 0.69 | 0.51 | 0.25 | 0.40 | 0.54 | 0.46 | 0.34 | 0.39 | 0.54 |
| Difference | 0.07 | 0.07 | 0.07 | 0.29 | 0.24 | 0.14 | 0.21 | 0.04 | 0.35 | 0.27 |
| Overall quantity disagreement | | | | | | | | | | |
| Height, spectral, and textural features | 6.9 | 6.9 | 11.1 | 18.6 | 11.4 | 10.7 | 10.8 | 20.7 | 13.5 | 6.0 |
| Spectral and textural features | 8.0 | 7.4 | 14.6 | 36.3 | 10.9 | 14.6 | 23.1 | 22.7 | 30.8 | 2.9 |
| Difference | −1.1 | −0.6 | −3.4 | −17.7 | 0.6 | −3.9 | −12.2 | −2.0 | −17.3 | 3.1 |
| Overall allocation disagreement | | | | | | | | | | |
| Height, spectral, and textural features | 8.6 | 6.0 | 10.0 | 3.7 | 10.6 | 10.2 | 8.1 | 12.9 | 4.7 | 8.6 |
| Spectral and textural features | 11.7 | 9.1 | 11.1 | 7.3 | 25.7 | 16.8 | 12.2 | 14.3 | 17.0 | 31.7 |
| Difference | −3.1 | −3.1 | −1.1 | −3.7 | −15.1 | −6.6 | −4.2 | −1.4 | −12.4 | −23.1 |
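The summary measures in the table above (overall accuracy, Cohen's Kappa, quantity disagreement, and allocation disagreement) can all be derived from one confusion matrix. The Python sketch below is illustrative only and is not the authors' workflow. The function name `agreement_metrics`, the 3 x 3 example matrix, and the use of raw sample proportions are assumptions made for this example. Quantity and allocation disagreement follow the definitions usually attributed to Pontius and Millones (2011), under which the two components sum to the total disagreement (1 minus overall accuracy).

```python
def agreement_metrics(matrix):
    """Summarise a square confusion matrix.

    matrix[i][j] holds the number (or area) of validation units
    mapped as class i whose reference class is j.
    Returns proportions in the range 0..1 (kappa can be negative).
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    # Convert counts to proportions of all validation units.
    p = [[cell / total for cell in row] for row in matrix]
    mapped = [sum(p[i]) for i in range(k)]                          # row totals
    reference = [sum(p[i][j] for i in range(k)) for j in range(k)]  # column totals

    overall = sum(p[i][i] for i in range(k))
    # Agreement expected by chance, used by Cohen's Kappa.
    expected = sum(mapped[i] * reference[i] for i in range(k))
    kappa = (overall - expected) / (1 - expected)

    # Quantity disagreement: mismatch in class proportions.
    quantity = sum(abs(mapped[i] - reference[i]) for i in range(k)) / 2
    # Allocation disagreement: mismatch in spatial assignment.
    allocation = sum(
        2 * min(mapped[i] - p[i][i], reference[i] - p[i][i]) for i in range(k)
    ) / 2
    return {
        "overall": overall,
        "kappa": kappa,
        "quantity": quantity,
        "allocation": allocation,
    }


# Hypothetical 3-class example with 100 validation units.
example = [
    [40, 6, 2],
    [3, 25, 4],
    [1, 4, 15],
]
metrics = agreement_metrics(example)
print(metrics)
```

For the hypothetical matrix, overall accuracy is 0.80, and quantity (0.04) plus allocation (0.16) disagreement account for the remaining 0.20. The same relation holds approximately in the segment-based rows of the table, where the three values sum to about 100.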

| | Site I | | | Site II | | | Site III | | | Site IV | | | Site V | | | Wilcoxon Signed-Rank Test | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | W | N | H | W | N | H | W | N | H | W | N | H | W | N | H | Valid N | T | p |
| Producer’s accuracy (segment-based) | | | | | | | | | | | | | | | | | | |
| Height, spectral, and textural features | 83 | 88 | 84 | 89 | 68 | 95 | 65 | 83 | 75 | 90 | 90 | 74 | 93 | 88 | 65 | 12 | 0 | 0.002 ** |
| Spectral and textural features | 81 | 82 | 79 | 89 | 59 | 92 | 63 | 78 | 69 | 90 | 82 | 49 | 93 | 68 | 53 | | | |
| User’s accuracy (segment-based) | | | | | | | | | | | | | | | | | | |
| Height, spectral, and textural features | 98 | 65 | 88 | 86 | 94 | 86 | 83 | 93 | 43 | 25 | 58 | 98 | 38 | 88 | 87 | 14 | 11 | 0.009 * |
| Spectral and textural features | 97 | 58 | 84 | 89 | 84 | 82 | 85 | 92 | 35 | 25 | 33 | 92 | 39 | 74 | 63 | | | |
| No. of validation segments | 106 | 68 | 176 | 57 | 87 | 206 | 52 | 246 | 52 | 10 | 71 | 274 | 28 | 163 | 159 | | | |
| Producer’s accuracy (area-based) | | | | | | | | | | | | | | | | | | |
| Height, spectral, and textural features | 97 | 87 | 89 | 96 | 63 | 96 | 90 | 74 | 80 | 92 | 89 | 73 | 96 | 86 | 62 | 12 | 0 | 0.002 ** |
| Spectral and textural features | 97 | 83 | 87 | 96 | 54 | 96 | 89 | 70 | 68 | 92 | 77 | 49 | 96 | 69 | 56 | | | |
| User’s accuracy (area-based) | | | | | | | | | | | | | | | | | | |
| Height, spectral, and textural features | 99 | 64 | 91 | 96 | 94 | 90 | 90 | 93 | 58 | 35 | 57 | 98 | 32 | 93 | 88 | 15 | 8 | 0.003 ** |
| Spectral and textural features | 99 | 59 | 89 | 97 | 89 | 88 | 87 | 92 | 49 | 35 | 30 | 92 | 34 | 80 | 73 | | | |
| Total area of validation segments | 458 | 37 | 177 | 162 | 39 | 176 | 145 | 121 | 66 | 18 | 42 | 201 | 30 | 120 | 168 | | | |
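The Wilcoxon columns in the table above can be checked from the paired accuracy rows. The sketch below is a minimal, standard-library implementation of the signed-rank test. It assumes a two-sided p-value from the normal approximation, with no tie or continuity correction. The source does not say which software or approximation the authors used, so this choice and the function name `wilcoxon_signed_rank` are assumptions. The input lists are the segment-based producer's and user's accuracies copied from the table.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Return (valid_n, T, p) for paired samples x and y.

    Zero differences are dropped (valid_n counts the rest), tied
    absolute differences share their average rank, T is the smaller
    of the positive and negative rank sums, and p is two-sided from
    the normal approximation.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over the run of equal absolute differences.
        while j + 1 < n and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    t = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return n, t, p


# Segment-based producer's accuracy, Sites I-V, classes W, N, H.
prod_height = [83, 88, 84, 89, 68, 95, 65, 83, 75, 90, 90, 74, 93, 88, 65]
prod_spectral = [81, 82, 79, 89, 59, 92, 63, 78, 69, 90, 82, 49, 93, 68, 53]
# Segment-based user's accuracy, same order.
user_height = [98, 65, 88, 86, 94, 86, 83, 93, 43, 25, 58, 98, 38, 88, 87]
user_spectral = [97, 58, 84, 89, 84, 82, 85, 92, 35, 25, 33, 92, 39, 74, 63]

result_producer = wilcoxon_signed_rank(prod_height, prod_spectral)
result_user = wilcoxon_signed_rank(user_height, user_spectral)
print(result_producer, result_user)
```

Under these assumptions the sketch returns Valid N = 12 and T = 0 for producer's accuracy and Valid N = 14 and T = 11 for user's accuracy, with p-values that round to 0.002 and 0.009, matching the tabulated values.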

| Vegetation Class | Sparse E.f. | Dense E.f. | N./N. spp. | P.a. | P.n. | S.l. | S. spp. |
|---|---|---|---|---|---|---|---|
| Site I | | | | | | | |
| Producer’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 60 | 56 | 83 | | 80 | 83 | 50 |
| Spectral and textural features | 60 | 48 | 79 | | 80 | 68 | 40 |
| User’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 45 | 44 | 93 | | 53 | 97 | 16 |
| Spectral and textural features | 41 | 33 | 78 | | 47 | 92 | 11 |
| No. of validation segments | 15 | 27 | 95 | | 10 | 206 | 10 |
| Producer’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 76 | 43 | 86 | | 91 | 87 | 48 |
| Spectral and textural features | 76 | 41 | 83 | | 91 | 74 | 31 |
| User’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 38 | 49 | 91 | | 72 | 98 | 13 |
| Spectral and textural features | 33 | 33 | 71 | | 66 | 95 | 8 |
| Total area of validation segments | 12 | 24 | 50 | | 10 | 214 | 5 |
| Site II | | | | | | | |
| Producer’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 50 | 80 | 71 | | 20 | 90 | 70 |
| Spectral and textural features | 50 | 70 | 70 | | 20 | 65 | 70 |
| User’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 21 | 47 | 93 | | 11 | 97 | 35 |
| Spectral and textural features | 12 | 29 | 84 | | 6 | 92 | 27 |
| No. of validation segments | 10 | 10 | 90 | | 10 | 230 | 10 |
| Producer’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 63 | 61 | 73 | | 13 | 91 | 72 |
| Spectral and textural features | 63 | 59 | 71 | | 13 | 72 | 72 |
| User’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 26 | 44 | 89 | | 5 | 99 | 33 |
| Spectral and textural features | 15 | 18 | 81 | | 3 | 96 | 23 |
| Total area of validation segments | 7 | 4 | 40 | | 4 | 205 | 5 |
| Site III | | | | | | | |
| Producer’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 50 | 59 | 70 | | 50 | | 70 |
| Spectral and textural features | 48 | 59 | 66 | | 50 | | 70 |
| User’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 54 | 28 | 95 | | 13 | | 30 |
| Spectral and textural features | 44 | 24 | 94 | | 13 | | 32 |
| No. of validation segments | 40 | 22 | 273 | | 12 | | 10 |
| Producer’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 64 | 71 | 61 | | 72 | | 67 |
| Spectral and textural features | 62 | 71 | 57 | | 72 | | 67 |
| User’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 80 | 37 | 95 | | 18 | | 36 |
| Spectral and textural features | 75 | 32 | 95 | | 20 | | 38 |
| Total area of validation segments | 61 | 22 | 131 | | 11 | | 7 |
| Site IV | | | | | | | |
| Producer’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 90 | 20 | 66 | 100 | 94 | 59 | |
| Spectral and textural features | 80 | 40 | 61 | 48 | 88 | 43 | |
| User’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | 30 | 10 | 87 | 93 | 79 | 97 | |
| Spectral and textural features | 17 | 9 | 68 | 81 | 38 | 69 | |
| No. of validation segments | 10 | 10 | 41 | 170 | 33 | 100 | |
| Producer’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 98 | 13 | 59 | 100 | 96 | 74 | |
| Spectral and textural features | 92 | 23 | 56 | 43 | 89 | 51 | |
| User’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | 32 | 12 | 82 | 95 | 82 | 96 | |
| Spectral and textural features | 19 | 11 | 70 | 83 | 34 | 77 | |
| Total area of validation segments | 9 | 12 | 22 | 95 | 22 | 97 | |
| Site V | | | | | | | |
| Producer’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | | | 95 | 100 | 76 | 74 | |
| Spectral and textural features | | | 55 | 53 | 78 | 73 | |
| User’s accuracy (segment-based) | | | | | | | |
| Height, spectral, and textural features | | | 83 | 89 | 85 | 84 | |
| Spectral and textural features | | | 49 | 53 | 79 | 80 | |
| No. of validation segments | | | 78 | 85 | 96 | 91 | |
| Producer’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | | | 93 | 100 | 77 | 85 | |
| Spectral and textural features | | | 49 | 62 | 79 | 84 | |
| User’s accuracy (area-based) | | | | | | | |
| Height, spectral, and textural features | | | 82 | 83 | 87 | 89 | |
| Spectral and textural features | | | 53 | 57 | 77 | 87 | |
| Total area of validation segments | | | 41 | 51 | 89 | 134 | |
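The table above reports producer's and user's accuracy in two variants, segment-based and area-based. One plausible reading, sketched below in Python, is that the segment-based variant counts each validation segment once, whereas the area-based variant weights each segment by its area. This interpretation, the function name `class_accuracies`, and the six example segments are assumptions for illustration and are not taken from the source.

```python
from collections import defaultdict


def class_accuracies(segments, weighted=False):
    """Per-class producer's and user's accuracy in percent.

    segments: iterable of (reference_class, mapped_class, area).
    weighted=False counts every segment once (segment-based);
    weighted=True weights every segment by its area (area-based).
    """
    correct = defaultdict(float)
    reference_total = defaultdict(float)
    mapped_total = defaultdict(float)
    for reference, mapped, area in segments:
        weight = area if weighted else 1.0
        reference_total[reference] += weight
        mapped_total[mapped] += weight
        if reference == mapped:
            correct[reference] += weight
    # Producer's accuracy: share of each reference class mapped correctly.
    producers = {c: 100 * correct[c] / reference_total[c] for c in reference_total}
    # User's accuracy: share of each mapped class that is correct.
    users = {c: 100 * correct[c] / mapped_total[c] for c in mapped_total}
    return producers, users


# Hypothetical validation segments: (reference, mapped, area).
example_segments = [
    ("H", "H", 4.0),
    ("H", "H", 2.0),
    ("H", "N", 1.0),
    ("N", "N", 0.5),
    ("N", "H", 0.5),
    ("W", "W", 10.0),
]
prod_seg, user_seg = class_accuracies(example_segments)
prod_area, user_area = class_accuracies(example_segments, weighted=True)
print(prod_seg, user_seg)
print(prod_area, user_area)
```

In the hypothetical data, the one misclassified H segment is small, so producer's accuracy for H rises from about 67% (segment-based) to about 86% (area-based). This illustrates why the two variants in the table can differ for the same class.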

| | Valid N | T | p |
|---|---|---|---|
| Producer’s accuracy (segment-based) | 19 | 18 | 0.00194 ** |
| User’s accuracy (segment-based) | 27 | 5 | 0.00001 *** |
| Producer’s accuracy (area-based) | 20 | 20 | 0.00151 ** |
| User’s accuracy (area-based) | 27 | 8 | 0.00001 *** |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Husson, E.; Reese, H.; Ecke, F. Combining Spectral Data and a DSM from UAS-Images for Improved Classification of Non-Submerged Aquatic Vegetation. Remote Sens. 2017, 9, 247. https://doi.org/10.3390/rs9030247
