A Novel Approach for Coarse-to-Fine Windthrown Tree Extraction Based on Unmanned Aerial Vehicle Images

Abstract

1. Introduction

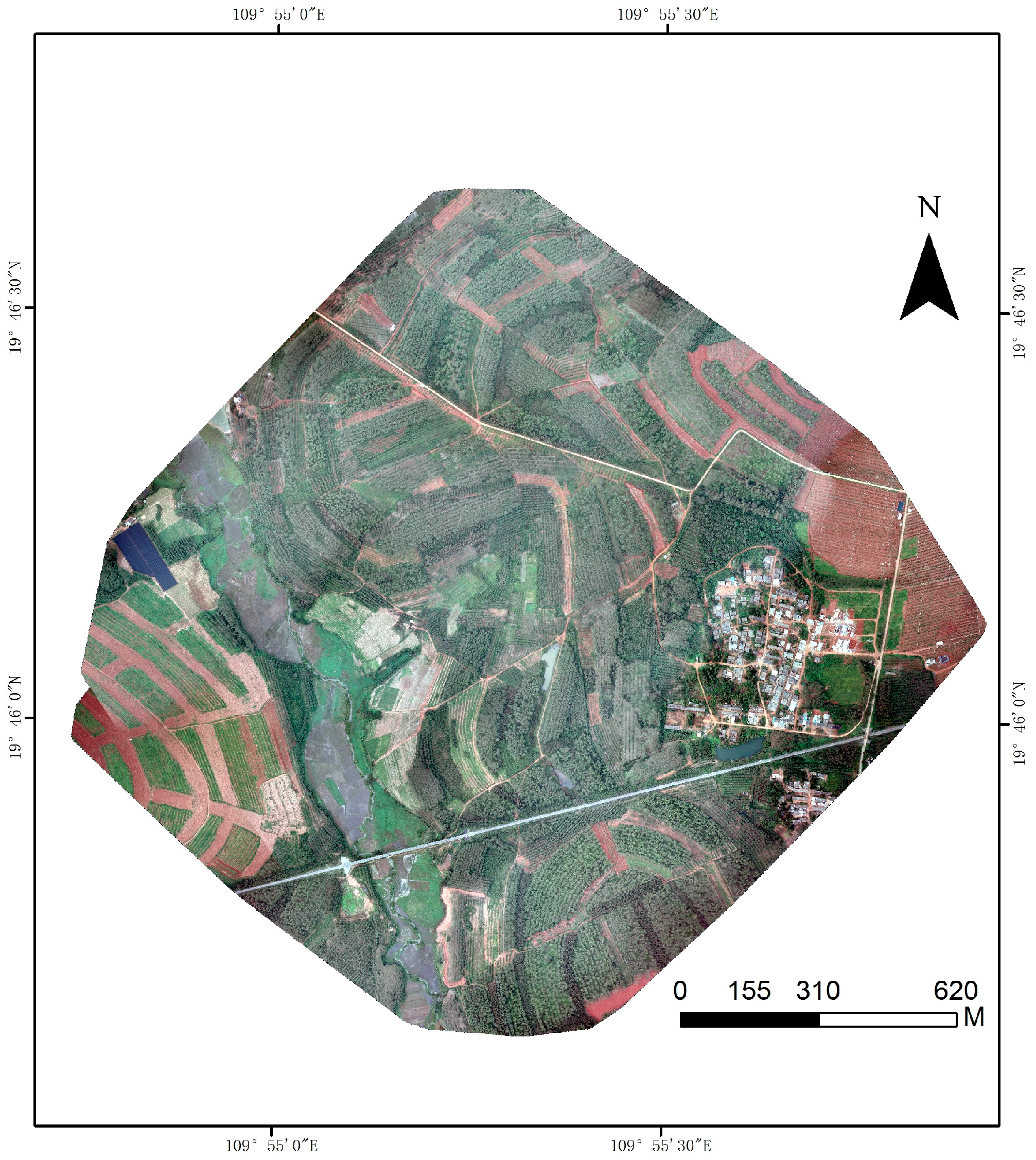

2. Study Area and Data Collection

2.1. Study Area

2.2. UAV Image Acquisition and Preprocessing

3. Methods

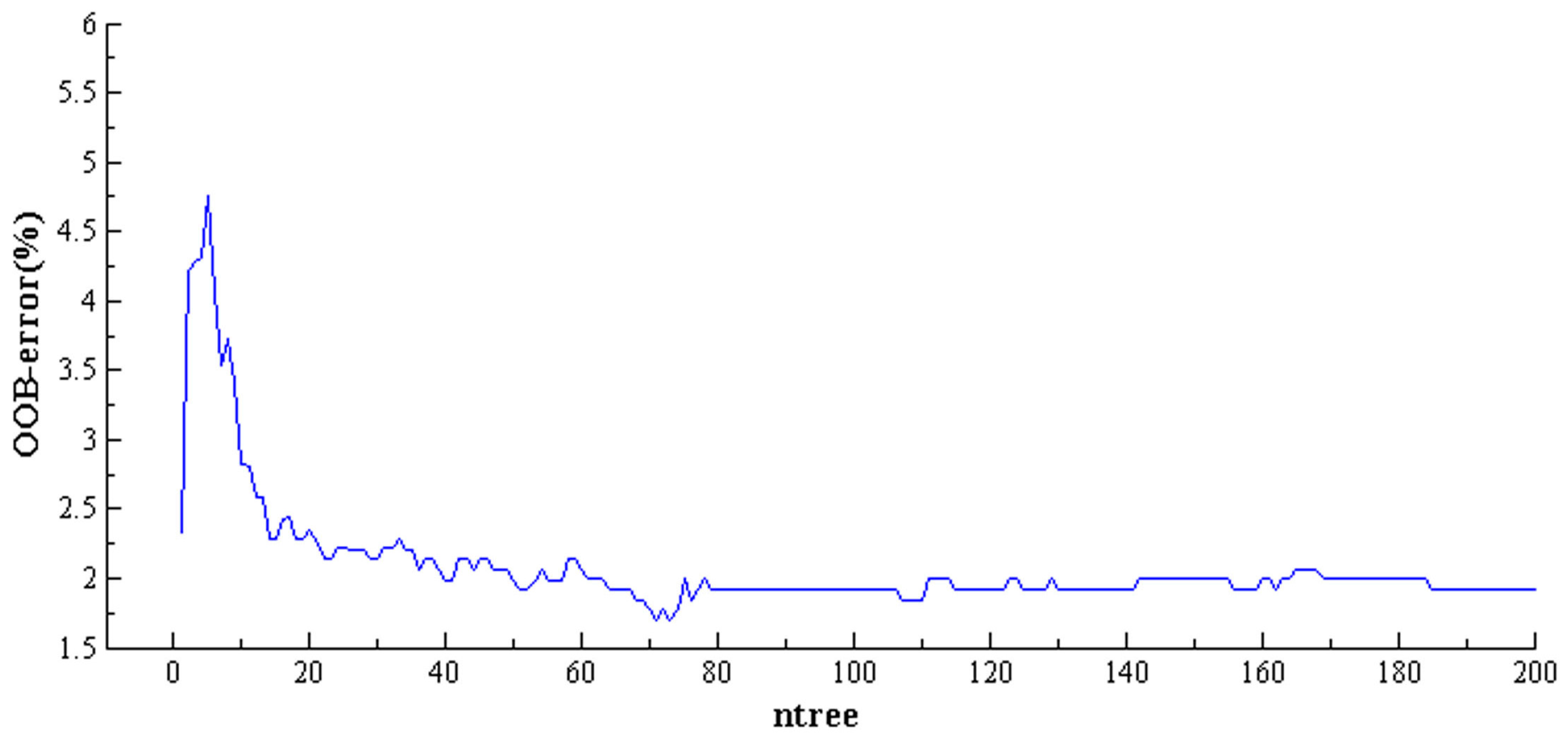

3.1. Coarse Extraction of Windthrown Trees

3.2. Fine Extraction of Individual Windthrown Trees

3.3. Accuracy Assessment

4. Results

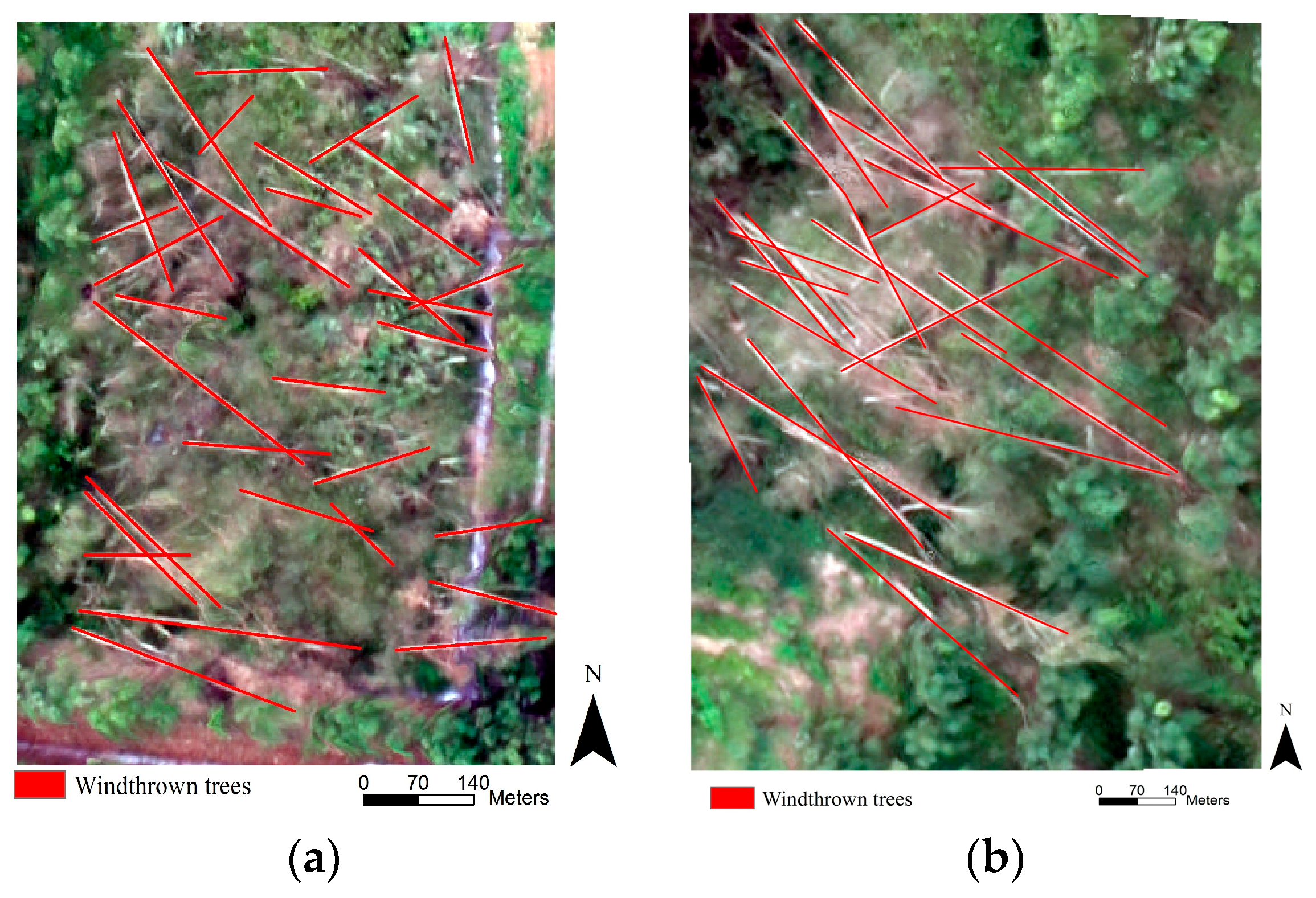

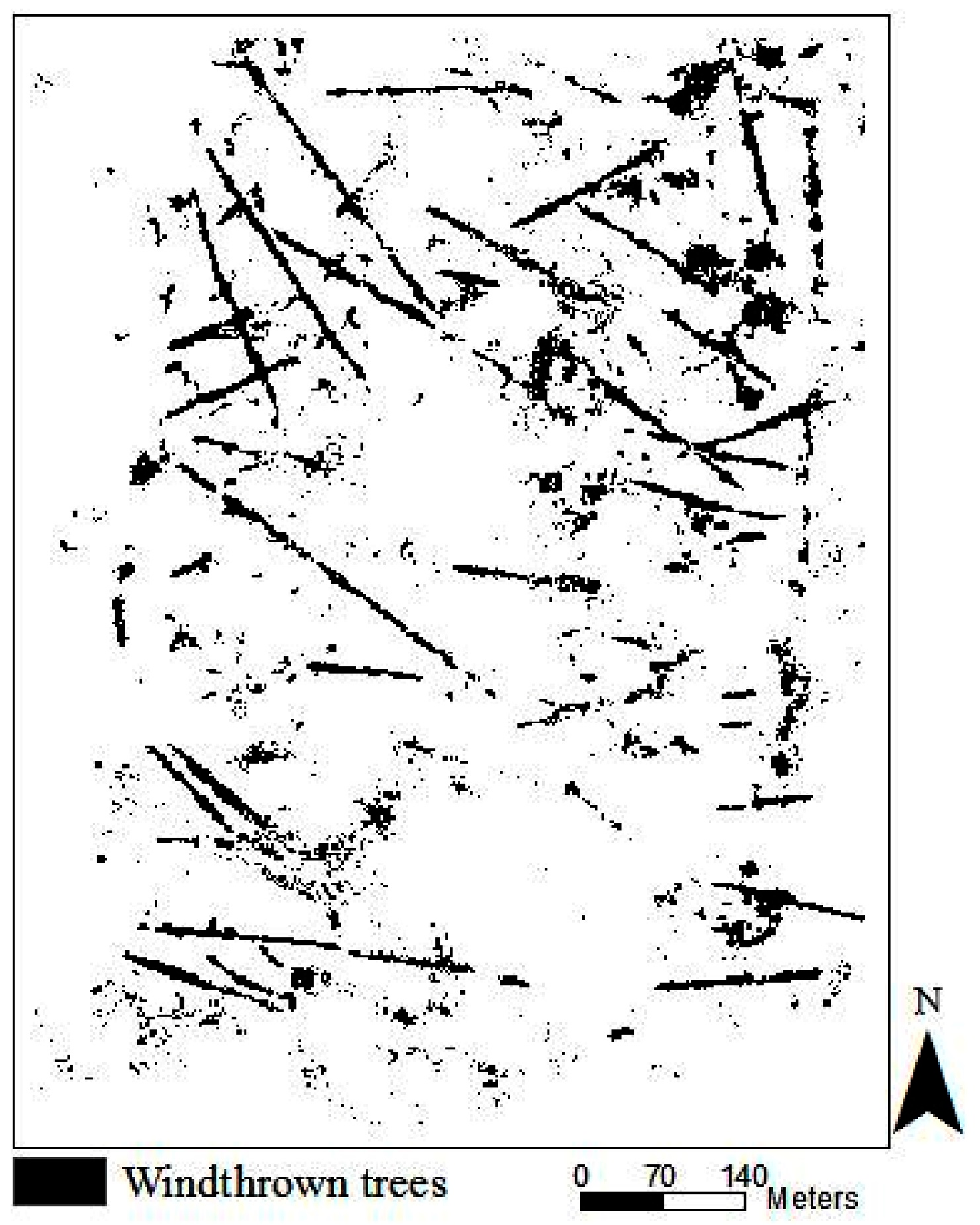

4.1. Coarse Extraction

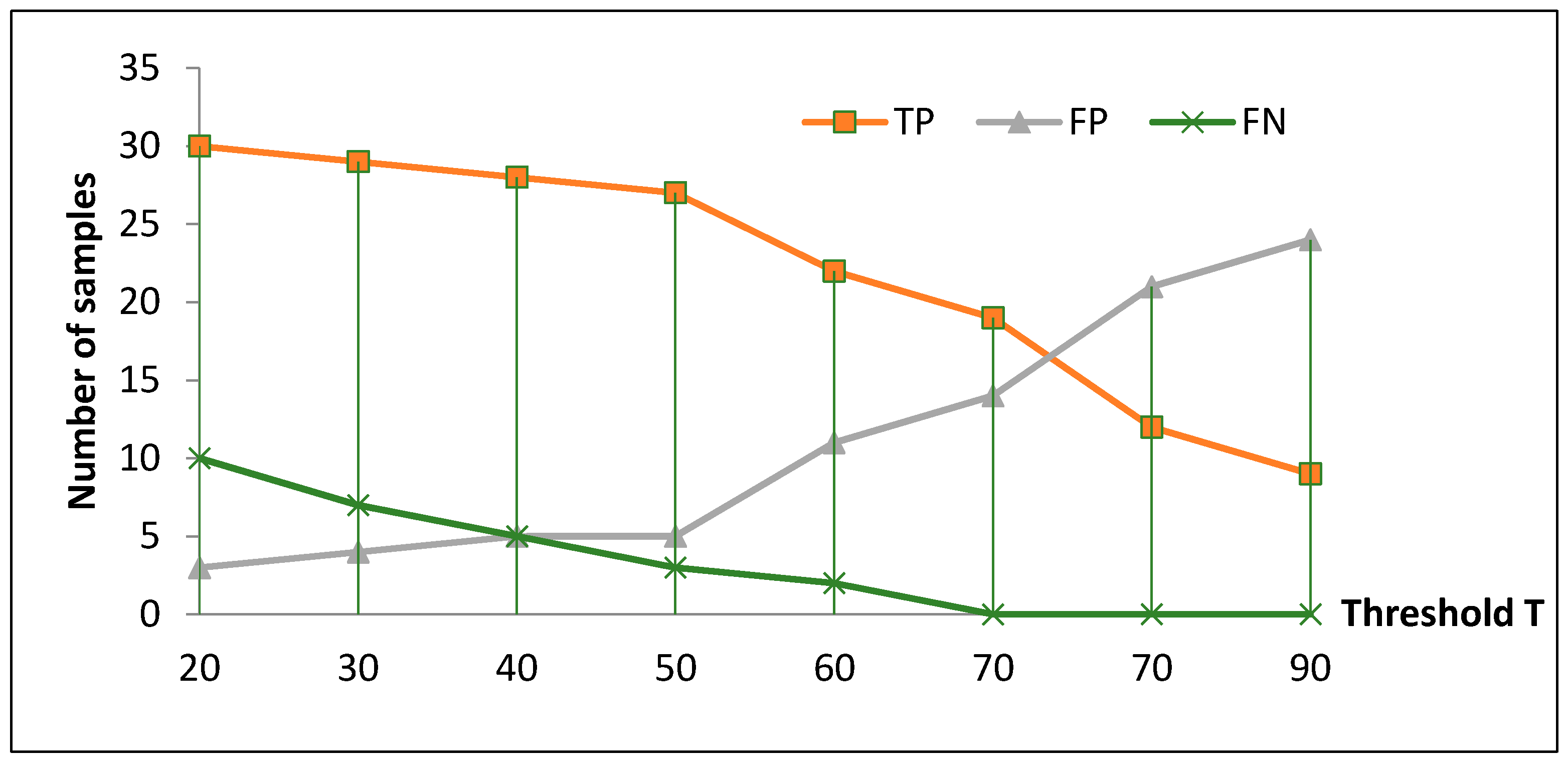

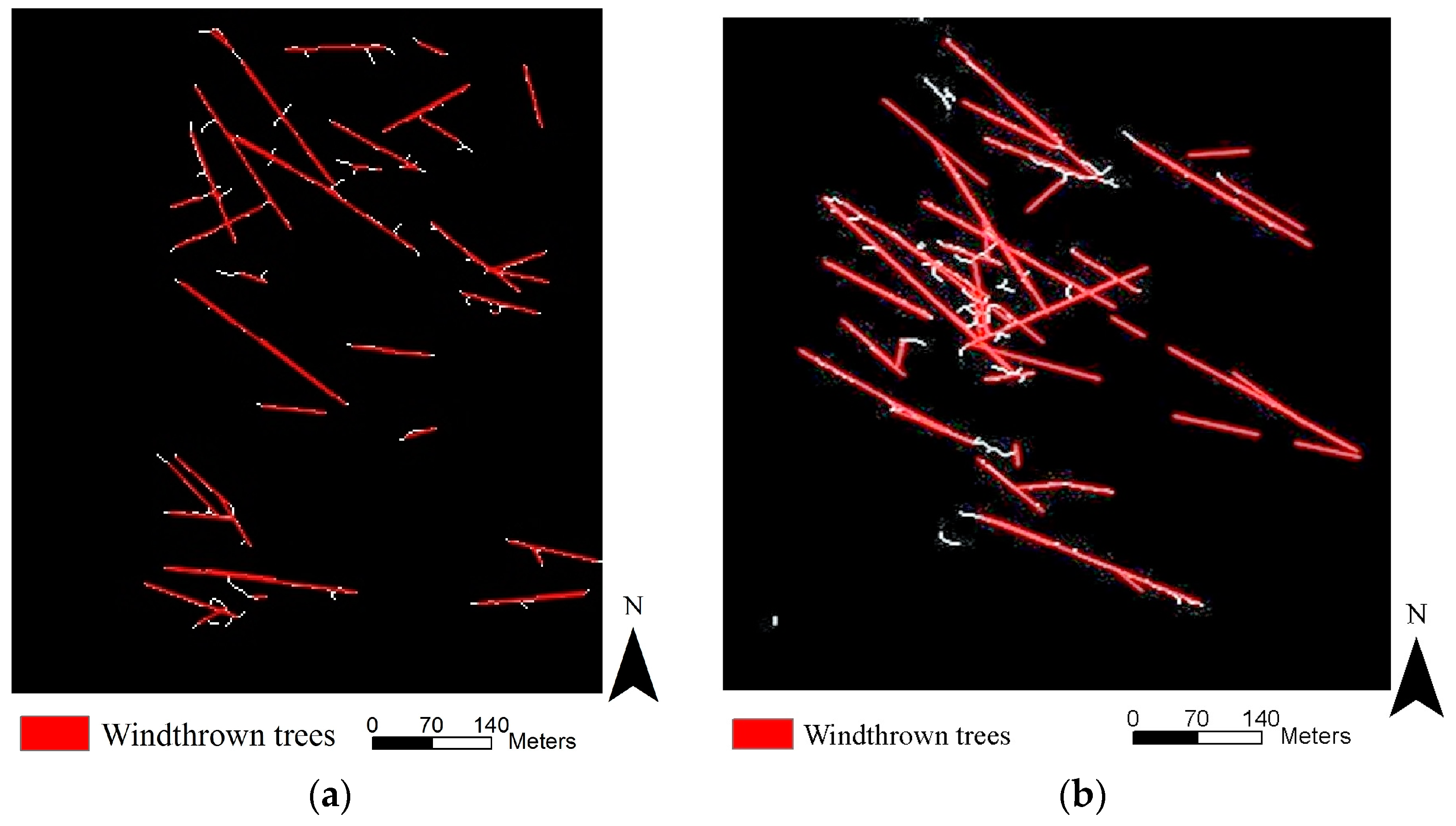

4.2. Fine Extraction

5. Discussion

5.1. Extraction Assessment

5.2. Feasibility Verification

5.3. Comparison with Conventional Methods

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Parameter | Value |
|---|---|
| Platform | Sky-01C Zhong 5 UAV |
| Sensor type | CMOS |
| Camera | Canon EOS 5D Mark II |
| Average flight altitude (above ground) | 500 m |
| Acquisition date | 19 October 2011 |
| Color channels used | Red, Green, Blue |
| Image format | JPEG |
| Image resolution (pixels) | 5616 × 3744 |
| Image quality | Fine |
| Extent of experimental area (pixels) | 320 × 420 |
| Extent of verification area (pixels) | 300 × 400 |

| Class No. | Class Type | Number of Training Samples | Number of Testing Samples |
|---|---|---|---|
| 1 | Windthrown tree patches | 450 | 600 |
| 2 | Non-windthrown tree patches | 1050 | 190 |
| Total | | 1500 | 1700 |

| User's Accuracy | Producer's Accuracy | Overall Accuracy | Kappa Coefficient |
|---|---|---|---|
| 92.66% | 95.24% | 94.48% | 0.86 |
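
The accuracy measures in the table above (per-class accuracies, overall accuracy, and the kappa coefficient) are conventionally derived from a confusion matrix. The following is a minimal sketch of that computation, assuming the standard definitions; the function name `accuracy_summary` and the 2 × 2 counts are hypothetical and are not the confusion matrix of this study, which is not reproduced here.

```python
def accuracy_summary(cm):
    """Summarise a square confusion matrix.

    cm[i][j] is the number of samples whose reference class is i
    and whose predicted class is j.
    Returns (overall accuracy, kappa, producer's accuracies, user's accuracies).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    diag = sum(cm[i][i] for i in range(k))
    row_tot = [sum(cm[i]) for i in range(k)]                        # reference totals
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]   # predicted totals

    overall = diag / n
    # Agreement expected by chance, from the marginal totals
    expected = sum(row_tot[i] * col_tot[i] for i in range(k)) / (n * n)
    kappa = (overall - expected) / (1 - expected)

    producers = [cm[i][i] / row_tot[i] for i in range(k)]  # 1 - omission error
    users = [cm[i][i] / col_tot[i] for i in range(k)]      # 1 - commission error
    return overall, kappa, producers, users


# Hypothetical counts, for illustration only
cm = [[90, 10],
      [5, 95]]
overall, kappa, producers, users = accuracy_summary(cm)
```

Kappa discounts the agreement that the marginal class totals alone would produce, which is why it is lower than the overall accuracy whenever chance agreement is nonzero.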

| Data | Commission (%) | Omission (%) | Completeness (%) | Correctness (%) |
|---|---|---|---|---|
| Experimental area | 7.5 | 24.3 | 75.7 | 92.5 |
| Verification area | 16 | 16 | 84 | 84 |
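
The four indices in the table above are pairwise complementary: commission and correctness sum to 100%, as do omission and completeness. A minimal sketch of how such indices are commonly computed from matched counts follows, assuming the usual definitions (completeness = TP / (TP + FN), correctness = TP / (TP + FP)). The function name `extraction_indices` and the counts are hypothetical; the counts were chosen only so that the result reproduces the verification-area percentages, and the actual tree counts are not given here.

```python
def extraction_indices(tp, fp, fn):
    """Return commission, omission, completeness and correctness in percent.

    tp: extracted trees that match a reference tree
    fp: extracted trees with no matching reference tree
    fn: reference trees that were not extracted
    """
    completeness = 100.0 * tp / (tp + fn)
    correctness = 100.0 * tp / (tp + fp)
    return {
        "commission": 100.0 - correctness,
        "omission": 100.0 - completeness,
        "completeness": completeness,
        "correctness": correctness,
    }


# Hypothetical counts, for illustration only
indices = extraction_indices(tp=84, fp=16, fn=16)
```

Because the two error indices are simple complements of the two quality indices, reporting either pair carries the same information.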

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Duan, F.; Wan, Y.; Deng, L. A Novel Approach for Coarse-to-Fine Windthrown Tree Extraction Based on Unmanned Aerial Vehicle Images. Remote Sens. 2017, 9, 306. https://doi.org/10.3390/rs9040306