3D Digitisation of Large-Scale Unstructured Great Wall Heritage Sites by a Small Unmanned Helicopter

Abstract

1. Introduction

2. Materials and Methods

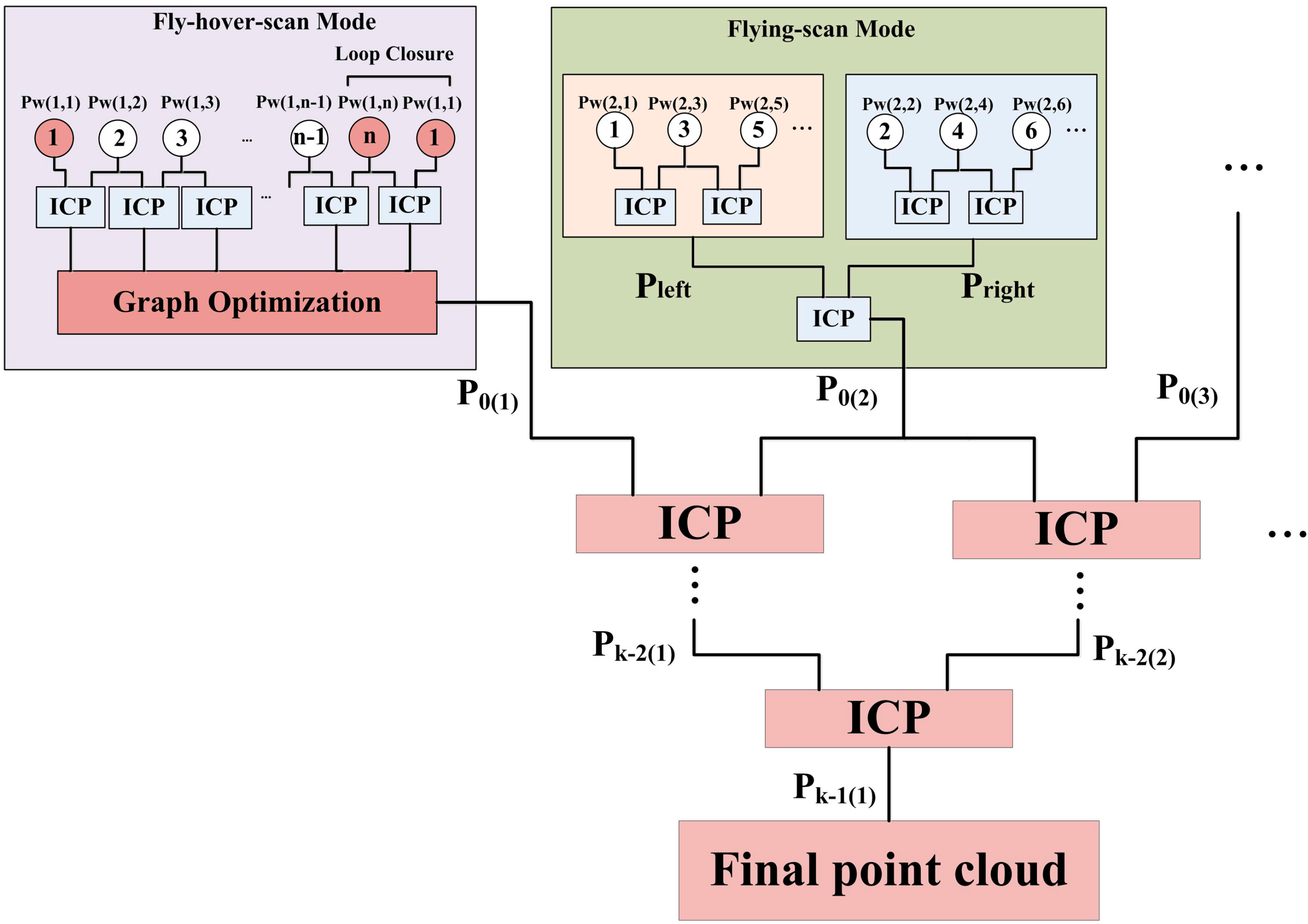

2.1. Airborne Hardware System and Processing Software

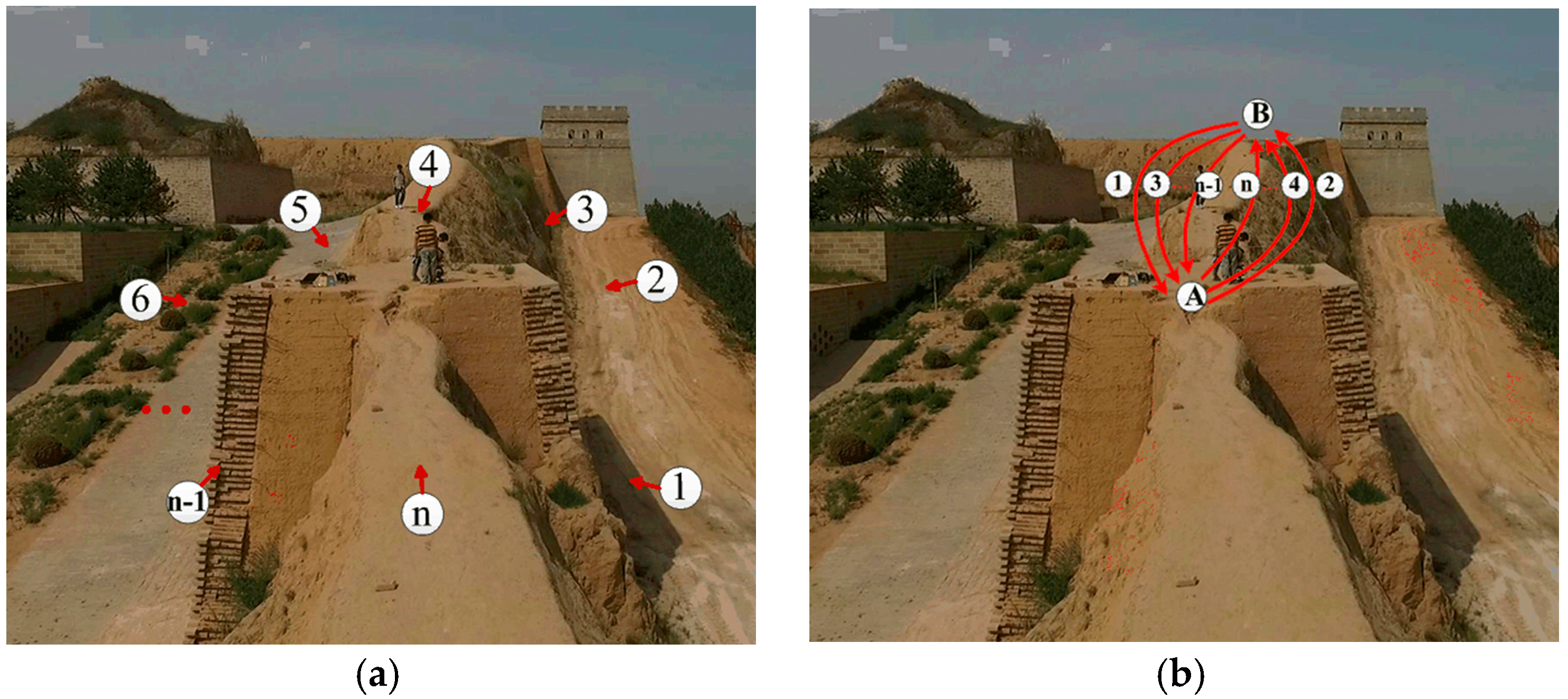

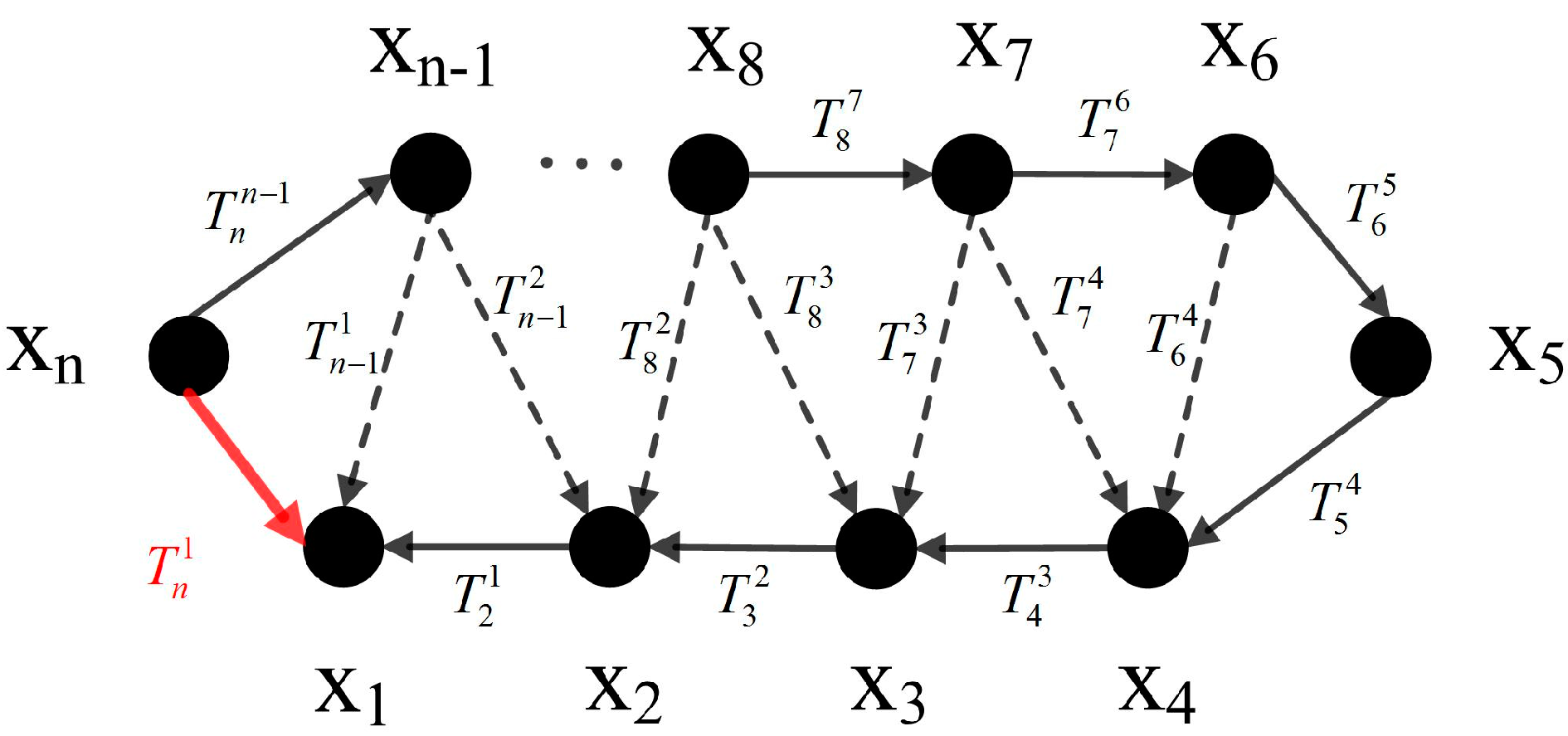

2.2. Hierarchical Optimization Framework

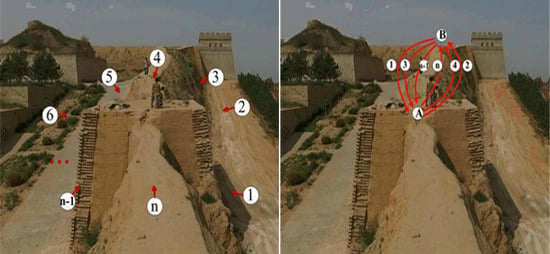

2.3. Fly-Hover-Scan Mode

2.4. Flying-Scan Mode

3. Experimental Results

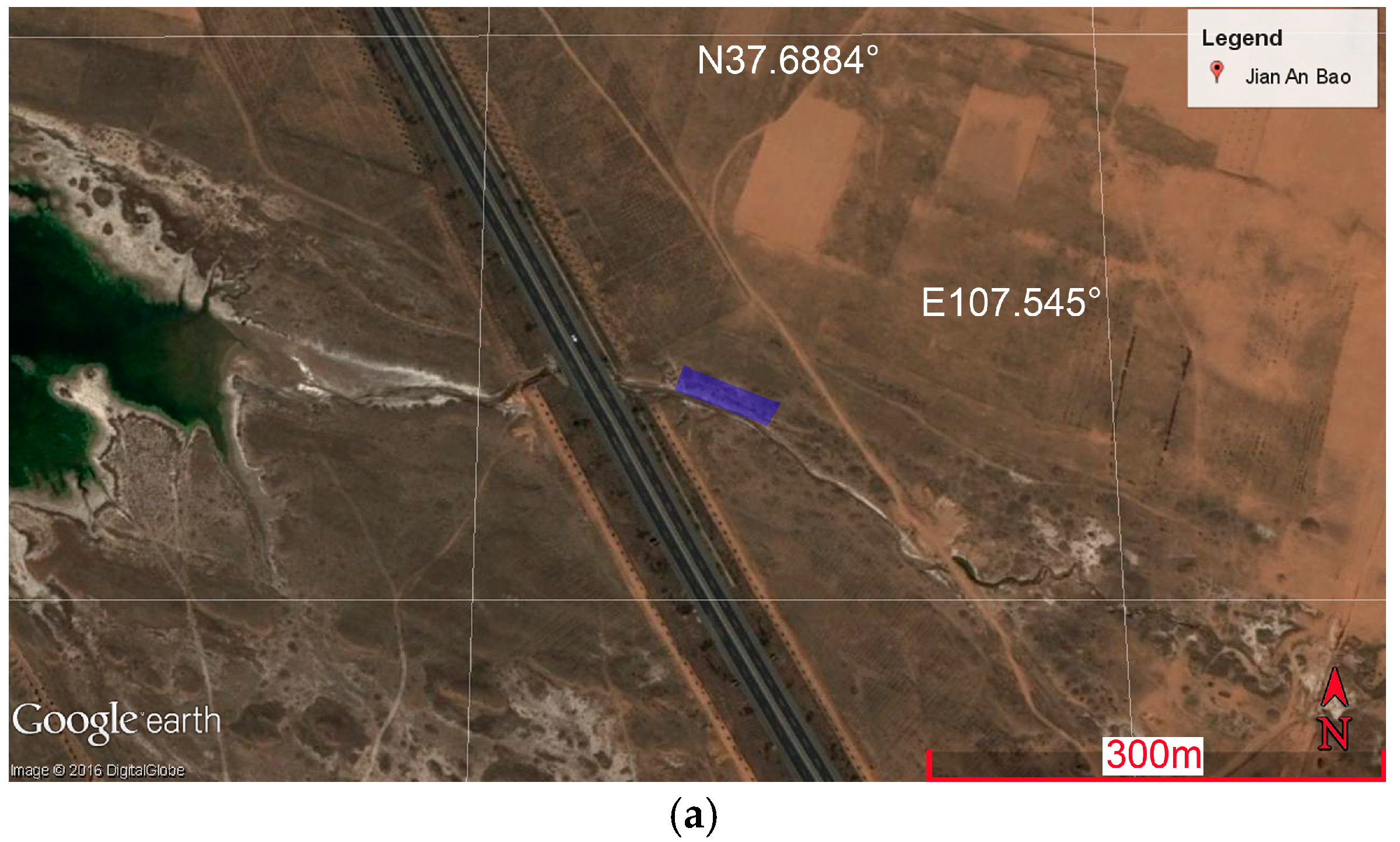

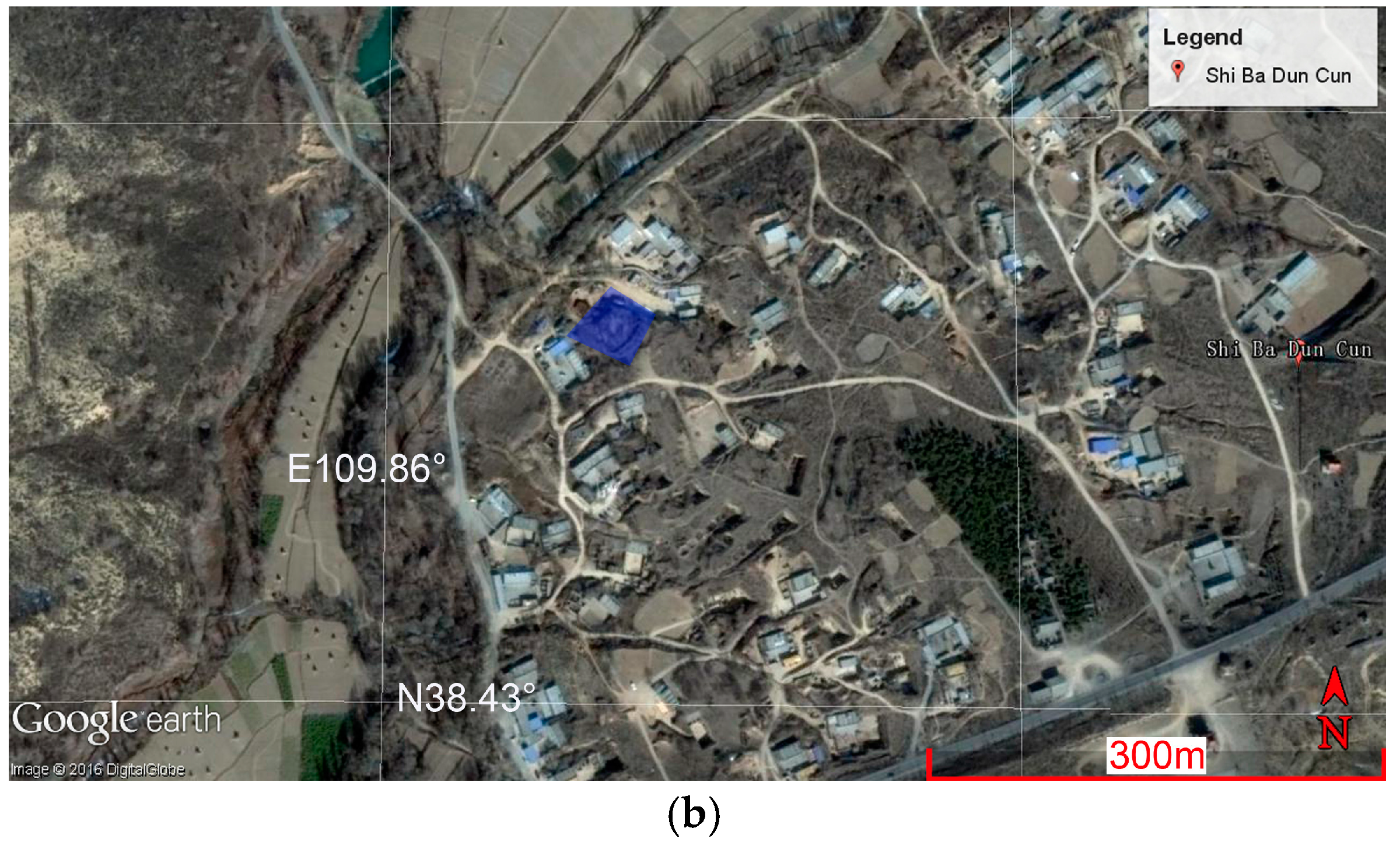

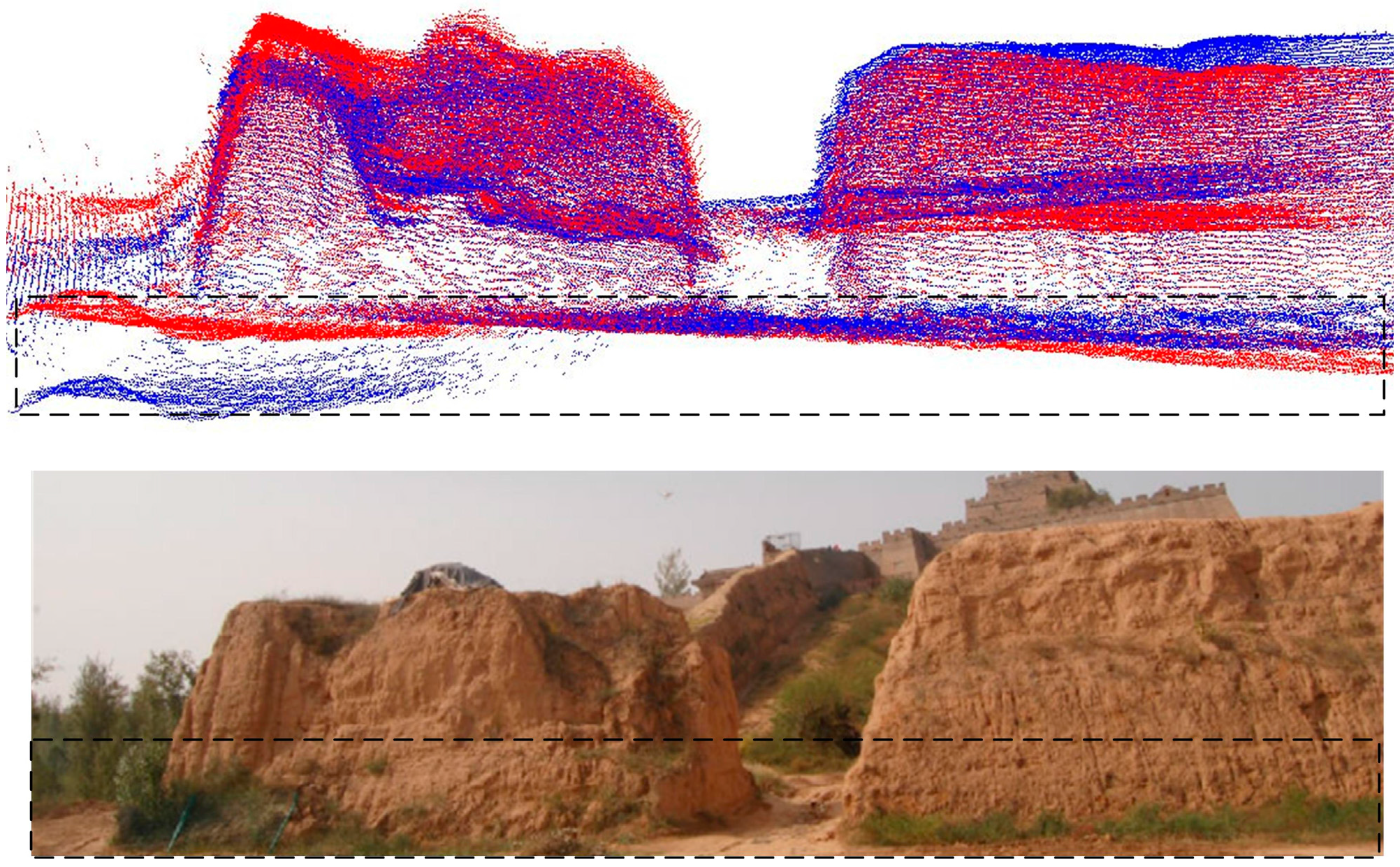

3.1. Study Area

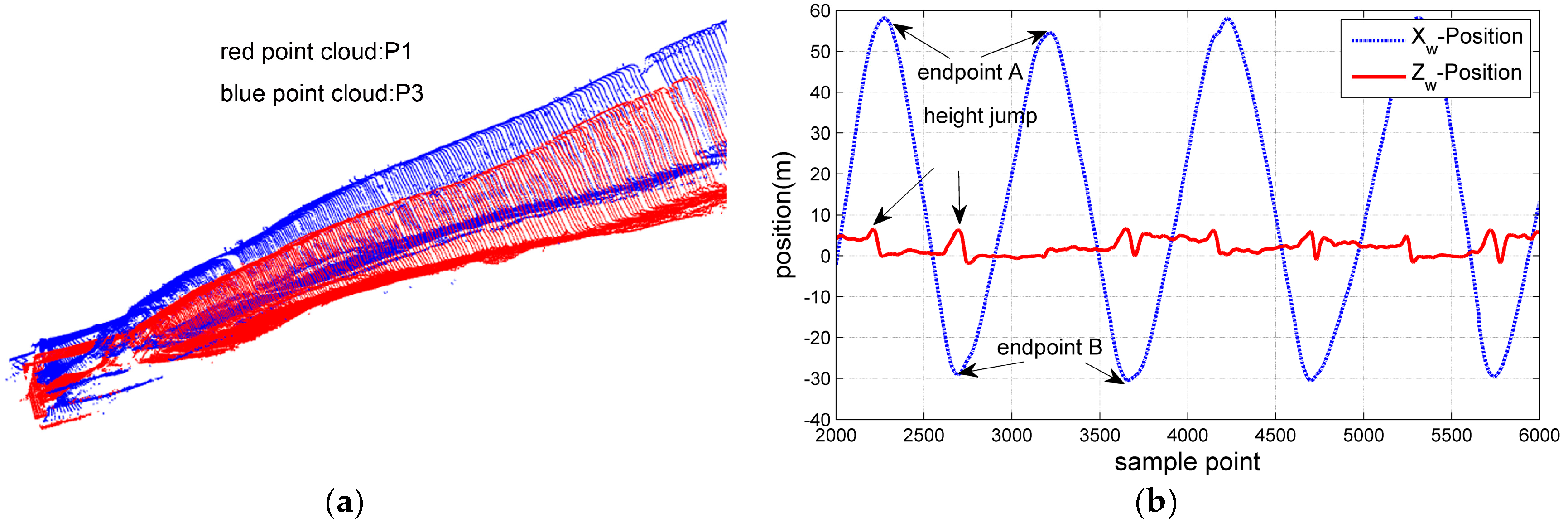

3.2. Optimization for the Fly-Hover-Scan

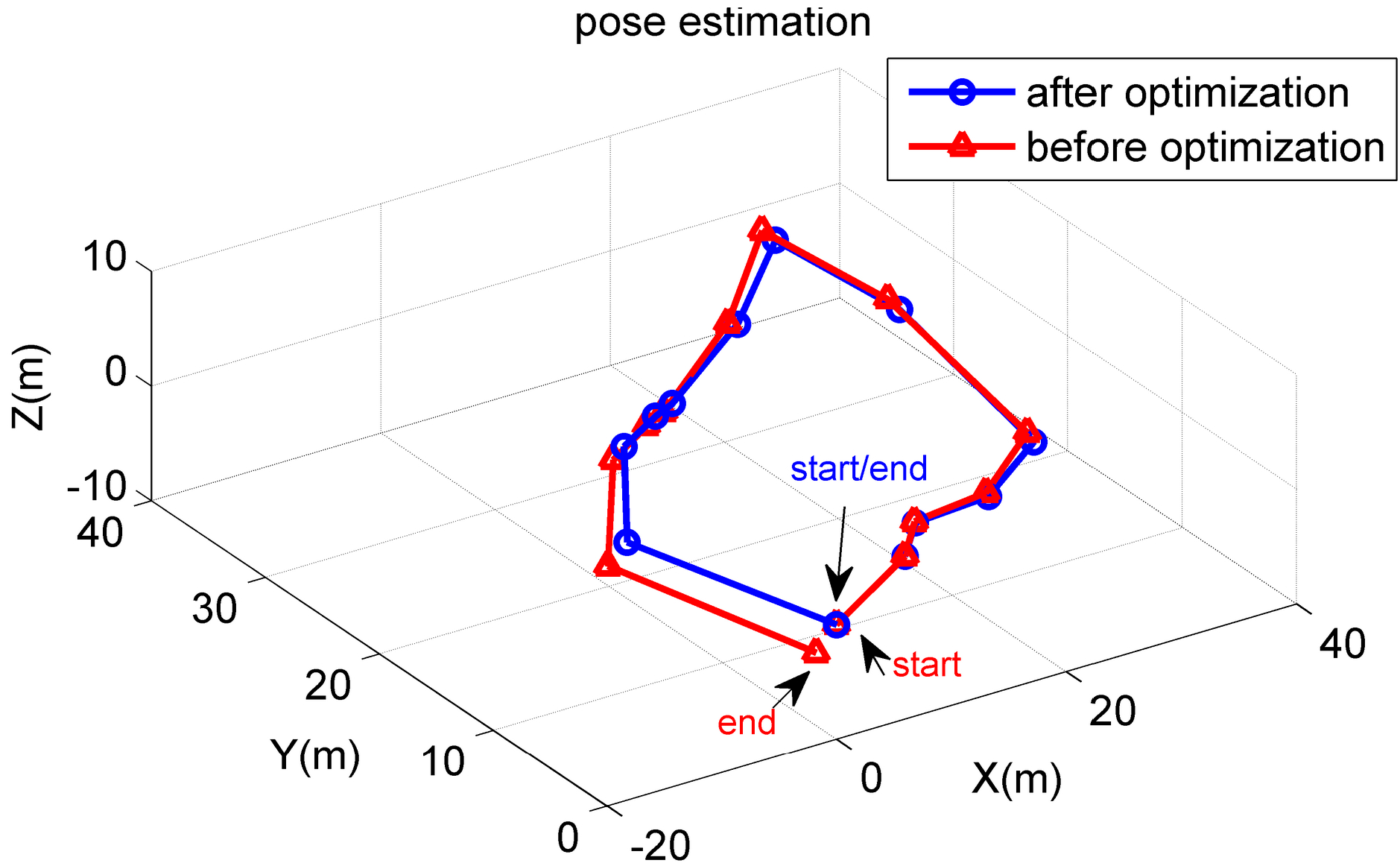

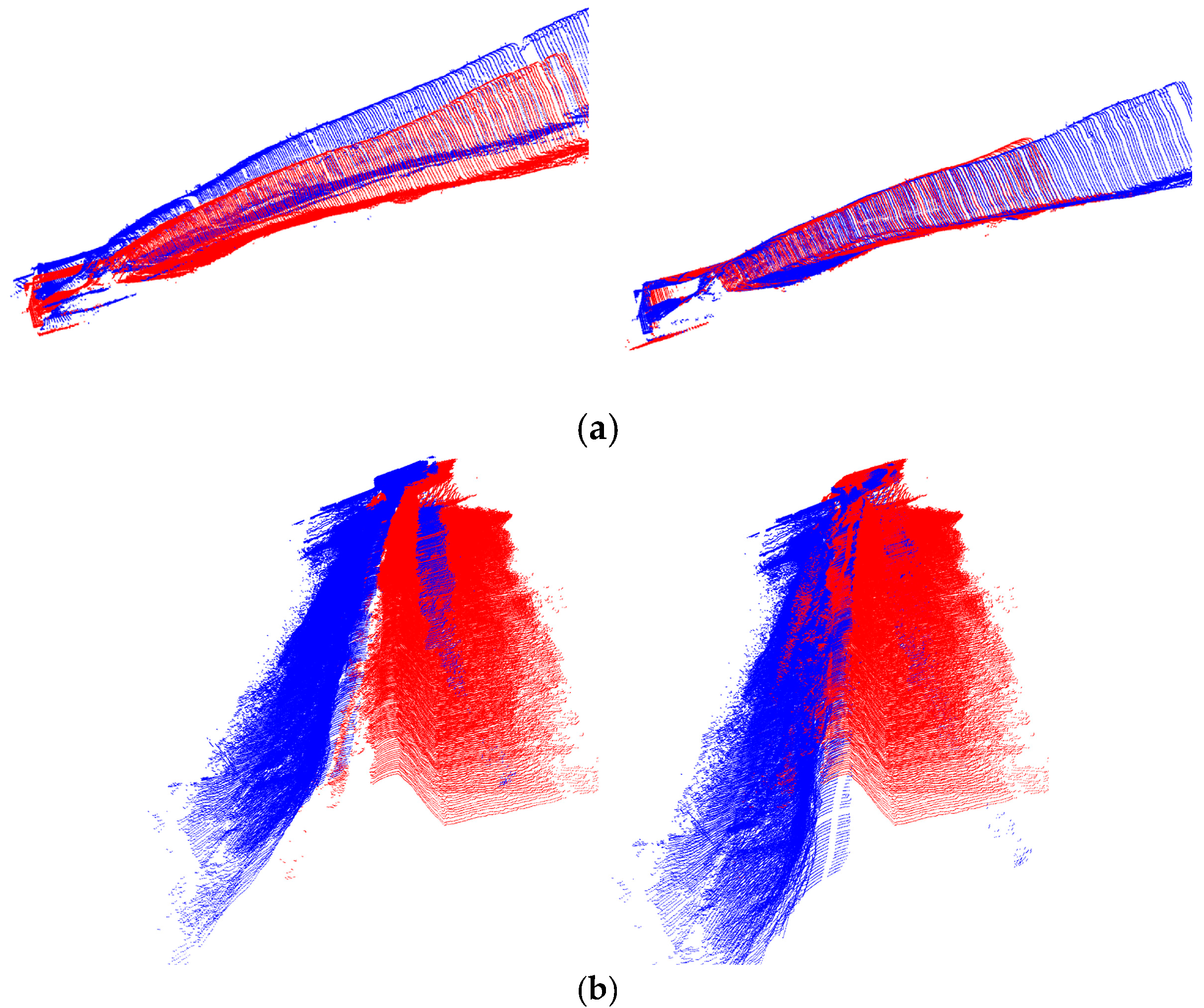

3.3. Optimization for the Flying-Scan

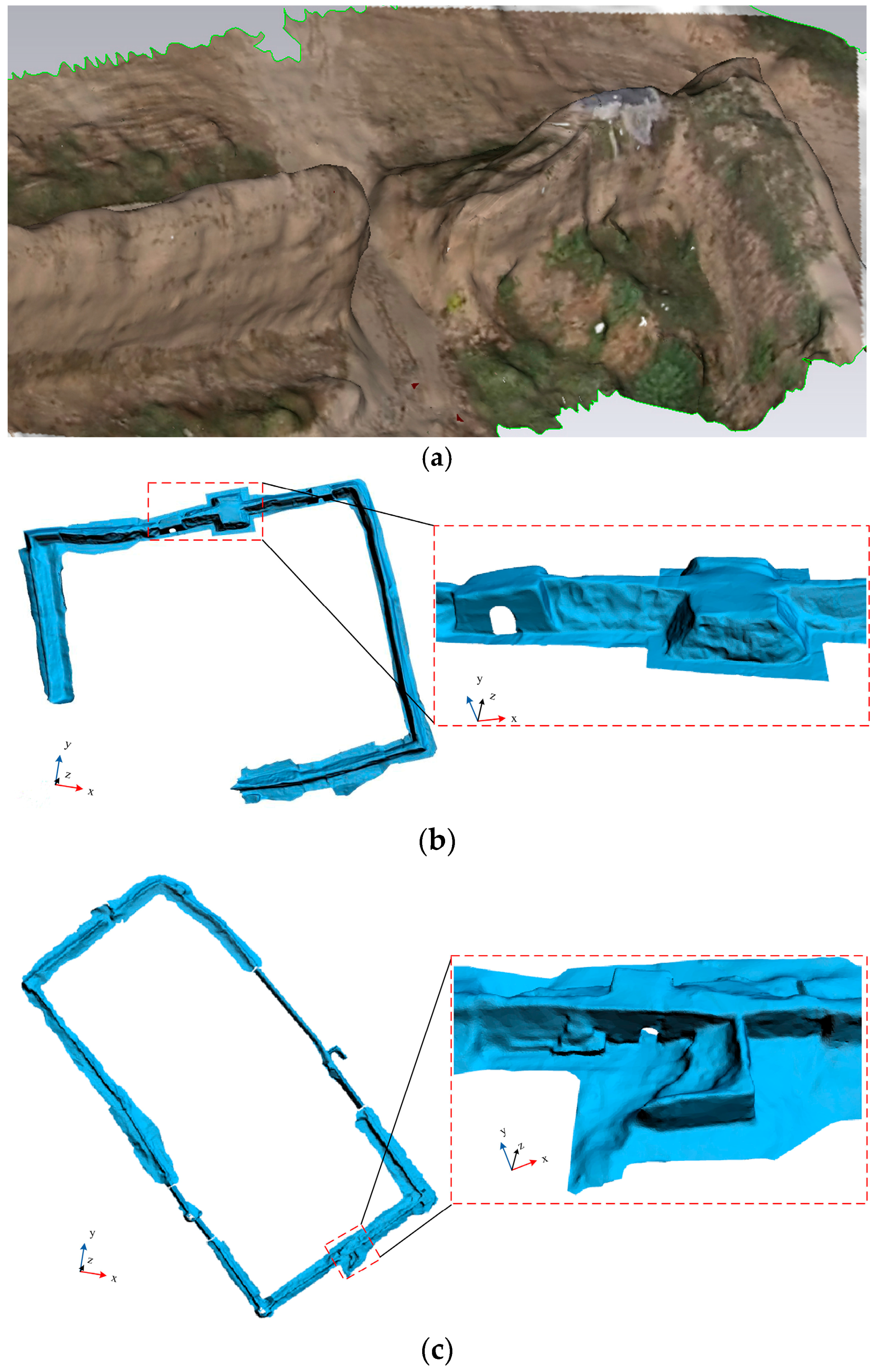

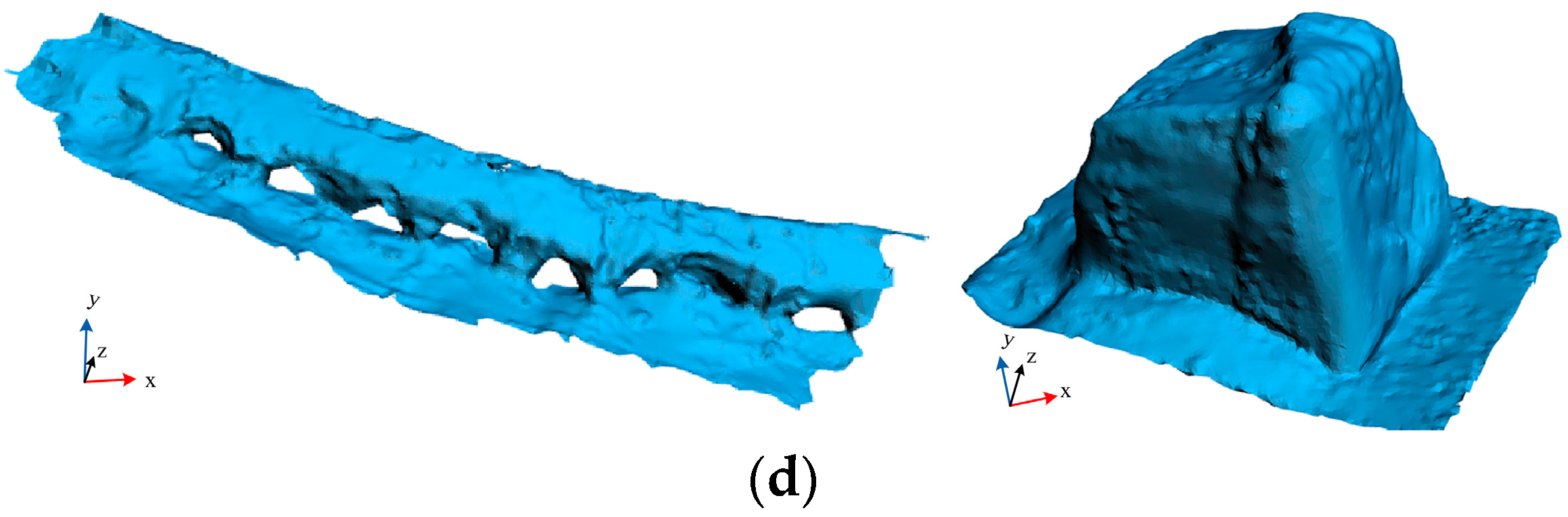

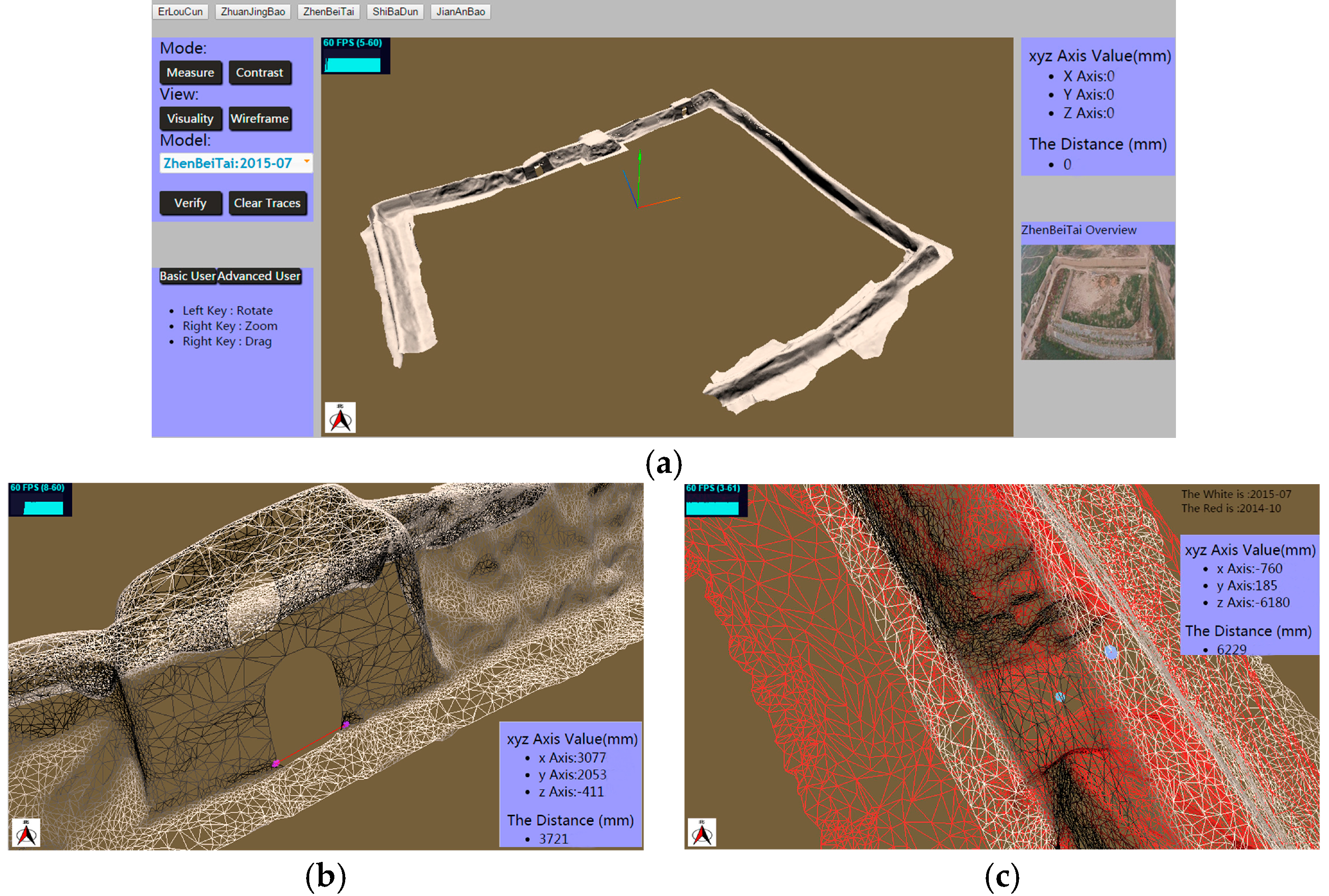

3.4. 3D Model of the Great Wall Heritage Site

4. Discussions

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | Value |
|---|---|
| Detection range | Max range 0.1–30 m, 270° |
| Accuracy | 0.1–10 m: 30 mm; 10–30 m: 50 mm |
| Angular resolution | 0.25° (360°/1440) |
| Scan speed | 25 ms/scan |
| Weight | 370 g |
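As a rough illustration of what the scanner figures above imply, the following Python sketch derives the number of range samples per sweep and the raw sample rate from the 270° detection angle, the 0.25° angular resolution, and the 25 ms scan period listed in the table. The function name `scan_throughput` and the assumption that both end beams of the sweep are sampled (hence the `+ 1`) are illustrative and are not taken from the article.

```python
def scan_throughput(fov_deg=270.0, step_deg=0.25, scan_period_s=0.025):
    """Return (samples per sweep, sweeps per second, samples per second)."""
    # Assumption: the first and the last beam of the sweep are both sampled.
    samples_per_scan = round(fov_deg / step_deg) + 1
    # One sweep every scan_period_s seconds.
    scans_per_second = 1.0 / scan_period_s
    # Upper bound on raw range samples; invalid returns are not discounted.
    samples_per_second = samples_per_scan * scans_per_second
    return samples_per_scan, scans_per_second, samples_per_second


samples, rate_hz, samples_per_s = scan_throughput()
print(f"{samples} samples/sweep, {rate_hz:.0f} sweeps/s, {samples_per_s:.0f} samples/s")
```

The result is an upper bound on the raw data rate only; the number of points that survive filtering and registration is likely to be lower.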

| Sensors | Performance |
|---|---|
| Global positioning system (GPS) receiver | Horizontal position accuracy 5 m |
| Barometric altimeter | Resolution: 10 cm; Accuracy: ±1.5 mbar |
| Inertial measurement unit (IMU) | Gyroscopes: bias stability 0.007 °/s; Accelerometers: bias stability 0.2 mg |
| Digital compass | Heading: accuracy 1°, resolution 0.1°; Pitch and roll: accuracy 0.7°, resolution 0.1° |

| | Zhenbei Tai | Jianan Bao | Erlou Cun | Shiba Dun Cun |
|---|---|---|---|---|
| Number of points | 442,863 | 870,909 | 35,165 | 25,777 |
| Number of triangles | 885,726 | 1,623,443 | 68,338 | 50,516 |
| Spatial resolution (average) | 20.2 cm | 22.4 cm | 23.3 cm | 21.6 cm |
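A quick consistency check on the mesh statistics above: for a large triangulated surface with comparatively few boundary edges, the triangle count is typically close to twice the vertex count, which follows from Euler's formula. The sketch below computes this ratio for each site from the tabulated counts. Treating every listed point as a mesh vertex is an assumption made for illustration, and the dictionary name `models` is hypothetical.

```python
# Point and triangle counts copied from the table above.
models = {
    "Zhenbei Tai": (442_863, 885_726),
    "Jianan Bao": (870_909, 1_623_443),
    "Erlou Cun": (35_165, 68_338),
    "Shiba Dun Cun": (25_777, 50_516),
}

# Assumption: each point is a vertex of the triangulated model.
ratios = {
    site: triangles / points
    for site, (points, triangles) in models.items()
}

for site, ratio in ratios.items():
    print(f"{site}: {ratio:.3f} triangles per point")
```

Ratios well below 2 would suggest open boundaries or holes in the surface, so the check can serve as a coarse indicator of mesh completeness.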

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Deng, F.; Zhu, X.; Li, X.; Li, M. 3D Digitisation of Large-Scale Unstructured Great Wall Heritage Sites by a Small Unmanned Helicopter. Remote Sens. 2017, 9, 423. https://doi.org/10.3390/rs9050423
