1. Introduction

Urbanization is an inevitable trend of socioeconomic development, especially in developing countries like China, and is reflected in the spread of urban built-up areas and the evolution of city structure. The “urban village”, a typical region formed by the rapid urbanization of cities, appears in both the outskirts and downtown areas of many major Chinese cities, such as Guangzhou and Shenzhen. Although urban villages in China and mega-slums in other developing countries have different causes, they share similarities in structure and pattern: compact layouts, irregular distributions, and various building types within limited spaces [1,2,3]. Therefore, studying the urban context of these areas can further the understanding and investigation of urbanization and socioeconomic development in developing countries.

As the foremost element of urban landscape, urban buildings are of great research importance in urban management, landscape architecture, and social economy [4]. Fast and accurate acquisition of the spatial distribution, structure, and type information of urban buildings is a principal and fundamental requirement for urban studies in order to analyze and understand characteristics, patterns, and driving forces of the urbanization process, as well as facilitate urban planning, urban management, and decision-making [5].

Remote sensing technology makes it possible to acquire building information rapidly and accurately from different perspectives, scales, and dimensions. Fast-developing high-resolution images (HRI) and Light Detection and Ranging (LiDAR) provide ideal data sources for remote sensing building extraction. HRI can provide detailed spatial information including texture, color, and shape, as well as certain spectral information [6]. Its lack of height information can be compensated for by LiDAR data, which capture buildings in three dimensions but carry limited spectral information. Naturally, integrating HRI and LiDAR data is an ideal option for extracting building information, and a large number of papers have been published in this area. Most of these studies focus on the extraction of buildings’ 3D information. For example, Nevatia integrated LiDAR point clouds and the building outlines from HRI to extract 3D building information [7]. Rottensteiner built a hierarchical robust difference model to construct digital surface models for extraction and projected roof edge models generated from LiDAR data back to aerial images to further increase the accuracy [8,9]. Chen et al. built a set of point clouds for each building in LiDAR and then used these sets to detect boundaries and directions of buildings in HRI [10]. Antje Thiele also pointed out in her work that integrative use of multi-source data can significantly improve building reconstruction quality [11]. These studies have shown the superiority of integrated HRI and LiDAR data over single data sources in extracting 3D building information. Some researchers have also focused on ways to improve classification accuracy via additional features and information. One notable research direction is to use super-object information for remote sensing image extraction and classification. Super-object information refers to the properties of the segment within which a pixel or sub-object is located, and its connection to that segment [12]. As an extension of object-oriented classification, the super-object information method has been shown to facilitate accurate HRI classification [12,13,14].

Compared to the great progress in 3D building information extraction, it is only recently that scientists have begun to show interest in classifying building types based on remote sensing data and techniques. Existing building type classification is usually performed to provide key information for estimating the population in a certain area [15]. Abellán built decision trees to classify different residential houses [16] according to the texture and shape of buildings. Block classification was employed by Qiu to distinguish single-, double-, and multi-floor family buildings through the building volumes from LiDAR data [17]. Some researchers used extracted morphological metrics to differentiate building types and then used the results for population estimation [18]. Yan et al. used phase analysis and 3D information to classify buildings according to their orientation, instead of their types [19]. These studies are meaningful explorations. However, most of them simply used background information or height information for classification and could only be applied to specific regions. Additionally, their building type classification accuracy is not yet satisfactory, which may in turn affect the accuracy of population estimates. In order to fully utilize the advantages of both LiDAR and HRI data, it is necessary to further explore topics such as the decisive features of building type classification, approaches to acquire and integrate more sources of data, and the best performing classification model.

Based on integrated LiDAR and HRI data, we propose a building type classification scheme for urban studies of urban villages or mega-slums in developing countries. This scheme integrates multiple methods in several steps. First, a progressive morphological filter, which was first developed by Zhang et al. to detect non-ground LiDAR measurements [20], is used in this study to identify and extract buildings from the grayscale grid image of elevations converted from the original LiDAR points. This is realized by gradually increasing the window size of the filter and using elevation difference thresholds. Second, data integration is performed by simply adding the grid layer of extracted buildings to the HRI data as a new band. Third, multi-resolution object-oriented segmentation is performed to segment the integrated LiDAR and HRI data at different scales. The object-based image analysis approach segments an image, usually HRI, into relatively homogeneous “segments”, which can provide rich spatial and contextual information, such as size, shape, texture, and topological relationships, for accurate classification [21]. Multi-resolution object-oriented segmentation can produce a hierarchy in which segments generated at a fine scale are nested inside segments generated at coarser scales. Spectral, texture, size, and shape information of the larger segments, referred to as super-objects, can be assigned to their sub-objects, i.e., the smaller segments nested in them. Johnson and Xie have found that the classification accuracy of HRI can be significantly improved by incorporating super-object information [12]. Therefore, we incorporated super-object information in the segmentation process of the integrated LiDAR and HRI data to provide more information for classification. Finally, instead of using traditional supervised or unsupervised classification methods, we used a random forest algorithm to classify buildings into specific types based on segmentation results along with their super-object information. The random forest classifier is an ensemble classifier that uses a random subset of the input variables and a bootstrapped sample of the training data to perform a decision tree classification [22]. It has been widely used in many areas and has been shown to perform quite well for classifying remote sensing images [23,24]. Based on the segmentation-based classification results, the dominant building type of each single building is then calculated using the LiDAR building footprints. In order to demonstrate the necessity of integrating LiDAR and HRI data, as well as the necessity of incorporating super-object information during classification, we used different data combinations to extract and classify buildings in the study area and compared the results. A comparison with another classification method, namely the support vector machine (SVM), was also included. The proposed scheme was validated by applying it to a different area.

2. Data

The remotely sensed data used in this research include LiDAR point cloud data and high spatial resolution orthoimagery data, both collected on 19 April 2014. Two areas are chosen in this study, one as the study area and the other as a site to validate the proposed method. The LiDAR data were produced at a point spacing of 0.1 m, with an accuracy of 15 cm. The highest point in the study area is at an absolute altitude of 26.73 m above mean sea level and the lowest point is at an absolute altitude of 14.2 m. The altitude of the highest point in the validation district is 57 m. The Digital Orthophoto Map products were elaborated and corrected from raw aerial photos captured by airplanes, and have three bands (red, green, and blue) with a spatial resolution of 0.1 m. Both the LiDAR data and the orthoimagery data were transformed into the Xi’an 1980 datum.

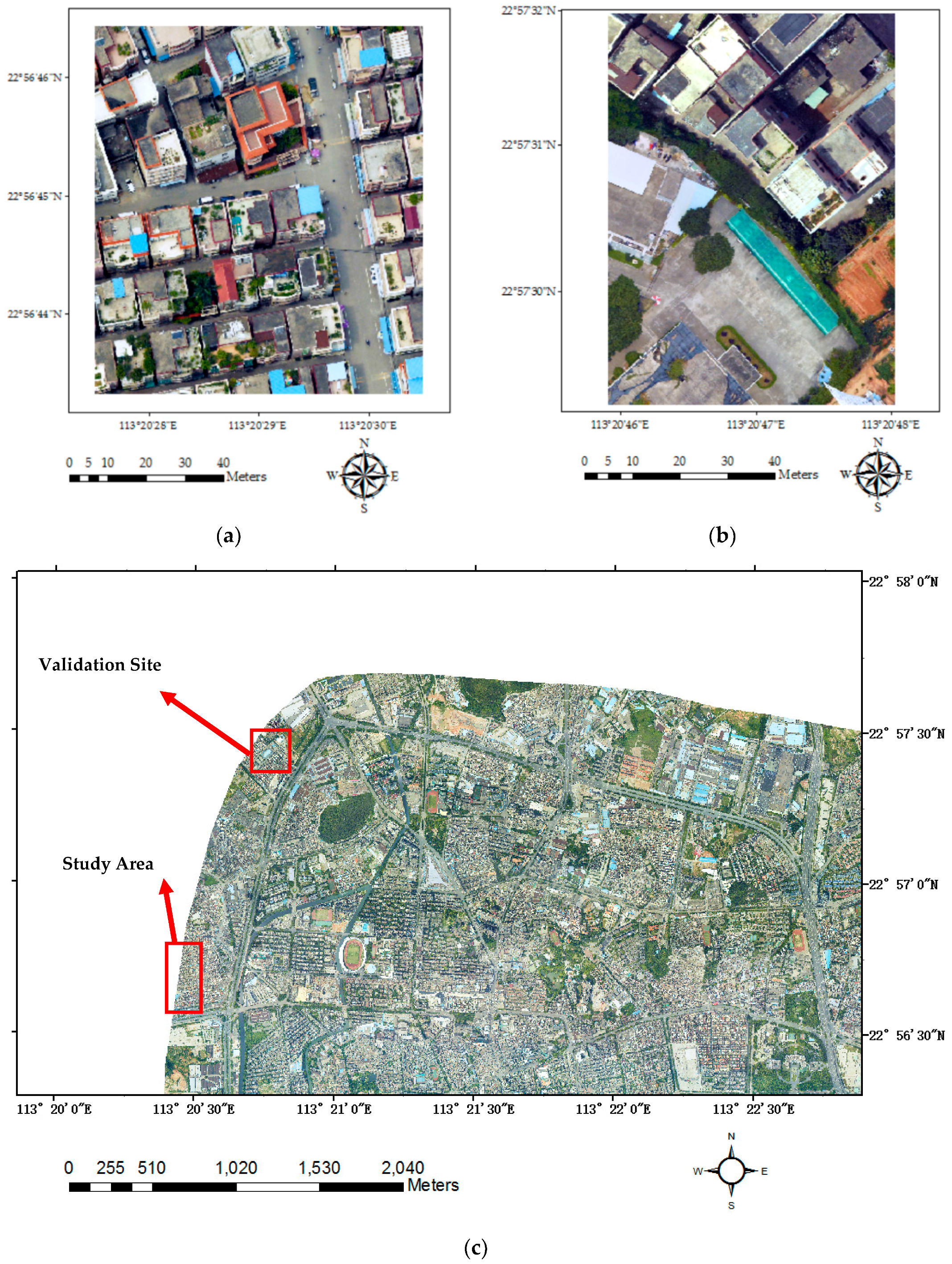

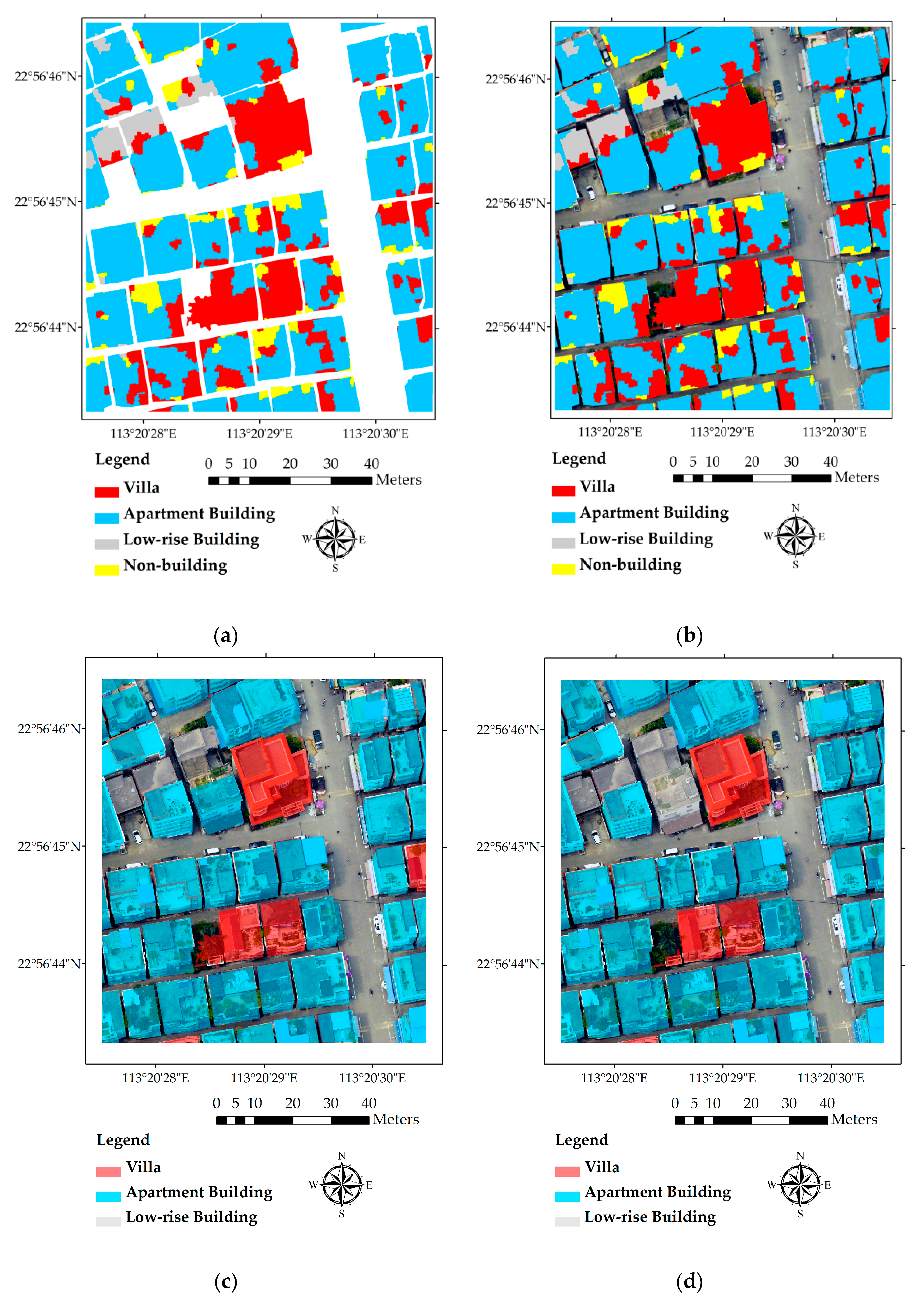

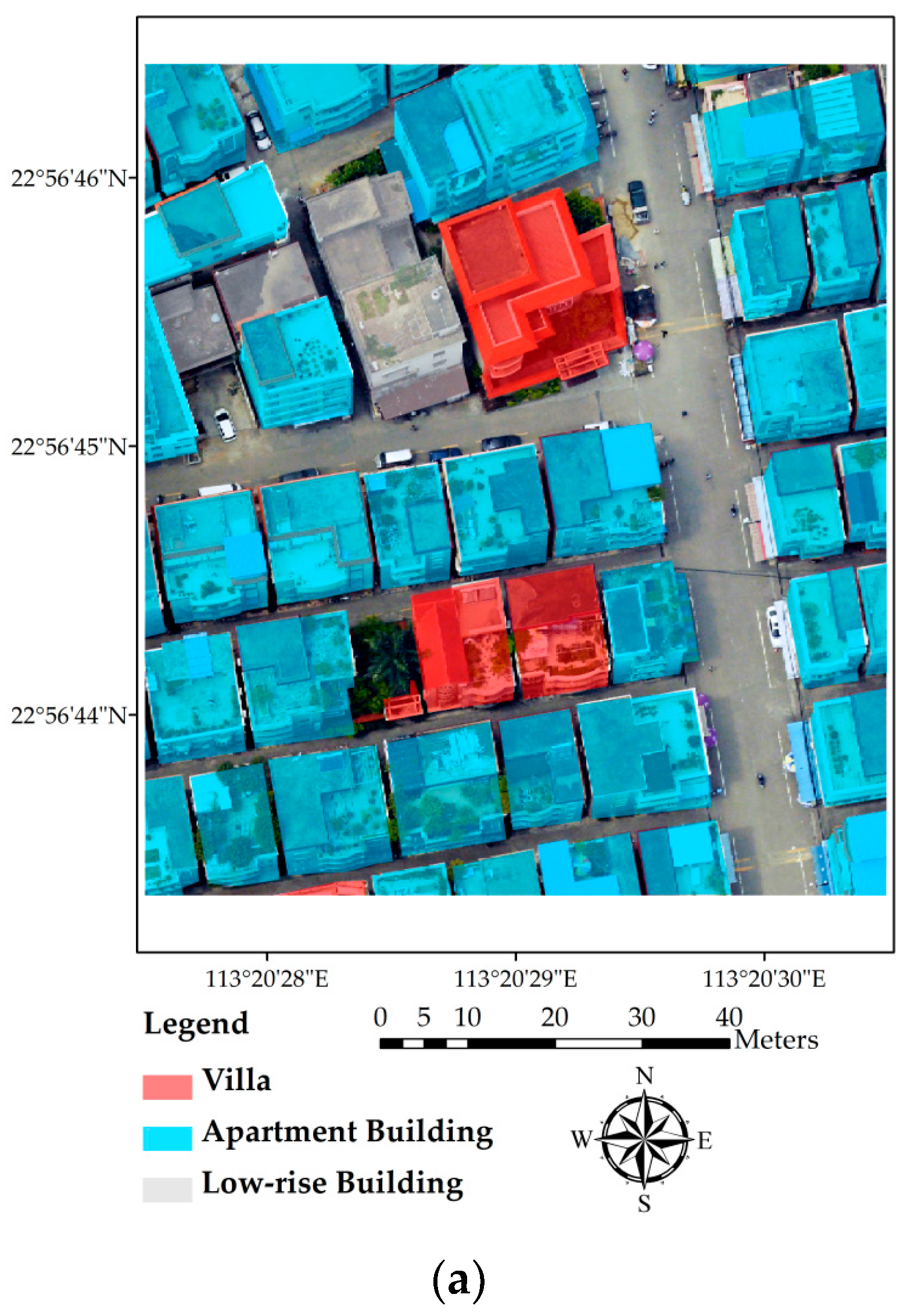

The study area we selected is located at Shiqiao street, around the downtown area of Panyu District, Guangzhou, Guangdong province, China (see

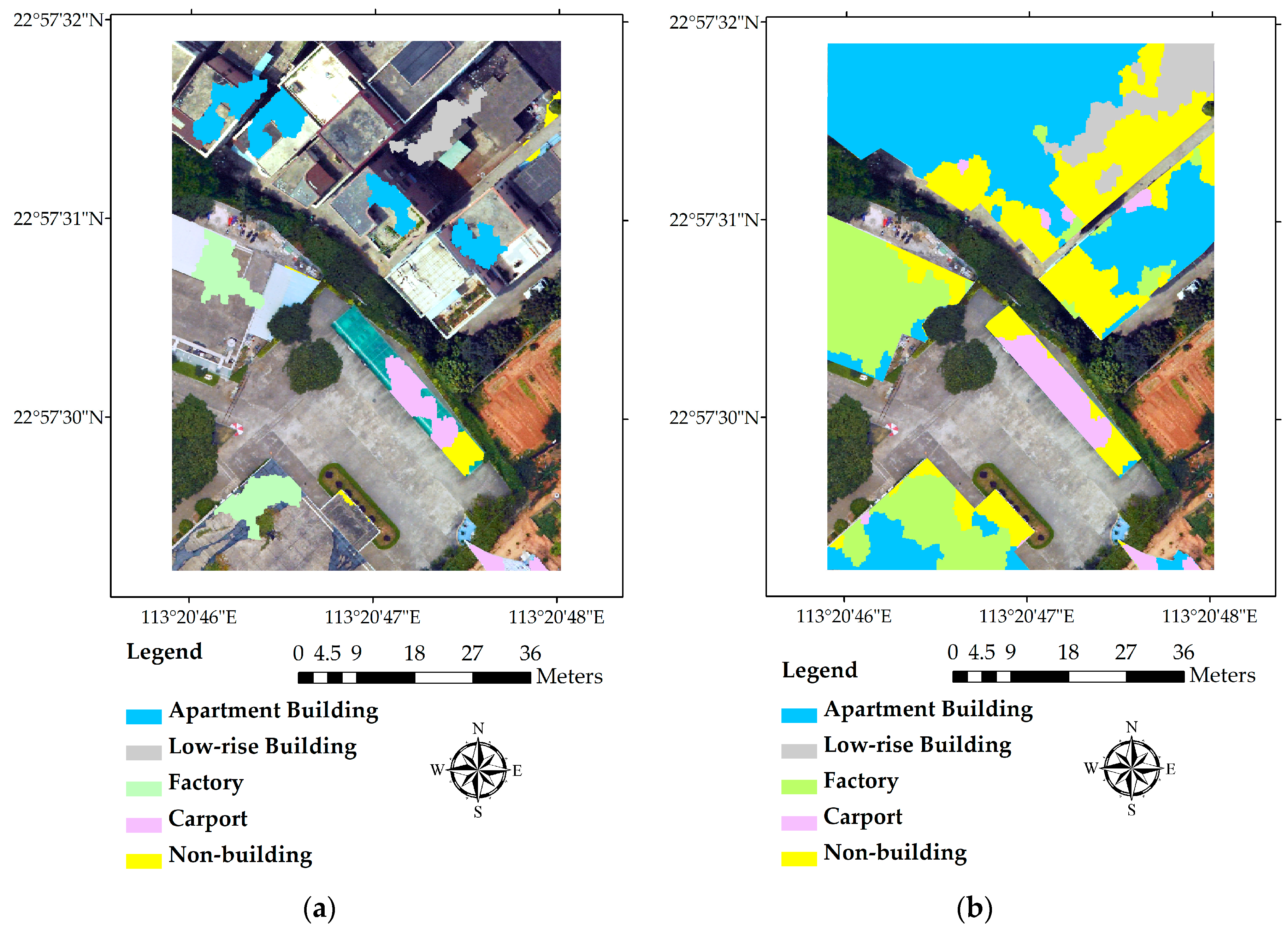

Figure 1a). Buildings are the dominant landscape feature in this area, with some trees and green plants scattered among them. Other ground objects in this area include roads, driveway, parking lots, and cars. Buildings in this area are of different types. Most of them are apartment buildings; some are villas; a few others are low-rising buildings. We also selected another area in the same administrative district (

Figure 1b) to validate our proposed method, which not only includes building types mentioned above, but also has some large factory buildings and car ports. Both of these two areas are within urban villages, surrounded by modern urban constructions. Differing from buildings in ordinary urban or rural areas, buildings in urban villages or slums are usually densely distributed and built in various shape, structure styles, roof/balcony styles, and building materials. Some buildings even have green plants grown on their roofs or balconies. All of these characteristics make it a difficult task to extract and classify buildings in the urban villages or slums. In this study, we especially chose these two areas to test the adaptability of our proposed building classification scheme for the sake of providing a globally applicable method of building classification for urban villages and slums in developing countries.

3. Methods

Building height is one of the most important and effective indicators to identify building types. However, it has not been fully utilized in building type classification studies [

25]. In this study, building height information is firstly extracted from LiDAR data using a morphological progressive filter, with the purpose of minimizing the interference of non-building areas in building classification. The extracted building height footprints are then integrated with HRI data as a supplementary band, so as to fully utilize the height information from LiDAR, as well as spatial, textural, and spectral information from HRI [

26,

27,

28,

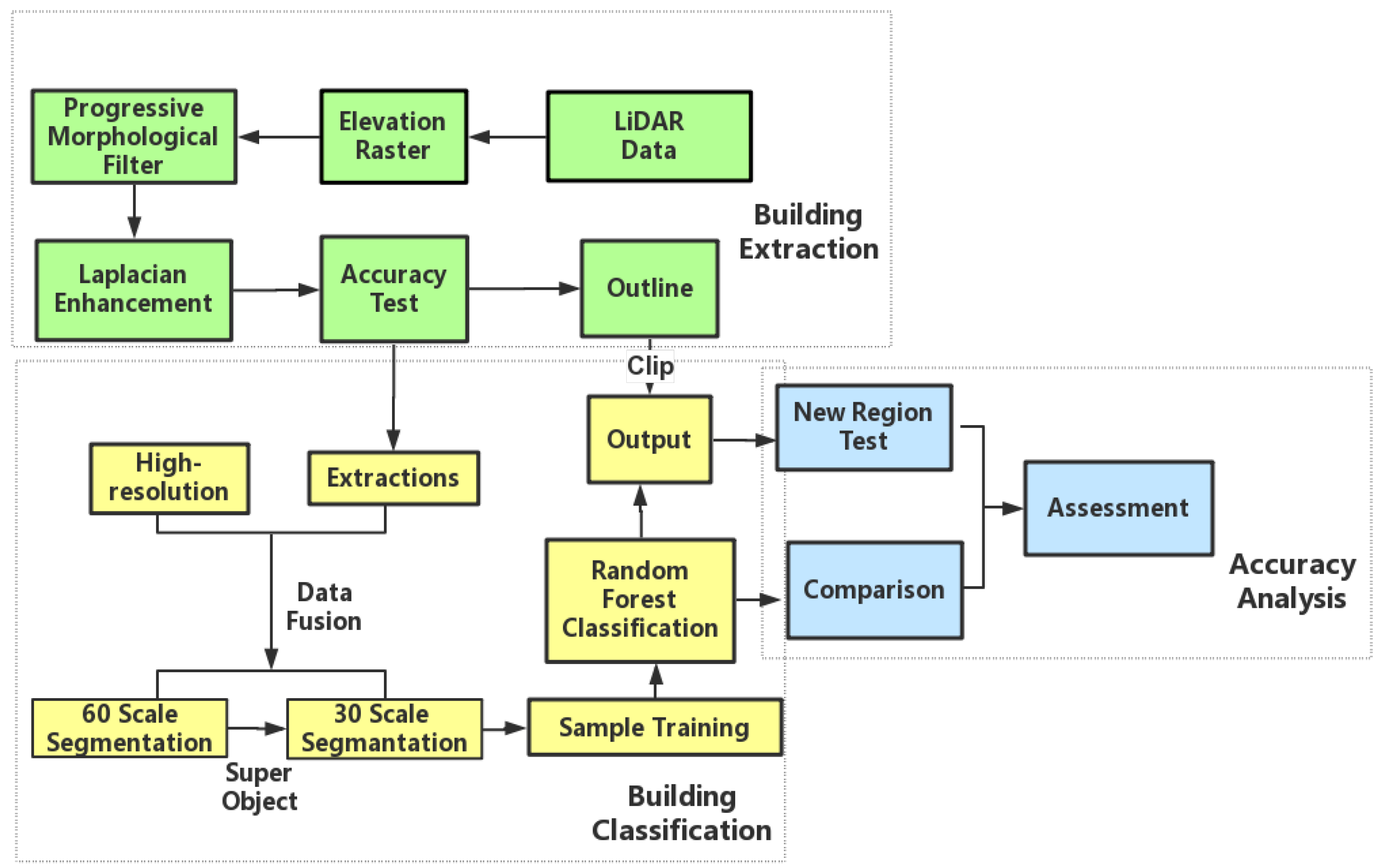

29]. Object-oriented multi-resolution image segmentations are operated on the integrated data based on the height, texture, spatial and spectral information. Segments obtained in this step are then assigned with the super-objects information, i.e., the height, spectrum, texture, size, and shape information of their super-objects from coarser segmentations of the same scene. Finally, segmented patches, after connecting to their corresponding height, spectral, surface, geometric, spatial-relation information, as well as super-object features, are set as inputs when performing the random forest classification. The informative features could not only enhance the ability of the classifier to identify building type, but also increase the homogeneity of objects and their surroundings. As the classification is based on different building patches and it is difficult to interpret the result, further modification of results includes using the building outline extracted from the LiDAR building footprints as the boundary to calculate the dominant type of each individual building. A flowchart of this study is shown in

Figure 2, with key steps described in the following subsections. Data processing and analysis are conducted in MATLAB R2015a (

www.mathworks.com), ESRI ArcGIS 10.4 (

www.esri.com/software/arcgis), and Trimble eCognition (

www.ecognition.com).

3.1. Building Extraction

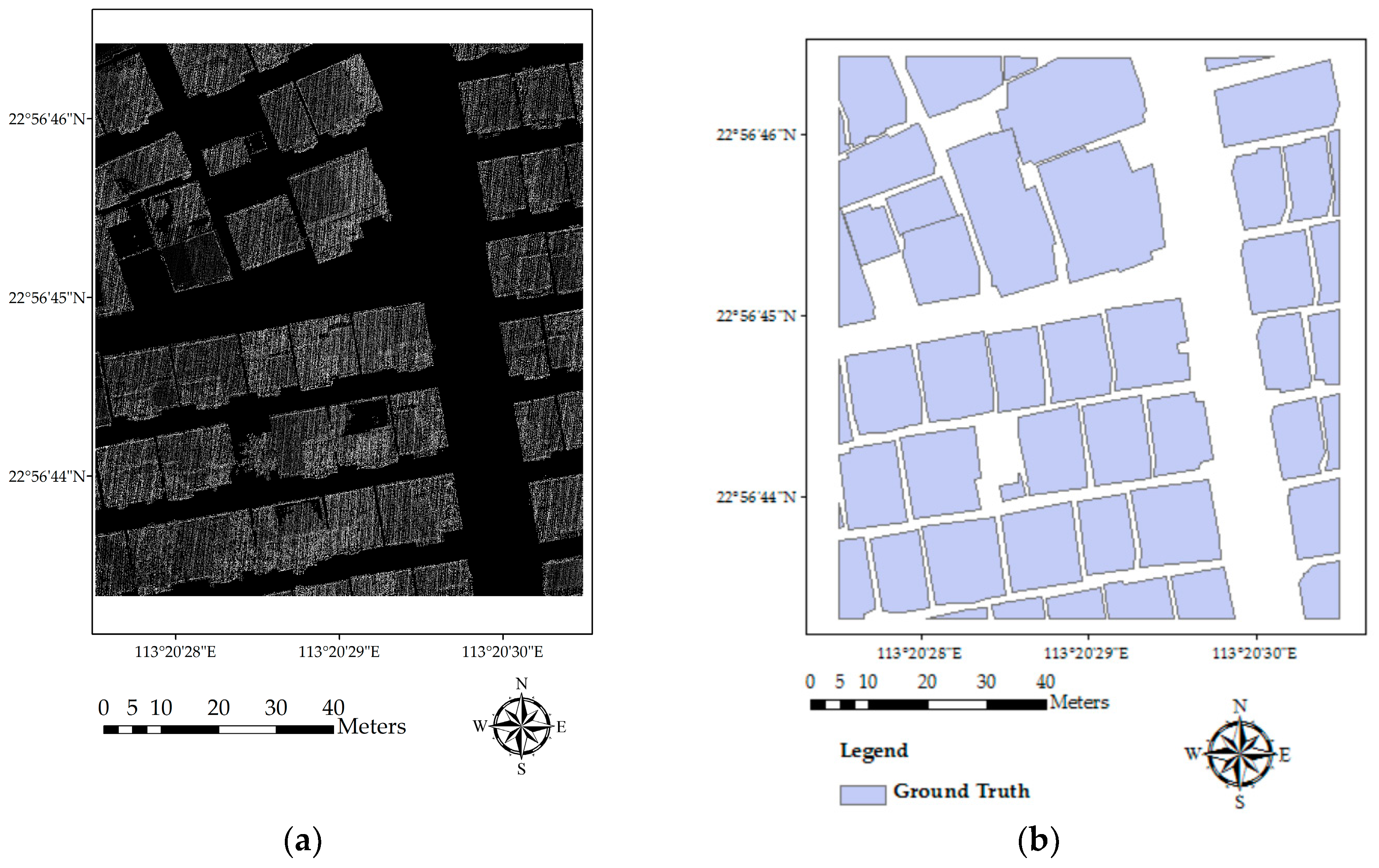

3.1.1. Conversion from LiDAR Point Clouds to the Height Raster Image

LiDAR point clouds store height information of each building, which serves as an important feature to differentiate buildings from other urban constructions. However, it is difficult to use the point cloud data directly. Therefore, the LiDAR point clouds are first converted to a height raster layer. Due to the irregular distribution of LiDAR points, some cells in the converted raster layer may contain more than one point or no point at all. We followed three rules during the conversion process [30]: (1) the cell size of the height raster should be less than, or equal to, the average point spacing of the LiDAR data; (2) the value of a cell is determined by the height of the lowest point in that cell, so that erroneous or misleading height values produced by birds, clouds, or other irrelevant objects are removed; (3) when a cell is empty, its value is calculated by nearest neighbor interpolation, which here assigns the average height of the eight surrounding cells as its height.
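The three conversion rules can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `points_to_height_raster` and `fill_empty_cells` are hypothetical, the point coordinates are assumed to be in a projected system with metre units, and empty cells are filled from whichever of the eight neighbours contain data.

```python
import numpy as np

def points_to_height_raster(x, y, z, cell_size):
    """Grid LiDAR points, keeping the lowest z value in each cell (rule 2)."""
    x, y, z = (np.asarray(a, dtype=float) for a in (x, y, z))
    cols = np.floor((x - x.min()) / cell_size).astype(int)
    rows = np.floor((y.max() - y) / cell_size).astype(int)  # row 0 is the north edge
    raster = np.full((rows.max() + 1, cols.max() + 1), np.inf)
    np.minimum.at(raster, (rows, cols), z)   # unbuffered per-cell minimum
    raster[np.isinf(raster)] = np.nan        # cells that received no point
    return raster

def fill_empty_cells(raster):
    """Fill each empty cell with the mean of its non-empty 8 neighbours (rule 3)."""
    filled = raster.copy()
    padded = np.pad(raster, 1, constant_values=np.nan)
    for r, c in zip(*np.where(np.isnan(raster))):
        window = padded[r:r + 3, c:c + 3]    # 3x3 window centred on the empty cell
        if np.any(~np.isnan(window)):
            filled[r, c] = np.nanmean(window)
    return filled
```

Rule (1) is a choice of `cell_size` rather than code: with the 0.1 m point spacing reported in Section 2, a value of at most 0.1 would satisfy it.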

3.1.2. Progressive Morphological Building Extraction

In most cases, LiDAR-based building extraction uses LiDAR data only [31,32,33]. Some scholars have also integrated LiDAR and other sources of data [34,35,36,37] for extracting buildings. Although integrated data could provide more sources of information for building extraction, it also brings problems, like information redundancy and operation complexity. Therefore, using LiDAR only is still the first choice provided that the extraction accuracy is acceptable.

The progressive filter can adaptively adjust its threshold according to the relationship between the filter window size and the bandwidth of the height difference inside the filter window [20,38]. This filter can efficiently rule out ground pixels and other non-building pixels in light of the morphological features of each pixel and its neighboring pixels without manual intervention. In this study, we used the progressive morphological filter to process the height raster image derived in the last step and extract the buildings.

A suitable range of filter window sizes is first decided according to the compactness, aggregation, and proximity of the pixels, as well as the distance between different objects in the image. Then, progressive morphological iteration is executed with a step length of 1 to delineate building footprints. The formula used to calculate the weight value of each pixel, proposed by Michel Morgan and Klaus Tempfli, is as follows [30]. The weight value determines if a pixel is inside a building area:

In the formula, $k$ represents the sequence of the current window; $S$ represents the size of the window; $S_{min}$ and $S_{max}$ refer to the minimum and maximum sizes of the filter window range selected in the first step; $i$ is the sequence of cell; $h$ is the height value of the current cell, while $h_{min}$ is the minimum height value in the current window; $HT$ means the height threshold set for the current window. According to Meilin Sun et al. [39], the height threshold is determined by:

where $H_{max}$ means the maximum value of the range of heights, $H_0$ means the value of initial height, and $c$ refers to the size of the cell.

After this step, all of the building pixels would have low weights, while ground pixels and many other pixels containing low objects would have high weights. Finally, the building footprint is generated by filtering non-building pixels with a threshold.
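As a rough illustration of the progressive idea (window size grows step by step while an elevation-difference threshold decides which cells stand above the opened surface), the sketch below uses grey-scale morphological opening from SciPy. It is a generic variant in the spirit of the filter of Zhang et al. [20], not the weight formula used in this study. The threshold rule, the function name, and the parameters `slope`, `h0`, and `h_max` are assumptions chosen for illustration.

```python
import numpy as np
from scipy.ndimage import grey_opening

def progressive_morphological_filter(height, window_sizes, cell_size=1.0,
                                     slope=0.3, h0=0.5, h_max=3.0):
    """Flag cells that rise above the progressively opened surface.

    Assumed threshold rule: h0 for the first window, then
    slope * (w_k - w_{k-1}) * cell_size + h0, capped at h_max.
    """
    surface = np.asarray(height, dtype=float).copy()
    non_ground = np.zeros(surface.shape, dtype=bool)
    for k, w in enumerate(window_sizes):
        if k == 0:
            threshold = h0
        else:
            growth = slope * (w - window_sizes[k - 1]) * cell_size
            threshold = min(growth + h0, h_max)
        # Opening removes raised objects narrower than the current window.
        opened = grey_opening(surface, size=(w, w))
        non_ground |= (surface - opened) > threshold
        surface = opened
    return non_ground
```

In this sketch a raised object is flagged at the first window size that no longer fits inside it, so the largest window should exceed the widest building expected in the scene. Separating buildings from tall vegetation would still require the additional filtering described in the text.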

In addition, the building footprint raster could be modified by applying a Laplacian filter. A possible kernel of this filter is defined as follows:

The filter can enhance the digital number (DN) values of building pixels in the image [40,41]. Sometimes, vegetation might mix with some low buildings. A Laplacian filter could efficiently distinguish these two kinds of objects and could clear out all of the confusing pixels at the cost of minimum information loss [42,43,44]. Additionally, the difference of heights among different buildings could also be stretched by employing this filter without disturbing their basic mathematical relationship.
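For readers unfamiliar with Laplacian filtering, the following sketch applies a common 3 × 3, 4-neighbour Laplacian kernel to a height raster and adds the response back to the input to sharpen height discontinuities. The kernel values and the function name `laplacian_sharpen` are assumptions for illustration; the kernel actually used in this study may differ.

```python
import numpy as np
from scipy.ndimage import convolve

# A widely used 4-neighbour Laplacian kernel (assumed here, not taken from the study).
LAPLACIAN_KERNEL = np.array([[0.0, -1.0, 0.0],
                             [-1.0, 4.0, -1.0],
                             [0.0, -1.0, 0.0]])

def laplacian_sharpen(raster):
    """Return the raster plus its Laplacian response, which accentuates edges."""
    raster = np.asarray(raster, dtype=float)
    response = convolve(raster, LAPLACIAN_KERNEL, mode="nearest")
    return raster + response
```

Flat regions are left unchanged because the kernel sums to zero, while abrupt height changes, such as building edges, are amplified.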

3.2. Building Type Classification

3.2.1. Integration of LiDAR and HRI

Although building height is one of the most important features for classification of different buildings, it is difficult to get accurate building type classification results when solely using height information. Through data integration, it is possible to fully utilize height information from LiDAR, as well as spatial, textural, and spectral information from HRI.

According to the method in Section 3.1, a height raster image of building footprints is converted from the original LiDAR data, with non-building points filtered out in order to minimize their interference with the building classification. The building footprints are then georeferenced with the HRI data to cover the same spatial range and resampled to the same spatial resolution of 0.1 m. Integration of the LiDAR and HRI data is then performed by stacking the building extraction layer with the HRI data as a supplementary feature layer. Four feature layers are obtained in total, with the red, green, and blue bands showing the textural and geometric information and one band showing the height information. Combining the two datasets in this simple way makes the method easy to operate, while the integration of building height information with textural and spectral information provides more information for building classification. Buildings can be more easily distinguished from their surrounding ground objects thanks to the apparent height differences. Higher classification accuracy can, hence, be expected.
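The stacking step amounts to appending the height raster to the image as a fourth band. A minimal sketch, assuming both rasters are already co-registered and resampled to the same grid (the function name `stack_height_band` is hypothetical):

```python
import numpy as np

def stack_height_band(rgb, building_height):
    """Append a building-height raster to an RGB image as a fourth band."""
    rgb = np.asarray(rgb, dtype=np.float32)
    height = np.asarray(building_height, dtype=np.float32)
    if rgb.ndim != 3 or rgb.shape[:2] != height.shape:
        raise ValueError("RGB image and height raster must share the same grid")
    # Result has shape (rows, cols, 4): red, green, blue, height.
    return np.dstack([rgb, height])
```

The explicit shape check reflects the requirement in the text that the two sources share the same spatial extent and 0.1 m resolution before they are combined.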

3.2.2. Super-Object Information from Multi-Resolution Segmentation

Super-object information refers to the information contained by coarse segments, including spectrum, proximity, geometry, shape, texture, etc. By assigning super-object information to pixels or segments, characteristics of each fine scale segmentation could be prominently highlighted [12].

After the integration of the two data sources, object-oriented segmentation is performed on these bands using the multi-resolution method in the Trimble eCognition software. The multi-resolution segmentation, starting from single pixels, merges small objects into larger ones through a pair-wise clustering process [45]. In this study, the coarse-scale segmentation provides the super-object information and is linked to the fine-scale segmentation in order to supply more cues for classification and increase the accuracy of the method [13].
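The link between fine segments and their super-objects can be sketched with two label rasters, one per segmentation scale. The example below is an illustration only, not the eCognition workflow: it assumes non-negative integer segment labels, and the function name `super_object_means` is hypothetical. It assigns each fine segment the mean band value (for instance, height) of the coarse segment that contains it.

```python
import numpy as np

def super_object_means(fine_labels, coarse_labels, band):
    """Map each fine segment to (parent coarse segment, parent's mean band value)."""
    fine = np.asarray(fine_labels).ravel()
    coarse = np.asarray(coarse_labels).ravel()
    values = np.asarray(band, dtype=float).ravel()

    # Mean band value of every coarse segment.
    sums = np.bincount(coarse, weights=values)
    counts = np.bincount(coarse)
    coarse_mean = np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)

    result = {}
    for fid in np.unique(fine):
        parents = coarse[fine == fid]
        # Majority vote guards against imperfect nesting at segment borders.
        parent = int(np.bincount(parents).argmax())
        result[int(fid)] = (parent, float(coarse_mean[parent]))
    return result
```

The same pattern extends to any other per-segment statistic of the super-object, such as standard deviation or area.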

For this study, selected super-object information can be classified into three types: (1) geometry, which mainly contains extent, length, thickness, asymmetry, density, and compactness, describing the boundary and shape of each segmentation, as well as its neighborhood and its relation to the super-object; (2) band information, which mainly contains brightness, standard deviation, mean DN values, spectral and layer information; and (3) texture information, which contains the contrast information, angular second moment and correlation of gray level co-occurrence matrix, describing Haralick texture features of segments [46,47]. These three types of information could speak for most of the basic characteristics of spatial objects in the study area. On one hand, they can represent the two-dimensional information of HRI, quantifying spatial features of each object; on the other hand, they connect each independent object with its surrounding objects and improve the coverage and the ability of spatial expression of super-object information. Moreover, it is proven that the super-object information fits well with the 3D height information.
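The three Haralick texture measures named above (contrast, angular second moment, and correlation) can be computed from a gray level co-occurrence matrix as in the sketch below. It is a simplified illustration, not the eCognition implementation: it assumes an image already quantized to integer gray levels in `[0, levels)`, uses only the horizontal one-pixel offset, and the function name `glcm_features` is hypothetical.

```python
import numpy as np

def glcm_features(gray, levels):
    """Contrast, angular second moment and correlation of a symmetric GLCM."""
    gray = np.asarray(gray, dtype=int)
    left, right = gray[:, :-1].ravel(), gray[:, 1:].ravel()

    glcm = np.zeros((levels, levels), dtype=float)
    np.add.at(glcm, (left, right), 1.0)  # count horizontally adjacent pairs
    glcm += glcm.T                       # make the matrix symmetric
    p = glcm / glcm.sum()                # normalise to joint probabilities

    i, j = np.indices(p.shape)
    contrast = float(np.sum(p * (i - j) ** 2))
    asm = float(np.sum(p ** 2))

    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i * sd_j > 0:
        correlation = float(np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j))
    else:
        correlation = 1.0                # convention for a perfectly uniform patch
    return {"contrast": contrast, "asm": asm, "correlation": correlation}
```

A uniform roof yields zero contrast and an angular second moment of one, whereas a finely patterned surface yields higher contrast and lower angular second moment.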

3.2.3. Random Forest Building Type Classification

Random forest classification is an ensemble method which could enhance the distinction between two different unclassified classes and distinguish their own features based on the results from several decision trees [48]. Every decision forest includes a set of expert tree classifiers, and all of these classifiers would, altogether, vote for the most probable class of an input vector [49]. Decision trees can summarize the features of those confusing objects to increase their distinctions [50]. Additionally, the classifier can correct each decision tree’s habit and choose the most popular classification. An appealing aspect of the random forest classifier is that it can avoid overfitting and can rapidly adapt to the training data. Moreover, the random forest classifier can still accurately estimate the missing samples and maintain its stable classification performance even when there is information loss [22]. Random forest classification has been widely used in areas of economics, geography, medical science, and signal engineering. As for its applications in remote sensing, Ham implemented two approaches within a binary hierarchical multi-classifier system, generalizing the random forest classifiers in an analysis of hyperspectral data [23]. Fan et al. extracted building areas from LiDAR point clouds using the random forest algorithm [51]. Bosch et al. explored the method of classifying images using object categories by combining multi-way SVM and random forest classification [52]. Nonetheless, all of these studies are simply using the random forest classifier for extracting building information, without further classifying these buildings into different types.

In general, the random forest classifier has better performance in urban building classification compared to other classifiers [53] due to the complex building structures in the urban area. Therefore, we incorporated the random forest classifier in this study to classify building types based on the building segmentations obtained in the last step. In this research, random forest classification is implemented in MATLAB R2015a using the corresponding package.

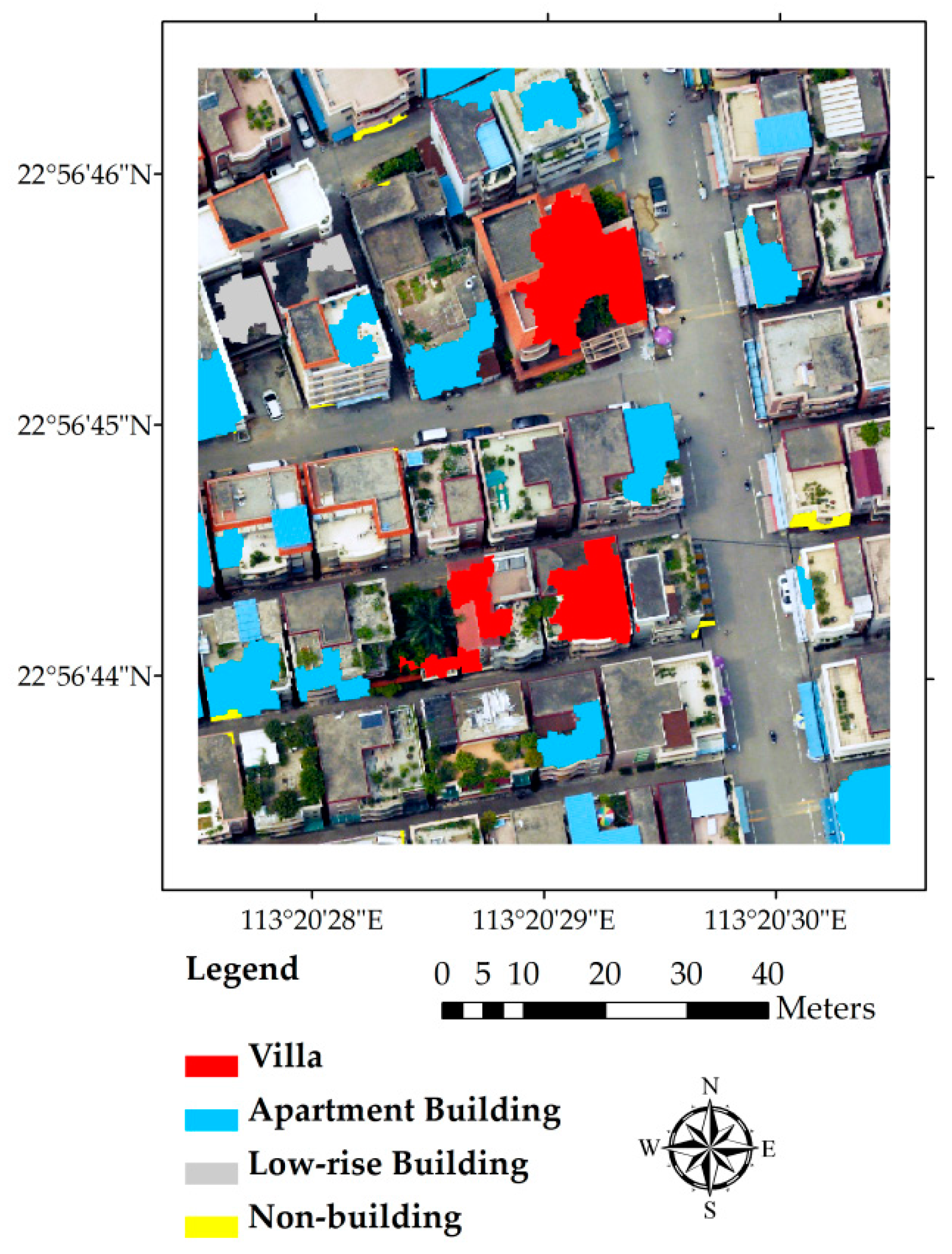

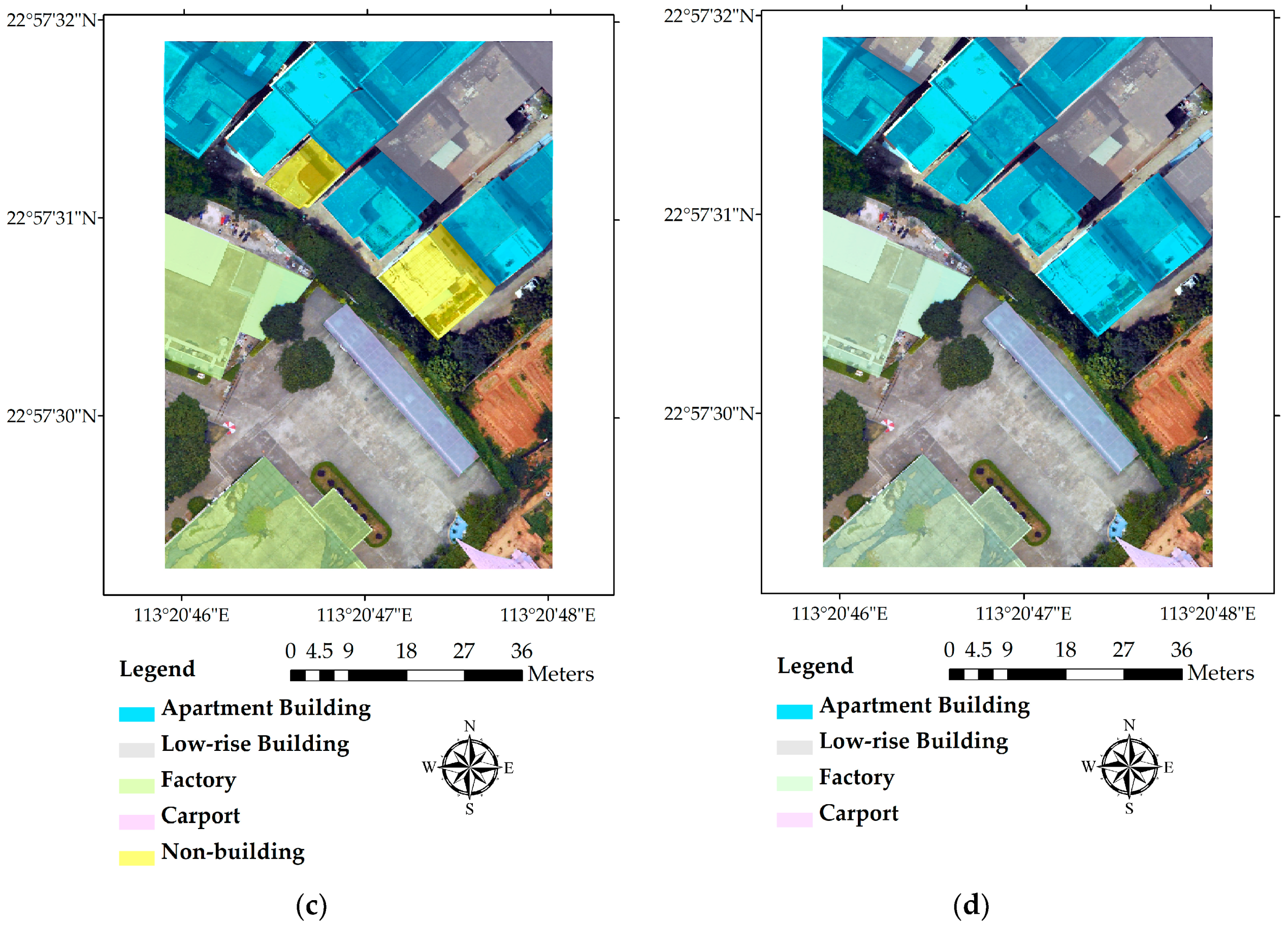

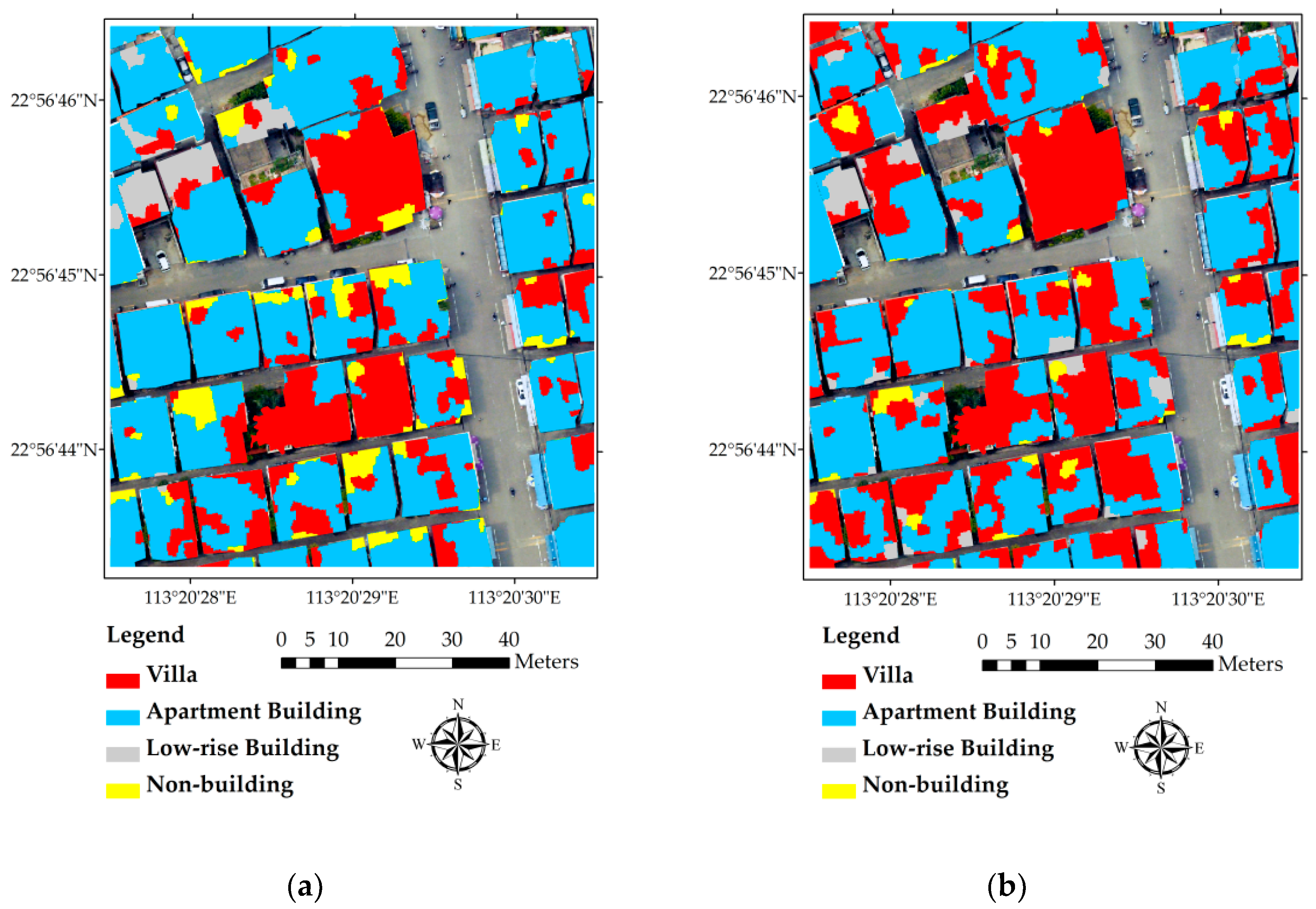

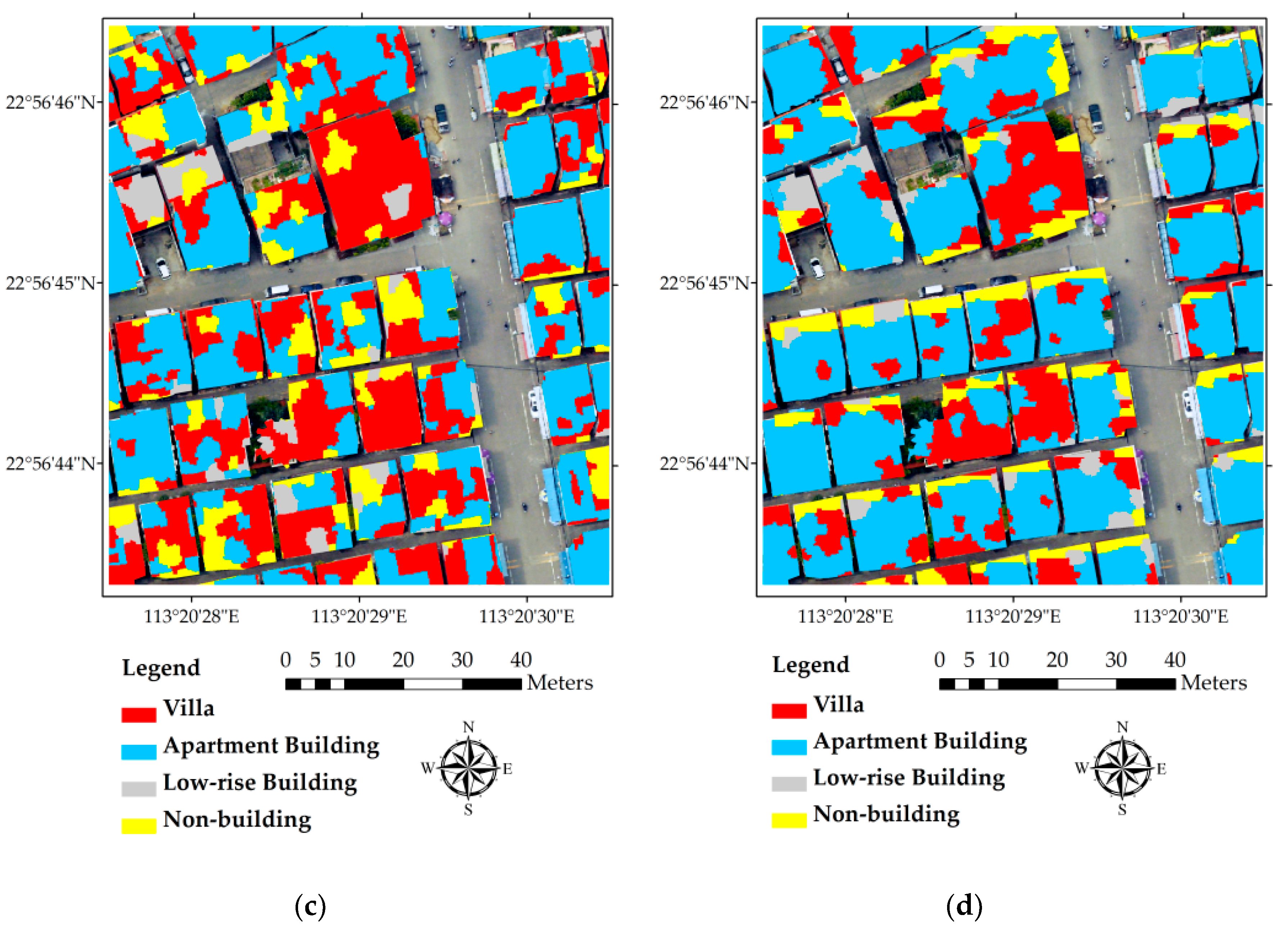

Located inside the “Urban Village”, both the study area and validation area have various types of buildings. Considering their pattern, volume fraction, building heights, as well as land use type, buildings are classified into the following categories: apartment building, villa, and low-rise building. In order to train the classifier, 164 object patches (17% of the total number) are randomly selected from the segmentation data. Seventy percent (70%) of these sample segmentations (117 patches) are used as the training set while the remaining samples are used for validation. The two main parameters of random forest method, namely number of trees and number of branches, are adjusted according to the out-of-bag error rate of the training samples. In the classification process of the study area, the number of trees is set as fifty, while the number of branches is set as three.
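The training setup described above can be approximated with scikit-learn as a sketch. The study itself used MATLAB, so this is not the authors' code. It assumes that the "number of branches" corresponds to the number of features tried at each split (`max_features`), and the function name `train_building_type_forest` is hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

def train_building_type_forest(features, labels, seed=0):
    """Train a 50-tree forest on 70% of the samples; report OOB and hold-out results."""
    X_train, X_val, y_train, y_val = train_test_split(
        features, labels, train_size=0.7, stratify=labels, random_state=seed)
    forest = RandomForestClassifier(
        n_estimators=50,   # number of trees reported in the text
        max_features=3,    # assumed meaning of "number of branches"
        oob_score=True,    # enables the out-of-bag accuracy estimate
        random_state=seed)
    forest.fit(X_train, y_train)
    oob_error = 1.0 - forest.oob_score_
    val_accuracy = forest.score(X_val, y_val)
    return forest, oob_error, val_accuracy
```

In practice, `n_estimators` and `max_features` would be varied and the combination with the lowest out-of-bag error kept, mirroring the tuning procedure described in the text.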

Random forest building type classification was executed after object-oriented segmentation of the combined data. The super-object information is appended to each patch from multi-resolution segmentation using the spatial joining tool in ESRI ArcGIS software. Patches in the training set, together with their properties and super-object information, are then input to the classifier. Selection and combination of different features are conducted randomly during the process. Features are randomly selected and then connected through random nodes to form unrelated trees and forests. By doing so, similarity and correlation among the trees are minimized. Therefore, trees can provide more decision-making supports when there are only a limited number of features relating to the buildings. Moreover, different linear sub-feature combinations are also tried to find the optimal feature space. The main steps of random forest classification [50,52] in this research are listed as follows:

- (1) Select training samples from the object-oriented segmentation results. The samples should cover all of the building types and contain building type information, as well as super-object information.

- (2) Build an initial unpruned tree for every single sample. Then every tree would modify itself and select the optimal type and branch when predicting the samples.

- (3) Predict the next building type according to the mean reversion and the maximum number of decision trees in the last round of prediction. The out-of-bag (OOB) error rate is calculated for the building classification results to assess the accuracy and performance of the training.

- (4) After the training process, all of these trained decision trees would be selected for the building classification of all of the data.

Since the random forest classification is performed at segment level, it is very possible that segments from a single building are classified into different building types. Therefore it is necessary to further process the segment-level classification results to get the building-level classification results. In this study, such building-level classification results were derived by assigning each building the dominant (in terms of area) building type of all the segmentations inside its outline.
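The building-level aggregation is an area-weighted majority vote. A minimal sketch, assuming each classified segment has already been assigned to a building footprint (the function name `dominant_building_types` and the input layout are hypothetical):

```python
def dominant_building_types(building_ids, segment_types, segment_areas):
    """Return, for each building, the type covering the largest total segment area."""
    totals = {}
    for building, seg_type, area in zip(building_ids, segment_types, segment_areas):
        per_type = totals.setdefault(building, {})
        per_type[seg_type] = per_type.get(seg_type, 0.0) + float(area)
    # Pick the type with the largest accumulated area inside each outline.
    return {b: max(per_type, key=per_type.get) for b, per_type in totals.items()}
```

For example, a building whose segments are labelled villa (10 m² and 10 m²) and apartment (25 m²) would be assigned the apartment type, since that type covers the larger share of its outline.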

6. Conclusions

This research proposes a whole scheme for classifying building types based on LiDAR data and high spatial resolution remote sensing images. The first step is using the progressive morphological filter to extract building footprints from LiDAR point clouds; the second step is combining the building height information with high-resolution images; the third step is object-oriented multi-resolution segmentation of the integrated LiDAR and high-resolution images while incorporating super-object information; the final step is classifying building types by using the random forest classifier. Comparative studies were carried out to prove the necessity of using combined LiDAR and HRI data, as well as the necessity of incorporating super-object information during classification. The proposed scheme was also validated by applying it to a different area.

The following conclusions can be drawn from the analysis of this study’s results. Firstly, more precise building classifications could be obtained by integrating LiDAR building footprints and high-resolution images, compared to simply using LiDAR data or high-resolution images alone. Secondly, both building height information and super-object information play important roles in building type classification. The accuracy of building type classification can be remarkably improved by assigning super-object information to fine-scale segments. Through super-object information, fine-scale segments could inherit properties like proximity, aggregation, spectrum, and so on from coarse-scale segments, hence providing more information to the classifier. Thirdly, the random forest classifier could effectively utilize various features from the integrated LiDAR and HRI data and provide accurate building type classification results. Finally, the building type classification scheme proposed in this paper has great potential for application in areas with multiple building types and complex backgrounds, and is especially applicable to urban villages or slums in developing countries worldwide.

Limitations of this research include inadequate consideration of terrain effects, inconvenience in realization, and a lack of extensive testing and validation. In spite of these limitations, we strongly believe that the building type classification scheme proposed in this paper has great potential for application in many areas, such as building information updates, population estimation, urban planning, management, etc.