Cancer Diagnosis Using Deep Learning: A Bibliographic Review

Abstract

1. Introduction

2. Steps of Cancer Diagnosis

2.1. Pre-Processing

2.2. Image Segmentation

2.3. Post-Processing

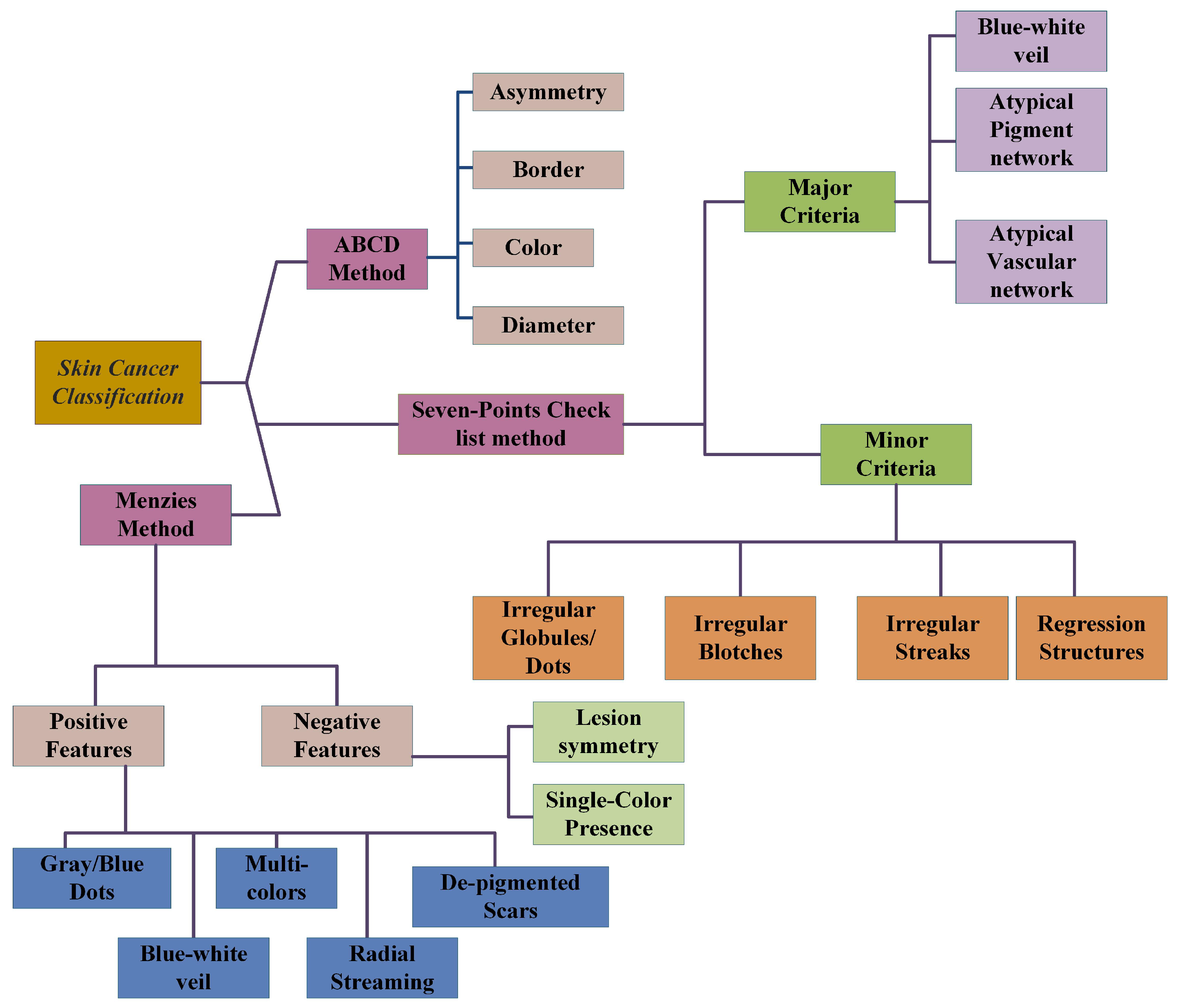

2.4. ABCD-Rule

2.5. Seven-Point Checklist Method

2.5.1. Major Criteria

2.5.2. Minor Criteria

2.6. Menzies Method

2.7. Pattern Analysis

3. Artificial Neural Networks

3.1. Convolutional Neural Networks

- (i)

- Sigmoid activation function [45] is given by the equation $f(x) = \frac{1}{1 + e^{-x}}$. It is nonlinear in nature, and combinations of it are also nonlinear, which gives us the liberty to stack layers together. Between x = −2 and x = 2 the curve is fairly steep, so small changes in x produce large changes in y. One advantage of this activation function is that its output always remains within the range (0, 1).

- (ii)

- Tanh function is defined as $\tanh(x) = \frac{2}{1 + e^{-2x}} - 1$. It is also known as the scaled sigmoid function, since $\tanh(x) = 2\,\mathrm{sigmoid}(2x) - 1$. Its range is from −1 to 1. The gradient is stronger for tanh than for the sigmoid function.

- (iii)

- Rectified linear unit (ReLU) is the most commonly used activation function [45,46,47]. It is given by $g(x) = \max(0, x)$, where g denotes a pixel-wise function that is nonlinear in nature. That is, it gives the output x if x is positive and 0 otherwise. ReLU is nonlinear and its combinations are also nonlinear, meaning different layers can be stacked together. Its range is from 0 to infinity, so activations are unbounded above and can blow up. For the pooling layer, g reduces the size of the features while acting as a layer-wise down-sampling nonlinear function. A fully connected layer has a 1 × 1 convolutional kernel. The prediction layer has a softmax, which predicts the probability that Xj belongs to each of the possible classes.
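The three activation functions above, together with the softmax used in the prediction layer, can be sketched in plain Python. This is a minimal illustration rather than the implementation used in any of the reviewed works; the function names are chosen here for readability, and subtracting the maximum score inside the softmax is a common numerical-stability convention assumed for this sketch, not something stated in the text.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Logistic sigmoid; the output lies between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh_scaled(x: float) -> float:
    """Tanh written as a scaled and shifted sigmoid: 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0


def relu(x: float) -> float:
    """Rectified linear unit: x if x is positive, 0 otherwise."""
    return max(0.0, x)


def softmax(scores: List[float]) -> List[float]:
    """Turn raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # assumption: max-subtraction for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Compare the three activations on a few sample inputs.
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(x, sigmoid(x), tanh_scaled(x), relu(x))
    # Class probabilities for three hypothetical class scores.
    print(softmax([2.0, 1.0, 0.1]))
```

Writing tanh through the sigmoid makes the "scaled sigmoid" relationship explicit; in practice `math.tanh` gives the same values.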

3.2. Multi-Scale Convolutional Neural Network (M-CNN)

3.3. Multi-Instance Learning Convolutional Neural Network (MIL-CNN)

CNN Architectures

- (i)

- LeNet-5. In 1998, LeCun et al. proposed a seven-level convolutional neural network named LeNet-5. Its main application was digit classification, and banks used it to classify handwritten numbers written by customers. It takes 32 × 32 pixel grey-scale images as input. Processing larger, higher-resolution images demands more convolutional layers, which limits this architecture.

- (ii)

- AlexNet. AlexNet won the 2012 challenge, reducing the top-5 error from 26% to 15.3%. This network is similar to LeNet but is deeper, with an increased number of filters per layer and more stacked convolutional layers. It consists of 11 × 11, 5 × 5, and 3 × 3 convolutional kernels, max pooling, dropout, data augmentation, and ReLU activations. A ReLU activation is attached after every convolutional and fully connected layer. Training took five to six days on two Nvidia GeForce GTX 580 GPUs, which is why the network was split into two pipelines. AlexNet was designed by the SuperVision group, consisting of Alex Krizhevsky, Geoffrey Hinton, and Ilya Sutskever.

- (iii)

- ZFNet. ZFNet was the winner of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2013. The authors reduced the top-5 error rate to 14.8%, which is half the non-neural error rate. They achieved this by keeping the AlexNet structure the same but changing its hyperparameters.

- (iv)

- GoogLeNet/Inception V1. This was the winner of ILSVRC 2014, with a top-5 error rate of 6.67%. This is very close to human-level performance, so the creators of the network were forced to perform a human evaluation. After weeks of training, the human experts achieved a top-5 error rate of 5.1% (single model) and 3.6% (ensemble). The network is a CNN inspired by LeNet that introduces an element dubbed the inception module. It uses batch normalization, image distortions, and RMSprop. Although it is a 22-layer-deep CNN, it reduces the number of parameters from the 60 million of AlexNet to 4 million.

- (v)

- VGGNet. VGGNet was the runner-up in ILSVRC 2014. It is made up of 16 convolutional layers and has a uniform architecture. It uses only 3 × 3 convolutions, but many filters. It was trained for three weeks on 4 GPUs. Because of its architectural uniformity, it is the most appealing network for extracting features from images. The weight configurations of this architecture were made public, and it has been used as a baseline feature extractor in many applications and challenges. The biggest challenge with this network is its 138 million parameters, which are difficult to handle.

- (vi)

- ResNet. The residual neural network (ResNet), introduced at ILSVRC 2015, uses skip connections and batch normalization. These skip connections are similar to the gated units or gated recurrent units recently applied in RNNs. They enable training a neural network with 152 layers while keeping its complexity lower than that of VGGNet. The network achieved a top-5 error rate of 3.57%, beating human-level performance on the given dataset.
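The architectures above are all compared by their top-5 error rate: a prediction counts as correct when the true class is among the five classes the network scores highest. The following is a minimal sketch of that computation in plain Python; the function name `top_k_error` and the toy scores are illustrative assumptions, not part of any ILSVRC tooling.

```python
from typing import Sequence


def top_k_error(scores: Sequence[Sequence[float]],
                labels: Sequence[int],
                k: int = 5) -> float:
    """Fraction of samples whose true label is not among the k top-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices ordered by descending score; only the first k count.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


if __name__ == "__main__":
    # Two hypothetical samples scored over six classes.
    toy_scores = [
        [0.30, 0.20, 0.15, 0.12, 0.13, 0.10],
        [0.05, 0.10, 0.15, 0.20, 0.22, 0.28],
    ]
    print(top_k_error(toy_scores, [0, 0]))       # top-5 error
    print(top_k_error(toy_scores, [0, 0], k=1))  # top-1 error
```

With k = 1 the same function gives the ordinary classification error, which is why top-5 figures are always lower than or equal to top-1 figures for the same model.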

3.4. Fully Convolutional Networks (FCNs)

3.4.1. U-Net Fully Convolutional Neural Network

3.4.2. Generative Adversarial Networks (GANs)

3.5. Recurrent Neural Networks (RNNs)

3.6. Long Short-Term Memory (LSTM)

3.7. Restricted Boltzmann Machine (RBM)

3.8. Autoencoders (AEs)

3.9. Stacked Autoencoders

3.10. Sparse Autoencoders (SAE)

3.11. Convolutional Autoencoders (CAE)

3.12. Deep Belief Networks (DBN)

3.13. Adaptive Fuzzy Inference Neural Network (AFINN)

4. Evaluation Metrics

4.1. Receiver Operating Characteristic Curve (ROC-Curve)

4.2. Area under the ROC Curve (AUC)

4.3. F1-Score

4.4. Accuracy

4.5. Specificity

4.6. Sensitivity

4.7. Precision

4.8. Jaccard Index

4.9. Dice-Coefficient

4.10. Average Accuracy

5. Models and Algorithms

5.1. Breast Cancer

5.2. Lung Cancer

5.3. Brain Cancer

5.4. Skin Cancer

5.5. Prostate Cancer

6. Discussion

7. Summary and Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ABCD | Asymmetry, Border, Color Variation and Diameter |
| ABCDE | Asymmetry, Border, Color Variation, Diameter and Expansion |
| AD | Adenocarcinoma |
| ANN | Artificial neural networks |
| AI-ANN | Artificial intelligence-artificial neural networks |
| AK | Actinic Keratosis |
| AUC | Area under the ROC curve |
| BoF | Bag-of-features |
| BCC | Basal cell carcinoma |
| BK | Benign Keratosis |
| CAD | Computer-aided diagnosis |
| CAE | Convolutional autoencoders |
| CNNs | Convolutional neural networks |
| cCCNs | Combined convolutional networks |
| CT | Computed tomography |
| DANs | Deep autoencoders |
| DF | Dermatofibroma |
| FCNs | Fully convolutional networks |
| FCRN | Fully convolutional residual networks |
| FCM | Fuzzy c-means |
| FPS | Fourier power spectrum |
| FP | False positives |
| FN | False negatives |
| GVF | Gradient Vector Flow |
| GAN | Generative adversarial networks |
| GPU | Graphics processing units |
| GLCM | Grey level co-occurrence matrix |
| GM | Generalization mean |
| histo.path | Histopathology |
| IC | Intraepithelial carcinoma |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| K-NN | K-Nearest neighbor |
| LBPS | Local binary patterns |
| LABS | Laboratory retrospective study |
| LSTM | Long short-term memory |
| M-CNN | Multi-scale convolutional neural network |
| MIL-CNN | Multi-instance learning convolutional neural network |
| MRI | Magnetic resonance imaging |
| mAP | multi class accuracy and mean average prediction |
| Neg.F | Negative features |
| OBS | Observational study |
| PACS | Picture archiving and communication system |
| Pos.F | Positive features |
| PCA | Principal component analysis |
| RBF | Radial basis function |
| RBM | Restricted Boltzmann machine |
| ReLU | Rectified linear unit |
| ROC | Receiver operating characteristic curve |
| ROI | Region of interest |
| Rsurf | Rotated speeded-up robust features |
| RNN | Recurrent neural networks |
| SAE | Stacked autoencoders |
| SA-SVM | Self-advised support vector machine |
| SCC | Squamous cell carcinoma |
| SNR | Signal-to-noise ratio |
| SVM | Support Vector Machine |
| TP | True positives |
| TN | True negatives |
| WPT | Wavelet packet transform |

References

- Torre, L.A.; Bray, F.; Siegel, R.L.; Ferlay, J.; Lortet-Tieulent, J.; Jemal, A. Global cancer statistics, 2012. CA Cancer J. Clin. 2015, 65, 87–108.

- Siegel, R.L.; Miller, K.D.; Jemal, A. Cancer Statistics, 2016. CA Cancer J. Clin. 2016, 66, 7–30.

- Cancer Facts and Figures 2019, American Cancer Society. 2019. Available online: https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/annual-cancer-facts-and-figures/2019/cancer-facts-and-figures-2019.pdf (accessed on 8 January 2019).

- Doi, K. Computer-aided diagnosis in medical imaging: Historical review, current status and future potential. Comput. Med. Imaging Graph. 2007, 31, 198–211.

- Te Brake, G.M.; Karssemeijer, N.; Hendriks, J.H. An automatic method to discriminate malignant masses from normal tissue in digital mammograms. Phys. Med. Biol. 2000, 45, 2843.

- Beller, M.; Stotzka, R.; Muller, T.; Gemmeke, H. An example-based system to support the segmentation of stellate lesions. In Bildverarbeitung für die Medizin 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 475–479.

- Yin, F.F.; Giger, M.L.; Vyborny, C.J.; Schmidt, R.A. Computerized detection of masses in digital mammograms: Automated alignment of breast images and its effects on bilateral-subtraction technique. Phys. Med. 1994, 3, 445–452.

- Aerts, H.J.; Velazquez, E.R.; Leijenaar, R.T.; Parmar, C.; Grossmann, P.; Carvalho, S.; Bussink, J.; Monshouwer, R.; Haibe-Kains, B.; Rietveld, D. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 2014, 5, 4006.

- Eltonsy, N.H.; Tourassi, G.D.; Elmaghraby, A.S. A concentric morphology for the detection of masses in mammography. IEEE Trans. Med. Imaging 2007, 26, 880–889.

- Wei, J.; Sahiner, B.; Hadjiiski, L.M.; Chan, H.P.; Petrick, N.; Helvie, M.A.; Roubidoux, M.A.; Ge, J.; Zhou, C. Computer-aided detection of breast masses on full field digital mammograms. Med. Phys. 2005, 32, 2827–2838.

- Hawkins, S.H.; Korecki, J.N.; Balagurunathan, Y.; Gu, Y.; Kumar, V.; Basu, S.; Hall, L.O.; Goldgof, D.B.; Gatenby, R.A.; Gillies, R.J. Predicting outcomes of nonsmall cell lung cancer using CT image features. IEEE Access 2014, 2, 1418–1426.

- Balagurunathan, Y.; Gu, Y.; Wang, H.; Kumar, V.; Grove, O.; Hawkins, S.H.; Kim, J.; Goldgof, D.B.; Hall, L.O.; Gatenby, R.A. Reproducibility and prognosis of quantitative features extracted from CT images. Transl. Oncol. 2014, 7, 72–87.

- Han, F.; Wang, H.; Zhang, G.; Han, H.; Song, B.; Li, L.; Moore, W.; Lu, H.; Zhao, H.; Liang, Z. Texture feature analysis for computer-aided diagnosis on pulmonary nodules. J. Digit. Imaging 2015, 28, 99–115.

- Barata, C.; Marques, J.S.; Rozeira, J. A system for the detection of pigment network in dermoscopy images using directional filters. IEEE Trans. Biomed. Eng. 2012, 59, 2744–2754.

- Barata, C.; Marques, J.S.; Celebi, M.E. Improving dermoscopy image analysis using color constancy. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 3527–3531.

- Barata, C.; Ruela, M.; Mendonca, T.; Marques, J.S. A bag-of-features approach for the classification of melanomas in dermoscopy images: The role of color and texture descriptors. In Computer Vision Techniques for the Diagnosis of Skin Cancer; Springer: Berlin/Heidelberg, Germany, 2014; pp. 49–69.

- Sadeghi, M.; Lee, T.K.; McLean, D.; Lui, H.; Atkins, M.S. Detection and analysis of irregular streaks in dermoscopic images of skin lesions. IEEE Trans. Med. Imaging 2013, 32, 849–861.

- Zikic, D.; Glocker, B.; Konukoglu, E.; Criminisi, A.; Demiralp, C.; Shotton, J.; Thomas, O.M.; Das, T.; Jena, R.; Price, S.J. Decision forest for tissue-specific segmentation of high-grade gliomas in multi-channel MR. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2012; pp. 369–376.

- Meier, R.; Bauer, S.; Slotboom, J.; Wiest, R.; Reyes, M. A hybrid model for multi-modal brain tumor segmentation. In Proceedings of the MICCAI Challenge on Multimodal Brain Tumor Image Segmentation, NCI-MICCAI BRATS, Nagoya, Japan, 22 September 2013; pp. 31–37.

- Pinto, A.; Pereira, S.; Correia, H.; Oliveira, J.; Rasteiro, D.M.; Silva, C.A. Brain tumour segmentation based on extremely randomized forest with high-level features. In Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3037–3040.

- Tustison, N.J.; Shrinidhi, K.; Wintermark, M.; Durst, C.R.; Kandel, B.M.; Gee, J.C.; Grossman, M.C.; Avants, B.B. Optimal symmetric multimodal templates and concatenated random forests for supervised brain tumor segmentation (simplified) with ANTsR. Neuroinformatics 2015, 13, 209–225.

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Xu, B.; Wang, N.; Chen, T.; Li, M. Empirical evaluation of rectified activations in convolutional network. arXiv 2015, arXiv:1505.00853.

- Messadi, M.; Bessaid, A.; Taleb-Ahmed, A. Extraction of specific parameters for skin tumour classification. J. Med. Eng. Technol. 2009, 33, 288–295.

- Reddy, B.V.; Reddy, P.B.; Kumar, P.S.; Reddy, S.S. Developing an approach to brain MRI image preprocessing for tumor detection. Int. J. Res. 2014, 1, 2348–6848.

- Zacharaki, E.I.; Wang, S.; Chawla, S.; Soo Yoo, D.; Wolf, R.; Melhem, E.R.; Davatzikos, C. Classification of brain tumor type and grade using MRI texture and shape in a machine learning scheme. Magn. Reson. Med. 2009, 62, 1609–1618.

- Miah, M.B.A.; Yousuf, M.A. Detection of lung cancer from CT image using image processing and neural network. In Proceedings of the 2015 International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Jahangirnagar University, Dhaka, Bangladesh, 21–23 May 2015.

- Ponraj, D.N.; Jenifer, M.E.; Poongodi, P.; Manoharan, J.S. A survey on the preprocessing techniques of mammogram for the detection of breast cancer. J. Emerg. Trends Comput. Inf. Sci. 2011, 2, 656–664.

- Zhang, Y.; Sankar, R.; Qian, W. Boundary delineation in transrectal ultrasound image for prostate cancer. Comput. Biol. Med. 2007, 37, 1591–1599.

- Emre Celebi, M.; Kingravi, H.A.; Iyatomi, H.; Alp Aslandogan, Y.; Stoecker, W.V.; Moss, R.H.; Malters, J.M.; Grichnik, J.M.; Marghoob, A.A.; Rabinovitz, H.S.; et al. Border detection in dermoscopy images using statistical region merging. Skin Res. Technol. 2008, 14, 347–353.

- Tong, N.; Lu, H.; Ruan, X.; Yang, M.H. Salient object detection via bootstrap learning. In Proceedings of the IEEE Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1884–1892.

- Bozorgtabar, B.; Abedini, M.; Garnavi, R. Sparse coding based skin lesion segmentation using dynamic rule-based refinement. In International Workshop on Machine Learning in Medical Imaging; Springer: Cham, Switzerland, 2016; pp. 254–261.

- Li, X.; Li, Y.; Shen, C.; Dick, A.; Van Den Hengel, A. Contextual hypergraph modeling for salient object detection. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 3328–3335.

- Yuan, X.; Situ, N.; Zouridakis, G. A narrow band graph partitioning method for skin lesion segmentation. Pattern Recogn. 2009, 42, 1017–1028.

- Sikorski, J. Identification of malignant melanoma by wavelet analysis. In Proceedings of the Student/Faculty Research Day, New York, NY, USA, 7 May 2004.

- Chiem, A.; Al-Jumaily, A.; Khushaba, N.R. A novel hybrid system for skin lesion detection. In Proceedings of the 3rd International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP’07), Melbourne, Australia, 3–6 December 2007; pp. 567–572.

- Maglogiannis, I.; Zafiropoulos, E.; Kyranoudis, C. Intelligent segmentation and classification of pigmented skin lesions in dermatological images. In Advances in Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 214–223.

- Tanaka, T.; Torii, S.; Kabuta, I.; Shimizu, K.; Tanaka, M.; Oka, H. Pattern classification of nevus with texture analysis. In Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC’04), San Francisco, CA, USA, 1–5 September 2004; pp. 1459–1462.

- Zhou, H.; Chen, M.; Rehg, J.M. Dermoscopic interest point detector and descriptor. In Proceedings of the 6th IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI’09), Boston, MA, USA, 28 June–1 July 2009; pp. 1318–1321.

- Lee, C.; Landgrebe, D.A. Feature extraction based on decision boundaries. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 388–400.

- Garbaj, M.; Deshpande, A.S. Detection and Analysis of Skin Cancer in Skin Lesions by using Segmentation. IJARCCE 2015. Available online: https://pdfs.semanticscholar.org/2e8c/07298deb9c578077b5d0ae069fe26bd16b58.pdf (accessed on 1 August 2019).

- Johr, R.H. Dermoscopy: Alternative Melanocytic Algorithms—The ABCD Rule of Dermatoscopy, Menzies Scoring Method, and 7-Point Checklist; Elsevier: Amsterdam, The Netherlands, 2002.

- Lee, C.Y.; Gallagher, P.W.; Tu, Z. Generalizing pooling functions in convolutional neural networks: Mixed, gated, and tree. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics (AISTATS), Cadiz, Spain, 9–11 May 2016.

- Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation functions: Comparison of trends in practice and research for deep learning. arXiv 2018, arXiv:1811.03378.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Albarqouni, S.; Baur, C.; Achilles, F.; Belagiannis, V.; Demirci, S.; Navab, N. AggNet: Deep learning from crowds for mitosis detection in breast cancer histology images. IEEE Trans. Med. Imaging 2016, 35, 1313–1321.

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. Breast cancer histopathological image classification using convolutional neural networks. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 2560–2567.

- Wichakam, I.; Vateekul, P. Combining deep convolutional networks and SVMs for mass detection on digital mammograms. In Proceedings of the 8th International Conference on Knowledge and Smart Technology (KST), Bangkok, Thailand, 3–6 February 2016; pp. 239–244.

- Ertosun, M.G.; Rubin, D.L. Probabilistic visual search for masses within mammography images using deep learning. In Proceedings of the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Washington, DC, USA, 9–12 November 2015; pp. 1310–1315.

- Albayrak, A.; Bilgin, G. Mitosis detection using convolutional neural network based features. In Proceedings of the IEEE Seventeenth International Symposium on Computational Intelligence and Informatics (CINTI), Budapest, Hungary, 17–19 November 2016; pp. 335–340.

- Swiderski, B.; Kurek, J.; Osowski, S.; Kruk, M.; Barhoumi, W. Deep learning and non-negative matrix factorization in recognition of mammograms. In Proceedings of the Eighth International Conference on Graphic and Image Processing, International Society of Optics and Photonics, Tokyo, Japan, 8 February 2017; Volume 10225, p. 102250B.

- Suzuki, S.; Zhang, X.; Homma, N.; Ichiji, K.; Sugita, N.; Kawasumi, Y.; Ishibashi, T.; Yoshizawa, M. Mass detection using deep convolutional neural networks for mammographic computer-aided diagnosis. In Proceedings of the 55th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Tsukuba, Japan, 20–23 September 2016; pp. 1382–1386.

- Wang, C.; Elazab, A.; Wu, J.; Hu, Q. Lung nodule classification using deep feature fusion in chest radiography. Comput. Med. Imaging Graph. 2017, 57, 10–18.

- Dou, Q.; Chen, H.; Yu, L.; Qin, J.; Heng, P.-A. Multilevel contextual 3-D CNNs for false positive reduction in pulmonary nodule detection. IEEE Trans. Biomed. Eng. 2017, 64, 1558–1567.

- Shen, W.; Zhou, M.; Yang, F.; Yu, D.; Dong, D.; Yang, C.; Tian, J. Multicrop convolutional neural networks for lung nodule malignancy suspiciousness classification. Pattern Recognit. 2017, 61, 663–673.

- Hua, K.L.; Hsu, C.H.; Hidayati, S.C.; Cheng, W.H.; Chen, Y.J. Computer-aided classification of lung nodules on computed tomography images via deep learning technique. Onco Targets Ther. 2015, 57, 2015–2022.

- Hirayama, K.; Tan, J.K.; Kim, H. Extraction of GGO candidate regions from the LIDC database using deep learning. In Proceedings of the Sixteenth International Conference on Control, Automation and Systems (ICCAS), Gyeongju, Korea, 16–19 October 2016; pp. 724–727.

- Setio, A.A.A.; Ciompi, F.; Litjens, G.; Gerke, P.; Jacobs, C.; Van Riel, S.J.; Wille, M.M.W.; Naqibullah, M.; Sanchez, C.I.; van Ginneken, B. Pulmonary nodule detection in CT images: False positive reduction using multi-view convolutional networks. IEEE Trans. Med. Imaging 2016, 35, 1160–1169.

- Hussein, S.; Gillies, R.; Cao, K.; Song, Q.; Bagci, U. TumorNet: Lung Nodule Characterization Using Multi-View Convolution Neural Network with Gaussian Process. In Proceedings of the IEEE 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, Australia, 18–21 April 2017; pp. 1007–1010.

- Mahbod, A.; Ecker, R.; Ellinger, I. Skin lesion classification using hybrid deep neural networks. arXiv 2017, arXiv:1702.08434.

- DermQuest. Online Medical Resource. Available online: http://www.dermquest.com (accessed on 10 December 2018).

- Dey, T.K. Curve and Surface Reconstruction: Algorithms with Mathematical Analysis; Cambridge Monographs on Applied and Computational Mathematics: Cambridge, UK, 2006.

- Pomponiu, V.; Nejati, H.; Cheung, N.-M. Deepmole: Deep neural networks for skin mole lesion classification. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2623–2627.

- Gutman, D.; Codella, N.C.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISIC). arXiv 2016, arXiv:1605.01397.

- Majtner, T.; Yildirim-Yayilgan, S.; Hardeberg, J.Y. Combining deep learning and hand-crafted features for the skin lesion classification. In Proceedings of the Sixth International Conference on Image Processing Theory Tools and Applications (IPTA), Oulu, Finland, 12–15 December 2016; pp. 1–6.

- Demyanov, S.; Chakravorty, R.; Abedini, M.; Halpern, A.; Garnavi, R. Classification of dermoscopy patterns using deep convolutional neural networks. In Proceedings of the 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 364–368.

- Giotis, I.; Molders, N.; Land, S.; Biehl, M.; Jonkman, M.F.; Petkov, N. MED-NODE: A computer-assisted melanoma diagnosis system using non-dermoscopic images. Expert Syst. Appl. 2015, 42, 6578–6585.

- Nasr-Esfahani, E.; Samavi, S.; Karimi, N.; Soroushmehr, S.M.R.; Jafari, M.H.; Ward, K.; Najarian, K. Melanoma detection by analysis of clinical images using convolutional neural network. In Proceedings of the IEEE 38th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1373–1376.

- An Atlas of Clinical Dermatology. 2014. Available online: http://www.danderm.dk/atlas/ (accessed on 14 September 2018).

- Online Medical Resources. 2014. Available online: http://www.dermnetnz.org (accessed on 20 November 2018).

- Interactive Dermatology Atlas. 2014. Available online: http://www.dermatlas.net/atlas/index. (accessed on 22 December 2018).

- Sabouri, P.; GholamHosseini, H. Lesion border detection using deep learning. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 1416–1421.

- Litjens, G.; Toth, R.; van de Ven, W.; Hoeks, C.; Kerkstra, S.; van Ginneken, B.; Vincent, G.; Guillard, G.; Birbeck, N.; Zhang, J. Evaluation of prostate segmentation algorithms for MRI: The PROMISE12 challenge. Med. Image Anal. 2014, 18, 359–373.

- Yan, K.; Li, C.; Wang, X.; Li, A.; Yuan, Y.; Feng, D.; Khadra, M.; Kim, J. Automatic prostate segmentation on MR images with deep network and graph model. In Proceedings of the 38th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–18 August 2016; pp. 635–638.

- Ma, L.; Guo, R.; Zhang, G.; Tade, F.; Schuster, D.M.; Nieh, P.; Master, V.; Fei, B. Automatic segmentation of the prostate on CT images using deep convolutional neural network. In Proceedings of the SPIE Medical Imaging, International Society for Optics and Photonics, Orlando, FL, USA, 11–16 February 2017; Volume 10133, p. 101332O.

- Kistler, M.; Bonaretti, S.; Pfahrer, M.; Niklaus, R.; Buchler, P. The virtual skeleton database: An open access repository for biomedical research and collaboration. J. Med. Internet Res. 2013, 15, e245.

- Zhao, L.; Jia, K. Deep feature learning with discrimination mechanism for brain tumor segmentation and diagnosis. In Proceedings of the International Conference on Intelligent Information Hiding and Multimedia Signal Processing (IIH-MSP), Adelaide, SA, Australia, 23–25 September 2015; pp. 306–309.

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251.

- Kamnitsas, K.; Ledig, C.; Newcombe, V.F.; Simpson, J.P.; Kane, A.D.; Menon, D.K.; Rueckert, D.; Glocker, B. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 2017, 36, 61–78. [Google Scholar] [CrossRef] [PubMed]

- Zhao, X.; Wu, Y.; Song, G.; Li, Z.; Zhang, Y.; Fan, Y. A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med. Image Anal. 2018, 43, 98–111. [Google Scholar] [CrossRef] [PubMed]

- Sitinukunwattana, K.; Snead, D.R.; Rajpoot, N.M. A stochastic polygons model for glandular structures in colon histology images. IEEE Trans. Med. Imaging 2015, 34, 2366–2378. [Google Scholar] [CrossRef] [PubMed]

- Sitinukunwattana, K.; Pluim, J.P.; Chen, H.; Qj, X.; Heng, P.-A.; Guo, Y.B.; Wang, L.Y.; Matuszewski, B.J.; Bruni, E.; Sanchez, U. Gland segmentation in colon histology images: The glas challenge contest. Med. Image Anal 2017, 35, 489–502. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Chen, H.; Qj, X.; Yu, L.; Heng, P.-A. DCAN: Deep contour-aware networks for accurate gland segmentation. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2487–2496. [Google Scholar]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef] [PubMed]

- Paul, R.; Hawkins, S.H.; Hall, L.O.; Goldgof, D.B.; Gillies, R.J. Combining deep neural network and traditional image features to improve survival prediction accuracy for lung cancer patients from diagnostic CT. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 2570–2575. [Google Scholar]

- Kim, D.H.; Kim, S.T.; Ro, Y.M. Latent feature representation with 3-D multi-view deep convolutional neural network for bilateral analysis in digital breast tomosynthesis. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 927–931. [Google Scholar]

- Liu, R.; Hall, L.O.; Goldgof, D.B.; Zhou, M.; Gatenby, R.A.; Ahmed, K.B. Exploring deep features from brain tumor magnetic resonance images via transfer learning. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 235–242. [Google Scholar]

- Kallen, H.; Molin, J.; Heyden, A.; Lundstrom, C.; Astrom, K. Towards grading gleason score using generically trained deep convolutional neural networks. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 1163–1167. [Google Scholar]

- Gummeson, A.; Arvdsson, I.; Ohlsson, M.; Overgaard, N.C.; Krzyzanowska, A.; Heyden, A.; Bjartell, A.; Astrom, K. Automatic Gleason grading of H&E stained microscopic prostate images using deep convolutional neural networks. In Proceedings of the SPIE Medical Imaging, International Society of Optics and Photonics, Orlando, FL, USA, 11–16 February 2017; Volume 10140, p. 101400S. [Google Scholar]

- Kwak, J.T.; Hewitt, S.M. Lumen-based detection pf prostate cancer via convolutional neural networks. In Proceedings of the SPIE Medical Imaging, International Society of Optics and Photonics, Orlando, FL, USA, 11–16 February 2017; Volume 10140, p. 1014008. [Google Scholar]

- Zhu, X.; Yao, J.; Huang, J. Deep convolutional neural network for survival analysis with pathological images. In Proceedings of the 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Shenzhen, China, 15–18 December 2016; pp. 544–547. [Google Scholar]

- Ahmed, K.B.; Hall, L.O.; Goldgof, D.B.; Liub, R.; Gatenby, R.A. Fine-tuning convolutional deep features for MRI based brain tumor classification. In SPIE Proceedings: Medical Imaging 2017: Computer-Aided Diagnosis; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10134, p. 101342E. [Google Scholar]

- Song, Y.; Zhang, L.; Chen, S.; Ni, D.; Lei, B.; Wang, T. Accurate segmentation of cervical cytoplasm and nuclei based on multi-scale convolutional network and graph partitioning. IEEE Trans. Biomed. Eng. 2015, 62, 2421–2433. [Google Scholar] [CrossRef] [PubMed]

- Cha, K.H.; Hadjiiski, L.; Samala, R.K.; Chan, H.P.; Caoili, E.M.; Cohan, R.H. Urinary bladder segmentation in CT urography using deep-learning convolutional neural network and level sets. Med. Phys. 2016, 43, 1882–1896. [Google Scholar] [CrossRef] [PubMed]

- Gibson, E.; Robu, M.R.; Thompson, S.; Edwards, P.E.; Schneider, C.; Gurusamy, K.; Davidson, B.; Hawkes, D.J.; Barratt, D.C.; et al. Deep residual networks for automatic segmentation of laparoscopic videos of the liver. In Medical Imaging 2017: Image-Guided Procedures, Robotic Interventions, and Modeling; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10135, p. 101351M. [Google Scholar]

- Gordon, M.; Hadjiiski, L.; Cha, K.; Chan, H.-P.; Samala, R.; Cohan, R.H.; Caoili, E.M. Segmentation of inner and outer bladder wall using deep-learning convolutional neural networks in CT urography. In Medical Imaging 2017: Computer-Aided Diagnosis; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10134, p. 1013402. [Google Scholar]

- Xu, T.; Zhang, H.; Huang, X.; Zhang, S.; Metaxas, D.N. Multimodal deep learning for cervical dysplasia diagnosis. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2016; pp. 115–123. [Google Scholar]

- BenTaieb, A.; Kawahara, J.; Hamarneh, G. Multi-loss convolutional networks for gland analysis in microscopy. In Proceedings of the IEEE Thirteenth International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 642–645. [Google Scholar]

- Xing, F.; Xie, Y.; Yang, L. An automatic learning-based framework for robust nucleus segmentation. IEEE Trans. Med. Imaging 2016, 35, 550–566. [Google Scholar] [CrossRef] [PubMed]

- Mao, Y.; Yin, Z.; Schober, J. A deep convolutional neural network trained on representative samples for circulating tumor cell detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–6. [Google Scholar]

- Li, W.; Jia, F.; Hu, Q. Automatic segmentation of liver tumor in CT images with deep convolutional neural networks. J. Comput. Commun. 2015, 3, 146. [Google Scholar] [CrossRef]

- Song, Y.; Cheng, J.-Z.; Ni, D.; Chen, S.; Lei, B.; Wang, T. Segmenting overlapping cervical cell in pap smear images. In Proceedings of the IEEE Thirteenth International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 1159–1162. [Google Scholar]

- Cha, K.H.; Hadjiiski, L.M.; Chan, H.-P.; Samala, R.K.; Cohan, R.H.; Caoili, E.M.; Paramagul, C.; Alva, A.; Weizer, A.Z. Bladder cancer treatment response assessment using deep learning in CT with transfer learning. In Medical Imaging 2017: Computer-Aided Diagnosis; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10134, p. 1013404. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. arXiv 2015, arXiv:1411.4038v2. [Google Scholar]

- Jain, V.; Seung, S. Natural image denoising with convolutional networks. Adv. Neural Inf. Process. Syst. 2009, 21, 769–776. [Google Scholar]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 184–199. [Google Scholar]

- Noh, H.; Hong, S.; Han, B. Learning deconvolution network for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1520–1528. [Google Scholar]

- Mahmood, F.; Borders, D.; Chen, R.; McKay, G.N.; Salimian, K.J.; Baras, A.; Durr, N.J. Deep adversarial training for multi-organ nuclei segmentation in histopathology images. IEEE Trans. Med. Imaging 2019. [Google Scholar] [CrossRef] [PubMed]

- Baur, C.; Albarqouni, S.; Navab, N. MelanoGANs: High resolution skin lesion synthesis with GANs. arXiv 2018, arXiv:1804.04338. [Google Scholar]

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. arXiv 2017, arXiv:1703.10593. [Google Scholar]

- Wang, T.C.; Liu, M.Y.; Zhu, J.Y.; Tao, A.; Kautz, J.; Catanzaro, B. High-resolution image synthesis and semantic manipulation with conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 19–21 June 2018; Volume 1, p. 5. [Google Scholar]

- Quan, T.M.; Nguyen-Duc, T.; Jeong, W.K. Compressed sensing MRI reconstruction with cyclic loss in generative adversarial networks. arXiv 2017, arXiv:1709.00753. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Yu, B.; Wang, L.; Zu, C.; Lalush, D.S.; Lin, W.; Wu, X.; Zhou, J.; Shen, D.; Zhou, L. 3D conditional generative adversarial networks for high-quality PET image estimation at low dose. NeuroImage 2018, 174, 550–562. [Google Scholar] [CrossRef]

- Mardani, M.; Gong, E.; Cheng, J.Y.; Vasanawala, S.; Zaharchuk, G.; Alley, M.; Thakur, N.; Han, S.; Dally, W.; Pauly, J.M.; et al. Deep generative adversarial networks for compressed sensing automates MRI. arXiv 2017, arXiv:1706.00051. [Google Scholar]

- Dou, Q.; Ouyang, C.; Chen, C.; Chen, H.; Heng, P.-A. Unsupervised cross-modality domain adaptation of convnets for biomedical image segmentations with adversarial loss. arXiv 2018, arXiv:1804.10916. [Google Scholar]

- Li, Z.; Wang, Y.; Yu, J. Brain tumor segmentation using an adversarial network. In International MICCAI Brainlesion Workshop; Springer: Cham, Switzerland, 2017; pp. 123–132. [Google Scholar]

- Rezaei, M.; Yang, H.; Meinel, C. Whole heart and great vessel segmentation with context-aware of generative adversarial networks. In Bildverarbeitung für die Medizin; Springer Vieweg: Berlin/Heidelberg, Germany, 2018; pp. 353–358. [Google Scholar]

- Zhang, Y.; Miao, S.; Mansi, T.; Liao, R. Task driven generative modeling for unsupervised domain adaptation: Application to X-ray image segmentation. arXiv 2018, arXiv:1806.07201. [Google Scholar]

- Chen, C.; Dou, Q.; Chen, H.; Heng, P.-A. Semantic-aware generative adversarial nets for unsupervised domain adaptation in chest X-ray segmentation. arXiv 2018, arXiv:1806.00600. [Google Scholar]

- Alex, V.; Mohammed Safwan, K.P.; Chennamsetty, S.S.; Krishnamurthi, G. Generative adversarial networks for brain lesion detection. In Medical Imaging 2017: Image Processing; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10133, p. 101330G. [Google Scholar]

- Schlegl, T.; Seebock, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In International Conference on Information Processing in Medical Imaging; Springer: Cham, Switzerland, 2017; pp. 146–157. [Google Scholar]

- Mondal, A.K.; Dolz, J.; Desrosiers, C. Few-shot 3D multi-modal medical image segmentation using generative adversarial learning. arXiv 2018, arXiv:1810.12241. [Google Scholar]

- Singh, V.K.; Romani, S.; Rashwan, H.A.; Akram, F.; Pandey, N.; Sarker, M.M.K.; Abdulwahab, S.; Torrents-Barrena, J.; Saleh, A.; Arquez, M.; et al. Conditional generative adversarial and convolutional networks for X-ray breast mass segmentation and shape classification. arXiv 2018, arXiv:1805.10207v2. [Google Scholar]

- Graves, A.; Mohamed, A.R.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP, Vancouver, BC, Canada, 26–31 May 2013. [Google Scholar] [CrossRef]

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent neural network regularization. arXiv 2014, arXiv:1409.2329. [Google Scholar]

- Bishop, C.M. Neural Networks for Pattern Recognition; Oxford University Press: Oxford, UK, 1995. [Google Scholar]

- Ng, A. Sparse autoencoder. CS294A Lect. Notes 2011, 72, 1–19. [Google Scholar]

- Liu, G.; Bao, H.; Han, B. A stacked autoencoder-based deep neural network for achieving gearbox fault diagnosis. Math. Probl. Eng. 2018. [Google Scholar] [CrossRef]

- Hou, L.; Nguyen, V.; Kanevsky, A.B.; Samaras, D.; Kurc, T.M.; Zhao, T.; Gupta, R.R.; Gao, Y.; Chen, W.; Foran, D.; et al. Sparse autoencoder for unsupervised nucleus detection and representation in histopathology images. Pattern Recognit. 2019, 86, 188–200. [Google Scholar] [CrossRef]

- Guo, X.; Liu, X.; Zhu, E.; Yin, J. Deep clustering with convolutional autoencoders. In International Conference on Neural Information Processing; Springer: Cham, Switzerland, 2017; pp. 373–382. [Google Scholar]

- Zhang, Y. A Better Autoencoder for Image: Convolutional Autoencoder. ICONIP17-DCEC. Available online: http://users.cecs.anu.edu.au/ Tom.Gedeon/conf/ABCs2018/paper/ABCs2018paper58.pdf (accessed on 23 March 2017).

- Hinton, G.E. Deep belief networks. Scholarpedia 2009, 4, 5947. [Google Scholar] [CrossRef]

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554. [Google Scholar] [CrossRef]

- Kallenberg, M.; Petersen, K.; Nielsen, M.; Ng, A.Y.; Diao, P.; Igel, C.; Vachon, C.M.; Holland, K.; Winkel, R.R.; Karssemeijer, N.; et al. Unsupervised deep learning applied to breast density segmentation and mammographic risk scoring. IEEE Trans. Med. Imaging 2016, 35, 1322–1331. [Google Scholar] [CrossRef]

- Dhungel, N.; Carneiro, G.; Bradley, A.P. Deep structured learning for mass segmentation from mammograms. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 2950–2954. [Google Scholar] [CrossRef]

- Dhungel, N.; Carneiro, G.; Bradley, A.P. Automated Mass Detection in Mammograms Using Cascaded Deep Learning and Random Forests. In Proceedings of the 2015 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Adelaide, Australia, 23–25 November 2015. [Google Scholar]

- Taqdir, B. Cancer detection techniques—A review. Int. Res. J. Eng. Technol. (IRJET) 2018, 4, 1824–1840. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Masood, A.; Al-Jumaily, A.; Anam, K. Self-supervised learning model for skin cancer diagnosis. In Proceedings of the Seventh International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015; pp. 1012–1015. [Google Scholar]

- Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P.-A. Automated melanoma recognition in dermoscopy images via very deep residual networks. IEEE Trans. Med. Imaging 2016, 36, 994–1004. [Google Scholar] [CrossRef] [PubMed]

- Chandrahasa, M.; Vadigeri, V.; Salecha, D. Detection of skin cancer using image processing techniques. Int. J. Mod. Trends Eng. Res. (IJMTER) 2016, 5, 111–114. [Google Scholar]

- Saha, S.; Gupta, R. An automated skin lesion diagnosis by using image processing techniques. Int. J. Recent Innov. Trends Comput. Commun. 2015, 5, 1081–1085. [Google Scholar]

- Mehta, P.; Shah, B. Review on techniques and steps of computer aided skin cancer diagnosis. Procedia Comput. Sci. 2016, 85, 309–316. [Google Scholar] [CrossRef]

- Bhuiyan, M.A.H.; Azad, I.; Uddin, M.K. Image processing for skin cancer features extraction. Int. J. Sci. Eng. Res. 2013, 4, 1–6. [Google Scholar]

- Sumithra, R.; Suhil, M.; Guru, D.S. Segmentation and classification of skin lesions for disease diagnosis. Procedia Comput. Sci. 2015, 45, 76–85. [Google Scholar] [CrossRef]

- He, J.; Dong, Q.; Yi, S. Prediction of skin cancer based on convolutional neural network. In Recent Developments in Mechatronics and Intelligent Robotics; Springer: Cham, Switzerland, 2018; pp. 1223–1229. [Google Scholar]

- Rehman, M.; Khan, S.H.; Rizvi, S.D.; Abbas, Z.; Zafar, A. Classification of skin lesion by interference of segmentation and convolution neural network. In Proceedings of the 2nd International Conference on Engineering Innovation (ICEI), Bangkok, Thailand, 5–6 July 2018; pp. 81–85. [Google Scholar]

- Pham, T.C.; Luong, C.M.; Visani, M.; Hoang, V.D. Deep CNN and data augmentation for skin lesion classification: Intelligent information and database systems. In Intelligent Information and Database Systems; Springer: Cham, Switzerland, 2018; pp. 573–582. [Google Scholar]

- Zhang, X.; Wang, S.; Liu, J.; Tao, C. Towards improving diagnosis of skin diseases by combining deep neural network and human knowledge. BMC Med. Inform. Decis. Mak. 2018, 18, 59. [Google Scholar] [CrossRef]

- Vesal, S.; Ravikumar, N.; Maier, A. SkinNet: A deep learning framework for skin lesion segmentation. arXiv 2018, arXiv:1806.09522v1. [Google Scholar]

- Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A.; et al. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, S.; Yu, J.; Yu, D. Skin lesion segmentation using atrous convolution via DeepLab v3. arXiv 2018, arXiv:1807.08891. [Google Scholar]

- Maia, L.B.; Lima, A.; Pereira, R.M.P.; Junior, G.B.; de Almeida, J.D.S.; de Paiva, A.C. Evaluation of melanoma diagnosis using deep features. In Proceedings of the 25th International Conference on Systems, Signals and Image Processing (IWSSIP), Maribor, Slovenia, 20–22 June 2018. [Google Scholar]

- Vesal, S.; Patil, S.M.; Ravikumar, N.; Maier, A.K. A multi-task framework for skin lesion detection and segmentation. arXiv 2018, arXiv:1808.01676. [Google Scholar]

- Rezvantalab, A.; Safigholi, H.; Karimijeshni, S. Dermatologist level dermoscopy skin cancer classification using different deep learning convolutional neural networks algorithms. arXiv 2018, arXiv:1810.10348. [Google Scholar]

- Walker, B.N.; Rehg, J.M.; Kalra, A.; Winters, R.M.; Drews, P.; Dascalu, J.; David, E.O.; Dascalu, A. Dermoscopy diagnosis of cancerous lesions utilizing dual deep learning algorithms via visual and audio (sonification) outputs: Laboratory and prospective observational studies. EBioMedicine 2019, 40, 176–183. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Horie, Y.; Yoshio, T.; Aoyama, K.; Yoshimizu, S.; Horiuchi, Y.; Ishiyama, A.; Hirasawa, T.; Tsuchida, T.; Ozawa, T.; Ishihara, S.; et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest. Endosc. 2019, 89, 25–32. [Google Scholar] [CrossRef] [PubMed]

- Gómez-Martín, I.; Moreno, S.; Duran, X.; Pujol, R.M.; Segura, S. Diagnostic accuracy of non-melanocytic pink flat skin lesions on the legs: Dermoscopic and reflectance confocal microscopy evaluation. Acta Dermato-Venereologica 2019, 99, 33–40. [Google Scholar] [CrossRef] [PubMed]

- Pandey, P.; Saurabh, P.; Verma, B.; Tiwari, B. A multi-scale retinex with color restoration (MSR-CR) technique for skin cancer detection. In Soft Computing for Problem Solving; Springer: Singapore, 2018; pp. 465–473. [Google Scholar]

- Guo, Y.; Gao, Y.; Shen, D. Deformable MR prostate segmentation via deep feature learning and sparse patch matching. IEEE Trans. Med. Imaging 2016, 35, 1077–1089. [Google Scholar] [CrossRef] [PubMed]

- Milletari, F.; Navab, N.; Ahmadi, S.-A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the Fourth International Conference on 3D-Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571. [Google Scholar]

- Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P.A. Integrating online and offline three-dimensional deep learning for automated polyp detection in colonoscopy videos. IEEE J. Biomed. Health Inform. 2017, 21, 65–75. [Google Scholar] [CrossRef]

- Tian, Z.; Liu, L.; Zhang, Z.; Fei, B. PSNet: Prostate segmentation on MRI based on a convolutional neural network. J. Med. Imaging 2018, 5, 021208. [Google Scholar] [CrossRef]

- Armato, S.G.; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Zhao, B.; Aberle, D.R.; Henschke, C.I.; Hoffman, E.A. The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Med. Phys. 2011, 38, 915–931. [Google Scholar] [CrossRef]

- Sabbaghi, S.; Aldeen, M.; Garnavi, R. A deep bag-of-features model for the classification of melanomas in dermoscopy images. In Proceedings of the IEEE 38th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1369–1372. [Google Scholar]

| Pre-Processing | Image Segmentation | Post-Processing |
|---|---|---|
| Contrast adjustment | Histogram thresholding | Opening and closing operations |
| Vignetting effect removal | Distributed and localized region identification | Island removal |
| Color correction | Clustering & Active contours | Region merging |
| Image smoothing | Supervised learning | Border expansion |
| Hair removal | Edge detection & Fuzzy logic | Smoothing |
| Normalization and localization | Probabilistic modeling and graph theory | |

| Positive Features | Negative Features |
|---|---|
| Blue-white veil | Lesion’s symmetry |
| Scar-like depigmentation | Single color presence |
| Multi-colors | |
| Gray and blue dots | |
| Broadened networks | |
| Pseudopods | |
| Globules | |
| Radial streaming | |

| Method Name | Online Code Link |
|---|---|
| Convolutional Autoencoder | https://sefiks.com/2018/03/23/convolutional-autoencoder-clustering-images-with-neural-networks/ |
| Stacked Autoencoder | https://github.com/siddharth-agrawal/Stacked-Autoencoder |
| Restricted Boltzmann Machine | https://github.com/echen/restricted-boltzmann-machines |
| Recurrent neural networks | https://github.com/anujdutt9/RecurrentNeuralNetwork |
| Convolutional neural networks | https://gist.github.com/JiaxiangZheng/a60cc8fe1bf6e20c1a41abc98131d518 |
| Convolutional neural networks | https://github.com/siddharth-agrawal/Convolutional-Neural-Network |
| Multi-scale CNN | https://github.com/alexhagiopol/multiscale-CNN-classifier |
| Multi-instance learning CNN | https://github.com/AMLab-Amsterdam/AttentionDeepMIL |
| Multi-instance learning CNN | https://github.com/yanyongluan/MINNs |
| Long short-term memory | https://github.com/wojzaremba/lstm |

| Application Type | Modality | Dataset | Reference |
|---|---|---|---|
| Breast Cancer Classification | Histopathology | BreakHis | Spanhol et al. [49] |
| Mass Detection | Histopathology | INbreast | Wichakam et al. [50] |
| Mass segmentation | Mammography | DDSM | Ertosun et al. [51] |
| Mitosis Detection | Histopathology | MITOS-ATYPIA-14 | Albayrak et al. [52] |
| Lesion recognition | Mammography | DDSM | Swiderski et al. [53] |
| Mass Detection | Histopathology | DDSM | Suzuki et al. [54] |
| Lung nodule (LN) Classification | CT Slices | JSRT | Wang et al. [55] |
| Pulmonary nodule Detection | Volumetric CT | LIDC-IDRI | Dou et al. [56] |
| Lung nodule (LN) Suspiciousness classification | Volumetric CT | LIDC-IDRI | Shen et al. [57] |
| Nodule characterization | Volumetric CT | LIDC-IDRI | Hua et al. [58] |
| Ground glass opacity (GGO) extraction | CT Slices | LIDC | Hirayama et al. [59] |
| Pulmonary nodule detection | Volumetric CT | LIDC | Setio et al. [60] |
| Nodule Characterization | Volumetric CT | DLCST, LIDC, NODE09 | Hussein et al. [61] |
| Skin lesion classification | Dermoscopy | ISIC | Mahbod et al. [62] |
| Skin lesion classification | Dermoscopy | DermIS, DermQuest [63,64] | Pomponiu et al. [65] |
| Skin lesion classification | Dermoscopy | ISIC [66] | Majtner et al. [67] |
| Dermoscopy patterns classification | Dermoscopy | ISIC | Demyanov et al. [68] |
| Melanoma detection | Clinical photography | MED-NODE [69] | Nasr-Esfahani et al. [70] |
| Lesion border detection | Clinical photography | DermIS, Online dataset, DermQuest [71,72,73] | Sabouri et al. [74] |
| Prostate Segmentation | MRI | PROMISE12 [75] | Yan et al. [76] |
| Prostate Segmentation | CT Scans | PROMISE12 | Maa et al. [77] |
| Brain tumor Segmentation Cancer detection | MRI | BraTS [78] | Zhao et al. [79] |
| Brain tumor Segmentation | MRI | BraTS | Pereira et al. [80] |
| Brain tumor Segmentation | MRI | BraTS | Kamnitsas et al. [81] |
| Prostate segmentation | MRI | BraTS | Zhao et al. [82] |
| Gland segmentation | Histopathology | Warwick-QU [83,84] | Chen et al. [85] |

| Application Type | Modality | Reference |
|---|---|---|
| Dermatologist-level skin cancer classification | Dermoscopy | Esteva et al. [86] |
| Survival Prediction | CT Slices | Paul et al. [87] |
| Latent bilateral feature representation learning | Tomosynthesis | Kim et al. [88] |
| Feature learning of Brain tumor | MRI | Liu et al. [89] |
| Gleason grading | Histopathology | Kallen et al. [90] |
| Gleason grading | Histopathology | Gummeson et al. [91] |
| Lumen-based prostate cancer detection | Histopathology | Kwak et al. [92] |
| Survival analysis | Histopathology | Zhu et al. [93] |
| Classification of Brain tumor | MRI | Ahmed et al. [94] |
| Cervical cytoplasm and nuclei segmentation | Histopathology | Song et al. [95] |
| Urinary bladder segmentation | CT Slices | Cha et al. [96] |
| Liver segmentation on Laparoscopic videos | Laparoscopy | Gibson et al. [97] |
| Inner/outer bladder wall segmentation | CT Slices | Gordon et al. [98] |
| Cervical dysplasia diagnosis | Digital cervicography | Xu et al. [99] |
| Colon adenocarcinoma glands segmentation | Histopathology | BenTaieb et al. [100] |
| Nucleus segmentation | Histopathology | Xing et al. [101] |
| Circulating tumor-cell detection | Histopathology | Mao et al. [102] |
| Liver tumor segmentation | CT Slices | Li et al. [103] |
| Cervical cytoplasm segmentation | Histopathology | Song et al. [104] |
| Bladder cancer treatment response assessment | CT Slices | Cha et al. [105] |

| Cause | Risk Factors |
|---|---|
| 1. Sunlight | (a) UV radiation leading to cancer |
| | (b) Sunburn blisters: adults who have had sunburns are more prone to cancer |
| | (c) Tanning |
| 2. Tanning booths and sun lamps | Lead to cancer before the age of 30 |
| 3. Inherited | Two or more carriers of melanoma in the family pass the disease on to their descendants |
| 4. Easily burnable skin | Gray/blue eyes, fair/pale skin, blond/red hair |
| 5. Medication side effects | Side effects of antidepressants, antibiotics, and hormones |

| Application Type | Modality | Reference |
|---|---|---|
| Prostate Segmentation | 3D MRI | Yu et al. [142] |
| Prostate Segmentation | 3D MRI | Milletari et al. [163] |
| Prostate Segmentation | MRI | Zhao et al. [79] |
| Polyp detection | Colonoscopy | Yu et al. [164] |

| Application Name | References | No. of Papers |
|---|---|---|
| Breast Cancer | [49,50,52,53,54,85,88,114,136,137,138] | 12 |
| Lung Cancer | [12,56,57,59,60,61,87,93] | 12 |
| Prostate Cancer | [76,77,90,91,92] | 5 |
| Brain Cancer | [79,80,81,89,94] | 5 |
| Skin Cancer | [42,65,67,68,70,74,86,141,142,143,144,145,147,148,149,150,151,152,153,154,155,156,157,158,159,161,167] | 27 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Munir, K.; Elahi, H.; Ayub, A.; Frezza, F.; Rizzi, A. Cancer Diagnosis Using Deep Learning: A Bibliographic Review. Cancers 2019, 11, 1235. https://doi.org/10.3390/cancers11091235
