Towards Recognising Learning Evidence in Collaborative Virtual Environments: A Mixed Agents Approach †

Abstract

1. Introduction

1.1. Collaborative Learning Environments

1.2. Techniques and Approaches

1.2.1. Agents and Multi-Agent Systems

1.2.2. Fuzzy Logic

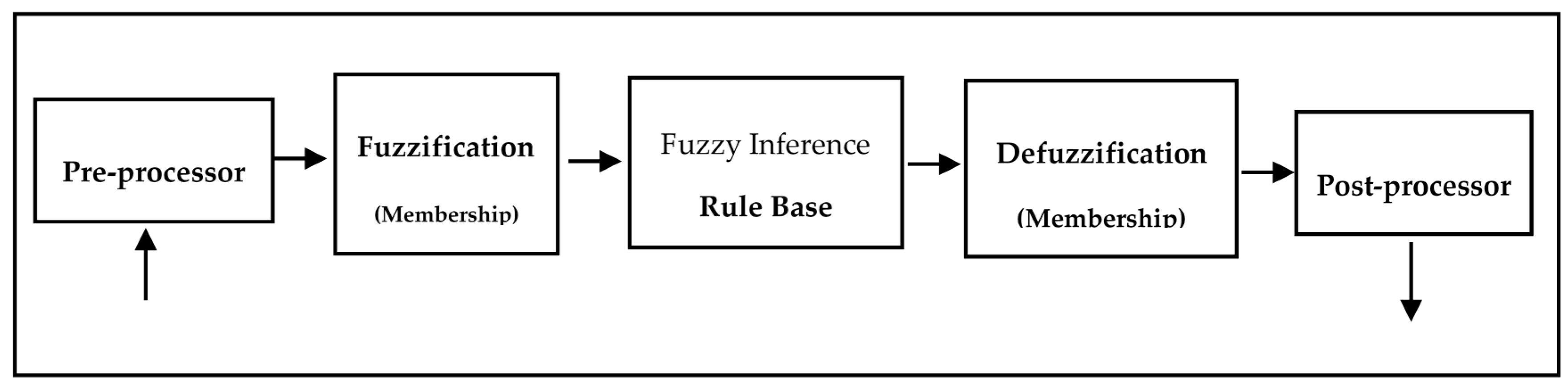

- pre-processor, the process to obtain the data (crisp values) and generate the fuzzy inputs;

- fuzzification, to convert the data into fuzzy variables by using membership functions;

- fuzzy inference, which contains the rule base and the inference engine;

- defuzzification, to convert the output of the inference into a numeric value; and

- post-processor, to reduce the final data to be sent to the process.
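The five stages listed above can be read as a simple function pipeline. The following is a minimal sketch rather than the implementation used in this work: the single `activity` input, the two linear membership functions, the two rules, the representative scores (25 and 75), and the weighted-average defuzzifier are all assumptions chosen to keep the example short.

```python
from typing import Dict


def pre_process(raw_clicks: int, max_clicks: int = 50) -> float:
    """Pre-processor: scale a raw count to a crisp value in [0, 1].

    max_clicks is a hypothetical upper bound, not a value from the paper.
    """
    return min(max(raw_clicks / max_clicks, 0.0), 1.0)


def fuzzify(activity: float) -> Dict[str, float]:
    """Fuzzification: two complementary linear membership functions."""
    return {"low": 1.0 - activity, "high": activity}


def infer(memberships: Dict[str, float]) -> Dict[str, float]:
    """Inference with two assumed rules:
    IF activity is low THEN score is poor; IF activity is high THEN score is good.
    """
    return {"poor": memberships["low"], "good": memberships["high"]}


def defuzzify(strengths: Dict[str, float]) -> float:
    """Defuzzification: weighted average of one representative score per term."""
    representative = {"poor": 25.0, "good": 75.0}  # assumed values
    total = sum(strengths.values())
    return sum(representative[t] * s for t, s in strengths.items()) / total


def post_process(score: float) -> float:
    """Post-processor: round the score before it is sent to the process."""
    return round(score, 1)


def evaluate(raw_clicks: int) -> float:
    """Run one crisp input through all five stages in order."""
    return post_process(defuzzify(infer(fuzzify(pre_process(raw_clicks)))))
```

Keeping each stage as a separate function mirrors the list: any stage (for example, the defuzzifier) can be swapped without touching the others.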

2. Related Work

3. Mixed Agents Model (MixAgent)

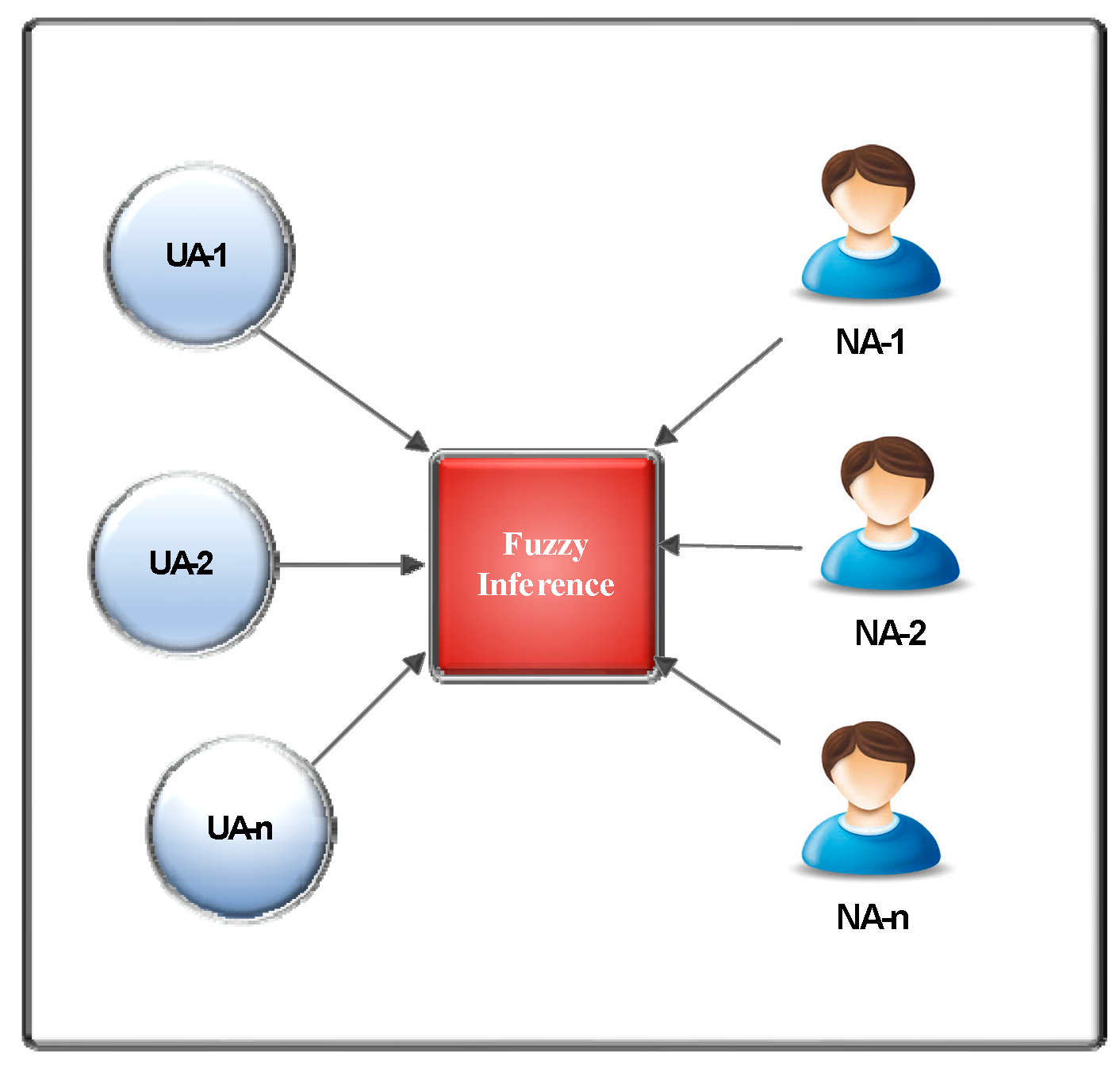

- Software Agents (User Agents (UAs)): Once a student has been authorised in the virtual world, a user agent (UA) is assigned to that student. UAs monitor the activities of individuals in real time, save the data, and then transfer it to the fuzzy inference engine.

- Natural Agents (NA): Evaluation by peers is particularly suitable for group exercises [34], and can provide insight that conventional technology would struggle to pick up on [28]. The students themselves are the natural agents, providing details about the skills and qualities of other learners within the group setting. When learners are working together in assigned tasks, they can rate each other’s performance by using a rating tool. These quantitative scores are compiled and are then transferred for fuzzy reasoning. Natural agents can assist in measuring the quality of learning outcomes, which can be difficult to achieve when relying solely on automated approaches.

- Fuzzy Inference: Agents have common objectives and they collaborate in real time to amass data that can then be transferred for fuzzy inference. The inference is based on the fuzzy logic approach to make sense of the data collected by all of the agents. Once the data is collected, it can be analysed according to fuzzy rules in order to shed light on the performance of individual learners. Moreover, the inferences can be used to resolve relationships between the data and its meaning in order to infer further evidence of learning. The fuzzy logic method can handle multiple values and perform human-like reasoning, going some way towards providing a unified vision of agency within our model. More details about applying the fuzzy logic inference are given in Section 5.
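To make the division of labour between the two agent types concrete, here is a minimal sketch of how their data might be gathered before fuzzy inference. The class names (`UserAgent`, `PeerRatings`), the event labels, the rating scale, and the use of a simple mean to compile ratings are illustrative assumptions, not details taken from the system itself.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class UserAgent:
    """Software agent assigned to one student; records that student's events."""
    student_id: str
    events: List[str] = field(default_factory=list)

    def observe(self, event: str) -> None:
        """Record one monitored action (e.g. a click or a chat message)."""
        self.events.append(event)

    def summary(self) -> Dict[str, int]:
        """Count how often each kind of event was observed."""
        return dict(Counter(self.events))


@dataclass
class PeerRatings:
    """Scores supplied by natural agents (students) about their peers."""
    scores: Dict[str, List[int]] = field(
        default_factory=lambda: defaultdict(list))

    def rate(self, rater: str, ratee: str, score: int) -> None:
        """Store one peer rating; self-ratings are rejected (an assumption)."""
        if rater == ratee:
            raise ValueError("students rate their peers, not themselves")
        self.scores[ratee].append(score)

    def compiled(self, ratee: str) -> float:
        """Compile all ratings for one student into a single mean score."""
        return mean(self.scores[ratee])


def collect_inputs(agent: UserAgent, ratings: PeerRatings) -> Dict[str, float]:
    """Merge both sources into the crisp inputs passed on to fuzzy inference."""
    inputs = {name: float(count) for name, count in agent.summary().items()}
    inputs["peer_rating"] = ratings.compiled(agent.student_id)
    return inputs
```

A usage example under the same assumptions: after `ua = UserAgent("s1")` observes two clicks and one chat message, and two peers rate `"s1"` through `PeerRatings.rate`, `collect_inputs(ua, ratings)` returns a single dictionary holding both the monitored counts and the compiled peer rating.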

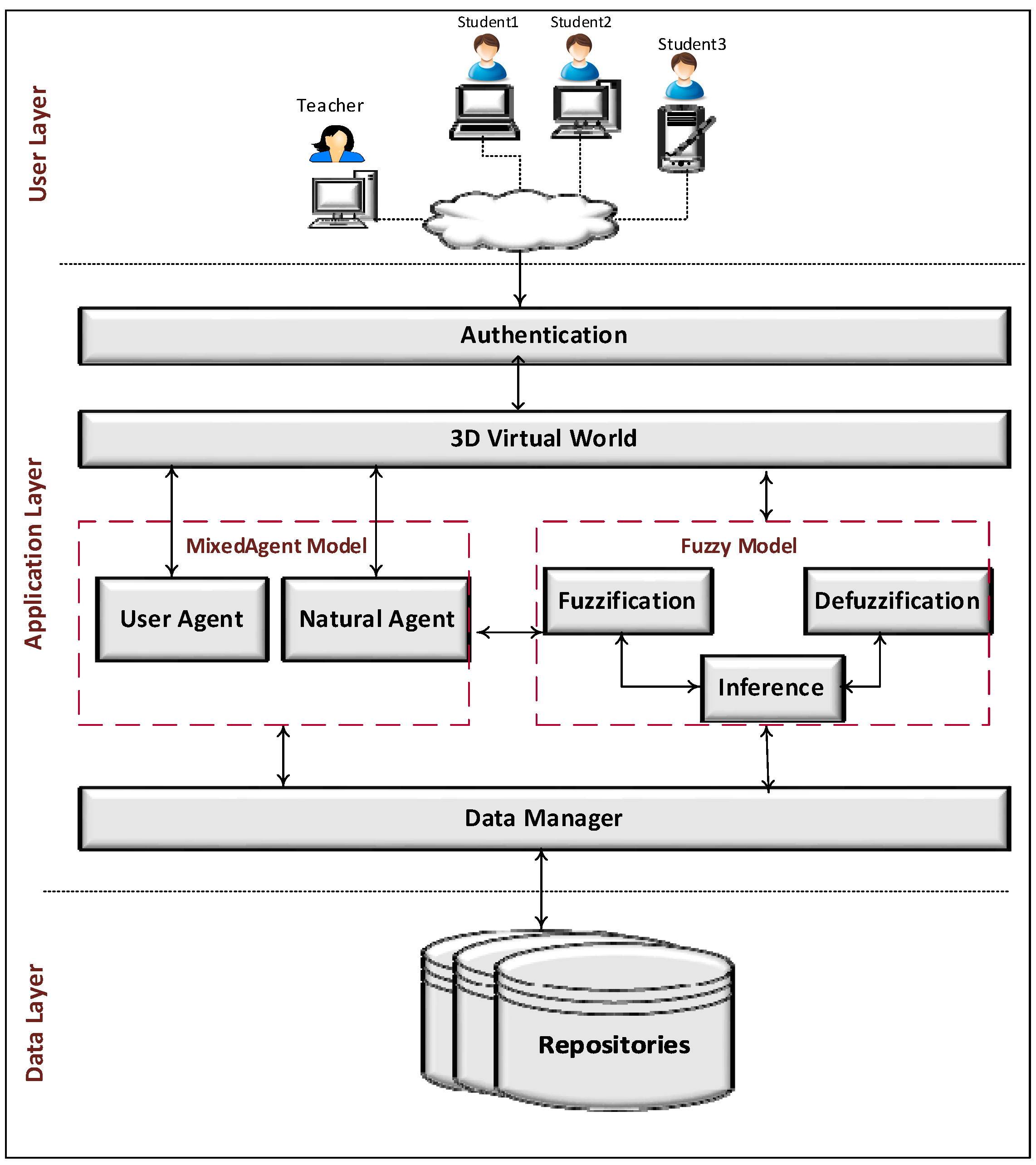

4. System Architecture

- Authentication: Identifies the learners and teachers and assigns the roles that they perform.

- Virtual 3D Environment: Provides the environment interface to enable users to fulfil their stated roles. This involves students collaborating on educational pursuits and monitoring each other’s progress. Meanwhile, teachers use the interface to construct activities and give each student details of their tasks. Once the activities have been completed, learners’ evaluation appears in the graphical user interface. Section 6 defines the learning environment used in this research.

- MixAgent Model: As previously described, this model uses software agents to monitor the activities of users in real time. Students (natural agents) are not only learning but also monitoring the performance of their peers. The data yielded by natural agents is also used for the purposes of evaluation. All of the data produced is transferred to the data manager, from where it can be retrieved whenever needed.

- Fuzzy Model: This model contains the different processes that the data are put through to obtain the final evaluation output. These stages are fuzzification, inferencing, and defuzzification.

- Data Manager: It controls the flow of information to and from the data repositories. Data is received from the agents and then transferred to the repositories. It also sends data to and receives data from the fuzzy logic model.

- Data Layer: This layer includes the database created to save the events and the performed actions in real time.
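The data flow between the agents, the data manager, the data layer, and the fuzzy model can be sketched as follows. This is only an illustration of the routing described above: the `Repository` class is an in-memory stand-in for the database, and the method names, the row layout, and the averaging function used in place of the fuzzy model are all hypothetical.

```python
from typing import Callable, Dict, List


class Repository:
    """In-memory stand-in for the data layer (a real system would use a database)."""

    def __init__(self) -> None:
        self._rows: List[dict] = []

    def save(self, row: dict) -> None:
        self._rows.append(row)

    def load(self, student: str) -> List[dict]:
        return [r for r in self._rows if r["student"] == student]


class DataManager:
    """Routes data between the agents, the repository, and the fuzzy model."""

    def __init__(self, repo: Repository,
                 fuzzy_model: Callable[[Dict[str, float]], float]) -> None:
        self.repo = repo
        self.fuzzy_model = fuzzy_model

    def receive(self, student: str, source: str, name: str, value: float) -> None:
        """Store one data item sent by a software or natural agent."""
        self.repo.save({"student": student, "source": source,
                        "name": name, "value": value})

    def evaluate(self, student: str) -> float:
        """Send the stored inputs to the fuzzy model and store its result."""
        inputs = {r["name"]: r["value"] for r in self.repo.load(student)
                  if r["source"] != "fuzzy"}  # skip earlier evaluation results
        result = self.fuzzy_model(inputs)
        self.repo.save({"student": student, "source": "fuzzy",
                        "name": "assessment", "value": result})
        return result


def average_model(inputs: Dict[str, float]) -> float:
    """Placeholder for the fuzzy model: a plain average of the inputs."""
    return sum(inputs.values()) / len(inputs)
```

Passing the fuzzy model in as a callable keeps the data manager independent of how the evaluation is computed, which matches its role as a pure router in the architecture.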

5. The Fuzzy Model

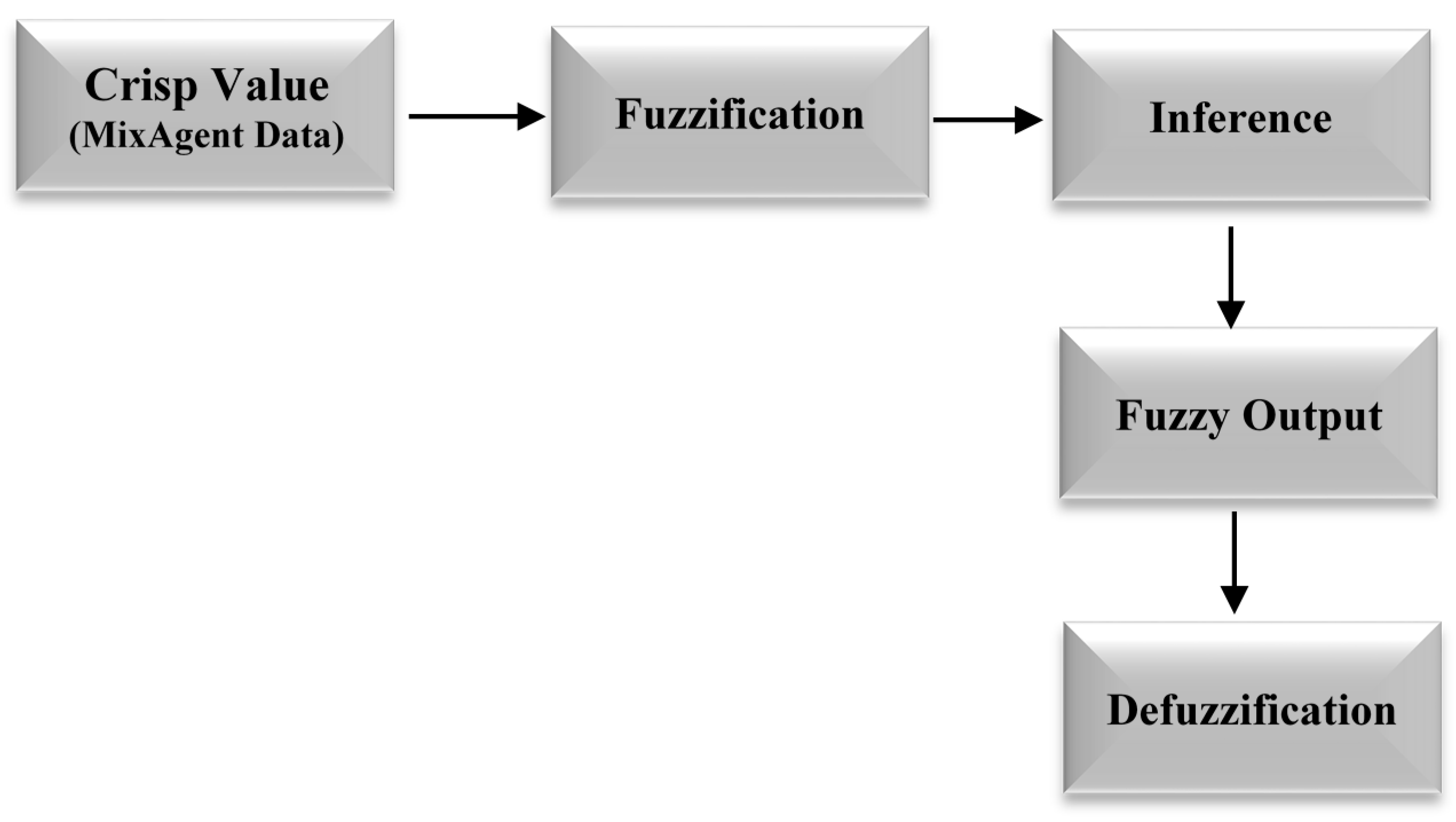

- Crisp Values: Crisp values represent the students’ data obtained by the system from both the software and natural agents.

- Fuzzification: This is the process of changing the crisp values (students’ actions and ratings) to fuzzy input values with an appropriate membership function. We have used the triangular membership function [18] to convert the data collected from users to a fuzzy set. A triangular membership function is defined by three parameters (x, y, z), and each student data item is mapped through such a function. For instance, the system gathers each user’s clicks, communications, and completed tasks through the software agent, gathers the ratings from other sources (such as the natural agents), and translates the data to fuzzy sets. The fuzzy sets are then sent to the inference engine as inputs.

- Inference: This is the development of different linguistic rules for student evaluation. We have generated linguistic IF-THEN rules to define the inputs and outputs that are used in the inference process.

- Fuzzy Output: This determines a membership function value as an output for each active rule (“IF-THEN” rule).

- Defuzzification (students’ assessment): Once the inference decision is complete, the fuzzy output must be transformed into a crisp value, which is the final students’ assessment; this process is called defuzzification. Several defuzzification techniques have been developed; the centre of area (centroid) method is used in this research because it is one of the most common techniques [35].
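The steps above (triangular fuzzification, IF-THEN inference, and centroid defuzzification) can be sketched in a few lines. The text only states that a triangular function takes three parameters (x, y, z); reading them as left foot, peak, and right foot is an assumption here, as are the 0 to 100 scale, the three linguistic terms, the two inputs (`tasks` and `rating`), the rule table, and the use of min for AND and max for aggregation.

```python
from typing import Dict, Tuple

# Assumed linguistic terms on a 0..100 scale, each given as (x, y, z).
# The outer feet lie outside the scale so that 0 and 100 have full membership.
TERMS: Dict[str, Tuple[float, float, float]] = {
    "low": (-50.0, 0.0, 50.0),
    "medium": (0.0, 50.0, 100.0),
    "high": (50.0, 100.0, 150.0),
}

# Assumed rule base: IF tasks is A AND rating is B THEN assessment is C.
RULES: Dict[Tuple[str, str], str] = {
    ("low", "low"): "low", ("low", "medium"): "low", ("low", "high"): "medium",
    ("medium", "low"): "low", ("medium", "medium"): "medium",
    ("medium", "high"): "high", ("high", "low"): "medium",
    ("high", "medium"): "high", ("high", "high"): "high",
}


def triangular(v: float, x: float, y: float, z: float) -> float:
    """Membership of v in a triangle with feet x and z and peak y (x < y < z)."""
    if v <= x or v >= z:
        return 0.0
    if v <= y:
        return (v - x) / (y - x)
    return (z - v) / (z - y)


def fuzzify(v: float) -> Dict[str, float]:
    """Convert one crisp value into a membership degree for every term."""
    return {term: triangular(v, *params) for term, params in TERMS.items()}


def infer(tasks: float, rating: float) -> Dict[str, float]:
    """Fire every rule (AND = min) and keep the strongest firing per output term."""
    t, r = fuzzify(tasks), fuzzify(rating)
    strengths = {term: 0.0 for term in TERMS}
    for (a, b), out in RULES.items():
        strengths[out] = max(strengths[out], min(t[a], r[b]))
    return strengths


def centroid(strengths: Dict[str, float], steps: int = 101) -> float:
    """Centre of area of the clipped, max-aggregated output sets (sampled)."""
    num = den = 0.0
    for i in range(steps):
        v = 100.0 * i / (steps - 1)
        mu = max(min(s, triangular(v, *TERMS[term]))
                 for term, s in strengths.items())
        num += v * mu
        den += mu
    return num / den if den else 0.0


def assess(tasks: float, rating: float) -> float:
    """Crisp inputs in, crisp assessment out."""
    return centroid(infer(tasks, rating))
```

Under these assumptions, a student with mid-range values on both inputs fires only the medium rule, so the centroid falls at the middle of the scale, while higher inputs shift weight towards the high output set and raise the assessment.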

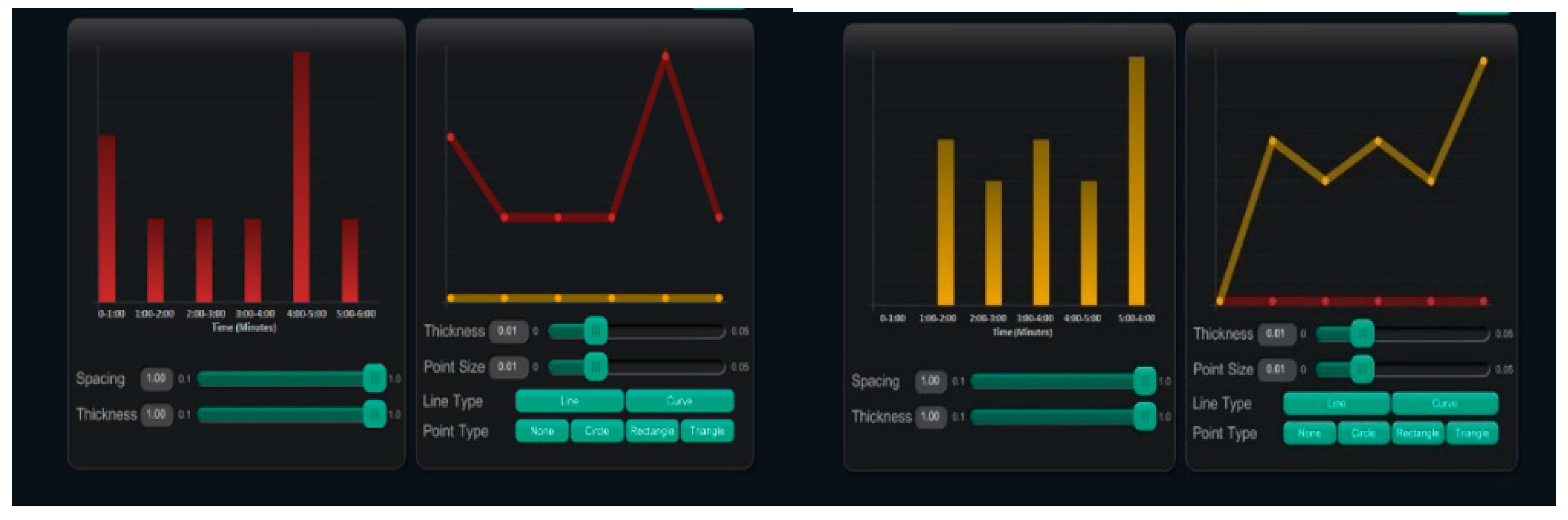

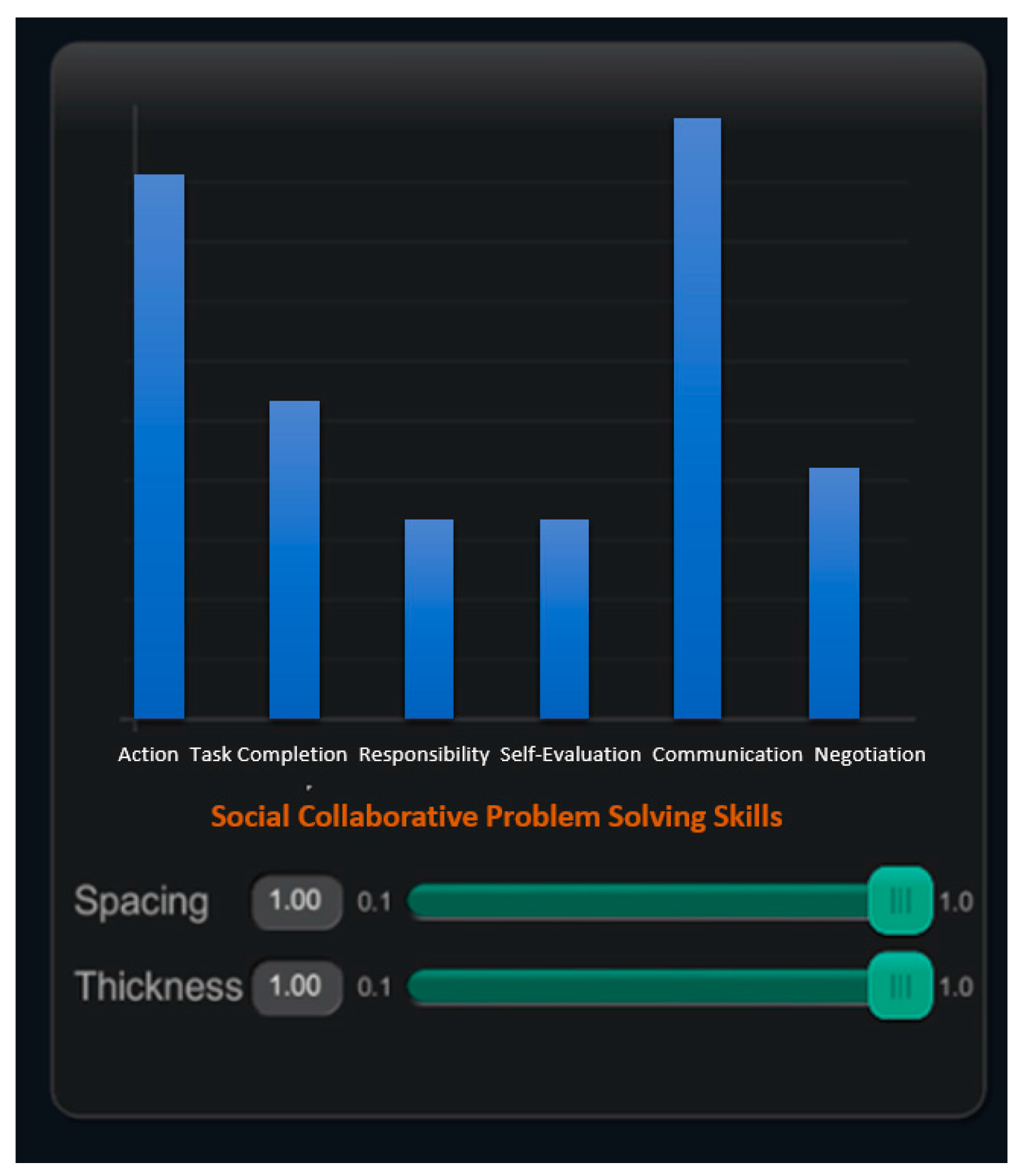

6. The Learning Environment

7. The Learning Scenario

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Andreas, K.; Tsiatsos, T.; Terzidou, T.; Pomportsis, A. Fostering collaborative learning in Second Life: Metaphors and affordances. Comput. Educ. 2010, 55, 603–615.

- Belazoui, A.; Kazar, O.; Bourekkache, S. A Cooperative Multi-Agent System for modeling of authoring system in e-learning. J. E-Learn. Knowl. Soc. 2016, 12.

- Felemban, S. Distributed pedagogical virtual machine (d-pvm). In Proceedings of the Immersive Learning Research Network Conference (iLRN 2015), Prague, Czech Republic, 13–14 July 2015; p. 58.

- Schouten, A.P.; van den Hooff, B.; Feldberg, F. Real Decisions in Virtual Worlds: Team Collaboration and Decision Making in 3D Virtual Worlds. In Proceedings of the International Conference on Information Systems (ICIS 2010), Saint Louis, MO, USA, 12–15 December 2010.

- Dalgarno, B.; Lee, M.J. What are the learning affordances of 3-D virtual environments? Br. J. Educ. Technol. 2010, 41, 10–32.

- Duncan, I.; Miller, A.; Jiang, S. A taxonomy of virtual worlds usage in education. Br. J. Educ. Technol. 2012, 43, 949–964.

- Zheng, L.; Huang, R. The effects of sentiments and co-regulation on group performance in computer supported collaborative learning. Internet High. Educ. 2016, 28, 59–67.

- De Meo, P.; Messina, F.; Rosaci, D.; Sarné, G.M. Combining trust and skills evaluation to form e-Learning classes in online social networks. Inf. Sci. 2017, 405, 107–122.

- Gardner, M.; Elliott, J. The Immersive Education Laboratory: Understanding affordances, structuring experiences, and creating constructivist, collaborative processes, in mixed-reality smart environments. EAI Endorsed Trans. Future Intell. Educ. Environ. 2014, 14, e6.

- Weiss, G. Multiagent Systems: A Modern Approach to Distributed Artificial Intelligence; MIT Press: Cambridge, MA, USA; London, UK, 1999.

- Wooldridge, M.; Jennings, N.R. Intelligent agents: Theory and practice. Knowl. Eng. Rev. 1995, 10, 115–152.

- Rosaci, D. CILIOS: Connectionist inductive learning and inter-ontology similarities for recommending information agents. Inf. Syst. 2007, 32, 793–825.

- Rosaci, D.; Sarné, G.M. EVA: An evolutionary approach to mutual monitoring of learning information agents. Appl. Artif. Intell. 2011, 25, 341–361.

- Sánchez, J.A. A Taxonomy of Agents. Rapport Technique; ICT-Universidad de las Américas-Puebla: Puebla, México, 1997.

- Rosaci, D.; Sarné, G.M. Efficient Personalization of E-Learning Activities Using a Multi-Device Decentralized Recommender System. Comput. Intell. 2010, 26, 121–141.

- Nwana, H.S. Software agents: An overview. Knowl. Eng. Rev. 1996, 11, 205–244.

- Durfee, E.H.; Lesser, V.R. Negotiating task decomposition and allocation using partial global planning. Distrib. Artif. Intell. 1989, 2, 229–244.

- Yadav, R.S.; Singh, V.P. Modeling academic performance evaluation using soft computing techniques: A fuzzy logic approach. Int. J. Comput. Sci. Eng. 2011, 3, 676–686.

- Albertos, P.; Sala, A. Fuzzy Expert Control Systems: Knowledge Base Validation; UNESCO Encyclopedia of Life Support Systems: Paris, France, 2002.

- Thompson, K.; Markauskaite, L. Identifying Group Processes and Affect in Learners: A Holistic Approach to. In Cases on the Assessment of Scenario and Game-Based Virtual Worlds in Higher Education; IGI Global: Hershey, PA, USA, 2014; p. 175.

- Alrashidi, M.; Almohammadi, K.; Gardner, M.; Callaghan, V. Making the Invisible Visible: Real-Time Feedback for Embedded Computing Learning Activity Using Pedagogical Virtual Machine with Augmented Reality. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Ugento, Italy, 12–15 June 2017; Springer: Cham, Switzerland.

- Gobert, J.D.; Sao Pedro, M.A.; Baker, R.S.; Toto, E.; Montalvo, O. Leveraging educational data mining for real-time performance assessment of scientific inquiry skills within microworlds. JEDM J. Educ. Data Min. 2012, 4, 111–143.

- Schunn, C.D.; Anderson, J.R. The generality/specificity of expertise in scientific reasoning. Cogn. Sci. 1999, 23, 337–370.

- Annetta, L.A.; Folta, E.; Klesath, M. Assessing and Evaluating Virtual World Effectiveness. In V-Learning; Springer: Dordrecht, The Netherlands, 2010; pp. 125–151.

- Kerr, D.; Chung, G.K. Identifying key features of student performance in educational video games and simulations through cluster analysis. JEDM J. Educ. Data Min. 2012, 4, 144–182.

- Mislevy, R.J.; Almond, R.G.; Lukas, J.F. A brief introduction to evidence-centered design. ETS Res. Rep. Ser. 2003, 2003, i–29.

- Tesfazgi, S.H. Survey on Behavioral Observation Methods in Virtual Environments; Research Assignment; Delft University of Technology: Delft, The Netherlands, 2003.

- Csapó, B.; Ainley, J.; Bennett, R.; Latour, T.; Law, N. Technological Issues for Computer-Based Assessment. In Assessment and Teaching of 21st Century Skills; Griffin, P., McGaw, B., Care, E., Eds.; Springer: Dordrecht, The Netherlands, 2012; pp. 143–230.

- Ibáñez, M.B.; Crespo, R.M.; Kloos, C.D. Assessment of knowledge and competencies in 3D virtual worlds: A proposal. In Key Competencies in the Knowledge Society; Springer: Berlin/Heidelberg, Germany, 2010; pp. 165–176.

- Shute, V.J. Stealth assessment in computer-based games to support learning. Comput. Games Instr. 2011, 55, 503–524.

- Felemban, S.; Gardner, M.; Callaghan, V. Virtual Observation Lenses for Assessing Online Collaborative Learning Environments. In Proceedings of the Immersive Learning Research Network (iLRN 2016), Santa Barbara, CA, USA, 27 June–1 July 2016; Verlag der Technischen Universität Graz: Santa Barbara, CA, USA, 2016; pp. 80–92.

- Felemban, S.; Gardner, M.; Callaghan, V.; Pena-Rios, A. Towards Observing and Assessing Collaborative Learning Activities in Immersive Environments. In Proceedings of the Immersive Learning Research Network: Third International Conference (iLRN 2017), Coimbra, Portugal, 26–29 June 2017; Beck, D., Allison, C., Morgado, L., Pirker, J., Khosmood, F., Richter, J., Gütl, C., Eds.; Springer: Cham, Switzerland, 2017; pp. 47–59.

- Felemban, S.; Gardner, M.; Callaghan, V. An event detection approach for identifying learning evidence in collaborative virtual environments. In Proceedings of the Computer Science and Electronic Engineering (CEEC), Colchester, UK, 28–30 September 2016.

- Excellence, E.C.F.T. How Can I Assess Group Work? Available online: https://www.cmu.edu/teaching/designteach/design/instructionalstrategies/groupprojects/assess.html (accessed on 31 May 2017).

- Padhy, N. Artificial Intelligence and Intelligent Systems; Oxford University Press: Oxford, UK, 2005.

- Pena-Rios, A. Exploring Mixed Reality in Distributed Collaborative Learning Environments. In School of Computer Science and Electronic Engineering; University of Essex: Colchester, UK, 2016.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Felemban, S.; Gardner, M.; Callaghan, V. Towards Recognising Learning Evidence in Collaborative Virtual Environments: A Mixed Agents Approach. Computers 2017, 6, 22. https://doi.org/10.3390/computers6030022
