Identification of Facade Elements of Traditional Areas in Seoul, South Korea

Abstract

1. Introduction

2. Literature Review

2.1. Street and Place

2.2. Traditional Architecture in the Modern Period of Seoul: Urban Hanok in Bukchon

2.3. Image Processing for Building Facade

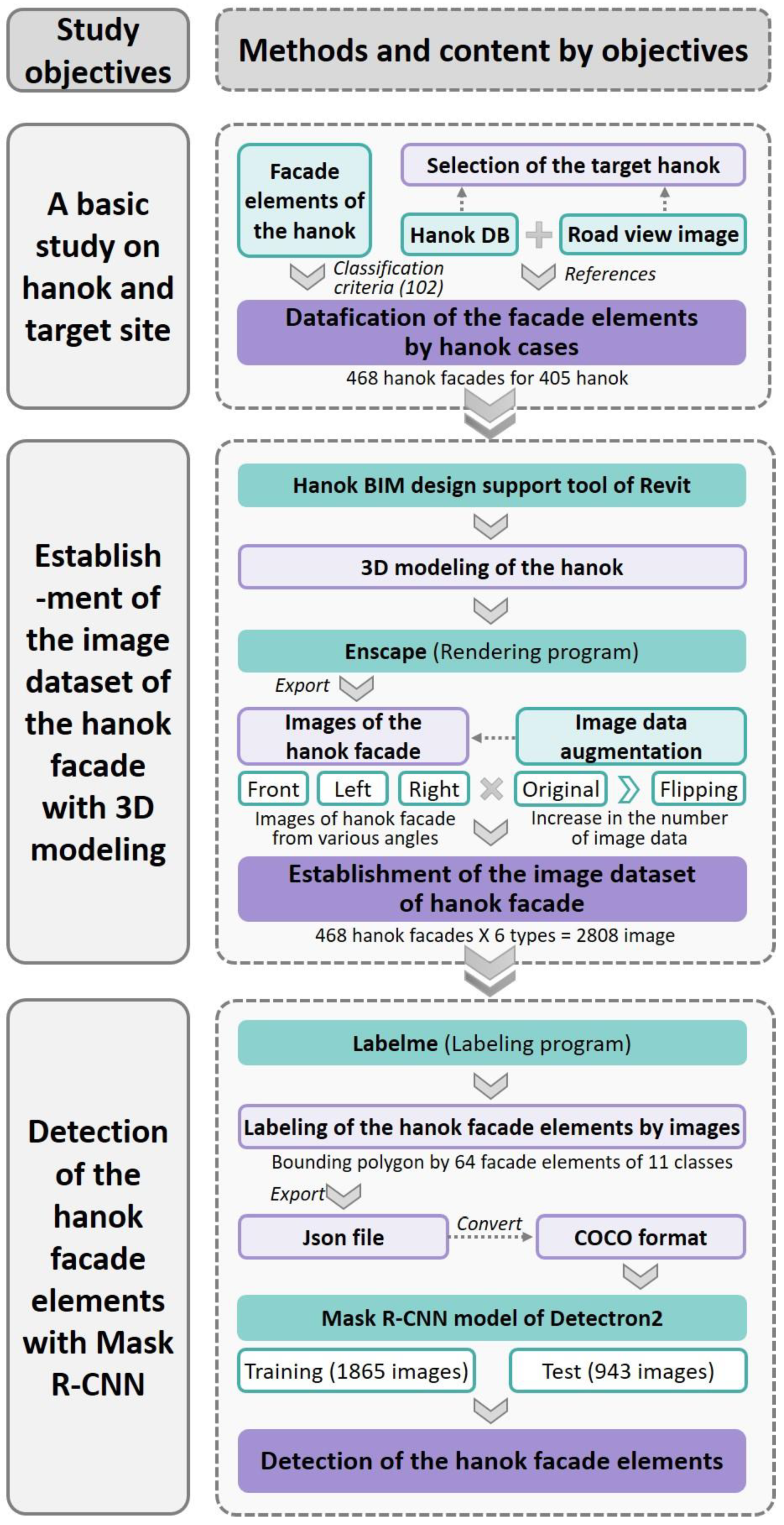

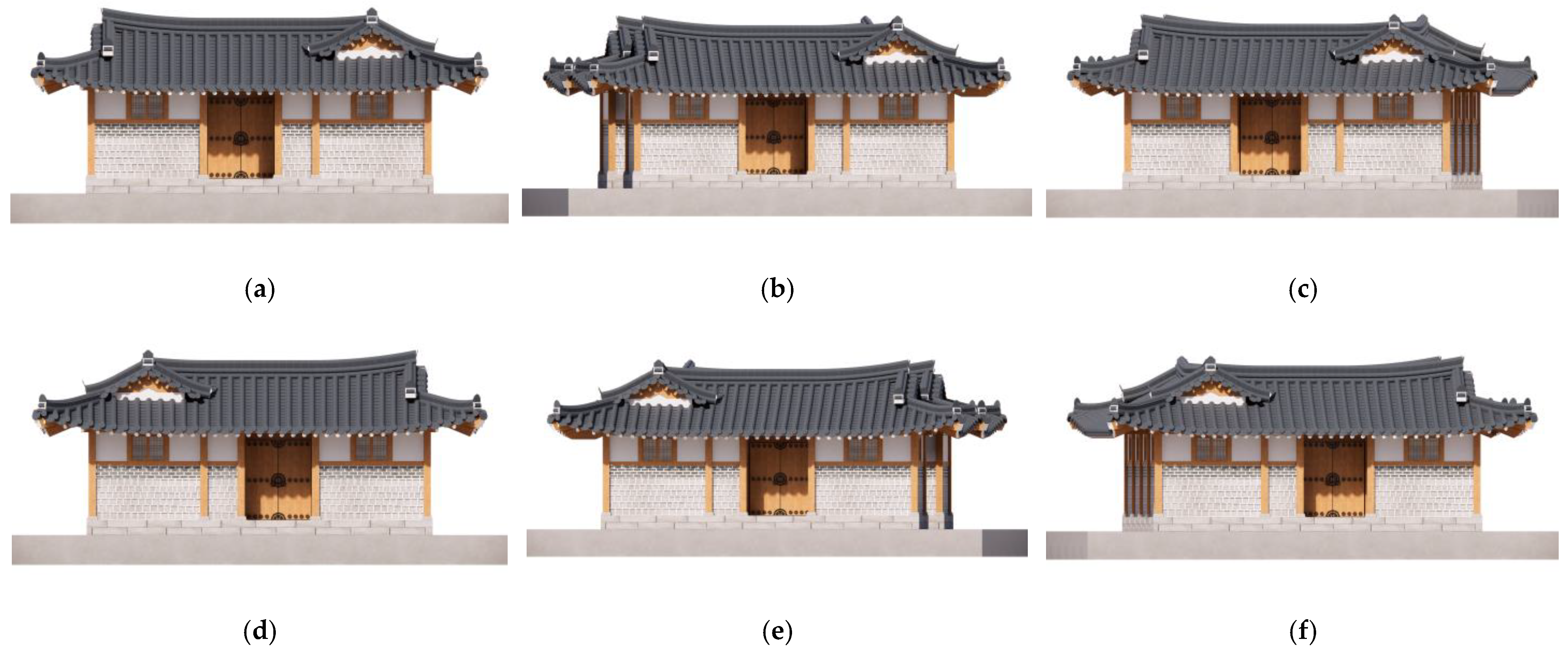

3. Building an Image Dataset of Hanok Facade Using 3D Modeling

3.1. Selection of Target Site

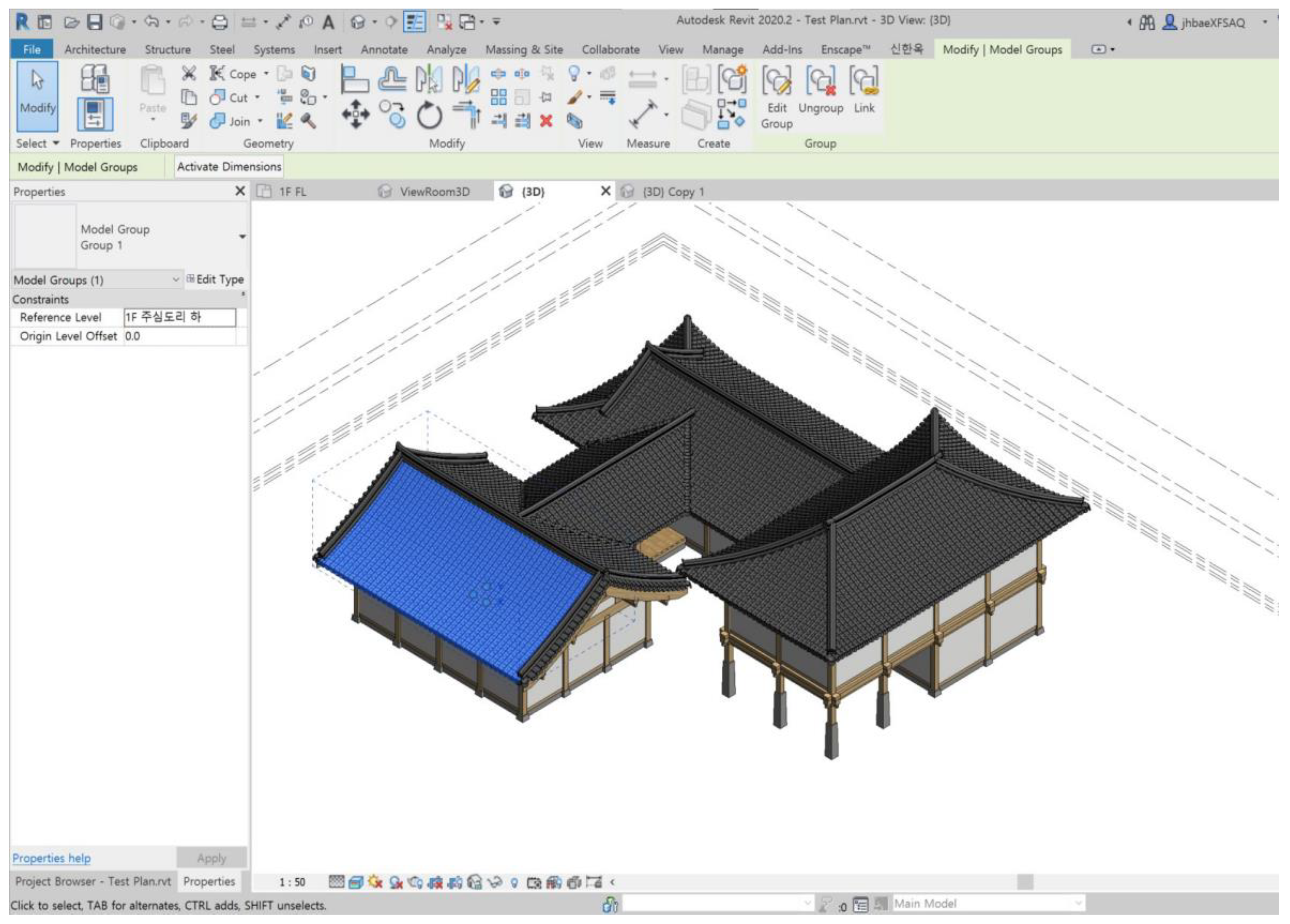

3.2. 3D Modeling of Hanoks Using Revit BIM

3.2.1. Classification of the Components of Building Exterior and Form Elements of Hanok

3.2.2. 3D Modeling of Hanok Using an Automatic Design Program

3.3. Building an Image Dataset of Hanok Facade

4. Experiment and Results

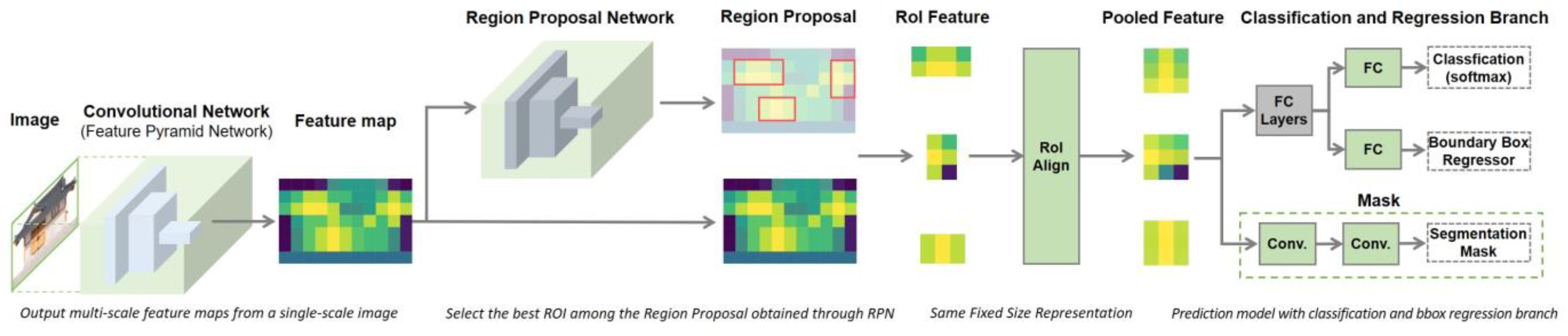

4.1. Mask R-CNN for Hanok Exterior Element Detection

4.2. Labeling for Segmentation

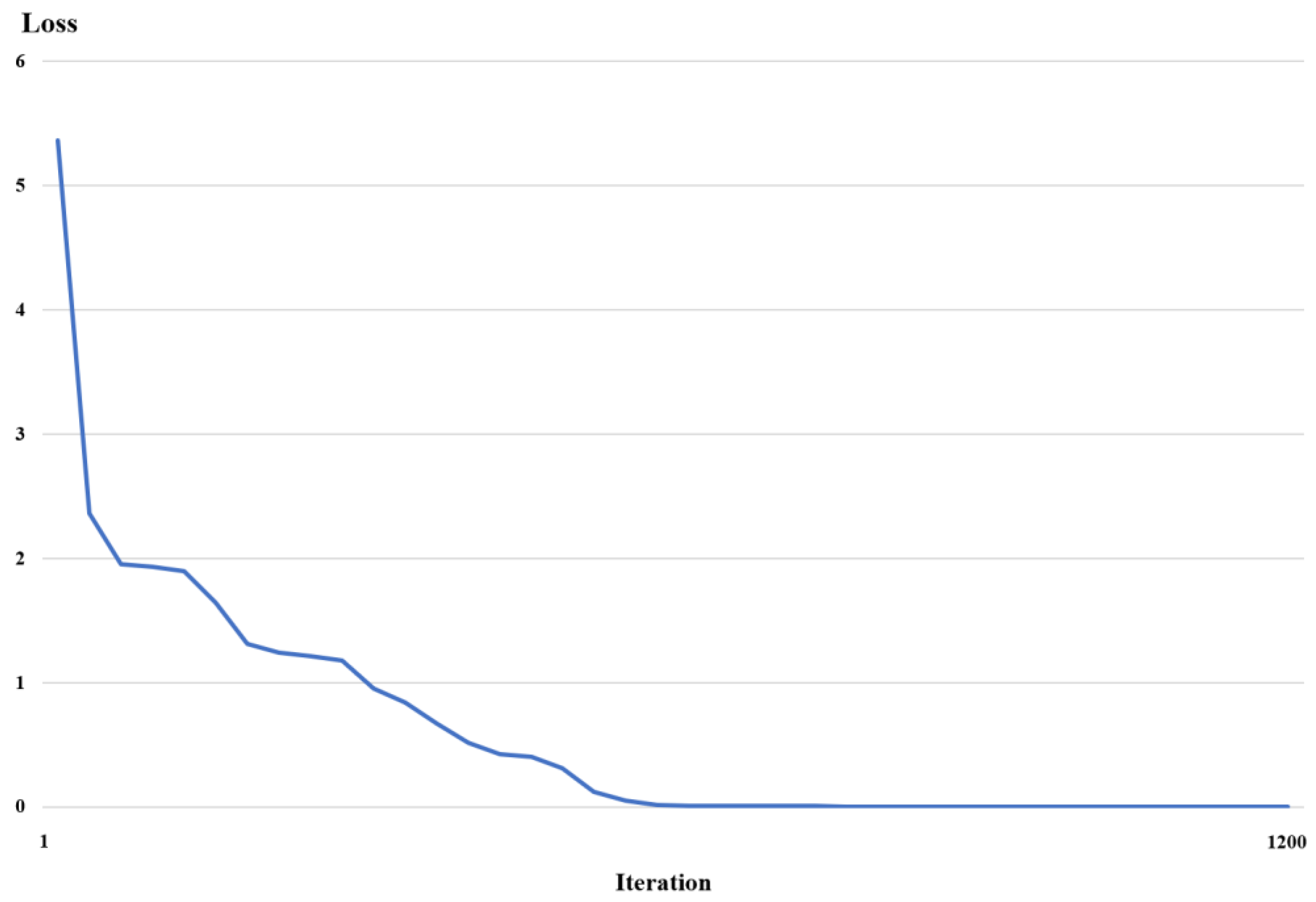

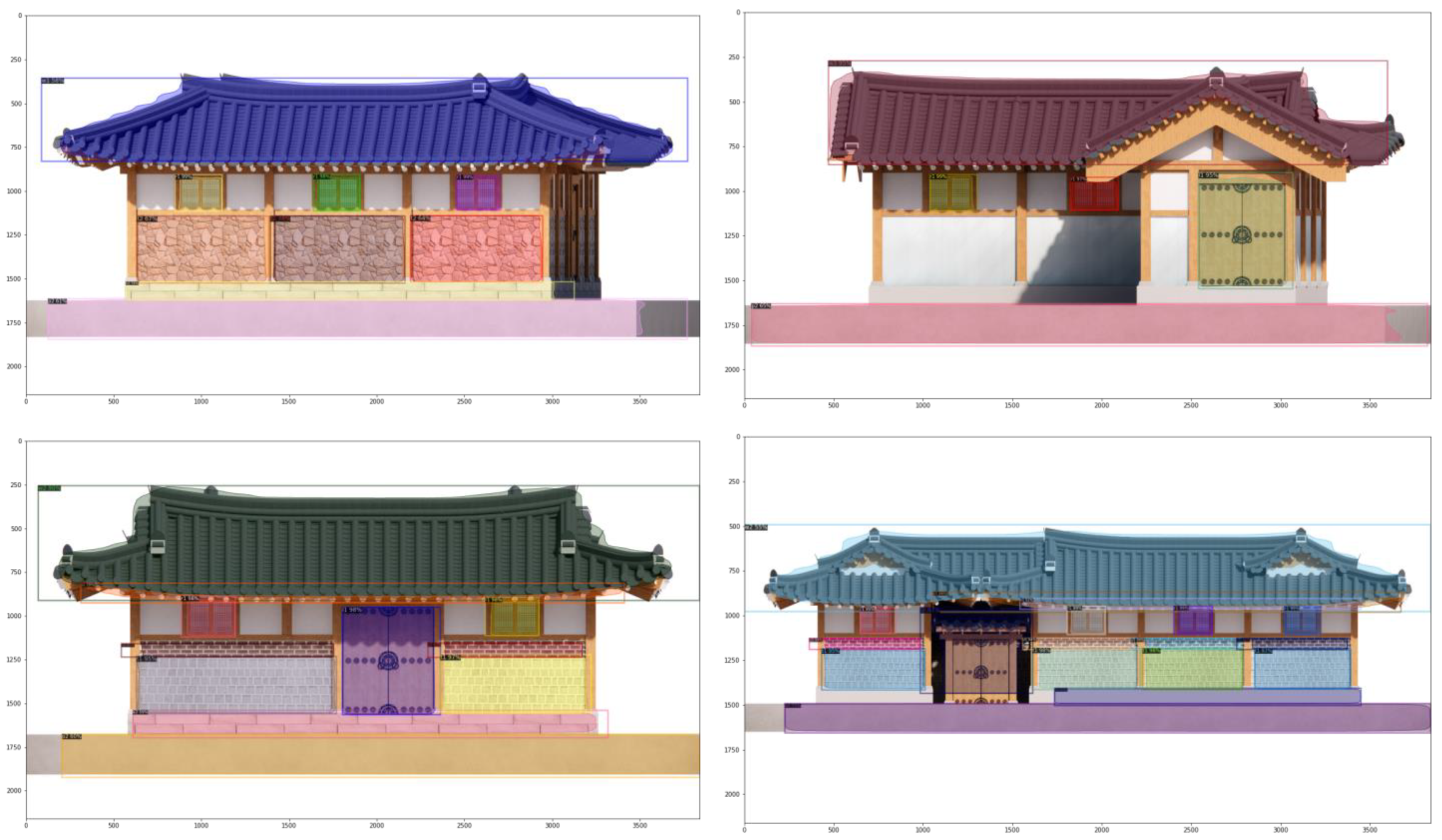

4.3. Results of Segmentation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Components of Building Exterior (7) | Facade Form Elements (21) | Detailed Form Elements (102) |

|---|---|---|

| Placement | Main house types (A) * | ㄱ-shaped plan (a1), ㄷ-shaped plan (a2), ㅁ-shaped plan (a3), ㅡ-shaped plan (a4) |

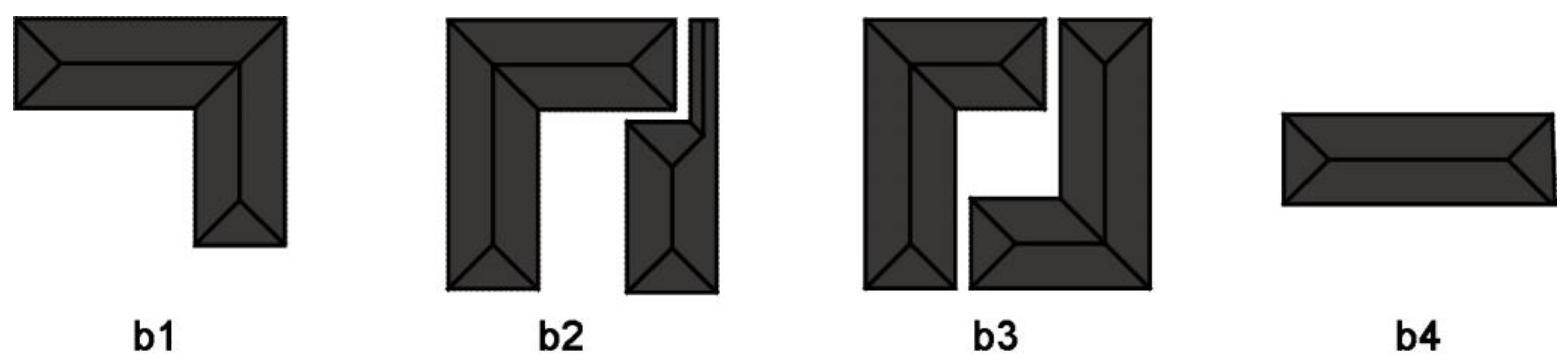

| Layout types (B) * | ㄱ-shaped layout (ㄱ-shaped main house, b1), ㄷ-shaped layout (ㄱ-shaped and ㅡ-shaped gate building, b2), ㅁ-shaped layout (ㄱ-shaped main house and ㄱ-shaped gate building, b3), ㅡ-shaped layout (ㅡ-shaped main house, b4) | |

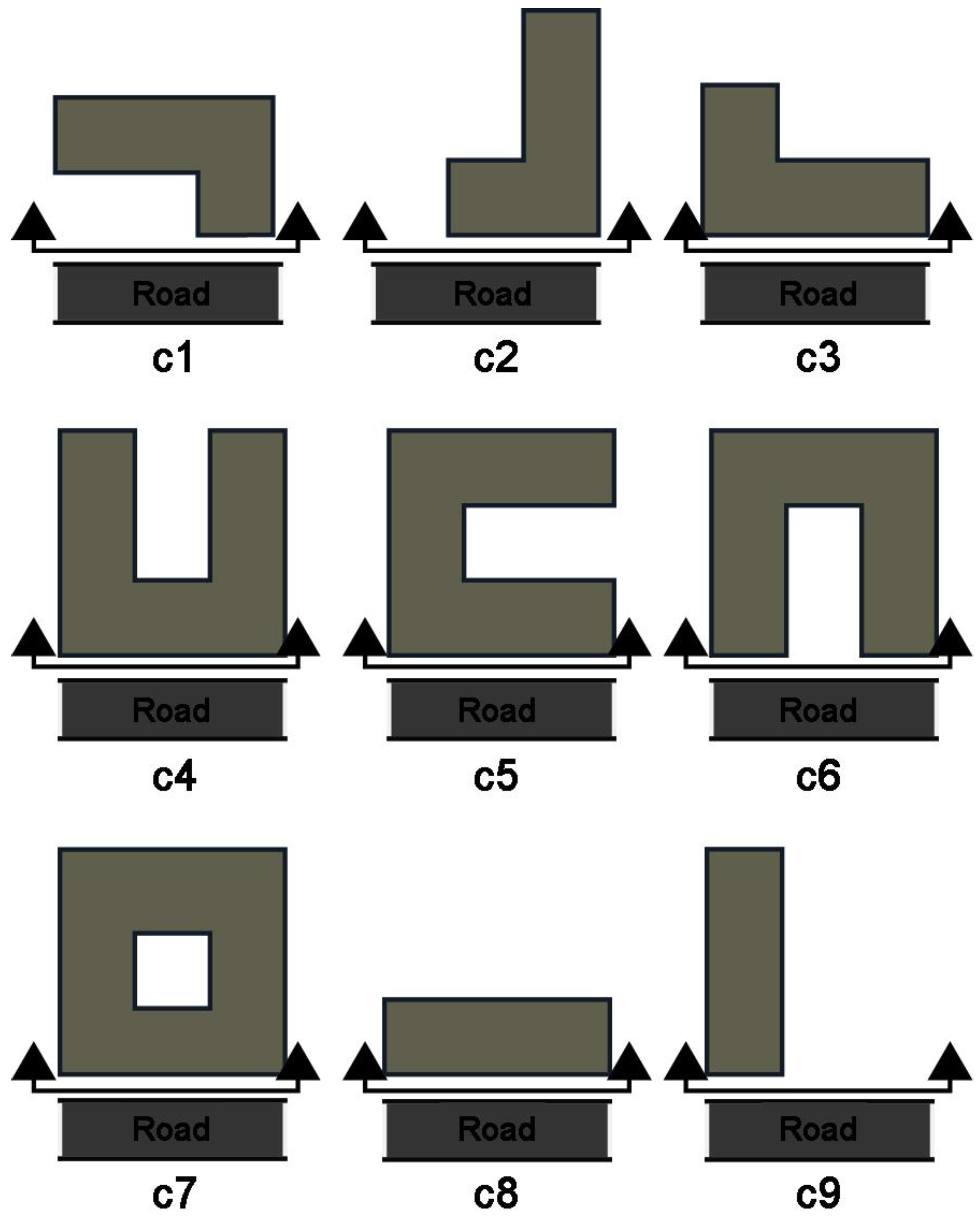

| Orientation of the road (C) * | Under the ㄱ-shaped plan (c1), right of the ㄱ-shaped plan (c2), top of the ㄱ-shaped plan (c3), left of the ㄷ-shaped plan (c4), under the ㄷ-shaped plan (c5), right of the ㄷ-shaped plan (c6), side of the ㅁ-shaped plan (c7), long side of ㅡ-shaped plan (c8), short side of ㅡ-shaped plan (c9) | |

| Roof | Roof types (W) | Hipped roof (w1), hipped-and-gabled roof (w2), gabled roof (w3), gabled roof and hipped-and-gabled roof (w4), hipped-and-gabled roof and hipped roof (w5), gabled roof and hipped roof (w6) |

| Rafter color (X) | Color (x1), colorlessness (x2) | |

| Eave types (Y) | Single eave (y1), double eave (y2) | |

| Main gate | Main gate types (L) | Pyeong daemun gate (flat gate, l1), ilgak daemun gate (two-pillar gate, l2), iron gate (l3), no gate in facade (l5) |

| Location of the main gate on the plan (D) * | No gate in facade (d1), same as the wall line (d2), set back from the wall line (d3) | |

| Location of the main gate in facade (M) * | End of the building (m1), center of the building (m2), outside the building (m3) | |

| Outer wall | Facade width (F) * | ~6 m (f1), 7~9 m (f2), 10~12 m (f3), 13~15 m (f4), 16~18 m (f5), 19~21 m (f6), 22~24 m (f7) |

| Wall configuration (S) * | Flat wall (s1), pillar wall (s2) | |

| Lower part of the wall (T) | Sagoseog (18–20 cm cubic granite, t1), natural stone (t2), layers with roof tile (t3), plastered wall (t4), gray brick (t5), red brick (t6), cement (t7), tile (t8), glass (t9) | |

| Middle part of the wall (U) | Sagoseog (18–20 cm cubic granite, u1), natural stone (u2), layers with roof tile (u3), plastered wall (u4), gray brick (u5), red brick (u6), cement (u7), tile (u8), glass (u9) | |

| Exterior wall decoration (V) | Grid rounding (v1), floral pattern (v2), design pattern (v3), not applicable (v6) | |

| Window types (R) | Grid (r1), jeongja (r3), ahja (r4), bitsal (r5), wanja (r6), general window (r7), yongja (r8), no window (r9) | |

| Stylobate | Stylobate types (K) | Natural stone stylobate (k1), rectangular stone stylobate (k2), cement stylobate (k3), brick stylobate (k4) |

| Fence wall | Fence types (I) | Sagoseog and brick and roof tile (i1), natural stone and roof tile (i2), sagoseog and layers with roof tile and roof tile (i3), sagoseog and roof tile (i4), layers with roof tile and plastered wall and roof tile (i5), sagoseog and floral pattern and roof tile (i6), brick/tile and roof tile (i7), cement and roof tile (i8), no fence (i9) |

| Fence forms (J) | Entire fence at a different level than the road (j1), partial fence at a different level than the road (j2), entire fence at the same level as the road (j3), partial fence at the same level as the road (j4) | |

| Site | Level difference between ground and road (N) * | −1~0 m (n1), 0~0.5 m (n2), 0.5~1 m (n3), 1~2 m (n4), 2~3 m (n5) |

| Level difference of ground (Q) * | Difference between ground level and road (q1), no difference between ground level and road (q2) | |

| Slope of ground (P) | Slope (p1), no slope (p2) |

| Key Values | Description | Item Values (Example) | Data Format |
|---|---|---|---|
| version | JSON Format Version | “4.6.0” | STRING |
| flags | Null | {…} | STRING |
| shapes | Shape Format | […] | LIST |
| └ label | Image Class | “a1” | STRING |
| └ points | Bounding box coordinate | {...} | DICTIONARY |
| └ group_id | Null | null | STRING |
| └ shape_type | Type of Shape | “polygon” | STRING |
| └ flags | Null | null | STRING |
| ... (continued) | | | |
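
The key layout in the Labelme table above can be illustrated with a short Python sketch. The record is hypothetical: the version "4.6.0", the label "a1", and the shape type "polygon" are the example values from the table, while the point coordinates and the helper name `polygon_labels` are invented for illustration and do not come from the paper.

```python
import json

# Hypothetical Labelme-style record. Only the "version", "label", and
# "shape_type" values come from the table; the coordinates are invented.
record_text = """
{
  "version": "4.6.0",
  "flags": {},
  "shapes": [
    {
      "label": "a1",
      "points": [[10.0, 20.0], [110.0, 20.0], [110.0, 80.0], [10.0, 80.0]],
      "group_id": null,
      "shape_type": "polygon",
      "flags": null
    }
  ]
}
"""


def polygon_labels(record):
    """Return (label, vertex_count) for every polygon shape in the record."""
    return [
        (shape["label"], len(shape["points"]))
        for shape in record["shapes"]
        if shape["shape_type"] == "polygon"
    ]


record = json.loads(record_text)
print(polygon_labels(record))
```

Each entry in `shapes` carries one labeled polygon, so a facade image with several annotated elements would simply hold several such entries.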

| Key Values | Description | Item Values (Example) | Data Format |
|---|---|---|---|
| annotations | Annotations | [...] | LIST |
| └ id | Order of Images | {...} | INT |
| └ image_id | Order of Images | “0” | INT |
| └ category_id | Id of Labels | “13” | INT |
| └ segmentation | Segmentation Coordinate | […] | DICTIONARY |
| └ area | Area of Pixel | “115,672.0” | INT |
| └ bbox | Bounding box coordinate | […] | LIST |
| └ iscrowd | Single or Multi Object | “0 or 1” | INT |
| ... (continued) | | | |
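
As a rough illustration of how the annotation keys in the table above relate to a labeled polygon, the following Python sketch builds one annotation entry from a list of vertices. It assumes the common COCO conventions (flattened `segmentation` coordinates, `bbox` as `[x, y, width, height]`, area from the shoelace formula); the paper does not describe its conversion step, so the function name `polygon_to_annotation` and the sample coordinates are hypothetical. The `category_id` of 13 is the example value from the table.

```python
def polygon_to_annotation(points, annotation_id, image_id, category_id):
    """Build a COCO-style annotation dict from polygon vertices (x, y)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]

    # Shoelace formula: twice the signed area of the polygon.
    n = len(points)
    twice_area = sum(
        points[i][0] * points[(i + 1) % n][1]
        - points[(i + 1) % n][0] * points[i][1]
        for i in range(n)
    )

    return {
        "id": annotation_id,
        "image_id": image_id,
        "category_id": category_id,
        # One polygon, flattened to [x1, y1, x2, y2, ...].
        "segmentation": [[coord for point in points for coord in point]],
        "area": abs(twice_area) / 2.0,
        # Assumed COCO layout: [x_min, y_min, width, height].
        "bbox": [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)],
        # 0 marks a single object rather than a crowd region.
        "iscrowd": 0,
    }


# Invented rectangle used only to exercise the function.
points = [(10.0, 20.0), (110.0, 20.0), (110.0, 80.0), (10.0, 80.0)]
annotation = polygon_to_annotation(points, annotation_id=0, image_id=0, category_id=13)
print(annotation["bbox"], annotation["area"])
```

Keeping the polygon in `segmentation` and deriving `bbox` and `area` from it means the three fields cannot drift apart when a label is edited.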

| mAP | | |
|---|---|---|
| 62.6 | 78.75 | 69.41 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shon, D.; Byun, G.; Choi, S. Identification of Facade Elements of Traditional Areas in Seoul, South Korea. Land 2023, 12, 277. https://doi.org/10.3390/land12020277
