1. Introduction

Glaucoma is the collective name of a group of eye conditions that results in damage to the optic nerve at the back of the eye, which can cause vision loss. Glaucoma is one of the commonest causes of blindness and is estimated to affect around 80 million people worldwide by 2020 [

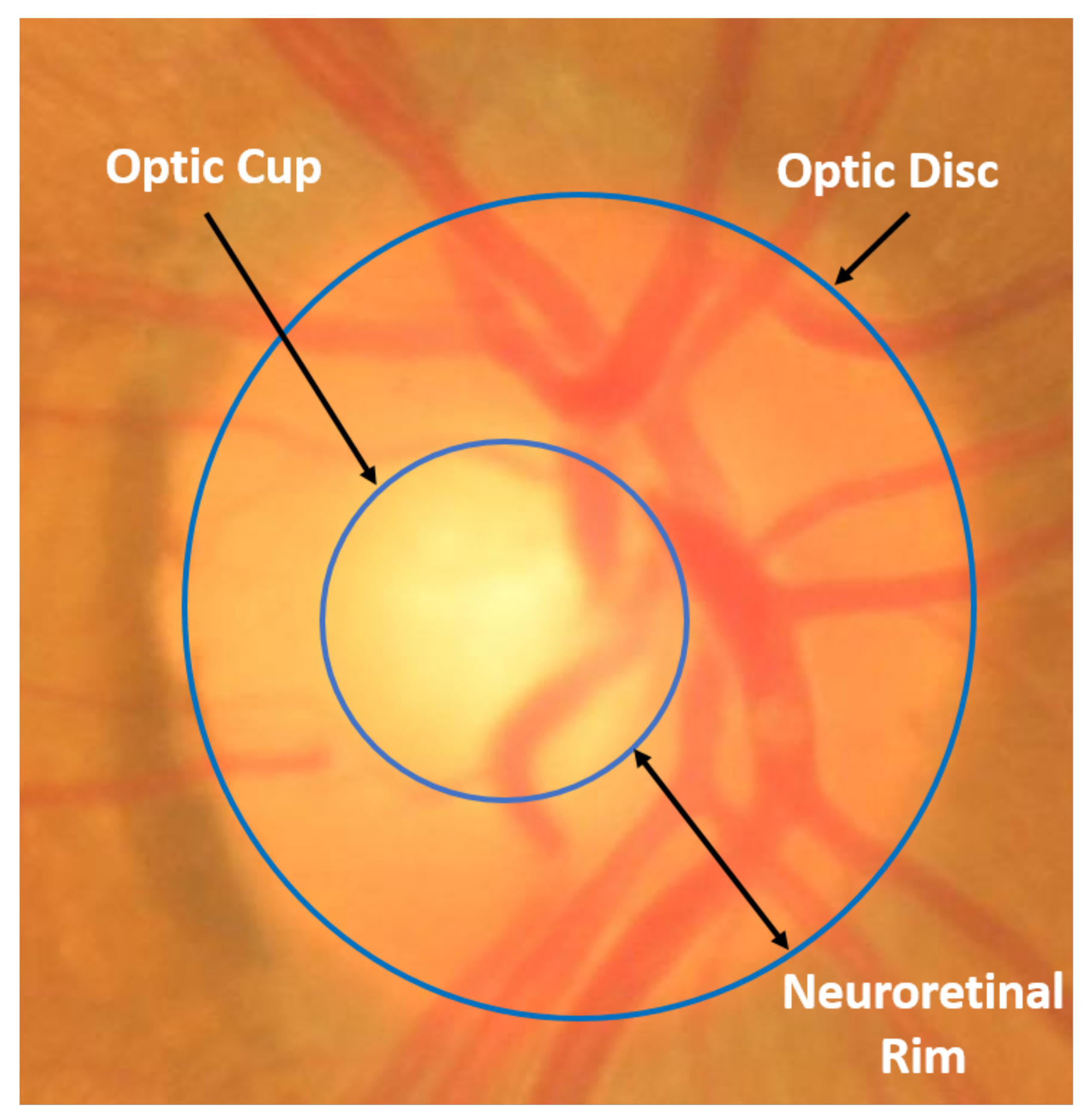

1]. Glaucoma is known as the “silent thief of vision” since, in the early phases of the disease, patients do not have any noticeable pain or symptoms of vision loss. It is only when the disease progresses to a significant loss of peripheral vision that the symptoms potentially leading to total blindness may be noticed. Early detection and timely management of glaucoma is key to helping prevent patients from suffering vision loss. There are many risk factors associated with glaucoma amongst which hypertensive intra ocular pressure (IOP) is the most accepted. It is believed that IOP can cause irreversible damage to the optic nerve head, or optic disc (OD). Since the cornea is transparent, the optic disc can be imaged by several optical imaging techniques, including colour fundus photography. In two dimensional (2D) colour fundus images, the OD can be divided into two regions as shown in

Figure 1: a peripheral zone called the neuroretinal rim and a central white region called the optic cup (OC). The ratio of the size (e.g., vertical height) of the OC to the OD, known as CDR, is often used as an indicator for the diagnosis of glaucoma [

2]. Accurate segmentation of the OD and OC is essential for useful CDR measurement. However, manual delineation of the OD and OC boundaries in fundus images by human experts is a highly subjective and time consuming process, which is impractical for use in busy clinics. On the other hand, automated segmentation approaches using computers are attractive as they can be more objective and much faster then a human grader. Many different approaches to segmenting of the OD and/or OC in fundus images have been proposed in the literature. The existing methods for automated OD and OC segmentation in fundus images can be broadly classified into three main categories: shape-based template matching [

3,

4,

5,

6,

7,

8,

9], active contours and deformable based models [

10,

11,

12,

13,

14,

15,

16,

17,

18], and more recently, machine and deep learning methods [

19,

20,

21,

22,

23,

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35]. We give a brief overview of the existing methods below.

(a) Shape-based and template matching models: These methods model the OD as a circular or elliptical object and try to fit a circle using the Hough transform [4,5,8,9], an ellipse [3,6] or a rounded curve using a sliding band filter [7]. These approaches typically feature in the earlier work on optic disc and cup segmentation. In general, shape-based modelling of the OD and OC is not sufficiently robust to intensity inhomogeneity, varying image colour, changes in disc shape caused by lesions such as exudates in abnormal images, and the presence of blood vessels inside and around the OD region.

(b) Active contours and deformable models: These methods have been widely applied to the segmentation of the OD and OC [10,11,12,13,14,15,16,17,18]. Active contour approaches are deformable models that convert the segmentation problem into an energy minimisation problem, in which different energies are derived to reflect image features such as intensity, texture and boundary smoothness. Active contour models are often formulated as non-linear, non-convex minimisation problems and thus may not reach the global minimum in the presence of noise and anomalies. To achieve good results in a short time, they require a good initialisation of the OD and OC contours, provided either manually or automatically, so their performance depends on the initialisation.

(c) Machine- and deep-learning methods: Machine learning, and in particular the more recent deep learning based methods, have shown promising results for OD and OC segmentation [19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35]. The machine learning based approaches [19,20,21,22,23,24,25,26,27] depend heavily on the type of extracted features, which might be representative of a particular dataset but not of others. Extracting the features by hand is also a tedious and time-consuming task. Deep learning approaches, represented by convolutional neural networks (CNNs), are currently an active research topic. Such networks can learn to extract highly complex features from the input data automatically [28,29,30,31,32,33,34,35]. Lim et al. [28] applied CNNs to feature-exaggerated inputs that emphasise disc pallor without obstruction by blood vessels to segment both the OD and OC. Maninis et al. [29] used a fully convolutional neural network [36] based on the VGG-16 net [37] for the optic disc segmentation task. For optic cup segmentation, Guo et al. [30] used large pixel patch based CNNs, where the segmentation was achieved by classifying each pixel patch followed by post-processing. Sevastopolsky [31] presented a modified version of the U-Net convolutional network [38] for automatic optic disc and cup segmentation. Shankaranarayana et al. [32] proposed a joint optic disc and cup segmentation scheme using fully convolutional and adversarial networks. Zilly et al. [33] presented a framework consisting of ensemble learning based CNNs together with entropy sampling for optic cup and disc segmentation. Hong Tan et al. [34] proposed a single CNN with seven layers to segment the OD by classifying every pixel in the image. Most recently, Fu et al. [35] combined a polar transformation with multi-label deep learning in an architecture named M-Net to segment the OD and OC simultaneously. In general, these recent deep learning methods performed well when trained and tested on the same dataset. They might not be robust and accurate enough for evaluating the optic disc and cup in clinical practice, where there are different types of variation such as population, camera, operators, disease, and image. Their generalisation ability should therefore be studied thoroughly.

Given the inherent and unsolved challenges that the aforementioned methods encounter in segmenting the OD and OC, we propose a new deep learning based method for this task. The proposed method combines DenseNet with a fully convolutional network (FCN). The resulting FC-DenseNet, which was originally developed for semantic segmentation [39], is adapted and used for the automatic segmentation of the OD and OC. The contributions of this paper are as follows:

We propose a new strategy of using FC-DenseNet for simultaneous semantic segmentation of the cup and disc in fundus images. This deep network, which encourages feature re-use and reduces the number of parameters needed, allows improved segmentation, particularly of the OC.

We determine the optic disc diameter (ODD) and use it to crop the images to 2 × ODD and rescale them. This automatically reduces the image to the region of interest, which reduces computation time without requiring an excessive reduction of the image resolution. In turn, this enables us to obtain more accurate segmentations of the optic disc and cup, which can be used for diagnosis.

We show that this approach achieves state-of-the-art results on a large dataset, outperforming previous methods. We also demonstrate the effectiveness of the method on other datasets without re-training the model on images from those datasets.

We carry out a comprehensive study involving five publicly available datasets. Compared with previous work, this allows evaluation on images from many different devices and conditions and from patients of different ethnicities, and demonstrates the robustness of our method.
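The cropping step in the second contribution can be illustrated with a minimal sketch. It assumes the disc is available as a binary mask (the Discussion notes that the OD centre is taken from ground truth data), takes the ODD as the larger side of the mask's bounding box, and clips the window at the image border. The function names, the bounding-box definition of the diameter and the clipping behaviour are illustrative assumptions rather than the authors' implementation, and the subsequent rescaling is omitted.

```python
def disc_bounding_box(mask):
    """Return (top, bottom, left, right), inclusive, of the nonzero pixels in a 2D mask."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        raise ValueError("mask contains no disc pixels")
    return rows[0], rows[-1], cols[0], cols[-1]


def crop_around_disc(image, mask, scale=2):
    """Crop a square window of side scale * ODD centred on the disc.

    Assumption: the ODD is the larger side of the disc bounding box and the
    disc centre is the midpoint of that box. The window is clipped at the
    image border, so it can be smaller than scale * ODD near the edges.
    """
    top, bottom, left, right = disc_bounding_box(mask)
    diameter = max(bottom - top + 1, right - left + 1)  # ODD in pixels
    centre_row = (top + bottom) // 2
    centre_col = (left + right) // 2
    half = scale * diameter // 2
    row_start = max(centre_row - half, 0)
    row_stop = min(centre_row + half, len(image))
    col_start = max(centre_col - half, 0)
    col_stop = min(centre_col + half, len(image[0]))
    return [row[col_start:col_stop] for row in image[row_start:row_stop]]
```

Cropping before rescaling keeps more pixels on the disc and cup than downsampling the full fundus image to the same network input size would.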

The rest of this paper is organised as follows. In Section 2, the five datasets used in this study as well as the proposed method and associated experiments are described. The obtained results are presented in Section 3 and discussed in Section 4. Finally, Section 5 concludes the paper.

3. Results

All experiments were run on an HP Z440 workstation with an Intel Xeon E5-1620 CPU, 16 GB of RAM and an NVIDIA Titan X GPU, which was used to train the CNN. We split the Origa dataset into 70% for training (10% of the training data is randomly held out for validation) and 30% as an independent test set. The resolution of images is resized into

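. The performance of the proposed method for segmenting the OD and OC was evaluated against the ground truth using the Dice coefficient (F-measurement), Jaccard score (overlap), accuracy, sensitivity, and specificity, which can be defined as follows:

$$\text{Dice} = \frac{2\,TP}{2\,TP + FP + FN}$$

$$\text{Jaccard} = \frac{TP}{TP + FP + FN}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

where $TP$, $FP$, $TN$ and $FN$ refer to true positive, false positive, true negative, and false negative, respectively.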
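As an illustration of how the five metrics named above follow from the pixel-wise confusion counts, the sketch below computes them for a predicted mask and a ground truth mask given as flat sequences of 0/1 labels. The function names are hypothetical, the standard textbook definitions of the metrics are used, and the code assumes that each mask pair contains both foreground and background pixels, so no denominator is zero.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, TN, FN over two equally long flat sequences of 0/1 labels."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, fp, tn, fn


def segmentation_metrics(pred, truth):
    """Return the five segmentation metrics as a dictionary.

    Assumption: every denominator is non-zero, i.e. the masks contain
    both foreground and background pixels.
    """
    tp, fp, tn, fn = confusion_counts(pred, truth)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }
```

Because the disc and cup occupy a small fraction of a fundus image, true negatives dominate the counts, which is why accuracy and specificity tend to sit close to 1 while Dice and Jaccard are more discriminating.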

To assess the performance of the proposed system, two evaluation scenarios are considered. In the first, the model is trained and tested on the same dataset (Origa). In the second, the model is trained on one dataset (Origa) and tested on four other independent datasets: DRIONS-DB, Drishti-GS, ONHSD, and RIM-ONE.
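The data split described at the start of this section (70% training, of which 10% is held out for validation, and 30% testing) can be sketched as follows. The function name, the fixed random seed and the rounding down of the validation count are illustrative assumptions. The example call uses 650 images, which is an assumption chosen to be consistent with the 455 training images mentioned in the Discussion.

```python
import random


def split_indices(n_images, seed=0):
    """Shuffle image indices and split them into train, validation and test lists.

    Integer arithmetic is used so that the 70% and 10% counts do not
    depend on floating-point rounding.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)        # reproducible shuffle (seed is an assumption)
    n_train_total = n_images * 70 // 100        # 70% for training, including validation
    n_val = n_train_total * 10 // 100           # 10% of the training data, rounded down
    val = indices[:n_val]
    train = indices[n_val:n_train_total]
    test = indices[n_train_total:]              # remaining 30% as the independent test set
    return train, val, test


train, val, test = split_indices(650)           # 650 images is an assumed dataset size
```

In the second evaluation scenario no such split is needed for the other four datasets, because all of their images are unseen by the model.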

Table 1, Table 2, Table 3 and Table 4 show the performance of the model trained and tested on Origa for the OD, OC, joint OD-OC segmentation, respectively. It achieves a Dice score (F-measurement), Jaccard score (overlap), accuracy, sensitivity, and specificity of 0.8723, 0.7788, 0.9986, 0.8768, and 0.9994, respectively, for the OC segmentation and 0.964, 0.9311, 0.9989, 0.9696, and 0.9994 for the OD segmentation. The performance of segmenting the rim area located between the OD and OC contours is also calculated. It achieves a Dice score (F-measurement), Jaccard score (overlap), accuracy, sensitivity, and specificity of 0.8764, 0.7849, 0.9975, 0.9028, and 0.9985 on Origa.

For glaucoma diagnosis, the CDR is typically calculated along the vertical line passing through the optic cup centre (superior-inferior), and a suitable ratio threshold may then be defined. By varying the threshold and comparing with the expert’s glaucoma diagnosis, we achieve an area under the receiver operating characteristic curve (AUROC) of 0.7443 based on our segmentations, which is close to the 0.786 achieved using the ground truth segmentations. Since the vertical CDR considers only a few points on the optic disc, we extend it to incorporate the horizontal CDR (nasal-temporal). That is, we take the average of the vertical and horizontal CDR and apply thresholds to it. We thus achieve an AUROC of 0.7776, which is considerably higher than that obtained using only the vertical CDR and closer to the AUROC of 0.7717 achieved using the expert’s annotation.
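A minimal sketch of the CDR computation and the threshold-free AUROC evaluation is given below. It assumes binary cup and disc masks stored as 2D lists, takes the cup centre as the midpoint of the cup's bounding box, and measures the cup and disc along the column and row through that centre. The AUROC is computed with the rank-based (Mann-Whitney) formulation, which equals the area under the ROC curve obtained by sweeping the threshold. All function names and the bounding-box definition of the centre are illustrative assumptions.

```python
def mask_centre(mask):
    """Return (row, col) of the bounding-box midpoint of the nonzero pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        raise ValueError("mask is empty")
    return (rows[0] + rows[-1]) // 2, (cols[0] + cols[-1]) // 2


def cup_to_disc_ratios(cup_mask, disc_mask):
    """Return (vertical CDR, horizontal CDR, their average).

    Lengths are pixel counts along the column (vertical) and the row
    (horizontal) that pass through the cup centre.
    """
    centre_row, centre_col = mask_centre(cup_mask)
    cup_height = sum(1 for row in cup_mask if row[centre_col])
    disc_height = sum(1 for row in disc_mask if row[centre_col])
    cup_width = sum(1 for value in cup_mask[centre_row] if value)
    disc_width = sum(1 for value in disc_mask[centre_row] if value)
    vertical = cup_height / disc_height
    horizontal = cup_width / disc_width
    return vertical, horizontal, (vertical + horizontal) / 2


def auroc(scores, labels):
    """Probability that a positive case scores higher than a negative one (ties count half)."""
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    if not positives or not negatives:
        raise ValueError("both classes are required")
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))
```

Here `scores` would hold one CDR value per image (vertical or averaged) and `labels` the expert's glaucoma diagnosis, with 1 for glaucoma and 0 otherwise.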

Table 5 and Table 6 present the results of the proposed system, trained on the Origa dataset, when assessed on the DRIONS-DB and ONHSD datasets, respectively. For these two datasets, only the optic disc segmentation performance is reported because the ground truth of the OC is not provided. The best results were obtained with the cropped green channel along with refinement (G+C+PP), achieving a Dice score (F-measurement), Jaccard score (overlap), accuracy, sensitivity, and specificity of 0.9415, 0.8912, 0.9966, 0.9232, and 0.999, respectively, on the DRIONS-DB dataset and 0.9556, 0.9155, 0.999, 0.9376, and 0.9997, respectively, on the ONHSD dataset. Further, the network trained on Origa was tested on the Drishti-GS and RIM-ONE datasets and achieved the results reported in Table 7 and Table 8, respectively. On these datasets, too, the best results were achieved using the cropped green channel images (G+C+PP).

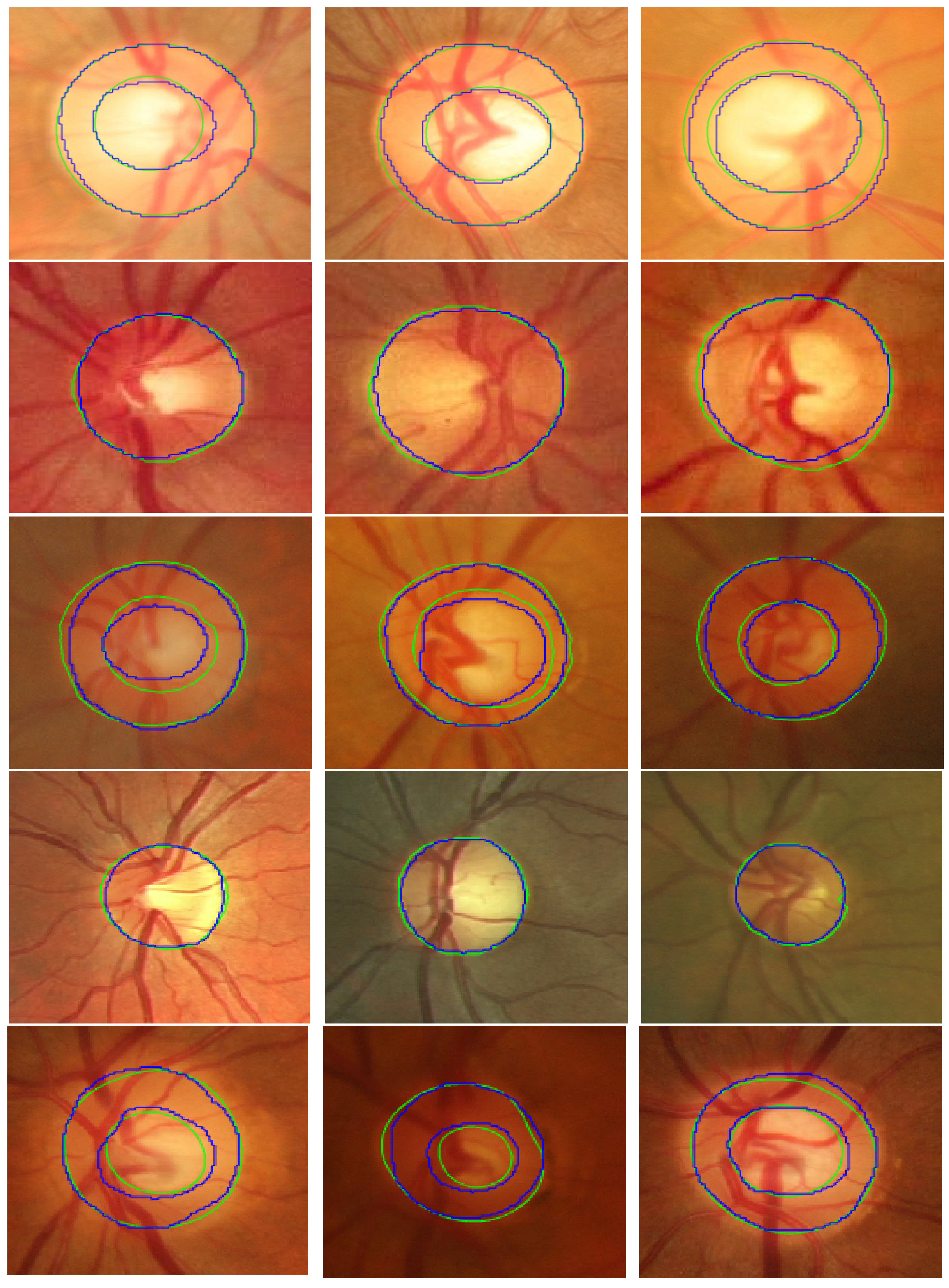

Figure 3 shows examples of the OD and OC segmentation results on fundus images from the five datasets.

4. Discussion

A novel approach based on a fully convolutional dense network has been proposed for the simultaneous segmentation of the OD and OC in colour fundus images. The proposed method achieves the segmentation by extracting complex data representations from retinal images without the need for human intervention. It has been demonstrated that our proposed generalised system can outperform or achieve comparable results with competing approaches. These findings also reflect the efficiency and usefulness of FC-DenseNet for OD and OC segmentation.

Moreover, we have demonstrated that the performance appears to be invariant to variations such as population, camera, operators, disease, and image. This is achieved by the following strategies. First, the pre-processing step was used to reduce these variations. Second, the DenseNet approach adopted appears to have excellent generalisation capability due to its ability to learn deeper features. Third, we have performed comprehensive evaluations by subjecting the model to five different datasets, which gives reasonable diversity. In particular, we trained the model on only one dataset and applied it to the other four datasets without further training for refinement. The results showed that our approach performs robust and accurate segmentation on ‘unseen’ images from other datasets.

To compare our proposed method with the existing methods in the literature, Table 1, Table 2, Table 3 and Table 4, and Table 9, Table 10, Table 11 and Table 12 present the comparison in terms of Dice score (F-measurement), Jaccard score (overlap), accuracy, sensitivity, and specificity.

Table 1 compares the model trained and tested on Origa with the existing methods proposed for OD segmentation. The comparison with 15 methods shows that our method outperforms almost all of them. Wong et al. [20] reported a segmentation overlap of 0.9398, which is slightly better than the 0.9334 obtained by our proposed system. However, their method only segments the OD region, and they used manually extracted features, which might be applicable to the dataset they used but not to other datasets. For the OC region, our proposed method achieves the best results compared with the other existing methods, as shown in Table 2. For the joint OD and OC segmentation results shown in Table 3, our method also outperforms the methods proposed in the literature.

Table 9 and Table 10 compare our system, trained on Origa and tested on the Drishti-GS and RIM-ONE datasets respectively, with methods that were trained and tested on those datasets. Our results on Drishti-GS outperform those reported by Nawaldgi [58] and Oktoeberza et al. [59]. Sedai et al. [27] and Zilly et al. [33] report Dice and overlap scores slightly better than ours in segmenting the OC and OD regions. However, they used the same dataset (Drishti-GS) for training and testing, whereas our system is trained on the Origa images only and tested on Drishti-GS, which indicates that it generalises better. Furthermore, Guo et al. [30] and Sevastopolsky [31] used the same dataset (Drishti-GS) for training and testing, and segmented the OD region only. For the RIM-ONE dataset, we compared our method with three methods, as shown in Table 10. Similarly, these methods were tested on the same dataset used in the learning process, which leaves their performance on other datasets in doubt. Our performance on RIM-ONE is lower than on Drishti-GS and lower than that of the competing methods, which suggests that a more adaptive generalisation technique may be needed for this dataset. However, we have achieved better results than the state of the art and all competing methods on the remaining datasets.

Table 4, Table 11 and Table 12 show that our system trained only on Origa gives the best results compared to others on the Origa, DRIONS-DB, and ONHSD datasets, respectively.

For the rim region segmentation, our system achieved an overlap of 0.7708 and a balanced accuracy (the mean of the achieved sensitivity and specificity) of 0.93 on the Origa dataset. The most recently published paper on OD and OC segmentation [35] reported a rim segmentation overlap of 0.767 and a balanced accuracy of 0.941 on Origa. Their reported results are very close to ours, although they used a different data splitting scheme for training and testing. Other existing methods in the literature have not reported rim region segmentation performance.

Furthermore, the AUROC performance shows excellent agreement between the grading done by the ophthalmologist and the proposed system for glaucoma diagnosis. Combining the vertical cup-to-disc ratio with the horizontal cup-to-disc ratio significantly improves the automated grading results and suggests that the diagnosis results could be further improved by using the complete profile of the OD.

On the other hand, the proposed method still has some limitations: (i) the OD centre used in the preprocessing stage is calculated from the ground truth data; (ii) despite the short testing time (<0.5 s), the training time is relatively long (≈15 h); and (iii) the training set used in this work is limited to 455 images, which is relatively small. However, these limitations can be overcome by (i) using an automated localisation of the OD centre as suggested by the authors in [60]; (ii) using more efficient computing resources than those used in this study; and (iii) using a larger training set as more annotated data becomes available.