DSP: Schema Design for Non-Relational Applications

Abstract

1. Introduction

- Design and development of a new model that takes into account both user and system requirements of NoSQL clients and proposes a NoSQL schema accordingly.

- Relationship classifications: mathematical formulas that calculate the expectations of all relationships and then classify the entities.

- Automatic prioritization of guidelines using a feedforward neural network concept.

- An algorithm that calculates parameters and maps entities based on relationship classifications.

- Accelerating the viewing of incremental records through bucketing, as opposed to non-relational caching.

2. Overview of the NoSQL Databases

3. Related Work

4. The DSP Model and Components

4.1. DSP Architecture

4.2. Cardinality Notations

4.3. Relationship Classifications

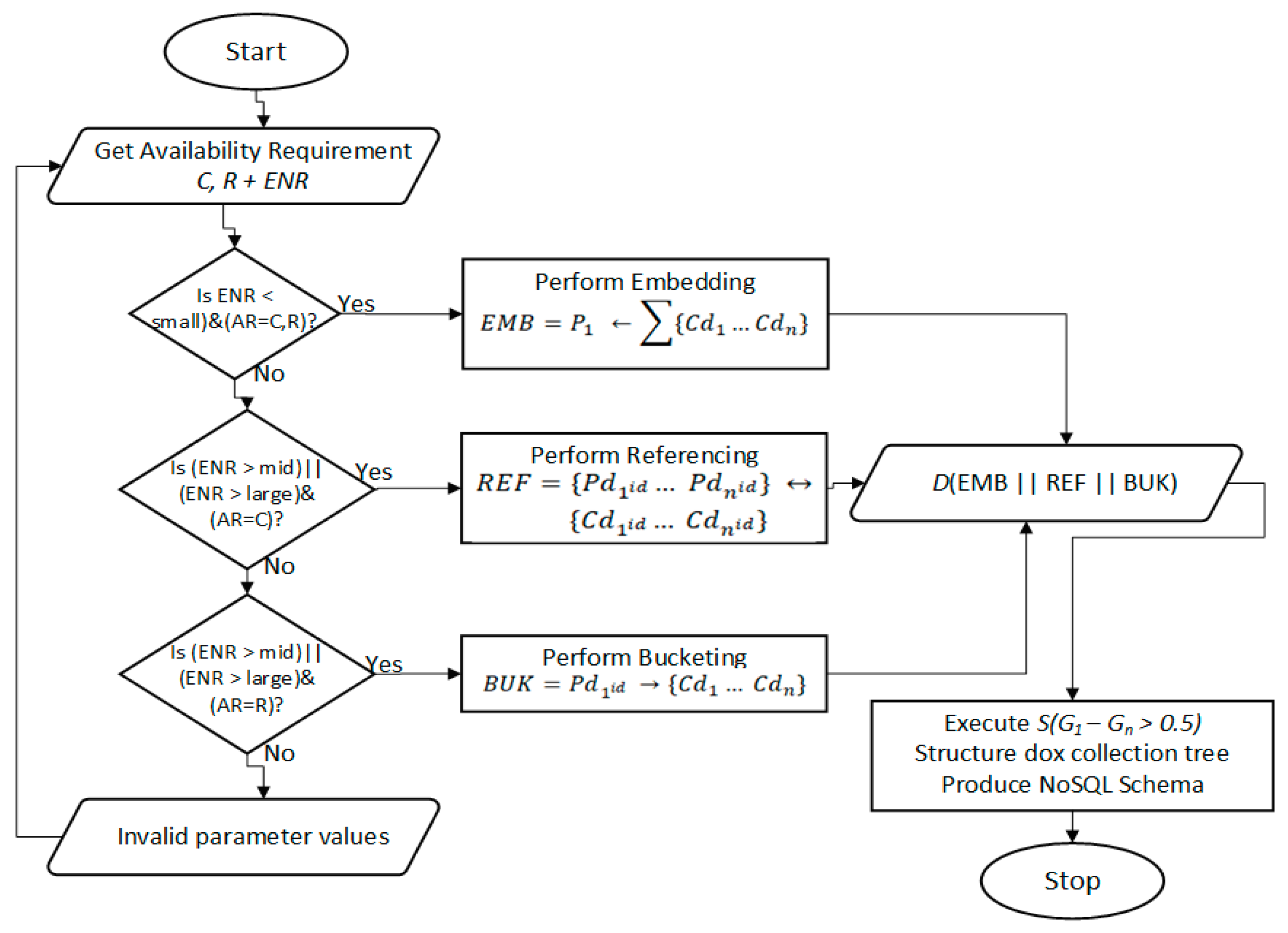

4.3.1. Embedding

4.3.2. Referencing

4.3.3. Bucketing

4.3.4. Embedding and Referencing

4.4. Schema Proposition Guidelines

4.5. DSP Algorithm

| Algorithm 1 | DSP Algorithm |
|---|---|
| Input | E = entities; ENR = estimated number of records; EMB = Embedding; REF = Referencing; BUK = Bucketing; Cr = preferred CRUD operation (C, R, U, D). |
| Output | NoSQL schema. |
| Definitions | ≈ approximation; ≫ much greater than; ≪ much less than. |
| 1. | begin |
| 2. | variables (E(E + ENR), CRUD, i = 1) |
| 3. | if E.\|S\| != ∅ then |
| 4. | A ← Availability |
| 5. | Cr ← get preferred CRUD |
| 6. | while i < E.\|S\| do |
| 7. | ENR ← get ENR(E) |
| 8. | for each item in E do |
| 9. | if (Cr ≜ C \|\| U) then |
| 10. | if ENR[i] ≈ ≫ 0 and ENR[i] ≈ ≪ 9! × 3 then |
| 11. | execute EMB; |
| 12. | else if ENR[i] = ∞ \|\| ENR[i] ≈ ≫ 11! × 2 then |
| 13. | execute REF; |
| 14. | end; |
| 15. | end; |
| 16. | if (Cr ≜ R) then |
| 17. | execute BUK; |
| 18. | for (j = 0; j <= ROUND(ENR / 5! × 2); j++) |
| 19. | Tpg[j] = ROUND(ENR / 5! × 2); |
| 20. | end; |
| 21. | foreach (range(Tpg) as pg) |
| 22. | Next = pg->Tpg; |
| 23. | end; |
| 24. | end; |
| 25. | end; |
| 26. | i += 1; |
| 27. | end; |
| 28. | else |
| 29. | return null; |
| 30. | end; |
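The branching in Algorithm 1 can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `choose_style` and `bucket_pages` are hypothetical, the approximate comparisons "≈ ≫" and "≈ ≪" are treated as plain strict inequalities, an ENR that falls between the two thresholds returns `None` because the listing assigns no style there, and `ROUND(ENR / 5! × 2)` is read with left-to-right precedence, i.e. `(ENR / 5!) × 2`.

```python
import math
from typing import Optional

# Thresholds taken from lines 10 and 12 of Algorithm 1.
EMB_LIMIT = math.factorial(9) * 3    # 9! x 3 = 1,088,640
REF_LIMIT = math.factorial(11) * 2   # 11! x 2 = 79,833,600


def choose_style(crud: str, enr: float) -> Optional[str]:
    """Return the relationship style for one entity (hypothetical helper).

    crud: preferred CRUD operation, one of "C", "R", "U", "D".
    enr:  estimated number of records; math.inf stands for an unbounded count.
    """
    if crud in ("C", "U"):
        if 0 < enr < EMB_LIMIT:
            return "EMB"
        if enr == math.inf or enr > REF_LIMIT:
            return "REF"
        return None  # assumption: the listing assigns no style between the thresholds
    if crud == "R":
        return "BUK"
    return None  # "D" is not handled by the listing


def bucket_pages(enr: int) -> int:
    """Page count for bucketing, assuming ROUND(ENR / 5! x 2) means (ENR / 5!) * 2."""
    return round(enr / math.factorial(5) * 2)
```

For example, `choose_style("C", 500)` returns `"EMB"`, while `choose_style("C", 5_000_000)` returns `None` because 5,000,000 lies between the two thresholds. If the intended reading of line 18 is `ENR / (5! × 2)`, only `bucket_pages` needs to change.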

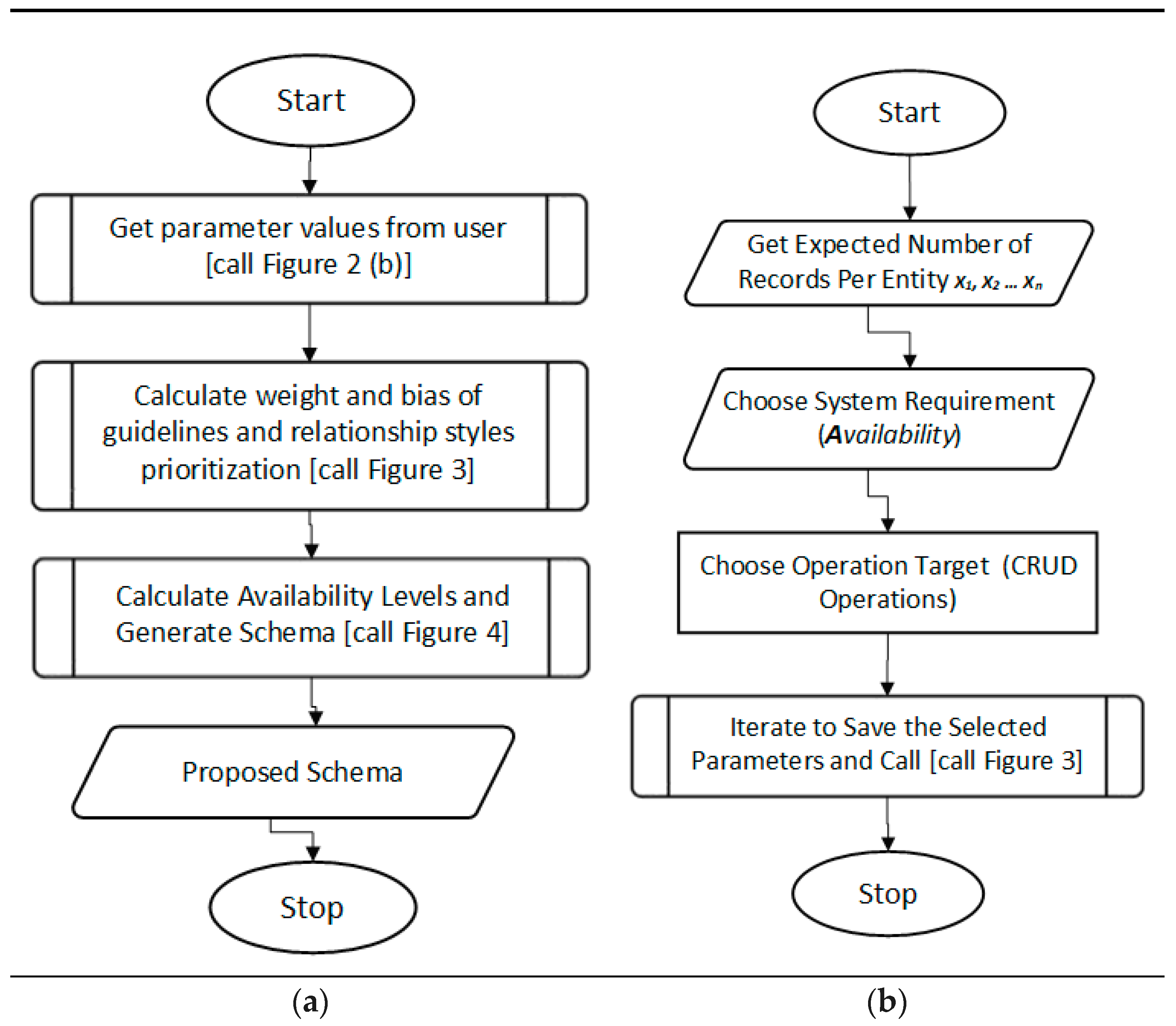

4.6. DSP Model Procedure

4.6.1. Requirements Selection

4.6.2. Requirements Computation

4.6.3. Calculate Availability

5. Method: Pilot Application and Evaluation Description

5.1. Datasets

5.2. Prototype Building Using the Datasets

5.3. Experimental Setup

5.4. The Test Queries

5.5. The Experimental Procedure

5.6. Cost Analysis Models

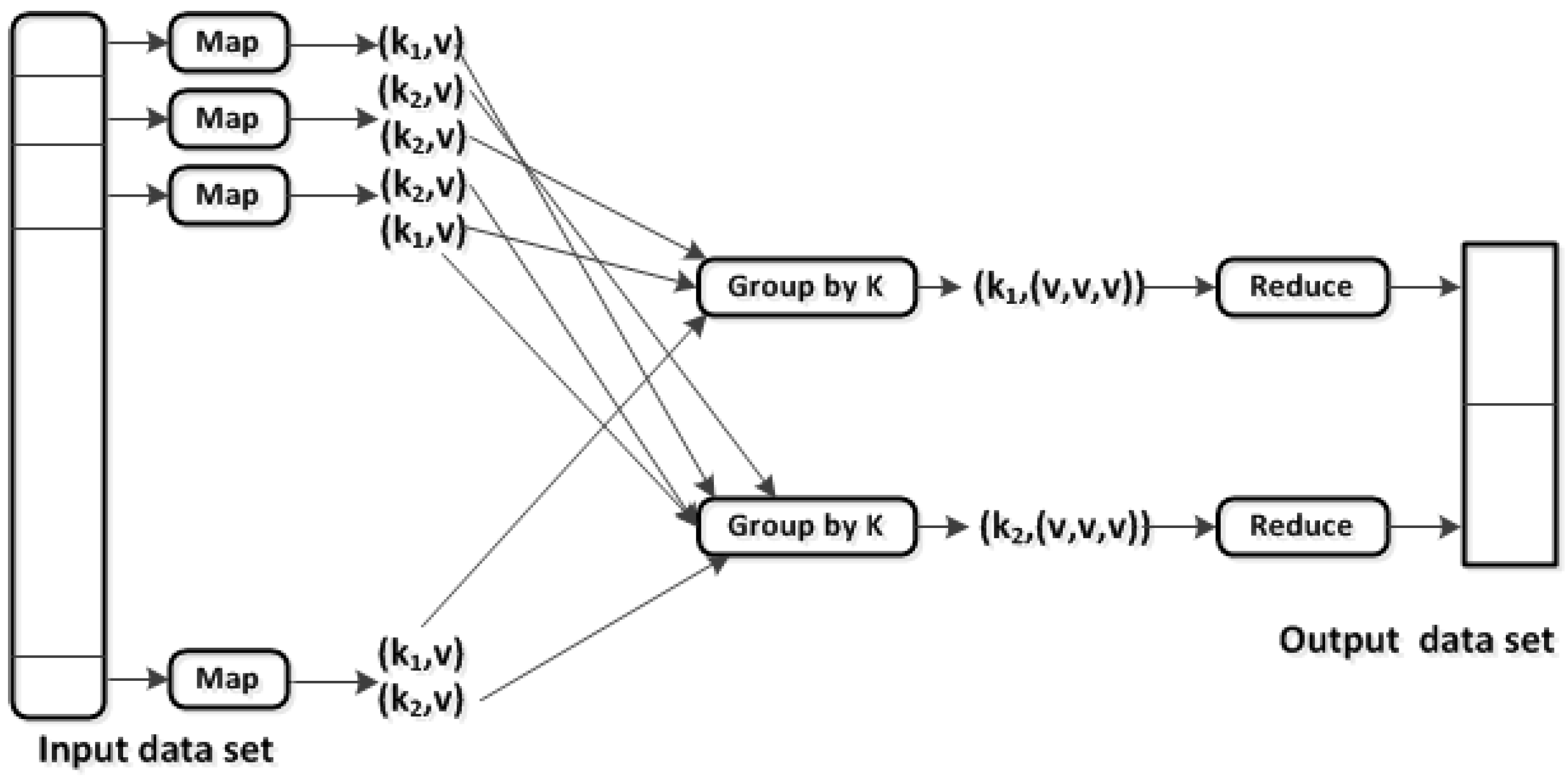

5.6.1. MapReduce Cost Model Analysis

5.6.2. Hypercube Topology and Gaian Topology Cost Model Analysis

In the following cost models, let:

- N be the number of nodes in the network;

- LTx be the proportion of fragments of the logical document collection named X;

- PLN be the average path length in a network with N nodes;

- SLL be the size of a logical document lookup message and response (per network step);

- SQ be the size of a query message and standard (no-data) response; and

- SQR be the size of the data results per logical document fragment.

- (a) Hypercube topology with Content Addressable Network (CAN): the query cost depends on the query type and on the cost model (here, hypercube CAN), and it is made up of the following components:

- the cost of logical document lookup in a CAN is PLN * SLL, and

- the cost of sending the query to the specific locations with that logical document is N * LTx * PLN * SQ, and

- the cost of retrieving results is PLN * SQR * N * LTx.

- (b) Gaian topology: the query cost depends on the query type and on the cost model (here, Gaian), and it is made up of the following components:

- the cost of sending the query to all nodes is 2 * N * SQ, and

- the cost of retrieving results is PLN * N * LTx * SQR.
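Summing the components listed for each topology gives its total query cost. The Python sketch below only restates that arithmetic; the class and function names are hypothetical, costs are expressed in whatever unit the message sizes use, and the example values are arbitrary illustrative inputs rather than measurements from this study. In practice PLN may differ between the two topologies; the example reuses one parameter set for simplicity.

```python
from dataclasses import dataclass


@dataclass
class NetworkParams:
    n: int        # N: number of nodes in the network
    lt_x: float   # LTx: proportion of fragments of logical collection X
    pln: float    # PLN: average path length in a network with N nodes
    sll: float    # SLL: size of a lookup message and response, per step
    sq: float     # SQ: size of a query message and standard (no-data) response
    sqr: float    # SQR: size of data results per logical document fragment


def can_cost(p: NetworkParams) -> float:
    """Hypercube CAN: lookup + targeted query + result retrieval."""
    lookup = p.pln * p.sll
    query = p.n * p.lt_x * p.pln * p.sq
    results = p.pln * p.sqr * p.n * p.lt_x
    return lookup + query + results


def gaian_cost(p: NetworkParams) -> float:
    """Gaian: query sent to all nodes + result retrieval."""
    query = 2 * p.n * p.sq
    results = p.pln * p.n * p.lt_x * p.sqr
    return query + results
```

With N = 16, LTx = 0.25, PLN = 2, SLL = SQ = 1 and SQR = 10, `can_cost` gives 90 (2 + 8 + 80) and `gaian_cost` gives 112 (32 + 80): both pay the same result-retrieval cost, and they differ in how the query reaches the nodes.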

6. Results and Discussion

6.1. Preliminary Analysis Results on DSP Foundations

6.2. Schema Performance: Evaluation of DSP Schema against Formal Methods Schemas

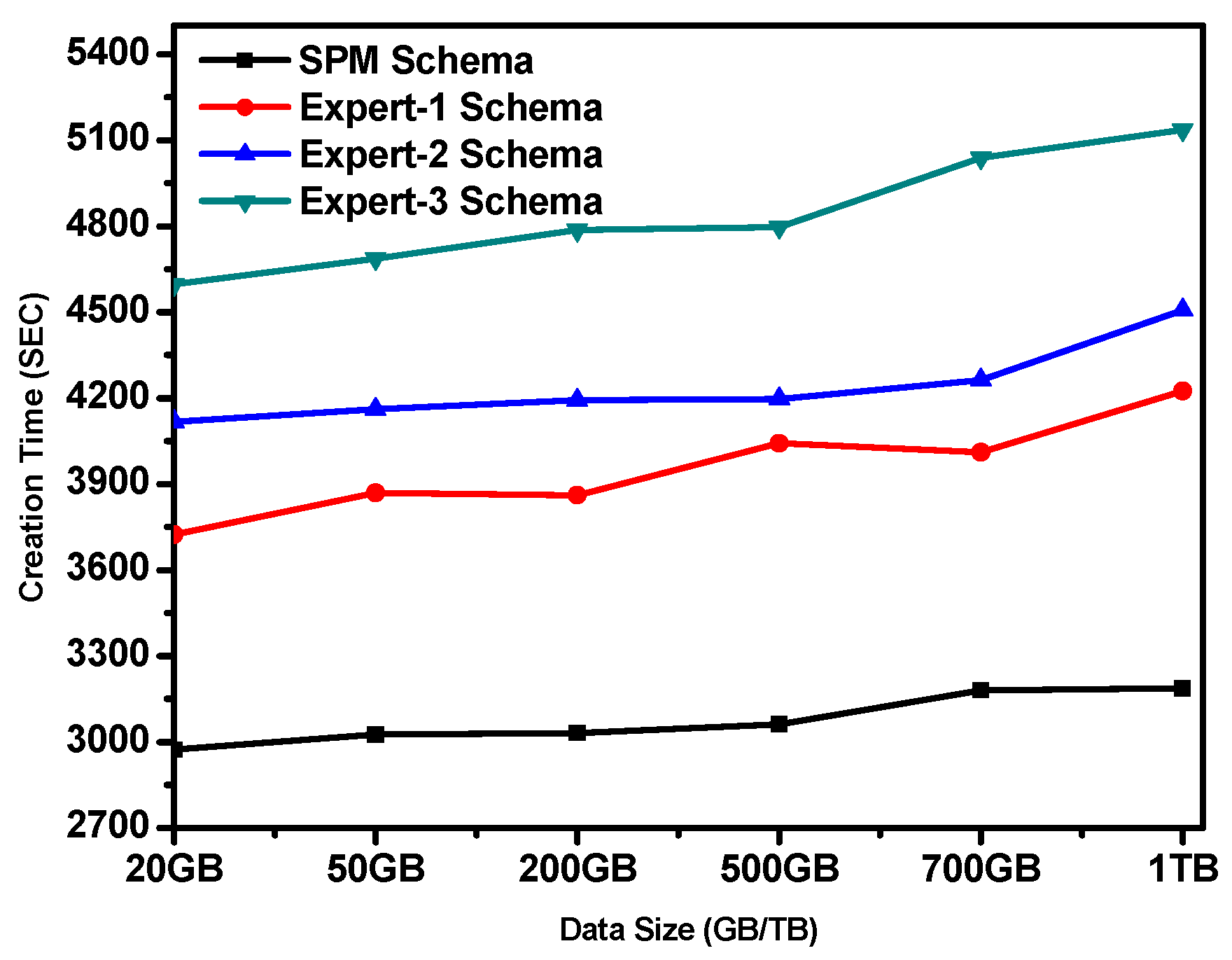

6.2.1. Scenario 1: Create Operation

6.2.2. Scenario 2: Read Operation

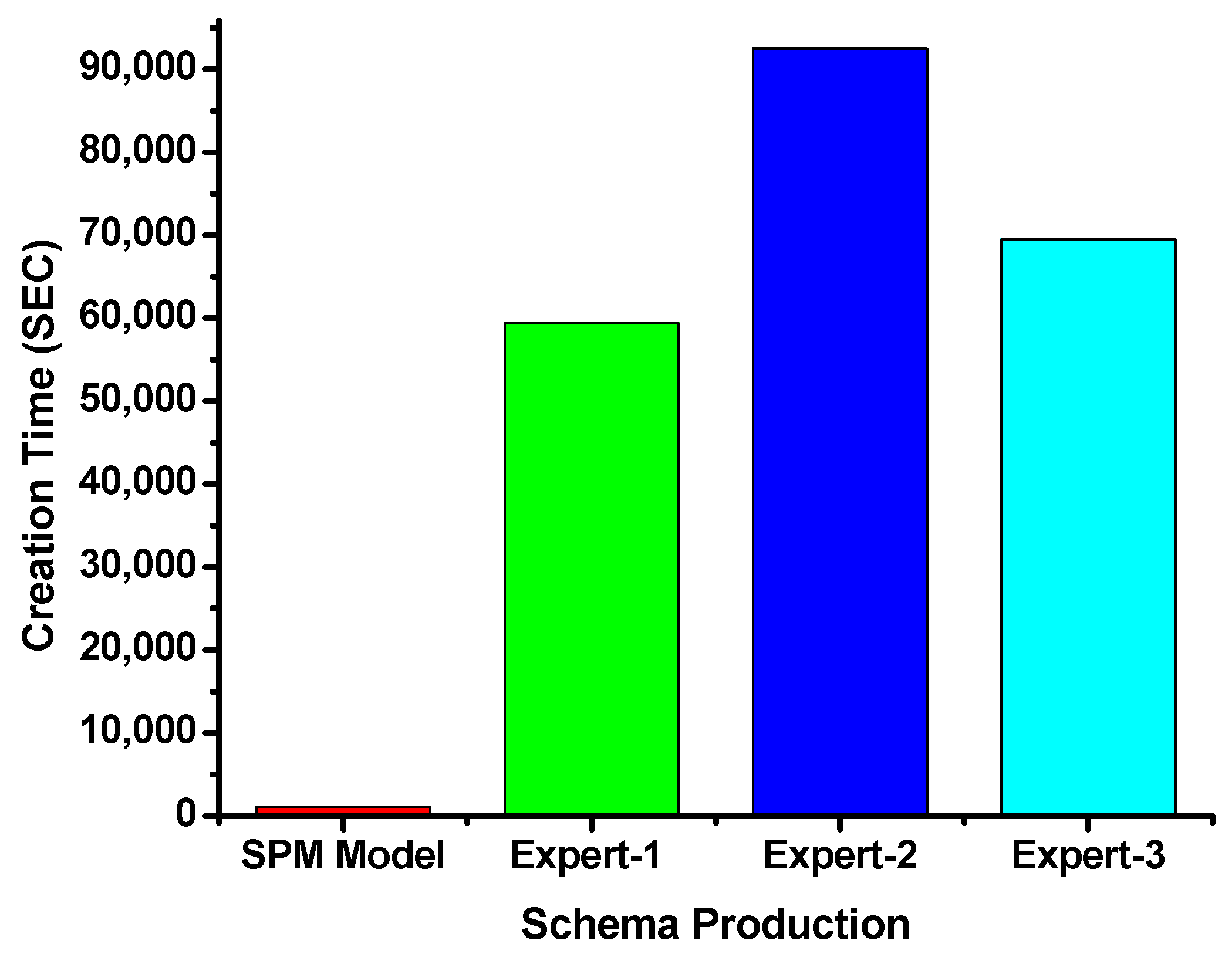

6.3. Schema Generation: Evaluation of DSP Model Process against Formal Methods

6.4. Discussion

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| S/N | Cardinalities | Notations | Examples |
|---|---|---|---|
| 1 | One-to-One | 1:1 | Person ←→ Id card |
| 2 | One-to-Few | 1:F | Author ←→ Addresses |
| 3 | One-to-Many | 1:M | Post ←→ Comments |
| 4 | One-to-Squillions | 1:S | System ←→ Logs |
| 5 | Many-to-Many | M:M | Customers ←→ Products |
| 6 | Few-to-Few | F:F | Employees ←→ Tasks |
| 7 | Squillions-to-Squillions | S:S | Transactions ←→ Logs |

| S/N | Styles | Notations |
|---|---|---|
| 1 | Embedding | EMB |
| 2 | Referencing | REF |
| 3 | Bucketing | BUK |
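To make the three styles concrete, the Python sketch below shapes a post and its comments (the 1:M Post ←→ Comments example from the cardinality table) as documents under each style. The field names (`_id`, `post_id`, `page`, `count`, `comments`) and the fixed bucket size are illustrative assumptions, not the layout of the DSP schema itself.

```python
from typing import Any

Doc = dict[str, Any]


def embed(post: Doc, comments: list[Doc]) -> Doc:
    """EMB: store the comments inside the post document."""
    return {**post, "comments": comments}


def reference(post: Doc, comments: list[Doc]) -> tuple[Doc, list[Doc]]:
    """REF: keep comments in their own collection, each pointing at its post."""
    return post, [{**c, "post_id": post["_id"]} for c in comments]


def bucket(post: Doc, comments: list[Doc], size: int) -> list[Doc]:
    """BUK: group a post's comments into bucket documents of at most `size` items."""
    buckets = []
    for start in range(0, len(comments), size):
        chunk = comments[start:start + size]
        buckets.append({
            "post_id": post["_id"],
            "page": start // size,  # bucket index, usable for paging
            "count": len(chunk),
            "comments": chunk,
        })
    return buckets
```

With five comments and a bucket size of 2, `bucket` yields three documents holding 2, 2 and 1 comments, so a reader can fetch one page at a time instead of the whole comment history.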

| Read: Code | Read: Total Scores | Read: Priority Level | Write: Code | Write: Total Scores | Write: Priority Level |
|---|---|---|---|---|---|
| G6 | 12 | 1 | G1 | 17 | 1 |
| G1 | 16 | 2 | G6 | 25 | 2 |
| G17 | 30 | 3 | G4 | 28 | 3 |
| G15 | 34 | 4 | G5 | 35 | 4 |
| G2 | 52 | 5 | G7 | 50 | 5 |
| G7 | 54 | 6 | G18 | 70 | 6 |
| G11 | 67 | 7 | G10 | 79 | 7 |
| G9 | 69 | 8 | G11 | 91 | 8 |
| G3 | 76 | 9 | G14 | 93 | 9 |
| G19 | 92 | 10 | G12 | 95 | 10 |
| G5 | 97 | 11 | G8 | 99 | 11 |
| G4 | 106 | 12 | G15 | 101 | 12 |
| G22 | 123 | 13 | G3 | 103 | 13 |
| G8 | 126 | 14 | G9 | 118 | 14 |
| G12 | 129 | 15 | G13 | 125 | 15 |
| G10 | 141 | 16 | G16 | 136 | 16 |
| G13 | 157 | 17 | G2 | 138 | 17 |
| G14 | 161 | 18 | G21 | 152 | 18 |
| G18 | 168 | 19 | G19 | 169 | 19 |
| G23 | 186 | 20 | G23 | 173 | 20 |
| G16 | 187 | 21 | G22 | 190 | 21 |
| G20 | 199 | 22 | G20 | 196 | 22 |
| G21 | 202 | 23 | G17 | 201 | 23 |
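In both halves of the table above, the priority level is the rank of a guideline's total score in ascending order, so the lowest total receives priority 1. The Python sketch below reproduces only that ranking step from given totals; how the totals are produced (the feedforward neural network concept named in the introduction) is not modeled, and the function name is hypothetical. Ties, which do not occur in the table, would keep their input order because Python's sort is stable.

```python
def priority_levels(scores: dict[str, int]) -> dict[str, int]:
    """Assign priority 1 to the lowest total score, 2 to the next, and so on."""
    ordered = sorted(scores, key=lambda code: scores[code])
    return {code: level for level, code in enumerate(ordered, start=1)}


# First four read-operation guidelines from the table, in arbitrary order.
read_totals = {"G17": 30, "G6": 12, "G15": 34, "G1": 16}
print(priority_levels(read_totals))
```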

| S/N | Query Models | Model Application | Type |
|---|---|---|---|
| 1 | QUERYt data WHERE time = Tx WITH Int(T) = Φ | Read, Update | Single selectivity |
| 2 | SaveFew (INTO collection of each node) WITH Int(T) = Φ | Create | Single selectivity |
| 3 | QUERYt data WHERE time >= Tx AND time <= Ty AND Ty-Tx < Δ WITH Int(T) = Φ | Read, Update | Drill-down query |
| 4 | SaveMany (INTO collection of each node) WITH Int(T) = Φ | Create | Drill-down query |
| 5 | QUERY data WHERE time >= Tx AND time <= Ty AND Ty-Tx > Δ WITH ComNo > Δ OR ComL > Δ IF ComD in (Tx, Ty) WITH Int(T) = Φ | Read, Update | Roll-up query |
| 6 | BulkSave (INTO collection of each node) WITH Int(T) = Φ | Create | Roll-up query |
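The time predicates of the read queries can be sketched in Python as below, assuming each record carries a comparable `time` field and reading the drill-down window as Tx ≤ time ≤ Ty, as in query 5. The `WITH Int(T) = Φ` clause and the `ComNo`/`ComL`/`ComD` conditions of query 5 are not modeled, and all names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Record:
    time: int  # timestamp in an arbitrary illustrative unit


def single_selectivity(records: list[Record], tx: int) -> list[Record]:
    """Query 1: records whose time equals Tx."""
    return [r for r in records if r.time == tx]


def time_range(records: list[Record], tx: int, ty: int) -> list[Record]:
    """Records with Tx <= time <= Ty (the window shared by queries 3 and 5)."""
    return [r for r in records if tx <= r.time <= ty]


def classify_range(tx: int, ty: int, delta: int) -> str:
    """Queries 3 and 5 differ in how the window width Ty - Tx compares with delta."""
    width = ty - tx
    if width < delta:
        return "drill-down"
    if width > delta:
        return "roll-up"
    return "unspecified"  # the table does not cover Ty - Tx == delta
```

Keeping the window filter separate from the width test mirrors the table: both range queries select the same kind of interval, and only the size of that interval relative to Δ decides whether the query drills down or rolls up.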

| Data Size | Corresponding Records | Target Records: Insert | Target Records: Select | Target Records: Update |
|---|---|---|---|---|
| 50 GB | 145,000,000 | 145,000,000 (100%) | 25,000,000 (20%) | 7,250,000 (5%) |
| 100 GB | 290,000,000 | 290,000,000 (100%) | 116,000,000 (40%) | 29,000,000 (10%) |
| 300 GB | 870,000,000 | 870,000,000 (100%) | 435,000,000 (50%) | 174,000,000 (20%) |
| 500 GB | 1,450,000,000 | 1,450,000,000 (100%) | 870,000,000 (60%) | 435,000,000 (30%) |
| 1 TB | 2,900,000,000 | 2,900,000,000 (100%) | 2,030,000,000 (70%) | 1,160,000,000 (40%) |
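The corresponding record counts scale linearly with data size: every row works out to 2,900,000 records per GB (taking 1 TB as 1,000 GB), and target counts follow by applying the listed percentage to that total, for example 40% of 290,000,000 gives the 116,000,000 selected records at 100 GB. A short Python check of that arithmetic, with hypothetical helper names:

```python
RECORDS_PER_GB = 2_900_000  # 145,000,000 records / 50 GB


def corresponding_records(size_gb: int) -> int:
    """Total records for a data size given in GB (1 TB taken as 1,000 GB)."""
    return size_gb * RECORDS_PER_GB


def target_records(size_gb: int, percent: int) -> int:
    # Integer arithmetic avoids floating-point rounding on large counts.
    return corresponding_records(size_gb) * percent // 100
```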

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 10 MB | 17 | 498 | 263 | 352 |
| 20 MB | 29 | 529 | 307 | 368 |
| 30 MB | 41 | 553 | 328 | 406 |
| 40 MB | 48 | 599 | 342 | 462 |
| 50 MB | 69 | 717 | 408 | 588 |
| 60 MB | 81 | 767 | 476 | 626 |

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 20 GB | 1079 | 1637 | 1592 | 1711 |
| 50 GB | 1103 | 1656 | 1646 | 1774 |
| 200 GB | 1123 | 1671 | 1674 | 1878 |
| 500 GB | 1134 | 1758 | 1665 | 1912 |
| 700 GB | 1141 | 1853 | 1741 | 2081 |
| 1 TB | 1157 | 1973 | 1879 | 2145 |
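To compare the schemas within one table, the relative reduction of the DSP value against an expert schema's value is (expert - DSP) / expert. The Python helper below is illustrative (its name is hypothetical) and uses the 1 TB row of the table above; because the table does not state its unit, the result is a unitless fraction.

```python
def relative_reduction(dsp: float, expert: float) -> float:
    """Fraction by which the DSP value is lower than the expert value."""
    return (expert - dsp) / expert


row_1tb = {"Expert1": 1973, "Expert2": 1879, "Expert3": 2145}
for name, value in row_1tb.items():
    print(f"{name}: {relative_reduction(1157, value):.1%}")
```

The same helper applies to any row of the other result tables, as long as both values come from the same table and therefore share a unit.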

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 20 GB | 2973 | 3724 | 4118 | 4597 |
| 50 GB | 3026 | 3870 | 4162 | 4687 |
| 200 GB | 3032 | 3862 | 4193 | 4786 |
| 500 GB | 3062 | 4042 | 4197 | 4797 |
| 700 GB | 3181 | 4011 | 4263 | 5039 |
| 1 TB | 3188 | 4225 | 4508 | 5137 |

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 20 GB | 41 | 387 | 59 | 412 |
| 50 GB | 53 | 378 | 72 | 428 |
| 200 GB | 62 | 412 | 91 | 456 |
| 500 GB | 71 | 425 | 103 | 482 |
| 700 GB | 90 | 451 | 129 | 503 |
| 1 TB | 95 | 491 | 174 | 571 |

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 20 GB | 446 | 2492 | 1367 | 2466 |
| 50 GB | 449 | 2501 | 1389 | 2575 |
| 200 GB | 478 | 2517 | 1396 | 2691 |
| 500 GB | 486 | 2530 | 1514 | 2677 |
| 700 GB | 514 | 2556 | 1542 | 2876 |
| 1 TB | 519 | 2587 | 1614 | 3174 |

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 20 GB | 1027 | 4095 | 2634 | 3120 |
| 50 GB | 1037 | 4116 | 2673 | 3141 |
| 200 GB | 1068 | 4314 | 2803 | 3349 |
| 500 GB | 1064 | 4542 | 2948 | 3767 |
| 700 GB | 1190 | 4764 | 3148 | 3889 |
| 1 TB | 1225 | 4937 | 3441 | 4262 |

| Data Size | DSP Schema | Expert1 | Expert2 | Expert3 |
|---|---|---|---|---|
| 20 GB | 2355 | 5666 | 5028 | 5922 |
| 50 GB | 2567 | 5977 | 5446 | 6622 |
| 200 GB | 2778 | 5991 | 5957 | 7298 |
| 500 GB | 2785 | 6004 | 6171 | 7838 |
| 700 GB | 2903 | 6330 | 6412 | 8288 |
| 1 TB | 2975 | 6760 | 7270 | 9231 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Imam, A.A.; Basri, S.; Ahmad, R.; Wahab, A.A.; González-Aparicio, M.T.; Capretz, L.F.; Alazzawi, A.K.; Balogun, A.O. DSP: Schema Design for Non-Relational Applications. Symmetry 2020, 12, 1799. https://doi.org/10.3390/sym12111799
