Recognition of Traffic Sign Based on Bag-of-Words and Artificial Neural Network

Abstract

1. Introduction

2. Related Work

3. Research Methodology

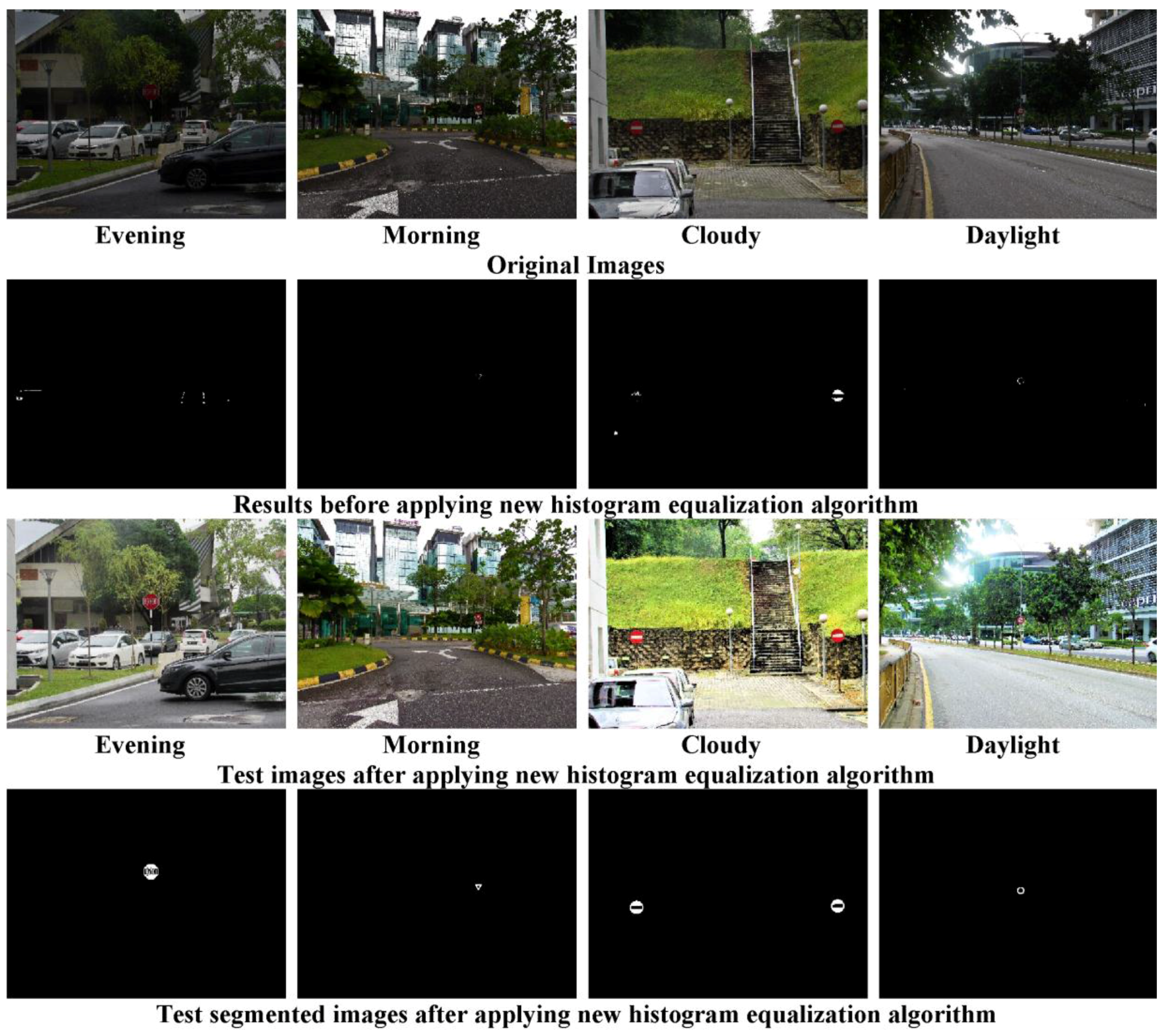

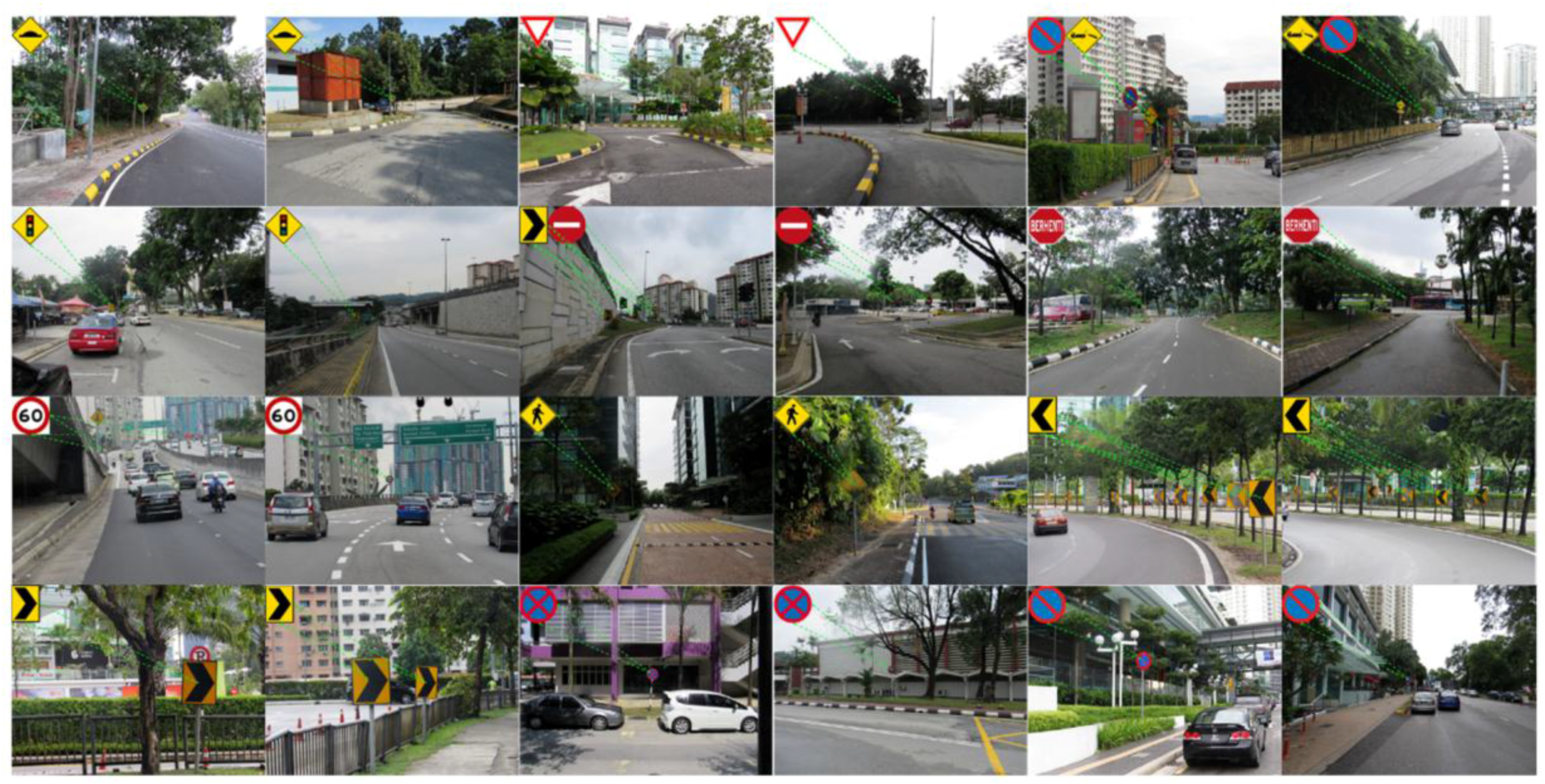

3.1. Acquisition of Image

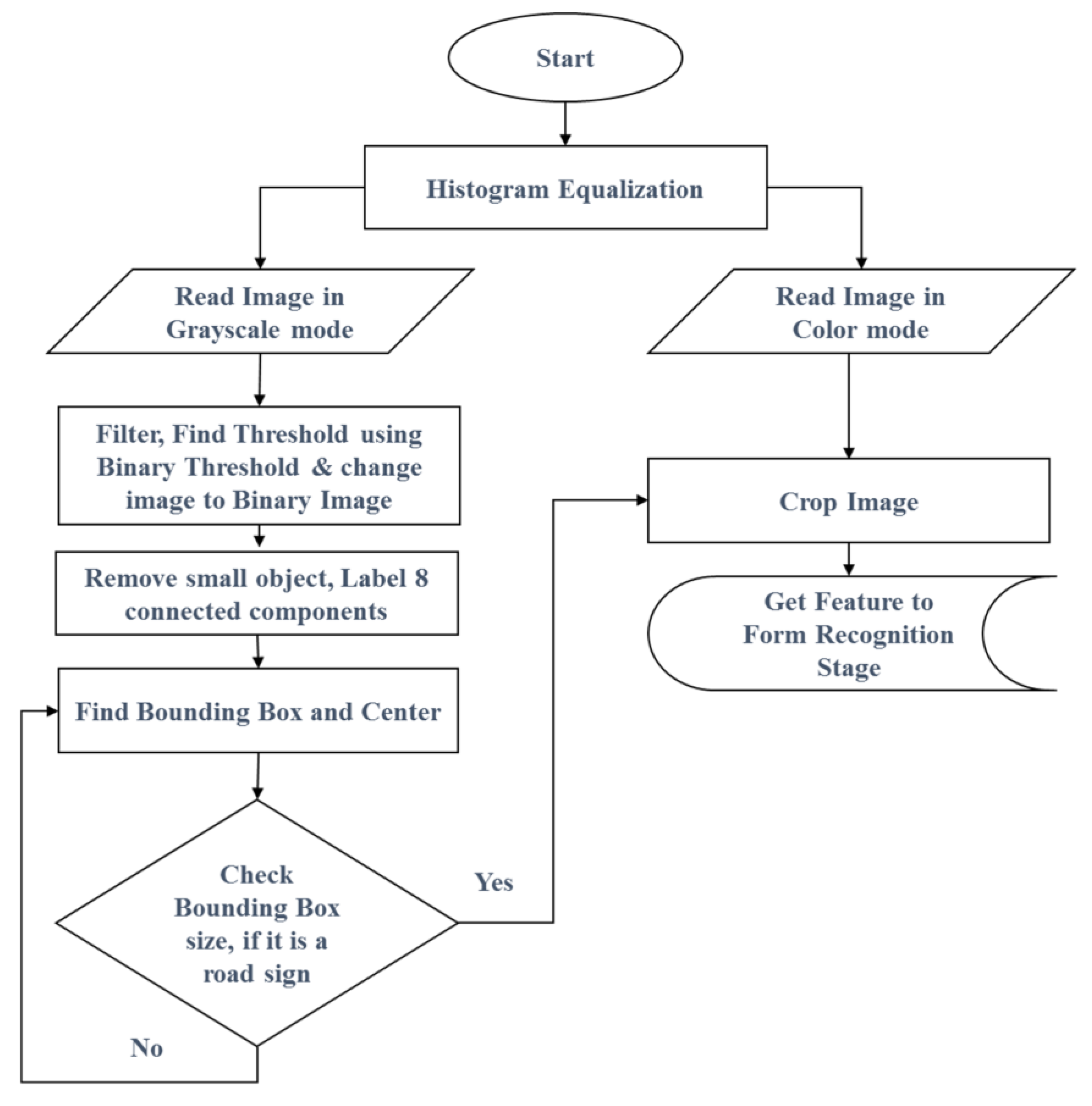

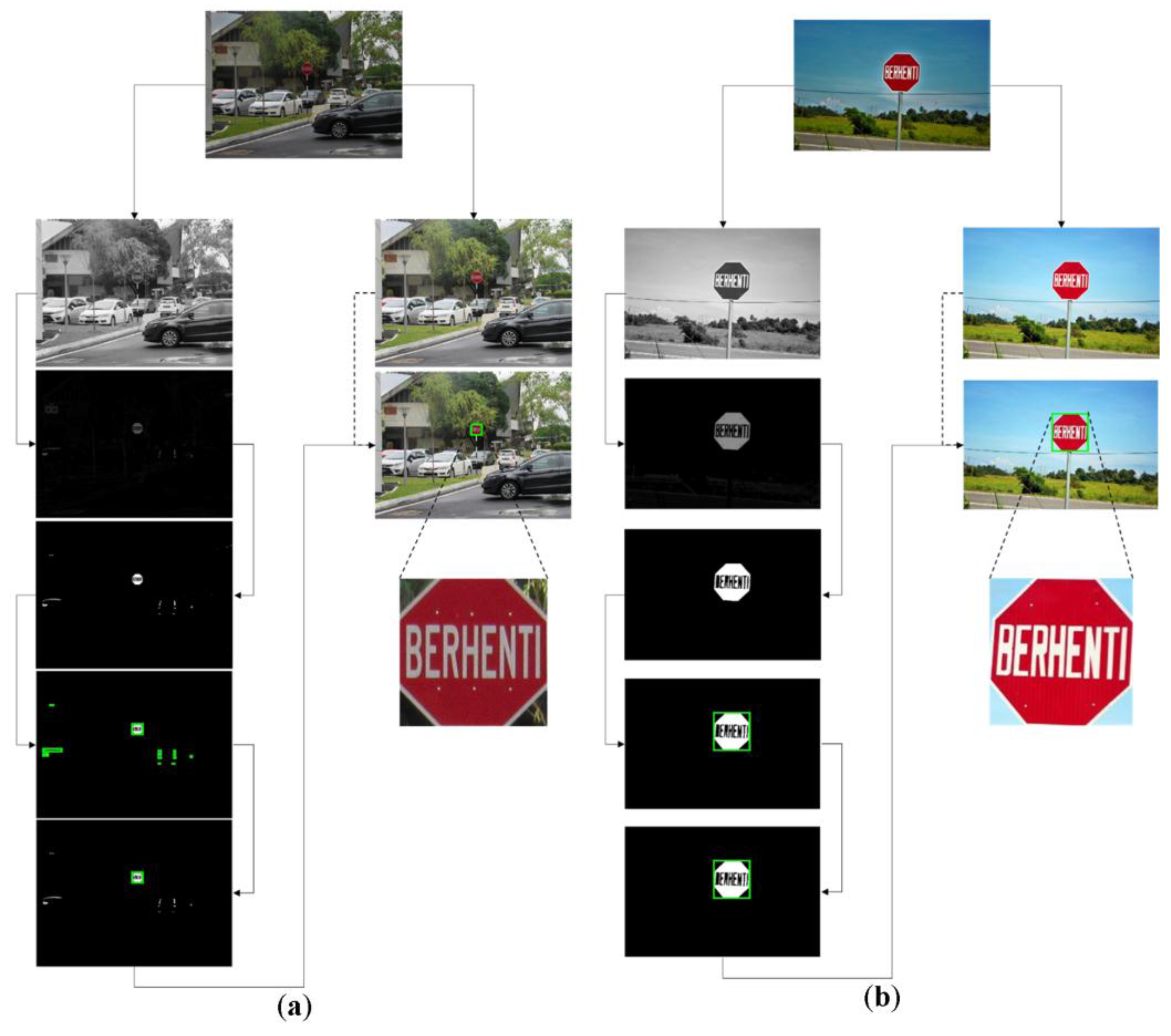

3.2. Traffic Sign Detection Phase

3.3. Traffic Sign Recognition Phase

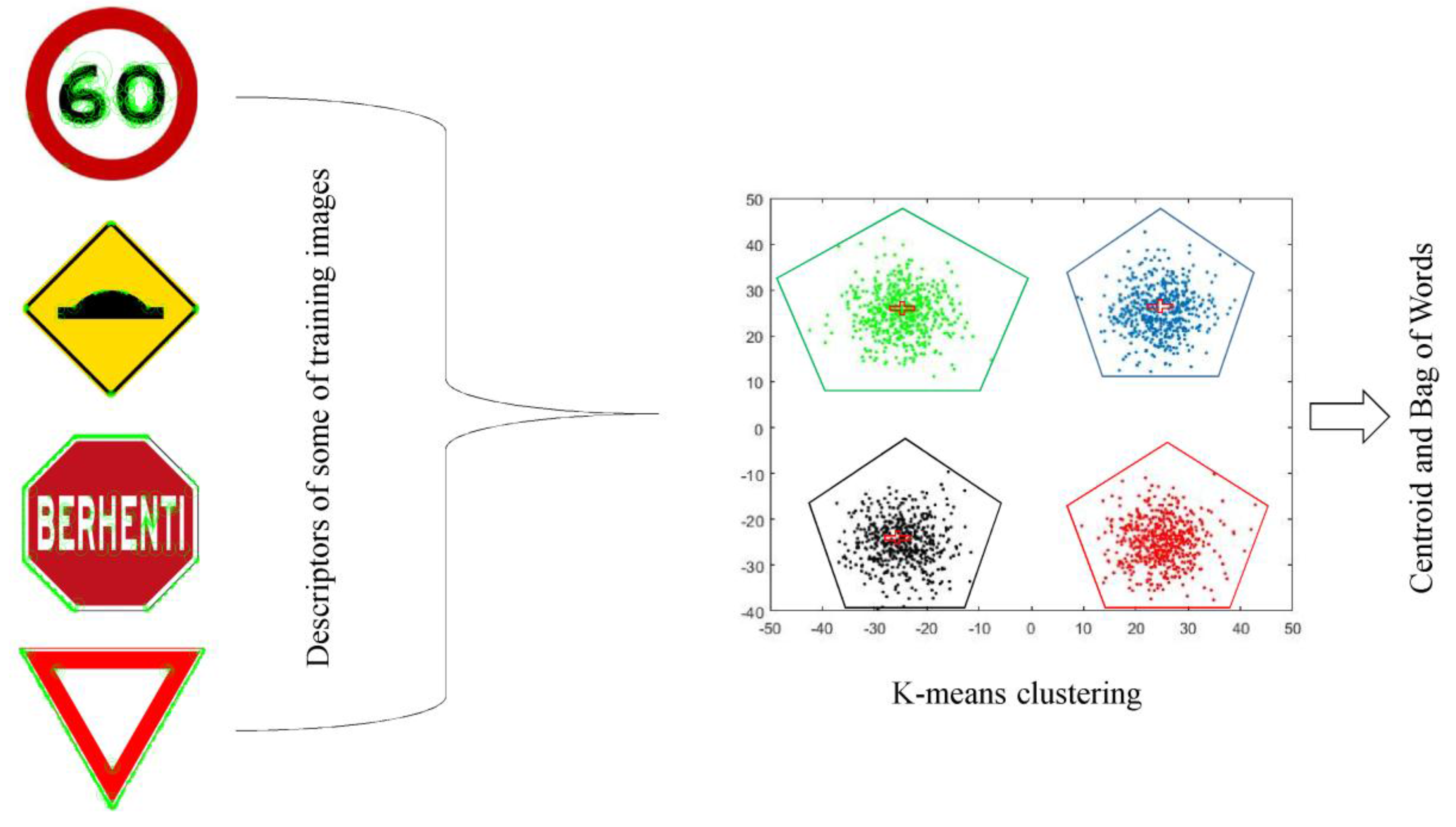

3.3.1. Feature Extraction

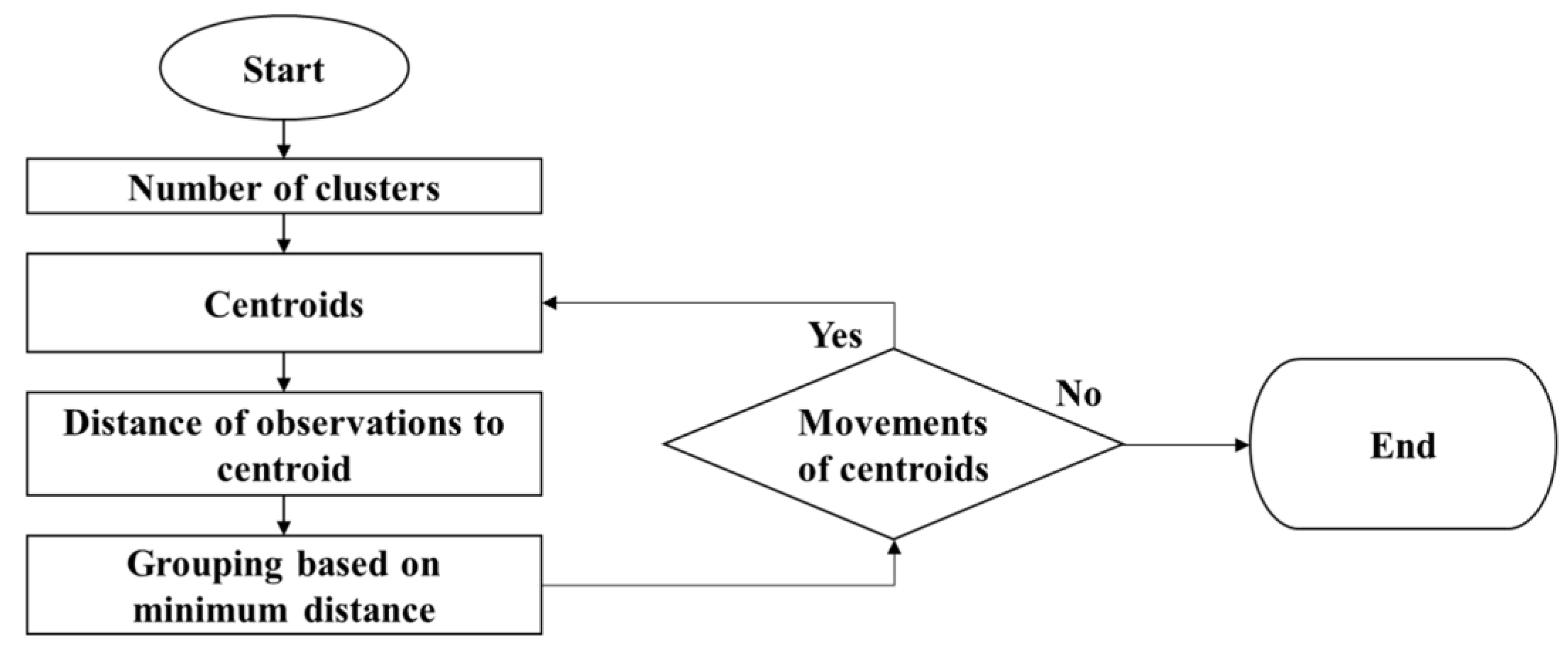

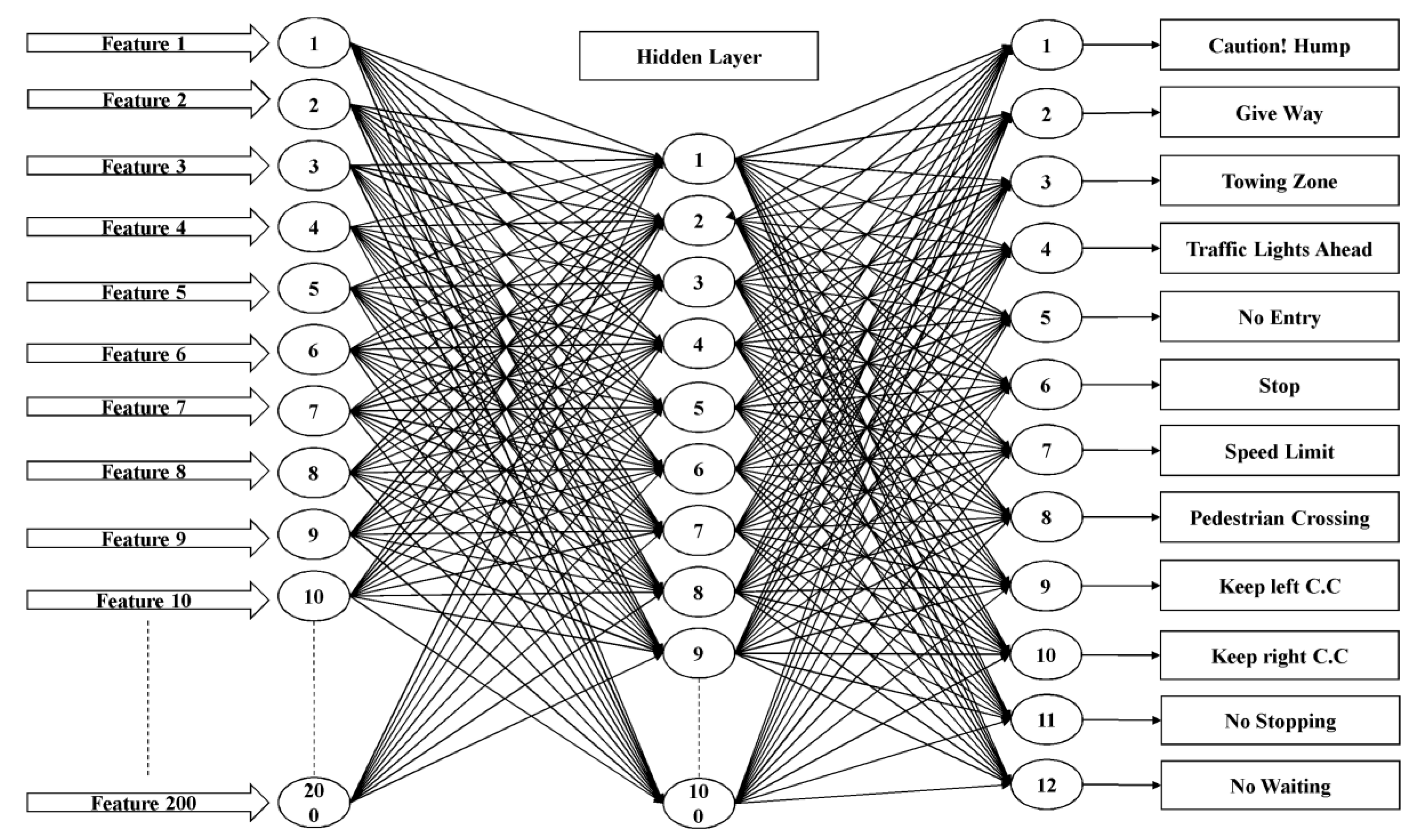
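Only the heading for this step survives in the extract, so the following is a minimal sketch of the general bag-of-words (BoW) idea, not the authors' implementation. Local descriptors pooled from the training images are clustered with k-means, the cluster centres act as a visual vocabulary, and each sign image is then encoded as a normalized histogram of its nearest visual words. The helper names (`kmeans`, `nearest`, `farthest_first`, `bow_histogram`), the deterministic farthest-first seeding, and the toy 2-D descriptors are all hypothetical. A practical pipeline would use higher-dimensional local descriptors (for example SIFT or SURF) and a much larger vocabulary.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(centroids: List[List[float]], v: Vector) -> int:
    """Index of the visual word (centroid) closest to descriptor v."""
    return min(range(len(centroids)), key=lambda i: squared_distance(centroids[i], v))


def farthest_first(descriptors: List[Vector], k: int) -> List[List[float]]:
    """Deterministic seeding: start from the first descriptor, then keep adding
    the descriptor whose distance to its closest chosen centroid is largest."""
    centroids = [list(descriptors[0])]
    while len(centroids) < k:
        far = max(descriptors, key=lambda d: min(squared_distance(c, d) for c in centroids))
        centroids.append(list(far))
    return centroids


def kmeans(descriptors: List[Vector], k: int, iters: int = 20) -> List[List[float]]:
    """Lloyd's k-means; the returned centroids form the visual vocabulary."""
    centroids = farthest_first(descriptors, k)
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for d in descriptors:
            clusters[nearest(centroids, d)].append(d)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def bow_histogram(vocabulary: List[List[float]], image_descriptors: List[Vector]) -> List[float]:
    """Count visual-word occurrences in one image and L1-normalise the counts."""
    counts = [0.0] * len(vocabulary)
    for d in image_descriptors:
        counts[nearest(vocabulary, d)] += 1.0
    total = sum(counts) or 1.0  # avoid dividing by zero for an empty image
    return [c / total for c in counts]


# Toy 2-D "descriptors" pooled from all training images (hypothetical values).
pool = [[0.0, 0.1], [0.1, 0.0], [5.0, 5.1], [5.1, 4.9], [9.9, 0.1], [10.0, 0.0]]
vocab = kmeans(pool, k=3)
# One query descriptor falls on visual word 0 and two fall on visual word 2.
print(bow_histogram(vocab, [[0.05, 0.05], [5.0, 5.0], [5.05, 5.0]]))
```

The resulting fixed-length histogram is what makes images with different numbers of local descriptors comparable, so any of the classifiers named in the next section can consume it directly.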

3.3.2. Classification of Traffic Sign

SVM
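Only the classifier's name survives here. As a hedged sketch of how a linear SVM could classify BoW histograms, the code below trains one binary SVM per class (one-vs-rest) with the Pegasos stochastic sub-gradient method on the L2-regularized hinge loss. Pegasos, the one-vs-rest scheme, the hyperparameters (`lam`, `epochs`), and the toy three-class data are substitutions chosen for illustration; the paper's actual SVM configuration (kernel, multiclass coding, solver) cannot be recovered from this extract.

```python
import random
from typing import Dict, List, Sequence


def train_binary_svm(X: List[Sequence[float]], y: List[int],
                     lam: float = 0.01, epochs: int = 200, seed: int = 0) -> List[float]:
    """Pegasos: stochastic sub-gradient descent on lam/2*||w||^2 + mean hinge loss.
    Labels must be +1/-1; a constant 1.0 feature is appended so the bias is
    learned as an ordinary (regularised) weight."""
    rng = random.Random(seed)
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)  # Pegasos step size 1/(lambda * t)
            margin = t * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink from the regulariser
            if margin < 1.0:  # hinge loss is active: move towards t * x
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def train_one_vs_rest(X: List[Sequence[float]], labels: List[str], **kw) -> Dict[str, List[float]]:
    """One binary SVM per class: that class is +1, every other class is -1."""
    return {c: train_binary_svm(X, [1 if lab == c else -1 for lab in labels], **kw)
            for c in sorted(set(labels))}


def predict(models: Dict[str, List[float]], x: Sequence[float]) -> str:
    """Pick the class whose SVM gives the largest decision value w . [x, 1]."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: sum(wi * xi for wi, xi in zip(models[c], xb)))


# Hypothetical 3-bin BoW histograms for three sign classes.
X = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0],
     [0.1, 0.9, 0.0], [0.0, 0.0, 1.0], [0.0, 0.1, 0.9]]
labels = ["stop", "stop", "yield", "yield", "speed", "speed"]
models = train_one_vs_rest(X, labels)
print(predict(models, [0.8, 0.1, 0.1]))
```

A kernel SVM would replace the dot product with a kernel evaluation over support vectors; the linear form is used here only because it keeps the sketch short and dependency-free.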

Ensemble Subspace kNN
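A subspace ensemble of k-nearest-neighbour learners (the random subspace method) trains each learner on a randomly chosen subset of the feature dimensions and combines their predictions by majority vote. The sketch below illustrates that idea on plain Python lists. The number of learners, the subspace size, `k`, the class `SubspaceKNNEnsemble`, and the toy data are hypothetical, and the sketch is not claimed to reproduce the ensemble settings used in the paper.

```python
import random
from collections import Counter
from typing import List, Sequence


def knn_vote(train_X: List[List[float]], train_y: List[str],
             x: Sequence[float], dims: List[int], k: int) -> str:
    """Majority label among the k nearest training points, using only `dims`."""
    def dist(row: List[float]) -> float:
        return sum((row[d] - x[d]) ** 2 for d in dims)

    nearest = sorted(range(len(train_X)), key=lambda i: dist(train_X[i]))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


class SubspaceKNNEnsemble:
    """Random-subspace ensemble: every kNN learner sees a random subset of features."""

    def __init__(self, n_learners: int = 15, subspace_dim: int = 2, k: int = 1, seed: int = 0):
        self.n_learners, self.subspace_dim, self.k, self.seed = n_learners, subspace_dim, k, seed

    def fit(self, X: List[Sequence[float]], y: List[str]) -> "SubspaceKNNEnsemble":
        rng = random.Random(self.seed)
        n_features = len(X[0])
        # kNN is lazy, so "training" only stores the data and draws the subspaces
        self.subspaces = [sorted(rng.sample(range(n_features), self.subspace_dim))
                          for _ in range(self.n_learners)]
        self.X, self.y = [list(row) for row in X], list(y)
        return self

    def predict(self, x: Sequence[float]) -> str:
        """Majority vote over the per-subspace kNN predictions."""
        votes = Counter(knn_vote(self.X, self.y, x, dims, self.k) for dims in self.subspaces)
        return votes.most_common(1)[0][0]


X = [[1.0, 1.0, 1.0, 1.0], [0.9, 1.0, 0.8, 1.0], [0.0, 0.0, 0.0, 0.0], [0.1, 0.0, 0.2, 0.0]]
y = ["A", "A", "B", "B"]
ensemble = SubspaceKNNEnsemble(n_learners=9, subspace_dim=2, k=1).fit(X, y)
print(ensemble.predict([0.9, 0.8, 1.0, 0.95]))  # A
```

Restricting each learner to a few dimensions decorrelates their errors, which is the usual motivation for the random subspace method when a plain kNN on the full feature vector is sensitive to noisy dimensions.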

ANN
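The hidden-neuron table at the end of this document reports a cross-entropy error for each hidden-layer size, which is consistent with a feed-forward network that has a single hidden layer. The sketch below shows such a three-layer network (input, tanh hidden layer, softmax output) trained by back-propagation on the cross-entropy loss. The tanh/softmax choice, plain stochastic gradient descent, the initialization range, the learning rate, and the class name `ThreeLayerNet` are assumptions for illustration; the paper's training algorithm and settings are not shown in this extract.

```python
import math
import random
from typing import List, Sequence, Tuple


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max logit for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class ThreeLayerNet:
    """Hypothetical input -> tanh hidden layer -> softmax output network."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = random.Random(seed)
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x: Sequence[float]) -> Tuple[List[float], List[float]]:
        """Return hidden activations and class probabilities for one input."""
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        z = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.W2, self.b2)]
        return h, softmax(z)

    def train_step(self, x: Sequence[float], target: int, lr: float = 0.1) -> float:
        """One back-propagation step on the cross-entropy loss -log p[target]."""
        h, p = self.forward(x)
        # for softmax + cross-entropy, dLoss/dlogit is p - onehot(target)
        d_out = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
        # propagate through W2 (before updating it) and tanh'(a) = 1 - h^2
        d_hid = [(1.0 - h[j] ** 2) * sum(d_out[k] * self.W2[k][j] for k in range(len(d_out)))
                 for j in range(len(h))]
        for k, dk in enumerate(d_out):
            self.W2[k] = [w - lr * dk * hj for w, hj in zip(self.W2[k], h)]
            self.b2[k] -= lr * dk
        for j, dj in enumerate(d_hid):
            self.W1[j] = [w - lr * dj * xi for w, xi in zip(self.W1[j], x)]
            self.b1[j] -= lr * dj
        return -math.log(p[target])


def mean_cross_entropy(net: ThreeLayerNet, X, y) -> float:
    """Average -log p[target] over a dataset."""
    return sum(-math.log(net.forward(x)[1][t]) for x, t in zip(X, y)) / len(X)


X = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy BoW-style inputs
y = [0, 1, 2]                                           # class indices
net = ThreeLayerNet(n_in=3, n_hidden=4, n_out=3)
before = mean_cross_entropy(net, X, y)
for _ in range(500):
    for x, t in zip(X, y):
        net.train_step(x, t, lr=0.2)
print(f"cross-entropy before {before:.3f}, after {mean_cross_entropy(net, X, y):.3f}")
```

The hidden-layer width (`n_hidden`) is the quantity varied in the hidden-neuron table; in this sketch it is a constructor argument, so a sweep over sizes amounts to constructing and training one network per candidate value.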

3.4. Experimental Setup

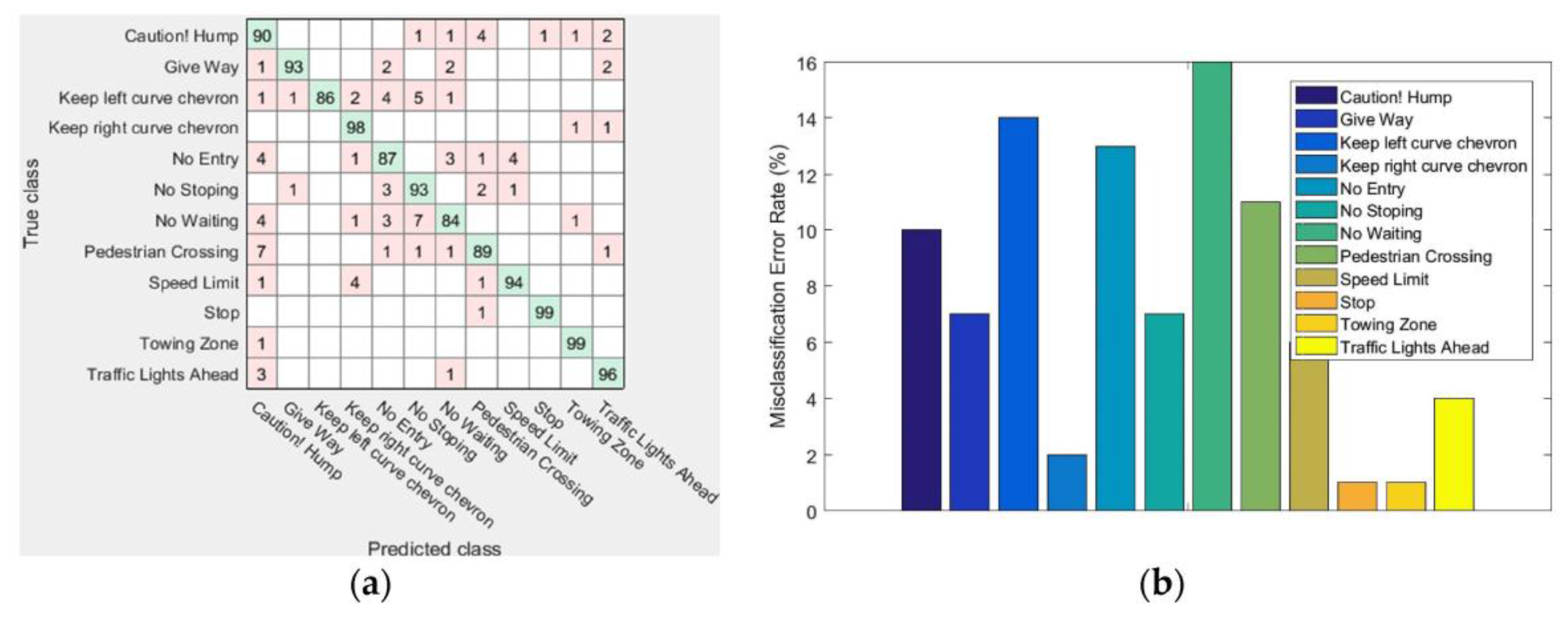

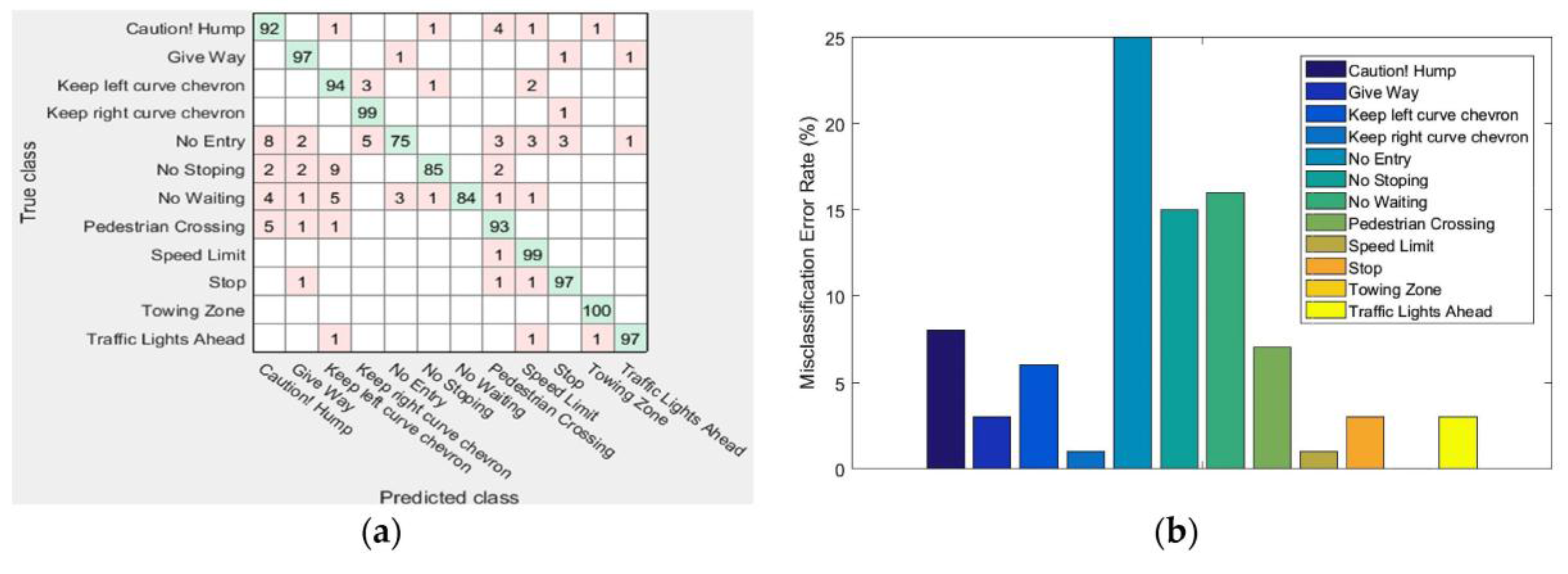

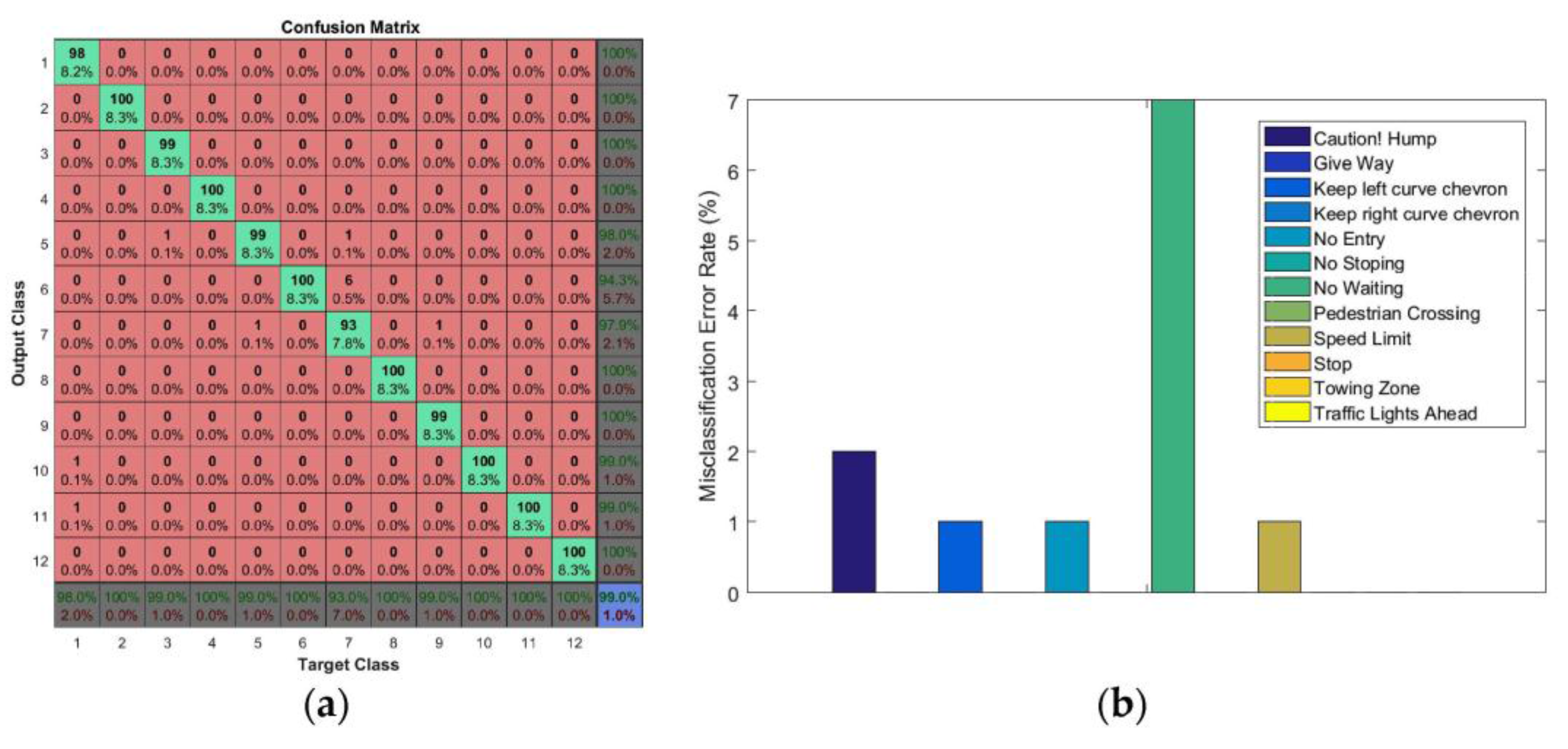

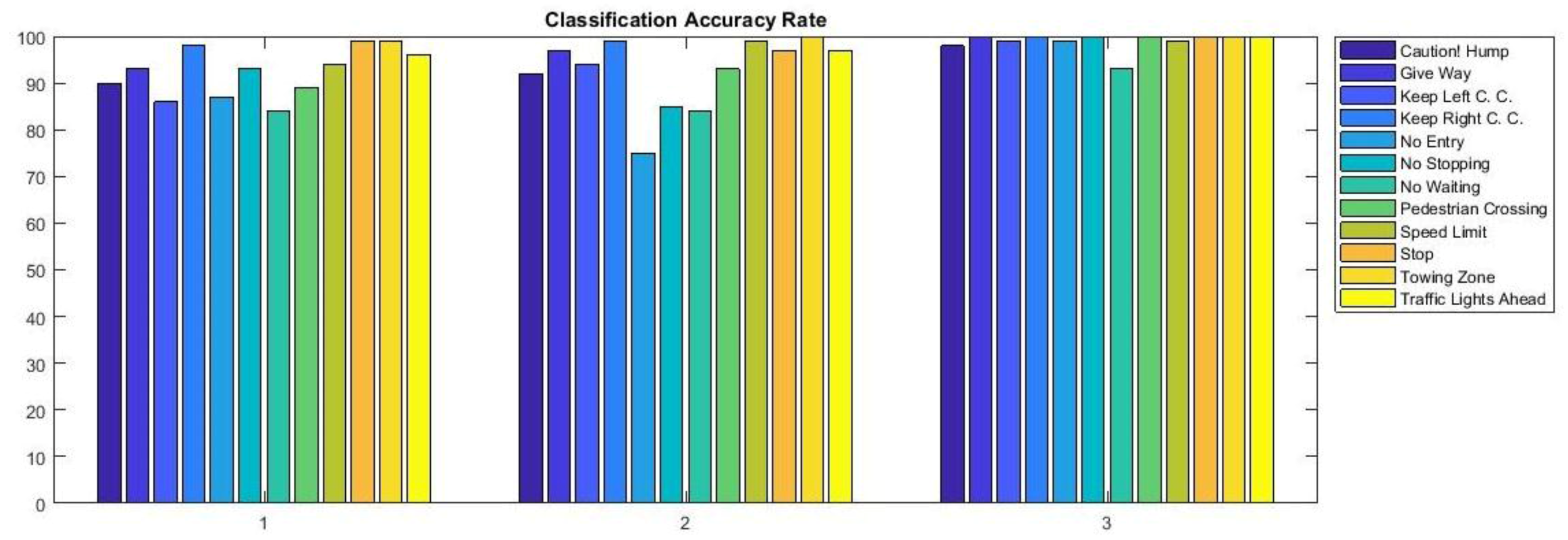

4. Experimental Results

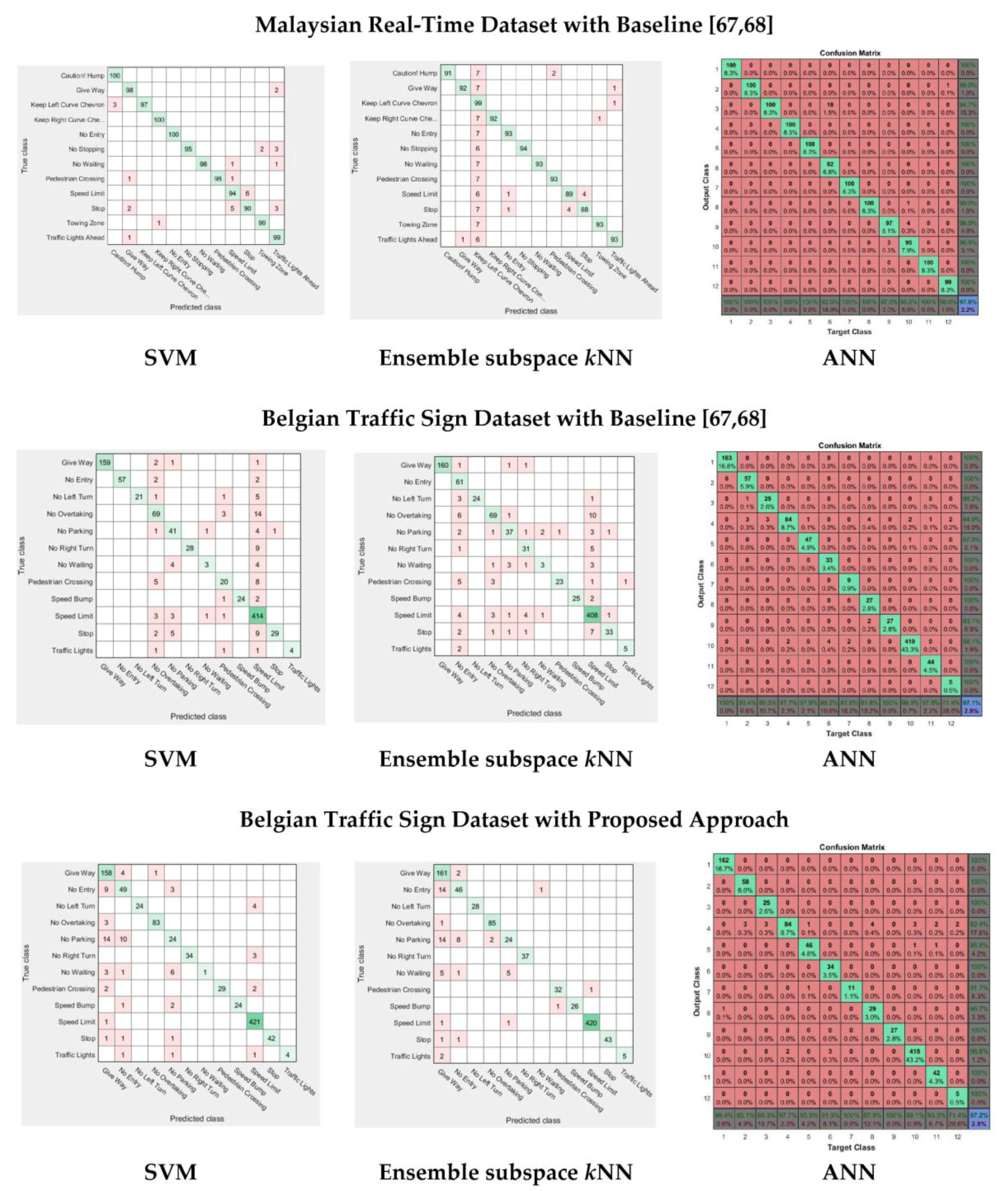

5. Discussion

6. Significance of Dataset and Proposed Approach

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Greenhalgh, J.; Mirmehdi, M. Real-time detection and recognition of road traffic signs. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1498–1506.

- Hengstler, M.; Enkel, E.; Duelli, S. Applied artificial intelligence and trust—The case of autonomous vehicles and medical assistance devices. Technol. Forecast. Soc. Chang. 2016, 105, 105–120.

- Stewart, B.T.; Yankson, I.K.; Afukaar, F.; Medina, M.C.H.; Cuong, P.V.; Mock, C. Road traffic and other unintentional injuries among travelers to developing countries. Med. Clin. N. Am. 2016, 100, 331–343.

- World Health Organization. Global Status Report on Road Safety: Time for Action; WHO: Geneva, Switzerland, 2009.

- Sarani, R.; Rahim, S.A.S.M.; Voon, W.S. Predicting Malaysian Road Fatalities for Year 2020. In Proceedings of the 4th International Conference on Road Safety and Simulations (RSS2013), Rome, Italy, 22–25 October 2013; pp. 23–25.

- De La Escalera, A.; Moreno, L.E.; Salichs, M.A.; Armingol, J.M. Road traffic sign detection and classification. IEEE Trans. Ind. Electron. 1997, 44, 848–859.

- Malik, R.; Khurshid, J.; Ahmad, S.N. Road sign detection and recognition using colour segmentation, shape analysis and template matching. In Proceedings of the 2007 International Conference on Machine Learning and Cybernetics, Hong Kong, China, 19–22 August 2007; pp. 3556–3560.

- Lim, K.H.; Seng, K.P.; Ang, L.-M. MIMO Lyapunov theory-based RBF neural classifier for traffic sign recognition. Appl. Comput. Intell. Soft Comput. 2012, 2012, 7.

- Saha, S.K.; Chakraborty, D.; Bhuiyan, A.A. Neural network based road sign recognition. Int. J. Comput. Appl. 2012, 50, 35–41.

- Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. Man vs. Computer: Benchmarking machine learning algorithms for traffic sign recognition. Neural Netw. 2012, 32, 323–332.

- Yamamoto, J.; Karungaru, S.; Terada, K. Japanese road signs recognition using neural networks. In Proceedings of the SICE Annual Conference, Nagoya, Japan, 14–17 September 2013; pp. 1144–1150.

- Miah, M.B.A. A real time road sign recognition using neural network. Int. J. Comput. Appl. 2015, 114, 1–5.

- Li, Y.; Mogelmose, A.; Trivedi, M.M. Pushing the “speed limit”: Towards high-accuracy U.S. Traffic sign recognition with convolutional neural networks. IEEE Trans. Intell. Veh. 2016, 1, 167–176.

- Islam, K.; Raj, R. Real-time (vision-based) road sign recognition using an artificial neural network. Sensors 2017, 17, 853.

- Wali, S.B.; Hannan, M.A.; Hussain, A.; Samad, S.A. An automatic traffic sign detection and recognition system based on colour segmentation, shape matching, and SVM. Math. Probl. Eng. 2015, 2015, 11.

- Pazhoumand-Dar, H.; Yaghobi, M. DTBSVMs: A New Approach for Road Sign Recognition. In Proceedings of the 2010 2nd International Conference on Computational Intelligence, Communication Systems and Networks, Liverpool, UK, 28–30 July 2010; pp. 314–319.

- Maldonado-Bascon, S.; Lafuente-Arroyo, S.; Gil-Jimenez, P.; Gomez-Moreno, H.; Lopez-Ferreras, F. Road-sign detection and recognition based on support vector machines. IEEE Trans. Intell. Transp. Syst. 2007, 8, 264–278.

- Wei, L.; Chen, X.; Duan, B.; Hui, D.; Pengyu, F.; Yuan, H.; Zhao, H. A system for road sign detection, recognition and tracking based on multi-cues hybrid. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 562–567.

- Min, K.i.; Oh, J.S.; Kim, B.W. Traffic sign extract and recognition on unmanned vehicle using image processing based on support vector machine. In Proceedings of the 2011 11th International Conference on Control, Automation and Systems, Gyeonggi-do, Korea, 26–29 October 2011; pp. 750–753.

- Liu, C.; Chang, F.; Chen, Z. High performance traffic sign recognition based on sparse representation and SVM classification. In Proceedings of the 2014 10th International Conference on Natural Computation (ICNC), Xiamen, China, 19–21 August 2014; pp. 108–112.

- Mammeri, A.; Khiari, E.H.; Boukerche, A. Road-sign text recognition architecture for intelligent transportation systems. In Proceedings of the 2014 IEEE 80th Vehicular Technology Conference (VTC2014-Fall), Vancouver, BC, Canada, 14–17 September 2014; pp. 1–5.

- García-Garrido, M.A.; Ocaña, M.; Llorca, D.F.; Arroyo, E.; Pozuelo, J.; Gavilán, M. Complete vision-based traffic sign recognition supported by an I2V communication system. Sensors 2012, 12, 1148–1169.

- Gu, Y.; Yendo, T.; Tehrani, M.P.; Fujii, T.; Tanimoto, M. A new vision system for traffic sign recognition. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 7–12.

- Yin, S.; Ouyang, P.; Liu, L.; Guo, Y.; Wei, S. Fast traffic sign recognition with a rotation invariant binary pattern based feature. Sensors 2015, 15, 2161–2180.

- Mathias, M.; Timofte, R.; Benenson, R.; Van Gool, L. Traffic sign recognition—How far are we from the solution? In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–8.

- Vitabile, S.; Pollaccia, G.; Pilato, G.; Sorbello, F. Road signs recognition using a dynamic pixel aggregation technique in the HSV color space. In Proceedings of the 11th International Conference on Image Analysis and Processing, Palermo, Italy, 26–28 September 2001; pp. 572–577.

- Ohgushi, K.; Hamada, N. Traffic sign recognition by bags of features. In Proceedings of the TENCON 2009—2009 IEEE Region 10 Conference, Singapore, 23–26 January 2009; pp. 1–6.

- Kang, D.S.; Griswold, N.C.; Kehtarnavaz, N. An invariant traffic sign recognition system based on sequential color processing and geometrical transformation. In Proceedings of the IEEE Southwest Symposium on Image Analysis and Interpretation, Dallas, TX, USA, 21–24 April 1994; pp. 88–93.

- Fleyeh, H. Traffic sign recognition without color information. In Proceedings of the 2015 Colour and Visual Computing Symposium (CVCS), Gjovik, Norway, 25–26 August 2015; pp. 1–6.

- Zeng, Y.; Lan, J.; Ran, B.; Wang, Q.; Gao, J. Restoration of motion-blurred image based on border deformation detection: A traffic sign restoration model. PLoS ONE 2015, 10, e0120885.

- Wu, J.; Si, M.; Tan, F.; Gu, C. Real-time automatic road sign detection. In Proceedings of the Fifth International Conference on Image and Graphics (ICIG ’09), Xi’an, China, 20–23 September 2009; pp. 540–544.

- Belaroussi, R.; Foucher, P.; Tarel, J.P.; Soheilian, B.; Charbonnier, P.; Paparoditis, N. Road sign detection in images: A case study. In Proceedings of the 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 484–488.

- Shoba, E.; Suruliandi, A. Performance analysis on road sign detection, extraction and recognition techniques. In Proceedings of the 2013 International Conference on Circuits, Power and Computing Technologies (ICCPCT), Nagercoil, India, 20–21 March 2013; pp. 1167–1173.

- Lai, C.H.; Yu, C.C. An efficient real-time traffic sign recognition system for intelligent vehicles with smart phones. In Proceedings of the 2010 International Conference on Technologies and Applications of Artificial Intelligence, Hsinchu, Taiwan, 18–20 November 2010; pp. 195–202.

- Virupakshappa, K.; Han, Y.; Oruklu, E. Traffic sign recognition based on prevailing bag of visual words representation on feature descriptors. In Proceedings of the 2015 IEEE International Conference on Electro/Information Technology (EIT), Dekalb, IL, USA, 21–23 May 2015; pp. 489–493.

- Shams, M.M.; Kaveh, H.; Safabakhsh, R. Traffic sign recognition using an extended bag-of-features model with spatial histogram. In Proceedings of the 2015 Signal Processing and Intelligent Systems Conference (SPIS), Tehran, Iran, 16–17 December 2015; pp. 189–193.

- Lin, C.-C.; Wang, M.-S. Road sign recognition with fuzzy adaptive pre-processing models. Sensors 2012, 12, 6415–6433.

- Lin, Y.T.; Chou, T.; Vinay, M.S.; Guo, J.I. Algorithm derivation and its embedded system realization of speed limit detection for multiple countries. In Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS), Montreal, QC, Canada, 22–25 May 2016; pp. 2555–2558.

- Continental. Traffic Sign Recognition. Available online: http://www.conti-online.com/generator/www/de/en/continental/automotive/general/chassis/safety/hidden/verkehrszeichenerkennung_en.html (accessed on 20 April 2017).

- Choi, Y.; Han, S.I.; Kong, S.-H.; Ko, H. Driver status monitoring systems for smart vehicles using physiological sensors: A safety enhancement system from automobile manufacturers. IEEE Signal Process. Mag. 2016, 33, 22–34.

- Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.Z.; Langer, D.; Pink, O.; Pratt, V. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 163–168.

- Markoff, J. Google Cars Drive Themselves, in Traffic. The New York Times, 9 October 2010.

- Guardian News and Media Limited. Google’s self-driving car in broadside collision after other car jumps red light. The Guardian, 26 September 2016.

- The Tesla Team. All Tesla Cars Being Produced Now Have Full Self-Driving Hardware. Available online: https://www.tesla.com/blog/all-tesla-cars-being-produced-now-have-full-self-driving-hardware (accessed on 19 October 2016).

- Nixon, M.S.; Aguado, A.S. Feature Extraction and Image Processing for Computer Vision; Academic Press: Cambridge, MA, USA, 2012.

- Ce, L.; Yaling, H.; Limei, X.; Lihua, T. Salient traffic sign recognition based on sparse representation of visual perception. In Proceedings of the 2012 International Conference on Computer Vision in Remote Sensing, Xiamen, China, 16–18 December 2012; pp. 273–278.

- Xu, R.; Hirano, Y.; Tachibana, R.; Kido, S. Classification of diffuse lung disease patterns on high-resolution computed tomography by a bag of words approach. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2011; Fichtinger, G., Martel, A., Peters, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6893, Part III; pp. 183–190.

- Fei-Fei, L.; Perona, P. A bayesian hierarchical model for learning natural scene categories. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 524–531.

- Qiu, G. Indexing chromatic and achromatic patterns for content-based colour image retrieval. Pattern Recognit. 2002, 35, 1675–1686.

- Cordeiro de Amorim, R.; Mirkin, B. Minkowski metric, feature weighting and anomalous cluster initializing in k-means clustering. Pattern Recognit. 2012, 45, 1061–1075.

- Danielsson, P.-E. Euclidean distance mapping. Comput. Graph. Image Process. 1980, 14, 227–248.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Fernández-Delgado, M.; Cernadas, E.; Barro, S.; Amorim, D. Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 2014, 15, 3133–3181.

- Cristianini, N.; Shawe-Taylor, J. An Introduction to SVM; Cambridge University Press: Cambridge, UK, 1999.

- Gul, A.; Perperoglou, A.; Khan, Z.; Mahmoud, O.; Miftahuddin, M.; Adler, W.; Lausen, B. Ensemble of a subset of kNN classifiers. Adv. Data Anal. Classif. 2016, 1–14.

- Shin-ike, K. A two phase method for determining the number of neurons in the hidden layer of a 3-layer neural network. In Proceedings of the SICE Annual Conference 2010, Taipei, Taiwan, 18–21 August 2010; pp. 238–242.

- García-Garrido, M.A.; Ocaña, M.; Llorca, D.F.; Sotelo, M.A.; Arroyo, E.; Llamazares, A. Robust traffic signs detection by means of vision and V2I communications. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1003–1008.

- Bui-Minh, T.; Ghita, O.; Whelan, P.F.; Hoang, T. A robust algorithm for detection and classification of traffic signs in video data. In Proceedings of the International Conference on Control, Automation and Information Sciences (ICCAIS), Ho Chi Minh, Vietnam, 26–29 November 2012; pp. 108–113.

- Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Search; Technical Report SFI-TR-95-02-010; The Santa Fe Institute: Santa Fe, NM, USA, 1995.

- Burges, C.J. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 1998, 2, 121–167.

- Mujtaba, G.; Shuib, L.; Raj, R.G.; Rajandram, R.; Shaikh, K.; Al-Garadi, M.A. Automatic ICD-10 multi-class classification of cause of death from plaintext autopsy reports through expert-driven feature selection. PLoS ONE 2017, 12, e0170242.

- Mujtaba, G.; Shuib, L.; Raj, R.G.; Rajandram, R.; Shaikh, K. Automatic text classification of ICD-10 related CoD from complex and free text forensic autopsy reports. In Proceedings of the 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016; pp. 1055–1058.

- Siddiqui, M.F.; Reza, A.W.; Kanesan, J. An automated and intelligent medical decision support system for brain MRI scans classification. PLoS ONE 2015, 10, e0135875.

- Domeniconi, C.; Yan, B. Nearest neighbor ensemble. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR 2004), Cambridge, UK, 26 August 2004; pp. 228–231.

- Malek, S.; Salleh, A.; Baba, M.S. A comparison between neural network based and fuzzy logic models for chlorophyll-a estimation. In Proceedings of the Second International Conference on Computer Engineering and Applications (ICCEA), Bali Island, Indonesia, 19–21 March 2010; pp. 340–343.

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial neural networks: A tutorial. Computer 1996, 29, 31–44.

- Abedin, M.Z.; Dhar, P.; Deb, K. Traffic sign recognition using hybrid features descriptor and artificial neural network classifier. In Proceedings of the 19th International Conference on Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 18–20 December 2016; pp. 457–462.

- Ellahyani, A.; El Ansari, M.; El Jaafari, I. Traffic sign detection and recognition based on random forests. Appl. Soft Comput. 2016, 46, 805–815.

| Number of Hidden Neurons | Iterations | Training Time (h:mm:ss) | Performance | Gradient | Cross-Entropy Error | Percentage Error (%E) |
|---|---|---|---|---|---|---|
| 10 | 152 | 0:00:01 | 0.0174 | 0.0237 | 0.4874 | 10.5833 |
| 20 | 112 | 0:00:01 | 0.00428 | 0.0143 | 0.1905 | 4.7500 |
| 30 | 123 | 0:00:01 | 0.00322 | 0.0126 | 0.1568 | 3.9167 |
| 40 | 115 | 0:00:01 | 0.00239 | 0.00758 | 0.4729 | 2.8333 |
| 50 | 115 | 0:00:01 | 0.00363 | 0.00931 | 0.1484 | 3.6667 |
| 60 | 101 | 0:00:01 | 0.00627 | 0.0212 | 0.1912 | 3.6667 |
| 70 | 98 | 0:00:01 | 0.00348 | 0.0177 | 0.1647 | 4.0000 |
| 80 | 102 | 0:00:01 | 0.00304 | 0.0110 | 0.1388 | 3.7500 |
| 90 | 136 | 0:00:01 | 0.00192 | 0.00739 | 0.3469 | 2.4167 |
| 100 | 132 | 0:00:01 | 0.00234 | 0.00740 | 0.2021 | 1.0000 |
| 110 | 115 | 0:00:02 | 0.00197 | 0.00492 | 0.1935 | 1.4167 |
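If %E is read as the percentage of misclassified samples (the usual convention of neural-network training tools, assumed here because the defining text is missing from this extract), classification accuracy is 100 − %E. The short calculation below re-derives the hidden-layer choice from the tabulated values: the lowest %E (1.0000) occurs at 100 hidden neurons, i.e. 99.00% accuracy, which matches the accuracy reported for the proposed method in the comparison table. It also shows that ranking by cross-entropy error instead would favour 80 neurons (0.1388), so the selected size depends on which criterion is used.

```python
# (hidden neurons, cross-entropy error, %E) transcribed from the table above
results = [
    (10, 0.4874, 10.5833), (20, 0.1905, 4.7500), (30, 0.1568, 3.9167),
    (40, 0.4729, 2.8333), (50, 0.1484, 3.6667), (60, 0.1912, 3.6667),
    (70, 0.1647, 4.0000), (80, 0.1388, 3.7500), (90, 0.3469, 2.4167),
    (100, 0.2021, 1.0000), (110, 0.1935, 1.4167),
]

by_error = min(results, key=lambda r: r[2])          # lowest misclassification rate
by_cross_entropy = min(results, key=lambda r: r[1])  # lowest cross-entropy error
accuracy = 100.0 - by_error[2]                       # accuracy = 100 - %E

print(f"lowest %E: {by_error[0]} neurons, accuracy {accuracy:.2f}%")
# lowest %E: 100 neurons, accuracy 99.00%
print(f"lowest cross-entropy: {by_cross_entropy[0]} neurons")
# lowest cross-entropy: 80 neurons
```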

| Reference | Overall Accuracy (%) | Processing Time (s) |
|---|---|---|
| [1] | 97.60 | - |
| [15] | 95.71 | 0.43 |
| [17] | 93.60 | - |
| [24] | 98.62 | 0.36 |
| [35] | 95.20 | - |
| [37] | 92.47 | - |
| [57] | 90.27 | 0.35 |
| [58] | 86.70 | - |
| Proposed method | 99.00 | 0.28 |

| Database | Classifier | Baseline [67,68] Accuracy (%) | Proposed Approach Accuracy (%) |
|---|---|---|---|
| Malaysian Real-Time Dataset | SVM | 97.30 | 92.30 |
| | Ensemble subspace kNN | 92.50 | 92.70 |
| | ANN | 97.80 | 99.00 |
| Belgian Traffic Sign Dataset [25] | SVM | 89.80 | 92.30 |
| | Ensemble subspace kNN | 90.80 | 93.70 |
| | ANN | 97.10 | 97.20 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Islam, K.T.; Raj, R.G.; Mujtaba, G. Recognition of Traffic Sign Based on Bag-of-Words and Artificial Neural Network. Symmetry 2017, 9, 138. https://doi.org/10.3390/sym9080138

Islam KT, Raj RG, Mujtaba G. Recognition of Traffic Sign Based on Bag-of-Words and Artificial Neural Network. Symmetry. 2017; 9(8):138. https://doi.org/10.3390/sym9080138

Chicago/Turabian Style: Islam, Kh Tohidul, Ram Gopal Raj, and Ghulam Mujtaba. 2017. "Recognition of Traffic Sign Based on Bag-of-Words and Artificial Neural Network" Symmetry 9, no. 8: 138. https://doi.org/10.3390/sym9080138