Assessing Tenth-Grade Students’ Mathematical Literacy Skills in Solving PISA Problems

Abstract

1. Introduction

2. Research Questions

- (a) What is the level of mathematical literacy among tenth-grade students in the UAE?

- (b) Is there a significant difference in mathematical literacy between male and female students?

3. Literature Review

3.1. Mathematical Literacy Framework in the PISA

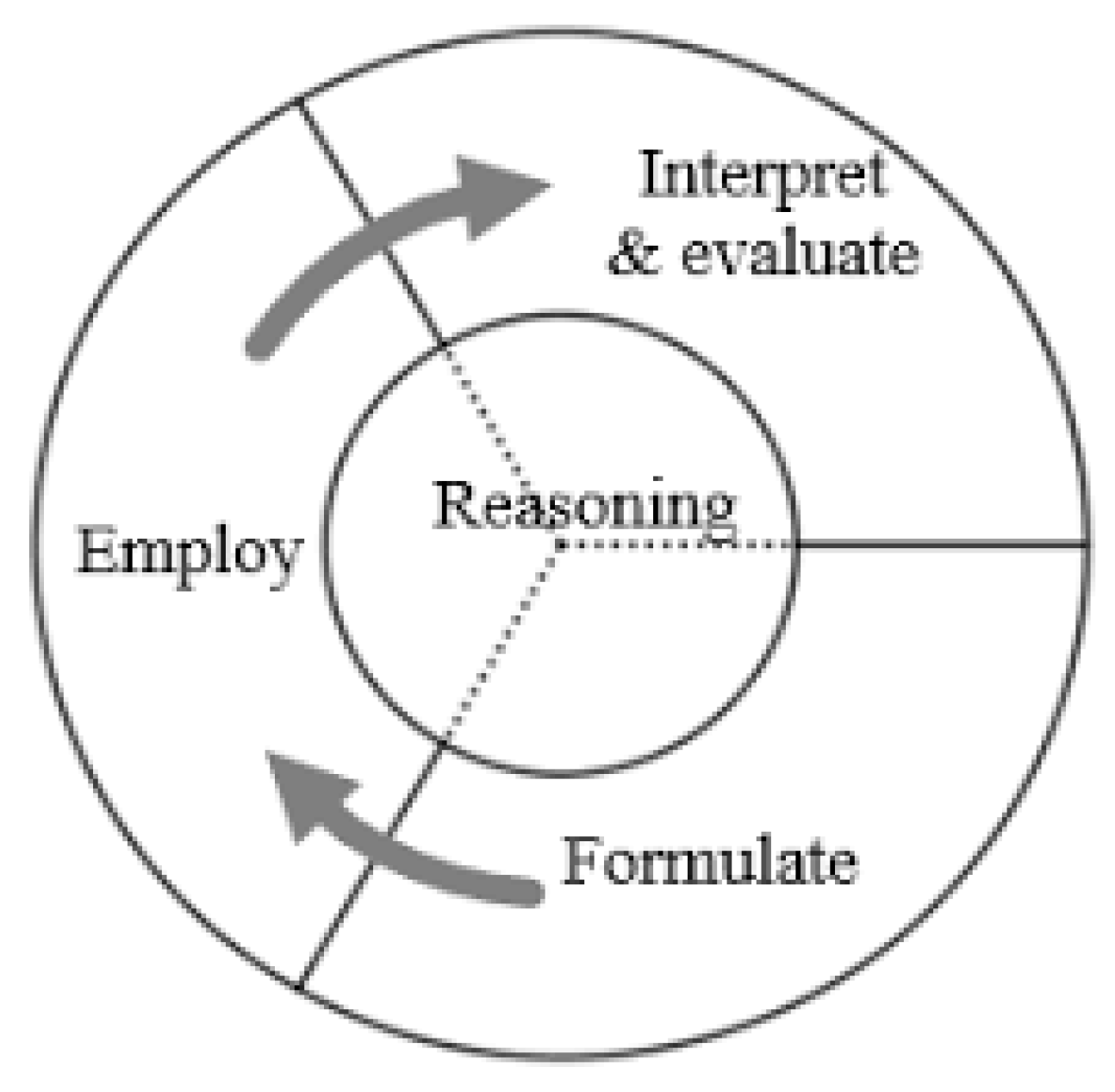

3.2. Processes Involved in Mathematical Modeling Cycle

4. Methodology

4.1. Participants

4.2. The Study Instruments

4.3. Methods of Data Analysis

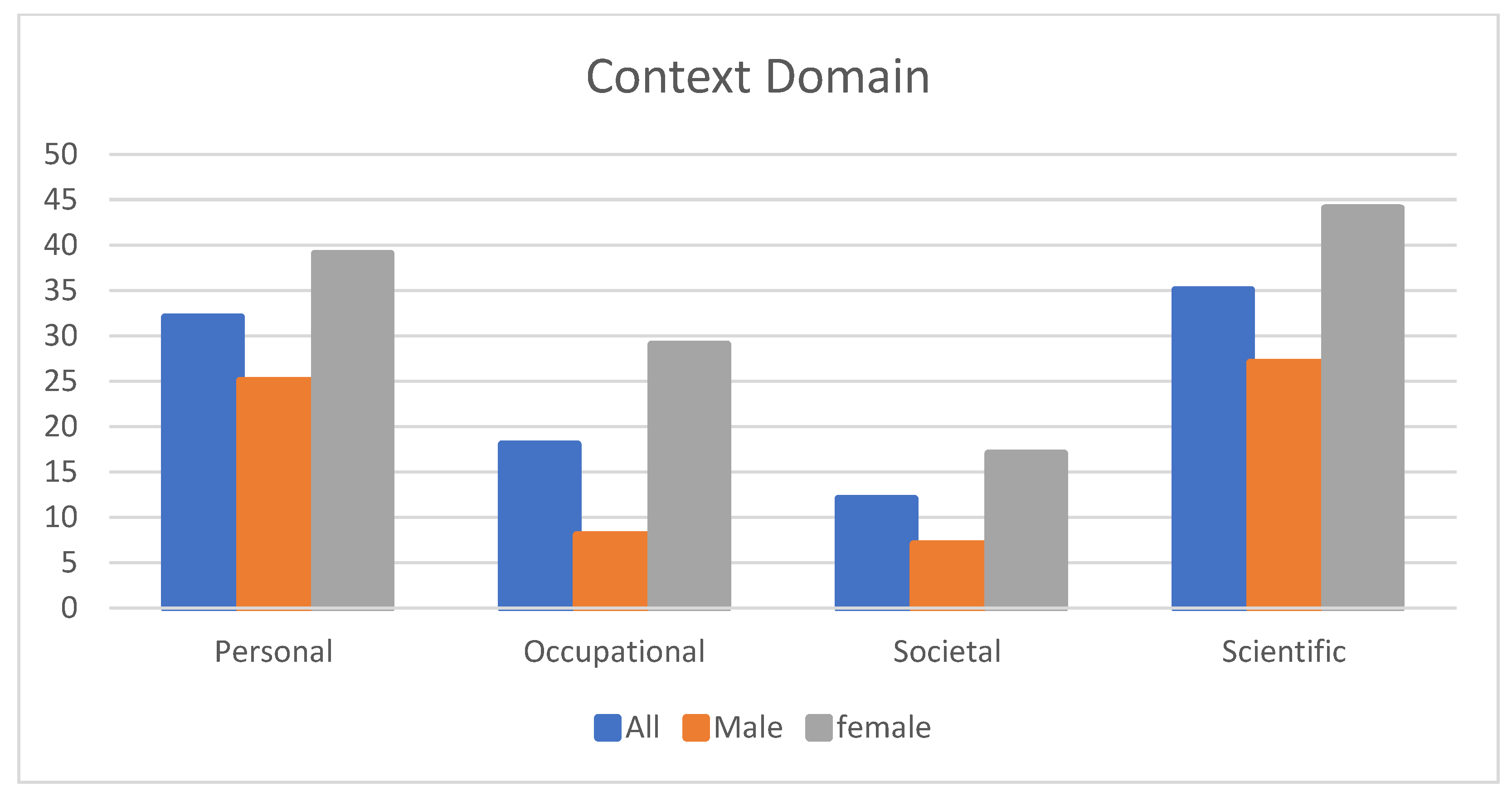

5. Findings

Descriptive Statistics

6. Discussion

7. Conclusions

8. Limitations

Implications and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

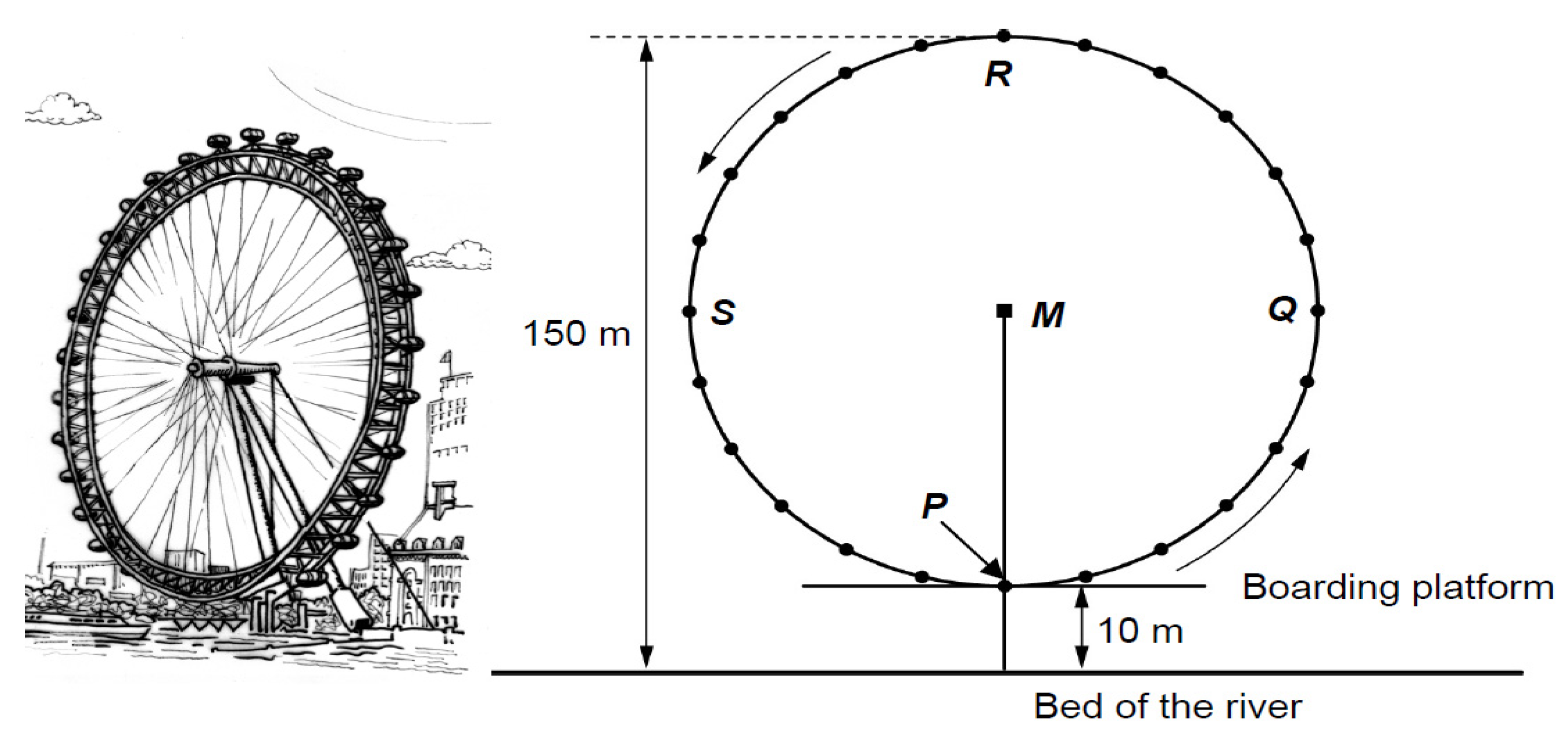

FERRIS WHEEL

Question 1: FERRIS WHEEL (proficiency level: 4, process: employ, content: space and shape, context: societal)

The letter M in the diagram indicates the center of the wheel.

How many meters (m) above the bed of the river is point M?

Answer: ………………………………… m

Question 2: FERRIS WHEEL (proficiency level: 3, process: formulate, content: space and shape, context: societal)

The Ferris wheel rotates at a constant speed. The wheel makes one full rotation in exactly 40 min.

John starts his ride on the Ferris wheel at the boarding point, P.

Where will John be after half an hour?

- (A) At R
- (B) Between R and S
- (C) At S
- (D) Between S and P
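Question 2 turns on a proportion: at constant speed, the fraction of a turn completed equals the elapsed time divided by the rotation period. The Python sketch below (the function name is hypothetical) performs only that arithmetic; translating the resulting angle into one of the labelled positions R, S or P depends on the item's diagram and direction of rotation, which are not reproduced here.

```python
from fractions import Fraction

ROTATION_MINUTES = 40  # one full rotation, from the item stem
RIDE_MINUTES = 30      # "after half an hour"


def fraction_of_rotation(elapsed_min: int, period_min: int) -> Fraction:
    """Fraction of a full turn completed, discarding any whole turns."""
    return Fraction(elapsed_min % period_min, period_min)


turn = fraction_of_rotation(RIDE_MINUTES, ROTATION_MINUTES)
degrees = turn * 360  # angle swept from the boarding point
print(turn, degrees)  # 3/4 270
```

Using `Fraction` keeps the result exact, so three quarters of a turn is reported as 3/4 rather than a rounded decimal.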


| PISA Cycle | Below Level 2 | Level 5 and 6 |
|---|---|---|
| PISA 2009 | 51.3% | 2.9% |
| PISA 2012 | 46.3% | 3.5% |
| PISA 2015 | 48.7% | 3.7% |
| PISA 2018 | 45.5% | 5.4% |

| Variable | Category | Frequency | Percentage % |
|---|---|---|---|
| Age (years) | 14 | 19 | 9% |
| | 15 | 144 | 71% |
| | 16 | 41 | 20% |
| | Total | 204 | 100% |
| Gender | Male | 106 | 52% |
| | Female | 98 | 48% |
| | Total | 204 | 100% |

| Processes | No. | Contents | No. | Contexts | No. |
|---|---|---|---|---|---|
| Formulate | 8 | Quantity | 7 | Personal | 8 |
| Employ | 14 | Space and shape | 8 | Occupational | 4 |
| Interpret | 4 | Change and relationship | 7 | Scientific | 6 |
| | | Uncertainty and data | 4 | Societal | 8 |
| Total | 26 | Total | 26 | Total | 26 |

| Level of Proficiency | No. of Items | Percentage % |
|---|---|---|
| Level 1 and below | 4 | 15% |
| Level 2 | 3 | 12% |
| Level 3 | 7 | 27% |
| Level 4 | 5 | 19% |
| Level 5 | 4 | 15% |
| Level 6 | 3 | 12% |
| Total | 26 | 100% |

| Score Interval | Criterion |
|---|---|
| X > X̄ + 1.5 SDx | Very High |
| X̄ + 0.5 SDx < X ≤ X̄ + 1.5 SDx | High |
| X̄ − 0.5 SDx < X ≤ X̄ + 0.5 SDx | Average |
| X̄ − 1.5 SDx < X ≤ X̄ − 0.5 SDx | Low |
| X ≤ X̄ − 1.5 SDx | Very Low |
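The criterion places a score in one of five bands according to its distance from a reference mean, measured in SD units. A minimal Python sketch follows. The reference mean of 17 and SD of 34/6 are an assumption: they are back-calculated because they reproduce the cut-offs 8.5, 14.16, 19.83 and 25.5 used for the overall score distribution, and the extracted text does not state them.

```python
def classify(x: float, mean: float, sd: float) -> str:
    """Five-band criterion with cut-offs at mean +/- 0.5 SD and mean +/- 1.5 SD."""
    if x > mean + 1.5 * sd:
        return "Very High"
    if x > mean + 0.5 * sd:
        return "High"
    if x > mean - 0.5 * sd:
        return "Average"
    if x > mean - 1.5 * sd:
        return "Low"
    return "Very Low"


# Assumption: mean 17 and SD 34/6 (inferred, not stated in the source)
# reproduce the published cut-offs for the overall test score.
MEAN, SD = 17.0, 34 / 6
cutoffs = [MEAN + k * SD for k in (-1.5, -0.5, 0.5, 1.5)]
print([round(c, 2) for c in cutoffs])  # [8.5, 14.17, 19.83, 25.5]
print(classify(7.90, MEAN, SD))        # Very Low
```

The sketch rounds the second cut-off to 14.17, whereas the published value is 14.16 (apparently truncated); for whole-number scores, both place every score in the same band.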

| Description | All | Male | Female |
|---|---|---|---|
| Mean | 7.90 | 5.53 | 10.47 |
| Standard Deviation | 4.02 | 2.32 | 3.89 |
| Maximum Score | 20 | 11 | 20 |
| Minimum Score | 2 | 2 | 5 |
| No. of Students | 204 | 106 | 98 |
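Because the All column pools the two gender groups, its mean and SD can be rebuilt from the subgroup summaries: the total sum of squares is the within-group sum of squares plus the between-group sum of squares. A minimal Python sketch; the function name is hypothetical, and the male SD is taken as 2.32, the value reported in the t-test table.

```python
import math


def combine_groups(groups):
    """Pool (n, mean, sd) summaries into an overall n, mean and sample SD."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Within-group SS, (n - 1) * sd^2, plus between-group SS, n * (m - grand_mean)^2.
    ss = sum((n - 1) * sd ** 2 + n * (m - grand_mean) ** 2 for n, m, sd in groups)
    return n_total, grand_mean, math.sqrt(ss / (n_total - 1))


male = (106, 5.53, 2.32)
female = (98, 10.47, 3.89)
n, mean, sd = combine_groups([male, female])
print(n, round(mean, 2), round(sd, 2))  # 204 7.9 4.02
```

With these inputs, the pooled figures match the All column (n = 204, mean 7.90, SD 4.02).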

| Score Interval | Criterion | All (F) | All (%) | Male (F) | Male (%) | Female (F) | Female (%) |
|---|---|---|---|---|---|---|---|
| X > 25.5 | Very High | 0 | 0% | 0 | 0% | 0 | 0% |
| 19.83 < X ≤ 25.5 | High | 2 | 1% | 0 | 0% | 2 | 2% |
| 14.16 < X ≤ 19.83 | Average | 14 | 7% | 0 | 0% | 14 | 14% |
| 8.5 < X ≤ 14.16 | Low | 62 | 30% | 12 | 11% | 50 | 51% |
| X ≤ 8.5 | Very Low | 126 | 62% | 94 | 89% | 32 | 33% |
| Total | | 204 | 100% | 106 | 100% | 98 | 100% |
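The percentage columns are shares within each group, and each All frequency is the sum of the Male and Female frequencies in that row. The Python sketch below recomputes both from the male and female counts alone; the variable and function names are illustrative.

```python
# Frequencies per band, ordered Very High, High, Average, Low, Very Low.
MALE = [0, 0, 0, 12, 94]
FEMALE = [0, 2, 14, 50, 32]


def column_percentages(freqs):
    """Within-group percentage for each band, rounded to whole numbers."""
    total = sum(freqs)
    return [round(100 * f / total) for f in freqs]


all_students = [m + f for m, f in zip(MALE, FEMALE)]
for name, freqs in [("All", all_students), ("Male", MALE), ("Female", FEMALE)]:
    print(name, sum(freqs), column_percentages(freqs))
```

Rounded percentages need not sum to exactly 100 in general. Here, each group happens to total 100.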

| Domain | Subdomain (No. of Items) | Average | Standard Deviation | Max. Score | Category |
|---|---|---|---|---|---|
| Proficiency Levels | Level 1 (4) | 2.65 | 1.16 | 4 | High |
| | Level 2 (3) | 1.58 | 0.95 | 3 | Average |
| | Level 3 (7) | 1.84 | 1.37 | 6 | Low |
| | Level 4 (5) | 0.46 | 0.74 | 3 | V. Low |
| | Level 5 (4) | 0.21 | 0.53 | 3 | V. Low |
| | Level 6 (3) | 0.06 | 0.31 | 2 | V. Low |
| Content | Quantity (7) | 1.79 | 1.28 | 6 | Low |
| | Change and relationship (7) | 1.02 | 0.91 | 4 | V. Low |
| | Space and shape (8) | 1.30 | 1.07 | 4 | V. Low |
| | Uncertainty and data (4) | 2.67 | 1.20 | 4 | High |
| Process | Formulation (8) | 1.25 | 1.19 | 6 | V. Low |
| | Employing (14) | 2.89 | 1.85 | 10 | V. Low |
| | Interpreting (4) | 2.67 | 1.20 | 4 | High |
| | Reasoning (8) | 1.11 | 0.97 | 4 | V. Low |
| Context | Personal (8) | 2.54 | 1.39 | 6 | Low |
| | Occupational (4) | 0.73 | 0.76 | 3 | V. Low |
| | Societal (8) | 2.82 | 1.43 | 4 | Low |
| | Scientific (6) | 0.71 | 0.97 | 6 | V. Low |
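The Category column appears to apply the same five-band criterion to each subdomain, using an ideal mean of n/2 and an ideal SD of n/6 for a subdomain with n items (that is, a 0 to n score range). This rule is inferred from the reported values and is not stated in the extracted text. The Python sketch below, with hypothetical function names, checks the inferred rule against every row.

```python
def classify(x: float, mean: float, sd: float) -> str:
    """Five-band criterion with cut-offs at mean +/- 0.5 SD and mean +/- 1.5 SD."""
    if x > mean + 1.5 * sd:
        return "Very High"
    if x > mean + 0.5 * sd:
        return "High"
    if x > mean - 0.5 * sd:
        return "Average"
    if x > mean - 1.5 * sd:
        return "Low"
    return "Very Low"


def subdomain_category(average: float, n_items: int) -> str:
    # Assumed rule (inferred, not stated): ideal mean n/2, ideal SD n/6.
    return classify(average, n_items / 2, n_items / 6)


# (subdomain, no. of items, reported average, reported category)
ROWS = [
    ("Level 1", 4, 2.65, "High"), ("Level 2", 3, 1.58, "Average"),
    ("Level 3", 7, 1.84, "Low"), ("Level 4", 5, 0.46, "V. Low"),
    ("Level 5", 4, 0.21, "V. Low"), ("Level 6", 3, 0.06, "V. Low"),
    ("Quantity", 7, 1.79, "Low"), ("Change and relationship", 7, 1.02, "V. Low"),
    ("Space and shape", 8, 1.30, "V. Low"), ("Uncertainty and data", 4, 2.67, "High"),
    ("Formulation", 8, 1.25, "V. Low"), ("Employing", 14, 2.89, "V. Low"),
    ("Interpreting", 4, 2.67, "High"), ("Reasoning", 8, 1.11, "V. Low"),
    ("Personal", 8, 2.54, "Low"), ("Occupational", 4, 0.73, "V. Low"),
    ("Societal", 8, 2.82, "Low"), ("Scientific", 6, 0.71, "V. Low"),
]

# Normalize the table's "V. Low" abbreviation before comparing.
mismatches = [
    name for name, n, avg, reported in ROWS
    if subdomain_category(avg, n) != reported.replace("V. ", "Very ")
]
print(len(ROWS), "rows checked, mismatches:", mismatches)
```

Under this inferred rule, all 18 reported categories are reproduced.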

| Variable | Male M (n = 106) | Male SD | Female M (n = 98) | Female SD | t | df | p |
|---|---|---|---|---|---|---|---|
| MLT results | 5.53 | 2.32 | 10.47 | 3.89 | 11.109 | 202 | < 0.001 |
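The reported df of 202 (= 106 + 98 − 2) matches Student's pooled-variance independent-samples t-test, so the t value can be reproduced from the summary statistics. A minimal Python sketch; the function name is hypothetical, and because the inputs are rounded, t agrees with the published 11.109 to about two decimal places.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, df


t, df = pooled_t(5.53, 2.32, 106, 10.47, 3.89, 98)
print(round(t, 2), df)  # 11.11 202
```

If SciPy is available, `scipy.stats.ttest_ind_from_stats` takes the same summary statistics (with `equal_var=True` for the pooled test) and also returns the p-value.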

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Almarashdi, H.S.; Jarrah, A.M. Assessing Tenth-Grade Students’ Mathematical Literacy Skills in Solving PISA Problems. Soc. Sci. 2023, 12, 33. https://doi.org/10.3390/socsci12010033
