Evolution of Neural Dynamics in an Ecological Model

Abstract

1. Introduction

1.1. Chaos and Its Edge

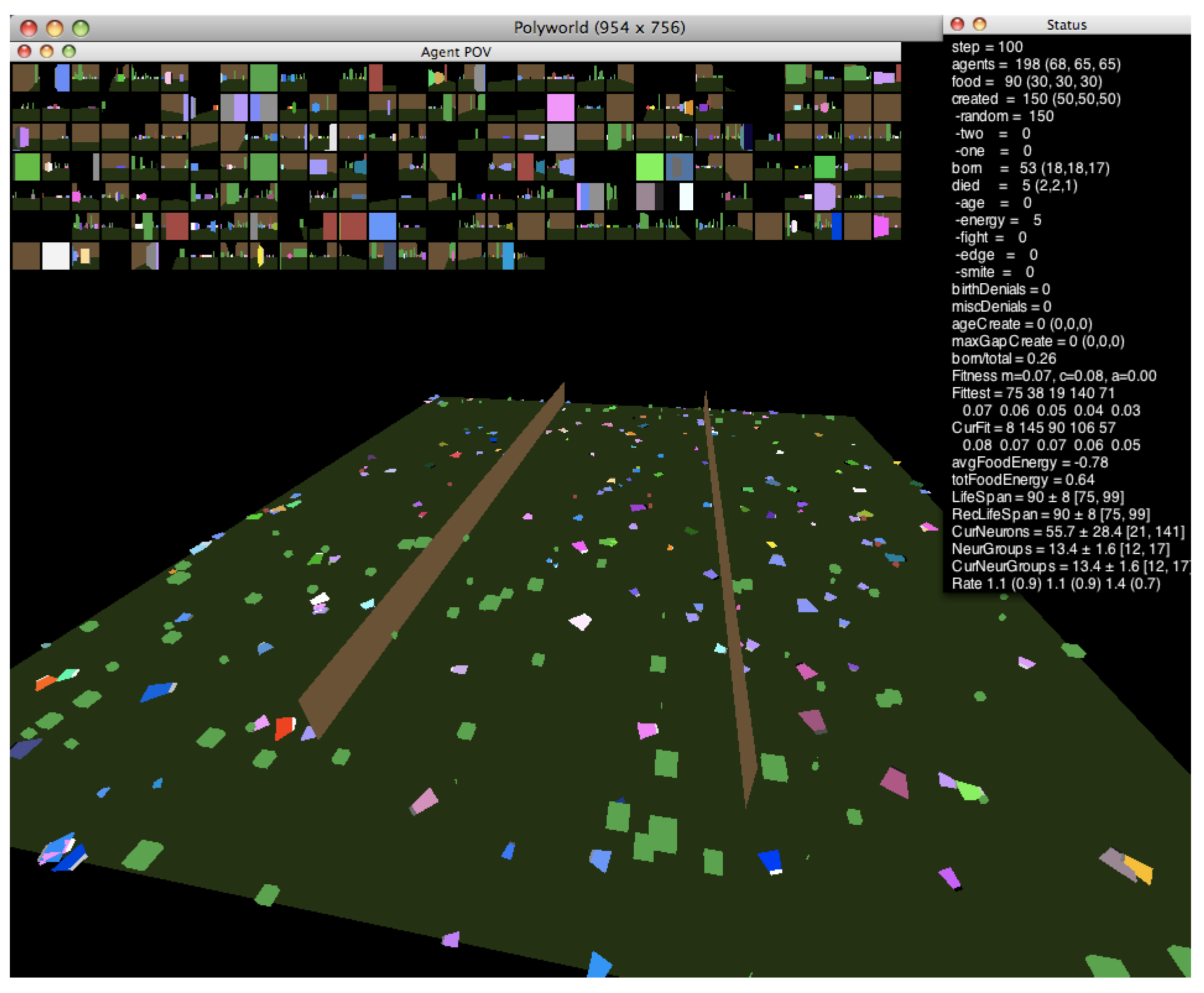

1.2. Polyworld

1.2.1. Behavioral Adaptation

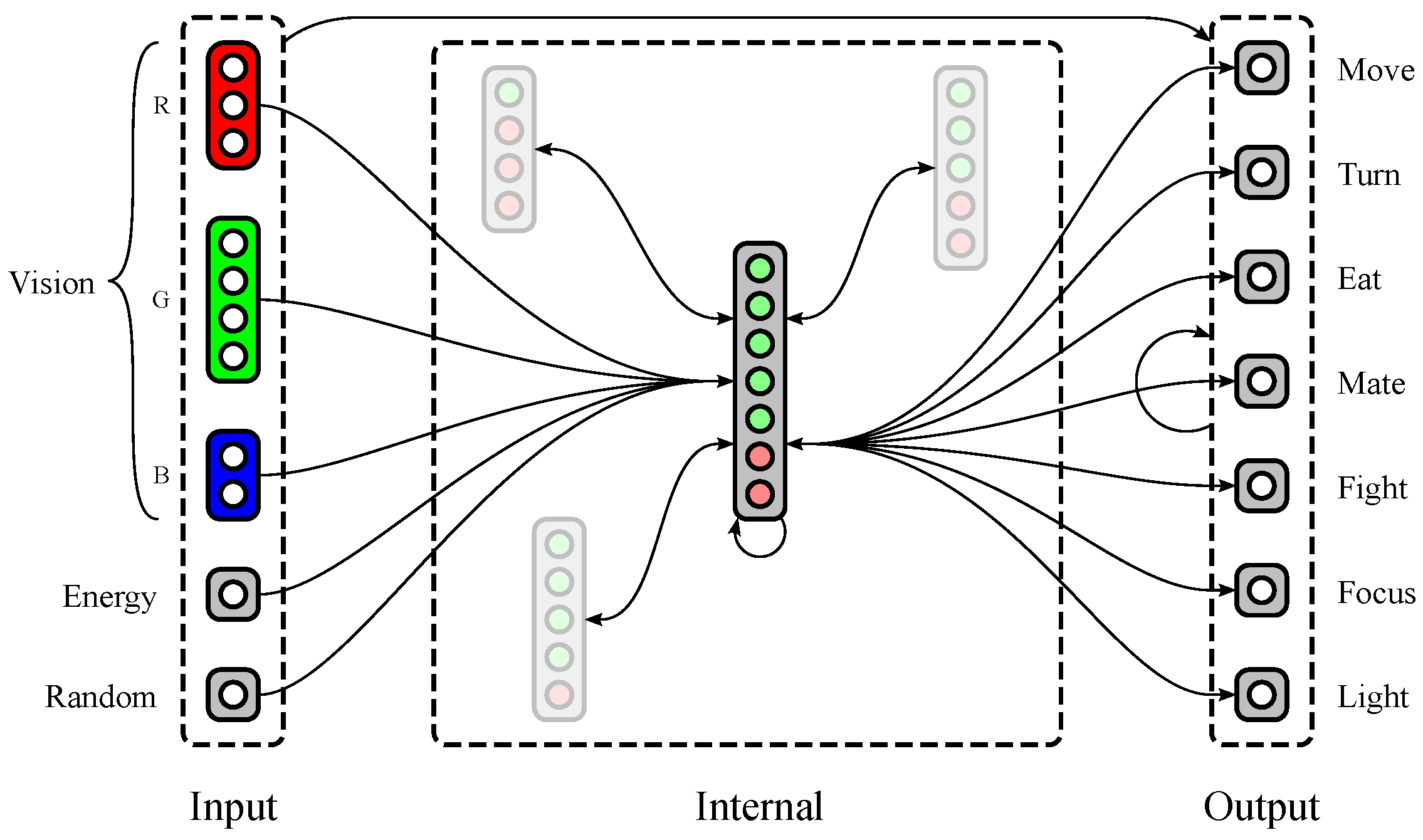

1.2.2. Neural Structure

1.3. Prior Work

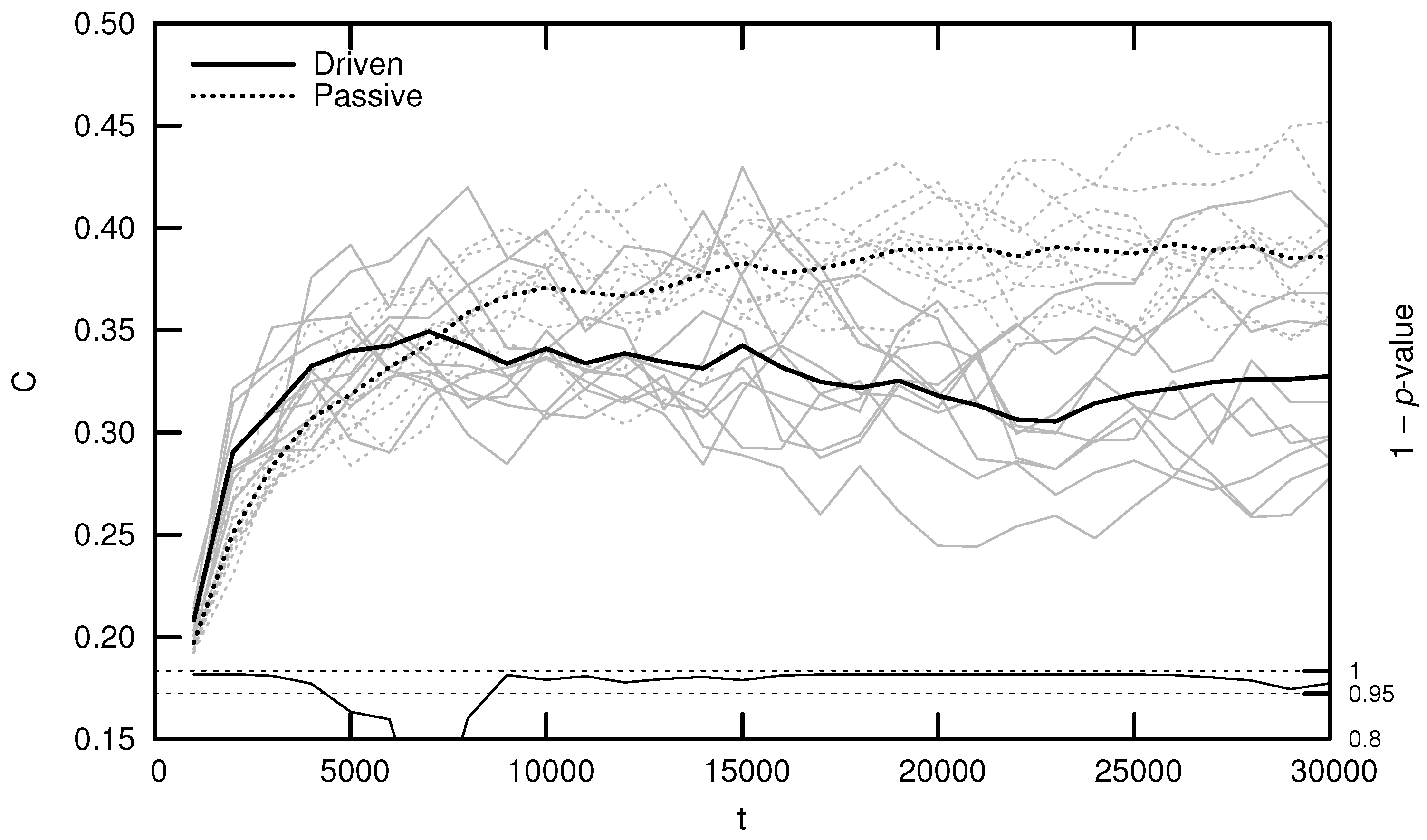

1.3.1. Driven and Passive

1.3.2. Numerical Analysis

1.4. Our Hypothesis

2. Materials and Methods

2.1. Neural Model

2.2. “In Vitro” Analysis

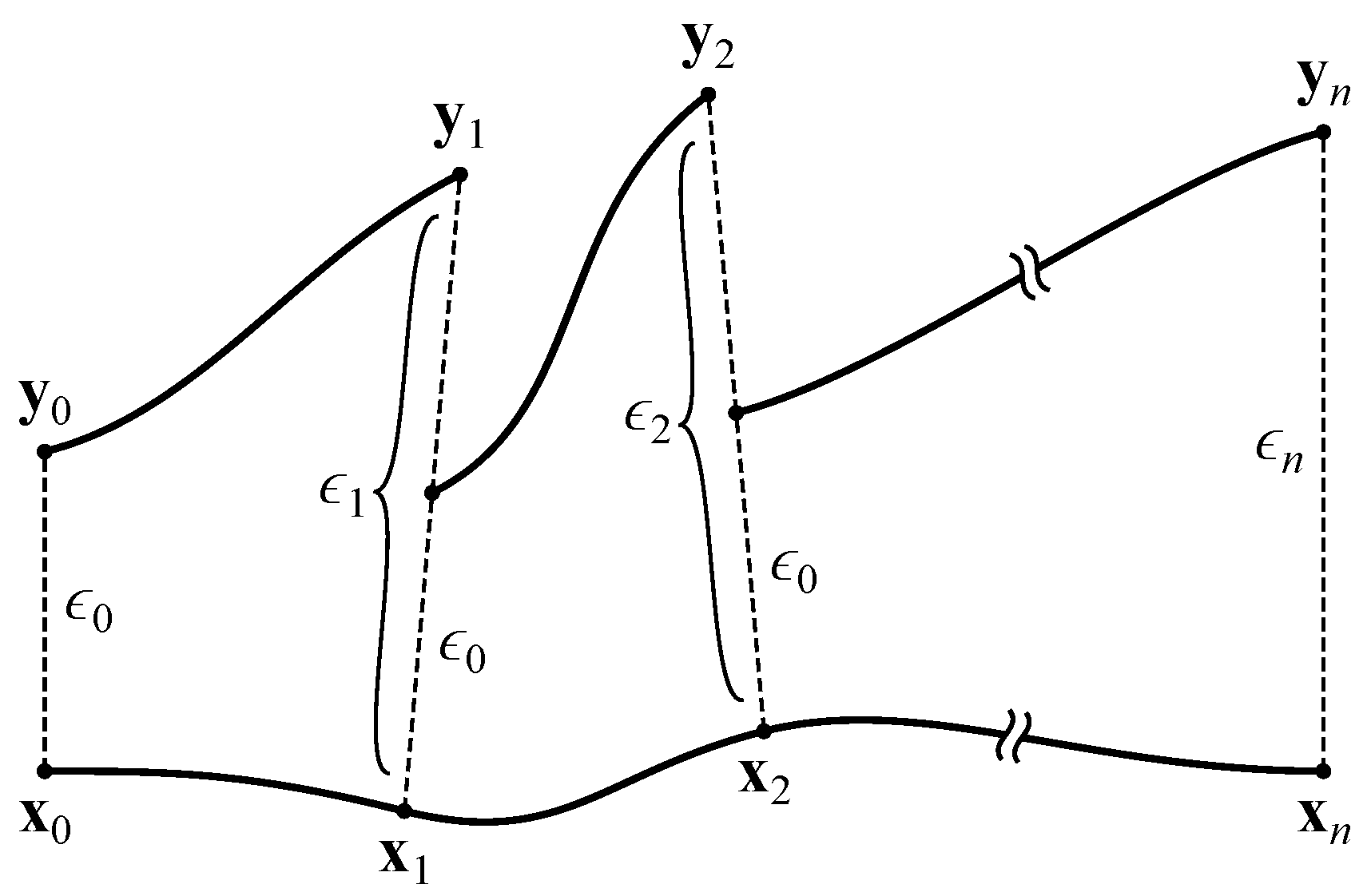

2.3. Maximal Lyapunov Exponent

2.4. Bifurcation Diagrams

2.5. Onset of Criticality

3. Results

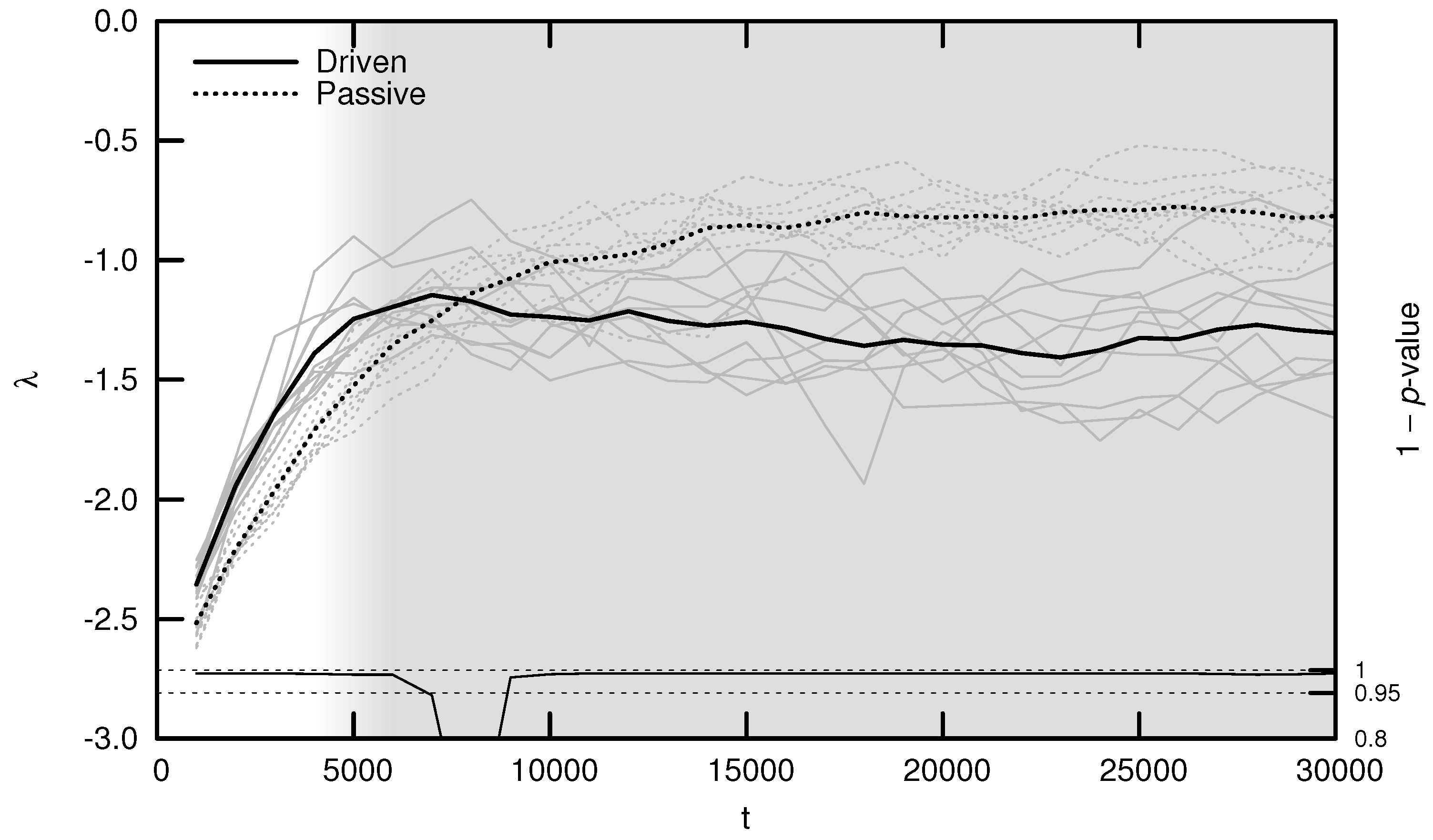

3.1. Maximal Lyapunov Exponent

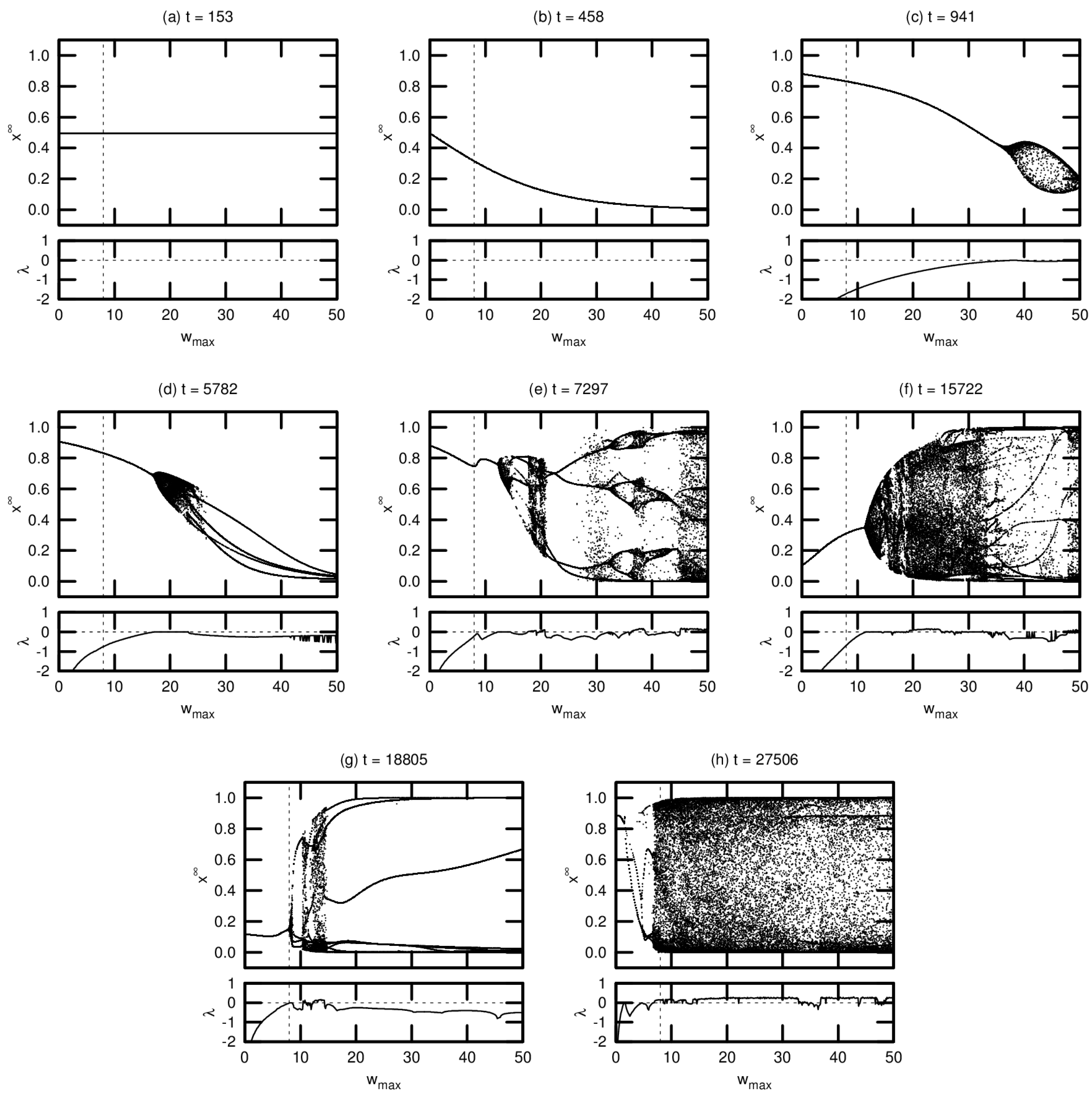

3.2. Bifurcation Diagrams

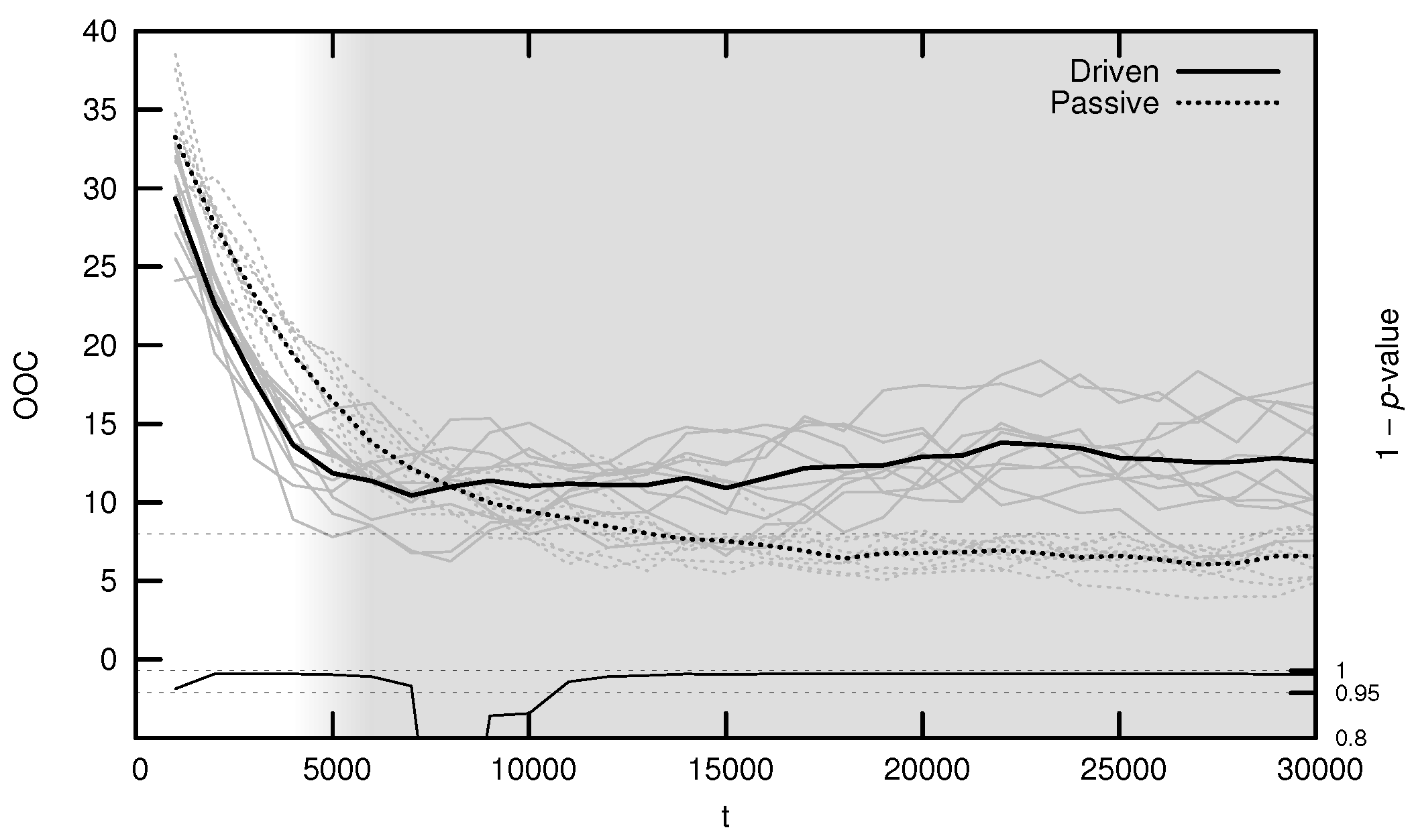

3.3. Onset of Criticality

4. Discussion

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Williams, S.; Yaeger, L. Evolution of Neural Dynamics in an Ecological Model. Geosciences 2017, 7, 49. https://doi.org/10.3390/geosciences7030049
