1. Introduction

Recently, fourth-generation (4G) systems have reached maturity and are evolving into fifth-generation (5G) systems, in which limited amounts of new spectrum can be utilized to meet the stringent demands of users. However, the explosive growth in the number of devices and in data volumes poses critical challenges that require more efficient exploitation of the valuable spectrum. Non-orthogonal multiple access (NOMA) is therefore considered one of the promising candidates for 5G and future cellular network generations [1,2,3].

According to NOMA principles, multiple users are allowed to share time and spectrum resources in the same spatial layer via power-domain multiplexing, in contrast to conventional orthogonal multiple access (OMA) techniques such as frequency-division multiple access (FDMA) and time-division multiple access (TDMA) [4]. Interuser interference can be alleviated by performing successive interference cancellation (SIC) at the receiver. Many studies have addressed sum rate maximization, and their results showed that NOMA achieves higher spectral efficiency (SE) than baseline OMA schemes [5,6,7,8]. Zeng et al. [5] investigated a multiuser scenario in which users are clustered and share the same transmit beamforming vector. Di et al. [6] proposed a joint subchannel assignment and power allocation scheme to maximize the weighted total sum rate of the system subject to a user fairness constraint. Timotheou et al. [7] studied a decoupled problem of user clustering and power allocation in NOMA systems, in which the proposed user clustering approach is based on exhaustive search and therefore has high complexity. Liang et al. [8] studied user pairing and investigated the power allocation problem for NOMA in cognitive radio (CR) networks.

Nowadays, the energy consumption of wireless communications is becoming a major social and economic issue, especially given the explosive growth in data traffic. Nevertheless, only limited efforts have been devoted to energy-efficient resource allocation in NOMA-enabled systems [9,10,11]. The authors in [9] maximized energy efficiency subject to a minimum required data rate for each user, which leads to a nonconvex fractional programming problem. Meanwhile, a power allocation solution that maximizes energy efficiency under users' quality of service (QoS) requirements was investigated in [10]. Fang et al. [11] proposed a gradient-based binary search power allocation approach for downlink NOMA systems, but it incurs high complexity. NOMA was also applied to future machine-to-machine (M2M) communications in [12], where it was shown to improve the outage probability of the system compared with OMA. Additionally, the system performance of millimeter wave (mmWave) networks was studied in [13] by jointly considering beamforming, user scheduling, and power allocation.

On the other hand, CR, one of the promising techniques to improve SE, has been extensively investigated for decades. In CR, cognitive users (CUs) can utilize the licensed spectrum bands of the primary users (PUs) as long as the interference caused by the CUs is tolerable [14,15,16]. Goldsmith et al. [17] proposed three operation models (opportunistic spectrum access, spectrum sharing, and sensing-based enhanced spectrum sharing) for exploiting CR in practice. Combining CR with NOMA is expected to further boost the SE of wireless communication systems, and the performance of spectrum-sharing CR combined with NOMA has therefore been analyzed in many studies [18,19].

Along with the rapid proliferation of wireless communication applications, battery-limited devices become useless once their batteries are depleted. As one remedy, energy harvesting (EH) exploits ambient energy sources to replenish batteries, such as solar energy [20], radio frequency (RF) signals [21], or both non-RF and RF sources [22]. Among the various renewable energy sources, solar power is considered one of the most effective for wireless devices. However, solar power density depends strongly on environmental conditions and may vary over time. Thus, it is critical to develop approaches that efficiently utilize harvested energy in wireless communication systems.

Many early studies on NOMA applications have mainly focused on the downlink scenario, whereas fewer contributions have investigated uplink NOMA, in which an evolved NodeB (eNB) receives signals with different power levels from all user devices using NOMA. Zhang et al. [23] proposed a novel power control scheme and derived the outage probability of the system. Besides, user pairing was studied under several predefined power allocation schemes in NOMA communication systems [24], in which internet of things (IoT) devices first harvest energy from base station (BS) transmissions in the harvesting phase and then use the harvested energy to transmit data via NOMA in the transmission phase. The pricing and bandwidth allocation problem in terms of energy efficiency in heterogeneous networks was investigated in [25]. In addition, the authors in [20] proposed joint resource allocation and transmission mode selection to maximize the secrecy rate in cognitive radio networks. Nevertheless, most existing work on resource allocation assumes that the amount of harvested energy is known or that traffic loads are predictable, which is difficult to guarantee in practical wireless networks.

Since information about network dynamics (e.g., the harvested energy distribution and the primary users' behavior) is sometimes unavailable in cognitive radio systems, researchers usually formulate the optimization problem within the framework of a Markov decision process (MDP) [20,22,26,27]. Reinforcement learning is a promising approach to obtaining the optimal solution of an MDP by interacting with the environment, without prior information about the network dynamics and without any supervision [28,29,30]. However, conventional reinforcement learning struggles with optimization problems that have large state spaces. For this reason, deep reinforcement learning (DRL), in which deep neural networks (DNNs) act as function approximators to learn the optimal policy, is being investigated extensively in wireless communication systems [31,32,33]. Meng et al. [31] proposed a deep reinforcement learning method for a joint spectrum sensing and power control problem in a cognitive small cell. In addition, deep Q-learning was studied in [32] for a wireless gateway that derives the optimal policy to maximize throughput in cognitive radio networks. Zhang et al. [33] proposed an asynchronous advantage deep actor-critic-based scheme to optimize spectrum sharing efficiency and guarantee the QoS requirements of PUs and CUs.

To the best of our knowledge, little research has addressed resource allocation using deep reinforcement learning for non-RF energy-harvesting scenarios in uplink cognitive radio networks. Thus, we propose a deep actor-critic reinforcement learning framework for efficient joint power and bandwidth allocation that adopts hybrid NOMA/OMA in uplink cognitive radio networks (CRNs). In these networks, solar-powered CUs are assigned appropriate transmission power and bandwidth to transmit data to a cognitive base station in every time slot so as to maximize the long-term data transmission rate of the system. Specifically, the main contributions of this paper are as follows.

We study a model of a hybrid NOMA/OMA uplink cognitive radio network with energy harvesting at the CUs, where solar-powered CUs opportunistically use the licensed channel of the primary network to transmit data to a cognitive base station using NOMA/OMA techniques. In addition, a user-pairing algorithm is adopted so that orthogonal frequency bands can be assigned to each NOMA group after pairing. We consider power and bandwidth allocation such that the transmission power and bandwidth of each CU are optimally utilized under energy constraints and environmental uncertainty. The system is assumed to operate on a time-slotted basis.

We formulate the long-term data transmission rate maximization problem within the framework of an MDP, and we obtain the optimal policy by adopting a deep actor-critic reinforcement learning (DACRL) framework based on trial-and-error learning. More specifically, we use DNNs to approximate the policy function of the actor and the value function of the critic. As a result, the cognitive base station can allocate appropriate transmission power and bandwidth to the CUs by directly interacting with the environment, so that the system reward is maximized in the long run.

Lastly, extensive numerical results are provided to assess the performance of the proposed algorithm under diverse network parameters. The simulation results show that the proposed scheme outperforms conventional schemes in which transmission power and bandwidth allocation decisions are made without long-term considerations.

The rest of this paper is structured as follows. The system model is presented in Section 2. We introduce the problem formulation in Section 3, and we describe the deep actor-critic reinforcement learning scheme for resource allocation in Section 4. The simulation results and discussions are in Section 5. Finally, we conclude the paper in Section 6.

2. System Model

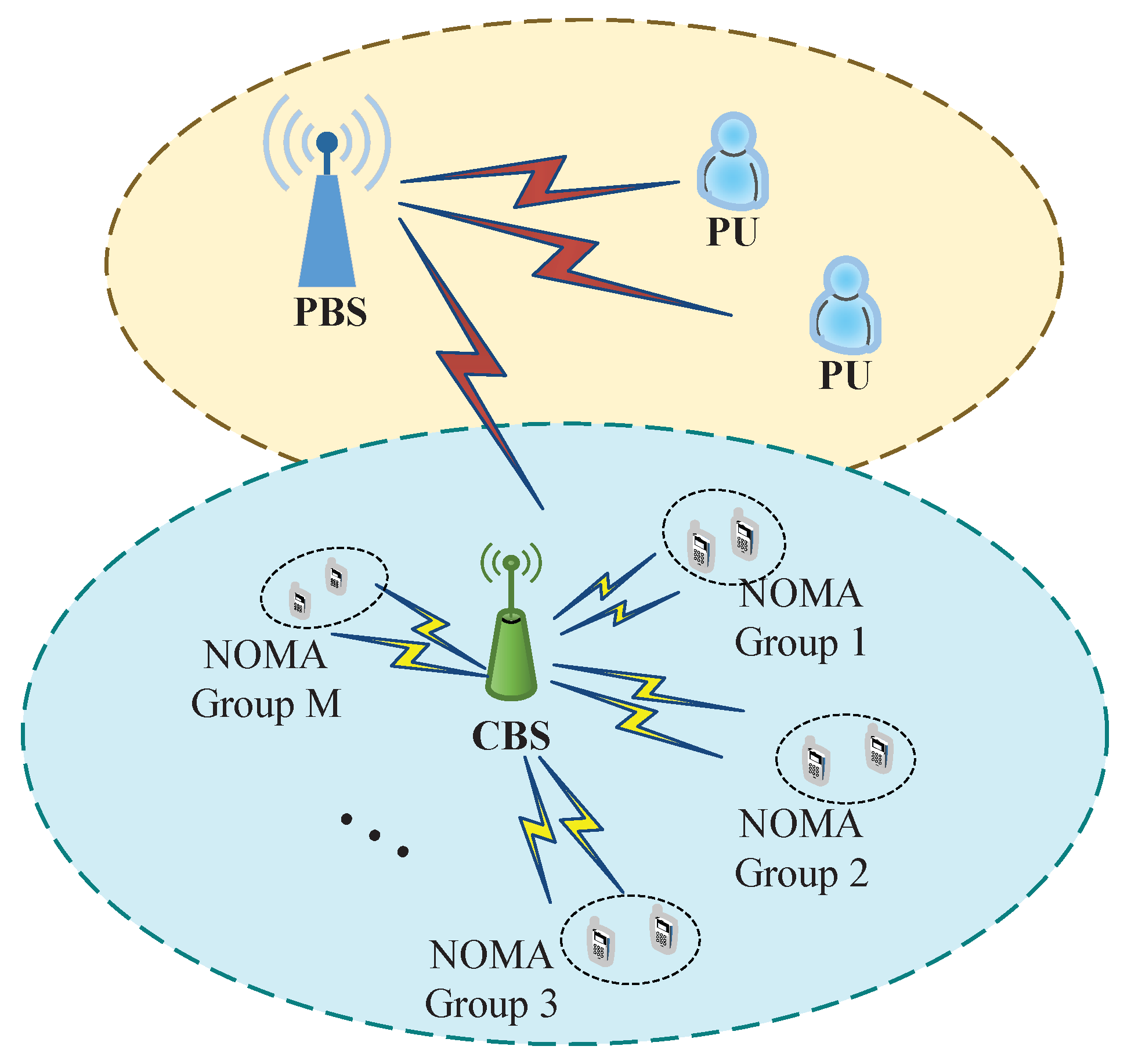

We consider an uplink CRN that consists of a cognitive base station (CBS), a primary base station (PBS), multiple PUs, and multiple CUs, as illustrated in Figure 1. Each CU is equipped with a single antenna to transmit data to the CBS and with an energy-harvesting component (i.e., solar panels). The PBS and PUs are licensed to use the primary channel at will; however, they do not always have data to transmit on it. Meanwhile, the CBS and the CUs can opportunistically utilize the primary channel by adopting a hybrid NOMA/OMA technique when the channel is sensed as free. To this end, the CBS divides the set of CUs into pairs according to Algorithm 1, in which the CU with the highest channel gain is coupled with the CU with the lowest channel gain, and one of the available subchannels is assigned to each pair. More specifically, the CUs are arranged into M NOMA groups, $\{G_1, G_2, \ldots, G_M\}$, and the primary channel is divided into M orthogonal subchannels, $\{SC_1, SC_2, \ldots, SC_M\}$, to apply hybrid NOMA/OMA to the transmissions between the CUs and the CBS. The M NOMA groups are assigned to the M subchannels such that the CUs in each NOMA group transmit on the same subchannel without interfering with the other groups. In this paper, SIC [34] is applied at the CBS to decode the signals received from the CUs. Moreover, we assume that the CUs always have data to transmit and that the CBS has complete channel state information (CSI) of all the CUs.

| Algorithm 1 User-pairing Algorithm |

- 1: Input: channel gains of all CUs, number of groups M, number of CUs.
- 2: Sort the channel gains of all CUs in descending order.
- 3: Define the set of sorted channel gains.
- 4: for each NOMA group do
- 5:
- 6:
- 7:
- 8:
- 9: end for
- 10: Output: Set of CU pairs.

|
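To make the pairing rule concrete, the following Python sketch pairs CUs as the text describes: the highest-gain CU is coupled with the lowest-gain CU, the second highest with the second lowest, and so on. The function name `pair_users`, the index-based output, and the example gains are hypothetical illustrations, not the paper's implementation, and the sketch assumes an even number of CUs (two per NOMA group).

```python
from typing import List, Tuple

def pair_users(gains_db: List[float]) -> List[Tuple[int, int]]:
    """Pair CUs strong-with-weak and return (strong_index, weak_index) per NOMA group."""
    if len(gains_db) % 2 != 0:
        raise ValueError("expected an even number of CUs (two per NOMA group)")
    # Sort CU indices by channel gain in descending order.
    order = sorted(range(len(gains_db)), key=lambda k: gains_db[k], reverse=True)
    pairs = []
    for m in range(len(order) // 2):
        strong = order[m]        # m-th highest remaining gain
        weak = order[-(m + 1)]   # m-th lowest remaining gain
        pairs.append((strong, weak))
    return pairs

if __name__ == "__main__":
    gains = [-10.0, -3.0, -20.0, -7.0, -15.0, -5.0]  # hypothetical gains in dB
    print(pair_users(gains))
```

Pairing strong with weak users maximizes the gain disparity within each group, which is the usual motivation for this rule because SIC benefits from well-separated received power levels.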

The network operation is illustrated in Figure 2. At the beginning of each time slot, all CUs concurrently perform spectrum sensing during a sensing interval of duration $\tau_s$ and report their local results to the CBS. Based on these results, the CBS first determines the global sensing result, i.e., whether the primary channel is busy, by following a combination rule [35,36], and then allocates power and bandwidth to all CUs for uplink data transmission. According to the allocated power and bandwidth, the CUs in each NOMA group then transmit their data to the CBS over the same subchannel, without interfering with other groups, during the remaining duration $T_{\mathrm{slot}} - \tau_s$, where $T_{\mathrm{slot}}$ is the total time slot duration. Information about the remaining energy of all CUs is reported to the CBS at the end of each time slot. The data transmission session of a CU may span more than one time slot until all its data have been transmitted successfully.
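The combination rule itself is left to [35,36]. As one common example, and purely as an assumption, the sketch below implements a k-out-of-N hard-decision rule: the CBS declares the channel busy if at least k of the N local reports say busy. With independent CUs that each detect with the same local probabilities, the global detection and false-alarm probabilities follow from the binomial distribution; k = 1 gives the OR rule and k = N the AND rule. All function names are hypothetical.

```python
from math import comb
from typing import List, Tuple

def k_out_of_n(p_local: float, n: int, k: int) -> float:
    """Probability that at least k of n independent CUs report 'busy',
    when each reports 'busy' with probability p_local."""
    return sum(comb(n, j) * p_local**j * (1 - p_local) ** (n - j) for j in range(k, n + 1))

def global_sensing_probs(pd_local: float, pf_local: float, n: int, k: int) -> Tuple[float, float]:
    """Global (P_d, P_f) of the k-out-of-n fusion rule at the CBS."""
    return k_out_of_n(pd_local, n, k), k_out_of_n(pf_local, n, k)

def fuse_reports(reports_busy: List[bool], k: int) -> bool:
    """Global decision at the CBS: True means the primary channel is declared busy."""
    return sum(reports_busy) >= k

if __name__ == "__main__":
    pd, pf = global_sensing_probs(pd_local=0.8, pf_local=0.1, n=6, k=3)
    print(f"global P_d = {pd:.4f}, global P_f = {pf:.4f}")
    print(fuse_reports([True, False, True, False, True, False], k=3))
```

Choosing k trades detection against false alarms: a small k protects the PUs (high P_d) but wastes transmission opportunities (high P_f).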

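During data transmission, the composite signal received at the CBS on subchannel $SC_m$ in time slot t is given by

$$y_m(t) = \sum_{i=1}^{2} \sqrt{P_{im}(t)}\, h_{im}\, x_{im}(t) + \omega_m, \tag{1}$$

where $P_{im}(t) = e_{im}(t)/(T_{\mathrm{slot}} - \tau_s)$, $i \in \{1, 2\}$, $m \in \{1, 2, \ldots, M\}$, is the transmission power of $\mathrm{CU}_i$ in NOMA group $G_m$ (denoted $\mathrm{CU}_{im}$), in which $e_{im}(t)$ is the transmission energy assigned to $\mathrm{CU}_{im}$ in time slot t and $T_{\mathrm{slot}} - \tau_s$ is the data transmission duration of a time slot of length $T_{\mathrm{slot}}$ with sensing interval $\tau_s$; $x_{im}(t)$ denotes the transmit signal of $\mathrm{CU}_{im}$ in time slot t, with $\mathbb{E}\{|x_{im}(t)|^2\} = 1$; $\omega_m$ is the additive white Gaussian noise (AWGN) at the CBS on subchannel $SC_m$ with zero mean and variance $\sigma^2$; and $h_{im}$ is the channel coefficient between $\mathrm{CU}_{im}$ and the CBS. The overall received signal at the CBS in time slot t is given by

$$y(t) = \sum_{m=1}^{M} y_m(t). \tag{2}$$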

The signals on different subchannels are retrieved independently from the composite signal $y(t)$, and SIC is applied within each subchannel. In particular, the signal of the CU with the higher channel gain is decoded first and then removed from the composite signal; while it is being decoded, the signal of the CU with the lower channel gain on the same subchannel is treated as noise. We assume perfect SIC at the CBS. The achievable transmission rates of the CUs in NOMA group $G_m$ are

$$R_{1m}(t) = W_m(t) \log_2\!\left(1 + \frac{P_{1m}(t)\, g_{1m}}{P_{2m}(t)\, g_{2m} + \sigma^2}\right), \qquad R_{2m}(t) = W_m(t) \log_2\!\left(1 + \frac{P_{2m}(t)\, g_{2m}}{\sigma^2}\right), \tag{3}$$

where $P_{im}(t)$ is the transmission power of $\mathrm{CU}_{im}$, $W_m(t)$ is the amount of bandwidth allocated to subchannel $SC_m$ in time slot t, $g_{im} = |h_{im}|^2$ is the channel gain of $\mathrm{CU}_{im}$ on subchannel m, and $g_{1m} > g_{2m}$. Since the channel gain of $\mathrm{CU}_{1m}$, $g_{1m}$, is higher, $\mathrm{CU}_{1m}$ has a higher decoding priority. Consequently, the signal of $\mathrm{CU}_{1m}$ is decoded first by treating the signal of $\mathrm{CU}_{2m}$ as interference. Next, the signal of $\mathrm{CU}_{1m}$ is removed from $y_m(t)$, and the signal of $\mathrm{CU}_{2m}$ is decoded free of interference. The sum achievable transmission rate of NOMA group $G_m$ can be calculated as

$$R_m(t) = R_{1m}(t) + R_{2m}(t). \tag{4}$$

The sum achievable transmission rate at the CBS is

$$R(t) = \sum_{m=1}^{M} R_m(t). \tag{5}$$
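The rate expressions for one NOMA group can be checked numerically with the sketch below. It assumes perfect SIC, decodes the stronger CU first while treating the weaker CU as interference, uses a noise power σ² as in the text, and takes channel gains as |h|² in linear scale; function names and numbers are illustrative. A useful sanity check is that the two rates always add up to W log2(1 + (P1 g1 + P2 g2)/σ²), the sum capacity of the two-user uplink, regardless of the decoding order.

```python
import math
from typing import List, Tuple

def db_to_linear(x_db: float) -> float:
    return 10 ** (x_db / 10)

def noma_pair_rates(bw: float, p1: float, g1: float, p2: float, g2: float,
                    noise_var: float) -> Tuple[float, float]:
    """Achievable rates (bit/s) of the strong CU (gain g1) and weak CU (gain g2) in one group."""
    if g1 < g2:
        raise ValueError("CU1 must be the CU with the higher channel gain")
    # CU1 is decoded first; CU2's signal acts as interference.
    r1 = bw * math.log2(1 + p1 * g1 / (p2 * g2 + noise_var))
    # After CU1 is cancelled (perfect SIC), CU2 sees only noise.
    r2 = bw * math.log2(1 + p2 * g2 / noise_var)
    return r1, r2

def system_sum_rate(groups: List[Tuple[float, float, float, float, float, float]]) -> float:
    """Sum of group rates over all NOMA groups; each tuple is (bw, p1, g1, p2, g2, noise_var)."""
    return sum(sum(noma_pair_rates(*g)) for g in groups)

if __name__ == "__main__":
    # Hypothetical group: 1 MHz subchannel, gains of -3 dB and -12 dB.
    r1, r2 = noma_pair_rates(1e6, 0.2, db_to_linear(-3), 0.2, db_to_linear(-12), 1e-3)
    print(f"R1 = {r1 / 1e6:.3f} Mbit/s, R2 = {r2 / 1e6:.3f} Mbit/s")
```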

Energy Arrival and Primary User Models

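In this paper, each CU has a battery with finite capacity $E_{\max}$, which can be continuously recharged by its solar energy harvester, so that the CUs can harvest solar energy while performing their other operations. For many reasons (such as the weather, the season, and the time of day), the energy harvested from solar sources varies in practice. Herein, we consider a practical case in which the energy harvested by $\mathrm{CU}_{im}$ in NOMA group $G_m$ during time slot t, denoted $E^{\mathrm{h}}_{im}(t)$, follows a Poisson distribution with mean value $\bar{E}_{\mathrm{h}}$, as studied in [37]. The probability that $\mathrm{CU}_{im}$ harvests e units of energy during time slot t is given by

$$\Pr\{E^{\mathrm{h}}_{im}(t) = e\} = \frac{(\bar{E}_{\mathrm{h}})^{e}\, \exp(-\bar{E}_{\mathrm{h}})}{e!},$$

where $e \in \{0, 1, 2, \ldots\}$. The cumulative distribution function is given by

$$F(e) = \Pr\{E^{\mathrm{h}}_{im}(t) \le e\} = \sum_{k=0}^{e} \frac{(\bar{E}_{\mathrm{h}})^{k}\, \exp(-\bar{E}_{\mathrm{h}})}{k!}.$$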
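A minimal sketch of the Poisson energy-arrival model follows, assuming harvested energy is counted in integer energy units per time slot. It evaluates the pmf and CDF and draws samples with Knuth's multiplication method, which is adequate for the small means typical of per-slot arrivals; the function names and the mean values are hypothetical.

```python
import math
import random

def poisson_pmf(e: int, mean: float) -> float:
    """Pr{E_h = e} for a Poisson arrival with the given mean."""
    return mean ** e * math.exp(-mean) / math.factorial(e)

def poisson_cdf(e: int, mean: float) -> float:
    """Pr{E_h <= e}, summing the pmf from 0 to e."""
    return sum(poisson_pmf(k, mean) for k in range(e + 1))

def sample_harvest(mean: float, rng: random.Random) -> int:
    """Draw one harvested-energy amount with Knuth's multiplication method."""
    limit = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

if __name__ == "__main__":
    rng = random.Random(7)
    draws = [sample_harvest(2.0, rng) for _ in range(10000)]
    print("empirical mean:", sum(draws) / len(draws))
    print("Pr{E_h <= 2} =", round(poisson_cdf(2, 2.0), 4))
```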

Herein, we use a two-state discrete-time Markov process to model the state of the primary channel, as depicted in Figure 3. We assume that the state of the primary channel does not change within a time slot and that it can switch between two adjacent time slots. The state transition probabilities between two time slots are denoted as $P_{XY}$, $X, Y \in \{F, B\}$, i.e., the probability that the channel is in state X in one time slot and in state Y in the next, in which F stands for the free state and B stands for the busy state. In this paper, we consider cooperative spectrum sensing, in which all CUs collaboratively detect spectrum holes using energy detection and send their local sensing results to the CBS. The final decision on the primary users' activity is then made by combining the local sensing results at the CBS [36]. The performance of the cooperative sensing scheme can be evaluated in terms of the probability of detection $P_d$ and the probability of false alarm $P_f$. $P_d$ denotes the probability that the PUs' presence is correctly detected (i.e., the primary channel is actually used by the PUs and is sensed as busy). Meanwhile, $P_f$ denotes the probability that the PUs are detected as active although they are actually inactive (i.e., the sensing result for the primary channel is busy, but the primary channel is actually free).
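The two-state primary-channel model can be simulated as in the following sketch, where P_FF is the probability that a free channel stays free and P_BF is the probability that a busy channel becomes free (so P_FB = 1 − P_FF). It also computes the stationary probability that the channel is free, P_BF/(P_FB + P_BF), i.e., the long-run fraction of free slots, and draws a global sensing outcome from P_d and P_f. Parameter values and function names are assumptions for illustration.

```python
import random
from typing import List

def simulate_channel(p_ff: float, p_bf: float, num_slots: int,
                     rng: random.Random, start_free: bool = True) -> List[bool]:
    """Simulate the two-state Markov chain; True marks a free slot."""
    free = start_free
    states = []
    for _ in range(num_slots):
        states.append(free)
        # The state is fixed within a slot and may switch at the slot boundary.
        free = rng.random() < (p_ff if free else p_bf)
    return states

def stationary_free(p_ff: float, p_bf: float) -> float:
    """Long-run probability that the primary channel is free."""
    p_fb = 1.0 - p_ff
    return p_bf / (p_fb + p_bf)

def sense_busy(free: bool, p_d: float, p_f: float, rng: random.Random) -> bool:
    """Global sensing result: 'busy' w.p. P_d if the PUs are active, P_f if they are idle."""
    return rng.random() < (p_f if free else p_d)

if __name__ == "__main__":
    rng = random.Random(3)
    slots = simulate_channel(0.8, 0.3, 50000, rng)
    print("empirical free fraction:", sum(slots) / len(slots))
    print("stationary free probability:", stationary_free(0.8, 0.3))
```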

4. Deep Reinforcement Learning-Based Resource Allocation Policy

In this section, we first reformulate the joint power and bandwidth allocation problem, which aims to maximize the long-term data transmission rate of the system, within the framework of an MDP. Then, we apply a DRL approach to solve the problem, in which the agent (i.e., the CBS) learns the optimal resource allocation policy via trial-and-error interactions with the environment. One disadvantage of reinforcement learning is its high computational cost, caused by the long learning process in systems with large state and action spaces. However, by adopting deep neural networks, the proposed scheme requires less computational overhead than other algorithms, such as value iteration-based dynamic programming in the partially observable Markov decision process (POMDP) framework [20], in which the transition probabilities of the energy arrival are required to obtain the solution. Thus, compared with the POMDP scheme, the proposed scheme reduces the complexity of both formulation and computation, regardless of the dynamic properties of the environment.

Furthermore, an advantage of the deep reinforcement learning scheme over the POMDP scheme is that the effect of the unknown harvested energy distribution can be learned by interacting with the environment over time in order to create the optimal policy. In addition, by adopting deep neural networks, the proposed scheme can work effectively in systems with large state and action spaces, whereas other reinforcement learning schemes, such as Q-learning [38] and actor-critic learning [39], might not be appropriate for such systems. In the proposed scheme, a deep neural network is trained to obtain the optimal policy, at which the reward of the system converges to its optimal value. The system can then choose an optimal action in every state according to the policy learned in the training phase, without retraining. Thus, deep actor-critic reinforcement learning can be more applicable to wireless communication systems.

4.1. Markov Decision Process

Generally, the purpose of reinforcement learning is for the agent to learn how to map each system state to an optimal action through a trial-and-error learning process. In this way, the accumulated sum of rewards can be maximized after a number of training time slots.

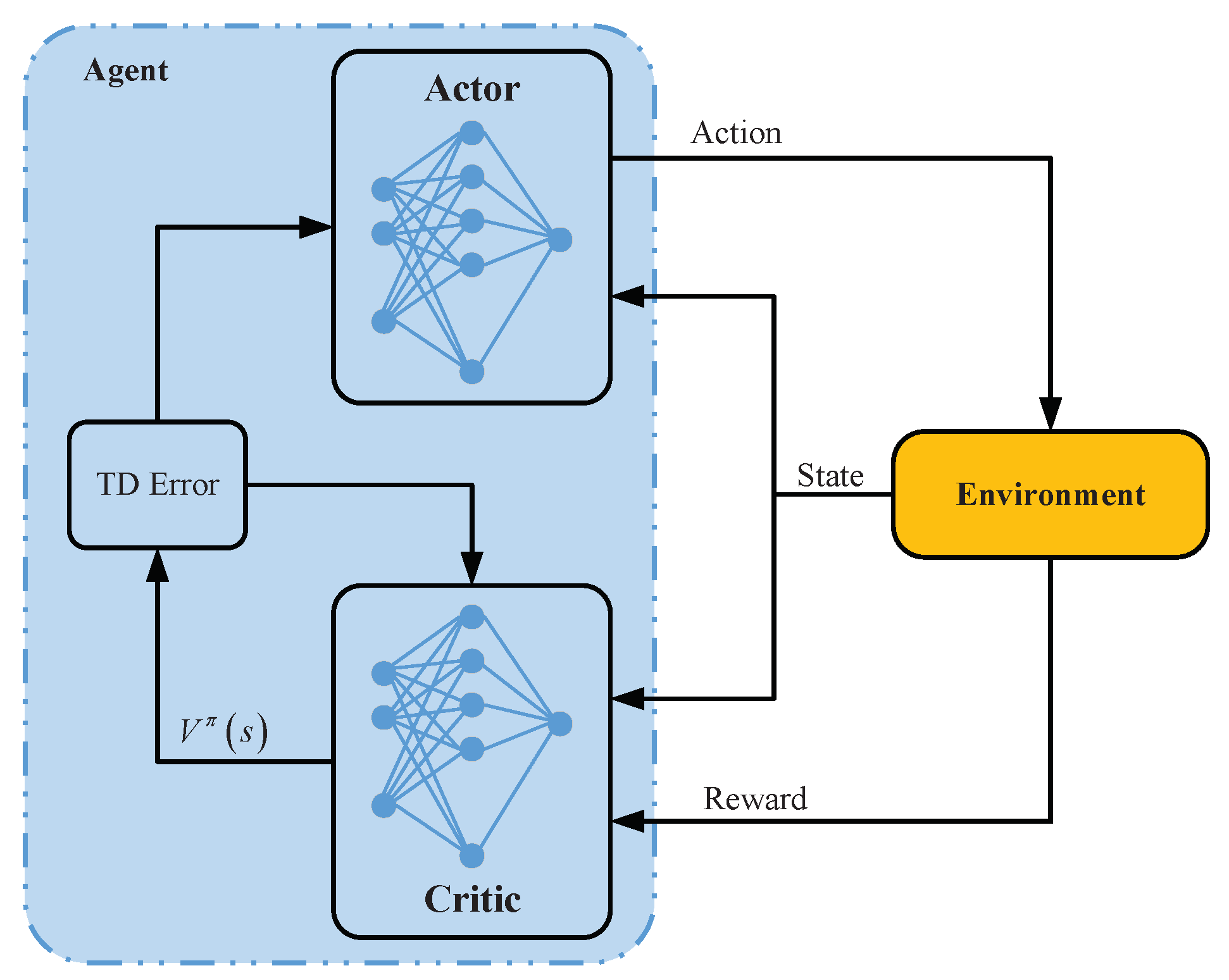

Figure 4 illustrates traditional reinforcement learning via agent–environment interaction. In particular, the agent observes the system state and then chooses an action at the beginning of a time slot. After that, the agent receives the corresponding reward at the end of the time slot, and the system transitions to the next state according to the performed action. The system is then updated, and the next interaction between the agent and the environment begins.

We denote the state space and the action space of the system as $\mathcal{S}$ and $\mathcal{A}$, respectively. The state of the network in time slot t is $s(t) = \{b(t), \mathbf{E}(t)\} \in \mathcal{S}$, where $b(t)$ ($0 \le b(t) \le 1$) is the belief, i.e., the probability that the primary channel is free in that time slot, and $\mathbf{E}(t) = \{E_{im}(t)\}$ denotes the vector of the remaining energy of the CUs, where $E_{im}(t)$ is the remaining energy of $\mathrm{CU}_{im}$. The action of the CBS is denoted as $a(t) \in \mathcal{A}$. In this paper, we define the reward as the sum data rate of the system, as presented in Equation (5).

The decision-making process can be expressed as follows. At the beginning of time slot t, the agent observes the state s(t) from the environment and then chooses an action a(t) following a stochastic policy $\pi(a \mid s)$, which maps each environment state to the probability of taking each action. In this work, the network agent (i.e., the CBS) determines the transmission power of each CU and decides whether to allocate a bandwidth portion to each NOMA group in each time slot. Then, the CUs perform their operations (transmit data or stay silent) according to the action assigned by the CBS. Afterward, the instant reward r(t), defined in Equation (5), is fed back to the agent, and the environment transitions to the next state s(t+1). At the end of the time slot, each CU reports its current remaining energy level to the CBS for network management. In the following, we describe how the belief and the remaining energy are updated according to the actions assigned by the CBS.

4.1.1. Silent Mode

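The global sensing decision indicates that the primary channel is busy in the current time slot; thus, the CBS trusts this result and keeps all CUs silent. As a consequence, there is no reward in this time slot, i.e., $r(t) = 0$. The belief in the current time slot t can be updated according to Bayes' rule [40] as follows:

$$\tilde{b}(t) = \frac{b(t)\, P_f}{b(t)\, P_f + \left(1 - b(t)\right) P_d},$$

where $P_d$ and $P_f$ are the probabilities of detection and false alarm of the cooperative sensing. The belief $b(t+1)$ for the next time slot is updated as follows:

$$b(t+1) = \tilde{b}(t)\, P_{FF} + \left(1 - \tilde{b}(t)\right) P_{BF},$$

where $P_{XY}$ is the probability that the primary channel switches from state X to state Y. The remaining energy of $\mathrm{CU}_{im}$ for the next time slot is updated as

$$E_{im}(t+1) = \min\left\{E_{im}(t) - e_s + E^{\mathrm{h}}_{im}(t),\; E_{\max}\right\},$$

where $e_s$ is the energy consumed by the spectrum sensing process, $E^{\mathrm{h}}_{im}(t)$ is the energy harvested during time slot t, and $E_{\max}$ is the battery capacity.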
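The silent-mode bookkeeping at the CBS can be sketched as follows. The sketch assumes the belief b(t) is the probability that the channel is free, applies Bayes' rule with the cooperative detection and false-alarm probabilities P_d and P_f to a "busy" sensing result, propagates the posterior through the two-state Markov chain (P_FF: free stays free, P_BF: busy becomes free), and clips the battery at its capacity so that surplus harvested energy overflows, an assumption consistent with the battery-overflow discussion in Section 5. Function names are hypothetical.

```python
def posterior_free_given_busy(b: float, p_d: float, p_f: float) -> float:
    """Bayes' rule: Pr{channel free | global sensing result = busy}."""
    return b * p_f / (b * p_f + (1.0 - b) * p_d)

def predict_belief(b_post: float, p_ff: float, p_bf: float) -> float:
    """One-step prediction through the Markov chain: Pr{free in slot t+1}."""
    return b_post * p_ff + (1.0 - b_post) * p_bf

def energy_after_silent(e_rem: float, e_sense: float, e_harvest: float, e_max: float) -> float:
    """Remaining energy after a silent slot: sensing cost paid, harvest added, battery clipped."""
    return min(e_rem - e_sense + e_harvest, e_max)

if __name__ == "__main__":
    b_post = posterior_free_given_busy(b=0.5, p_d=0.9, p_f=0.1)
    print(b_post, predict_belief(b_post, p_ff=0.8, p_bf=0.3))
```

A busy sensing result lowers the belief sharply when P_d is much larger than P_f, while the Markov prediction pulls it back toward the channel's long-run behavior for the next slot.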

4.1.2. Transmission Mode

The global sensing decision indicates that the primary channel is free in the current time slot, so the CBS assigns transmission power levels to the CUs for transmitting their data to the CBS. We assume that the data of the CUs are successfully decoded if the primary channel is actually free; otherwise, no data can be retrieved because of collisions between the signals of the PUs and the CUs. In this case, there are two possible observations, as follows.

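Observation 1: All data are successfully received and decoded at the CBS at the end of the time slot. This means that the primary channel was actually free during this time slot and that the global sensing result was correct. The total reward of the network is calculated as

$$r(t) = \sum_{m=1}^{M} R_m(t),$$

where $R_m(t)$, the immediate data transmission rate of NOMA group $G_m$, can be computed with Equation (4). The belief $b(t+1)$ for the next time slot is updated as

$$b(t+1) = P_{FF}.$$

The remaining energy of $\mathrm{CU}_{im}$ for the next time slot will be

$$E_{im}(t+1) = \min\left\{E_{im}(t) - e_s - e_{im}(t) + E^{\mathrm{h}}_{im}(t),\; E_{\max}\right\},$$

where $e_{im}(t)$ denotes the transmission energy assigned to $\mathrm{CU}_{im}$ in time slot t, $e_s$ is the sensing energy, $E^{\mathrm{h}}_{im}(t)$ is the harvested energy, and $E_{\max}$ is the battery capacity.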

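Observation 2: The CBS cannot successfully decode the data from the CUs at the end of time slot t because of collisions between the signals of the CUs and the PUs. This implies that the primary channel was occupied and that a misdetection occurred. In this case, no reward is achieved, i.e., $r(t) = 0$. The belief $b(t+1)$ for the next time slot is updated as

$$b(t+1) = P_{BF}.$$

Since the assigned transmission energy has been consumed, the remaining energy of $\mathrm{CU}_{im}$ for the next time slot is updated in the same way as in Observation 1:

$$E_{im}(t+1) = \min\left\{E_{im}(t) - e_s - e_{im}(t) + E^{\mathrm{h}}_{im}(t),\; E_{\max}\right\}.$$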
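Both observations of the transmission mode can be folded into a single update routine, sketched below. It assumes the belief is the probability that the channel is free and that the battery is clipped at its capacity. A decoded slot reveals that the channel was free, so the next belief restarts from P_FF (free stays free); a collision reveals that it was busy, so the next belief is P_BF (busy becomes free). In both cases the sensing and transmission energies are spent. All names are hypothetical.

```python
from typing import List, Tuple

def transmission_slot_update(decoded: bool, group_rates: List[float],
                             p_ff: float, p_bf: float) -> Tuple[float, float]:
    """Return (reward, next-slot belief) after a transmission-mode slot."""
    if decoded:
        # Observation 1: the channel was actually free; reward is the sum of group rates.
        return sum(group_rates), p_ff
    # Observation 2: collision with the PUs; no reward, and the channel was busy.
    return 0.0, p_bf

def energy_after_transmission(e_rem: float, e_sense: float, e_tx: float,
                              e_harvest: float, e_max: float) -> float:
    """Sensing and transmission energy are consumed in both observations."""
    return min(e_rem - e_sense - e_tx + e_harvest, e_max)

if __name__ == "__main__":
    print(transmission_slot_update(True, [1.2e6, 0.8e6], p_ff=0.8, p_bf=0.3))
    print(transmission_slot_update(False, [1.2e6, 0.8e6], p_ff=0.8, p_bf=0.3))
```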

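In reinforcement learning, the agent improves its policy based on state-value functions, which satisfy a recursive relationship. The state-value function $V^{\pi}(s)$ is defined as the expected discounted accumulated reward starting from the current state s under a given policy $\pi$, which is written as [28]:

$$V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k}\, r(t+k) \,\middle|\, s(t) = s\right],$$

where $\mathbb{E}_{\pi}[\cdot]$ denotes the expectation under policy $\pi$; $\gamma \in [0, 1)$ is the discount factor, which determines whether the agent's decisions are myopic or foresighted; and $\pi: \mathcal{S} \to \mathcal{A}$ is the stochastic policy, which maps the environment state space $\mathcal{S}$ to the action space $\mathcal{A}$. The objective of the resource allocation problem is to find the optimal policy $\pi^{*}$ that provides the maximum discounted value function in the long run, which satisfies the Bellman equation [41]:

$$V^{\pi^{*}}(s) = \max_{a \in \mathcal{A}} \left[ r(s, a) + \gamma \sum_{s' \in \mathcal{S}} p(s' \mid s, a)\, V^{\pi^{*}}(s') \right],$$

where $r(s, a)$ is the expected immediate reward and $p(s' \mid s, a)$ is the state transition probability.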
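To make the discounted value and the Bellman equation concrete, the sketch below computes a discounted return and runs value iteration on a hypothetical two-state toy MDP whose transition model is known. This is exactly the kind of model-based computation that the DRL approach avoids, since in the considered network the energy-arrival and PU dynamics are unknown; it only illustrates what the optimal value satisfies. The MDP encoding and names are assumptions.

```python
from typing import Dict, List, Sequence, Tuple

# P[s][a] = list of (probability, next_state, reward) outcomes
MDP = Dict[int, Dict[int, List[Tuple[float, int, float]]]]

def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Sum_k gamma^k * r_k, accumulated backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

def value_iteration(P: MDP, gamma: float, tol: float = 1e-10) -> Dict[int, float]:
    """Repeatedly apply the Bellman optimality backup until the values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s, actions in P.items():
            best = max(sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)
                       for outcomes in actions.values())
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V

if __name__ == "__main__":
    # Hypothetical toy MDP: in state 0, action 0 earns 1 and stays in state 0;
    # action 1 earns 0 and moves to the absorbing state 1.
    toy = {0: {0: [(1.0, 0, 1.0)], 1: [(1.0, 1, 0.0)]}, 1: {0: [(1.0, 1, 0.0)]}}
    print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))
    print(value_iteration(toy, gamma=0.9))
```

In the toy MDP the optimal value of state 0 approaches 1/(1 − γ) = 10 for γ = 0.9, the value of collecting a reward of 1 forever.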

The policy can be explored by using an $\epsilon$-greedy policy, in which a random action is chosen with probability $\epsilon$ and an action is selected according to the current policy with probability $1 - \epsilon$ during the training process [42]. As a result, the joint power and bandwidth allocation problem in Equation (7) can be rewritten as Equation (17), and the deep actor-critic reinforcement learning solution is presented in the following section.
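The exploration rule can be sketched as follows: with probability ε a uniformly random action is taken; otherwise, the action is sampled from the actor's stochastic policy. The multiplicative decay used here for the "Update epsilon rate" step of Algorithm 2, and its constants, are assumptions, since the schedule is not specified in the text.

```python
import random
from typing import Sequence

def select_action(policy_probs: Sequence[float], epsilon: float,
                  rng: random.Random) -> int:
    """Epsilon-greedy exploration around a stochastic policy."""
    n = len(policy_probs)
    if rng.random() < epsilon:
        return rng.randrange(n)  # explore: uniform random action
    # Exploit: sample an action index according to the actor's probabilities.
    return rng.choices(range(n), weights=policy_probs, k=1)[0]

def decay_epsilon(epsilon: float, decay: float = 0.995, eps_min: float = 0.01) -> float:
    """Hypothetical multiplicative decay with a floor."""
    return max(eps_min, epsilon * decay)

if __name__ == "__main__":
    rng = random.Random(0)
    eps = 0.5
    for _ in range(3):
        print(select_action([0.1, 0.7, 0.2], eps, rng), round(eps, 4))
        eps = decay_epsilon(eps)
```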

4.2. Deep Actor-Critic Reinforcement Learning Algorithm

The maximization problem in Equation (17) can be solved by using the actor-critic method, which combines the value-based method [43] and the policy-based method [44]. The actor-critic structure involves two neural networks (the actor and the critic) and an environment, as shown in Figure 5. The actor determines the action according to the policy, and the critic evaluates the selected actions based on value functions and the instant rewards fed back from the environment. The input of the actor is the state of the network, and its output is the policy, which directly determines how the agent chooses the optimal action. The output of the critic is the state-value function $V(s; \theta_v)$, parameterized by $\theta_v$, which is used to calculate the temporal difference (TD) error. The TD error is then used to update both the actor and the critic.

Herein, the policy function in the actor and the value function in the critic are approximated by two sequential deep neural network models with parameter vectors $\theta_\pi$ and $\theta_v$, respectively. Both the value function parameter $\theta_v$ and the policy parameter $\theta_\pi$ are randomly initialized and continually updated by the critic and the actor, respectively, during the training process.

4.2.1. The Critic with a DNN

Figure 6 depicts the DNN of the critic, which is composed of an input layer, two hidden layers, and an output layer. The critic network is a feed-forward neural network that evaluates the action taken by the actor, and its evaluation is then used by the actor to update its control policy. The input layer of the critic takes the environment state, which contains the belief and the remaining energy of each CU. Each hidden layer is a fully connected layer that uses the rectified linear unit (ReLU) activation function [45,46]:

$$f(z) = \max(0, z), \qquad z = \sum_{i} w_i x_i,$$

where z is the output of the layer before the activation function is applied, $x_i$ is the $i$th element of the input state s, and $w_i$ is the weight of the $i$th input. The output layer of the critic's DNN contains one neuron and uses a linear activation function to estimate the state-value function $V(s; \theta_v)$, where $\theta_v$ denotes the critic parameters. In this paper, $\theta_v$ is optimized using stochastic gradient descent with back-propagation to minimize the loss function, defined as the mean squared error:

$$L(\theta_v) = \mathbb{E}\left[\delta(t)^2\right],$$

where $\delta(t)$ is the TD error between the target value and the estimated value, given by

$$\delta(t) = r(t) + \gamma V\!\left(s(t+1); \theta_v\right) - V\!\left(s(t); \theta_v\right),$$

and it is used to evaluate the action a(t) selected by the actor. If $\delta(t)$ is positive, the tendency to choose action a(t) in the same state in the future is strengthened; otherwise, it is weakened. The critic parameters are updated in the direction of the negative gradient of the loss, as follows:

$$\theta_v \leftarrow \theta_v - \alpha_c \nabla_{\theta_v} L(\theta_v),$$

where $\alpha_c$ is the learning rate of the critic.
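The critic's computations can be sketched in plain Python as below: two fully connected ReLU hidden layers, a single linear output neuron for V(s), uniform Xavier initialization, the TD error (including the terminal case of Algorithm 2), and the mean-squared-error loss. Bias terms, layer sizes, and all names are assumptions. The gradient step itself would normally be delegated to automatic differentiation in a deep learning library such as TensorFlow, which the paper reports using, so it is not reimplemented here.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])

def relu(z: float) -> float:
    return max(0.0, z)

def xavier_uniform_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Uniform Xavier/Glorot init: U(-a, a) with a = sqrt(6 / (n_in + n_out))."""
    a = (6.0 / (n_in + n_out)) ** 0.5
    weights = [[rng.uniform(-a, a) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(x: Sequence[float], layer: Layer,
          act: Optional[Callable[[float], float]] = None) -> List[float]:
    """Fully connected layer: z_j = sum_i w_ji * x_i + b_j, then the activation."""
    weights, biases = layer
    out = []
    for row, b in zip(weights, biases):
        z = sum(w * xi for w, xi in zip(row, x)) + b
        out.append(act(z) if act else z)
    return out

class Critic:
    """V(s; theta_v): input -> ReLU -> ReLU -> linear scalar output."""

    def __init__(self, n_in: int, n_hidden: int, rng: random.Random) -> None:
        self.hidden1 = xavier_uniform_layer(n_in, n_hidden, rng)
        self.hidden2 = xavier_uniform_layer(n_hidden, n_hidden, rng)
        self.output = xavier_uniform_layer(n_hidden, 1, rng)

    def value(self, state: Sequence[float]) -> float:
        h1 = dense(state, self.hidden1, relu)
        h2 = dense(h1, self.hidden2, relu)
        return dense(h2, self.output)[0]  # linear activation

def td_error(reward: float, v_s: float, v_next: float, gamma: float, terminal: bool) -> float:
    """delta = target - V(s), with target = r at episode end, else r + gamma * V(s')."""
    target = reward if terminal else reward + gamma * v_next
    return target - v_s

def mse_loss(deltas: Sequence[float]) -> float:
    return sum(d * d for d in deltas) / len(deltas)

if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical state: belief followed by the remaining energy of six CUs.
    state = [0.6, 3.0, 2.0, 5.0, 1.0, 4.0, 0.0]
    critic = Critic(n_in=len(state), n_hidden=16, rng=rng)
    v_s = critic.value(state)
    delta = td_error(reward=1.5, v_s=v_s, v_next=v_s, gamma=0.9, terminal=False)
    print(v_s, delta, mse_loss([delta]))
```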

4.2.2. The Actor with a DNN

The DNN of the actor is shown in Figure 7; it includes an input layer, two hidden layers, and an output layer. The input layer of the actor takes the current state of the environment, and each of the two hidden layers is a fully connected layer. The output layer of the actor provides the probabilities of selecting each action in a given state. To this end, the output layer uses the softmax activation function [28] to compute the policy of each action in the action space:

$$\pi(a \mid s; \theta_\pi) = \frac{\exp\left(z_a\right)}{\sum_{a' \in \mathcal{A}} \exp\left(z_{a'}\right)},$$

where $z_a$ is the estimated preference for choosing action a. In the actor, the policy is improved by optimizing the objective

$$J(\theta_\pi) = \sum_{s \in \mathcal{S}} d^{\pi}(s)\, V^{\pi}(s),$$

where $d^{\pi}(s)$ is the state distribution under policy $\pi$. The policy parameter $\theta_\pi$ is updated by gradient ascent to maximize the objective function [39], as follows:

$$\theta_\pi \leftarrow \theta_\pi + \alpha_a \nabla_{\theta_\pi} J(\theta_\pi),$$

where $\alpha_a$ denotes the learning rate of the actor network, and the policy gradient $\nabla_{\theta_\pi} J(\theta_\pi)$ can be computed using the TD error [47]:

$$\nabla_{\theta_\pi} J(\theta_\pi) \approx \nabla_{\theta_\pi} \log \pi\left(a(t) \mid s(t); \theta_\pi\right) \delta(t).$$

It is worth noting that the TD error $\delta(t)$ is supplied by the critic. The training procedure of the proposed DACRL approach is summarized in Algorithm 2, in which the agent interacts with the environment and learns to select the optimal action in each state. The convergence of the proposed algorithm depends on the number of steps per episode, the number of training episodes, and the learning rates, as discussed in the following section.
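The actor's softmax output and a single TD-driven policy-gradient step are sketched below for the simplest case, in which the preferences z_a of one state are the parameters themselves (a tabular softmax policy). In that case the gradient of log π(a|s) with respect to the preferences is onehot(a) − π, so a positive TD error raises the probability of the chosen action and a negative one lowers it. In a DNN actor the same gradient would be back-propagated through the hidden layers; that extension and all names here are assumptions, not the paper's code.

```python
import math
from typing import List, Sequence

def softmax(prefs: Sequence[float]) -> List[float]:
    """pi(a|s) = exp(z_a) / sum_a' exp(z_a'), shifted by max(z) for numerical stability."""
    m = max(prefs)
    exps = [math.exp(z - m) for z in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def actor_step(prefs: Sequence[float], action: int, td_err: float, lr_actor: float) -> List[float]:
    """Gradient ascent: z <- z + alpha_a * delta * grad log pi(action),
    where grad log pi(action) w.r.t. z is onehot(action) - pi."""
    probs = softmax(prefs)
    return [z + lr_actor * td_err * ((1.0 if k == action else 0.0) - p)
            for k, (z, p) in enumerate(zip(prefs, probs))]

if __name__ == "__main__":
    prefs = [0.0, 0.0, 0.0]
    print(softmax(prefs))
    prefs = actor_step(prefs, action=1, td_err=2.0, lr_actor=0.1)
    print(softmax(prefs))  # the probability of action 1 has increased
```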

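| Algorithm 2 The training procedure of the deep actor-critic reinforcement learning algorithm |

- 1: Input: system and learning parameters, including T and W.
- 2: Initialize the network parameters of the actor and the critic: $\theta_\pi$, $\theta_v$.
- 3: Initialize $\epsilon$.
- 4: for each episode do
- 5: Sample an initial state $s(0)$.
- 6: for each time step t do
- 7: Observe the current state $s(t)$ and estimate the state value $V(s(t); \theta_v)$.
- 8: Choose an action:
- 9: with probability $\epsilon$, select a random action $a(t) \in \mathcal{A}$;
- 10: otherwise, select $a(t) \sim \pi(\cdot \mid s(t); \theta_\pi)$.
- 11: Execute action $a(t)$ and observe the current reward $r(t)$.
- 12: Update the epsilon rate $\epsilon$.
- 13: Update the next state $s(t+1)$.
- 14: Critic process:
- 15: Estimate the next state value $V(s(t+1); \theta_v)$.
- 16: The critic calculates the TD error $\delta(t)$:
- 17: if the episode ends at time slot t then
- 18: $\delta(t) = r(t) - V(s(t); \theta_v)$
- 19: else
- 20: $\delta(t) = r(t) + \gamma V(s(t+1); \theta_v) - V(s(t); \theta_v)$
- 21: end if
- 22: Update the parameters of the critic network.
- 23: Actor process:
- 24: Update the parameters of the actor network.
- 25: end for
- 26: end for
- 27: Output: Final policy $\pi(a \mid s; \theta_\pi)$.

|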
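To show how the pieces of Algorithm 2 fit together, the following sketch runs the same loop (ε-greedy action choice, TD error with the terminal branch, critic update, then actor update) on a hypothetical single-state toy environment in which action 1 pays a reward of 1 and action 0 pays nothing. Tabular values stand in for the two DNNs, so this is a simplified one-step actor-critic rather than the DACRL implementation; the environment, hyperparameters, and names are all assumptions.

```python
import math
import random
from typing import List, Tuple

def softmax(prefs: List[float]) -> List[float]:
    m = max(prefs)
    exps = [math.exp(z - m) for z in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def toy_env_step(action: int) -> Tuple[float, int]:
    """Hypothetical environment: one state (0); action 1 earns 1, action 0 earns 0."""
    return (1.0 if action == 1 else 0.0), 0

def train(episodes: int = 20, steps: int = 100, gamma: float = 0.9,
          lr_actor: float = 0.1, lr_critic: float = 0.05,
          epsilon: float = 0.1, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    values = [0.0]         # critic: V(s) for the single state
    prefs = [[0.0, 0.0]]   # actor: softmax preferences per state
    for _ in range(episodes):
        s = 0  # initial state
        for t in range(steps):
            probs = softmax(prefs[s])
            # Choose an action (epsilon-greedy around the stochastic policy).
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = rng.choices([0, 1], weights=probs, k=1)[0]
            r, s_next = toy_env_step(a)
            # Critic: TD error, with the terminal branch at the end of the episode.
            if t == steps - 1:
                delta = r - values[s]
            else:
                delta = r + gamma * values[s_next] - values[s]
            values[s] += lr_critic * delta
            # Actor: policy-gradient step driven by the critic's TD error.
            prefs[s] = [z + lr_actor * delta * ((1.0 if k == a else 0.0) - p)
                        for k, (z, p) in enumerate(zip(prefs[s], probs))]
            s = s_next
    return softmax(prefs[0]), values[0]

if __name__ == "__main__":
    policy, value = train()
    print("learned policy:", [round(p, 3) for p in policy], "V(s):", round(value, 3))
```

Replacing the tabular `values` and `prefs` with the two DNNs, and `toy_env_step` with the CRN environment, would recover the structure of Algorithm 2.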

5. Simulation Results

In this section, we investigate the performance of uplink NOMA systems using our proposed scheme. The simulation results are compared with those of myopic schemes [48] (Myopic-UP, Myopic-Random, and Myopic-OMA) in terms of average data transmission rate and energy efficiency. In the myopic schemes, the system maximizes only the reward in the current time slot, and the system bandwidth is allocated to a group only if it has at least one active CU in the current time slot. In particular, in the Myopic-UP scheme, the CBS arranges the CUs into pairs based on Algorithm 1. In the Myopic-Random scheme, the CBS randomly groups the CUs into pairs. In the Myopic-OMA scheme, the total system bandwidth is divided equally into subchannels, one assigned to each active CU, without user pairing. In the following, we analyze the influence of the network parameters on the schemes through numerical results.

In this paper, we used Python 3.7 with the TensorFlow deep learning library to implement the DACRL algorithm. Herein, we consider a network of six CUs with different channel gains (in dB) between each CU and the CBS. Two sequential DNNs are used to model the value function and the policy function in the proposed algorithm. Each DNN is designed with an input layer, two hidden layers, and an output layer, as described in Section 4. The numbers of neurons in each hidden layer of the critic's value function DNN and of the actor's policy function DNN are set at

and

, respectively. For the training process, the value function parameter $\theta_v$ and the policy parameter $\theta_\pi$ are randomly initialized using uniform Xavier initialization [49]. The other simulation parameters of the system are shown in Table 1.

We first examine the average transmission rate of the DACRL scheme under different numbers of training iterations per episode, T, as the number of episodes, L, increases from 1 to 400. The results were obtained by averaging the transmission rate over 20 independent simulation runs, as shown in Figure 8. The curves increase sharply in the first 50 training episodes and then gradually converge to the optimal value. We can see that the agent needs more than 350 episodes to learn the optimal policy at

iterations per episode. However, as T increases, the algorithm converges faster. For instance, the proposed scheme learns the optimal policy in fewer than 300 episodes when

. Nevertheless, training may take a very long time if each episode uses too many iterations, and the algorithm may even converge to a locally optimal policy. Hence, the number of training iterations per episode and the number of training episodes should be neither too large nor too small. In the rest of the simulations, we set the number of training episodes to 300 and the number of training iterations per episode to 2000.

Figure 9 shows the convergence rate of the proposed scheme according to various values of actor learning rate

and critic learning rate

. The figure shows that the reward converges faster as the learning rates increase. In addition, we can observe that the proposed scheme with actor learning rate

and critic learning rate

provides the best performance after 300 episodes. When the learning rates of the actor and the critic increase to

and

, respectively, the algorithm converges very fast but to a poor reward because of underfitting. Therefore, we set the actor and critic learning rates at

and

, respectively, for the rest of the simulations.

Figure 10 illustrates the average transmission rate as a function of the mean harvested energy. The average transmission rate of the system increases as the mean value of the harvested energy grows, because the CUs can harvest more solar energy and thus have more opportunities to transmit data to the CBS. In addition, the proposed scheme achieves a higher average transmission rate than the conventional schemes, because the conventional schemes focus on maximizing the current reward and ignore the impact of the current decision on future rewards. Whenever the primary channel is free, these conventional schemes allow all CUs to transmit their data, consuming most of the energy in their batteries to maximize the instant reward, which forces the CUs to stay silent later because of energy shortages. Although the Myopic-Random scheme performed worse than the Myopic-UP scheme, it still achieved higher rewards than Myopic-OMA. This outcome demonstrates the advantage of the hybrid NOMA/OMA approach over the OMA approach in terms of average transmission rate.

In Figure 11, the energy efficiency of the schemes is compared with respect to the mean value of the harvested energy. In this paper, we define energy efficiency as the data transmission rate obtained at the CBS divided by the total energy consumed by the CUs during their operations. We can see that the energy efficiency declines as the mean harvested energy rises. The reason is that when the harvested energy increases, the CUs can gather more energy for their operations; however, the amount of energy overflowing the CUs' batteries also increases. The curves show that the proposed scheme outperforms the conventional schemes because the DACRL agent learns the dynamics of the harvested energy arrival from the environment and can thus make proper decisions in each time slot.

In Figure 12 and Figure 13, we plot the average transmission rate and the energy efficiency, respectively, for different noise variances at the CBS. The curves show that system performance degrades notably as the noise variance increases. This is because a larger noise variance lowers the signal-to-interference-plus-noise ratio and hence the data transmission rate, as shown in Equation (3). As a consequence, the energy efficiency also decreases as the noise variance increases. For all noise variances considered, the figures verify that the proposed scheme outperforms the myopic schemes.