1. Introduction

With the rapid development of the Internet, the cost of network access for ordinary users keeps decreasing, and the Internet has entered an era in which nearly everyone is a netizen. The Internet has become the most important way for people to obtain and publish information, and social networks are the most popular websites for this purpose. However, in recent years, users' privacy literacy has been insufficient to support their privacy protection behaviour [1], and an increasing number of privacy disclosure events have occurred. In March 2018, it was revealed that Facebook had provided the data of 50 million users to Cambridge Analytica, which was used to influence the American election; in November of the same year, because its API (application programming interface) was open to third-party applications, the mobile phone photo information of 68 million users was leaked. In 2017, Twitter announced that it was abandoning its DNT (do not track) privacy protection policy. In May 2016, Tumblr disclosed the email accounts and passwords of 65 million users. In addition, a hacker organisation called “peace” sold the login information of 167 million LinkedIn users on the black market for five bitcoins (approximately $2200). On 19 October 2016, the NetEase user database was leaked, exposing the 163 and 126 mailbox information of more than 100 million users, including usernames, passwords, password security information, login IPs, and birthdays. These privacy leakages may lead to a series of malicious acts [2,3,4,5], including but not limited to tracking, defamation, spam, phishing, identity theft, personal data cloning, and Sybil attacks. However, academia has largely focused on large-scale industrial networks, such as the Internet of Things, smart grids, and cloud storage networks, and has neglected the privacy protection of individual users. The reason is that privacy leakage in large-scale networks causes substantial, visible economic losses, whereas the impact of personal information leakage is scattered and small. Moreover, using a social network is essentially a process of sharing public information, so applying privacy protection methods designed for traditional industrial networks would damage the user experience.

The existing privacy protection methods in social networks include anonymisation, decentralisation, encryption, information security regulations, fine-grained privacy settings and access control, and improving users' privacy awareness and privacy behaviour [6]. The first four methods rely on platform operators, which has proven to be unreliable. With the rapid development of attribute inference, link inference, personal identification, and community discovery, anonymisation is becoming less and less effective. As long as users use social networks, they are disclosing information, so encryption adapts poorly to this setting. Fine-grained privacy settings and access control rely on users' own privacy literacy, but according to prior research, fewer than one-third of users have changed these settings, and most of the settings they choose are not reasonable. Therefore, the most fundamental solution is to help users cultivate their privacy awareness and strengthen their privacy protection behaviour. Privacy metrics are the best way to achieve this goal. These metrics quantify the many ways in which users may disclose private information on social networks and turn the abstract concept of privacy into concrete values, so that users can intuitively understand their privacy status. If users are not satisfied with their current privacy status, they can keep adjusting it according to their privacy score. In the process, they strengthen their privacy awareness and cultivate privacy protection habits.

Traditional privacy metrics basically use mathematical calculations to quantify all the aspects that affect users' privacy disclosure, including but not limited to attribute information, network environment information, trust between users, and the content of published information. However, these approaches face two problems.

First, they are inefficient: most of them first extract features, then measure each feature separately, and finally integrate the measurements into a single value. In addition, the calculation methods themselves are open to doubt. Privacy is an abstract concept with no unifying principle, so any calculation can be regarded as subjective and unconvincing.

Second, these approaches rely too heavily on manual feature extraction, which has long been a difficulty in privacy metrics research. Which features can be used for privacy measurement? Which features are more important? What associations exist between these features? These questions urgently need answers but remain unresolved.

Meanwhile, when the network environment of a user is considered [7], there may be tens of millions of links around that user. Previous methods obtained the privacy score of only one user after analysing the whole network environment, which is inefficient and wastes resources.

To address these problems, we draw inspiration from deep neural networks, which are currently a popular research topic. A deep neural network can effectively extract hidden relationships between features without human intervention, and many studies have shown that such hidden relationships strongly affect the final results. Among the many neural network models, graph convolutional networks (GCNs) [8] can extract features from graph structures and combine them with node features, which makes them suitable for social network scenarios. However, deep learning frameworks require a large amount of labelled data for training. Most people cannot accurately quantify privacy, which is an abstract concept, so data annotation is very difficult. Therefore, we label a small number of samples with the help of experts and use few-shot learning to solve this problem.

The goal of this paper is to use GCNs to build a framework that measures the privacy of online social network users. Compared with traditional methods, this framework has the following advantages. First, it minimises the interference of human subjectivity: humans only select the features and do not participate in the calculation and measurement process. Second, because sample labelling is difficult in privacy measurement, our method needs only a small number of labelled samples. Finally, our method obtains the privacy scores of all the users in the whole network at the same time.

2. Related Work

Risk assessment in industrial networks, which is similar to personal privacy metrics, has long been developing rapidly, whereas privacy metrics have developed slowly. With the increase in personal privacy disclosure events in recent years, some researchers have begun to focus on personal privacy.

In the early stages of privacy metrics, researchers measured a single aspect of privacy leakage. Dee et al. studied the harm caused by the leakage of users' age information on social networks [9]. Srivastava et al. conducted an in-depth study of the privacy leakage caused by the textual information that users publish [10]. Liang et al. found that pictures deleted from various social networks could still be accessed for a certain period of time, and that such deletion delays could cause unnecessary privacy disclosure [11].

As research has developed, an increasing number of factors have been considered in privacy metrics. Maximilien et al. measured the sensitivity and visibility of multiple attributes in user profiles and then calculated a unified score through Bayesian statistics [12]. Liu et al. proposed an IRT (item response theory) model that integrates the sensitivity and visibility of attributes and calculates the privacy score more reasonably [13]. Aghasian et al. combined attribute sensitivity and visibility and used a statistical fuzzy system to calculate a privacy score across multiple social network platforms [14]. Fang et al. used the above methods to provide users with a guiding template for the privacy settings of their profiles [1].
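The simplest additive reading of the sensitivity-and-visibility idea is that each profile attribute contributes its sensitivity, weighted by how visible it is. The sketch below illustrates only that intuition, as an assumption. It is not the Bayesian model of [12], the IRT model of [13], or the fuzzy system of [14], and the attribute names and values are hypothetical.

```python
def additive_privacy_score(sensitivity: dict[str, float],
                           visibility: dict[str, float]) -> float:
    """Hypothetical additive score: sum of sensitivity x visibility over attributes.

    sensitivity: attribute -> how sensitive it is (higher = more sensitive)
    visibility:  attribute -> fraction of the audience that can see it (0..1);
                 attributes missing from `visibility` are treated as hidden.
    """
    return sum(s * visibility.get(attr, 0.0) for attr, s in sensitivity.items())


# Hypothetical example values, not taken from any cited study.
sensitivity = {"birthday": 0.6, "phone": 0.9, "school": 0.3}
visibility = {"birthday": 1.0, "phone": 0.0, "school": 1.0}
score = additive_privacy_score(sensitivity, visibility)
print(round(score, 2))  # 0.9
```

A higher score means that more sensitive content is more widely visible. The cited models estimate these quantities far more carefully; this sketch only fixes the intuition.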

With continued in-depth study of social networks, their strong interactivity has attracted much attention. In addition, attribute inference, link inference, identity linkage, and other research topics have developed greatly, which means researchers must extend the privacy problem to the whole network environment. The more friends around a user who pay attention to the user's life, the greater the risk of privacy leakage the user faces [15]. Pensa et al. used community discovery to group users, measured the privacy in each group, and helped users make reasonable changes to their privacy settings through online learning. Zeng et al. argued that a single user's privacy disclosure depends on the user's trust in the surrounding friends and proposed a trust-aware framework to evaluate a user's privacy leakage [16]. Alsakal et al. [17] introduced information metrics for users in a whole social network based on information entropy theory. They also discussed how individual identifying information, and combinations of different pieces of information, affect user information disclosure.
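The exact metrics of Alsakal et al. are not given here. The following sketch illustrates only the information-theoretic intuition, under our own assumption: the information revealed by a disclosed attribute value is its self-information, -log2(p), where p is the fraction of users who share that value. Combining attributes shrinks p and therefore reveals more bits. The toy population is hypothetical.

```python
import math
from collections import Counter


def self_information(records: list[dict[str, str]], attrs: list[str],
                     target: dict[str, str]) -> float:
    """Bits revealed by disclosing target's values for `attrs`: -log2(p),
    where p is the fraction of records sharing exactly those values."""
    key = tuple(target[a] for a in attrs)
    counts = Counter(tuple(r[a] for a in attrs) for r in records)
    if counts[key] == 0:
        raise ValueError("target's attribute combination does not occur in records")
    return -math.log2(counts[key] / len(records))


# Hypothetical population of eight users: 2 genders x 4 cities.
records = [{"gender": g, "city": c} for g in ("F", "M") for c in ("A", "B", "C", "D")]
target = {"gender": "F", "city": "A"}
print(self_information(records, ["gender"], target))          # 1.0
print(self_information(records, ["city"], target))            # 2.0
print(self_information(records, ["gender", "city"], target))  # 3.0
```

Disclosing gender alone narrows the user down to half of the population (1 bit). Disclosing gender and city together identifies one user in eight (3 bits), which is the combination effect the paragraph describes.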

With the development and influence of interdisciplinary subjects such as social science in recent years, researchers have analysed the graph structure of social networks and found that it is highly consistent with the small-world and homophily principles, which has triggered a series of studies. Serfontein et al. used self-organising networks to study security threats to users in a network graph [18]. Djoudi et al. proposed an infectious communication framework based on graph structure analysis. They discussed the impact of high-trust and low-trust users in social networks and proposed a method for calculating a trust index [19]. Social network graphs contain a large number of users and are computationally expensive to analyse. Some researchers have therefore shifted their research objective from users to social networks and performed privacy metrics on entire social networking sites, but such measurements have little significance for individual users. Oukemeni et al. conducted detailed research on the access control of social networking sites and the various settings available to users and then measured the privacy leakage of these sites in different situations [6]. Yu et al. analysed possible sources of privacy leakage in social networking sites. They then constructed a framework based on user groups, information control and posts, information deletion and reply, and other aspects to measure the privacy scores of multiple social networking sites [20]. De Salve et al. proposed a Privacy Policies Recommended System (PPRS) that assists users in defining their own privacy policies; the system exploits a set of user properties (or attributes) to define permissions on shared content [21].

In recent years, however, the application of graph neural networks (GNNs) in other fields has moved far ahead of their application to privacy protection, and these applications often pose privacy threats. In [22], the authors apply GNNs to social recommendation in heterogeneous networks, but the same approach can also infer some characteristics of target users from the recommended users. In [23], GNNs were used to link the identities of users across multiple social networks. Although GNNs can provide more services for users, they also facilitate malicious identity inference. Mei et al. [24] proposed a framework that uses a convolutional neural network (CNN) to carry out inference attacks on image and attribute information, and their experiments showed that it can effectively and accurately infer attribute information that users do not disclose. At the same time, Li et al. [25] proposed a method that uses a CNN to infer missing information in social network personal data. The method performs very well, but the missing information may have been deliberately hidden by users. We hope to use neural networks in the same way to help users improve their privacy awareness, cultivate their privacy behaviour, and gain the ability to counter these methods that may cause privacy disclosure.

To date, privacy metrics for social networks have remained at the stage of using basic mathematical calculations to quantify multiple aspects of privacy, ignoring the hidden relationships between these aspects. Meanwhile, these methods are inefficient: in most studies, the whole network in which a user is located is analysed and quantified, but only the privacy score of the target user is obtained, which wastes resources. Related fields have entered the era of artificial intelligence, but the application of artificial intelligence to privacy measurement has developed slowly.

To address the above problems, we introduce a deep learning model, the GCN, to obtain users' privacy metrics on social networks. Graph convolutional networks (GCNs) [8] are an efficient variant of convolutional neural networks (CNNs) on graphs. A GCN stacks layers of learned first-order spectral filters, each followed by a nonlinear activation function, to learn graph representations. Recently, GCNs and their subsequent variants have achieved state-of-the-art results in various application fields, including but not limited to citation networks [8], social networks [26], applied chemistry [27], natural language processing [28,29,30], and computer vision [31,32].
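The following NumPy sketch shows what "stacking first-order filters followed by a nonlinearity" means in practice. It is a two-layer forward pass in the standard renormalised form softmax(Â · ReLU(Â X W0) · W1), where Â = D^-1/2 (A + I) D^-1/2. The weights are random stand-ins for trained parameters, and the toy graph and the 7 output classes (chosen to match the 1 to 7 privacy scores used later in this paper) are assumptions for illustration. No training loop is shown.

```python
import numpy as np


def renormalise(adj: np.ndarray) -> np.ndarray:
    """Renormalised adjacency D^-1/2 (A + I) D^-1/2, with D the degree matrix of A + I."""
    a_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    return d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def gcn_forward(adj, x, w0, w1):
    """Two-layer GCN: softmax(A_hat @ ReLU(A_hat @ X @ W0) @ W1)."""
    a_hat = renormalise(adj)
    hidden = np.maximum(a_hat @ x @ w0, 0.0)  # first-order filter + ReLU
    return softmax(a_hat @ hidden @ w1)       # per-node class probabilities


rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)  # 3-node path graph
x = rng.normal(size=(3, 4))    # 4 input features per node
w0 = rng.normal(size=(4, 8))   # untrained stand-in weights
w1 = rng.normal(size=(8, 7))   # 7 output classes
probs = gcn_forward(adj, x, w0, w1)
print(probs.shape)  # (3, 7)
```

Each row of `probs` is a probability distribution over the classes for one node. Because every layer mixes a node's features with those of its neighbours, two layers let each prediction depend on the two-hop neighbourhood.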

Few-shot learning is a method to learn a unique set of new classes by using a few labelled data in a set of base classes without overfitting. A group of mature applications have emerged: Liu et al. [

33] follow a semi-supervised learning setup but use graph-based label propagation (LP) [

34] for classification and jointly use all test images. Douze et al. [

35] extended this method to a wider range by using a large number of unlabelled images in the set of images for learning without using additional text information. In the research of Iscen et al. [

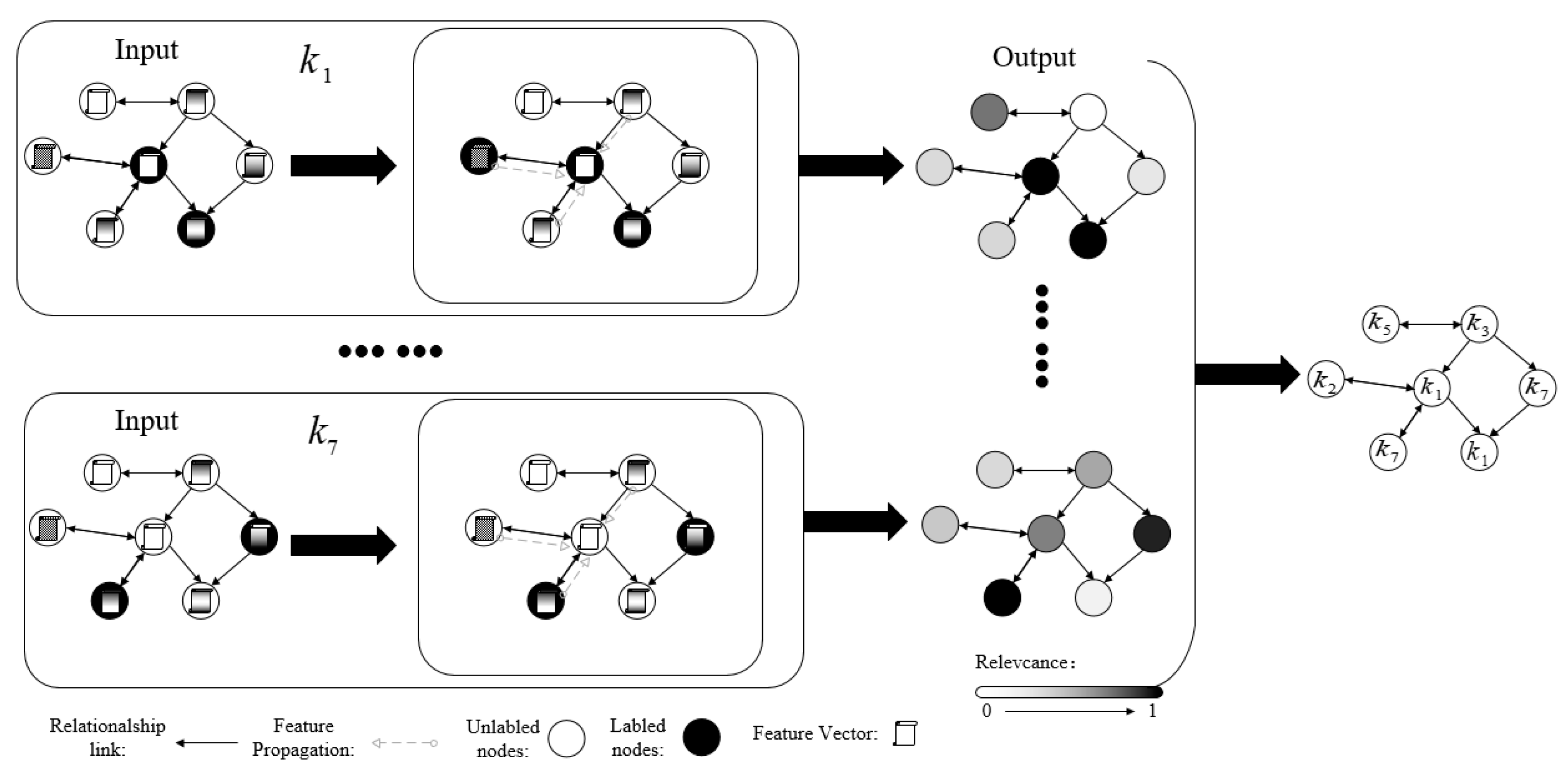

36], a method of using GCNs and few-shot learning to identify a new class of image is proposed, which inspires us. In the field of privacy security, labelled data are scarce, and experts with relevant specialties are required to finish this work, which greatly limits research in deep learning. Therefore, we introduce few-shot learning to solve this problem.
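Graph-based label propagation carries a few known labels through a similarity graph to the unlabelled nodes. The sketch below shows one common closed-form variant, label spreading, F = (I - αS)^-1 Y with S = D^-1/2 W D^-1/2. Whether [33] or [36] use exactly this form is not stated here, so treat the variant, α = 0.5, and the toy chain graph as assumptions. The sketch also assumes that every node has at least one edge, so that no degree is zero.

```python
import numpy as np


def label_spreading(w: np.ndarray, y: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Closed-form label spreading: argmax of (I - alpha*S)^-1 Y.

    w: symmetric non-negative affinity matrix (every node needs degree > 0)
    y: one-hot rows for labelled nodes, all-zero rows for unlabelled nodes
    """
    d_inv_sqrt = 1.0 / np.sqrt(w.sum(axis=1))
    s = d_inv_sqrt[:, None] * w * d_inv_sqrt[None, :]
    f = np.linalg.solve(np.eye(w.shape[0]) - alpha * s, y)
    return f.argmax(axis=1)


# Hypothetical chain 0-1-2-3: node 0 labelled class 0, node 3 labelled class 1.
w = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
y = np.zeros((4, 2))
y[0, 0] = 1.0
y[3, 1] = 1.0
print(label_spreading(w, y))  # [0 0 1 1]
```

Each unlabelled node takes the label of the labelled node it is closer to in the graph. This is why a handful of expert-labelled samples can, in principle, score a whole network.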

In this paper, our contributions are as follows:

- (1) We combine a user's attribute information, behaviour characteristics, friend relationships, and graph structure information to obtain the user's comprehensive privacy score.

- (2) We introduce a deep learning framework into the field of privacy measurement. It addresses the shortcoming of previous studies, which can only calculate the privacy metrics of a single user at a time; it extracts the hidden relationships between different features; and it measures the privacy of all users in the whole network accurately and efficiently.

- (3) We introduce few-shot learning and SGCs (simplifying graph convolutional networks), which alleviate the scarcity of labelled data in the security field and the long training times of deep learning.

- (4) We crawl real datasets from social networks, perform statistical analysis, extract features, and experimentally demonstrate our model.
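The idea behind SGC is to remove the nonlinearities between GCN layers, so that K propagation steps collapse into a single precomputed matrix S^K X. A plain linear softmax classifier is then trained on top, which is what cuts the training time. The sketch below illustrates that idea with a hand-written softmax regression. K = 2, the learning rate, the epoch count, and the toy graph are assumptions for illustration, not this paper's configuration.

```python
import numpy as np


def sgc_features(adj: np.ndarray, x: np.ndarray, k: int = 2) -> np.ndarray:
    """Precompute S^k X with S = D^-1/2 (A + I) D^-1/2 (no nonlinearity, as in SGC)."""
    a_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    s = d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]
    for _ in range(k):
        x = s @ x
    return x


def train_softmax_regression(x, y, n_classes, lr=0.5, epochs=200):
    """Multinomial logistic regression trained by full-batch gradient descent."""
    w = np.zeros((x.shape[1], n_classes))
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        logits = x @ w
        logits -= logits.max(axis=1, keepdims=True)
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)
        w -= lr * x.T @ (p - onehot) / len(y)  # cross-entropy gradient step
    return w


# Hypothetical toy graph: two disconnected triangles, one labelled node in each.
block = np.ones((3, 3)) - np.eye(3)
adj = np.block([[block, np.zeros((3, 3))], [np.zeros((3, 3)), block]])
x = np.array([[1.0, 0.0], [0.8, 0.2], [0.9, 0.1],
              [0.0, 1.0], [0.2, 0.8], [0.1, 0.9]])
feats = sgc_features(adj, x, k=2)
labelled, labels = np.array([0, 3]), np.array([0, 1])
w = train_softmax_regression(feats[labelled], labels, n_classes=2)
print((feats @ w).argmax(axis=1))  # [0 0 0 1 1 1]
```

Propagation is computed once, outside the training loop. Only a small linear model is then fitted to the labelled nodes, and the smoothed features carry each label to the node's unlabelled neighbours.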

3. Datasets and Notation

3.1. Datasets

To use a GCN, we need to collect users' relationship graphs and features to build sample datasets. In addition, because we use few-shot learning, we score a small number of samples in each category under the guidance of privacy security experts; these scores measure and grade the privacy status of users based on their characteristics. We then label the samples with these privacy scores. In this section, we describe in detail the datasets we collected and the features we extracted, which provide the basis for the subsequent method design and validation.

Because research on privacy measurement in social networks, and on datasets involving user privacy, lags behind, few public datasets exist. The datasets used in existing privacy metrics research are mainly borrowed from related fields, such as data mining, sentiment analysis, recommendation systems, link inference, community discovery, and attribute inference. However, these datasets were collected for their specific areas and do not contain all the content we need. Even social network datasets are incomplete, containing either only user information and graph information or only published text. For example, BlogCatalog is a social network dataset whose graph consists of bloggers and their social relations (such as friendships), with labels indicating the bloggers' interests. Reddit consists of posts from the Reddit forum. Epinions is a multigraph dataset collected from an online product review website. It contains the attitudes (trust/distrust) of reviewers towards other reviewers and the reviewers' ratings of products. In our study, we combine a user's profile information, the relationship information in the user's network environment, the user's behaviour characteristics, and other extracted features. No public dataset contains all of this information; therefore, we built our own dataset.

Our data were collected from Sina Weibo, China's largest online social network, with 486 million monthly active users and 211 million daily active users. On Sina Weibo, a user's friend relationships, profile, and published content are all public. We surveyed nearly 500 students, teachers, and employees at our school, approximately one-third of whom are Sina Weibo users. In a further follow-up survey, we selected 16 users with diverse characteristics as seeds for crawling the dataset. The selection criteria were: (1) these users are active users of Sina Weibo; and (2) these users have as many friend relationships as possible with other seed users. These requirements ensure that the final dataset conforms to the small-world and homophily principles. They also allow us to extract enough behaviour characteristics that relate users to one another. If users were randomly selected from the social network, the resulting structure graphs might be disjoint.

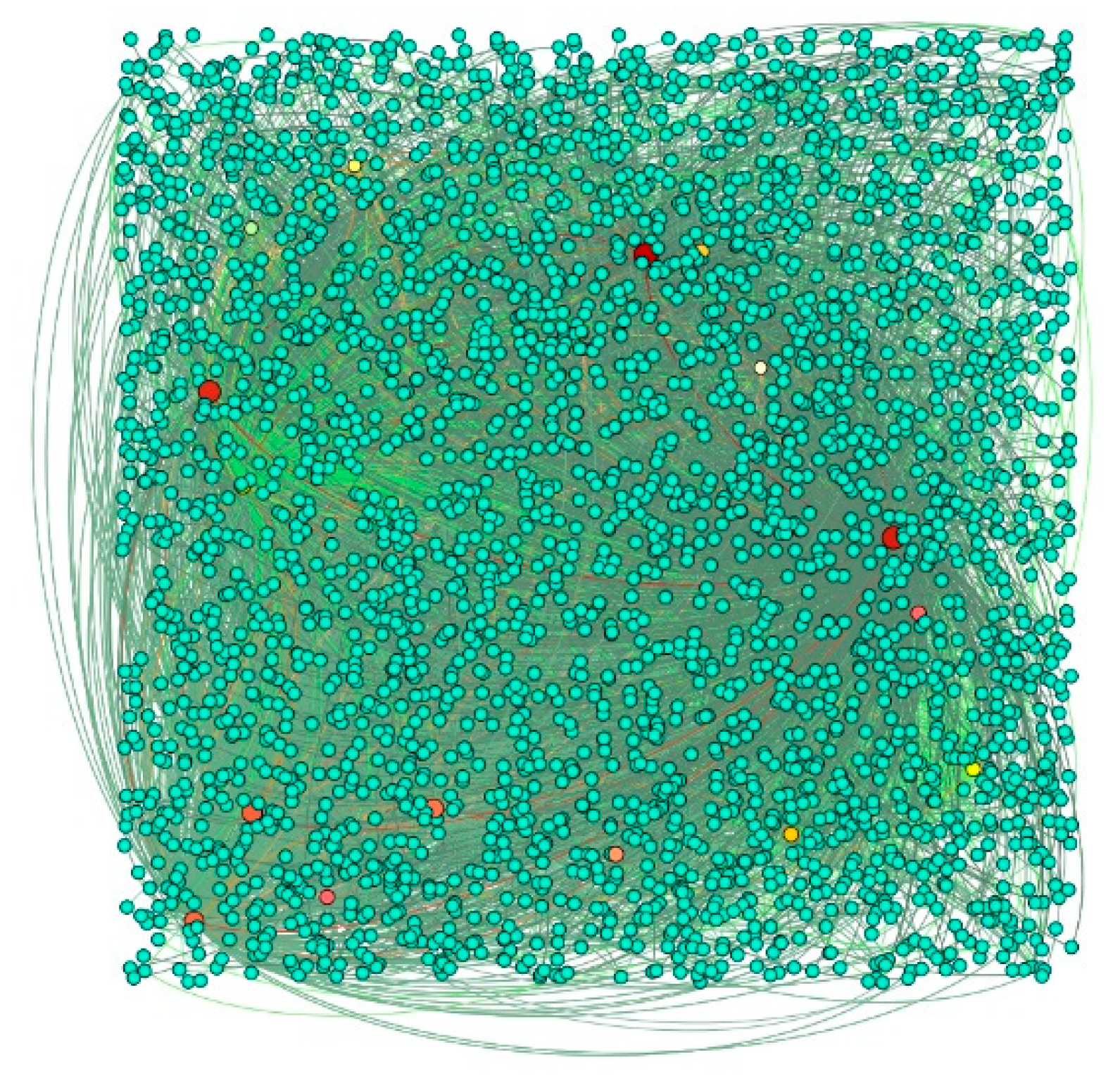

We use the crawled Sina Weibo user data to build two datasets and extract the features we need. The first dataset contains these 16 users and all their friends, including both Follows (the accounts they follow) and Followers. The second dataset contains the friends of these 16 users and the friends of their friends. Specific dataset information and the selected features are shown in Table 1 and Table 2. The node structure of Dataset 1 is shown in Figure 1.
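The two node sets described above amount to a one-hop and a two-hop expansion around the seed users. The sketch below builds them from a list of (follower, followee) edges. Treating friendship as undirected (both Follows and Followers count as friends), the edge format, and the toy IDs are assumptions for illustration. The sketch does not include the crawler itself or the follower cap for very large accounts.

```python
from collections import defaultdict


def build_datasets(follow_edges: list[tuple[str, str]],
                   seeds: set[str]) -> tuple[set[str], set[str]]:
    """Return (dataset1, dataset2) node sets from (follower, followee) edges.

    Assumption: a user's friends are both the accounts they follow and their
    followers. dataset1 = seeds + their friends;
    dataset2 = the seeds' friends + the friends of those friends.
    """
    friends_of = defaultdict(set)
    for follower, followee in follow_edges:
        friends_of[follower].add(followee)
        friends_of[followee].add(follower)
    friends = set().union(*(friends_of[s] for s in seeds))
    dataset1 = seeds | friends
    dataset2 = friends | set().union(*(friends_of[f] for f in friends))
    return dataset1, dataset2


# Hypothetical toy follow graph with two seed users.
edges = [("s1", "a"), ("b", "s2"), ("a", "c"), ("c", "d")]
d1, d2 = build_datasets(edges, {"s1", "s2"})
print(sorted(d1))  # ['a', 'b', 's1', 's2']
print(sorted(d2))  # ['a', 'b', 'c', 's1', 's2']
```

User `d` is three hops from the nearest seed, so it appears in neither set. Choosing seeds that are friends with one another, as in the criteria above, keeps these expansions overlapping rather than disjoint.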

It should be noted that, when crawling the dataset, we treated users with large numbers of followers differently. Most of these users are public figures and official institutions, and studying them would have little relevance to the privacy disclosure of ordinary users. Without this treatment, the followers of a single public figure's account alone could number in the hundreds of millions. Therefore, we crawled only some of the followers of these users, but their features are still calculated from the actual number of followers.

The extraction difficulty feature in Table 2 is the average extraction difficulty of the nine attributes in Table 3. The extraction difficulty of an attribute is set to 0 or 1: an attribute that can be directly obtained from the profile has a difficulty of 1; otherwise, it is 0. Sensitivity is the sum of the sensitivities of the user's exposed attributes, and the sensitivity of each attribute is shown in Table 3. Account usage time is the number of months from the registration time, which can be obtained directly from the profile, to the time when we crawled the data. The time of last Weibo is the number of months from the user's most recently published Weibo post to the time when we crawled the data. The numbers of likes, comments, reposts, @-mentions, topics involved, and Weibo posts are obtained from the Weibo content published by users. The numbers of pictures and videos posted can be obtained from the social networking site.
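The profile-derived features above can be computed as follows. This is a minimal sketch that follows the definitions in the text: the mean 0/1 extraction difficulty over nine attributes, a sum of sensitivities, and month counts to the crawl date. The nine attribute names and their sensitivity weights are hypothetical placeholders, since the real values are in Table 3. The sketch also assumes that "exposed" attributes are exactly those visible in the profile, and it counts calendar months while ignoring the day of the month.

```python
from datetime import date

# Hypothetical nine attributes and sensitivity weights (the real ones are in Table 3).
SENSITIVITY = {
    "gender": 0.2, "birthday": 0.6, "location": 0.5,
    "school": 0.4, "company": 0.5, "email": 0.7,
    "qq": 0.7, "blood_type": 0.3, "relationship_status": 0.6,
}


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (days of the month are ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def profile_features(in_profile: set[str], registered: date,
                     last_weibo: date, crawled: date) -> dict[str, float]:
    # Per the text's convention, an attribute read directly from the profile has
    # extraction difficulty 1, otherwise 0; the feature is the mean over nine attributes.
    difficulty = sum(1 for a in SENSITIVITY if a in in_profile) / len(SENSITIVITY)
    # Assumption: "exposed" attributes are those visible in the profile.
    sensitivity = sum(SENSITIVITY[a] for a in in_profile if a in SENSITIVITY)
    return {
        "extraction_difficulty": difficulty,
        "sensitivity": sensitivity,
        "account_usage_months": months_between(registered, crawled),
        "months_since_last_weibo": months_between(last_weibo, crawled),
    }


feats = profile_features({"gender", "birthday", "school"},
                         registered=date(2015, 3, 10),
                         last_weibo=date(2020, 11, 20),
                         crawled=date(2021, 1, 5))
print(feats["account_usage_months"], feats["months_since_last_weibo"])  # 70 2
```

The behaviour counts (likes, comments, reposts, @-mentions, topics, posts, pictures, and videos) are plain tallies over a user's crawled posts, so they are omitted here.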

The sensitivity of the attributes is obtained through statistical analysis of an online questionnaire (https://www.wjx.cn/report/1647730.aspx). The answer options describe how anxious users would feel after disclosing each attribute. Respondents chose an option according to their own views, and we then objectively counted the responses to determine the privacy sensitivity of each attribute. We designed five options: L1, very worried; L2, worried; L3, not clear; L4, not worried; and L5, not worried at all. The final result for each option is the percentage of respondents who selected that option. We compute statistics on the questionnaire data, take L3 as the benchmark, and use adjustment parameters and formulas to calculate the sensitivity of each attribute. The larger the value, the more sensitive the attribute and the more worried users are about disclosing its content. The final results are shown in Table 3.
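The text does not spell out the adjustment parameters or the formula. The following sketch is therefore a hypothetical illustration of how a score benchmarked on L3 could work, not the formula behind Table 3. Each option gets a weight centred on L3 (+2, +1, 0, -1, -2 for L1 to L5, our assumption), the option shares are combined into a weighted sum, and the sum is rescaled to [0, 1]. The example percentages are also hypothetical.

```python
# Hypothetical weights centred on L3 ("not clear"), which contributes 0.
WEIGHTS = {"L1": 2.0, "L2": 1.0, "L3": 0.0, "L4": -1.0, "L5": -2.0}


def attribute_sensitivity(percentages: dict[str, float]) -> float:
    """Map option percentages (summing to 100) to a sensitivity in [0, 1].

    The raw score sum(weight * share) lies in [-2, 2]; (raw + 2) / 4 rescales it,
    so 0.5 means that answers balance around the L3 benchmark.
    """
    total = sum(percentages.values())
    if abs(total - 100.0) > 1e-6:
        raise ValueError(f"percentages should sum to 100, got {total}")
    raw = sum(WEIGHTS[opt] * pct / 100.0 for opt, pct in percentages.items())
    return (raw + 2.0) / 4.0


# Hypothetical questionnaire result for one attribute.
result = {"L1": 40, "L2": 30, "L3": 10, "L4": 15, "L5": 5}
print(round(attribute_sensitivity(result), 4))  # 0.7125
```

Any monotone weighting preserves the property stated in the text: the more respondents lean towards "worried", the larger the sensitivity value.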

3.2. Problem Description and Notation

In social networks, privacy metrics evaluate various factors that may cause privacy disclosure and finally obtain a number that can map the user’s privacy status, which represents the degree of the user’s privacy disclosure. In this paper, we aim to integrate the attribute information, friend relationships, and behaviour characteristics of users on social networks through the introduction of GCNs to obtain the degree of privacy exposure and achieve the purpose of privacy metrics. In addition, our method can reduce subjective intervention and the difficulty of labelling samples.

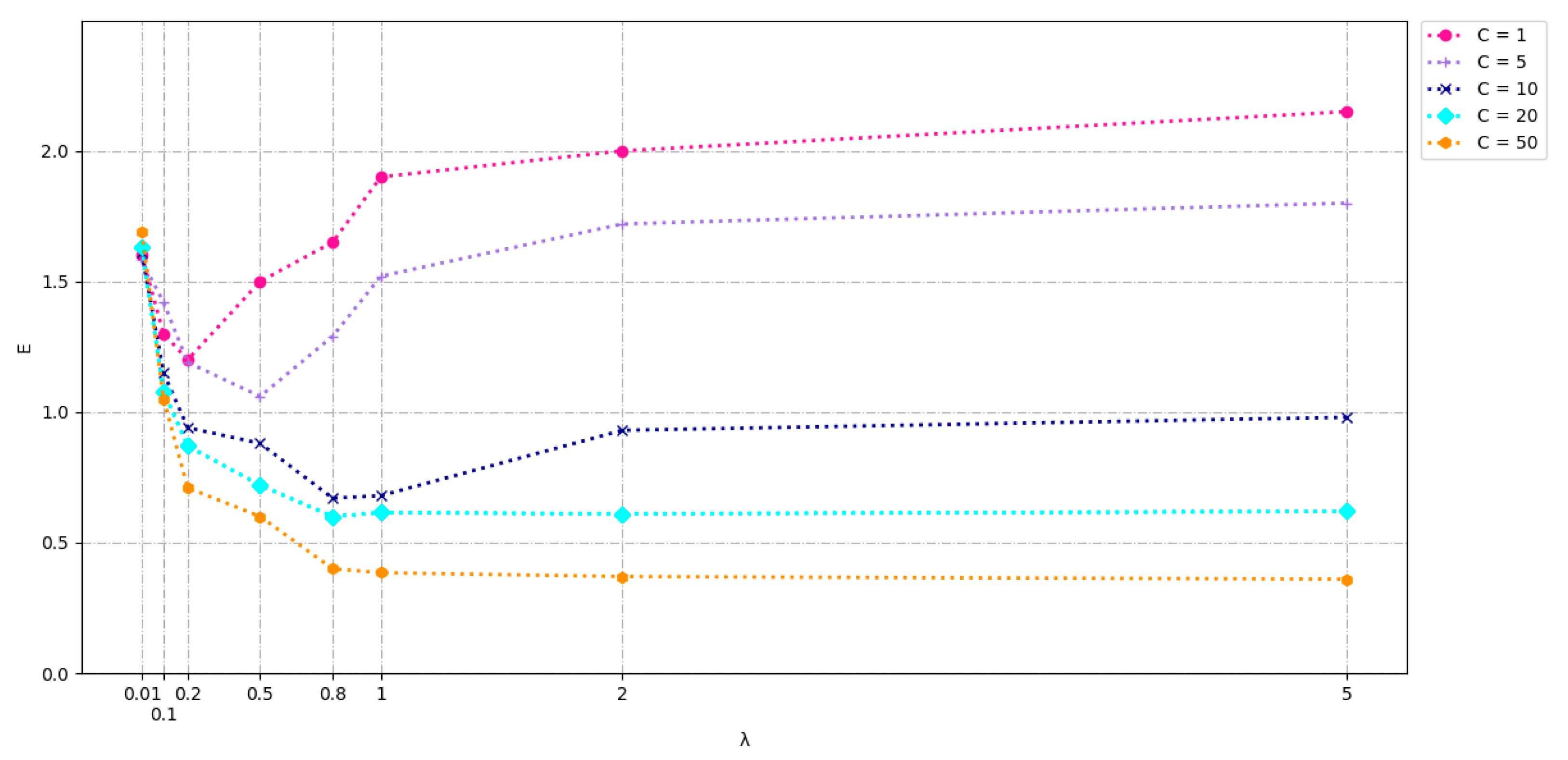

We define the sample space as $S = \{s_1, s_2, \dots, s_n\}$. The privacy scores are defined as the integers from 1 to 7; that is, the ultimate goal is to classify the unlabelled samples into the categories $C = \{1, 2, \dots, 7\}$. The privacy score of a sample is the number of the category into which it is finally classified. In each category $c \in C$, there are only a few labelled samples $S_c^{L}$, together with a large number of unlabelled samples $S^{U}$. The feature set of the samples is $F = \{f_1, f_2, \dots, f_d\}$, and finally, all the samples can be expressed as a matrix $X \in \mathbb{R}^{n \times d}$.
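In code, the few-shot setting reduces to a mask that marks a handful of expert-scored samples per category as labelled and leaves everything else unlabelled. The sketch below is a minimal illustration. The encoding (0 means "not scored by an expert"), the per-category budget `k`, and the random selection are assumptions, not the paper's procedure.

```python
import numpy as np

N_CLASSES = 7  # privacy scores 1..7


def few_shot_split(expert_scores: np.ndarray, k: int, seed: int = 0):
    """Pick at most k expert-scored samples per category as the labelled set.

    expert_scores[i] is the expert's score (1..7) for sample i, or 0 if unscored.
    Returns a boolean mask of labelled samples and 0-based targets (-1 = unlabelled).
    """
    rng = np.random.default_rng(seed)
    labelled = np.zeros(expert_scores.shape[0], dtype=bool)
    for c in range(1, N_CLASSES + 1):
        candidates = np.flatnonzero(expert_scores == c)
        if candidates.size == 0:
            continue  # no expert-scored sample in this category
        chosen = rng.choice(candidates, size=min(k, candidates.size), replace=False)
        labelled[chosen] = True
    targets = np.where(labelled, expert_scores - 1, -1)
    return labelled, targets


scores = np.array([1, 1, 1, 2, 2, 0, 0, 7])
mask, targets = few_shot_split(scores, k=2)
print(mask.sum())  # 5
```

The feature matrix X and the mask are then passed together to the graph model. The loss is computed only on rows where the mask is true, while every row still takes part in propagation.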

To incorporate the graph structure information, we establish the adjacency matrix $A \in \{0, 1\}^{n \times n}$ of the $n$ nodes, where $A_{ij} = 1$ indicates that the link of node $i$ points to node $j$; in a social network, it indicates that user $i$ follows user $j$. It is worth noting that the adjacency matrix we establish is asymmetric: $A_{ij}$ is not necessarily equal to $A_{ji}$, which means that the relationships between nodes are directed. Since the diagonal elements of $A$ are all 0, a node's own features would be excluded from the aggregation. To retain them, and to keep feature scales stable for back-propagation when training the deep neural network, we add self-loops and normalise the adjacency matrix to obtain $\hat{A} = D^{-1/2}(A + I)D^{-1/2}$, where $D = \mathrm{diag}((A + I)\mathbf{1})$ is the degree matrix of $A + I$ and $\mathbf{1}$ is a vector whose elements are all 1.
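For a directed follow graph, the normalisation is easiest to check on a tiny example. The sketch below assumes the symmetric form D^-1/2 (A + I) D^-1/2 with D = diag((A + I) 1), as commonly used in GCNs; the extracted text lost the original formula, so this form is an assumption. Because A is asymmetric, (A + I) 1 is each user's out-degree plus one, and the normalised matrix remains asymmetric. The three-user graph is hypothetical.

```python
import numpy as np


def normalise_adjacency(adj: np.ndarray) -> np.ndarray:
    """D^-1/2 (A + I) D^-1/2 with D = diag((A + I) 1).

    For a directed follow graph (A[i, j] = 1 if user i follows user j),
    (A + I) 1 is each node's out-degree plus one.
    """
    n = adj.shape[0]
    a_tilde = adj + np.eye(n)                 # self-loops keep each node's own features
    deg = a_tilde @ np.ones(n)                # (A + I) 1
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    return d_inv_sqrt @ a_tilde @ d_inv_sqrt


# Hypothetical follow graph: user 0 follows users 1 and 2; user 1 follows user 2.
adj = np.array([[0, 1, 1],
                [0, 0, 1],
                [0, 0, 0]], dtype=float)
a_hat = normalise_adjacency(adj)
print(np.round(a_hat, 3))
```

Here `a_hat[0, 1]` equals 1/sqrt(6), about 0.408, while `a_hat[1, 0]` stays 0. The diagonal entries become 1/(out-degree + 1), so no node's own features are dropped. A random-walk normalisation D^-1 (A + I) would be an equally simple alternative if the original formula used it.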