Social Responses to Virtual Humans: The Effect of Human-Like Characteristics

Abstract

1. Introduction

1.1. Social Responses to Virtual Humans

1.2. How Humans Respond Socially to Humans

1.3. How Humans Respond Socially to Virtual Humans

1.4. Virtual Human Characteristics

1.4.1. Facial Embodiment

1.4.2. Voice Embodiment

1.4.3. Emotion

1.4.4. Personality

1.5. Framework of Social Responses and Hypotheses

2. Experiment #1: Social Facilitation

2.1. Tasks

2.1.1. Anagram Task

2.1.2. Maze Task

2.1.3. Modular Arithmetic Task

2.2. Participants

2.3. Materials

2.4. Design and Procedure

- a simple task alone

- a simple task in the presence of a human

- a simple task in the presence of a virtual human

- a simple task in the presence of a graphical shape

- a complex task alone

- a complex task in the presence of a human

- a complex task in the presence of a virtual human

- a complex task in the presence of a graphical shape
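The eight conditions above form a 2 (task complexity: simple, complex) × 4 (presence: alone, human, virtual human, graphical shape) design. The following is a minimal sketch that enumerates the cells as a Cartesian product. It assumes the two factors are fully crossed, as the list suggests. The names `COMPLEXITY`, `PRESENCE`, and `design_cells` are illustrative and do not come from the study materials.

```python
from itertools import product

# Factor levels taken from the condition list above (names are illustrative).
COMPLEXITY = ("simple", "complex")
PRESENCE = ("alone", "human", "virtual human", "graphical shape")


def design_cells():
    """Return every (complexity, presence) cell of the fully crossed 2 x 4 design."""
    return list(product(COMPLEXITY, PRESENCE))


if __name__ == "__main__":
    # Print the cells in the same wording and order as the condition list.
    for complexity, presence in design_cells():
        if presence == "alone":
            print(f"a {complexity} task alone")
        else:
            print(f"a {complexity} task in the presence of a {presence}")
```

Because `product` varies the last factor fastest, the four simple-task cells come first, followed by the four complex-task cells. This matches the order of the list.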

- H → G → V → A

- G → V → A → H

- V → A → H → G

- A → H → G → V
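Each of the four presence orders above is the previous one rotated by a single step. Together they form a cyclic Latin square, in which every condition occupies every serial position exactly once. The sketch below regenerates them. It assumes the letters stand for H = human, G = graphical shape, V = virtual human, and A = alone, a mapping inferred from the condition list. The helper `cyclic_latin_square` is hypothetical.

```python
def cyclic_latin_square(conditions):
    """Build an n x n Latin square by rotating the condition list one step per row.

    Row i starts at conditions[i] and wraps around, so across the n rows each
    condition appears exactly once in every serial position.
    """
    n = len(conditions)
    return [[conditions[(i + j) % n] for j in range(n)] for i in range(n)]


# Assumed mapping: H = human, G = graphical shape, V = virtual human, A = alone.
orders = cyclic_latin_square(["H", "G", "V", "A"])
for row in orders:
    print(" → ".join(row))
```

A cyclic square balances serial position, but it does not balance first-order carryover, because each condition is always followed by the same next condition (H by G, G by V, and so on). A Williams design is the usual alternative when carryover balance matters.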

- anagram → maze → modular arithmetic

- maze → modular arithmetic → anagram

- modular arithmetic → anagram → maze
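The three task orders follow the same rotation scheme. The small check below confirms that the listed orders form a Latin square, meaning every task appears once in each row and once in each serial position. Position effects are spread evenly over tasks only if roughly equal numbers of participants receive each order, which is an assumption here. `TASK_ORDERS` and `is_latin_square` are illustrative names.

```python
# Task orders transcribed from the list above.
TASK_ORDERS = [
    ["anagram", "maze", "modular arithmetic"],
    ["maze", "modular arithmetic", "anagram"],
    ["modular arithmetic", "anagram", "maze"],
]


def is_latin_square(rows):
    """Return True if every row and every column is a permutation of the same items."""
    items = sorted(rows[0])
    if len(rows) != len(items):
        return False
    rows_ok = all(sorted(row) == items for row in rows)
    cols_ok = all(sorted(col) == items for col in zip(*rows))
    return rows_ok and cols_ok


print(is_latin_square(TASK_ORDERS))  # True
```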

2.5. Results

2.5.1. Post Hoc Analyses for Each Task Type

2.5.2. Post Hoc Analyses for Each Virtual Human Type

3. Experiment #2: Politeness Norm

3.1. Participants

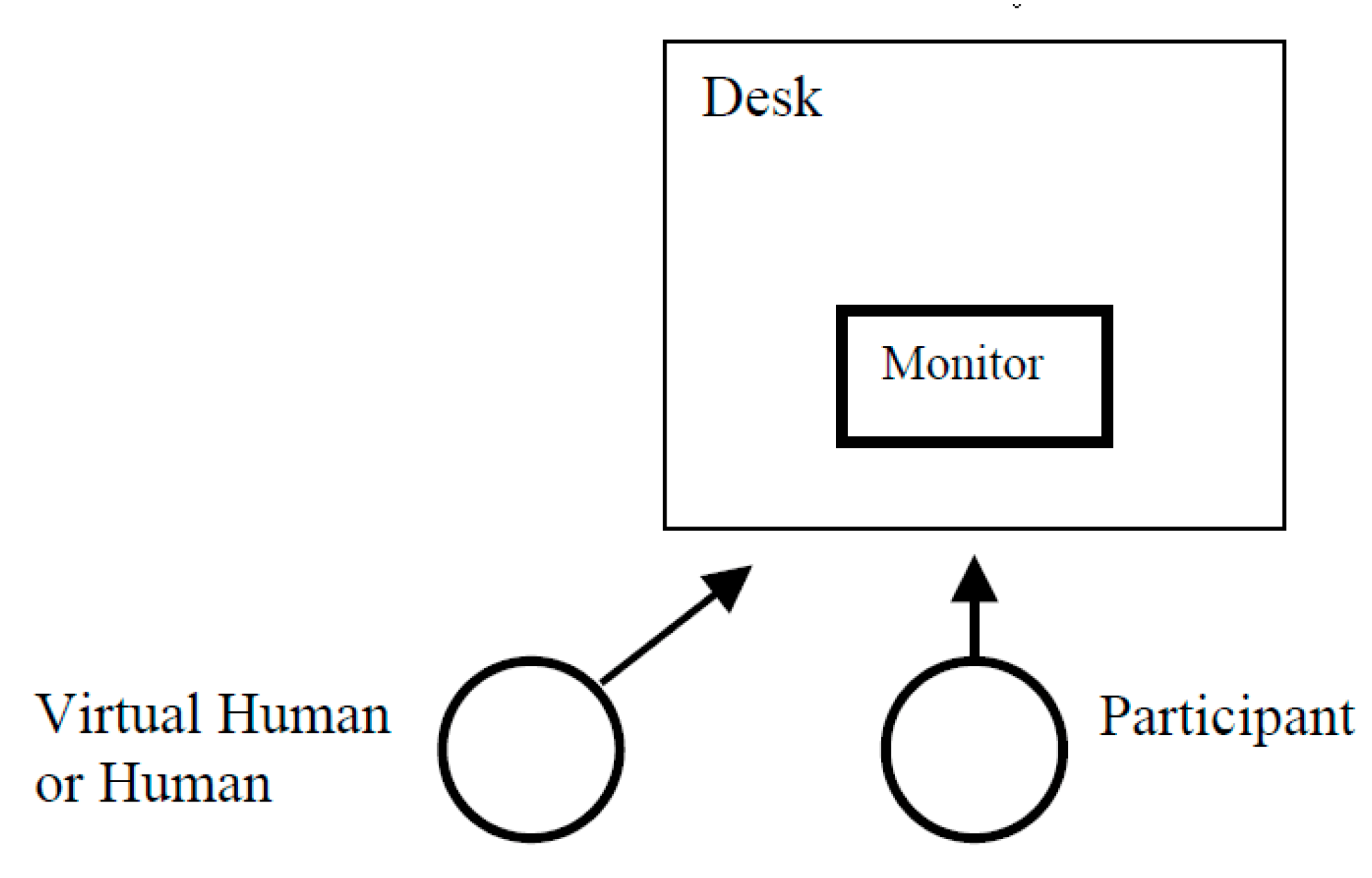

3.2. Materials

3.3. Design and Procedure

3.3.1. Presentation Session

3.3.2. Testing Session

3.3.3. Scoring Session

3.3.4. Interview Session

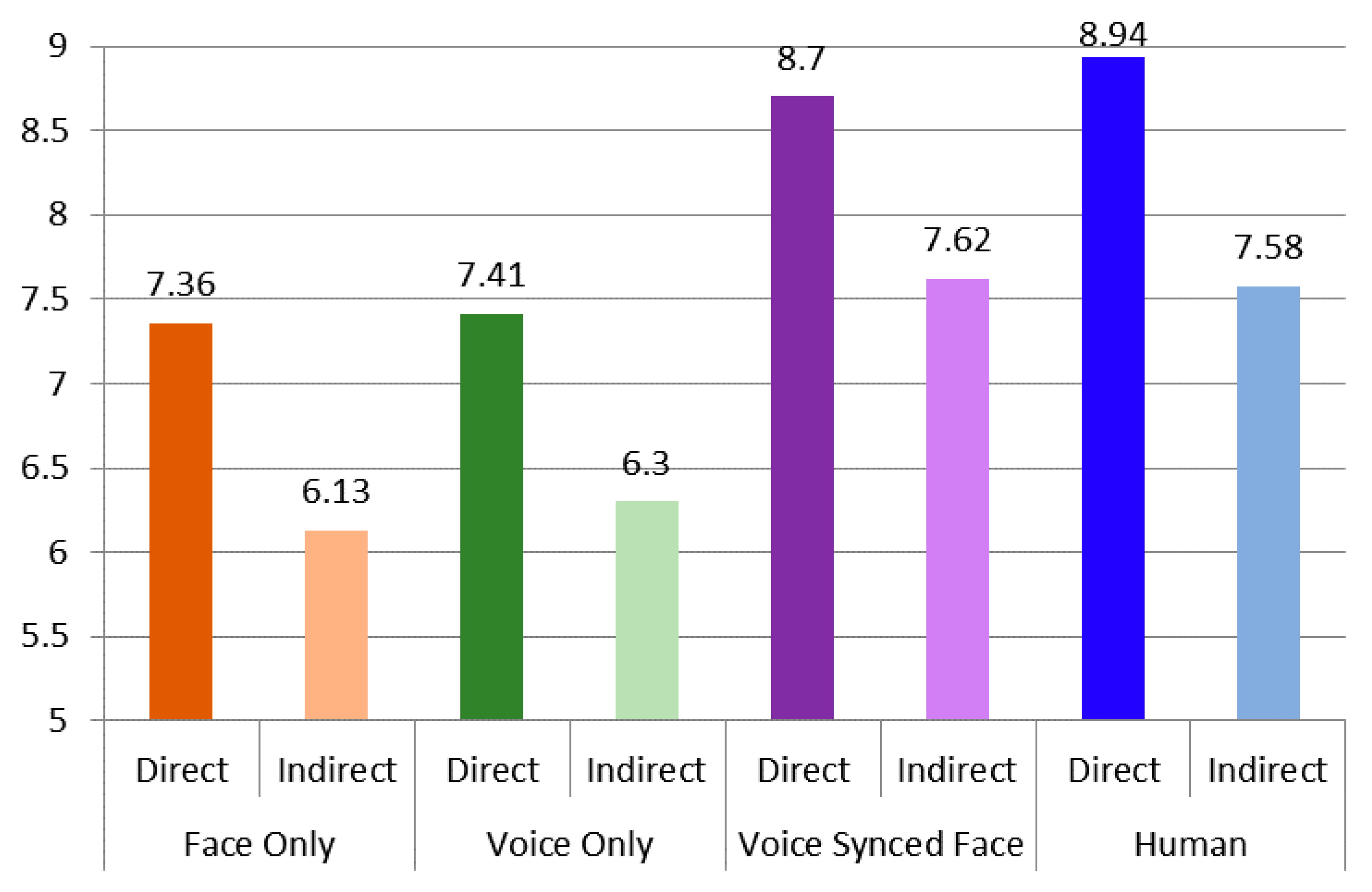

3.4. Results

Post Hoc Analyses

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A. 20 Facts on World Geography

- Asia is a larger continent than Africa.

- The Amazon rainforest produces more than 20% of the world’s oxygen supply.

- The largest desert in Africa is the Sahara desert.

- There are more than roughly 200 million people living in South America.

- Australia is the only country that is also a continent.

- The tallest waterfall is Angel Falls in Venezuela.

- More than 25% of the world’s forests are in Siberia.

- The largest country in South America is Brazil. Brazil is almost half the size of South America.

- Europe produces just over 18 percent of all the oil in the world.

- Asia has the largest population with over 3 billion people.

- Europe is the second smallest continent with roughly 4 million square miles.

- The four largest nations are Russia, China, USA, and Canada.

- The largest country in Asia by population is China with more than 1 billion people.

- The Atlantic Ocean is saltier than the Pacific Ocean.

- The longest river in North America is roughly 3000 feet and it is the Mississippi River in the United States.

- Poland is located in central Europe and borders with Germany, Czech Republic, Russia, Belarus, Ukraine and Lithuania.

- Australia has more than 28 times the land area of New Zealand, but its coastline is not even twice as long.

- The coldest place in the Earth’s lower atmosphere is usually not over the North or South Poles.

- The largest country in Asia by area is Russia.

- Brazil is so large that it shares a border with all South American countries except Chile and Ecuador.

Appendix B. 12 Questions on World Geography


| The Type of Virtual Human | Facial | Voice | Emotion | Cover Story |
|---|---|---|---|---|
| A: Facial appearance only | Yes | No | Neutral | By text |
| B: Voice recordings only | No | Yes | Neutral | By voice |
| C: Voice synced facial appearance | Yes | Yes | Neutral | By voice |
| D: Facial conveying emotion | Yes | No | Happy | By text |
| E: Voice conveying emotion | No | Yes | Happy | By voice |
| F: Voice synced facial conveying emotion | Yes | Yes | Happy | By voice |
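The six virtual human types in the table above can be read as three embodiments (facial appearance only, voice only, voice synced face) crossed with two emotion levels (neutral, happy). In every row, the cover story follows the voice column: types with a voice use "By voice" and types without one use "By text". The sketch below derives the table from those two observations. Both the factorial reading and the helper name `virtual_human_types` are illustrative assumptions and are not the authors' stated procedure.

```python
from itertools import product

# Embodiment levels from the table: (embodiment, facial appearance shown, voice used).
EMBODIMENTS = [
    ("facial appearance only", True, False),
    ("voice recordings only", False, True),
    ("voice synced facial appearance", True, True),
]
EMOTIONS = ("Neutral", "Happy")


def virtual_human_types():
    """Cross the two emotion levels with the three embodiments, labelled A-F.

    The cover story is derived, not stored: in the table, every type with a
    voice uses "By voice" and every type without one uses "By text".
    """
    rows = []
    combos = product(EMOTIONS, EMBODIMENTS)  # Neutral rows (A-C) come first
    for label, (emotion, (embodiment, facial, voice)) in zip("ABCDEF", combos):
        cover_story = "By voice" if voice else "By text"
        rows.append((label, embodiment, facial, voice, emotion, cover_story))
    return rows


for label, embodiment, facial, voice, emotion, cover in virtual_human_types():
    print(label, embodiment, "Yes" if facial else "No",
          "Yes" if voice else "No", emotion, cover)
```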

| The Type of Virtual Human | Anagram Easy | Anagram Hard | Maze Easy | Maze Hard | Modular Arithmetic Easy | Modular Arithmetic Hard |
|---|---|---|---|---|---|---|
| Facial appearance only | p < 0.05 | p < 0.01 | p < 0.05 | p < 0.05 | t < 1 | t < 1 |
| Voice recordings only | p < 0.05 | p < 0.05 | p > 0.05 (t = 1.17) | p < 0.05 | p < 0.05 | p < 0.01 |
| Voice synced facial appearance | t < 1 | t < 1 | t < 1 | p < 0.01 | t < 1 | t < 1 |
| Facial appearance conveying emotion | t < 1 | p < 0.05 | p < 0.05 | p < 0.05 | t < 1 | p < 0.05 |
| Voice recordings conveying emotion | t < 1 | p < 0.05 | p < 0.05 | p < 0.05 | t < 1 | p < 0.05 |
| Voice synced facial appearance conveying emotion | t < 1 | t < 1 | t < 1 | t < 1 | t < 1 | t < 1 |
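The table above reports only significance levels, and it does not say which comparison each cell tests. One way to read it at a glance is to list, for each virtual human type, the task columns that reached p < 0.05. The sketch below does only that bookkeeping on the transcribed cells and computes no new statistics. The rule in `is_significant`, which counts "p < 0.05" and "p < 0.01" as significant and "t < 1" and "p > 0.05" as non-significant, is an assumption. `RESULTS`, `COLUMNS`, and `significant_cells` are illustrative names.

```python
# Column order of the table above.
COLUMNS = ["Anagram Easy", "Anagram Hard", "Maze Easy", "Maze Hard",
           "Modular Arithmetic Easy", "Modular Arithmetic Hard"]

# Cells transcribed from the table.
RESULTS = {
    "Facial appearance only":
        ["p < 0.05", "p < 0.01", "p < 0.05", "p < 0.05", "t < 1", "t < 1"],
    "Voice recordings only":
        ["p < 0.05", "p < 0.05", "p > 0.05 (t = 1.17)", "p < 0.05", "p < 0.05", "p < 0.01"],
    "Voice synced facial appearance":
        ["t < 1", "t < 1", "t < 1", "p < 0.01", "t < 1", "t < 1"],
    "Facial appearance conveying emotion":
        ["t < 1", "p < 0.05", "p < 0.05", "p < 0.05", "t < 1", "p < 0.05"],
    "Voice recordings conveying emotion":
        ["t < 1", "p < 0.05", "p < 0.05", "p < 0.05", "t < 1", "p < 0.05"],
    "Voice synced facial appearance conveying emotion": ["t < 1"] * 6,
}


def is_significant(cell):
    """Assumed rule: a cell is significant if it reports p < 0.05 or p < 0.01."""
    return cell.startswith("p <")


def significant_cells(results):
    """Map each virtual human type to the task columns with a significant result."""
    return {vh: [col for col, cell in zip(COLUMNS, cells) if is_significant(cell)]
            for vh, cells in results.items()}


for vh, cols in significant_cells(RESULTS).items():
    print(f"{vh}: {len(cols)} of {len(COLUMNS)} -> {', '.join(cols) or 'none'}")
```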

| The Type of Virtual Human | Facial Appearance | Voice Implementation |
|---|---|---|
| A: Facial appearance only | Yes | No |
| B: Voice recordings only | No | Yes |
| C: Voice synced facial appearance | Yes | Yes |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, S.; Catrambone, R. Social Responses to Virtual Humans: The Effect of Human-Like Characteristics. Appl. Sci. 2021, 11, 7214. https://doi.org/10.3390/app11167214