RepVGG-SimAM: An Efficient Bad Image Classification Method Based on RepVGG with Simple Parameter-Free Attention Module

Abstract

1. Introduction

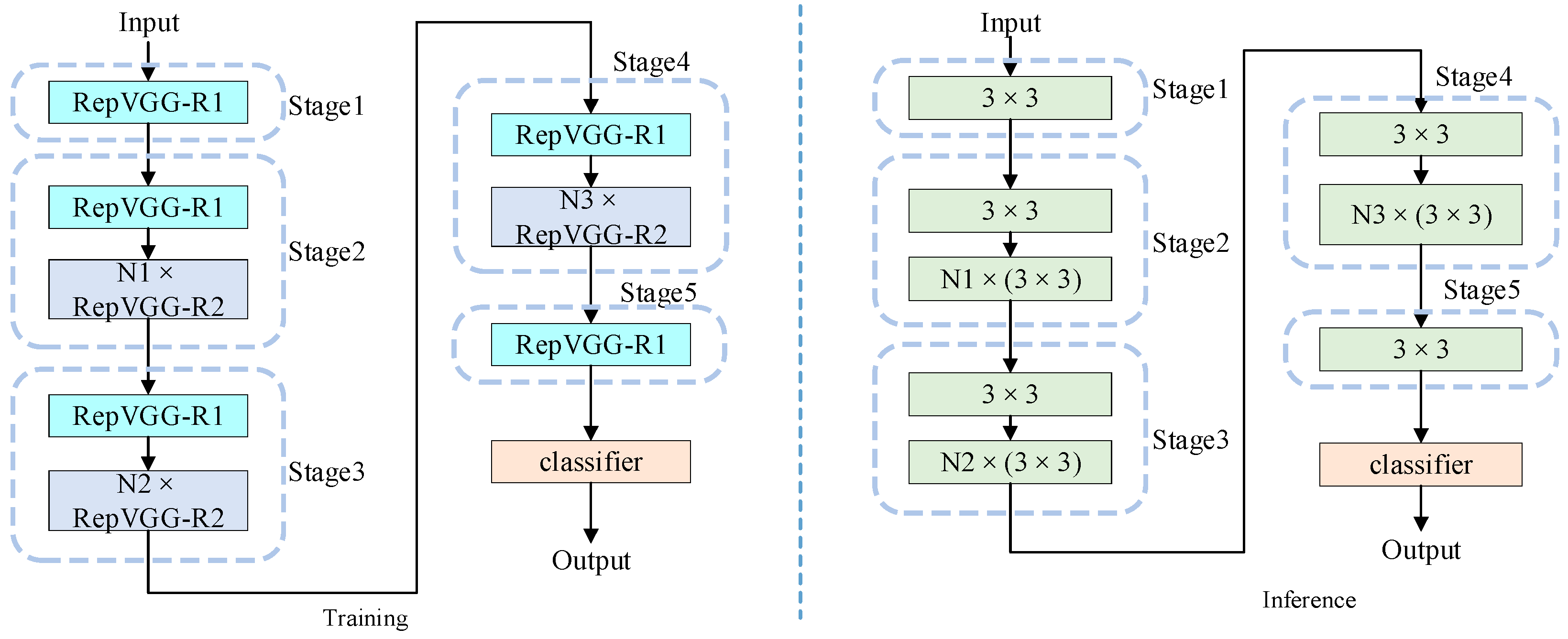

- An efficient bad image recognition method is proposed. The method decouples the training-time architecture from the inference-time architecture, so it combines strong feature extraction during training with fast inference after deployment. In addition, the residual structure used during training not only alleviates the vanishing gradient problem but also improves the convergence speed [19] of the network.

- Adding the SimAM attention mechanism to the original network strengthens the focus on important features without introducing any parameters, which improves the network's accuracy while maintaining the original inference speed.

- The experiments show that this method reaches 94.5% accuracy with a FAR of 4.3% at low inference time and can effectively and quickly detect bad images in the network environment, providing effective support for cyberspace governance.

2. Related Work

2.1. Method Based on Traditional Machine Learning

2.2. Method Based on Deep Learning

2.3. RepVGG

2.4. SimAM

3. Proposed Method (RepVGG-SimAM)

3.1. RepVGG-SimAM Network Architecture

3.2. RepVGG-SimAM Network Structure Configuration

3.3. Structural Reparameterization

3.3.1. Fusion of Convolutional Layer and BN Layer
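As a minimal sketch of the standard identity for folding a batch normalization (BN) layer into the preceding convolution, the pure-Python snippet below rescales the kernel weights and bias of each output channel. The function names (`fuse_conv_bn`, `conv_at`, `batch_norm`), the flattened kernel layout, `eps = 1e-5`, and all numeric values are illustrative assumptions, not the authors' implementation.

```python
import math


def fuse_conv_bn(weights, biases, gamma, beta, mean, var, eps=1e-5):
    """Fold per-channel BN parameters into conv weights and biases.

    weights: one flat list of kernel weights per output channel.
    The other arguments hold one value per output channel.
    """
    fused_w, fused_b = [], []
    for w_c, b_c, g, bt, m, v in zip(weights, biases, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)          # BN scale for this channel
        fused_w.append([w * scale for w in w_c])
        fused_b.append((b_c - m) * scale + bt)  # shifted and rescaled bias
    return fused_w, fused_b


def conv_at(patch, kernel, bias):
    """Convolution response at one position: dot product plus bias."""
    return sum(p * w for p, w in zip(patch, kernel)) + bias


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time BN applied to a single activation."""
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


# Illustrative values only: one output channel, a 3x3 kernel flattened to 9 weights.
patch = [0.5, -1.0, 2.0, 0.0, 1.5, -0.5, 1.0, 0.25, -2.0]
weights = [[0.1, 0.2, -0.3, 0.4, 0.0, -0.1, 0.3, -0.2, 0.05]]
biases = [0.0]
gamma, beta, mean, var = [1.5], [0.2], [0.1], [0.8]

fused_w, fused_b = fuse_conv_bn(weights, biases, gamma, beta, mean, var)
two_step = batch_norm(conv_at(patch, weights[0], biases[0]),
                      gamma[0], beta[0], mean[0], var[0])
one_step = conv_at(patch, fused_w[0], fused_b[0])
```

Under these assumptions `two_step` and `one_step` agree up to floating-point error, which is why the BN layer can be removed at inference time without changing the output.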

3.3.2. Convert Branch to 3 × 3 Convolutional Kernels

3.3.3. Fusion of Branches
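The branch fusion step of RepVGG-style reparameterization can be sketched as follows, assuming each branch has already had its BN layer folded in. A 1 × 1 kernel is zero-padded to 3 × 3, the identity branch is written as a 3 × 3 kernel with a single non-zero centre weight, and the three kernels and biases are summed. The sketch uses a single channel for brevity; the helper names and all numeric values are hypothetical.

```python
def conv2d_same(image, kernel, bias=0.0):
    """Single-channel cross-correlation with zero padding (output size = input size)."""
    k = len(kernel)
    pad = k // 2
    h, w = len(image), len(image[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = bias
            for di in range(k):
                for dj in range(k):
                    r, c = i + di - pad, j + dj - pad
                    if 0 <= r < h and 0 <= c < w:
                        acc += kernel[di][dj] * image[r][c]
            row.append(acc)
        out.append(row)
    return out


def pad_1x1_to_3x3(k1):
    """Place the 1x1 weight at the centre of an otherwise zero 3x3 kernel."""
    return [[0.0, 0.0, 0.0], [0.0, k1[0][0], 0.0], [0.0, 0.0, 0.0]]


def identity_as_3x3(scale=1.0):
    """Identity branch (after BN folding) as a 3x3 kernel with one centre weight."""
    return [[0.0, 0.0, 0.0], [0.0, scale, 0.0], [0.0, 0.0, 0.0]]


def merge_branches(k3, b3, k1, b1, id_scale, b_id):
    """Sum the three branch kernels and biases into one 3x3 convolution."""
    k1_3, k_id = pad_1x1_to_3x3(k1), identity_as_3x3(id_scale)
    merged = [[k3[i][j] + k1_3[i][j] + k_id[i][j] for j in range(3)]
              for i in range(3)]
    return merged, b3 + b1 + b_id


# Illustrative values only.
image = [[1.0, 2.0, 0.5, -1.0], [0.0, 1.5, -0.5, 2.0],
         [3.0, -2.0, 1.0, 0.0], [0.5, 0.5, -1.5, 1.0]]
k3, b3 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, -0.3]], 0.05
k1, b1 = [[0.7]], -0.02
id_scale, b_id = 1.2, 0.01

y3, y1 = conv2d_same(image, k3, b3), conv2d_same(image, k1, b1)
multi_branch = [[y3[i][j] + y1[i][j] + id_scale * image[i][j] + b_id
                 for j in range(4)] for i in range(4)]
merged_k, merged_b = merge_branches(k3, b3, k1, b1, id_scale, b_id)
single_branch = conv2d_same(image, merged_k, merged_b)
```

In the multi-channel case the identity kernel would carry its centre weight only where the input channel index equals the output channel index; that generalization is omitted here.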

3.4. SimAM Attention Mechanism
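A minimal pure-Python sketch of the SimAM weighting for a single feature-map channel is given below, following the formulation in the SimAM paper [35]: each activation is scaled by a sigmoid of its inverse energy, which depends only on the channel mean and variance, so no learnable parameters are involved. The function names and the regularization constant `e_lambda = 1e-4` are assumptions for illustration, not the authors' code.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def simam_channel(channel, e_lambda=1e-4):
    """Apply SimAM attention to one H x W feature map given as a list of rows.

    The map must contain at least two values. e_lambda is an assumed
    regularization constant.
    """
    values = [v for row in channel for v in row]
    n = len(values) - 1                       # number of "other" neurons
    mu = sum(values) / len(values)            # channel mean
    variance = sum((v - mu) ** 2 for v in values) / n
    out = []
    for row in channel:
        out_row = []
        for v in row:
            # Inverse energy: larger for activations far from the channel mean.
            inv_energy = (v - mu) ** 2 / (4.0 * (variance + e_lambda)) + 0.5
            out_row.append(v * sigmoid(inv_energy))
        out.append(out_row)
    return out


# Illustrative input: one distinctive activation in a flat background.
feature_map = [[1.0, 1.0, 1.0], [1.0, 5.0, 1.0], [1.0, 1.0, 1.0]]
attended = simam_channel(feature_map)
centre_weight = attended[1][1] / feature_map[1][1]
corner_weight = attended[0][0] / feature_map[0][0]
```

Here `centre_weight` exceeds `corner_weight`, which illustrates how the module emphasizes activations that stand out from the rest of the channel.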

4. Experiment and Result Analysis

4.1. Experimental Data Sets

4.2. Evaluation Criteria
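As a hedged illustration of how the per-class precision, recall, and F1 values and the overall accuracy reported in the result tables can be derived from a confusion matrix, consider the sketch below. The definition of FAR is not given in the text reproduced here; the sketch assumes it is the share of normal images flagged as either bad class. The confusion matrix is made up for illustration and is not taken from the paper's experiments.

```python
def per_class_metrics(confusion, labels):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(labels)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    report = {}
    for k, name in enumerate(labels):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(confusion[k]) - tp                       # true k, predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[name] = {"precision": precision, "recall": recall, "f1": f1}
    return report, correct / total


def false_alarm_rate(confusion, normal_index):
    """ASSUMED definition: fraction of normal images predicted as a bad class."""
    row = confusion[normal_index]
    total = sum(row)
    return (total - row[normal_index]) / total if total else 0.0


# Made-up confusion matrix (rows: true class, columns: predicted class).
labels = ["Porn", "Viol", "Norm"]
confusion = [
    [90, 5, 5],
    [4, 95, 1],
    [6, 4, 90],
]
report, accuracy = per_class_metrics(confusion, labels)
far = false_alarm_rate(confusion, labels.index("Norm"))
```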

4.3. Training Environment and Parameter Configuration

4.4. Experimental Result Analysis

4.4.1. Training Process Analysis

4.4.2. Comparison of Existing Algorithms

4.4.3. Comparison of Different Classification Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Xu, X.; Wu, X.; Wang, G.; Wang, H. Violent Video Classification Based on Spatial-Temporal Cues Using Deep Learning. In Proceedings of the 2018 11th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 8–9 December 2018; pp. 319–322.

- Cheng, F.; Wang, S.; Wang, X.; Liew, A.W.; Liu, G. A global and local context integration DCNN for adult image classification. Pattern Recognit. 2019, 96, 106983.

- Jones, M.J.; Rehg, J.M. Statistical Color Models with Application to Skin Detection. Int. J. Comput. Vis. 2002, 46, 81–96.

- Lin, Y.C.; Tseng, H.W.; Fuh, C.S. Pornography Detection Using Support Vector Machine. In Proceedings of the 16th IPPR Conference on Computer Vision, Graphics and Image Processing (CVGIP 2003), Kinmen, China, 17–19 August 2003; pp. 123–130.

- Wang, B.S.; Lv, X.Q.; Ma, X.L.; Wang, H.W. Application of Skin Detection Based on Irregular Polygon Area Boundary Constraint on YCbCr and Reverse Gamma Correction. Adv. Mater. Res. 2011, 327, 31–36.

- Basilio, J.A.M.; Torres, G.A.; Gabriel, S.P.; Medina, L.T.; Meana, H.M. Explicit Image Detection Using YCbCr Space Color Model as Skin Detection. In Proceedings of the 2011 American Conference on Applied Mathematics and the 5th WSEAS International Conference on Computer Engineering and Applications, Puerto Morelos, Mexico, 29–31 January 2011; pp. 123–128.

- Zhao, Z.; Cai, A. Combining multiple SVM classifiers for adult image recognition. In Proceedings of the 2010 2nd IEEE International Conference on Network Infrastructure and Digital Content, Beijing, China, 24–26 September 2010; pp. 149–153.

- Deselaers, T.; Pimenidis, L.; Ney, H. Bag-of-Visual-Words Models for Adult Image Classification and Filtering. In Proceedings of the 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

- Lv, L.; Zhao, C.; Lv, H.; Shang, J.; Yang, Y.; Wang, J. Pornographic Images Detection Using High-Level Semantic Features. In Proceedings of the 2011 Seventh International Conference on Natural Computation, Shanghai, China, 26–28 July 2011; pp. 1015–1018.

- Gao, Y.; Wu, O.; Wang, C.; Hu, W.; Yang, J. Region-Based Blood Color Detection and Its Application to Bloody Image Filtering. In Proceedings of the 2015 International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), Guangzhou, China, 12–15 July 2015; pp. 45–50.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zhao, F.; Zhang, J.; Meng, Z.; Liu, H.; Chang, Z.; Fan, J. Multiple vision architectures-based hybrid network for hyperspectral image classification. Expert Syst. Appl. 2023, 234, 121032.

- Gao, Y.; Rezaeipanah, A. An Ensemble Classification Method Based on Deep Neural Networks for Breast Cancer Diagnosis. Intel. Artif. 2023, 26, 160–177.

- Bharat, M.; Mishra, K.K.; Anoj, K. An improved lightweight small object detection framework applied to real-time autonomous driving. Expert Syst. Appl. 2023, 234, 121036.

- Wang, C.; Wang, Q.; Qian, Y.; Hu, Y.; Xue, Y.; Wang, H. DP-YOLO: Effective Improvement Based on YOLO Detector. Appl. Sci. 2023, 13, 11676.

- Xie, J.; Chen, J.; Cai, Y.; Huang, Q.; Li, Q. Visual Paraphrase Generation with Key Information Retained. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–19.

- Xie, G.; Lai, J. An Interpretation of Forward-Propagation and Back-Propagation of DNN. In Proceedings of the Pattern Recognition and Computer Vision. PRCV 2018, Guangzhou, China, 23–26 November 2018; pp. 3–15.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Ullah, K.; Saleem, N.; Bilal, H.; Ahmad, J.; Ibrar, M.; Jarad, F. On the convergence, stability and data dependence results of the JK iteration process in Banach spaces. Open Math. 2023, 21, 20230101.

- Guo, G.; Wang, H.; Bell, D.; Bi, Y.; Greer, K. KNN model-based approach in classification. In Proceedings of the OTM Confederated International Conferences, CoopIS, DOA, and ODBASE(2003), Catania, Italy, 3–7 November 2003; pp. 986–996.

- Huang, G.; Zhou, H.; Ding, X.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B 2012, 42, 513–529.

- Zhao, H.; Liu, I. Research on test data generation method of complex event big data processing system based on Bayesian network. Comput. Appl. Res. 2018, 35, 155–158.

- Ying, Z.; Shi, P.; Pan, D.; Yang, H.; Hou, M. A Deep Network for Pornographic Image Recognition Based on Feature Visualization Analysis. In Proceedings of the 2018 IEEE 4th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 14–16 December 2018; pp. 212–216.

- Lin, X.; Qin, F.; Peng, Y. Fine-grained pornographic image recognition with multiple feature fusion transfer learning. Int. J. Mach. Learn. Cybern. 2021, 12, 73–86.

- Sheena, C.P.; Sindhu, P.K.; Suganthi, S.; Saranya, J.; Selvakumar, V.S. An Efficient DenseNet for Diabetic Retinopathy Screening. Int. J. Eng. Technol. Innov. 2023, 13, 125–136.

- Cai, Z.; Hu, X.; Geng, Z.; Zhang, J.; Feng, Y. An Illegal Image Classification System Based on Deep Residual Network and Convolutional Block Attention Module. Int. J. Netw. Secur. 2023, 25, 351–359.

- Mumtaz, A.; Sargano, A.B.; Habib, Z. Violence Detection in Surveillance Videos with Deep Network Using Transfer Learning. In Proceedings of the 2018 2nd European Conference on Electrical Engineering and Computer Science (EECS), Bern, Switzerland, 20–22 December 2018; pp. 558–563.

- Jebur, S.A.; Hussein, K.A.; Hoomod, H.K.; Alzubaidi, L. Novel Deep Feature Fusion Framework for Multi-Scenario Violence Detection. Computers 2023, 12, 175.

- Ye, L.; Liu, T.; Han, T.; Ferdinando, H.; Seppänen, T.; Alasaarela, E. Campus Violence Detection Based on Artificial Intelligent Interpretation of Surveillance Video Sequences. Remote Sens. 2021, 13, 628.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 13728–13737.

- Woo, S.; Park, J.; Lee, J.Y. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision, ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 3–9.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Yang, L.; Zhang, R.; Li, L.; Xie, X. SimAM: A simple parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 11863–11874.

- Ishtiaq, U.; Saleem, N.; Uddin, F.; Sessa, S.; Ahmad, K.; di Martino, F. Graphical Views of Intuitionistic Fuzzy Double-Controlled Metric-Like Spaces and Certain Fixed-Point Results with Application. Symmetry 2022, 14, 2364.

- Yu, X.; Wang, X.; Rong, J.; Zhang, M.; Ou, L. Efficient Re-Parameterization Operations Search for Easy-to-Deploy Network Based on Directional Evolutionary Strategy. Neural Process. Lett. 2023, 1–24.

- Saleem, N.; Ahmad, K.; Ishtiaq, U.; De la Sen, M. Multivalued neutrosophic fractals and Hutchinson-Barnsley operator in neutrosophic metric space. Chaos Solitons Fractals 2023, 172, 113607.

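| Characteristics | References | Methods | Limitations |
|---|---|---|---|
| Classification based on skin area | [3] | RGB color space | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on skin area | [4] | RGB + SVM | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on skin area | [5,6] | YCbCr color space | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on shape and texture | [7] | Fusing texture and SIFT features | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on shape and texture | [8] | BoVW | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on shape and texture | [9] | BoVWS | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on red area | [10] | Bloody area + SVM | The features used for classification are not specific to bad images, and the classification accuracy is not ideal. |
| Classification based on deep learning | [23] | CNN | Deep neural networks increase the complexity of the network and affect its inference speed. |
| Classification based on deep learning | [25] | Fusing four DenseNet121 | Deep neural networks increase the complexity of the network and affect its inference speed. |
| Classification based on deep learning | [26] | ResNet101 + CBAM | Deep neural networks increase the complexity of the network and affect its inference speed. |
| Classification based on deep learning | [27] | GoogleNet | Deep neural networks increase the complexity of the network and affect its inference speed. |
| Classification based on deep learning | [28] | Fusing Xception, Inception, InceptionResNet | Deep neural networks increase the complexity of the network and affect its inference speed. |
| Classification based on deep learning | [29] | C3D | Deep neural networks increase the complexity of the network and affect its inference speed. |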

| Stage | Output Size | First Layer of This Stage | Other Layers of This Stage |
|---|---|---|---|
| 1 | 112 × 112 | 1 × (96−R1) | |
| 2 | 56 × 56 | 1 × (96−R1) | 1 × (96−R2) |
| 3 | 28 × 28 | 1 × (192−R1) | 3 × (192−R2) |
| 4 | 14 × 14 | 1 × (384−R1) | 13 × (384−R2) |
| 5 | 7 × 7 | 1 × (768−R1) | |

| Stage | Output Size | First Layer of This Stage | Other Layers of This Stage |
|---|---|---|---|
| 1 | 112 × 112 | 1 × (96−R1) | SimAM |
| 2 | 56 × 56 | 1 × (96−R1) | 1 × (96−R2) |
| 3 | 28 × 28 | 1 × (192−R1) | 3 × (192−R2) |
| 4 | 14 × 14 | 1 × (384−R1) | 13 × (384−R2) |
| 5 | 7 × 7 | 1 × (768−R1) | SimAM |

| Parameters | Setting |
|---|---|
| Optimizer | SGD |
| Scheduler | Cosine annealing |
| Learning rate | 0.001 |
| Batch size | 32 |
| Epochs | 100 |
| Momentum | 0.9 |
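To make the training settings above concrete, the sketch below evaluates a cosine annealing schedule that starts at the listed learning rate of 0.001 and runs for 100 epochs, together with one SGD step using momentum 0.9. The minimum learning rate of 0, the absence of warm-up or restarts, and the momentum update convention (velocity = momentum × velocity + gradient) are assumptions; the settings table does not specify them.

```python
import math

BASE_LR = 0.001   # "Learning rate" in the settings table
EPOCHS = 100      # "Epoch" in the settings table
MOMENTUM = 0.9    # "Momentum" in the settings table


def cosine_lr(epoch, base_lr=BASE_LR, total_epochs=EPOCHS, min_lr=0.0):
    """Cosine annealing without restarts; min_lr = 0 is an assumption."""
    cosine = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cosine)


def sgd_momentum_step(weight, grad, velocity, lr, momentum=MOMENTUM):
    """One SGD update with momentum for a single scalar weight."""
    velocity = momentum * velocity + grad
    return weight - lr * velocity, velocity


# Learning rate at the start, middle, and end of training.
schedule = [cosine_lr(e) for e in (0, 50, 100)]

# One illustrative update at epoch 0 with made-up weight and gradient values.
new_weight, new_velocity = sgd_momentum_step(1.0, 0.5, 0.0, cosine_lr(0))
```

Under these assumptions the learning rate falls from 0.001 at epoch 0 to half that value at epoch 50 and to approximately 0 at epoch 100.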

| Paper | Method | Accuracy (%) | FAR (%) |
|---|---|---|---|
| [3] | RGB-skin | 61.0 | - |
| [4] | RGB-skin + SVM | 75 | 35 |
| [6] | YCbCr-skin | 75.4 | 10.13 |
| [7] | Texture | 87.6 | 14.7 |
| [9] | BoVWS | 87.6 | - |
| [23] | CNN | 86.9 | - |
| [26] | ResNet101 + CBAM | 93.2 | - |
| Ours | RepVGG-SimAM | 94.5 | 4.3 |

| Methods | Precision Porn (%) | Precision Viol (%) | Precision Norm (%) | Recall Porn (%) | Recall Viol (%) | Recall Norm (%) | F1 Porn (%) | F1 Viol (%) | F1 Norm (%) | FAR (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VGG11 | 90.1 | 91.9 | 83.2 | 88.9 | 94 | 80.9 | 89.5 | 92.9 | 82.1 | 9.8 | 89.4 |
| VGG13 | 87.6 | 92.3 | 82.6 | 88.9 | 93.9 | 78.2 | 88.3 | 93.1 | 80.3 | 10.2 | 89.8 |
| VGG16 | 87.5 | 91.7 | 80.1 | 89.9 | 92.8 | 77.5 | 88.7 | 92.3 | 78.7 | 9.7 | 88.7 |
| VGG19 | 89.8 | 90.1 | 81.3 | 86.8 | 94.5 | 79.5 | 88.4 | 92.3 | 80.4 | 9.6 | 88.5 |
| ResNet26 | 87.4 | 92.1 | 78.7 | 88.6 | 91.3 | 78.9 | 87.9 | 91.7 | 78.8 | 10.9 | 87.7 |
| ResNet34 | 89.3 | 92 | 77.8 | 88.7 | 90.5 | 79.1 | 88.9 | 91.3 | 78.4 | 12.1 | 86.9 |
| ResNet50 | 88 | 92.4 | 78.1 | 88.2 | 91.4 | 80 | 88.1 | 91.8 | 79 | 10.9 | 87.7 |
| ResNet101 | 87.8 | 92 | 78.8 | 86.9 | 91.1 | 79.8 | 87.4 | 91.6 | 79.3 | 10.6 | 87.9 |
| RepVGG-A2 | 90.1 | 93.8 | 82.9 | 89.4 | 94.3 | 83.9 | 90.1 | 94.1 | 83.4 | 6.5 | 90.8 |
| RepVGG-SimAM | 92.4 | 94.9 | 85.2 | 90.5 | 95.1 | 86.4 | 91.4 | 95 | 85.8 | 4.3 | 94.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cai, Z.; Qiao, X.; Zhang, J.; Feng, Y.; Hu, X.; Jiang, N. RepVGG-SimAM: An Efficient Bad Image Classification Method Based on RepVGG with Simple Parameter-Free Attention Module. Appl. Sci. 2023, 13, 11925. https://doi.org/10.3390/app132111925
