Abstract

Integrating Artificial Intelligence (AI) in healthcare represents a transformative shift with substantial potential for enhancing patient care. This paper critically examines this integration, confronting significant ethical, legal, and technological challenges, particularly in patient privacy, decision-making autonomy, and data integrity. A structured exploration of these issues focuses on Differential Privacy as a critical method for preserving patient confidentiality in AI-driven healthcare systems. We analyze the balance between privacy preservation and the practical utility of healthcare data, emphasizing the effectiveness of encryption, Differential Privacy, and mixed-model approaches. The paper navigates the complex ethical and legal frameworks essential for AI integration in healthcare. We comprehensively examine patient rights and the nuances of informed consent, along with the challenges of harmonizing advanced technologies like blockchain with the General Data Protection Regulation (GDPR). The issue of algorithmic bias in healthcare is also explored, underscoring the urgent need for effective bias detection and mitigation strategies to build patient trust. The evolving roles of decentralized data sharing, regulatory frameworks, and patient agency are discussed in depth. Advocating for an interdisciplinary, multi-stakeholder approach and responsive governance, the paper aims to align healthcare AI with ethical principles, prioritize patient-centered outcomes, and steer AI towards responsible and equitable enhancements in patient care.

1. Introduction

Integrating Artificial Intelligence (AI) in healthcare marks a significant milestone, signaling the advent of a new era in precision medicine. This transformative shift holds immense promise for revolutionizing patient care, offering advancements that were once considered futuristic. By harnessing AI’s capabilities, healthcare systems stand on the brink of a paradigm shift characterized by enhanced diagnostic accuracy, personalized treatment strategies, and increased efficiency in healthcare delivery. The ability of AI to process and analyze vast health data sets with unparalleled precision heralds a new age of medicine where disease prediction models are significantly more accurate, therapies are increasingly targeted to individual patient needs, and patient outcomes are markedly improved. This integration of AI into healthcare goes beyond mere technological advancement; it represents a fundamental change in the approach to patient care, where data-driven insights and machine learning algorithms have the potential to uncover patterns and solutions previously hidden in vast amounts of health data. From predicting patient risks to tailoring treatment plans, AI’s impact is profound, enabling healthcare providers to make more informed decisions and offer care that is both effective and personalized. However, the integration of AI into healthcare has its challenges. Foremost among these is the need to safeguard patient privacy in an environment where data are both a valuable resource and a potential vulnerability. Handling sensitive health information by AI systems raises significant concerns about privacy, data protection, and the risk of data breaches. Additionally, the increasing reliance on AI for decision-making in healthcare poses questions about maintaining human autonomy in medical decisions. Ensuring that AI assists rather than overrides the judgment of healthcare professionals is crucial for maintaining the human element in healthcare.

This literature review investigates the specific privacy challenges associated with deploying AI in healthcare, focusing on large-scale data processing, Differential Privacy, and anonymization techniques. It also explores how these challenges contribute to a gap between patients’ perceptions and the actual privacy and security conditions in AI-integrated healthcare systems. The sensitive nature of health data and their potential for misuse necessitate urgent attention to these issues. AI’s reliance on extensive patient data amplifies concerns about data security, informed consent, data ownership, and the risk of unauthorized exploitation. Beyond privacy, this review also considers broader ethical issues, such as the impact of AI on decision-making autonomy in healthcare and the potential for algorithmic bias and discrimination. These concerns become more pressing as AI systems grow more integral to healthcare, raising questions about the transparency and fairness of decision-making processes, especially for diverse patient populations.

In addressing these complex issues, our review underscores the importance of transparent communication with patients and the public to demystify AI’s role in healthcare. This approach aims to bridge the gap between perceived and actual AI applications in healthcare, addressing misconceptions and fostering informed understanding among patients and healthcare providers. We draw in particular on [1,2], which examine the privacy risks that arise when private entities control AI and the ethical challenges of its implementation. Through this exploration, our review aims to inform balanced strategies and policies that optimize the benefits of AI while protecting patient rights and trust. Central to our discussion is a nuanced understanding of AI’s role in healthcare, particularly regarding privacy, data security, and ethical integration, to guide effective policy-making and strategic development in this essential field.

2. Methodology

2.1. Protocol

In conducting this literature review, we adhered to the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines outlined in [3]. These guidelines provided a robust framework for conducting a systematic approach to the literature search, ensuring a comprehensive and unbiased review of the existing literature on integrating Artificial Intelligence (AI) in healthcare. To enhance the efficiency and effectiveness of our literature search, we incorporated advanced AI research tools. These tools were guided by specific Boolean search queries composed of carefully selected keywords and common abbreviations, ensuring a focused and relevant literature search. The primary keywords used in our search strategy included terms such as ‘Artificial Intelligence’, ‘AI’, ‘Systemic’, ‘Oversight’, ‘Healthcare’, ‘Decision-Making’, ‘Autonomy’, ‘Dignity’, ‘Advancing’, ‘Data’, ‘Anonymization’, ‘Protection’, ‘Promoting’, ‘Transparency’, ‘Dialogue’, ‘Privacy’, ‘Disparities’, and ‘Patient Understanding’. This comprehensive approach allowed us to capture studies pertinent to our research objectives. Table 1 illustrates the detailed process of our systematic review, outlining the steps involved, the number of articles reviewed at each stage, the AI tools utilized, and the specific keywords and abbreviations that guided our search. This table provides a clear overview of our methodical approach and the extensive scope of our literature review.
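As an illustration of how keywords and abbreviations can be combined into a Boolean search query, the sketch below joins synonyms with OR and distinct concepts with AND. The grouping of terms shown here is an assumption made for illustration only; the exact queries submitted to the AI research tools are not reproduced in this review, and the helper name `boolean_query` is hypothetical.

```python
def boolean_query(concept_groups):
    """Build a Boolean search string.

    Terms inside one group are treated as synonyms and joined with OR;
    the groups themselves are joined with AND.
    """
    clauses = []
    for terms in concept_groups:
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical grouping of a few of the listed keywords (not the authors' actual query).
query = boolean_query([
    ["Artificial Intelligence", "AI"],
    ["Healthcare"],
    ["Privacy", "Anonymization"],
])
print(query)
```

Pairing each full term with its abbreviation inside one OR group is one way to avoid missing studies that use only one of the two forms.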

Table 1. Systematic review process for AI in healthcare research.

The rationale for our keyword selection was strategically crafted to align with the core objectives of our research, which delves into the integration of AI in healthcare and its associated challenges. Keywords such as ‘Artificial Intelligence’ and ‘Healthcare’ directly target the central theme, addressing the intersection of AI and healthcare. To explore privacy concerns, keywords like ‘Differential Privacy’ and ‘Data Security’ were chosen, emphasizing how AI systems manage and protect sensitive patient information. ‘Algorithmic Bias’ and ‘Ethical AI Implementation’ were selected to shed light on potential biases in AI algorithms and the need for ethical frameworks. ‘Patient Agency’ and ‘Patient-Centric Care’ were included to ensure our review encapsulates AI’s impact on patient autonomy and decision-making. Lastly, ‘Regulatory Oversight’ and ‘AI Transparency’ were incorporated to investigate AI’s legal and clarity aspects in healthcare, focusing on transparent operations and robust regulatory frameworks.

Our literature review’s inclusion and exclusion criteria were stringently defined to ensure a focused and relevant collection of studies. We included peer-reviewed articles published from 2018 to 2023, reflecting contemporary developments in this rapidly evolving field. The focus was on articles that addressed AI integration in healthcare, particularly those delving into its ethical, legal, and practical implications. Studies highlighting patient privacy, data security, algorithmic bias, and regulatory challenges were prioritized. Conversely, we excluded articles published outside the specified date range, non-peer-reviewed articles, opinion pieces, and editorials to uphold academic rigor. Studies not directly addressing AI in healthcare or lacking a focus on the specified dimensions were also excluded. Due to language constraints, non-English articles were not considered.

The PRISMA checklist served as a strategic tool in structuring the review process. It guided the identification, screening, eligibility assessment, and inclusion of studies, ensuring that each step was conducted systematically and transparently. We meticulously examined the titles and abstracts of articles using the keywords above and their variants, including full terms and abbreviations, such as ‘AI’ for ‘Artificial Intelligence’. This dual approach ensured that we did not overlook relevant studies due to variations in terminology. Simultaneously, the AI research tools were utilized to automate and streamline the search and selection processes. These tools allowed us to efficiently scan a large volume of literature and identify articles matching our specific search terms and exclusion criteria. By combining the methodical rigor of the PRISMA guidelines with the advanced capabilities of AI research tools, we conducted a thorough and systematic literature review that contributed valuable insights into the role of AI in healthcare.

2.2. Information Sources

The literature search for this study followed a comprehensive and systematic approach, drawing on several electronic databases and advanced Artificial Intelligence (AI) research tools. These tools improved the efficiency of the search process and allowed for a more targeted exploration of the academic literature. One of the primary AI tools used was Elicit, which performs semantic searches across the corpus of academic papers available in the Semantic Scholar Academic Graph dataset. Elicit stores embeddings of titles and abstracts in a vector database. When a research query (comprising our specific keywords such as ‘Artificial Intelligence’, ‘AI’, ‘Healthcare’, and others) is entered, it is embedded using the same model, and the vector database returns the closest embeddings, thus identifying the most relevant papers. Another AI assistant used in our search was SciSpace, which leverages RETA-LLM, a toolkit for retrieval-augmented large language model systems. This approach pairs the language model with an information retrieval system so that responses are grounded in content retrieved from external corpora. It is particularly suited to answering in-domain questions that require more than the world knowledge stored in the model’s parameters. Additionally, we employed Mirrorthink, a platform that utilizes GPT-4, a large language model capable of generating natural language responses to queries. Mirrorthink’s integration with databases such as PubMed is intended to support the accuracy and reliability of the data and results obtained.
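The embedding-based retrieval described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Elicit's implementation: the bag-of-words `embed` function stands in for a learned embedding model, the in-memory list stands in for a vector database, and the paper titles and vocabulary are invented for the example.

```python
import math


def embed(text, vocab):
    """Toy embedding: count how often each vocabulary term occurs (stand-in for a learned model)."""
    tokens = text.lower().split()
    return [float(tokens.count(term)) for term in vocab]


def cosine(u, v):
    """Cosine similarity between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def search(query, index, vocab, k=2):
    """Embed the query with the same model and return the k closest titles."""
    q = embed(query, vocab)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [title for title, _ in ranked[:k]]


# Hypothetical vocabulary and titles, for illustration only.
VOCAB = ["privacy", "healthcare", "ai", "blockchain", "bias", "crop"]
PAPERS = [
    "differential privacy for healthcare ai",
    "blockchain consent in healthcare",
    "crop yield forecasting",
]
INDEX = [(title, embed(title, VOCAB)) for title in PAPERS]

top = search("ai privacy healthcare", INDEX, VOCAB, k=2)
print(top)
```

The key design point is that the query and the stored titles pass through the same embedding function, so that distance in the vector space is meaningful.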

These AI research tools were used to search various electronic databases, employing a strategic combination of full terms and abbreviations of our selected keywords. This allowed for a more comprehensive and efficient exploration of the available literature, ensuring that the most relevant and up-to-date studies were included in the review. Using these advanced AI tools in the literature search process represents a novel approach to conducting literature reviews. It demonstrates the potential of AI to significantly contribute to academic research by streamlining the identification and retrieval of pertinent studies, thereby facilitating a more thorough and systematic review of the literature on AI in healthcare.

2.3. Eligibility Criteria

This review encompasses peer-reviewed articles published within the timeframe of 2018–2023. These articles were selected by specific inclusion criteria, emphasizing the semantic similarity of the article’s title and abstract to the research question. Re-ranking the papers based on relevance ensured that the most pertinent studies were included in the review. The results were analyzed through a systematic, three-step process. The first step utilized AI research tools to gather a significant number of articles. During this phase, the titles and abstracts of the articles were meticulously examined for specific search terms and exclusion criteria. To guarantee the uniqueness of the articles, duplicates were automatically removed by the AI tools and then manually by the researchers. In the second step, the articles were independently screened based on their title and abstract to identify those that generally met the inclusion criteria. This step involved a comprehensive assessment of each article by reading the complete text, ensuring that only the most pertinent studies were chosen for further analysis. The final step involved retrieving the full-text articles and conducting data extraction. This process was streamlined by a modified template from the PRISMA method, which offered a structured approach to data extraction. This phase also employed AI tools to extract insights and information from the chosen articles. This innovative approach to data extraction enhanced the process’s efficiency and ensured the extracted data’s accuracy and reliability. This methodical approach to data analysis, supported by AI tools and guided by the PRISMA method, ensured a thorough and accurate analysis of the articles. The use of in-text and narrative citations further strengthened the credibility of the analysis.

2.4. Results

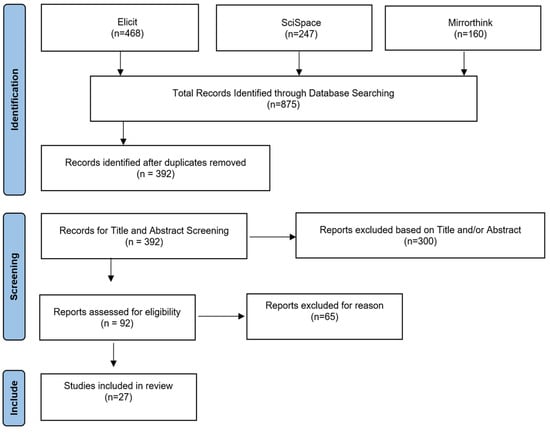

The initial search of information sources yielded 875 articles from various databases, all of which were deemed relevant to the research question by the AI tool. In the second step, 483 duplicate articles were removed, and the abstracts of the remaining 392 articles were manually reviewed for relevance to the research question. However, 300 of these articles were found to be outside the scope of the review. In the third step, the remaining 92 articles were thoroughly reviewed. Out of these, 65 were excluded for various reasons: some were not related to healthcare, some did not discuss AI, some did not address privacy challenges, some did not discuss large-scale data processing, Differential Privacy, or anonymization schemes, some did not discuss patient perceptions, some were published before the specified date range, some were not published in English, and some had low quality or a high risk of bias. After this rigorous screening process, only 27 of the original 875 articles were deemed suitable for review using the PRISMA template, as shown in Figure 1. This methodical approach ensured that only the most relevant and high-quality articles were included in the review, enhancing the strength and validity of the research findings.

Figure 1. Process map of research methodology.
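The article counts reported in this section can be checked with simple arithmetic. The sketch below recomputes each stage from the figures stated in the text (875 identified, 483 duplicates, 300 out of scope, 65 excluded after full review); the function and key names are illustrative choices, not part of the PRISMA template.

```python
def prisma_flow(identified, duplicates, out_of_scope, full_text_excluded):
    """Recompute the number of articles remaining after each screening stage."""
    screened = identified - duplicates          # abstracts reviewed manually
    assessed = screened - out_of_scope          # articles reviewed in full
    included = assessed - full_text_excluded    # articles retained for the review
    if min(screened, assessed, included) < 0:
        raise ValueError("exclusions exceed the number of articles available")
    return {
        "identified": identified,
        "screened": screened,
        "full_text_assessed": assessed,
        "included": included,
    }


flow = prisma_flow(identified=875, duplicates=483, out_of_scope=300, full_text_excluded=65)
print(flow)
```

The recomputed totals (392 screened, 92 assessed in full, 27 included) agree with the counts reported above.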

3. Transformative Impact and Ethical Implications of AI in Healthcare

3.1. AI’s Capabilities and Its Impact on Healthcare

Integrating Artificial Intelligence (AI) into healthcare, particularly in data processing and analysis, is heralding a new era of medical care. This integration is not just about technological advancement but also encompasses a profound shift in the paradigm of patient care, providing opportunities to enhance healthcare delivery through more effective and efficient practices. However, this transformation is accompanied by intricate ethical challenges that must be navigated with caution and foresight. AI’s capabilities in healthcare are extensive and diverse, ranging from diagnostics to treatment strategies and healthcare administration. For instance, AI systems have shown remarkable proficiency in structuring vast medical data, identifying clinical patterns, and validating medical hypotheses. This capability has led to improvements in healthcare quality and efficiency, alleviating the workload of healthcare professionals. The application of AI to making complex clinical decisions, particularly those that hinge on patient preferences, is a significant development. In scenarios like cardio-pulmonary resuscitation and determining Do Not Attempt to Resuscitate (DNAR) status, AI can offer computational assistance that aligns with patient preferences while mitigating human biases in decision-making under stress and time pressure [4,5,6].

The advancement of AI in healthcare has also been instrumental in enhancing diagnostic accuracy, tailoring personalized treatment strategies, and improving patient outcomes. AI’s predictive capabilities in healthcare are particularly noteworthy, enabling proactive interventions and contributing to public health by identifying potential health risks and disease patterns. These capabilities are facilitated by AI’s ability to process and analyze extensive datasets, including patient histories, diagnostic information, and treatment outcomes. As AI models become increasingly sophisticated and integrated into clinical settings, they necessitate a strong emphasis on data integrity and security. However, the reliance on extensive health data for AI algorithms brings critical ethical challenges to the fore. These include concerns related to patient privacy, decision-making autonomy, and data integrity. The need for large volumes of data to effectively train and operate AI systems poses inherent risks of privacy infringement. Therefore, there is a pressing need for careful management of health data collection, storage, and processing to uphold patient confidentiality. Moreover, using AI in disease prediction and risk factor analysis requires an ethical approach to handling sensitive health information. The potential for bias in AI algorithms, resulting from training on datasets not representative of the diverse patient population, could lead to inaccurate results and raise ethical concerns about equitable patient care [4,5,6].

The introduction of AI into healthcare decision-making processes also raises questions about the autonomy of healthcare providers and patients. The extent to which AI should influence medical decisions is a matter of ongoing debate, underscoring the need to balance AI recommendations with human judgment. While AI’s capabilities in healthcare offer significant opportunities for enhancing patient care, addressing these ethical concerns is paramount. Responsible use of AI in healthcare requires navigating complex issues around privacy, bias, autonomy, and the integrity of decision-making processes. As AI continues to evolve, it is crucial to focus on ethical considerations to realize its full potential in a manner that aligns with the core values of healthcare [4].

Ref. [6] provides comprehensive insights into the complexities and ethical considerations surrounding the use of AI in healthcare, especially in the context of decision-making for cardiopulmonary resuscitation and Do Not Attempt to Resuscitate (DNAR) status. The study, conducted at a university hospital, highlights the potential of AI to improve healthcare delivery and decision-making, especially in high-pressure, ethically sensitive situations. The AI systems discussed in the paper are primarily designed to assist with complex clinical decisions that depend heavily on patient preferences, such as resuscitation decisions. These systems can analyze vast data sets to generate suggestions consistent with patient preferences and likely outcomes, potentially reducing the impact of stress, time pressure, personal biases, and conflicts of interest that may affect human decision-making. The paper acknowledges the challenges in healthcare decision-making, such as insufficient knowledge about patient preferences, time pressure, and personal biases that guide care considerations. It suggests that AI-based decision support could significantly improve the status quo by helping healthcare professionals and legal representatives make more informed decisions about a patient’s DNAR status. The AI system would support rather than replace human decision-making, acting as a consultative tool [6].

However, the development of such AI systems is ethically demanding. It requires careful consideration of several preconditions, including the role of AI relative to human decision-making, legal liabilities, and the balance between algorithmic suggestions and human judgment [6]. The paper emphasizes the importance of transparency, explicability, bias monitoring, and user trust in ensuring the ethical application of AI in healthcare [4]. It also highlights the need for compatibility with hospital information systems, practical user training, and awareness of the limitations and risks of AI support in decision-making. The study’s conclusion underscores the need for a well-defined ethical framework and evaluation standards for AI-based decision-support systems. As AI systems gain recognition for their accuracy and reliability, they raise critical questions at the intersection of medicine, ethics, and computer science, including bias, fairness, autonomy, and accountability [5]. The paper advocates for including various perspectives, particularly those of patients and legal representatives, in addressing these ethical and policy concerns to ensure the responsible use of AI in healthcare.

3.2. Addressing Privacy and Patient Agency through AI

The integration of AI in healthcare brings forth complex privacy challenges, particularly in the realms of data control and usage by private corporations. As AI systems increasingly handle sensitive patient data, concerns regarding the stewardship of this information by private entities become paramount. Establishing transparent and accountable data governance frameworks that respect patient privacy and agency is essential to addressing the potential for misuse or unauthorized exploitation of health data. These challenges underscore the need for a delicate balance between technological advancement and the protection of patient rights [1].

In the age of digital healthcare transformation, AI-driven systems are increasingly at the forefront of medical innovation. However, this advancement brings significant challenges concerning patient privacy and agency. A primary concern is the risk of privacy infringement through the misuse of pseudo-anonymized patient data [7,8]. Sophisticated techniques can potentially re-identify individuals from anonymized data, thus breaching patient confidentiality. In addition, the complexity of AI algorithms often makes it difficult to track how patient data are used within these systems [9,10], leading to potential misuse or a lack of transparency.

3.3. Implementing Ethical AI in Healthcare

Integrating Artificial Intelligence (AI) in healthcare represents a transformative shift in how medical services are delivered and managed. AI can improve diagnostic accuracy, personalize treatment plans, optimize resource allocation, and predict patient outcomes more precisely. However, the deployment of AI in healthcare must be cautiously approached due to the sensitive nature of medical data and the ethical implications surrounding its use [11]. Safeguarding patient data privacy is a critical issue in the healthcare sector, particularly with the integration of AI technologies that rely on medical records replete with sensitive personal information. Mishandling such data could result in significant privacy violations and misuse [7,12]. To mitigate these risks, a suite of sophisticated technologies has been developed to maintain the confidentiality and integrity of patient information while harnessing AI’s capabilities.

Blockchain technology provides a secure, decentralized method for storing and managing healthcare data, utilizing a distributed ledger system to ensure that patient records are immutable and verifiable, thereby helping to guard against unauthorized modification and data breaches. It also enables secure data sharing among verified entities. In parallel, Federated Learning (FL) allows for training AI models on decentralized devices or servers that hold local data samples, negating the need to transfer sensitive patient data and thus reducing centralization concerns [13]. Homomorphic Encryption (HE) allows computations to be performed on encrypted data without decryption, so that AI can process and learn from the data while they remain encrypted, thus upholding privacy even when third-party analysis is required [9]. Lastly, Differential Privacy (DP) introduces controlled noise into data sets or query results to obscure individual identities, permitting the training of AI models on population-representative data without compromising individual privacy [10]. Collectively, these technologies form a robust framework for protecting patient privacy in the age of AI-driven healthcare. Implementing them reflects a commitment to ethical standards in healthcare AI: they address technical challenges and demonstrate a dedication to respecting and protecting patient rights, particularly regarding privacy and autonomy. As AI continues to evolve and become more integral to healthcare delivery, the role of these technologies in maintaining a balance between innovation and ethical responsibility becomes increasingly vital. They represent both solutions to current challenges and a vision for a future in which healthcare AI advances alongside ethical considerations, ensuring that patient welfare remains at the core of technological progress in the healthcare sector.
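To make the idea of "controlled noise" concrete, the following sketch applies the Laplace mechanism, a standard DP technique, to a counting query. It is a minimal illustration under stated assumptions rather than a method taken from the reviewed studies: the records are synthetic, the field name `diabetic` and the value of epsilon are chosen arbitrarily, and the helper names are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two Exp(1) draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records matching the predicate.

    A counting query has sensitivity 1 (adding or removing one patient
    changes the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def is_diabetic(record):
    return record["diabetic"]


# Synthetic records for illustration only: 25 of 100 patients are flagged.
RECORDS = [{"diabetic": i % 4 == 0} for i in range(100)]

noisy = private_count(RECORDS, is_diabetic, epsilon=0.5, rng=random.Random(0))
print(round(noisy, 2))
```

A smaller epsilon adds more noise and therefore gives stronger privacy at the cost of accuracy, which is the privacy and utility trade-off discussed throughout this review.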

3.3.1. Blockchain Technology: Reinforcing Security and Transparency in Healthcare AI

Integrating Artificial Intelligence (AI) in healthcare marks a significant advancement in medical technology, offering potential benefits in enhancing patient care. However, it also introduces substantial ethical challenges concerning privacy and patient autonomy. These concerns necessitate the adoption of advanced technologies that can safeguard patient data while leveraging the benefits of AI in healthcare [11]. Blockchain technology has emerged as a promising solution for enhancing data security and integrity in healthcare AI. Its decentralized nature eliminates the need for a central authority, reducing the risk of a single point of failure that could compromise the entire system. In healthcare, blockchain can create a secure and unforgeable record of patient data transactions, ensuring that medical records are accurate, consistent, and tamper-proof. For a detailed exploration of how blockchain technology influences privacy and decision-making in AI healthcare, see the insights in Table 2. Blockchain’s application extends beyond data storage; it can also facilitate secure data sharing between different healthcare stakeholders. Smart contracts, which are self-executing contracts with the terms of the agreement written directly into code, can automate the consent process for data sharing, ensuring that patient data are accessed only by authorized parties and only under conditions agreed upon by the patient. Moreover, blockchain can be integrated with other technologies, such as AI and IoT devices, to enable secure, real-time monitoring of patient health data, providing healthcare professionals with reliable data for decision-making while preserving patient privacy [7,12].

Table 2. Deeper impact of blockchain technology on privacy and decision-making in AI healthcare.
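The tamper-evidence property attributed to blockchain above rests on chaining cryptographic hashes. The sketch below shows only that core idea, as a single-node hash chain of consent and access events; it is an assumption-laden illustration that omits distribution, consensus, and smart contract execution, and the event fields and identifiers are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, payload, prev_hash):
    """Hash a block's contents together with the hash of the previous block."""
    body = json.dumps({"index": index, "payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a new block that commits to the current tip of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    index = len(chain)
    chain.append({
        "index": index,
        "payload": payload,
        "prev": prev_hash,
        "hash": block_hash(index, payload, prev_hash),
    })


def verify(chain):
    """Return True only if every block's hash and back-link are consistent."""
    prev_hash = GENESIS
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["payload"], block["prev"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical consent and access events; only metadata is stored, not clinical data.
ledger = []
append_block(ledger, {"event": "consent_granted", "record": "rec-001", "to": "clinic-A"})
append_block(ledger, {"event": "record_read", "record": "rec-001", "by": "clinic-A"})

intact = verify(ledger)                    # chain as written
ledger[0]["payload"]["to"] = "clinic-B"    # simulate an after-the-fact edit
still_valid = verify(ledger)               # the edit breaks the stored hash
print(intact, still_valid)
```

In a real deployment, the chain would be replicated across independent parties so that an attacker could not simply recompute every hash after altering an entry.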

Recent research underscores the collective potential of blockchain technology in bolstering the security and privacy of healthcare AI systems. The authors of [14] introduced an IoT-based model for secure big data transfer in healthcare, leveraging blockchain for data integrity and security. The authors of [15] proposed an architecture combining AI and blockchain to protect smart healthcare systems from data integrity attacks, focusing on wearable devices. The authors of [16] envisioned a healthcare metaverse where AI and blockchain work together to offer secure and efficient remote health monitoring and virtual consultations. The authors of [17] provided a bibliometric analysis highlighting the synergy between AI and blockchain in enhancing security and calling for more research to develop stable systems.

These studies collectively advocate for integrating blockchain with AI to address security concerns, each contributing unique perspectives on system architecture, data transmission, and the application of smart contracts. By synthesizing their findings, it becomes evident that the convergence of these technologies can lead to innovative solutions for managing healthcare data with improved security and patient privacy. However, they also agree on the necessity for continued research to overcome data privacy, security, and trust challenges, ensuring the practical implementation of these advanced systems in real-world healthcare settings. Continuing from the synthesized research findings, it is clear that the application area of blockchain in healthcare AI is vast and multifaceted. The integration of blockchain technology is not limited to securing electronic health records but extends to enhancing the entire healthcare delivery system. This includes patient data management, drug traceability, clinical trials, and personalized medicine, where secure and transparent data handling is paramount. As the healthcare industry continues to innovate, blockchain and AI will likely expand into new areas, such as advanced biometrics for patient identification and decentralized autonomous organizations (DAOs) for patient-led research initiatives. To realize these advancements, stakeholders must address existing challenges through interdisciplinary collaboration, investment in technology infrastructure, and the development of regulatory frameworks that balance innovation with ethical considerations. Continuous dialogue between technologists, healthcare professionals, patients, and policymakers is essential to navigating the complexities of integrating these transformative technologies into healthcare. With a concerted effort, integrating blockchain and AI can lead to a more secure, efficient, and patient-centered healthcare system [18].

The convergence of blockchain and AI in healthcare applications presents a promising avenue for addressing data privacy and integrity challenges. Blockchain’s attributes of decentralization, immutability, and transparency complement AI’s capabilities, creating a secure environment for handling sensitive healthcare data. The studies in [14,15,16,17] collectively underscore the potential of blockchain technology to enhance the security and efficiency of healthcare AI systems. As the healthcare industry continues to evolve with the integration of AI and blockchain, it is crucial to address the ongoing challenges and threats to data security. Continuous research and development are necessary to refine these technologies and their applications in healthcare. Future research directions may include optimizing blockchain and AI algorithms, developing more robust security measures, and exploring new application areas within the healthcare sector. Blockchain technology offers a robust framework for addressing the privacy and data integrity challenges of AI in healthcare. By leveraging the strengths of both technologies, healthcare systems can achieve higher security and efficiency, ultimately leading to improved patient care and trust in digital healthcare services. The body of research discussed herein provides valuable insights and a solid foundation for future advancements in this interdisciplinary field.

3.3.2. Federated Learning: Enhancing Privacy in Healthcare AI

Federated Learning (FL) represents a paradigm shift in developing Artificial Intelligence (AI) models, particularly in sensitive domains such as healthcare. By enabling multiple institutions to collaborate on AI model development without exchanging patient data, FL addresses two of the most pressing concerns in healthcare AI: privacy and data integrity. Privacy is a fundamental concern when dealing with healthcare data due to its sensitive nature. Traditional machine learning approaches often require centralizing data from various sources, which poses significant privacy risks. FL circumvents this issue by allowing each participating institution to retain its data locally. As Ref. [19] explains, only the model parameters or gradients (essentially the learned features or updates to the model) are shared with a central server or among participants. This ensures that the raw patient data, which may contain personally identifiable information, never leaves the premises of the institution where it was collected. Techniques such as Secure Multi-party Computation (SMC) and Differential Privacy (DP) further enhance privacy in FL. SMC allows functions to be computed jointly over inputs held by different parties while keeping those inputs private, which is ideal for scenarios where data cannot be pooled together. DP provides a mathematical framework that guarantees that the outcome of an analysis is not significantly affected by the inclusion or exclusion of any individual’s data. This means that even someone with access to the output of a model trained with DP would not be able to infer much about any individual’s data [13].
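The parameter-sharing scheme described above can be sketched with federated averaging, one common aggregation rule. It is used here as an illustrative assumption, not necessarily the method of [19]. The model is a one-variable linear regression, the three "sites" hold synthetic toy data, and all function names are hypothetical. Only the parameter pair `(w, b)` crosses the site boundary.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Run a few gradient-descent steps on one site's private data.

    The model is y = w * x + b; `data` is a list of (x, y) pairs that
    stays at the site. Only the updated (w, b) is returned.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Average client parameters, weighted by local sample counts."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(sites, rounds=100):
    """Server loop: broadcast weights, collect local updates, aggregate."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Toy data: three sites sample y = 2x + 1 over different ranges of x.
sites = [
    [(x / 10, 2 * x / 10 + 1) for x in range(0, 5)],
    [(x / 10, 2 * x / 10 + 1) for x in range(5, 12)],
    [(x / 10, 2 * x / 10 + 1) for x in range(12, 20)],
]
w, b = train(sites)
```

On this noise-free toy problem the global model approaches `w = 2` and `b = 1` even though no site sees the others' data. Real deployments would add the protections named in the text, since shared parameters alone can still leak information.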

Data integrity is another critical challenge in healthcare AI. Data quality and consistency across different healthcare institutions can vary greatly, leading to biased or non-generalizable AI models. FL offers a solution to this by enabling the development of models that learn from a diverse and representative dataset. Since each institution trains a local model on its own data, the global model benefits from a wide range of data sources, leading to more accurate and robust AI models. These models are better equipped to perform well across different patient populations and healthcare settings, as they are not limited to data from a single source. Moreover, FL can help address the issue of data fragmentation in healthcare. As healthcare data are often siloed across various institutions, FL allows for connecting these fragmented data sources while maintaining privacy. This is particularly beneficial for tasks such as patient similarity learning, representation learning, and predictive modeling, where the ability to draw insights from a comprehensive dataset is crucial. By leveraging data from multiple institutions, FL can help create more accurate and generalizable models that reflect the global patient population, thus improving the quality of care [13].

Despite the advantages of FL in preserving privacy and ensuring data integrity, several challenges need to be addressed to fully realize its potential in healthcare AI. The authors of [19] highlight the statistical variance in data distribution among clients, communication efficiency, and the potential for malicious clients as operational challenges in FL. The statistical heterogeneity of data across different institutions can lead to skewed models if not correctly managed. Communication efficiency becomes a concern when dealing with many participants, as the exchange of model updates can be bandwidth-intensive. Additionally, the risk of malicious clients attempting to compromise the model or infer sensitive information requires robust security mechanisms [13].

To address these challenges, future research in FL should focus on improving data quality, incorporating expert knowledge into the learning process, developing incentive mechanisms to encourage participation, and personalizing healthcare applications. Ensuring high-quality data is essential for the success of FL, as the models are only as good as the data they learn from. Expert knowledge can guide the learning process, making the models more practical and clinically relevant. Incentive mechanisms are necessary to motivate institutions to participate in FL, while personalization is critical to tailoring healthcare solutions to individual patient needs. In summary, Federated Learning holds significant promise for overcoming privacy and data integrity challenges in healthcare AI. FL can facilitate the development of robust, accurate, and generalizable AI models by enabling collaborative model training across various institutions while safeguarding data privacy. However, realizing the full potential of FL will require ongoing research to tackle operational challenges, improve data quality, and ensure the models are clinically relevant and personalized for patient care. As the healthcare industry continues to evolve, FL offers a path toward more secure, efficient, and patient-centric AI solutions [13].

3.3.3. Unlocking Privacy: Harnessing Homomorphic Encryption for Healthcare AI

Homomorphic Encryption (HE) stands as a transformative technology in data security, particularly within the healthcare sector, where the confidentiality and integrity of patient information are of utmost importance. The ability of HE to enable computations on encrypted data without the need to decrypt it first offers a powerful tool for maintaining privacy while still allowing for the valuable analysis and utilization of healthcare data by Artificial Intelligence (AI) systems. The study by [20] delves into the intricacies of HE and its application in healthcare AI, providing a detailed exploration of how HE can address the twin challenges of privacy and data integrity. The authors elucidate the operational mechanisms of HE, the evolution of various HE schemes, and the practical considerations for its implementation in healthcare environments. One of the pivotal advantages of HE in healthcare AI is its role in cloud computing. As healthcare providers increasingly rely on cloud services for data storage and computation, the risk of exposing sensitive patient data to third-party service providers becomes a significant concern. HE mitigates this risk by ensuring that only encrypted data are processed by these external entities, thus maintaining the confidentiality of patient information even in a distributed computing environment [9].

The operational mechanism of HE is a multi-step process that begins with the encryption of data by the client before it is sent to the cloud service provider. The provider then performs the necessary computations on the encrypted data using HE techniques, which results in encrypted outputs. A notable challenge in this process is the accumulation of noise with each operation, which, if left unchecked, can prevent the result from being decrypted correctly. Techniques such as bootstrapping and squashing are employed to manage this noise and maintain the integrity of the encrypted data. The client then decrypts the results, so the sensitive information is never exposed to the provider. The authors of [20] also provide a historical perspective on the evolution of HE schemes, tracing back to the early concept of ‘privacy homomorphism’. This foundational work laid the groundwork for subsequent developments in the field, including the Goldwasser-Micali and Elgamal schemes, which introduced improvements in security and functionality. Additively homomorphic methods allow an operation on ciphertexts that corresponds to addition on the underlying plaintexts. The Paillier cryptosystem is a well-known example: it supports the addition of encrypted values and the multiplication of an encrypted value by a plaintext constant, whereas fully homomorphic schemes support both addition and multiplication on encrypted data [9].
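The encrypt, compute, and decrypt cycle described above can be sketched with a textbook Paillier implementation. The primes are deliberately tiny and the helper names (`add_encrypted`, `scale_encrypted`) are hypothetical, so this is a teaching sketch under stated assumptions, not a secure or production-grade implementation. It relies on the additive property of Paillier: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import secrets


def keygen(p, q):
    """Build a Paillier key pair from two primes (toy sizes only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1  # common simplifying choice of generator
    mu = pow(lam, -1, n)  # modular inverse; this shortcut holds for g = n + 1
    return (n, g), (lam, mu)


def encrypt(pub, m):
    """Encrypt an integer m in [0, n) using fresh randomness r."""
    n, g = pub
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(pub, priv, c):
    """Recover the plaintext from ciphertext c with the private key."""
    n, _ = pub
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(pub, c1, c2):
    """Ciphertext product corresponds to plaintext sum (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)


def scale_encrypted(pub, c, k):
    """Ciphertext power corresponds to plaintext times constant k (mod n)."""
    n, _ = pub
    return pow(c, k, n * n)


# Client side: encrypt two hypothetical case counts before upload.
pub, priv = keygen(1009, 1013)
c1, c2 = encrypt(pub, 120), encrypt(pub, 85)

# Provider side: computes on ciphertexts only, never sees 120 or 85.
c_sum = add_encrypted(pub, c1, c2)
c_scaled = scale_encrypted(pub, c1, 3)

# Client side: decrypt the results.
total = decrypt(pub, priv, c_sum)
tripled = decrypt(pub, priv, c_scaled)
```

Here `total` is 205 and `tripled` is 360, with results taken modulo `n`. The noise management mentioned in the text does not arise in Paillier; it is a feature of lattice-based schemes, for which a vetted library would be used in practice.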

Despite the promising capabilities of HE, challenges still need to be fully addressed to harness its potential in healthcare AI. One of the primary challenges is the computational intensity associated with HE operations. The encryption and decryption processes and the computations on encrypted data are resource-intensive and can be significantly slower than operations on plaintext. This presents a hurdle for the real-time analysis and processing of large datasets commonly encountered in healthcare applications. Researchers are optimizing HE algorithms to improve efficiency and make them more practical for widespread use in healthcare AI. Another area of focus is the scalability of HE solutions. As healthcare systems generate vast amounts of data, the HE methods must be able to scale accordingly to handle the increasing volume while maintaining the same level of security and privacy. This requires advancements in the underlying cryptographic techniques and the infrastructure that supports HE, such as cloud computing platforms and specialized hardware [9].

Furthermore, the integration of HE into existing healthcare IT systems poses its own set of challenges. Healthcare providers must navigate the complexities of implementing HE within their current workflows, ensuring compatibility with existing software and hardware, and training staff to use the new systems effectively. The cost of adoption is also a consideration, as deploying HE solutions may require significant investment in technology and expertise. Despite these challenges, the benefits of HE in healthcare AI are clear. By enabling secure and private data analysis, HE facilitates the development of more accurate and personalized predictive models, which can lead to better patient outcomes. It also allows for secure data sharing between healthcare providers and researchers, fostering collaboration and innovation while protecting patient privacy. As the technology matures and solutions to the current challenges are found, HE is poised to play a critical role in the future of healthcare AI, offering a path to harness the power of data while upholding the highest standards of privacy and data integrity. As the healthcare industry continues to evolve with AI and cloud computing integration, HE will remain an essential tool for ensuring that patient data are handled with the utmost security and confidentiality, ultimately contributing to advancing healthcare technology and patient care [9].

3.3.4. Differential Privacy: Advanced Techniques in Healthcare AI

Differential Privacy (DP) is a critical privacy-preserving technique that has gained significant traction in healthcare AI due to its ability to provide strong privacy guarantees. It functions by introducing a calibrated amount of random noise, either to the data itself or to the outputs of algorithms, thereby making it statistically difficult for attackers to deduce sensitive information about any individual in the dataset. This method is advantageous for sharing insights from health data, such as statistical summaries or AI model parameters, while safeguarding patient confidentiality. The core principle of DP is to ensure that the presence or absence of any individual’s data does not substantially alter the outcome of an analysis. This feature is vital in healthcare settings, where the sensitivity of patient data demands the highest level of confidentiality. DP’s application extends to various algorithms, including but not limited to boosting, principal component analysis, support vector machines, and deep learning. It is also effectively employed in Federated Learning scenarios involving decentralized data processing across multiple entities, such as hospitals or research institutions, to prevent indirect data leakage, a prevalent issue in collaborative environments [19].
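As a minimal sketch of output perturbation, the Laplace mechanism below releases a noisy count. It assumes a counting query, whose sensitivity is 1 because adding or removing one patient changes the count by at most 1. The record layout, function names, and privacy parameter `epsilon` are illustrative assumptions, not values from the cited studies.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Release a count with Laplace noise calibrated to sensitivity 1."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one individual changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical records; the fixed seed is only for reproducibility.
rng = random.Random(0)
records = [{"diagnosis": "diabetes"}] * 40 + [{"diagnosis": "asthma"}] * 60
noisy = private_count(
    records, lambda r: r["diagnosis"] == "diabetes", epsilon=0.5, rng=rng
)
```

Smaller values of `epsilon` give stronger privacy and noisier answers. Naive floating-point samplers such as this one are known to have subtle weaknesses, so a vetted DP library would be preferable outside of illustration.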

Despite its benefits, it is crucial to acknowledge that DP is inherently a ‘lossy’ technique. While it excels at enhancing privacy, it can simultaneously lead to reduced accuracy of predictions or analyses due to the added noise. This inherent trade-off between privacy and utility is a pivotal consideration in the deployment of DP within healthcare AI. As privacy protection intensifies with increased noise, the usefulness of the data for research and the accuracy of AI models may be compromised. To counterbalance this, researchers often integrate DP with other methods like Secure Multi-party Computation (SMC) to balance privacy and data utility [19]. This hybrid approach is designed to control the escalation of noise with the number of participants, thus maintaining both privacy and the practical value of the data. DP presents a robust framework for preserving individual privacy in healthcare AI applications, particularly in distributed data processing contexts. Its capability to prevent data leakage must be carefully weighed against the potential diminution in prediction accuracy and data utility. Therefore, meticulous implementation and, where necessary, incorporating additional privacy-preserving techniques are essential to optimizing the benefits of DP in healthcare AI [10].
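One way to see how combining DP with SMC can limit noise growth is sketched below, under simplifying assumptions that are not drawn from [19]: counts are combined by additive secret sharing so that only the total is revealed, and Laplace noise (variance 2b² for scale b) is then needed once on the total rather than once per site. The modulus and all function names are hypothetical, and the question of which party adds the noise is left out.

```python
import secrets

PRIME = 2**61 - 1  # field modulus; assumed larger than any possible total


def share(value, n_parties):
    """Split an integer into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(local_values):
    """Each site shares its value; only the combined total is rebuilt."""
    n = len(local_values)
    # all_shares[i][j] is the share that site i sends to site j.
    all_shares = [share(v, n) for v in local_values]
    # Site j adds the shares it received and publishes that partial sum.
    partials = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    return sum(partials) % PRIME


def noise_variance_local(n_sites, epsilon, sensitivity=1.0):
    """Total variance when every site adds its own Laplace noise."""
    return n_sites * 2.0 * (sensitivity / epsilon) ** 2


def noise_variance_aggregate(epsilon, sensitivity=1.0):
    """Variance when Laplace noise is added once to the secure total."""
    return 2.0 * (sensitivity / epsilon) ** 2


total = secure_sum([120, 85, 47])  # hypothetical per-hospital counts
```

With ten sites and `epsilon = 1`, per-site noise gives a total variance of 20, against 2 when noise is applied once to the securely computed sum. This is the sense in which the hybrid approach keeps noise from escalating with the number of participants.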

3.4. Ethical Technologies as Cornerstones in Healthcare AI

Integrating blockchain, Federated Learning (FL), Homomorphic Encryption, and Differential Privacy into healthcare AI systems is a multifaceted endeavor that addresses the pressing need for ethical standards and the protection of patient rights in the rapidly evolving field of healthcare technology. Collectively, these technologies form a robust framework for managing the challenges associated with the privacy and security of sensitive healthcare data while fostering innovation and efficiency in healthcare delivery.

Blockchain technology stands out for its unique attributes of decentralization, immutability, and transparency, which are particularly beneficial in the context of healthcare AI systems. By creating a secure and tamper-proof ledger for medical data transactions, blockchain technology ensures the integrity and traceability of patient data, which is paramount in clinical settings. For instance, blockchain can be used to securely manage electronic health records (EHRs), providing a reliable source of patient data for healthcare professionals and AI algorithms while preventing unauthorized access and data breaches [19]. Federated Learning (FL) represents a paradigm shift in data analysis and machine learning, particularly within the healthcare sector. By enabling the training of AI models on decentralized data sources, FL ensures that sensitive patient information remains within the confines of the local institution, thereby preserving privacy. This approach is useful for applications such as predicting hospital readmission risks and screening for medical conditions using wearable device data. FL’s ability to integrate data from various sources without compromising privacy is a testament to its potential to enhance the capabilities of healthcare AI systems. Homomorphic Encryption is a cryptographic technique that allows computations to be performed on encrypted data without the need to decrypt it first. Healthcare providers can analyze and process sensitive patient data while it remains encrypted, significantly reducing the risk of data exposure. Homomorphic Encryption in healthcare AI systems enables secure data utilization, ensuring patient confidentiality is maintained even during complex data analysis processes [15]. Differential Privacy is a technique that adds a calibrated amount of noise to the results of data queries, making it difficult to identify individual records while still allowing for accurate aggregate analysis. This approach is particularly relevant in healthcare, where patient data are highly sensitive. Differential Privacy ensures that the privacy of individual patients is not compromised when their data are used for research or to train AI models. By integrating Differential Privacy into healthcare AI systems, researchers and clinicians can gain valuable insights from large datasets without risking the exposure of personal health information, thus maintaining patients’ trust and complying with stringent privacy regulations such as GDPR and HIPAA [21].

The Internet of Healthcare Things (IoHT) represents a significant advancement in healthcare data management, with devices such as smartwatches and wearable trackers collecting a wealth of health-related data. These data are invaluable for healthcare professionals to make informed decisions regarding disease treatment and management. However, the traditional centralized machine learning models face challenges related to data access restrictions, potential biases, and high resource demands. Integrating blockchain, FL, Homomorphic Encryption, and Differential Privacy within IoHT systems offers solutions to these challenges, ensuring that data are managed ethically and securely while enabling innovative healthcare solutions [15]. Despite the promise of FL, there are inherent challenges, such as the statistical diversity of data across clients and communication efficiency. Techniques like Agnostic Federated Learning and q-Fair Federated Learning have been proposed to address the issue of data heterogeneity. At the same time, client selection protocols, model compression, and update reduction strategies aim to improve communication efficiency. Moreover, integrating Secure Multi-party Computation and Differential Privacy within FL frameworks enhances the privacy and security of the data, making FL a powerful tool for healthcare applications [19].

As AI continues to reshape healthcare, integrating blockchain, FL, Homomorphic Encryption, and Differential Privacy is critical for addressing the complex challenges of intelligent healthcare systems. These technologies enhance the security and privacy of healthcare delivery and ensure that ethical standards are upheld. The collective application of these technologies represents a vision for a future where healthcare AI advances in tandem with ethical considerations, with patient welfare remaining at the core of technological progress [21]. The detailed integration of blockchain, Federated Learning, Homomorphic Encryption, and Differential Privacy into healthcare AI systems is a testament to the commitment to ethical standards and the protection of patient rights. These technologies address the technical challenges of privacy and data security, encapsulating a dedication to respecting and protecting patient autonomy. As the healthcare industry continues to innovate, the role of these technologies in maintaining a balance between innovation and ethical responsibility is vital. They offer solutions for a future where the advancement of healthcare AI occurs hand-in-hand with safeguarding patient welfare, ensuring that the benefits of technology do not come at the expense of privacy and trust.

Integrating these advanced technologies into healthcare AI systems is not merely a technical exercise but a patient-centric approach that prioritizes the well-being and rights of individuals. By leveraging blockchain, healthcare providers can offer a more transparent and patient-controlled exchange of medical data, empowering patients to have a say in who accesses their information. Federated Learning enables the development of more personalized treatment plans by analyzing data from a diverse patient population while keeping individual data localized and private. Homomorphic Encryption and Differential Privacy further contribute to this patient-centric approach by allowing for the secure and private analysis of health data, ensuring that patients’ identities are shielded from potential misuse [15]. The healthcare sector is heavily regulated to protect patient information and ensure the ethical use of data. The technologies discussed herein play a crucial role in helping healthcare organizations navigate the complex landscape of regulatory compliance. By adopting these technologies, healthcare providers can meet the requirements of laws such as HIPAA in the United States and GDPR in Europe, which mandate strict data privacy and security standards. The ethical considerations of patient data use are also addressed, as these technologies provide mechanisms to ensure that patient consent is obtained and that data are used to respect individual autonomy and dignity [19].

Integrating blockchain, FL, Homomorphic Encryption, and Differential Privacy into healthcare AI systems requires collaborative efforts across various disciplines, including computer science, healthcare, law, and ethics. Interdisciplinary teams must work together to design systems that are technologically sound, ethically responsible, and legally compliant. This collaboration is essential for creating healthcare AI systems trusted by patients, clinicians, and society. While the potential of these technologies is immense, there are still challenges to be addressed, such as ensuring data quality, incorporating expert knowledge into AI models, designing efficient incentive mechanisms for data sharing, and improving the precision of AI algorithms. Addressing these challenges will require ongoing research, innovation, and a commitment to continuous improvement. As the healthcare industry moves forward, these technologies must evolve to meet the ever-changing needs of healthcare delivery and the expectations of patients. Ensuring data quality is critical, as the efficacy of AI models depends heavily on the accuracy and reliability of the input data. Involving medical experts in the training process is equally important, as they provide domain-specific insights that can enhance the performance and relevance of AI algorithms [21].

Designing efficient incentive mechanisms is another area that requires attention. These mechanisms are necessary to encourage the sharing of data among institutions and individuals, which is fundamental for the success of Federated Learning [9]. Without proper incentives, the potential of collaborative AI model training may not be fully realized. The personalization of healthcare through AI also presents both a challenge and an opportunity. As AI systems become more sophisticated, they can be tailored to individual patient needs, leading to more effective and personalized treatment plans. However, achieving this level of personalization requires a deep understanding of individual patient data and the ability to adapt AI models accordingly. Finally, improving the precision of AI models in healthcare is an ongoing pursuit. As models become more accurate, they can provide better predictions and recommendations, improving patient outcomes. However, this requires a careful balance between model complexity and interpretability, ensuring that healthcare professionals can understand and trust the AI-driven decisions [5].

As we look to the future, the ethical imperative for developing and integrating AI in healthcare remains paramount. The technologies of blockchain, Federated Learning, Homomorphic Encryption, and Differential Privacy offer a framework for ethical AI by prioritizing the privacy, security, and autonomy of patient data. These technologies must be developed and implemented with a clear ethical vision that places patients’ well-being above all else. Integrating blockchain, Federated Learning, Homomorphic Encryption, and Differential Privacy into healthcare AI systems is a complex but necessary step toward a more secure, private, and ethical healthcare landscape [9]. These technologies provide the tools needed to navigate the challenges of data security and patient privacy in an increasingly digital world. As AI continues to transform healthcare, the commitment to ethical standards and the protection of patient rights must remain at the forefront of technological advancements. The future of healthcare AI is not just about harnessing the power of data but doing so in a way that respects and enhances the human element of healthcare. With continued research, collaboration, and ethical vigilance, the integration of these technologies will lead to a healthcare system that is more efficient, effective, and more aligned with society’s values [5].

The ongoing dialogue between technology developers, healthcare providers, policymakers, and patients is crucial to ensuring that the deployment of AI in healthcare reflects a collective vision that values privacy, security, and ethical responsibility. As these stakeholders engage in this conversation, it is essential to consider the broader implications of technology on accessibility, equity, and the potential for disparities in healthcare outcomes. The promise of AI in healthcare must be accessible to all population segments. This includes ensuring underserved communities have the same opportunities to benefit from AI-driven healthcare advancements. Equity in healthcare AI also means that algorithms are free from biases that could perpetuate disparities in health outcomes. Integrating blockchain, FL, Homomorphic Encryption, and Differential Privacy can help mitigate these risks by providing a secure and unbiased data analysis framework [9].

Research and development efforts must continue to improve the technologies underpinning healthcare AI systems. This includes enhancing the scalability of blockchain, the efficiency of Federated Learning algorithms, the performance of Homomorphic Encryption techniques, and the effectiveness of Differential Privacy measures. Additionally, interdisciplinary research can explore new ways to integrate these technologies, creating even more robust solutions for healthcare data management. As technology evolves, so must the regulatory frameworks governing its use. Regulators must stay abreast of technological advancements to ensure that laws and guidelines protect patient data and promote ethical AI practices. This may involve updating existing regulations or creating new ones that address the unique challenges posed by integrating blockchain, FL, Homomorphic Encryption, and Differential Privacy in healthcare. The journey towards integrating advanced technologies into healthcare AI systems is ongoing and requires a concerted effort from all stakeholders. The potential benefits are vast, including improved patient outcomes, enhanced privacy and security, and a healthcare system that is more responsive to the needs of all individuals. By maintaining a focus on ethical standards, patient rights, and societal values, the future of AI in healthcare can not only leverage the power of data but also embody the principles of compassion, fairness, and respect for human dignity [5,9,21].

The complexity of integrating blockchain, FL, Homomorphic Encryption, and Differential Privacy into healthcare AI systems suggests that public-private partnerships could play a significant role. Collaboration between government agencies, technology companies, healthcare institutions, and academic researchers can accelerate innovation, share risks, and pool resources to tackle the challenges associated with these technologies. As AI becomes more ingrained in healthcare, there is a growing need for ethical AI governance structures. These structures should oversee the development and deployment of AI systems, ensuring that they adhere to ethical guidelines and that there is accountability for their impact on patients and society. This governance could be internal ethics boards within organizations, industry-wide consortia, or independent oversight bodies [9,21].

Patients must actively participate in conversations about how their data are used in healthcare AI. Engaging with patients to understand their concerns and preferences can lead to better-designed AI systems that respect patient autonomy and promote empowerment. Education and awareness are critical components in the successful integration of these technologies. Healthcare professionals must be educated about the capabilities and limitations of AI systems, and patients must be informed about how their data are being used and protected. This transparency is essential for building trust and ensuring all parties are comfortable using AI in healthcare. Blockchain can facilitate patient engagement by giving individuals control over their health data and who can access it. Integrating blockchain, Federated Learning, Homomorphic Encryption, and Differential Privacy into healthcare AI systems is a complex but necessary endeavor to ensure that the digital transformation of healthcare is conducted ethically and responsibly. It requires a collaborative, interdisciplinary approach that balances technological innovation with protecting patient rights and societal values. As we move forward, it is crucial to continue exploring and addressing the technical, ethical, and regulatory challenges that arise to create a healthcare system that is not only intelligent but also just and equitable for all [9,21].

3.5. Algorithmic Fairness, Transparency, and Trust in AI-Driven Healthcare

In AI-driven healthcare, a multifaceted approach is vital to ensure algorithmic fairness, transparency, and trust, which are pivotal for equitable and ethical healthcare delivery. Mitigating algorithmic bias is crucial for equitable patient treatment and involves analyzing and adjusting AI models to reflect diverse patient demographics. Including diverse datasets in AI model training is essential for minimizing biases and promoting equitable health outcomes [8,22]. Additionally, AI fairness and bias detection tools are essential for analyzing and readjusting algorithms to ensure fair treatment across patient demographics. Developing transparent and understandable AI systems is critical to building trust among healthcare stakeholders, addressing the ‘black box’ nature of AI algorithms, and improving the understanding of AI processes [2,23]. Explainable AI (XAI) plays a foundational role in making AI decisions and processes more comprehensible, thus enhancing transparency and fostering trust. This comprehensive methodology ensures that AI applications cater effectively to the diverse needs of patient populations and establishes a trust-based relationship between AI applications and healthcare users, ensuring AI’s role remains informative and supportive [13].
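As an illustration of what a basic bias detection check might compute, the sketch below measures two widely used group-fairness gaps: the difference in positive-prediction rates (demographic parity) and the difference in true-positive rates (equal opportunity). The predictions, labels, and group names are made-up toy values, and the function names are hypothetical. The cited works may rely on other metrics or tools.

```python
def rate(values):
    """Fraction of positive (1) entries; 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = [rate(v) for v in by_group.values()]
    return max(rates) - min(rates)


def equal_opportunity_difference(predictions, labels, groups):
    """Largest gap in true-positive rate between any two groups."""
    by_group = {}
    for pred, label, group in zip(predictions, labels, groups):
        if label == 1:  # only patients who truly have the condition
            by_group.setdefault(group, []).append(pred)
    rates = [rate(v) for v in by_group.values()]
    return max(rates) - min(rates)


# Toy example: binary predictions for two hypothetical patient groups.
predictions = [1, 1, 0, 1, 0, 1, 0, 0]
labels = [1, 1, 0, 1, 1, 1, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

dp_gap = demographic_parity_difference(predictions, groups)
eo_gap = equal_opportunity_difference(predictions, labels, groups)
```

In this toy data the model flags 75% of group A but 25% of group B, and detects every true case in group A but only one in three in group B. A gap near zero on both measures is a necessary signal of fairness rather than a sufficient one, so such checks complement clinical review.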

Incorporating fairness and explanation in AI-informed healthcare decision-making, as noted by the authors of [24], is paramount to ensuring ethical AI integration. AI systems must refrain from perpetuating or introducing new forms of discrimination, especially given the diversity of patient populations. Detecting and mitigating biases in AI models is essential for equitable treatment across all patient groups. The development of AI models that are both fair and explainable allows healthcare providers and patients to understand and trust AI decisions, reinforcing patient autonomy and agency. Moreover, integrating AI into healthcare demands attention to patient dignity and respect. The authors of [25] explore how perceptions of AI in healthcare are tied to how these systems uphold human dignity, highlighting the need for AI applications to respect patient values. This aligns with broader ethical considerations in healthcare AI, particularly concerning patient autonomy, data privacy, and algorithmic bias. In the broader context of AI decision-making, Ref. [26] points out the risk of AI oversimplifying patient narratives, emphasizing the need for AI systems to augment personalized care while preserving the human elements essential to good healthcare. The authors of [27] emphasize patient engagement, advocating for informed and active involvement in AI-related healthcare decisions. Addressing algorithmic discrimination, enhancing transparency, and fostering trust in AI applications are crucial for ethical and patient-centered healthcare. By adopting frameworks that emphasize fairness, transparency, and explanation, alongside a focus on human dignity and patient engagement, AI in healthcare can achieve more equitable and trustworthy outcomes, aligning technological innovation with ethical considerations.

Integrating the AI-driven Internet of Things (AIIoT) in healthcare presents unique challenges and opportunities for ensuring algorithmic fairness, transparency, and trust. Mitigating bias in AI algorithms is paramount, especially considering the diverse nature of patient populations and the varied nature of diseases and conditions. The research by [21] highlights the rapid adoption of AIIoT in healthcare, significantly enhancing the quality and effectiveness of healthcare services. This adoption is particularly valuable for chronic conditions, elderly care, and continuous monitoring. Incorporating diverse data sets into AI model training minimizes biases and promotes equitable health outcomes. The use of AIIoT, including remote monitoring and communication, significantly enhances patient treatments. Mobile medical applications and wearable devices enable patients to capture personal health data, empowering them to actively manage their health. This approach aligns with the need for diverse data sets, capturing a wide range of patient data and leading to more personalized and effective healthcare solutions.

The importance of reducing medical errors through AI-driven decision-support systems cannot be overstated. AIIoT has evolved to use complex algorithms such as neural networks, decision trees, and support vector machines, which are instrumental in making health-related decisions more effectively. These technologies, however, must be developed with fairness in mind, ensuring they do not perpetuate existing biases or create new ones. Security and privacy are critical concerns in the AIIoT framework. Well-defined architecture standards, including interfaces, data models, and relevant protocols, are needed to support a variety of devices and languages. Identity management within the Internet of Things is crucial to addressing both the security and privacy risks associated with AIIoT. This involves exchanging identifying information between devices and employing cryptography and other techniques to prevent identity theft. Ensuring algorithmic fairness, transparency, and trust in AI-driven healthcare necessitates a focus on diverse data sets and fairness tools. This approach contributes to equitable patient treatment and outcomes by enhancing personalized care and reducing medical errors. Additionally, addressing security and privacy challenges in AIIoT is essential for successfully implementing and scaling up these technologies in healthcare. Implementing a proper governance framework across various aspects of AIIoT architecture and standardization is crucial for realizing the full potential of AI in transforming healthcare [22].
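One way the cryptographic side of device identity management might look in practice is a challenge-response exchange based on a keyed hash (HMAC). The sketch below is illustrative only: the registry of per-device secret keys, the device identifier, and the function names are hypothetical, and a production AIIoT deployment would also need secure key provisioning, key rotation, and transport encryption, none of which are shown.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Server side: generate a fresh random challenge for each attempt."""
    return secrets.token_bytes(32)


def device_response(device_key: bytes, device_id: str, challenge: bytes) -> str:
    """Device side: prove possession of the key without transmitting it."""
    message = device_id.encode("utf-8") + b"|" + challenge
    return hmac.new(device_key, message, hashlib.sha256).hexdigest()


def verify_device(registry: dict, device_id: str, challenge: bytes, response: str) -> bool:
    """Server side: recompute the expected response and compare safely."""
    key = registry.get(device_id)
    if key is None:
        return False  # Unknown devices are rejected outright.
    expected = device_response(key, device_id, challenge)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    # Hypothetical registry mapping device identifiers to shared secrets.
    registry = {"wearable-01": secrets.token_bytes(32)}
    challenge = new_challenge()
    response = device_response(registry["wearable-01"], "wearable-01", challenge)
    print(verify_device(registry, "wearable-01", challenge, response))
```

Because each challenge is random and used once, a response captured by an eavesdropper cannot be replayed later to impersonate the device.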

The control and sharing of healthcare data have been central to discussions about AI in healthcare. The authors of [8] emphasize that the flow of data towards AI is crucial and argue against approaches that restrict this flow. Such restrictions could hinder the development of future AI applications and, in turn, diminish the demand for data to train them. They suggest that existing experience in monetizing digitized content, such as music, offers a practical way to incentivize patients to share their data without restricting data flows. Because the AI market is dominated by a few mega-firms, policies are needed that allow individuals to control their data and represent their interests collectively. The authors review initiatives such as the Health Data Hub, SOLID, and DECODE, which aim to control data flow and induce artificial scarcity. However, this strategy may not suit AI, which is ‘data-hungry’. A smaller supply of data leads to fewer AI applications, which in turn limits the demand for data to train and furnish these applications [8].

AI’s reliance on data underscores the need for organized markets to facilitate data flow toward AI. However, transaction costs associated with data trade often exceed the value of the exchange itself, making the negotiation and licensing of data unprofitable. Thus, agents must bargain for some indemnification directly with AI firms. The authors of [8] suggest shifting attention towards methods that allow for unrestricted data flows, which is vital for ensuring algorithmic fairness, transparency, and trust. They introduce the concept of Collective Data Management (CDM) as a means to enable firms to accumulate, process, utilize, and benefit from data while jointly overseeing data usage and negotiating some form of ex-post compensation. Drawing from the experiences of Collective Rights Management (CRM), this approach seeks to establish transparency and accountability in data governance, thereby nurturing public trust and confidence [8].

The integration of CDM into the legal framework is crucial for its success. The technical infrastructure of CDM must respect data governance processes designed to achieve transparency, integrity, security, and accountability. This is vital for acknowledging the social relationship created by using healthcare data, which entails responsibility and awareness of how data use aligns with societal values. Such governance is essential in fostering a trust-based relationship between AI applications and healthcare users, ensuring AI’s role remains informative and supportive. Adopting frameworks emphasizing fairness, transparency, and explanation in AI-informed healthcare decision-making is paramount. Such frameworks must be grounded in existing legal practices and adapted to new challenges in data management, ensuring that technological innovation aligns with ethical considerations for equitable and trustworthy outcomes in healthcare. The concept of CDM, as proposed by [8], offers a pathway to balance the need for data to fuel AI development with the rights of individuals to control and benefit from their data. This balance is critical to maintaining the integrity of healthcare data and the trust of those it serves. Furthermore, the integration of AIIoT in healthcare, as highlighted by [21], underscores the importance of a robust governance framework that addresses algorithmic fairness and ensures patient data security and privacy. Developing fair, transparent, and trustworthy AI applications requires a concerted effort to standardize AIIoT architecture, promote diverse datasets, and implement fairness tools that can detect and mitigate biases. The future of AI-driven healthcare hinges on the ability to create systems that are not only technologically advanced but also ethically sound and socially responsible. 
By fostering algorithmic fairness, ensuring transparency, and building trust through explainability and data governance, AI can significantly enhance healthcare delivery while respecting the dignity and autonomy of patients. Through these efforts, AI can become a supportive and informative ally in pursuing better health outcomes for all.

3.6. Legal and Regulatory Considerations

Integrating Artificial Intelligence (AI) in healthcare, while offering transformative potential, necessitates a rigorous alignment with ethical standards and medical ethics principles to ensure patient welfare and equitable access. The legal and ethical challenges, particularly concerning data privacy and patient consent, are highlighted by the authors of [28], who stress the importance of robust legal frameworks that are adaptable and responsive to the evolving nature of AI applications. These frameworks must harmonize with regulations such as the General Data Protection Regulation (GDPR) to ensure ethical AI usage in healthcare. The incorporation of blockchain technology in AI-driven healthcare, as explored by [29], adds another layer of complexity to these challenges. Blockchain offers opportunities for enhancing data security and patient privacy but also poses challenges in aligning with GDPR requirements. Specialized regulatory guidance tailored to blockchain applications in healthcare is necessary to address blockchain’s technical intricacies and ensure ethical and legal compliance. Furthermore, private entities’ control of health data in the commercial AI sector, as exemplified by the DeepMind-Royal Free London NHS Foundation Trust case, underscores the need for dynamic and adaptable legal and regulatory frameworks. These frameworks must safeguard patient interests and ensure responsible AI utilization in healthcare [1]. The intersection of blockchain technology with GDPR in AI-driven healthcare requires a comprehensive approach encompassing technological adaptability, ethical considerations, and the development of responsive legal frameworks.

Integrating Artificial Intelligence (AI) in healthcare necessitates a multifaceted approach to uphold ethical standards and protect patient welfare. To navigate the complexities of privacy and data security, it is imperative to implement rigorous data protection measures, ensuring AI technologies conform to the highest standards through advanced data anonymization and encryption techniques. Private entities’ governance of patient data access, usage, and control demands clear guidelines and oversight, addressing the inherent risks of AI, such as errors, biases, and the opacity of algorithmic decision-making processes. To counter the risks of privacy breaches and the potential reidentification of individuals from anonymized datasets, healthcare systems must fortify their defenses with sophisticated computational strategies and ongoing risk assessments [1,28].
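An ongoing risk assessment for reidentification can start from a simple measure such as k-anonymity: a dataset is k-anonymous with respect to a set of quasi-identifiers if every combination of their values is shared by at least k records. The sketch below is a minimal illustration under stated assumptions. The field names (`age_band`, `zip3`, `sex`) and the choice of quasi-identifiers are hypothetical, and k-anonymity is only one of several possible indicators; it does not by itself guarantee protection against all reidentification strategies.

```python
from collections import Counter


def equivalence_class_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    keys = [tuple(record[q] for q in quasi_identifiers) for record in records]
    return Counter(keys)


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest equivalence class (0 for an empty dataset)."""
    sizes = equivalence_class_sizes(records, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def risky_records(records, quasi_identifiers, k):
    """Return the records whose equivalence class has fewer than k members."""
    sizes = equivalence_class_sizes(records, quasi_identifiers)
    return [
        record
        for record in records
        if sizes[tuple(record[q] for q in quasi_identifiers)] < k
    ]


if __name__ == "__main__":
    # Hypothetical de-identified records with generalized attributes.
    records = [
        {"age_band": "30-39", "zip3": "752", "sex": "F", "diagnosis": "asthma"},
        {"age_band": "30-39", "zip3": "752", "sex": "F", "diagnosis": "diabetes"},
        {"age_band": "40-49", "zip3": "752", "sex": "M", "diagnosis": "asthma"},
    ]
    qi = ["age_band", "zip3", "sex"]
    print("k =", k_anonymity(records, qi))
    print("records below k=2:", risky_records(records, qi, 2))
```

Records flagged by such a check could be generalized further or suppressed before release, and the check can be rerun whenever new data or new linkable attributes are added.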

Addressing the legal and regulatory challenges involves drafting comprehensive contracts that clearly define the rights and obligations of all stakeholders in AI healthcare initiatives, with regulations mandating that patient data remain within its original jurisdiction except under specific conditions. The exploration of generative models to create synthetic patient data emerges as a promising solution to privacy concerns, enabling the advancement of machine learning without relying on actual patient data. A strong emphasis on patient agency and informed consent is also critical, ensuring transparent communication regarding data withdrawal rights and the necessity for repeated consent for new data applications. As AI technology rapidly evolves, regulatory innovation is essential to keep pace. This includes developing new data protection methods and a regulatory framework that mandates private data custodians to utilize cutting-edge and secure data privacy practices. By embedding these measures within the legal and regulatory frameworks that govern AI in healthcare, we can maintain patient and public trust, respect patient dignity and autonomy, and adhere to medical ethics principles. Such an environment is conducive to the responsible development and application of AI, enabling healthcare systems to harness AI’s potential to improve patient outcomes, increase care efficiency, and provide equitable access to state-of-the-art medical technology [1,28,29].

3.7. Balancing Innovation with Ethical Standards

Integrating Artificial Intelligence (AI) into the rapidly evolving healthcare field necessitates a critical balance between technological innovation and adherence to ethical standards. This equilibrium is essential as the healthcare sector seeks to leverage AI’s potential to enhance patient outcomes significantly. The ethical integration of AI in healthcare demands a commitment to the core principles of medical ethics: beneficence, non-maleficence, autonomy, and justice. These principles dictate that AI applications must prioritize patient welfare and avoid harm, support patient decision-making, and provide comprehensive, unbiased information while respecting the patient’s right to informed choice. Justice in AI deployment requires equitable access to healthcare and the prevention of biases that might lead to treatment disparities. Moreover, as AI becomes more prevalent in healthcare, it is vital to mitigate the risk of dehumanizing patient care. AI should enhance, not replace, the human elements of empathy and understanding in patient care. Active patient involvement in AI-related healthcare decisions fosters a sense of agency and empowerment, which supports patient satisfaction and trust in the healthcare system. Concurrently, evolving legal and regulatory frameworks governing AI’s use in healthcare must address unique challenges such as data privacy, patient consent, and liability issues, ensuring the safe and compliant deployment of AI technologies in healthcare [1,2,27]. The healthcare sector’s journey toward integrating AI must be marked by a harmonious blend of innovation and ethical responsibility, ensuring that technological advancements in patient care align with patient dignity, rights, and well-being.

4. Advancing Data Integrity and Security in Healthcare AI: A Focused Approach to Blockchain and Advanced Data Validation Algorithms

4.1. In-Depth Analysis of Data Integrity in AI Healthcare Applications

The importance of maintaining data integrity and security in AI healthcare applications cannot be overstated, because it directly impacts patient safety and the effectiveness of healthcare delivery. The authors of [5] underscore the crucial nature of AI’s impact on healthcare. Additionally, the authors of [7,8] emphasize the essential role of data integrity in ensuring reliable healthcare outcomes. They advocate for rigorous verification and validation mechanisms, which gain particular significance in precision-critical fields like ophthalmology, as noted in [30]. The importance of robust data management practices is further stressed by [31]. These practices include continuous monitoring for anomalies to uphold the integrity of health data. Robust data management is also closely tied to the challenges of patient data access and control. These challenges, especially when data are privately held, underscore the need for robust regulatory frameworks and systemic oversight. Data integrity and privacy are interdependent, and effective data management helps address access and control challenges. Moreover, the risks of reidentification in anonymized data require enhanced privacy and security measures to protect patient data against computational strategies capable of reidentifying individuals. This concern leads to the broader implications for patient trust and the ethical application of AI in healthcare, as argued by the authors of [1,9,10].
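To make the idea of a verification mechanism for health data concrete, the following sketch shows a hash-chained record log, the basic tamper-evidence technique that blockchain systems build upon. Each entry stores the SHA-256 hash of its own content combined with the hash of the previous entry, so altering any earlier record invalidates every later link. This is a simplified illustration under stated assumptions: the record fields and function names are hypothetical, and the sketch omits the consensus, distribution, and access-control layers that an actual blockchain deployment would require.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # Placeholder "previous hash" for the first entry.


def entry_hash(payload: dict, prev_hash: str) -> str:
    """Hash the previous link together with a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(chain: list, payload: dict) -> None:
    """Append a record, linking it to the hash of the entry before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append(
        {
            "payload": payload,
            "prev_hash": prev_hash,
            "hash": entry_hash(payload, prev_hash),
        }
    )


def first_invalid_index(chain: list):
    """Return the index of the first entry that fails verification, or None."""
    prev_hash = GENESIS_HASH
    for index, entry in enumerate(chain):
        link_ok = entry["prev_hash"] == prev_hash
        content_ok = entry["hash"] == entry_hash(entry["payload"], prev_hash)
        if not (link_ok and content_ok):
            return index
        prev_hash = entry["hash"]
    return None


if __name__ == "__main__":
    # Hypothetical measurement records.
    chain = []
    append_entry(chain, {"patient": "p-001", "measure": "iop_mmHg", "value": 15})
    append_entry(chain, {"patient": "p-001", "measure": "iop_mmHg", "value": 17})
    print(first_invalid_index(chain))   # Intact chain.
    chain[0]["payload"]["value"] = 21   # Simulated tampering.
    print(first_invalid_index(chain))   # Tampering is detected.
```

Periodic verification of such a log is one simple form of the continuous anomaly monitoring described above, since any silent modification of stored records surfaces as a broken link.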