Advancements in the Intelligent Detection of Driver Fatigue and Distraction: A Comprehensive Review

Abstract

1. Introduction

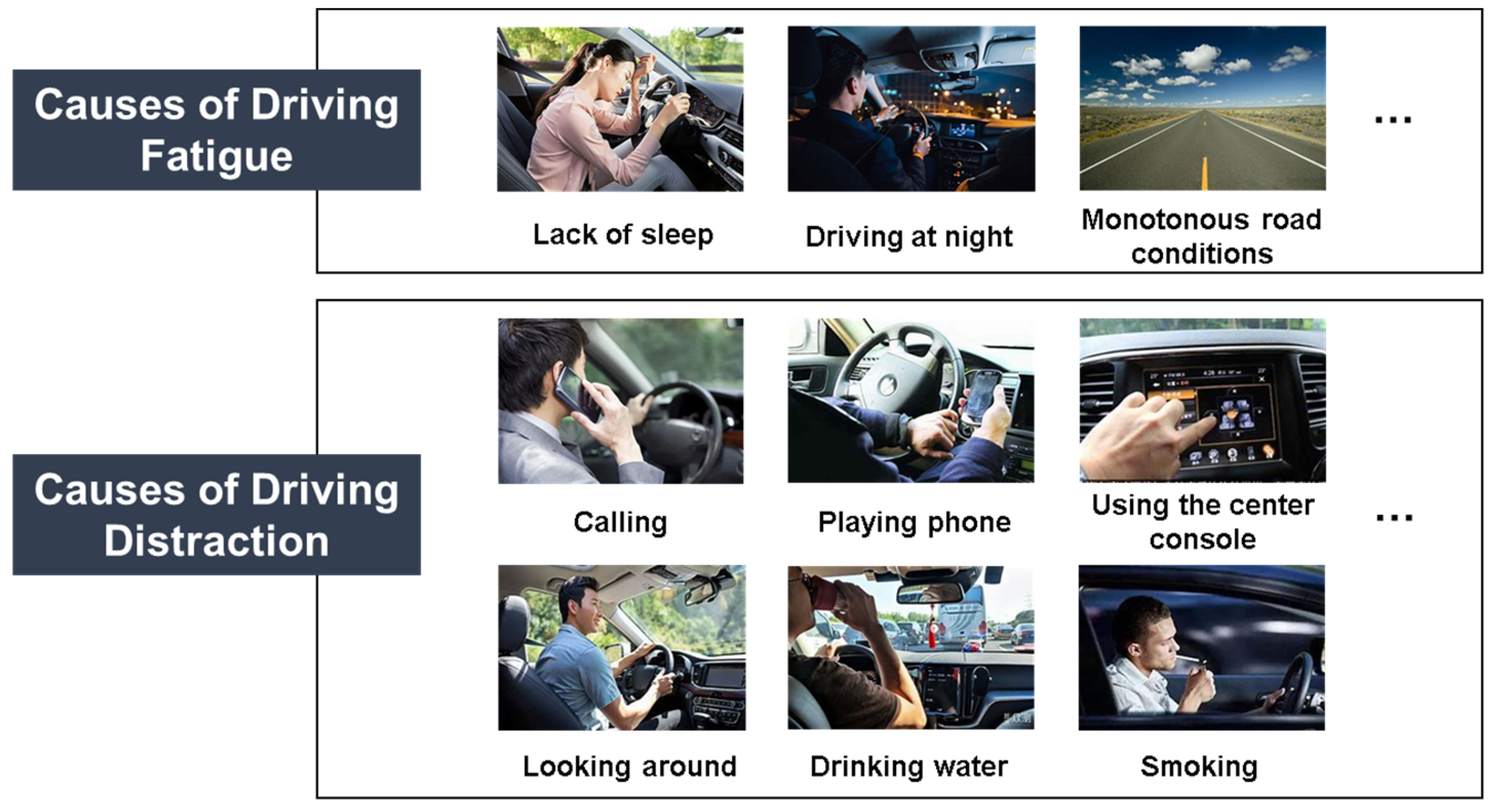

2. The Impact of Fatigue and Distraction on Driving Behavior

3. Intelligent Detection Methods for Driver Fatigue and Distraction

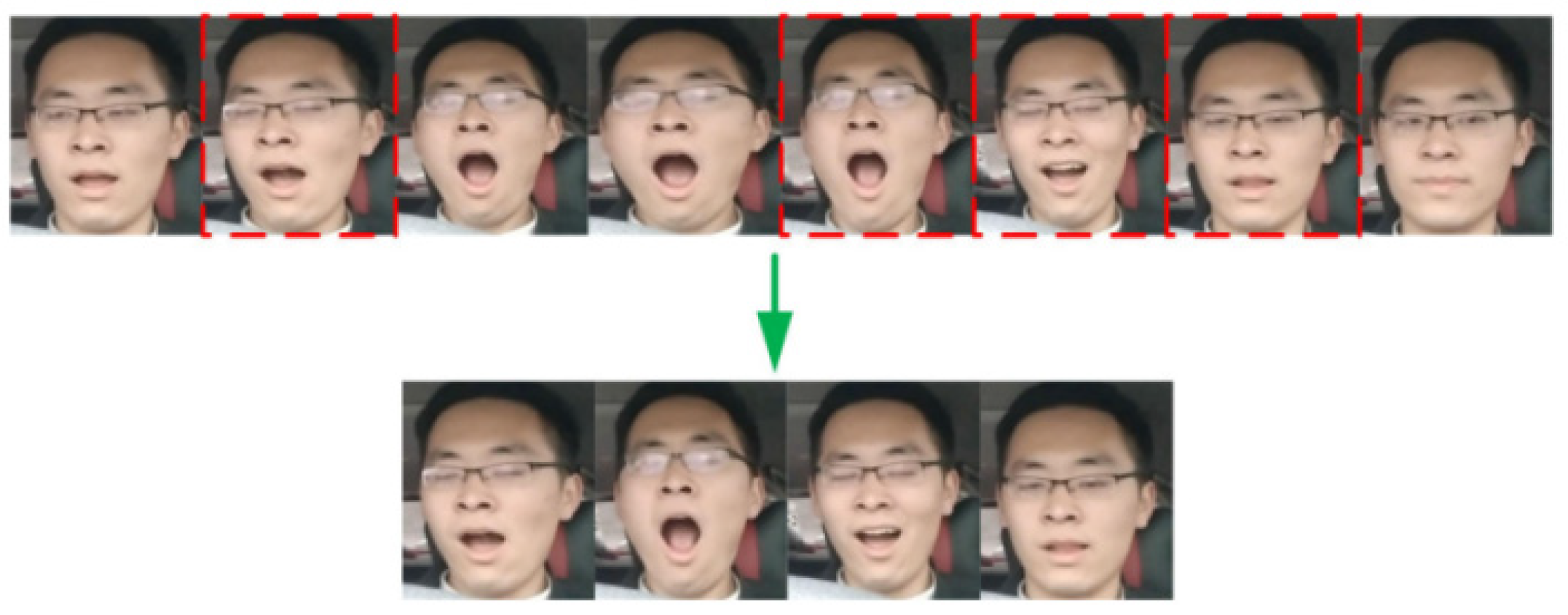

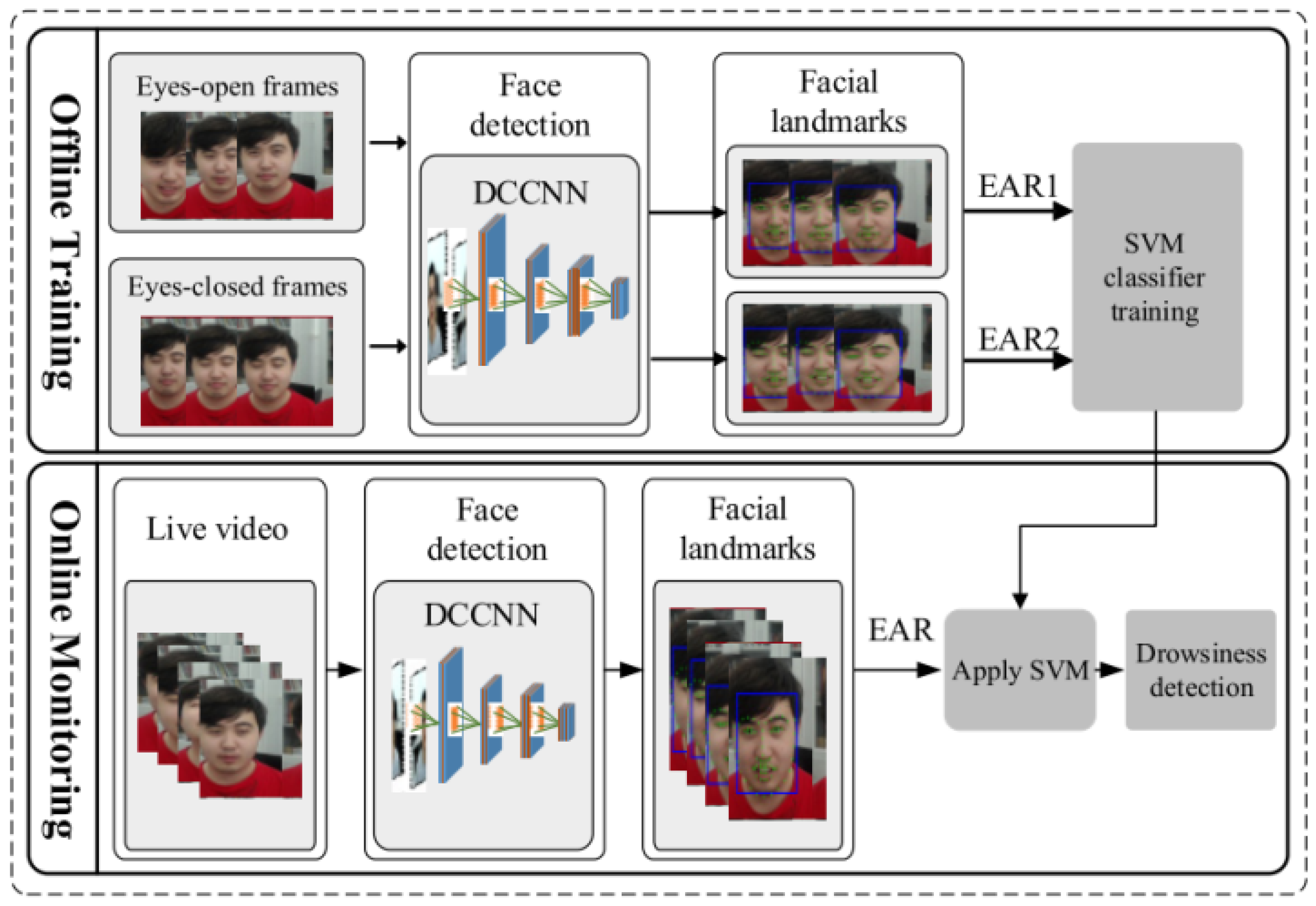

3.1. Intelligent Detection Methods Based on Driver’s Facial Features

- In practice, the precision of facial feature extraction is easily affected by the environment. Facial appearance can vary significantly with lighting conditions, viewing angle, and facial expression, which makes it difficult to extract facial features stably and accurately. Occlusion of the face, eyeglasses, and makeup can further degrade extraction.

- Most facial-feature-based detection methods judge fatigue or distraction from parameters such as the eye aspect ratio (EAR) and the mouth aspect ratio (MAR). However, the thresholds applied to these parameters are often set from subjective experience and lack objectivity and unified standards.

- Detection methods based on facial features are strongly affected by individual differences between drivers. In everyday life, people display facial states resembling fatigue or distraction, such as yawning, blinking, or looking down, without necessarily being fatigued or distracted. Judging a driver’s state from facial features alone therefore risks both misjudgments and missed detections.
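
To make the threshold problem concrete, the following is a minimal sketch, not the method of any cited study, that computes the EAR with the widely used six-landmark formulation EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖) and replaces a fixed threshold with a per-driver one derived from a short open-eye calibration. The landmark ordering, the helper names (`aspect_ratio`, `calibrate_threshold`, `count_closures`), the 0.75 calibration ratio, and the two-frame minimum closure length are all illustrative assumptions. MAR is computed here by applying the same formula to six mouth landmarks, which is only one of several MAR variants in use.

```python
import math
from statistics import mean

Point = tuple[float, float]


def aspect_ratio(pts: list[Point]) -> float:
    """Six-landmark aspect ratio: (|p2-p6| + |p3-p5|) / (2 * |p1-p4|).

    Assumed ordering: p1 and p4 are the horizontal corners, p2/p3 lie on the
    upper contour, and p6/p5 lie directly below them on the lower contour.
    """
    if len(pts) != 6:
        raise ValueError("expected exactly six landmarks")
    p1, p2, p3, p4, p5, p6 = pts
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def eye_aspect_ratio(eye: list[Point]) -> float:
    return aspect_ratio(eye)


def mouth_aspect_ratio(mouth: list[Point]) -> float:
    # Assumption: same formula on six inner-lip landmarks (one MAR variant of several).
    return aspect_ratio(mouth)


def calibrate_threshold(open_eye_ears: list[float], ratio: float = 0.75) -> float:
    """Hypothetical per-driver threshold: a fixed fraction of this driver's
    mean EAR recorded while the eyes are known to be open."""
    return ratio * mean(open_eye_ears)


def count_closures(ears: list[float], threshold: float, min_frames: int = 2) -> int:
    """Count episodes in which EAR stays below `threshold` for at least
    `min_frames` consecutive frames (single-frame dips are treated as noise)."""
    episodes, run = 0, 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            episodes += 1
        run = 0
    if run >= min_frames:  # sequence ended while the eye was still closed
        episodes += 1
    return episodes


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))
    th = calibrate_threshold([0.30, 0.32, 0.28])
    print(count_closures([0.30, 0.29, 0.12, 0.10, 0.31, 0.15, 0.30], th))
```

Calibrating against each driver’s own open-eye baseline is one simple way to reduce the dependence on a single, experience-based threshold, although it still does not separate fatigue-related closures from ordinary blinking or looking down.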

3.2. Intelligent Detection Methods Based on Driver’s Head Posture

3.3. Intelligent Detection Methods Based on Driver’s Behavioral Actions

3.4. Intelligent Detection Methods Based on Driver’s Physiological Characteristics

3.5. Intelligent Detection Methods Based on Vehicle Travel Data

3.6. Intelligent Detection Methods Based on Multimodal Fusion Feature

4. Safety Warning and Response Strategies Based on Fatigue and Distraction Detection

4.1. Warning Prompts Based on the Driving Cockpit

4.2. Safety Response Based on Advanced Driver Assistance Systems

4.3. Multi-Level Response Mechanism Combining Autonomous Driving Technology

5. Conclusions and Outlook

- Detection methods based on image information, especially those relying on machine learning and deep learning, have significantly improved the accuracy of facial feature recognition, particularly in face detection and in the analysis of eye and mouth movements. However, these methods are highly sensitive to environmental conditions such as lighting and occlusion of the driver’s head, and they often neglect individual differences among drivers, which somewhat limits their potential for widespread application.

- Detection methods based on the physiological characteristics of drivers analyze signals such as electroencephalograms (EEG), electrocardiograms (ECG), and heart rate (HR), which provide more direct indicators of the driver’s attention level and fatigue state. These methods avoid external environmental interference to a certain extent and provide relatively stable detection results. Nonetheless, physiological signal detection often requires intrusive, contact-based sensors, which may cause discomfort to the driver and limit applicability in actual driving environments.

- Intelligent detection methods based on vehicle driving data assess the driver’s level of distraction indirectly by analyzing the correlation between driving behavior and the vehicle’s operating status. These methods are easy to implement and cost-effective, but their detection accuracy is affected by the complexity of the driving environment, and vehicle data cannot directly reflect changes in the driver’s physiological and psychological state.

- To improve the robustness, stability, and overall performance of detection systems, multimodal feature fusion methods have emerged that integrate features from image information, physiological signals, and vehicle data, making detection more comprehensive and accurate. Although this approach exploits the complementary strengths of different data sources, it also brings higher implementation costs, greater technical complexity, and heavier computational demands.

- In terms of safety warnings, current systems rely primarily on in-cabin human–machine interaction designs that warn drivers through visual, auditory, and tactile means. These solutions can enhance driver alertness to some extent and reduce accidents caused by fatigue or distraction. However, their effectiveness is often limited by the driver’s subjective acceptance and real-time response capability. Safety response measures, such as intervention by Advanced Driver Assistance Systems (ADAS) and the application of autonomous driving technology, can also reduce risk to some extent, but their ability to handle complex traffic environments, and the collaboration between drivers and systems, require further research.

- Future research should focus on the adaptability of algorithms to complex environments, such as stability under different lighting conditions and changes in the driver’s posture, and on developing lightweight, efficient neural network models that process data quickly while keeping detection accuracy high.

- Non-intrusive sensors and related algorithms could be advanced by collecting physiological signals through non-contact or minimally intrusive methods, reducing driver discomfort and broadening application scenarios.

- The application of machine learning and artificial intelligence to analyzing the relationship between driving behavior and vehicle performance could be strengthened, using detailed data collection and analysis to predict driver states more precisely.

- Data fusion technologies and model integration strategies could be optimized by exploring effective feature fusion algorithms that better exploit the complementarity of different data sources and improve the accuracy of the combined analysis.

- Future safety warning schemes should pay more attention to personalization and intelligence, providing customized warning signals based on the driver’s behavior patterns and physiological state to enhance the effectiveness of warnings.

- Research should address mechanisms for seamless switching between advanced driver assistance systems and autonomous driving technology to improve the safety and flexibility of the system, and should explore fusing data from in-vehicle and external environment perception systems to provide comprehensive decision support for ADAS and autonomous driving technology.

- An integrated framework that categorizes the deep-learning-based intelligent detection methods developed over the past five years and proposes a comprehensive safety warning and response scheme, with warning and response mechanisms tailored to different levels of vehicle automation.

- A critical evaluation of the limitations inherent in the current methodologies and the potential for leveraging emerging technologies such as AI and machine learning to address these challenges.

- The identification of areas that could significantly benefit from further research, including non-intrusive sensing techniques, the integration of multimodal data, and the development of adaptive, personalized detection systems.
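
Decision-level multimodal fusion feeding a graded warning and response scheme can be sketched as follows. Each modality (for example a facial-feature model, a physiological-signal model, and a vehicle-data model) is assumed to output a fatigue/distraction probability together with a reliability weight, which could, for instance, be lowered for the camera channel at night. The class and function names, the weights, the three response thresholds, and the response labels are illustrative assumptions rather than values from the reviewed studies, and reliability-weighted averaging is only one of many fusion strategies; feature-level fusion and learned fusion layers are common alternatives.

```python
from dataclasses import dataclass


@dataclass
class ModalityOutput:
    name: str           # e.g. "face", "physiological", "vehicle"
    risk: float         # estimated probability of fatigue/distraction, 0..1
    reliability: float  # weight in 0..1, e.g. reduced for the camera in low light


def fuse(outputs: list[ModalityOutput]) -> float:
    """Reliability-weighted average of per-modality risks (late fusion)."""
    total = sum(o.reliability for o in outputs)
    if total <= 0:
        raise ValueError("no reliable modality available")
    return sum(o.risk * o.reliability for o in outputs) / total


# Hypothetical escalation ladder, checked from most to least severe.
RESPONSES = [
    (0.8, "request ADAS intervention"),
    (0.6, "auditory and haptic warning"),
    (0.4, "visual prompt in the cockpit"),
]


def select_response(risk: float) -> str:
    """Return the most severe response whose threshold the fused risk reaches."""
    for threshold, action in RESPONSES:
        if risk >= threshold:
            return action
    return "no action"


if __name__ == "__main__":
    readings = [
        ModalityOutput("face", risk=0.9, reliability=0.5),  # camera degraded at night
        ModalityOutput("physiological", risk=0.7, reliability=1.0),
        ModalityOutput("vehicle", risk=0.4, reliability=0.5),
    ]
    fused = fuse(readings)
    print(f"{fused:.3f}", select_response(fused))
```

Keeping fusion and response selection as separate steps means the escalation ladder can be swapped per automation level (for example, a different top-tier action in a vehicle capable of automated driving) without retraining the detection models.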

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- National Bureau of Statistics of China. Statistical Communique of the People’s Republic of China on the 2022 National Economic and Social Development. Available online: https://www.stats.gov.cn/english/PressRelease/202302/t20230227_1918979.html (accessed on 1 March 2024).

- Mohammed, A.A.; Ambak, K.; Mosa, A.M.; Syamsunur, D. A review of traffic accidents and related practices worldwide. Open Transp. J. 2019, 13, 65–83.

- Sharma, B.R. Road traffic injuries: A major global public health crisis. Public Health 2008, 122, 1399–1406.

- Zhang, Y.; Jing, L.; Sun, C.; Fang, J.; Feng, Y. Human factors related to major road traffic accidents in China. Traffic Inj. Prev. 2019, 20, 796–800.

- Ahmed, S.K.; Mohammed, M.G.; Abdulqadir, S.O.; El-Kader, R.G.A.; El-Shall, N.A.; Chandran, D.; Rehman, M.E.U.; Dhama, K. Road traffic accidental injuries and deaths: A neglected global health issue. Health Sci. Rep. 2023, 6, e1240.

- Jie, Q.; Xin, X.; Chuanpan, L.; Junwei, Z.; Yongtao, L. A review of the influencing factors and intervention methods of drivers’ hazard perception ability. China Saf. Sci. J. 2022, 32, 34–41.

- Khan, M.Q.; Lee, S. A comprehensive survey of driving monitoring and assistance systems. Sensors 2019, 19, 2574.

- Behzadi Goodari, M.; Sharifi, H.; Dehesh, P.; Mosleh-Shirazi, M.A.; Dehesh, T. Factors affecting the number of road traffic accidents in Kerman province, southeastern Iran (2015–2021). Sci. Rep. 2023, 13, 6662.

- Mesken, J.; Hagenzieker, M.P.; Rothengatter, T.; De Waard, D. Frequency, determinants, and consequences of different drivers’ emotions: An on-the-road study using self-reports, (observed) behaviour, and physiology. Transp. Res. Part F Traffic Psychol. Behav. 2007, 10, 458–475.

- Hassib, M.; Braun, M.; Pfleging, B.; Alt, F. Detecting and influencing driver emotions using psycho-physiological sensors and ambient light. In Proceedings of the IFIP Conference on Human-Computer Interaction, Paphos, Cyprus, 2–6 September 2019; Springer: Cham, Switzerland, 2019; pp. 721–742.

- Dong, W.; Shu, Z.; Yutong, L. Research hotspots and evolution analysis of domestic traffic psychology. Adv. Psychol. 2019, 9, 13.

- Villán, A.F. Facial attributes recognition using computer vision to detect drowsiness and distraction in drivers. ELCVIA Electron. Lett. Comput. Vis. Image Anal. 2017, 16, 25–28.

- Moslemi, N.; Soryani, M.; Azmi, R. Computer vision-based recognition of driver distraction: A review. Concurr. Comput. Pract. Exp. 2021, 33, e6475.

- Morooka, F.E.; Junior, A.M.; Sigahi, T.F.; Pinto, J.D.S.; Rampasso, I.S.; Anholon, R. Deep learning and autonomous vehicles: Strategic themes, applications, and research agenda using SciMAT and content-centric analysis, a systematic review. Mach. Learn. Knowl. Extr. 2023, 5, 763–781.

- Dash, D.P.; Kolekar, M.; Chakraborty, C.; Khosravi, M.R. Review of machine and deep learning techniques in epileptic seizure detection using physiological signals and sentiment analysis. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23, 1–29.

- Hu, Y.; Qu, T.; Liu, J.; Shi, Z.; Zhu, B.; Cao, D.; Chen, H. Research status and prospect of human-machine collaborative control of intelligent vehicles. Acta Autom. Sin. 2019, 45, 20.

- Hatoyama, K.; Nishioka, M.; Kitajima, M.; Nakahira, K.; Sano, K. Perception of time in traffic congestion and drivers’ stress. In Proceedings of the International Conference on Transportation and Development 2019, Alexandria, VA, USA, 9–12 June 2019; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 165–174.

- Stutts, J.; Reinfurt, D.; Rodgman, E. The role of driver distraction in crashes: An analysis of 1995–1999 Crashworthiness Data System Data. Annu. Proc. Assoc. Adv. Automot. Med. 2001, 45, 287–301.

- Zhang, H.; Ni, D.; Ding, N.; Sun, Y.; Zhang, Q.; Li, X. Structural analysis of driver fatigue behavior: A systematic review. Transp. Res. Interdiscip. Perspect. 2023, 21, 100865.

- Al-Mekhlafi, A.B.A.; Isha, A.S.N.; Naji, G.M.A. The relationship between fatigue and driving performance: A review and directions for future research. J. Crit. Rev. 2020, 7, 134–141.

- Li, W.; Huang, J.; Xie, G.; Karray, F.; Li, R. A survey on vision-based driver distraction analysis. J. Syst. Archit. 2021, 121, 102319.

- Goodsell, R.; Cunningham, M.; Chevalier, A. Driver Distraction: A Review of Scientific Literature; ARRB Report Project; National Transportation Commission: Melbourne, Australia, 2019; p. 013817.

- Bioulac, S.; Micoulaud-Franchi, J.A.; Arnaud, M.; Sagaspe, P.; Moore, N.; Salvo, F.; Philip, P. Risk of motor vehicle accidents related to sleepiness at the wheel: A systematic review and meta-analysis. Sleep 2017, 40, zsx134.

- Klauer, S.G.; Guo, F.; Simons-Morton, B.G.; Ouimet, M.C.; Lee, S.E.; Dingus, T.A. Distracted driving and risk of road crashes among novice and experienced drivers. New Engl. J. Med. 2014, 370, 54–59.

- Öztürk, İ.; Merat, N.; Rowe, R.; Fotios, S. The effect of cognitive load on Detection-Response Task (DRT) performance during day- and night-time driving: A driving simulator study with young and older drivers. Transp. Res. Part F Traffic Psychol. Behav. 2023, 97, 155–169.

- Bassani, M.; Catani, L.; Hazoor, A.; Hoxha, A.; Lioi, A.; Portera, A.; Tefa, L. Do driver monitoring technologies improve the driving behaviour of distracted drivers? A simulation study to assess the impact of an auditory driver distraction warning device on driving performance. Transp. Res. Part F Traffic Psychol. Behav. 2023, 95, 239–250.

- Philip, P.; Sagaspe, P.; Taillard, J.; Valtat, C.; Moore, N.; Åkerstedt, T.; Charles, A.; Bioulac, B. Fatigue, sleepiness, and performance in simulated versus real driving conditions. Sleep 2005, 28, 1511–1516.

- Large, D.R.; Pampel, S.M.; Merriman, S.E.; Burnett, G. A validation study of a fixed-based, medium fidelity driving simulator for human–machine interfaces visual distraction testing. IET Intell. Transp. Syst. 2023, 7, 1104–1117.

- Chen, H.; Zhe, M.; Hui, Z. The influence of driver fatigue on takeover reaction time in human-machine co-driving environment. In Proceedings of the World Transport Congress, Beijing, China, 4 November 2022; China Association for Science and Technology: Beijing, China, 2022; pp. 599–604.

- Yongfeng, M.; Xin, T.; Shuyan, C. The impact of distracted driving behavior on ride-hailing vehicle control under natural driving conditions. In Proceedings of the 17th China Intelligent Transportation Annual Conference, Macau, China, 8–12 October 2022; pp. 46–47.

- Ngxande, M.; Tapamo, J.-R.; Burke, M. Driver drowsiness detection using behavioral measures and machine learning techniques: A review of state-of-art techniques. In Proceedings of the 2017 Pattern Recognition Association of South Africa and Robotics and Mechatronics (PRASA-RobMech), Bloemfontein, South Africa, 30 November–1 December 2017; pp. 156–161.

- Dinges, D.F.; Grace, R. PERCLOS: A Valid Psychophysiological Measure of Alertness as Assessed by Psychomotor Vigilance; Tech. Rep. MCRT-98-006; Federal Motor Carrier Safety Administration: Washington, DC, USA, 1998.

- Feng, Z.; Jian, C.; Jingxin, C. Driver fatigue detection based on improved Yolov3. Sci. Technol. Eng. 2022, 23, 11730–11738.

- Wang, J.; Yu, X.; Liu, Q.; Yang, Z. Research on key technologies of intelligent transportation based on image recognition and anti-fatigue driving. EURASIP J. Image Video Process. 2019, 2019, 1–13.

- Yang, H.; Liu, L.; Min, W.; Yang, X.; Xiong, X. Driver yawning detection based on subtle facial action recognition. IEEE Trans. Multimed. 2020, 23, 572–583.

- Li, K.; Gong, Y.; Ren, Z. A fatigue driving detection algorithm based on facial multi-feature fusion. IEEE Access 2020, 8, 101244–101259.

- You, F.; Li, X.; Gong, Y.; Wang, H.; Li, H. A real-time driving drowsiness detection algorithm with individual differences consideration. IEEE Access 2019, 7, 179396–179408.

- Zheng, H.; Wang, Y.; Liu, X. Adaptive Driver Face Feature Fatigue Detection Algorithm Research. Appl. Sci. 2023, 13, 5074.

- Liu, W.; Qian, J.; Yao, Z.; Jiao, X.; Pan, J. Convolutional two-stream network using multi-facial feature fusion for driver fatigue detection. Future Internet 2019, 11, 115.

- Ahmed, M.; Masood, S.; Ahmad, M.; Abd El-Latif, A.A. Intelligent driver drowsiness detection for traffic safety based on multi CNN deep model and facial subsampling. IEEE Trans. Intell. Transp. Syst. 2021, 23, 19743–19752.

- Kim, W.; Jung, W.-S.; Choi, H.K. Lightweight driver monitoring system based on multi-task mobilenets. Sensors 2019, 19, 3200.

- He, J.; Chen, J.; Liu, J.; Li, H. A lightweight architecture for driver status monitoring via convolutional neural networks. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; pp. 388–394.

- Guo, Z.; Liu, Q.; Zhang, L.; Li, Z.; Li, G. L-TLA: A Lightweight Driver Distraction Detection Method Based on Three-Level Attention Mechanisms. IEEE Trans. Reliab. 2024, 99, 1–12.

- Gupta, K.; Choubey, S.; Yogeesh, N.; William, P.; Kale, C.P. Implementation of motorist weariness detection system using a conventional object recognition technique. In Proceedings of the 2023 International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT), Bengaluru, India, 5–7 January 2023; pp. 640–646.

- Zhao, Z.; Xia, S.; Xu, X.; Zhang, L.; Yan, H.; Xu, Y.; Zhang, Z. Driver distraction detection method based on continuous head pose estimation. Comput. Intell. Neurosci. 2020, 2020, 1–10.

- Ansari, S.; Naghdy, F.; Du, H.; Pahnwar, Y.N. Driver mental fatigue detection based on head posture using new modified reLU-BiLSTM deep neural network. IEEE Trans. Intell. Transp. Syst. 2021, 23, 10957–10969.

- Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Driver activity recognition for intelligent vehicles: A deep learning approach. IEEE Trans. Veh. Technol. 2019, 68, 5379–5390.

- Ma, C.; Wang, H.; Li, J. Driver behavior recognition based on attention module and bilinear fusion network. In Proceedings of the Second International Conference on Digital Signal and Computer Communications (DSCC 2022), Changchun, China, 8–10 April 2022; pp. 381–386.

- Zhang, C.; Li, R.; Kim, W.; Yoon, D.; Patras, P. Driver behavior recognition via interwoven deep convolutional neural nets with multi-stream inputs. IEEE Access 2020, 8, 191138–191151.

- Fasanmade, A.; He, Y.; Al-Bayatti, A.H.; Morden, J.N.; Aliyu, S.O.; Alfakeeh, A.S.; Alsayed, A.O. A fuzzy-logic approach to dynamic bayesian severity level classification of driver distraction using image recognition. IEEE Access 2020, 8, 95197–95207.

- Xie, Z.; Li, L.; Xu, X. Real-time driving distraction recognition through a wrist-mounted accelerometer. Hum. Factors 2022, 64, 1412–1428.

- Wagner, B.; Taffner, F.; Karaca, S.; Karge, L. Vision based detection of driver cell phone usage and food consumption. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4257–4266.

- Shen, K.Q.; Li, X.P.; Ong, C.J.; Shao, S.Y.; Wilder-Smith, E.P. EEG-based mental fatigue measurement using multi-class support vector machines with confidence estimate. Clin. Neurophysiol. 2008, 119, 1524–1533.

- Bundele, M.M.; Banerjee, R. Detection of fatigue of vehicular driver using skin conductance and oximetry pulse: A neural network approach. In Proceedings of the 11th International Conference on Information Integration and Web-Based Applications & Services, Kuala Lumpur, Malaysia, 14–16 December 2009; pp. 739–744.

- Chaudhuri, A.; Routray, A. Driver fatigue detection through chaotic entropy analysis of cortical sources obtained from scalp EEG signals. IEEE Trans. Intell. Transp. Syst. 2019, 21, 185–198.

- Li, G.; Yan, W.; Li, S.; Qu, X.; Chu, W.; Cao, D. A temporal–spatial deep learning approach for driver distraction detection based on EEG signals. IEEE Trans. Autom. Sci. Eng. 2021, 19, 2665–2677.

- Fu, R.; Wang, H. Detection of driving fatigue by using noncontact EMG and ECG signals measurement system. Int. J. Neural Syst. 2014, 24, 1450006.

- Chen, L.L.; Zhao, Y.; Zhang, J.; Zou, J.Z. Automatic detection of alertness/drowsiness from physiological signals using wavelet-based nonlinear features and machine learning. Expert Syst. Appl. 2015, 42, 7344–7355.

- Fan, C.; Peng, Y.; Peng, S.; Zhang, H.; Wu, Y.; Kwong, S. Detection of train driver fatigue and distraction based on forehead EEG: A time-series ensemble learning method. IEEE Trans. Intell. Transp. Syst. 2021, 23, 13559–13569.

- Yang, J.; Chang, T.N.; Hou, E. Driver distraction detection for vehicular monitoring. In Proceedings of the IECON 2010–36th Annual Conference on IEEE Industrial Electronics Society, Glendale, AZ, USA, 7–10 November 2010; pp. 108–113.

- Wang, X.; Xu, R.; Zhang, S.; Zhuang, Y.; Wang, Y. Driver distraction detection based on vehicle dynamics using naturalistic driving data. Transp. Res. Part C Emerg. Technol. 2022, 136, 103561.

- Sun, Q.; Wang, C.; Guo, Y.; Yuan, W.; Fu, R. Research on a cognitive distraction recognition model for intelligent driving systems based on real vehicle experiments. Sensors 2020, 20, 4426.

- Zhenyu, W.; Yong, L.; Jianxi, L. Fatigue detection system based on multi-source information source fusion. Electron. Des. Eng. 2022, 19, 30.

- Du, Y.; Raman, C.; Black, A.W.; Morency, L.P.; Eskenazi, M. Multimodal polynomial fusion for detecting driver distraction. arXiv 2018, arXiv:1810.10565.

- Abbas, Q.; Ibrahim, M.E.; Khan, S.; Baig, A.R. Hypo-driver: A multiview driver fatigue and distraction level detection system. CMC Comput. Mater. Contin. 2022, 71, 1999–2017.

- Lu, J.; Peng, Z.; Yang, S.; Ma, Y.; Wang, R.; Pang, Z.; Feng, X.; Chen, Y.; Cao, Y. A review of sensory interactions between autonomous vehicles and drivers. J. Syst. Archit. 2023, 141, 102932.

- Wogalter, M.S.; Mayhorn, C.B.; Laughery, K.R., Sr. Warnings and hazard communications. In Handbook of Human Factors and Ergonomics; John Wiley & Sons: Hoboken, NJ, USA, 2021; pp. 644–667.

- Sun, X.; Zhang, Y. Improvement of autonomous vehicles trust through synesthetic-based multimodal interaction. IEEE Access 2021, 9, 28213–28223.

- Murali, P.K.; Kaboli, M.; Dahiya, R. Intelligent In-Vehicle Interaction Technologies. Adv. Intell. Syst. 2022, 4, 2100122.

- Zhou, X.; Zheng, J.; Zhang, W. Intelligent Connected Vehicle Information System (CVIS) for Safer and Pleasant Driving. In Human-Automation Interaction: Transportation; Springer: Cham, Switzerland, 2022; pp. 469–479.

- Rosekind, M.R.; Michael, J.P.; Dorey-Stein, Z.L.; Watson, N.F. Awake at the wheel: How auto technology innovations present ongoing sleep challenges and new safety opportunities. Sleep 2023, 47, zsad316.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469.

- Geisslinger, M.; Poszler, F.; Betz, J.; Lütge, C.; Lienkamp, M. Autonomous driving ethics: From trolley problem to ethics of risk. Philos. Technol. 2021, 34, 1033–1055.

- Petit, J.; Shladover, S.E. Potential cyberattacks on automated vehicles. IEEE Trans. Intell. Transp. Syst. 2014, 16, 546–556.

- Parkinson, S.; Ward, P.; Wilson, K.; Miller, J. Cyber threats facing autonomous and connected vehicles: Future challenges. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2898–2915.

- Petrillo, A.; Pescape, A.; Santini, S. A secure adaptive control for cooperative driving of autonomous connected vehicles in the presence of heterogeneous communication delays and cyberattacks. IEEE Trans. Cybern. 2020, 51, 1134–1149.

- Guo, J.; Li, L.; Wang, J.; Li, K. Cyber-physical system-based path tracking control of autonomous vehicles under cyber-attacks. IEEE Trans. Ind. Inform. 2022, 19, 6624–6635.

- Sheehan, B.; Murphy, F.; Mullins, M.; Ryan, C. Connected and autonomous vehicles: A cyber-risk classification framework. Transp. Res. Part A Policy Pract. 2019, 124, 523–536.

- Van Wyk, F.; Wang, Y.; Khojandi, A.; Masoud, N. Real-time sensor anomaly detection and identification in automated vehicles. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1264–1276.

- Wang, Y.; Liu, Q.; Mihankhah, E.; Lv, C.; Wang, D. Detection and isolation of sensor attacks for autonomous vehicles: Framework, algorithms, and validation. IEEE Trans. Intell. Transp. Syst. 2021, 23, 8247–8259.

- Li, T.; Shang, M.; Wang, S.; Filippelli, M.; Stern, R. Detecting stealthy cyberattacks on automated vehicles via generative adversarial networks. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 3632–3637.

| Author | Year | Methodological Innovations | Extracted Features | Machine Learning Models | Dataset | Accuracy | Improvement over Traditional Methods |
|---|---|---|---|---|---|---|---|
| Zhang et al. [33] | 2022 | Solves the problem of missed detections and misjudgments caused by occlusion. | PERCLOS value, maximum eye closure time, number of yawns | Improved YOLOv3 + Kalman filter algorithm | Self-built real-vehicle dataset | 92.50% | Solves the problem of low accuracy in recognizing facial parts with traditional methods. |
| Wang et al. [34] | 2019 | Proposes a bidirectional integral projection method for precise localization of the human eye. | Blink frequency | KNN algorithm | Self-built simulator dataset | 87.82% | |
| Yang et al. [35] | 2021 | Recognizes yawning from subtle facial movements to improve accuracy. | Subtle facial changes | 3D-LTS (3D convolution + Bi-LSTM) | YawDD dataset | 92.10% | |
| Li et al. [36] | 2020 | Builds an offline driver identity database to analyze driving status from driver-specific features. | Facial features | Improved YOLOv3-tiny + improved dlib library | DSD dataset | 95.10% | Considers driver characteristics and their variability. |
| You et al. [37] | 2019 | Analyzes changes in the binocular aspect ratio using neural network training. | Eye aspect ratio | Deep cascaded convolutional neural network | FDDB dataset + self-built simulator dataset | 94.80% | |
| Zheng et al. [38] | 2023 | Weakens environmental effects and individual differences; improves the dlib method to extract facial feature points more accurately. | 64 feature points, EAR, MAR | ShuffleNet V2K16 neural network | Self-built real-vehicle dataset | 98.80% | |
| Liu et al. [39] | 2019 | Introduces a two-stream neural network that combines static and dynamic image information for fatigue detection; uses gamma correction to improve nighttime detection accuracy. | Fused static and dynamic image information | Multi-task cascaded convolutional neural network | NTHU-DDD dataset | 97.06% | Research on high-performance deep learning models to improve detection accuracy. |
| Ahmed et al. [40] | 2022 | Proposes an ensemble deep learning model and introduces the InceptionV3 module for feature extraction from eye and mouth subsamples. | Eye and mouth images | Multi-task cascaded convolutional neural network | NTHU-DDD dataset | 97.10% | |
| Kim et al. [41] | 2019 | Reduces computational requirements and achieves end-to-end detection with improved efficiency. | Raw images | Multi-task lightweight neural network | Self-built simulator dataset | Face orientation: 96.40%; eyes closed: 77.56%; mouth open: 93.93% | Research on lightweight models to promote practical application. |
| He et al. [42] | 2019 | Builds and integrates multiple lightweight deep learning models to recognize fatigue and risky driving behaviors. | Part recognition with extended-range images | SSD-MobileNet model | 300-W + self-built validation dataset | 95.10% | |
| Guo et al. [43] | 2024 | Adaptively detects distracting behaviors absent from the training set while remaining lightweight and improving generalization. | Full depth images | Visual Transformer model | Self-built real-vehicle dataset MAS | 98.98% | |
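
Several studies in the table rely on eye-closure statistics such as the PERCLOS value and the maximum eye closure time. PERCLOS is the proportion of time within a window during which the eyes are closed, commonly judged with the P80 criterion (eyelids covering at least 80% of the pupil) [32]. The following minimal sketch assumes that a per-frame boolean eye-closed decision is already available (for example from an EAR threshold) and that the frame rate is constant; the class and function names, the window length, and the frame rate in the example are illustrative assumptions, not parameters from the cited studies.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window PERCLOS: share of the last `window_frames` frames
    in which the eyes were judged closed."""

    def __init__(self, window_frames: int):
        if window_frames <= 0:
            raise ValueError("window_frames must be positive")
        self._window = deque(maxlen=window_frames)
        self._closed = 0  # running count of closed-eye frames in the window

    def update(self, eye_closed: bool) -> float:
        # When the window is full, append() evicts the oldest frame,
        # so remove that frame's contribution from the running count first.
        if len(self._window) == self._window.maxlen and self._window[0]:
            self._closed -= 1
        self._window.append(eye_closed)
        if eye_closed:
            self._closed += 1
        return self._closed / len(self._window)


def max_closure_seconds(closed_flags: list[bool], fps: float) -> float:
    """Longest run of consecutive closed-eye frames, converted to seconds."""
    longest = run = 0
    for closed in closed_flags:
        run = run + 1 if closed else 0
        longest = max(longest, run)
    return longest / fps


if __name__ == "__main__":
    flags = [False, True, True, False, True, True, True, False, False, False]
    monitor = PerclosMonitor(window_frames=5)
    values = [monitor.update(f) for f in flags]
    print(values[-1], max_closure_seconds(flags, fps=30))
```

The running count keeps each update constant-time, which matters for the real-time, lightweight deployments several of the listed studies target; in practice the window would typically span tens of seconds of video rather than five frames.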

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fu, S.; Yang, Z.; Ma, Y.; Li, Z.; Xu, L.; Zhou, H. Advancements in the Intelligent Detection of Driver Fatigue and Distraction: A Comprehensive Review. Appl. Sci. 2024, 14, 3016. https://doi.org/10.3390/app14073016
