Long-Range Imaging LiDAR with Multiple Denoising Technologies

Abstract

1. Introduction

2. Methods

2.1. Description of the Time-Gated Long-Range Imaging LiDAR System

2.2. PMT-Based Time-Gated Measurement

2.3. Data Acquisition

2.4. Image Reconstruction

3. Results and Discussion

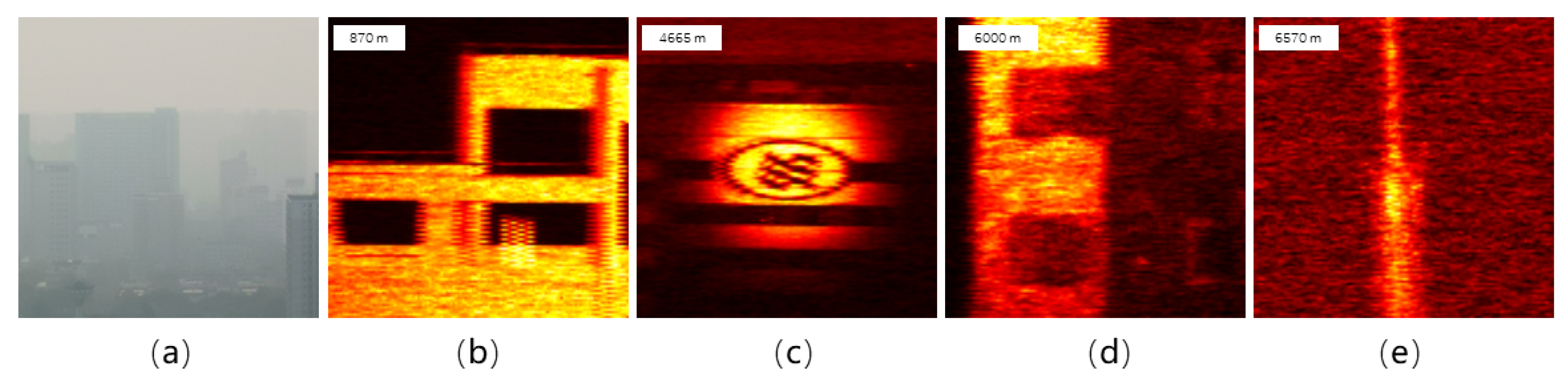

3.1. Imaging on a Hazy Day

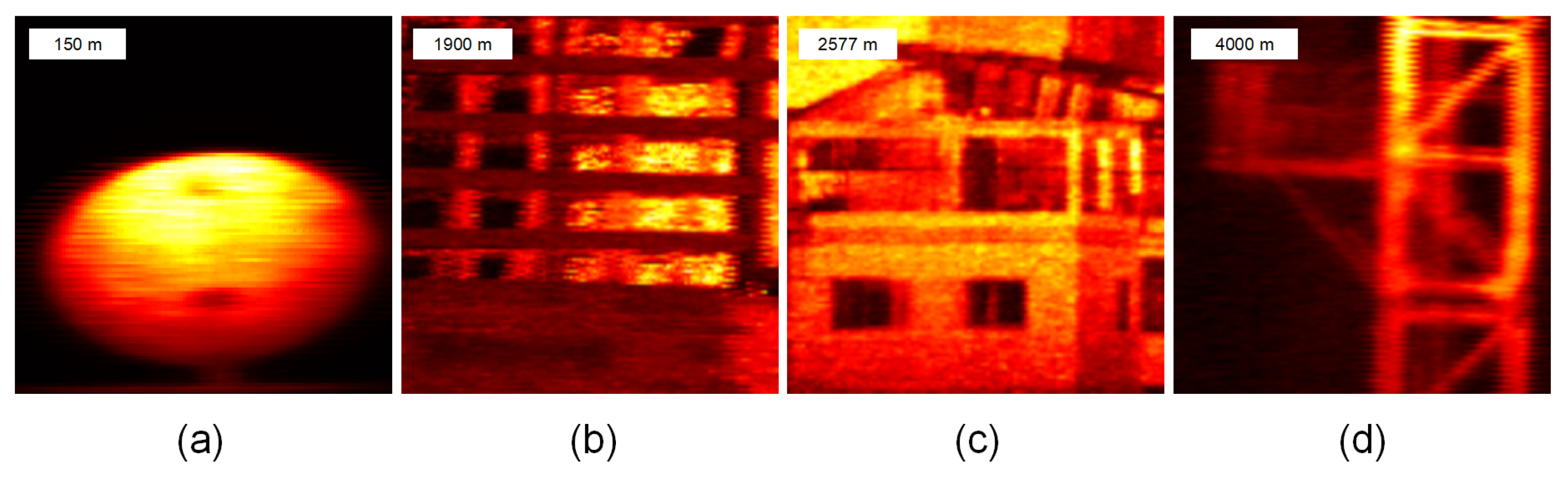

3.2. Imaging in Bright Daylight

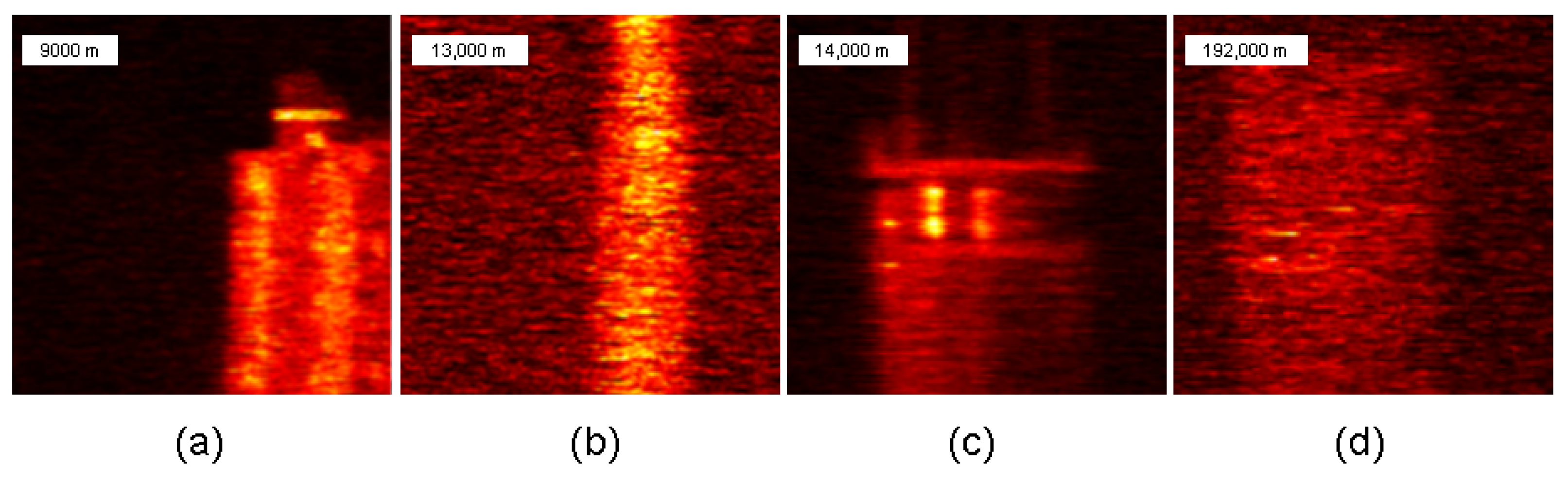

3.3. Imaging at Night

3.4. Imaging on a Rainy Day

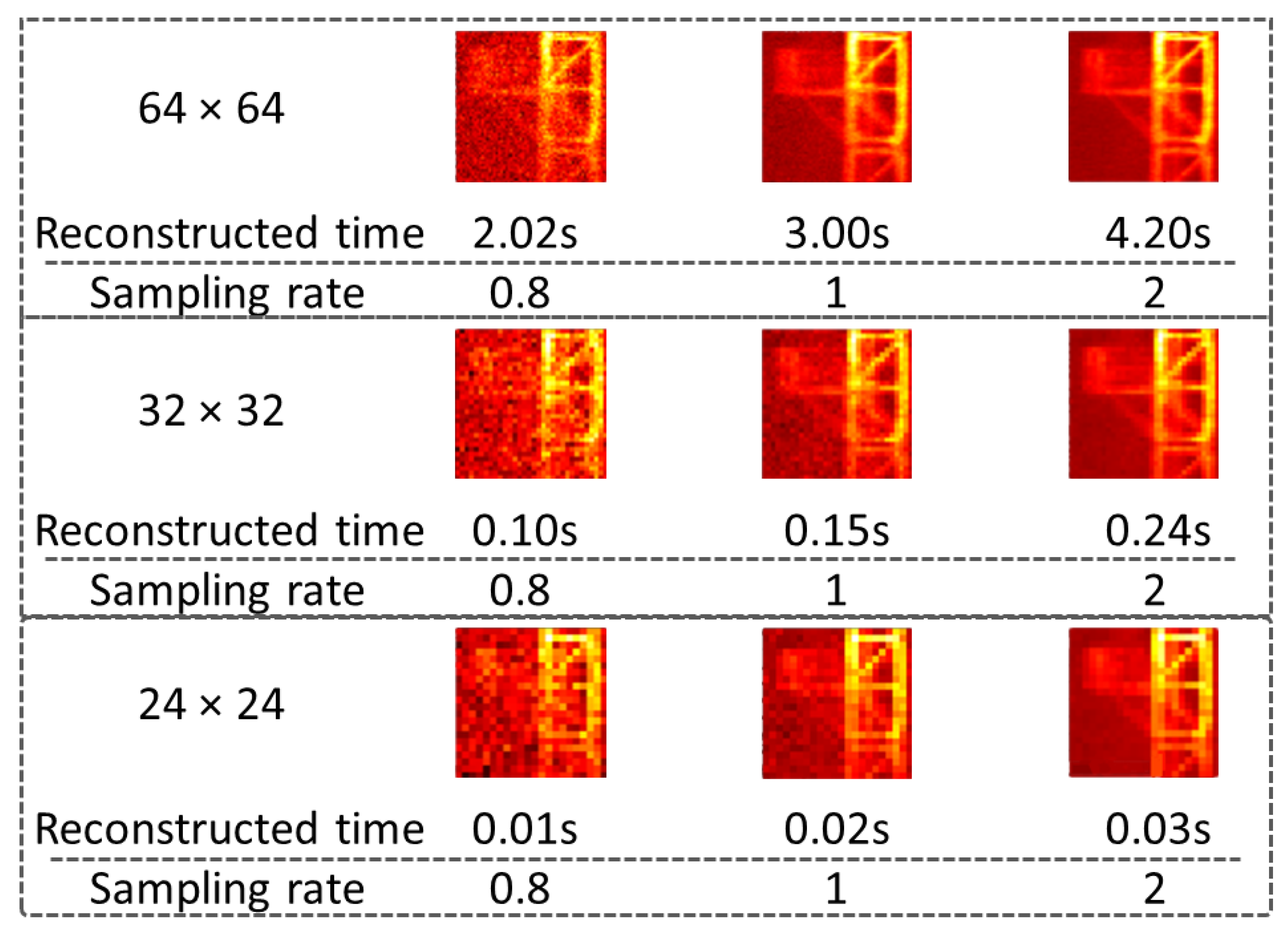

3.5. Time Consumed by Image Reconstruction

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Aperture/Focal Length | Imaging |
|---|---|
| 105 mm/600 mm |  |
| 200 mm/2000 mm |  |

| Sampling Rate | 50% | 20% | 10% | 5% |
|---|---|---|---|---|
| Sampling Pattern |  |  |  |  |
| Compressed Sensing |  |  |  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, H.; Han, Y.; Qiu, L.; Zong, Y.; Li, J.; Zhou, Y.; He, Y.; Liu, J.; Wang, G.; Chen, H.; et al. Long-Range Imaging LiDAR with Multiple Denoising Technologies. Appl. Sci. 2024, 14, 3414. https://doi.org/10.3390/app14083414
