A Hardware-Efficient Vector Quantizer Based on Self-Organizing Map for High-Speed Image Compression

Abstract

1. Introduction

2. The Basic Principle of Self-Organizing Map

3. Proposed Vector Quantizer Based on SOM

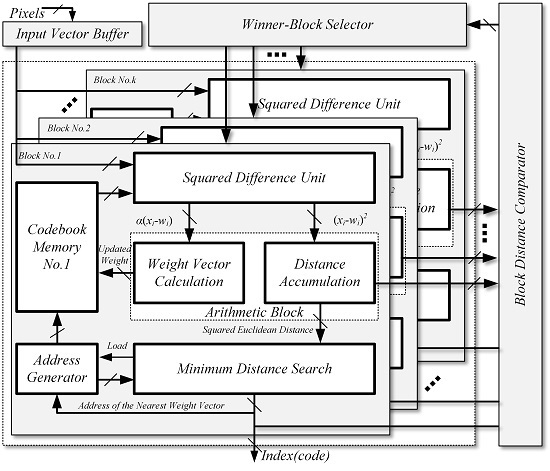

3.1. The Overall Architecture

3.2. The Squared Difference Unit and Memory Block

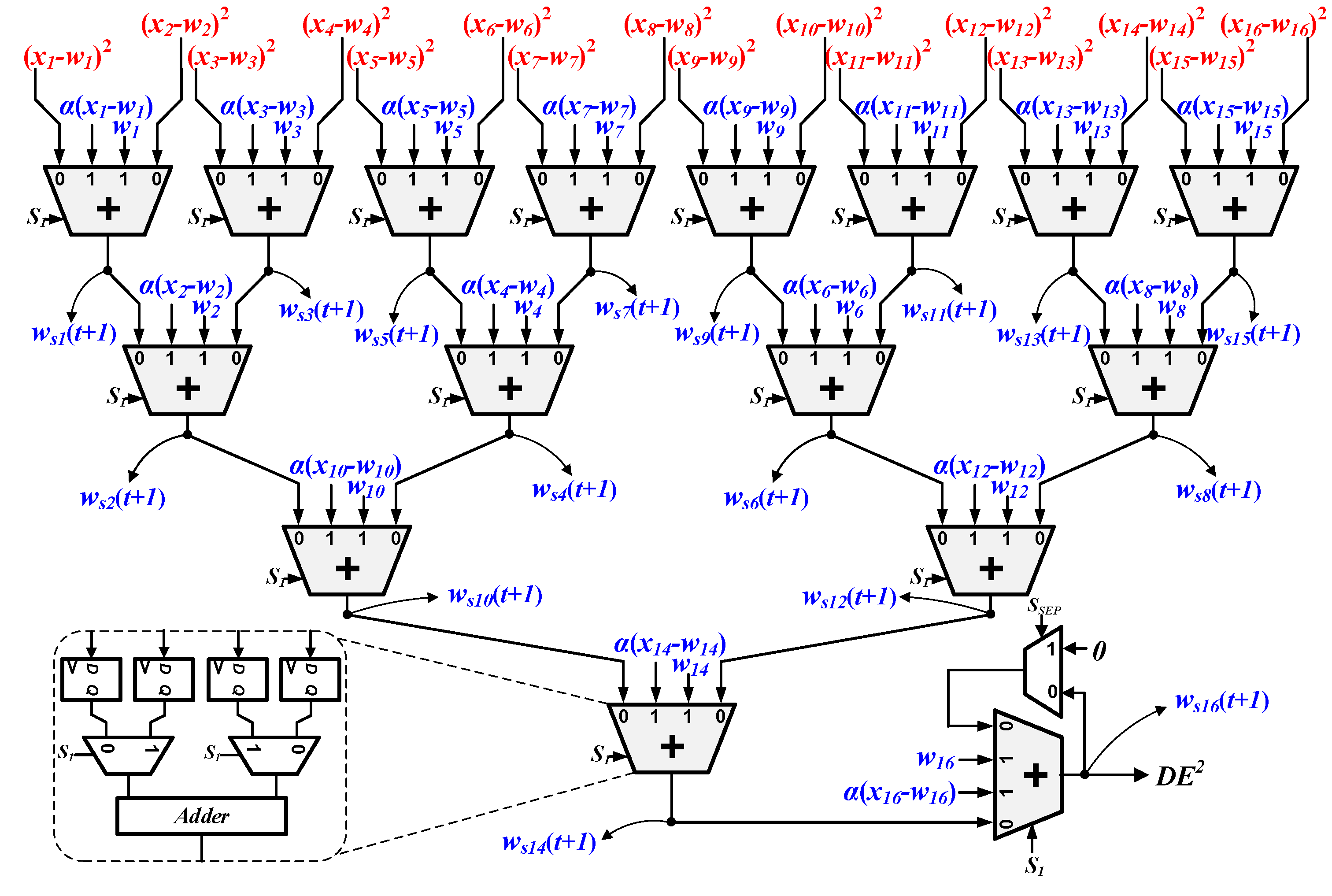

3.3. The Arithmetic Block

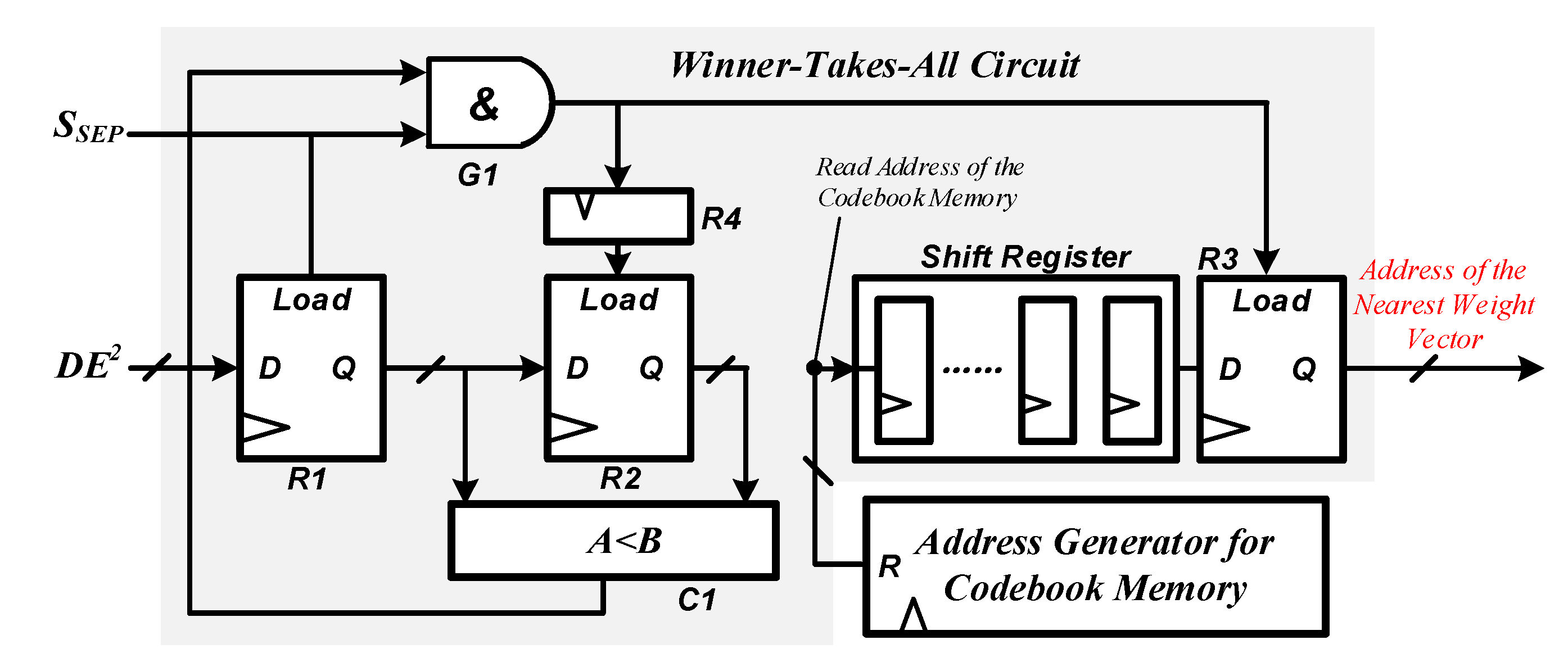

3.4. Minimum Distance Search Circuit

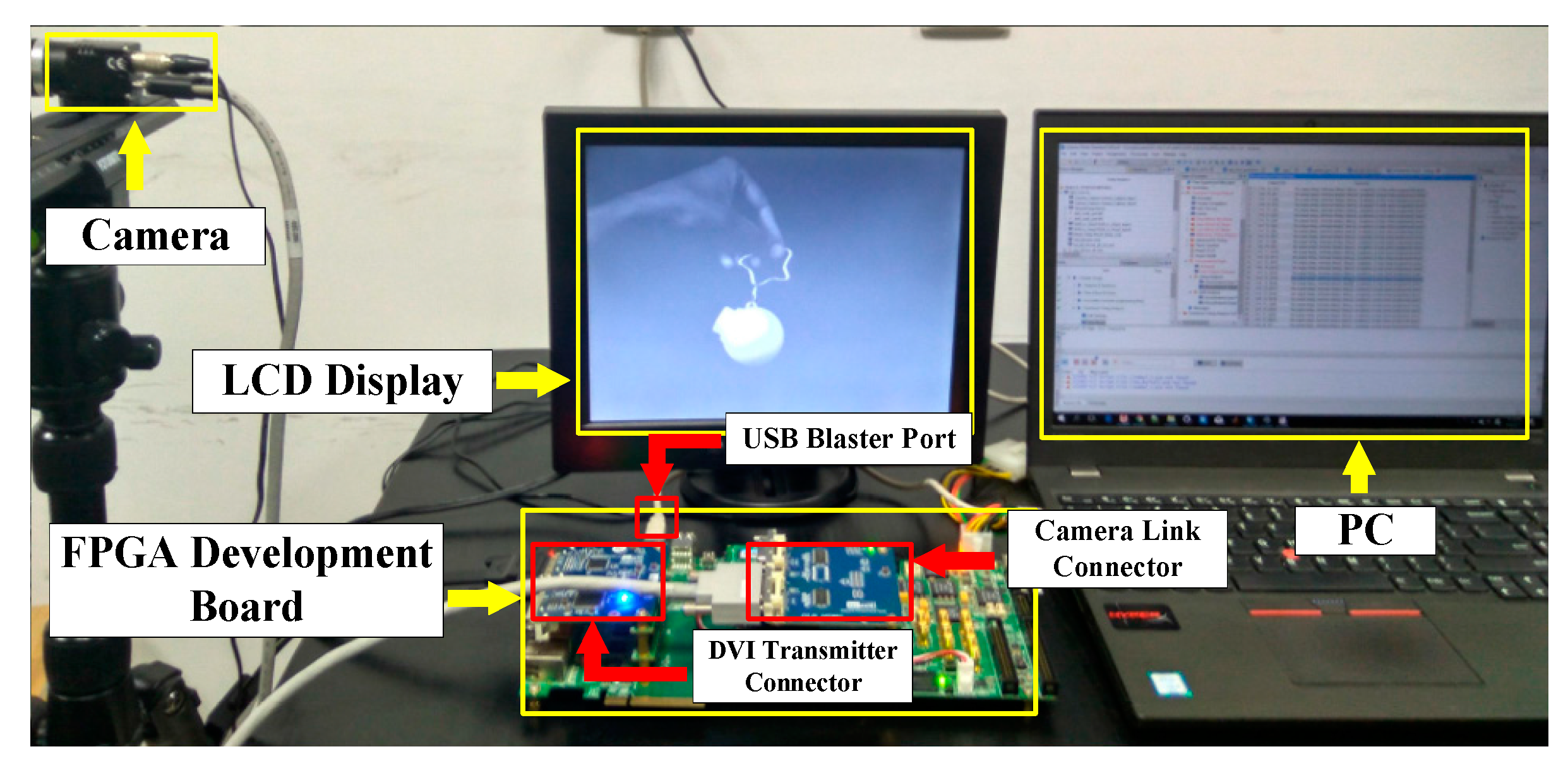

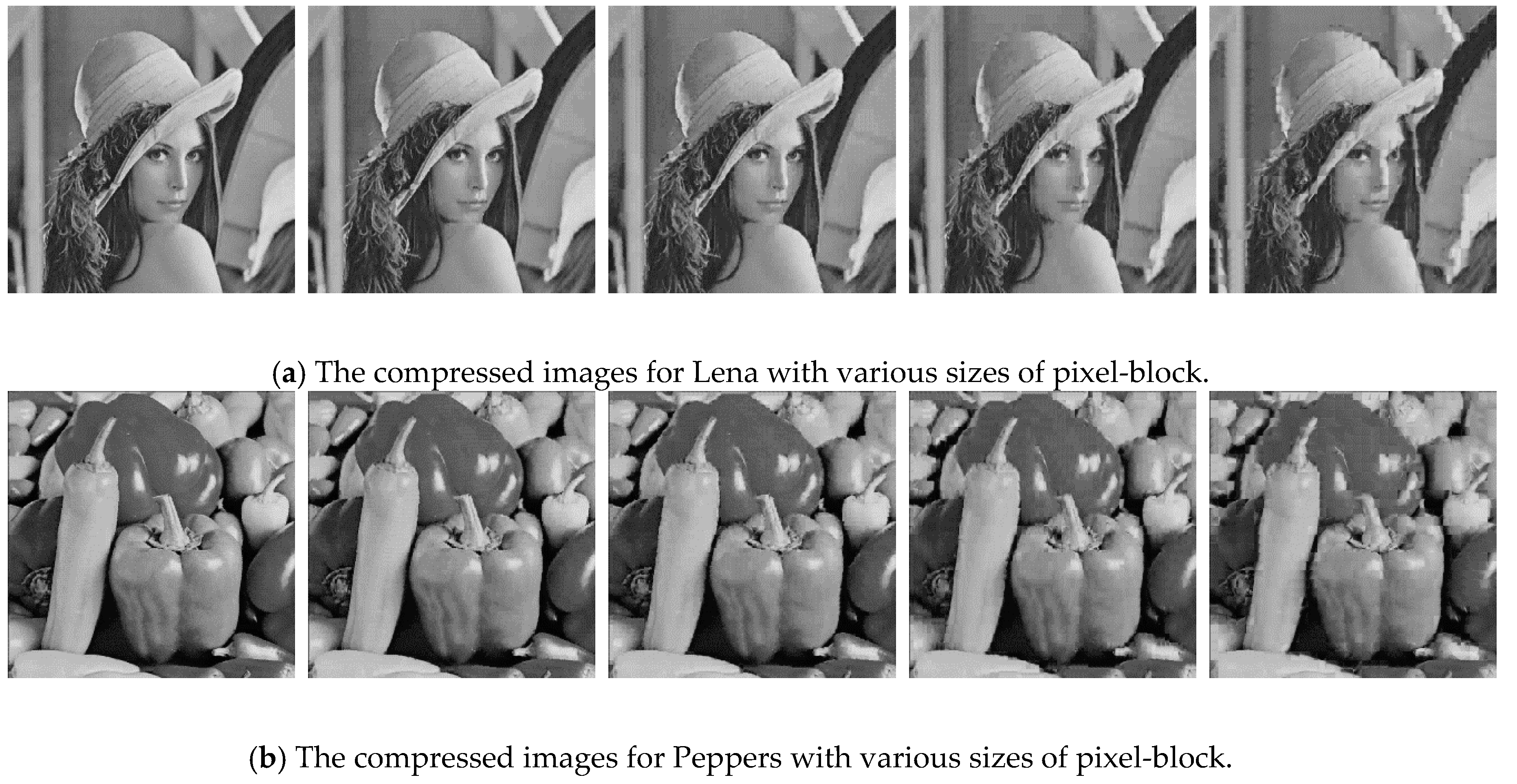

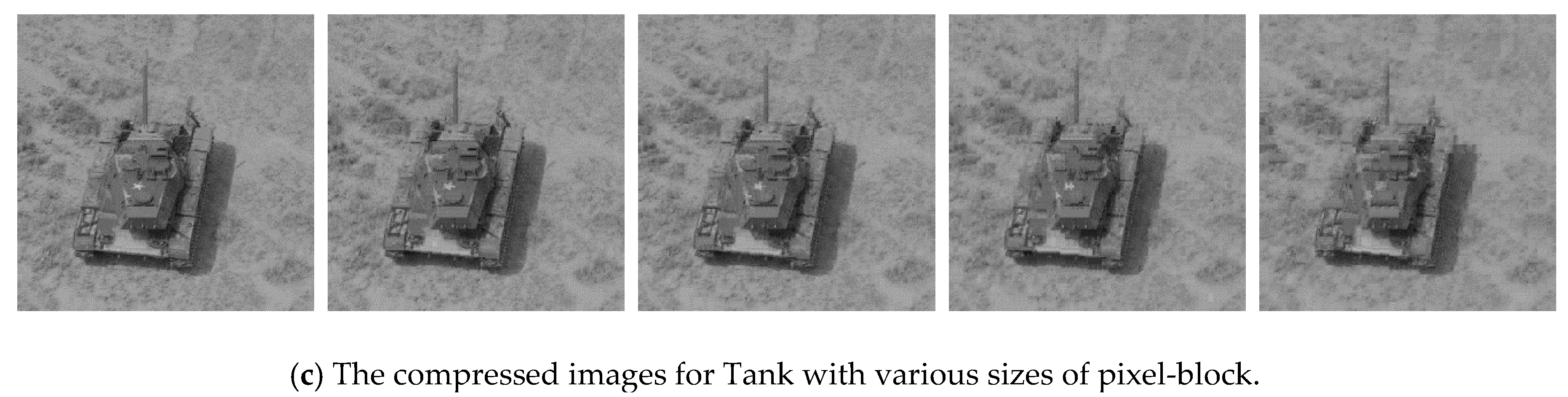

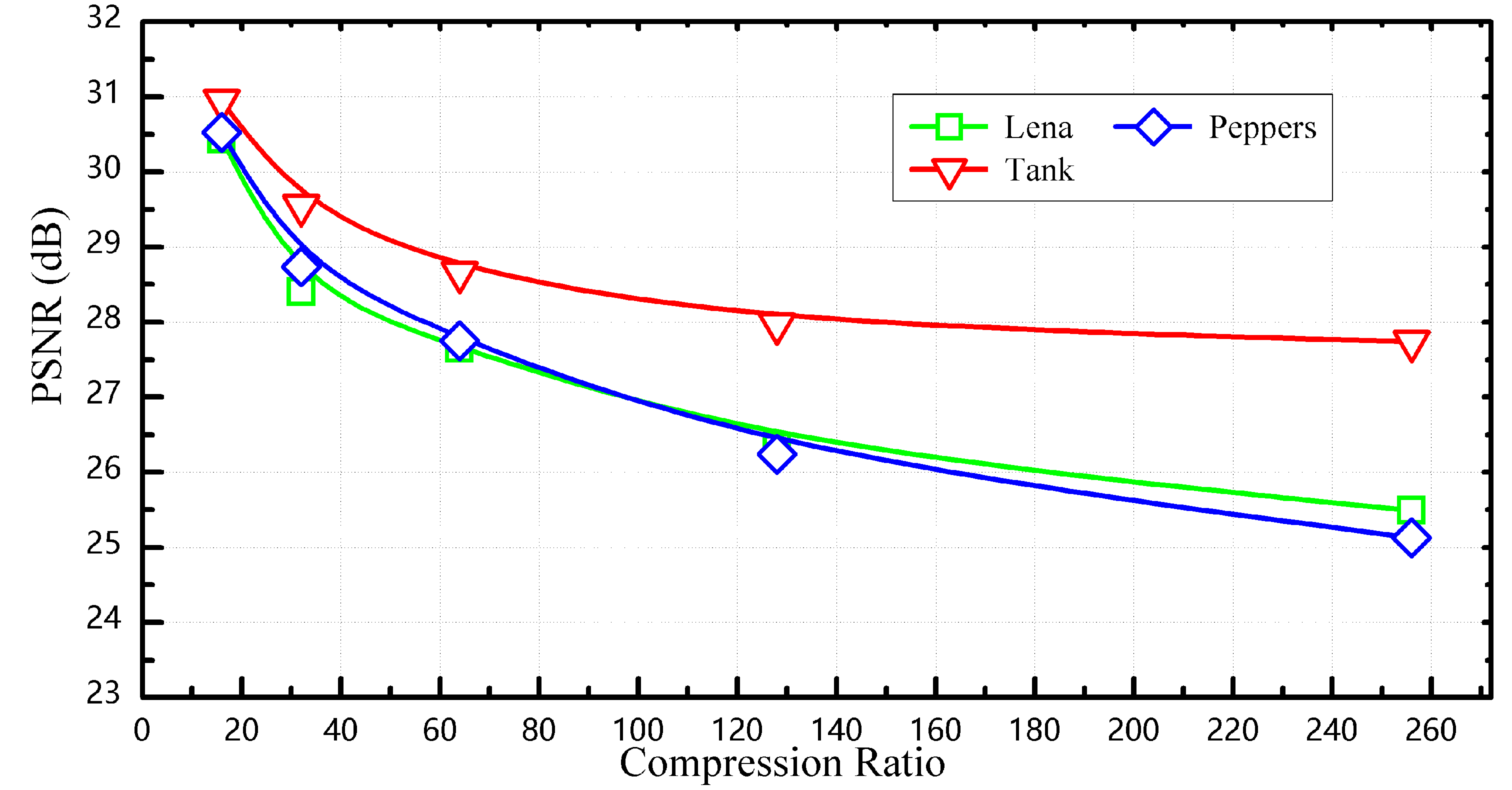

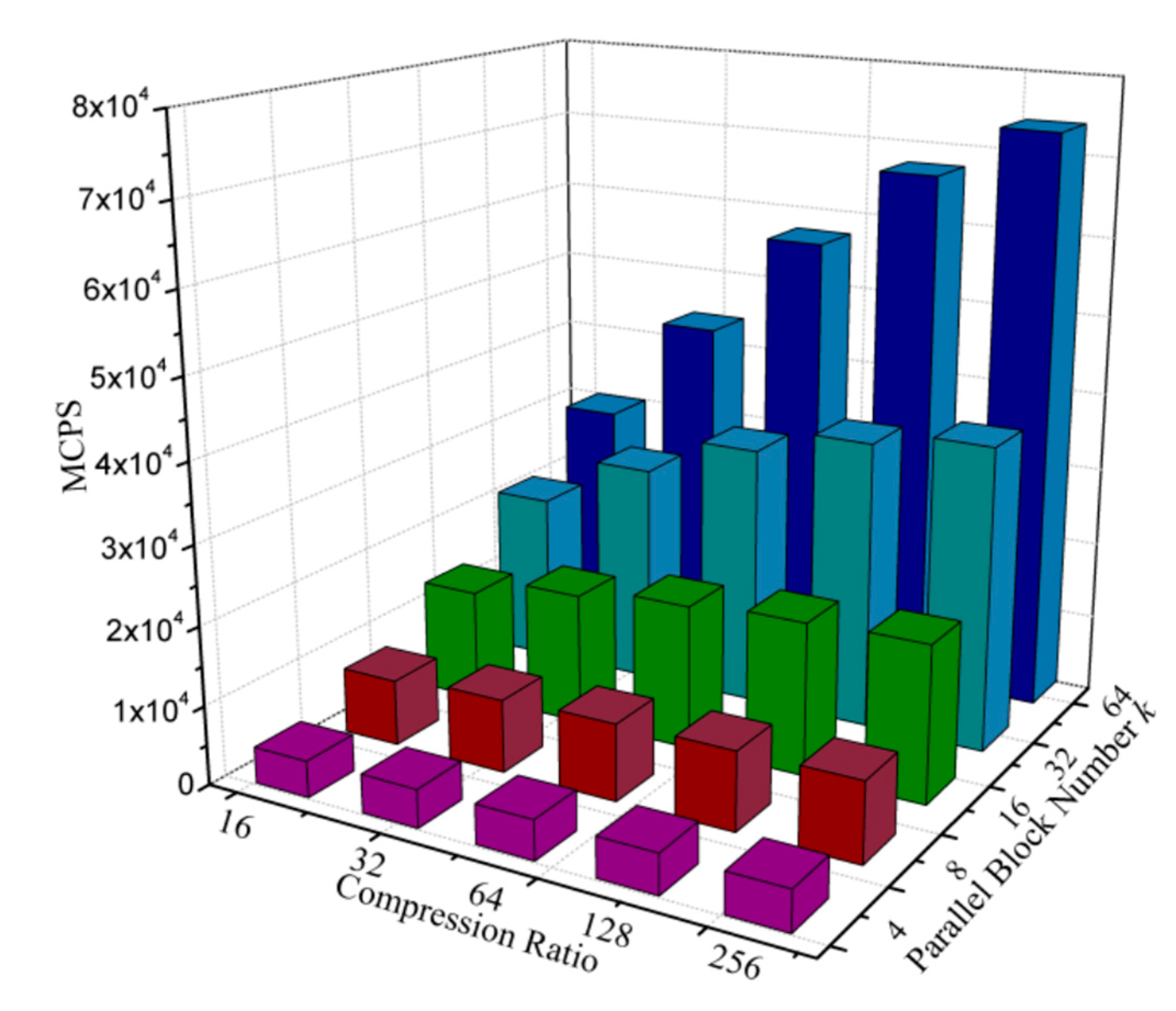

4. Experimental Results and Discussion

4.1. Hardware Implementation

4.2. Speed Analysis

4.3. Comparisons

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Xu, R.; Ng, W.C.; Yuan, J.; Yin, S.; Wei, S. A 1/2.5 inch VGA 400 fps CMOS image sensor with high sensitivity for machine vision. IEEE J. Solid-State Circuits 2014, 49, 2342–2351.

2. Ishii, I.; Taniguchi, T.; Yamamoto, K.; Takaki, T. High-frame-rate optical flow system. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 105–112.

3. Jiang, M.; Gu, Q.; Aoyama, T.; Takaki, T.; Ishii, I. Real-time vibration source tracking using high-speed vision. IEEE Sens. J. 2017, 17, 1513–1527.

4. Liu, X.; Sun, Q.; Zhang, C.; Wu, L. High-speed visual analysis of fluid flow and heat transfer in oscillating heat pipes with different diameters. Appl. Sci. 2016, 6, 321–336.

5. Cho, C.; Kim, J.; Kim, J.; Lee, S.J.; Kim, K.J. Detecting for high speed flying object using image processing on target place. Cluster Comput. 2016, 19, 285–292.

6. Baig, M.Y.; Lai, E.M.; Punchihewa, A. Compressed sensing-based distributed image compression. Appl. Sci. 2014, 4, 128–147.

7. Nishikawa, Y.; Kawahito, S.; Furuta, M.; Tamura, T. A high-speed CMOS image sensor with on-chip parallel image compression circuits. In Proceedings of the 2007 IEEE Custom Integrated Circuits Conference, San Jose, CA, USA, 16–19 September 2007; pp. 833–836.

8. Huang, C.M.; Bi, Q.; Stiles, G.S.; Harris, R.W. Fast full search equivalent encoding algorithms for image compression using vector quantization. IEEE Trans. Image Process. 1992, 1, 413–416.

9. Horng, M.H. Vector quantization using the firefly algorithm for image compression. Expert Syst. Appl. 2012, 39, 1078–1091.

10. Fujibayashi, M.; Nozawa, T.; Nakayama, T.; Mochizuki, K.; Konda, M.; Kotani, K.; Ohmi, T. A still-image encoder based on adaptive resolution vector quantization featuring needless calculation elimination architecture. IEEE J. Solid-State Circuits 2003, 726–733.

11. Ramirez-Agundis, A.; Gadea-Girones, R.; Colom-Palero, R. A hardware design of a massive-parallel, modular NN-based vector quantizer for real-time video coding. Microprocess. Microsyst. 2008, 32, 33–44.

12. Kurdthongmee, W. A novel hardware-oriented Kohonen SOM image compression algorithm and its FPGA implementation. J. Syst. Archit. 2008, 54, 983–994.

13. Kurdthongmee, W. A hardware centric algorithm for the best matching unit searching stage of the SOM-based quantizer and its FPGA implementation. J. Real-Time Image Proc. 2016, 12, 71–80.

14. Zhang, X.; An, F.; Chen, L.; Mattausch, H.J. Reconfigurable VLSI implementation for learning vector quantization with on-chip learning circuit. Jpn. J. Appl. Phys. 2016, 55, 04EF02.

15. Rauber, A.; Merkl, D.; Dittenbach, M. The growing hierarchical self-organizing map: Exploratory analysis of high-dimensional data. IEEE Trans. Neural Netw. 2002, 13, 1331–1341.

16. Hikawa, H.; Maeda, Y. Improved learning performance of hardware self-organizing map using a novel neighborhood function. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 2861–2873.

| Resources | Used | Available | Utilization |
|---|---|---|---|
| Combinational ALUTs | 74,368 | 182,400 | 40% |
| Memory ALUTs | 0 | 91,200 | 0% |
| Total registers | 62,784 | N/A | N/A |
| Total block memory bits | 36,864 | 14,625,792 | <1% |
| DSP block 18-bit elements | 0 | 1,288 | 0% |
| Total PLLs | 1 | 8 | 12.5% |

| Design | [11] | [13] | This Work (k = 16) | This Work (k = 32) |
|---|---|---|---|---|
| FPGA family | Virtex II | Virtex IV | Stratix IV | Stratix IV |
| Compression ratio (CR) | 16 (Fixed) | 3 (Fixed) | ≥16 (Adjustable) | ≥16 (Adjustable) |
| PSNR (dB) | 31.28 | 37.10 | 30.69 (CR = 16) | 30.69 (CR = 16) |
| Frequency (MHz) | 71.43 | 19.6 | 80.1 | 79.8 |
| MCPS | 11,026 | N/A | 14,247 @ CR = 16 | 21,845 @ CR = 16 |
| LUTs | 40,280 | 17,6130 | 37,214 | 74,368 |
| Compression speed (frames/s) | 160 | 107 | 212 @ CR = 16; 275 @ CR = 64; 296 @ CR = 256 | 324 @ CR = 16; 500 @ CR = 64; 585 @ CR = 256 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Z.; Zhang, X.; Chen, L.; Zhu, Y.; An, F.; Wang, H.; Feng, S. A Hardware-Efficient Vector Quantizer Based on Self-Organizing Map for High-Speed Image Compression. Appl. Sci. 2017, 7, 1106. https://doi.org/10.3390/app7111106
