Joint QoS and Congestion Control Based on Traffic Prediction in SDN

Abstract

1. Introduction

1.1. Overview of Our Contributions

- (i) To the best of our knowledge, no related work considers traffic prediction in resource reallocation. This is the first work that exploits a predicted flow matrix to dynamically reschedule the network, and the first that studies the impact of flow traffic estimation on network resource reallocation. The simulation results show a significant reduction in total packet loss along with an improvement in network throughput.

- (ii) The proposed approach supports different traffic classes with various QoS requirements at flow-level granularity. To do this, it aims to satisfy the delay and bandwidth requirements while minimizing packet loss for both the current and the predicted traffic load.

- (iii) Two schemes are proposed for solving the introduced QRTP problem: (I) an optimal solution, which is time-consuming; and (II) a relaxed but fast one.

- (iv) The proposed schemes are compared in terms of performance. We use real network traffic and a real topology for the experiments.

- (v) Our formulation makes a trade-off between performance and computational complexity. It reschedules the network at flow granularity, where a flow can be the information exchanged by “a special application” or “all communications from one data center to another one”.

1.2. Paper Organization

2. Related Work

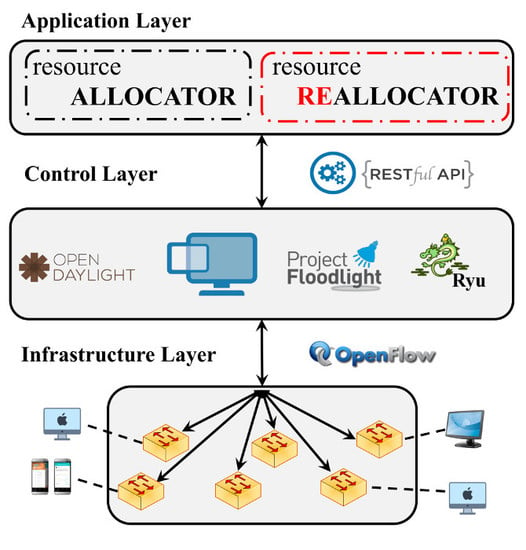

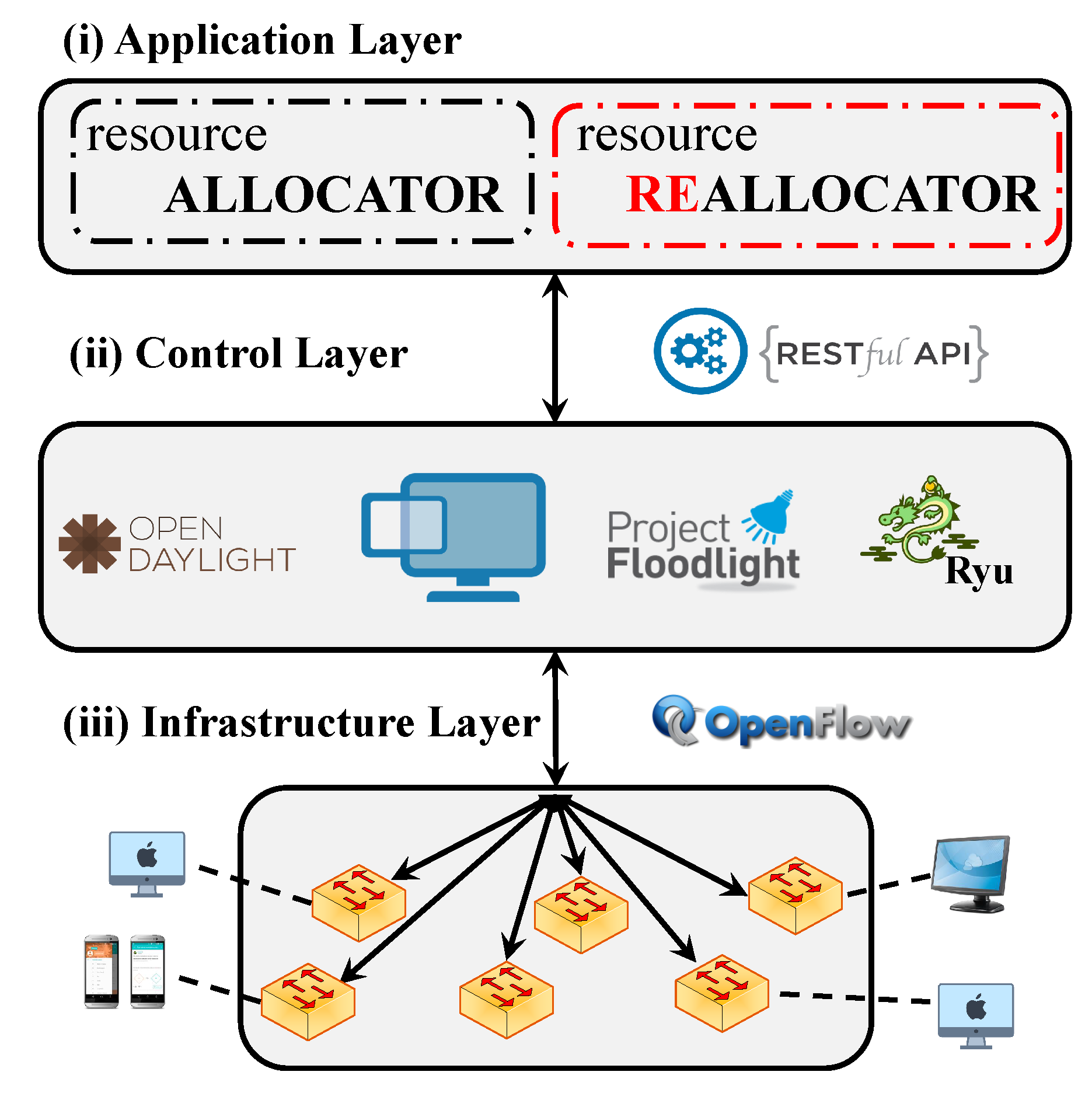

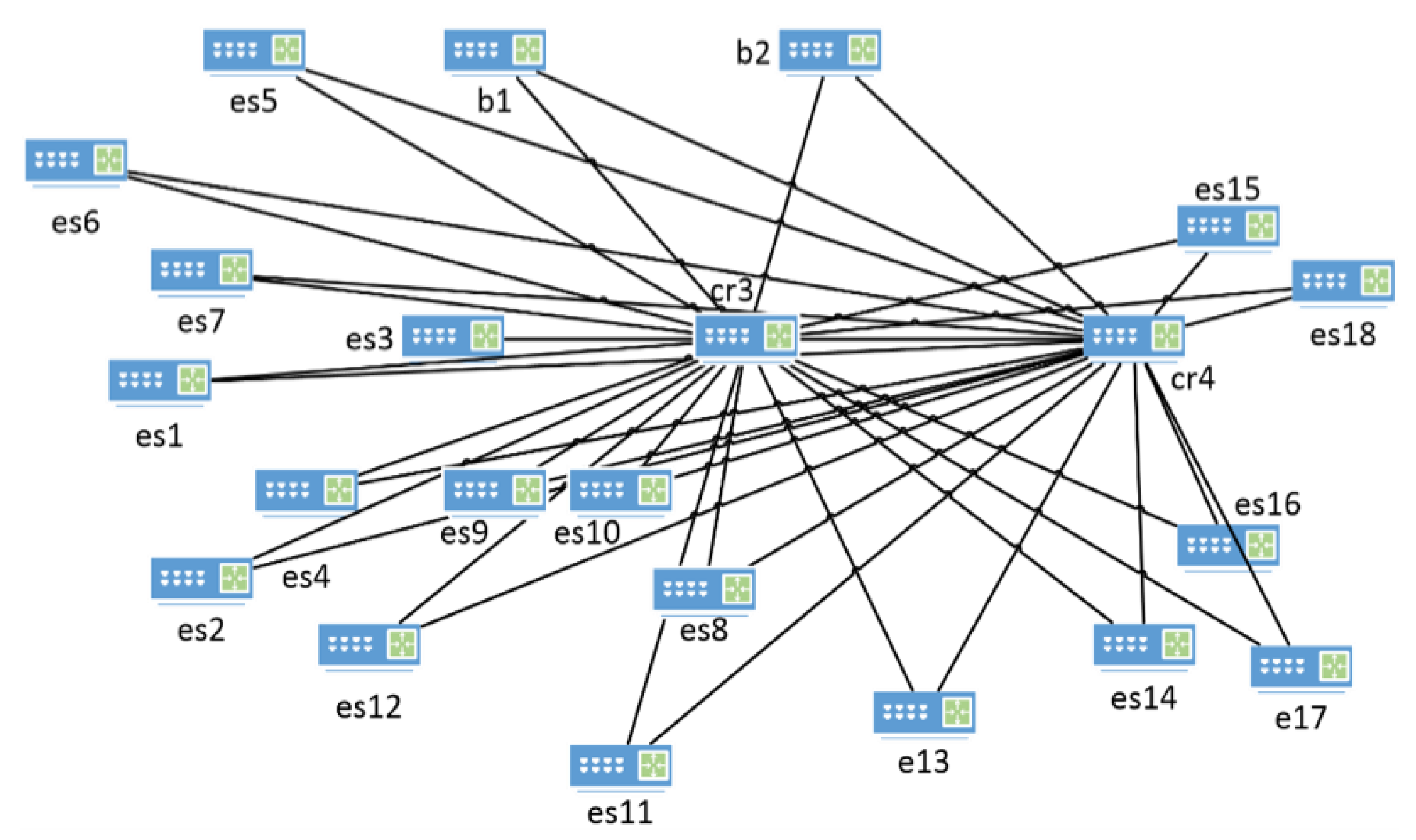

3. System Architecture

- Resource allocator (routing module): this module belongs to the application layer. When a new flow enters the network, the resource allocator assigns the required resources to the flow. This module does not reroute existing flows and only focuses on newly arrived ones.

- Resource re-allocator (re-routing module): this module belongs to the application layer. It can reallocate some flows, either in reaction to an event (such as congestion) or periodically, to optimize resource usage or the user-perceived QoS.
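The division of labour between the two modules can be sketched as follows. This is a minimal illustration, not the authors' implementation: the class name `Controller`, the parameters `congestion_threshold` and `reallocation_period`, and the use of maximum link utilization as the congestion signal are all assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    # Hypothetical tuning knobs; the text does not give concrete values.
    congestion_threshold: float   # utilization (0..1) treated as congestion
    reallocation_period: float    # seconds between periodic reallocations
    routes: dict = field(default_factory=dict)  # flow id -> list of switches
    last_reallocation: float = 0.0

    def allocate(self, flow_id, path):
        """Resource allocator: places newly arrived flows only."""
        if flow_id in self.routes:
            return False  # existing flows are never rerouted by this module
        self.routes[flow_id] = path
        return True

    def should_reallocate(self, now, max_link_utilization):
        """Re-allocator trigger: an event (congestion) or the periodic timer."""
        congested = max_link_utilization >= self.congestion_threshold
        timer_expired = now - self.last_reallocation >= self.reallocation_period
        return congested or timer_expired

    def reallocate(self, now, new_routes):
        """Resource re-allocator: moves some existing flows to new paths."""
        self.routes.update(new_routes)
        self.last_reallocation = now


controller = Controller(congestion_threshold=0.9, reallocation_period=60.0)
controller.allocate("f1", ["A", "B"])
if controller.should_reallocate(now=10.0, max_link_utilization=0.95):
    controller.reallocate(now=10.0, new_routes={"f1": ["A", "C", "B"]})
```

Keeping the trigger separate from the reallocation step lets the same re-routing logic serve both the event-driven and the periodic case described above.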

Algorithm 1: Outline

4. System Model

Problem Formulation

5. Proposed Solution

5.1. Forwarding Table Entries Compression

5.2. Relaxing Objective Function

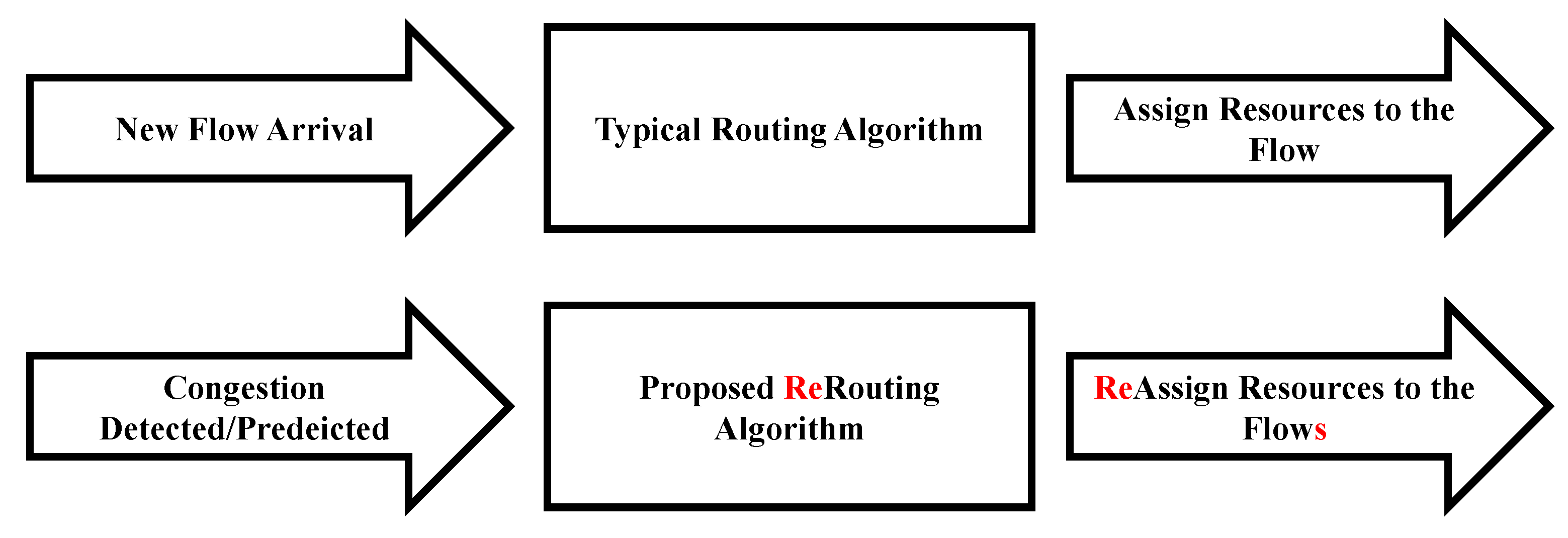

6. Description Scenario

6.1. Experimental Setup

6.2. Experimental Metrics

- Packet loss: the ratio of the number of lost packets to the total number of sent packets;

- Network reconfiguration effect: the number of entries that must be installed in the switches' forwarding tables;

- Link utilization: the percentage of the network's bandwidth that is currently being consumed by the SDN traffic;

- Maximum link utilization: the utilization of the most heavily utilized link; and

- Computational complexity: the time, in seconds, needed to solve each set of flows with QRTP and relaxed QRTP (RQRTP).
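The first, third and fourth metrics above reduce to simple ratios. The sketch below shows one way to compute them, assuming per-link loads and capacities are available as dictionaries keyed by link; the function names and the data layout are illustrative choices made here, not taken from the paper.

```python
def packet_loss_ratio(lost_packets, sent_packets):
    """Lost packets divided by sent packets (0.0 when nothing was sent)."""
    if sent_packets == 0:
        return 0.0
    return lost_packets / sent_packets


def network_utilization(loads, capacities):
    """Percentage of the total network bandwidth currently in use."""
    return 100.0 * sum(loads.values()) / sum(capacities.values())


def max_link_utilization(loads, capacities):
    """Utilization (in percent) of the most heavily utilized link."""
    return max(100.0 * loads[link] / capacities[link] for link in loads)


# Hypothetical two-link network; loads and capacities share one unit.
loads = {("A", "B"): 50.0, ("B", "C"): 10.0}
capacities = {("A", "B"): 100.0, ("B", "C"): 100.0}

print(packet_loss_ratio(lost_packets=5, sent_packets=100))
print(network_utilization(loads, capacities))
print(max_link_utilization(loads, capacities))
```

Reporting the maximum alongside the network-wide figure matters because a lightly loaded network can still contain one saturated link.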

7. Simulation Results

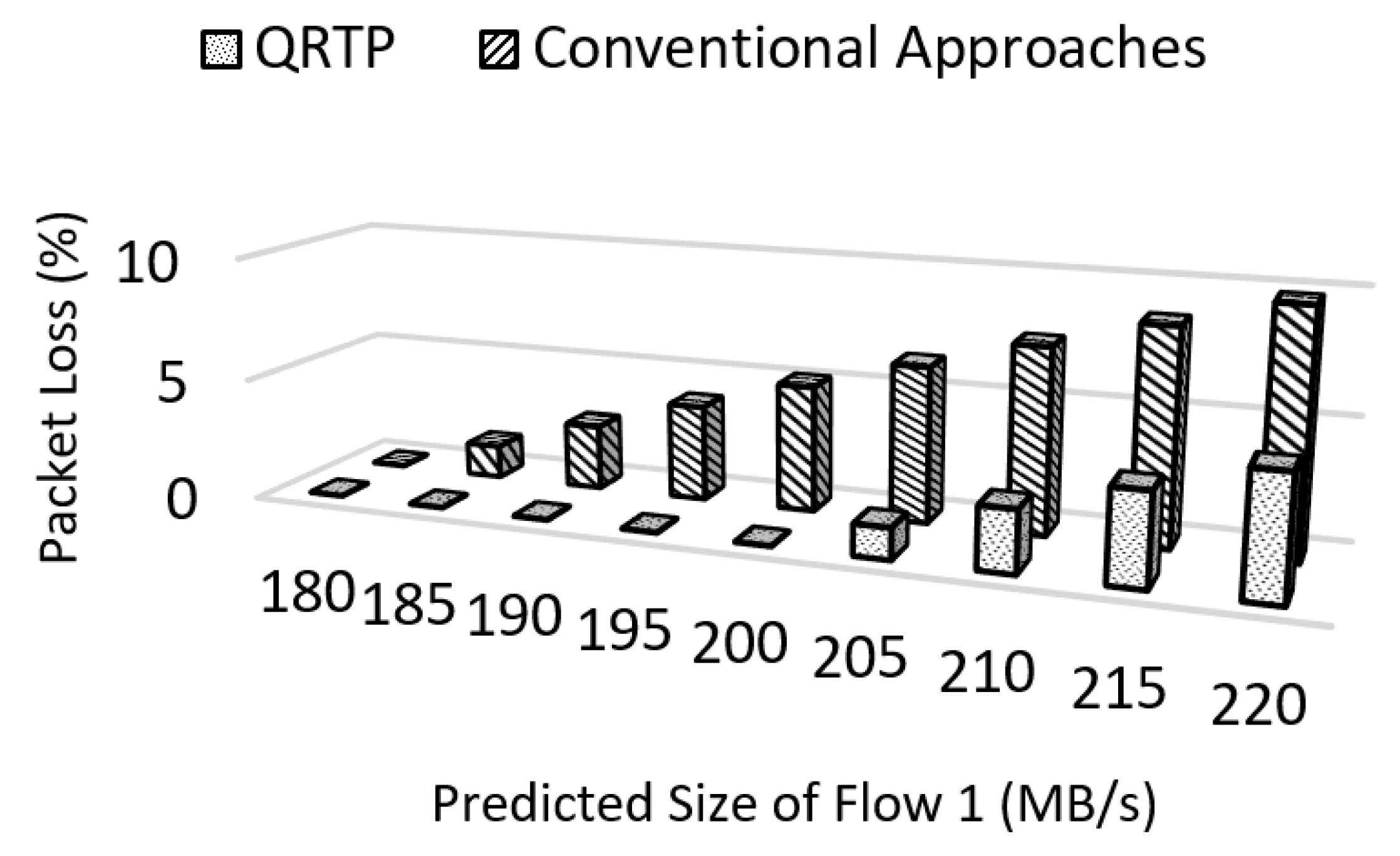

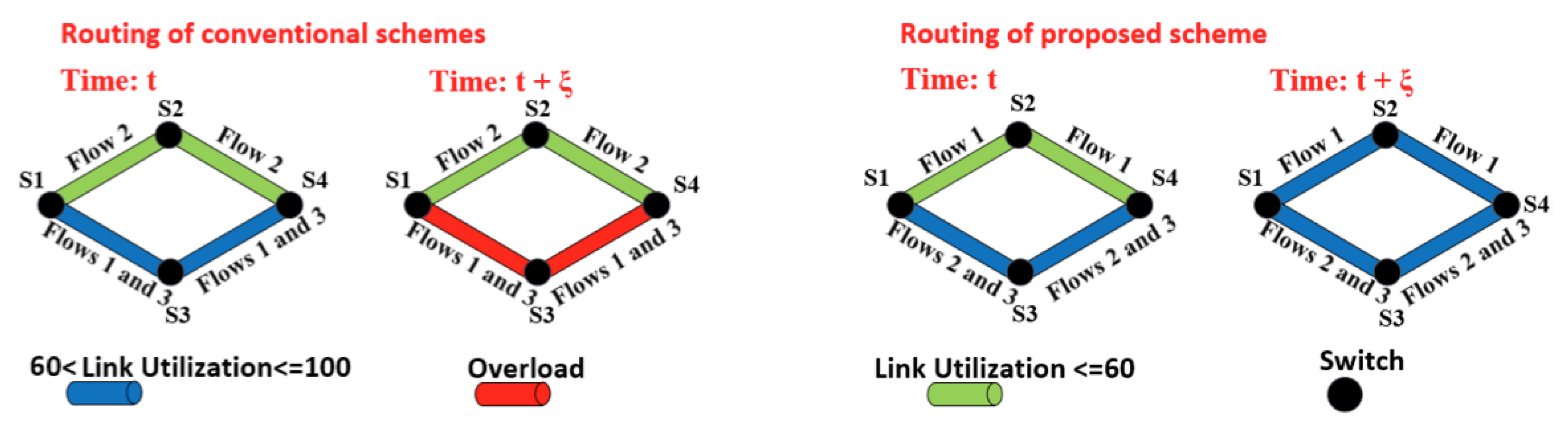

7.1. Packet Loss

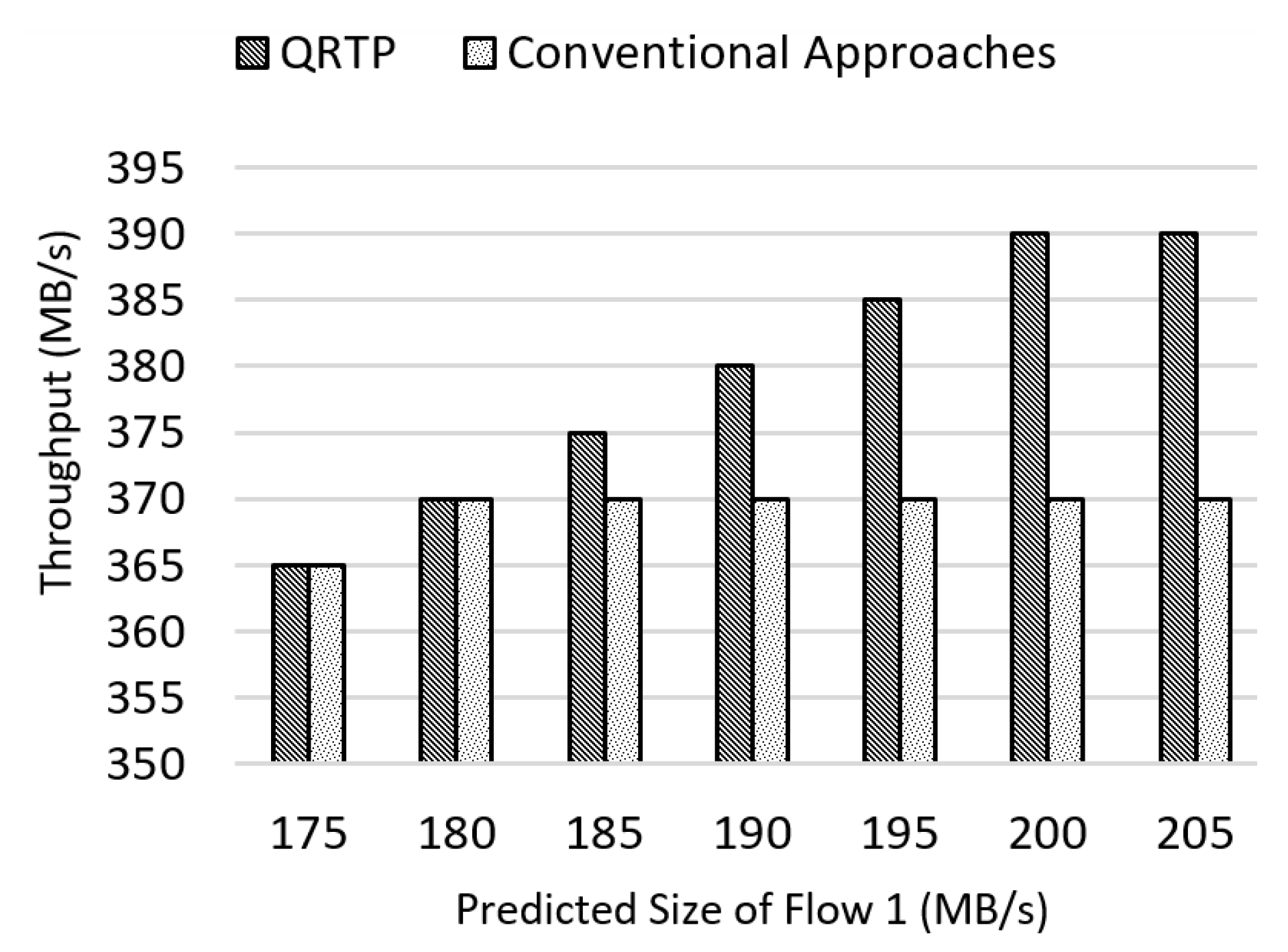

7.2. Link Utilization and Throughput

7.3. Network Reconfiguration Effect

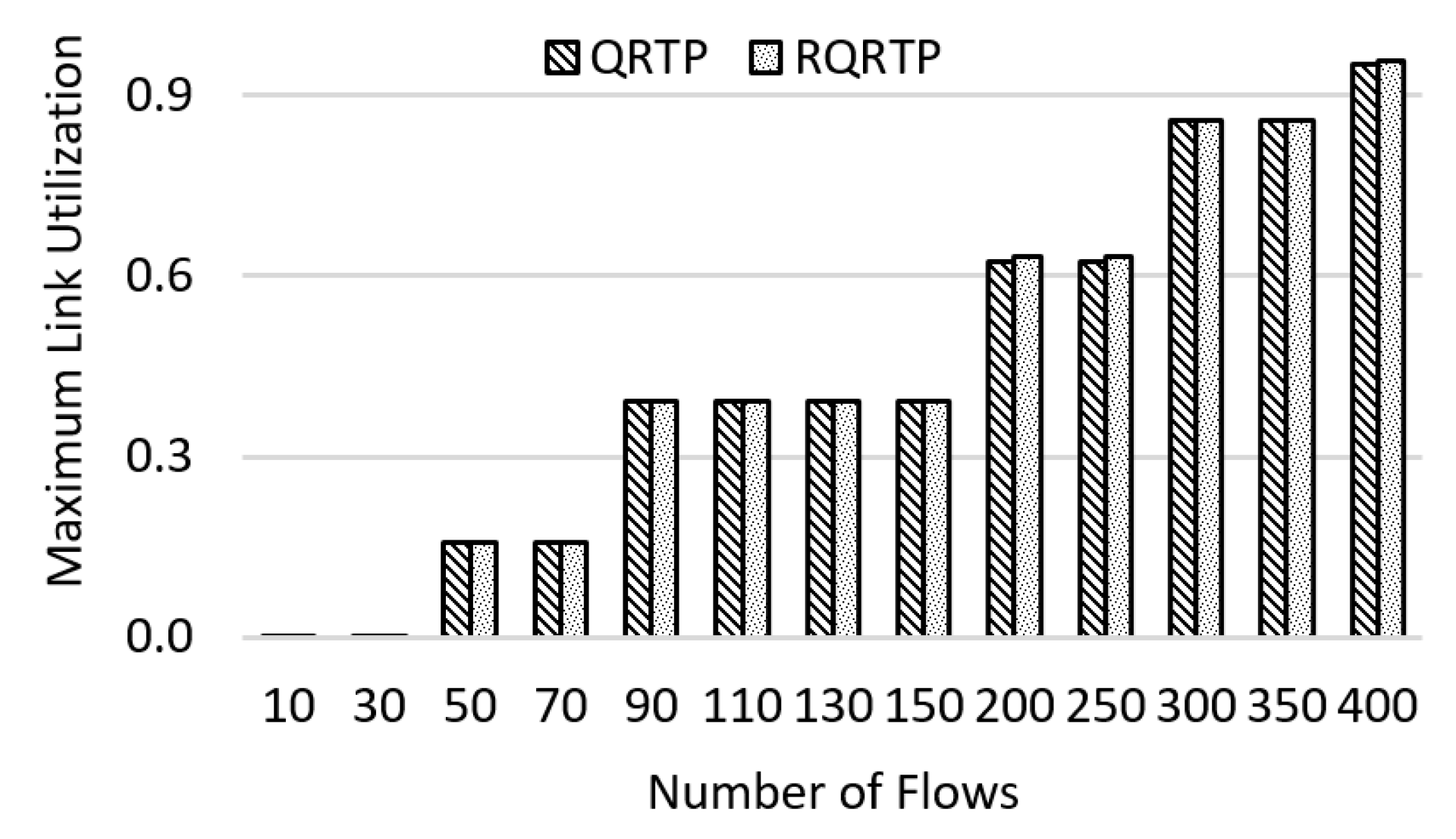

7.4. Computational Complexity and Maximum Link Utilization

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| ACS | Ant colony system |
| API | Application programming interface |
| ECMP | Equal-cost multi-path routing |
| FTP | File transfer protocol |
| GA | Genetic algorithm |
| ILP | Integer linear programming |
| OF | OpenFlow |
| OTT | Over the top |
| QoS | Quality of service |
| QRA | QoS-aware resource allocation |
| QRTP | QoS-aware resource reallocation traffic prediction algorithm |
| RQRTP | Relaxed QoS-aware resource reallocation traffic prediction algorithm |
| SDN | Software defined network |
| SLA | Service level agreement |
| VM | Virtual machine |


| Symbol | Definition |
|---|---|
| Mathematical Parameters | |
| n | Number of switches |
| p | Number of flows |
| | Current flow matrix |
| | Predicted flow matrix |
| | Maximum size of flow |
| | Link bandwidth matrix |
| | Flow requirement matrix |
| | Link delay matrix |
| | Source switch of flows |
| | Destination switch of flows |
| | Link bandwidth matrix |
| | Maximum link utilization |
| Decision Variable | |
| | Routing matrix |

| Flow | Source Switch | Destination Switch | Rate |
|---|---|---|---|
| 1 | A | B | 32 Kb/s |
| 2 | A | B | 48 Kb/s |
| 3 | B | D | 64 Kb/s |
| 4 | A | B | 32 Kb/s |
| 5 | X | Y | 640 Kb/s |
| 6 | A | B | 80 Mb/s |
| 7 | B | D | 12 Kb/s |
| 8 | A | B | 45 Kb/s |

| Flow | Source Switch | Destination Switch | Rate |
|---|---|---|---|
| Aggregate 1 | A | B | 112 Kb/s |
| Aggregate 2 | B | D | 76 Kb/s |
| 5 | X | Y | 640 Kb/s |
| 6 | A | B | 80 Mb/s |
| 8 | A | B | 45 Kb/s |
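The two flow tables above can be related by a simple aggregation rule. The sketch below is an assumption-laden illustration, not the paper's compression algorithm: it merges flows that share a source and destination switch, in arrival order, as long as the aggregate stays under a size cap. The cap of 128 Kb/s (`MAX_AGGREGATE_KBPS`) is a hypothetical value chosen here because it reproduces the second table, and 80 Mb/s is taken as 80,000 Kb/s.

```python
MAX_AGGREGATE_KBPS = 128  # hypothetical cap; not stated in the text

# (flow id, source switch, destination switch, rate in Kb/s)
flows = [
    (1, "A", "B", 32),
    (2, "A", "B", 48),
    (3, "B", "D", 64),
    (4, "A", "B", 32),
    (5, "X", "Y", 640),
    (6, "A", "B", 80_000),  # 80 Mb/s, assuming 1 Mb/s = 1000 Kb/s
    (7, "B", "D", 12),
    (8, "A", "B", 45),
]


def aggregate(flows, cap):
    """First-fit aggregation of flows per (source, destination) pair."""
    aggregates = {}  # (src, dst) -> (member flow ids, total rate)
    standalone = []  # flows that keep their own forwarding entry
    for flow_id, src, dst, rate in flows:
        members, total = aggregates.get((src, dst), ([], 0))
        if total + rate <= cap:
            aggregates[(src, dst)] = (members + [flow_id], total + rate)
        else:
            standalone.append((flow_id, src, dst, rate))
    return aggregates, standalone


aggregates, standalone = aggregate(flows, MAX_AGGREGATE_KBPS)
for (src, dst), (members, total) in aggregates.items():
    print(f"aggregate {members}: {src} -> {dst}, {total} Kb/s")
for flow_id, src, dst, rate in standalone:
    print(f"flow {flow_id}: {src} -> {dst}, {rate} Kb/s")
```

Under these assumptions the eight original entries shrink to five: flows 1, 2 and 4 merge into a 112 Kb/s aggregate, flows 3 and 7 into a 76 Kb/s aggregate, and flows 5, 6 and 8 stay separate because adding them would exceed the cap.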

| Number of Flows | QRTP (s) | RQRTP (s) |
|---|---|---|
| 10 | 2.16 | 2.12 |
| 50 | 2.32 | 2.31 |
| 100 | 3.16 | 2.66 |
| 200 | 27.89 | 2.98 |
| 300 | 40.89 | 4.22 |
| 400 | 106.70 | 27.53 |
| 500 | 178.13 | 41.45 |
| 1000 | 1227.26 | 46.07 |
| 2000 | >1 h | 88.77 |
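The run times in the table above are easier to compare as ratios. The short sketch below computes the QRTP-to-RQRTP speedup per row; the variable names are chosen here for illustration, and the 2000-flow row is handled as a lower bound only, since QRTP is reported there as more than one hour (3600 s).

```python
# Run times in seconds, copied from the table above: flows -> (QRTP, RQRTP).
run_times = {
    10: (2.16, 2.12),
    50: (2.32, 2.31),
    100: (3.16, 2.66),
    200: (27.89, 2.98),
    300: (40.89, 4.22),
    400: (106.70, 27.53),
    500: (178.13, 41.45),
    1000: (1227.26, 46.07),
}

# Speedup of the relaxed scheme: QRTP time divided by RQRTP time.
speedups = {n: qrtp / rqrtp for n, (qrtp, rqrtp) in run_times.items()}

# For 2000 flows only a lower bound is available (QRTP took more than 1 h).
lower_bound_2000 = 3600 / 88.77

for n, ratio in speedups.items():
    print(f"{n:>5} flows: QRTP/RQRTP = {ratio:.2f}")
print(f" 2000 flows: QRTP/RQRTP > {lower_bound_2000:.2f}")
```

On these numbers the two schemes take nearly the same time up to 50 flows, while at 1000 flows QRTP takes about 26.6 times as long as RQRTP. The ratio does not grow monotonically: it drops to roughly 3.9 at 400 flows.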

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tajiki, M.M.; Akbari, B.; Shojafar, M.; Mokari, N. Joint QoS and Congestion Control Based on Traffic Prediction in SDN. Appl. Sci. 2017, 7, 1265. https://doi.org/10.3390/app7121265
