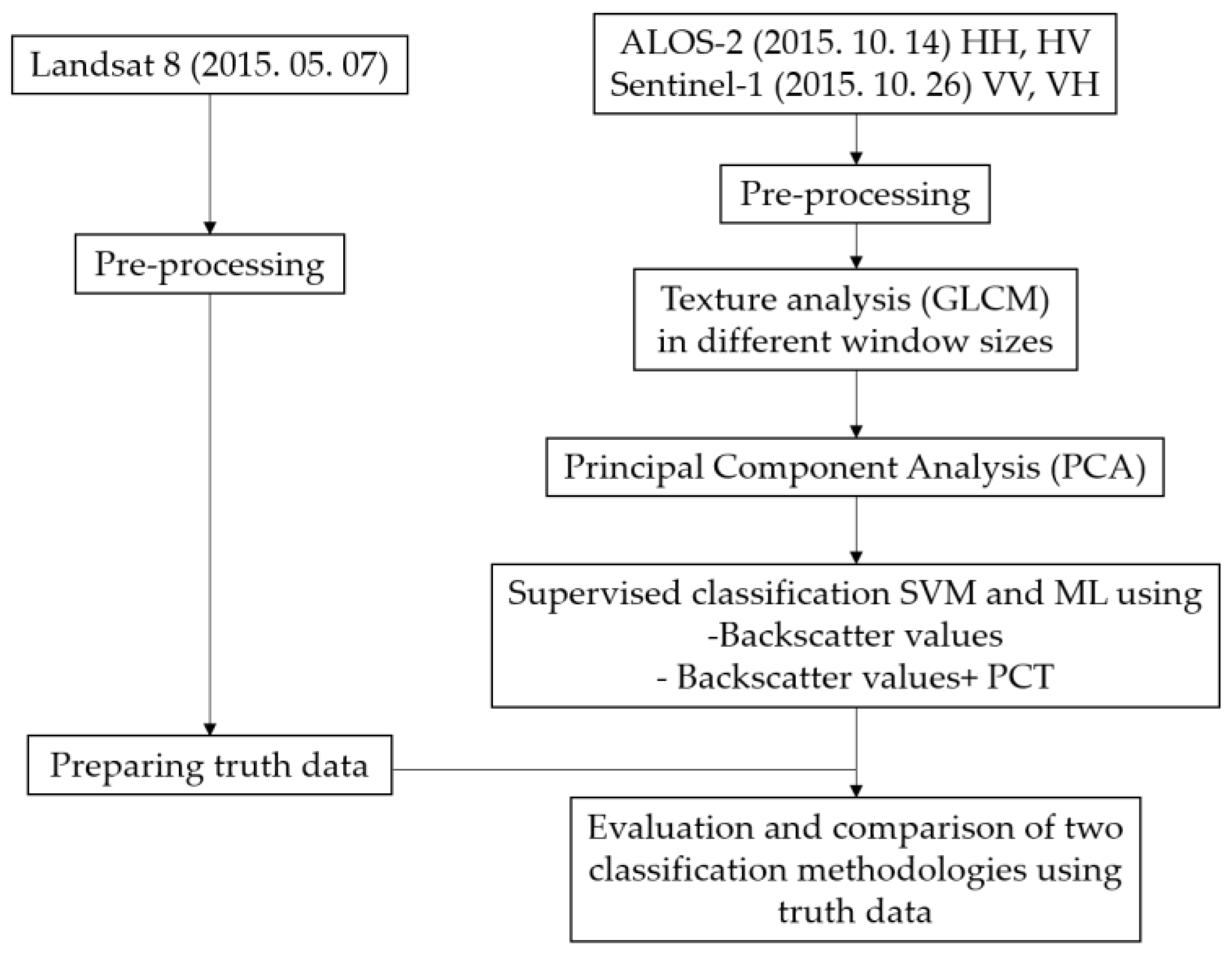

This study aims to classify land cover in Tehran with high accuracy using appropriate datasets and methods. A multispectral Landsat 8 image and SAR data (ALOS-2 and Sentinel-1) were used to evaluate the performance of two supervised classification algorithms: maximum likelihood (ML) and support vector machine (SVM). The results from the optical and SAR sensors are compared and evaluated below.

4.1. Multi-Spectral Optical Image

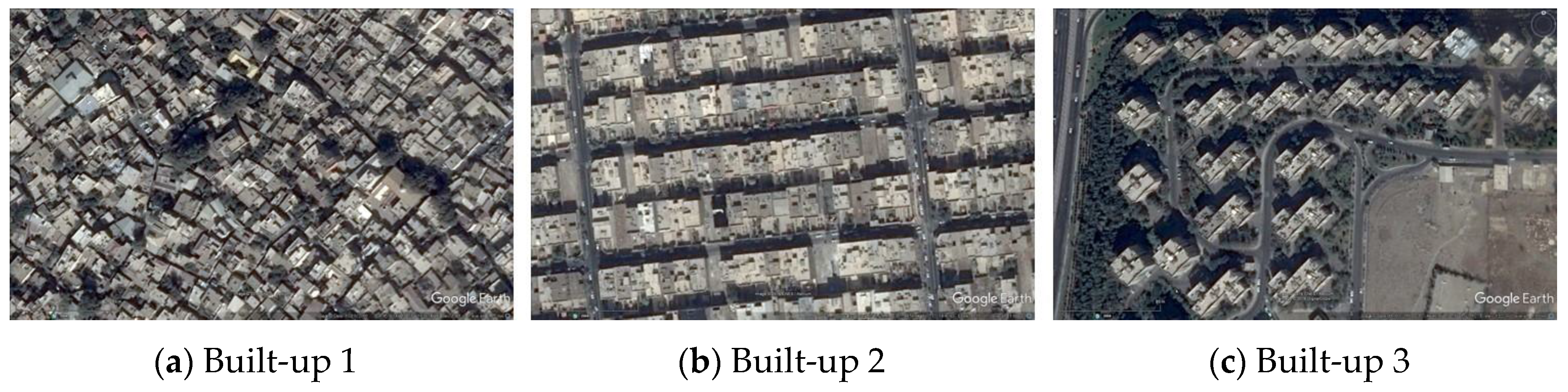

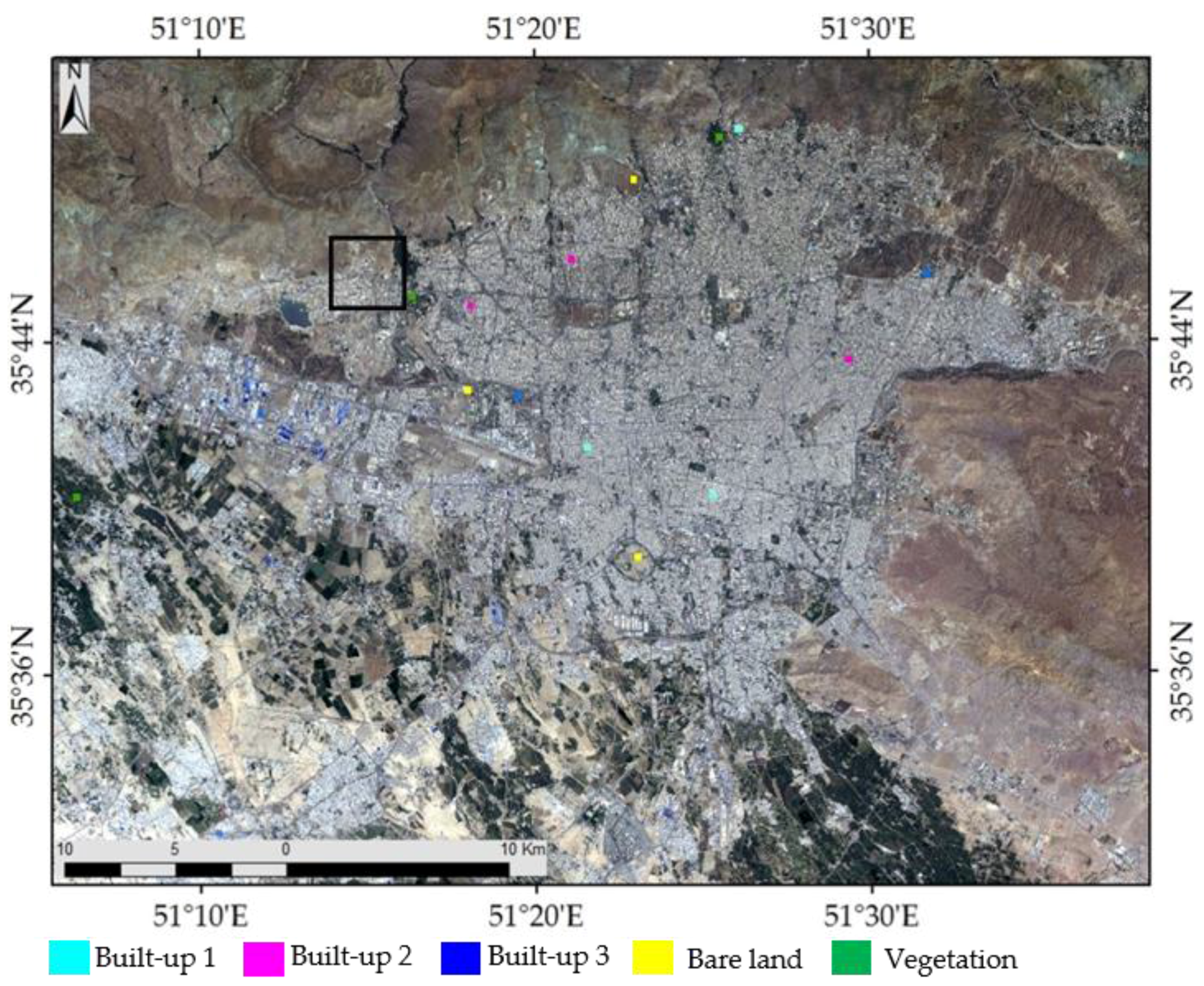

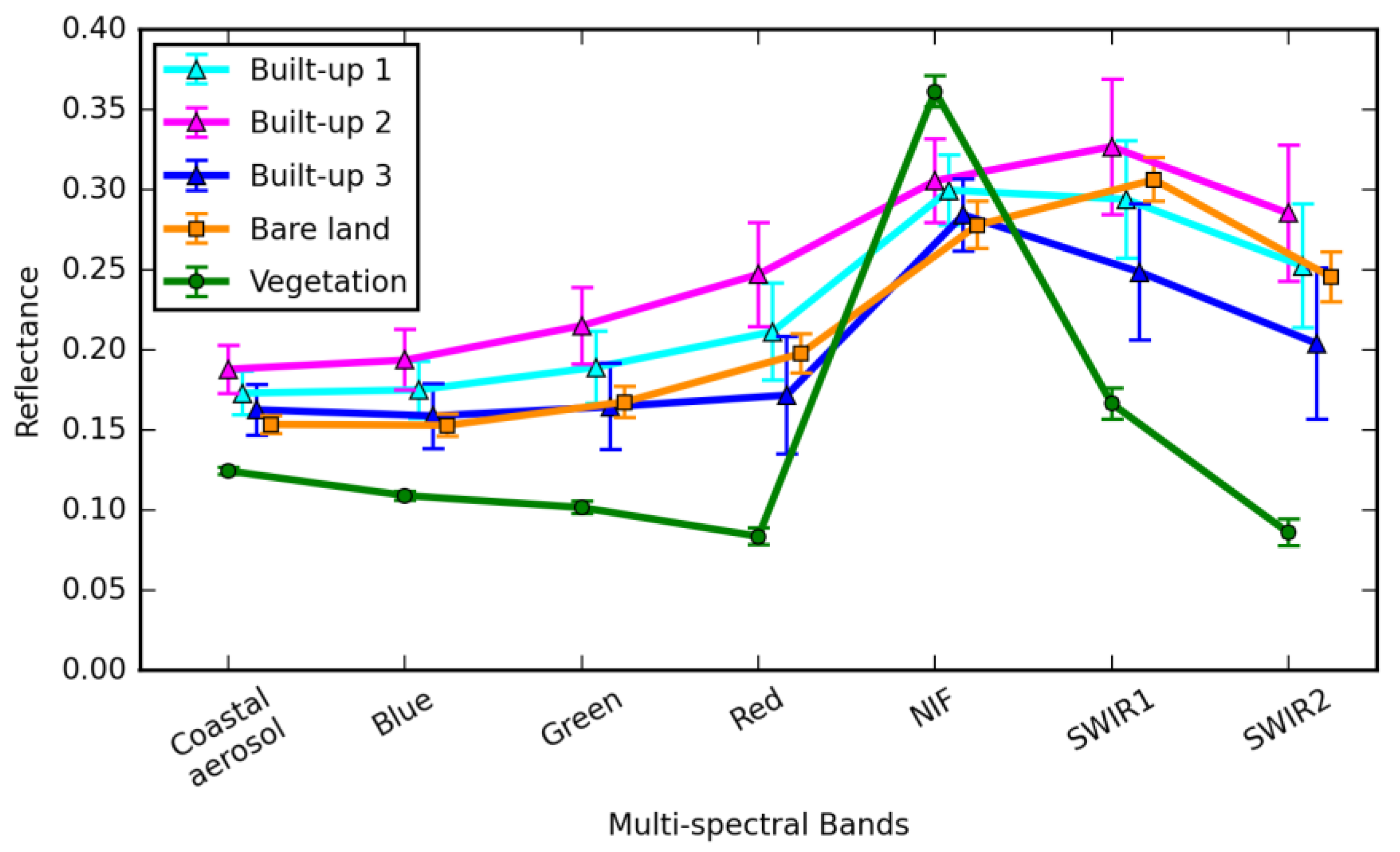

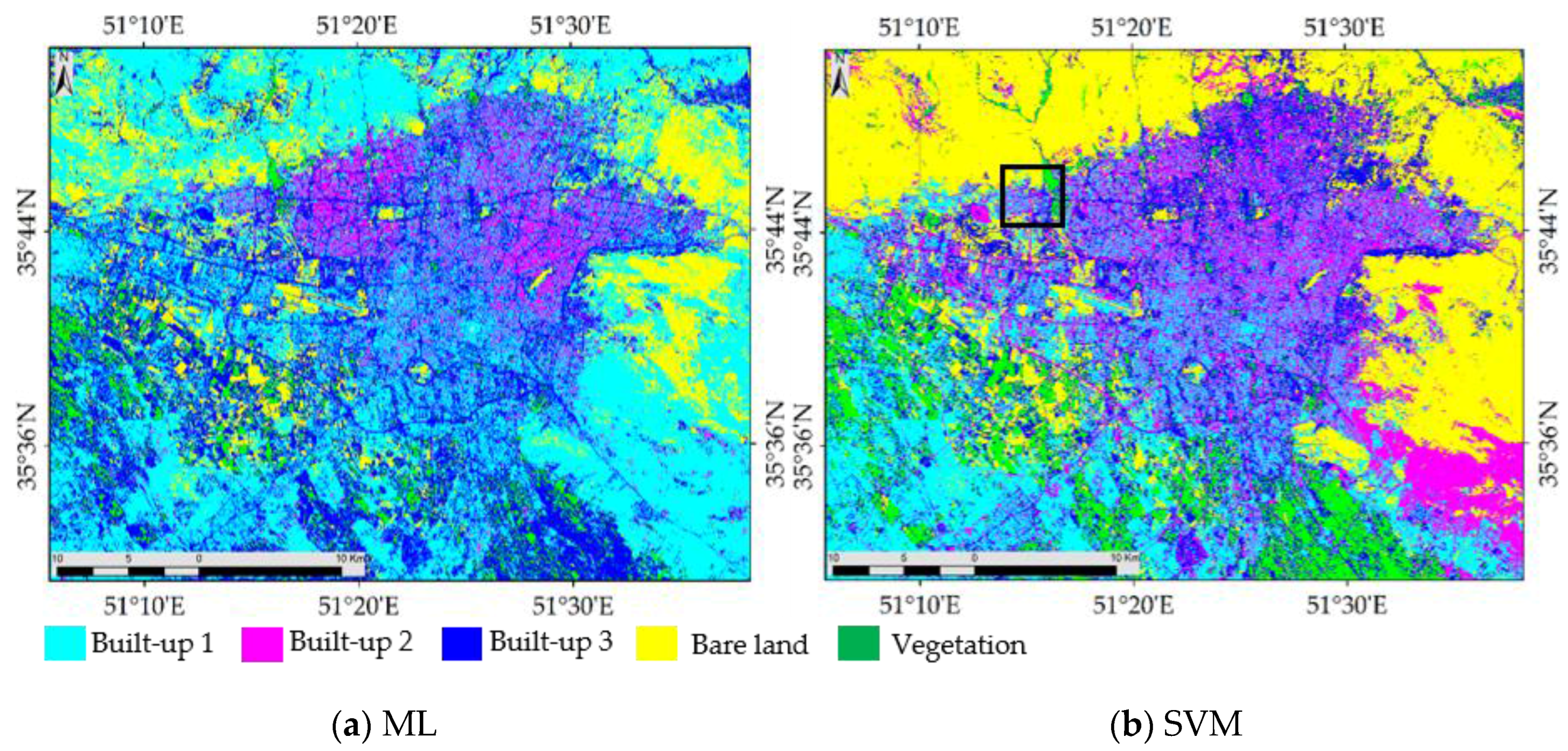

We begin by examining the performance of the multispectral optical image. First, the spectral signatures of the land cover samples taken from the Landsat 8 image (Figure 4) for the built-up 1, built-up 2, built-up 3, bare land, and vegetation classes are shown in Figure 5. The spectral reflectance of bare land and built-up features is remarkably similar, whereas the vegetation signature follows a distinct pattern. Given this similarity, the geography of the study area makes it difficult to separate the urban area from the mountainous and desert areas that surround Tehran. ML and SVM classifications were applied to the Landsat 8 image using a total of fifteen training samples from five land cover types; the results are shown in Figure 6. The ML result (Figure 6a) indicates that almost all bare land and mountain areas around Tehran were misclassified as built-up area (built-up 1). The SVM result (Figure 6b) also shows some bare land areas in the southeast and west of Tehran incorrectly classified as built-up areas (built-up 1 and 2).
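For readers unfamiliar with ML classification, the following is a minimal sketch of a Gaussian maximum likelihood classifier. It assumes that the training pixels of each class follow a multivariate normal distribution and that all classes have equal prior probability; the study does not state its software or priors, and the function names, two-band setup, and toy reflectance values below are hypothetical.

```python
import numpy as np

def fit_ml(training):
    """Estimate a mean vector and covariance matrix for each class.

    training: dict mapping class name -> array of shape (n_pixels, n_bands).
    """
    params = {}
    for name, pixels in training.items():
        mean = pixels.mean(axis=0)
        cov = np.cov(pixels, rowvar=False)
        # Store the inverse covariance and log-determinant for scoring.
        params[name] = (mean, np.linalg.inv(cov), np.linalg.slogdet(cov)[1])
    return params

def classify_ml(pixels, params):
    """Assign each pixel to the class with the highest Gaussian log-likelihood.

    Equal priors are assumed, so constant terms shared by all classes are dropped.
    """
    names = list(params)
    scores = np.empty((pixels.shape[0], len(names)))
    for k, name in enumerate(names):
        mean, inv_cov, log_det = params[name]
        diff = pixels - mean
        # Squared Mahalanobis distance of every pixel to the class mean.
        mahal = np.einsum("ij,jk,ik->i", diff, inv_cov, diff)
        scores[:, k] = -0.5 * (log_det + mahal)
    return [names[i] for i in scores.argmax(axis=1)]

# Hypothetical two-band training samples (toy values, not study data).
rng = np.random.default_rng(0)
training = {
    "vegetation": rng.normal([0.05, 0.40], 0.02, size=(50, 2)),
    "bare land": rng.normal([0.25, 0.30], 0.02, size=(50, 2)),
}
params = fit_ml(training)
print(classify_ml(np.array([[0.06, 0.38], [0.24, 0.31]]), params))
# ['vegetation', 'bare land']
```

Because ML relies only on each class's spectral mean and covariance, classes with nearly identical signatures, such as bare land and built-up areas here, receive overlapping likelihoods, which is consistent with the confusion described above.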

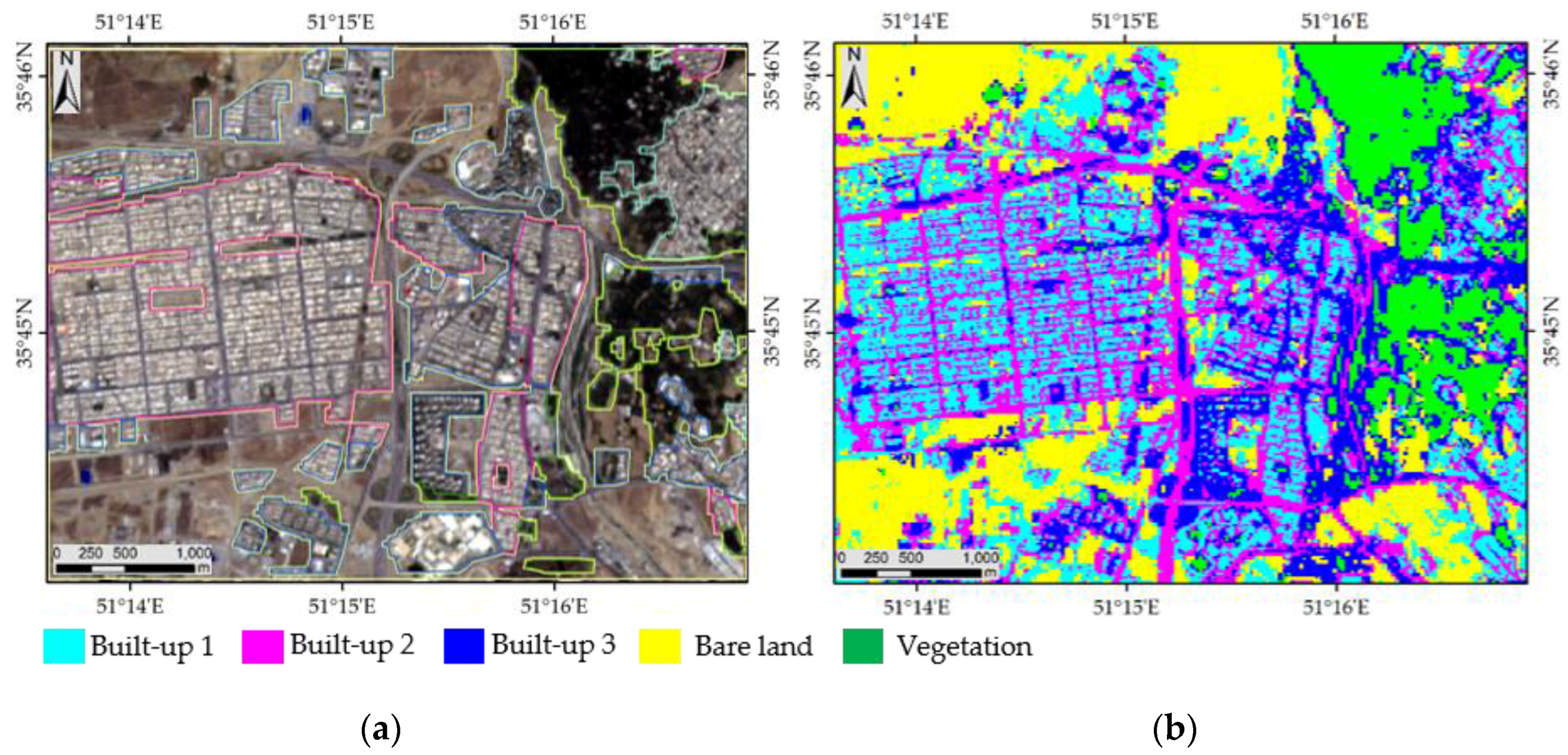

To validate the classification results, we prepared confusion matrices using the truth data. Figure 7a gives a closer look at the validation area, which is outlined by a black frame in Figure 4 and Figure 6b.

Table 2 presents the confusion matrix results for Landsat 8 using SVM and ML classification. SVM gave an overall accuracy of 41.1% and a kappa coefficient of 0.26, while ML gave 35.3% and 0.22. Table 2 also lists the producer and user accuracies. The producer accuracy is the fraction of truth pixels of a given land cover class that were correctly classified, and the user accuracy is the fraction of pixels assigned to a class that were correctly classified. SVM thus provided higher accuracy than ML. A closer look at the SVM result is shown in Figure 7b: several classes were misclassified, for example built-up 2, which was mostly classified as built-up 1. Therefore, the Landsat 8 image does not appear appropriate for classifying the land cover of the study area, Tehran.
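The accuracy measures defined above can all be derived from a single confusion matrix. The sketch below assumes a layout with rows as classified classes and columns as truth classes (the tables in this study may use the transpose), and uses a hypothetical three-class matrix rather than any of the study's tables.

```python
def accuracy_report(matrix):
    """Overall accuracy, kappa, producer and user accuracies.

    Assumed layout: matrix[i][j] = number of pixels classified as class i
    whose truth class is j (rows = classification, columns = truth).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    diag = [matrix[i][i] for i in range(n)]
    row_totals = [sum(matrix[i]) for i in range(n)]                       # classified pixels per class
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # truth pixels per class

    overall = sum(diag) / total
    # Agreement expected by chance, from the row and column totals.
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - chance) / (1 - chance)
    producer = [d / c for d, c in zip(diag, col_totals)]  # correct / truth pixels
    user = [d / r for d, r in zip(diag, row_totals)]      # correct / classified pixels
    return overall, kappa, producer, user

# Hypothetical 3-class confusion matrix (not from this study).
oa, kappa, pa, ua = accuracy_report([[50, 10, 5], [10, 40, 5], [0, 10, 70]])
print(f"OA = {oa:.1%}, kappa = {kappa:.2f}")  # OA = 80.0%, kappa = 0.70
```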

4.2. SAR Data

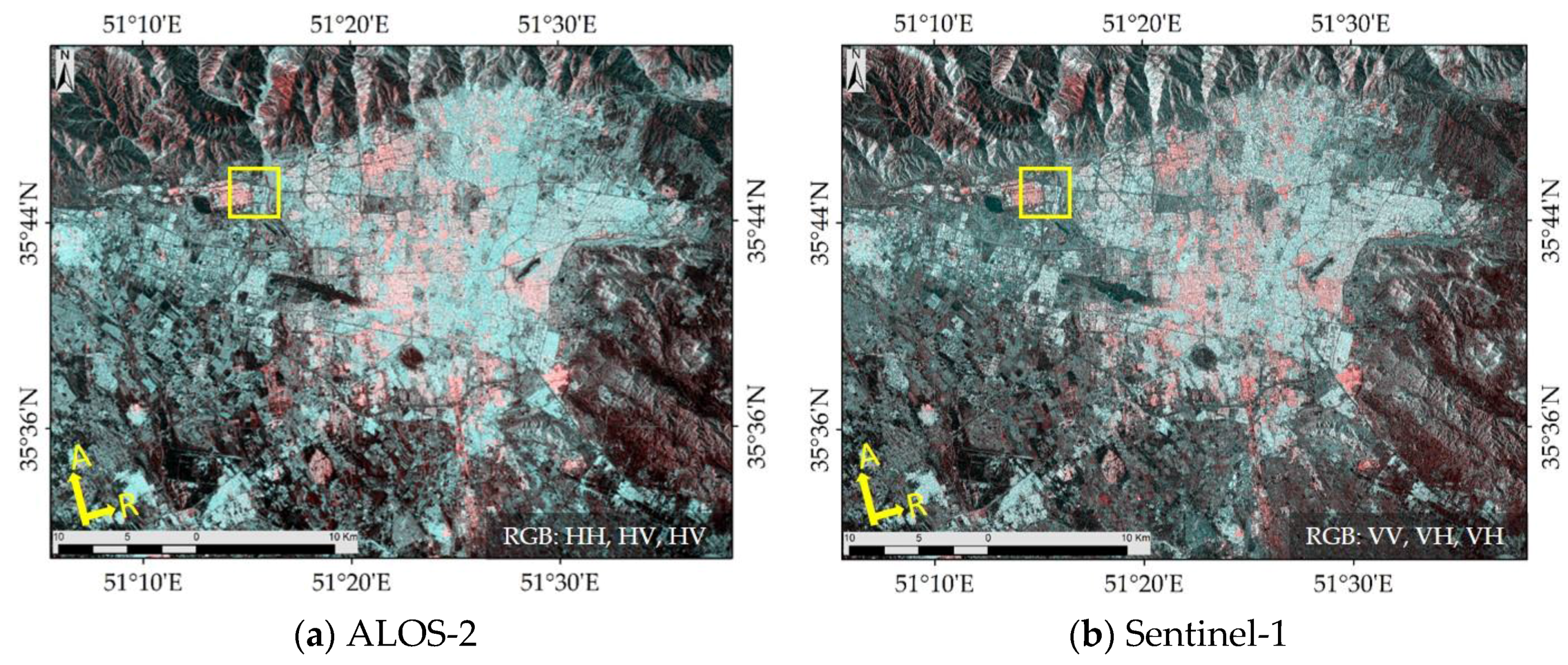

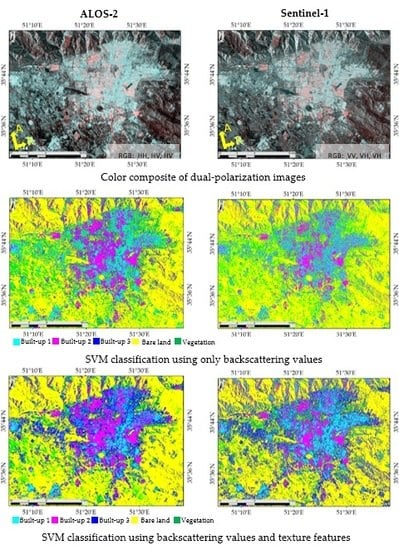

Given the limitations of the Landsat 8 image in classifying land cover and in distinguishing the various built-up classes from bare land in the study area, ALOS-2 and Sentinel-1 images, which capture ground surface information through the backscatter coefficient, were selected to overcome this issue.

Figure 8 shows color composites of the dual-polarization intensity images from the ALOS-2 and Sentinel-1 data. The cyan and red colors indicate differences in the orientation and geometric arrangement of the residential areas in Tehran.

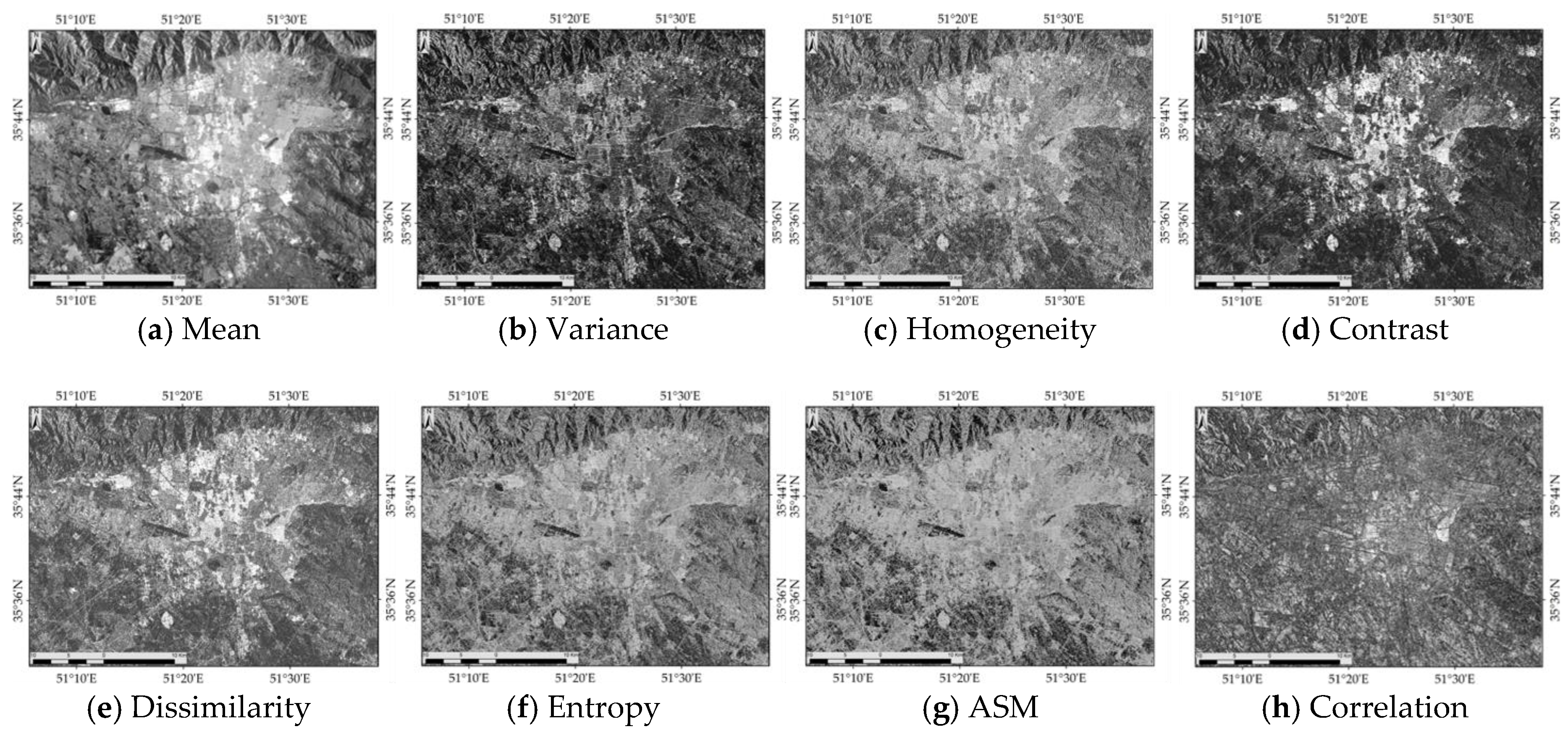

To increase the accuracy of supervised classification from SAR data, texture analysis based on the gray-level co-occurrence matrix (GLCM) was applied to the dual-polarization images. Figure 9 depicts the texture features obtained from the ALOS-2 HH polarization. Texture analysis was applied to each backscatter image (HH and HV for ALOS-2; VV and VH for Sentinel-1), yielding eight texture measures per polarized image: mean, contrast, angular second moment (ASM), correlation, homogeneity, variance, entropy, and dissimilarity. Principal component analysis (PCA) was then applied to reduce the number of texture layers.
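The eight texture measures can be illustrated on a single window. The sketch below builds a symmetric, normalized GLCM for one offset (one pixel to the right) and evaluates the measures with common Haralick-style formulas. The offset, the number of grey levels, and the exact formula variants are assumptions, since the study does not specify them; in practice the measures would be computed in a moving window (for example 13 × 13) centred on every pixel of a quantized backscatter image.

```python
import math

def glcm(window, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM of one window for the offset (dy, dx).

    window: 2-D list of integer grey levels in range(levels).
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(window), len(window[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = window[r][c], window[r2][c2]
                counts[a][b] += 1
                counts[b][a] += 1  # count both directions -> symmetric matrix
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]

def texture_measures(p):
    """Eight GLCM texture measures from a normalized, symmetric GLCM p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, j, v in cells)
    variance = sum((i - mean) ** 2 * v for i, j, v in cells)
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "mean": mean,
        "variance": variance,
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "ASM": sum(v * v for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for i, j, v in cells if v > 0),
        # A constant window has zero variance; 1.0 is the convention assumed here.
        "correlation": cov / variance if variance > 0 else 1.0,
    }

# Hypothetical 4 x 4 window already quantized to 4 grey levels.
window = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
for name, value in texture_measures(glcm(window, levels=4)).items():
    print(f"{name:>13}: {value:.3f}")
```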

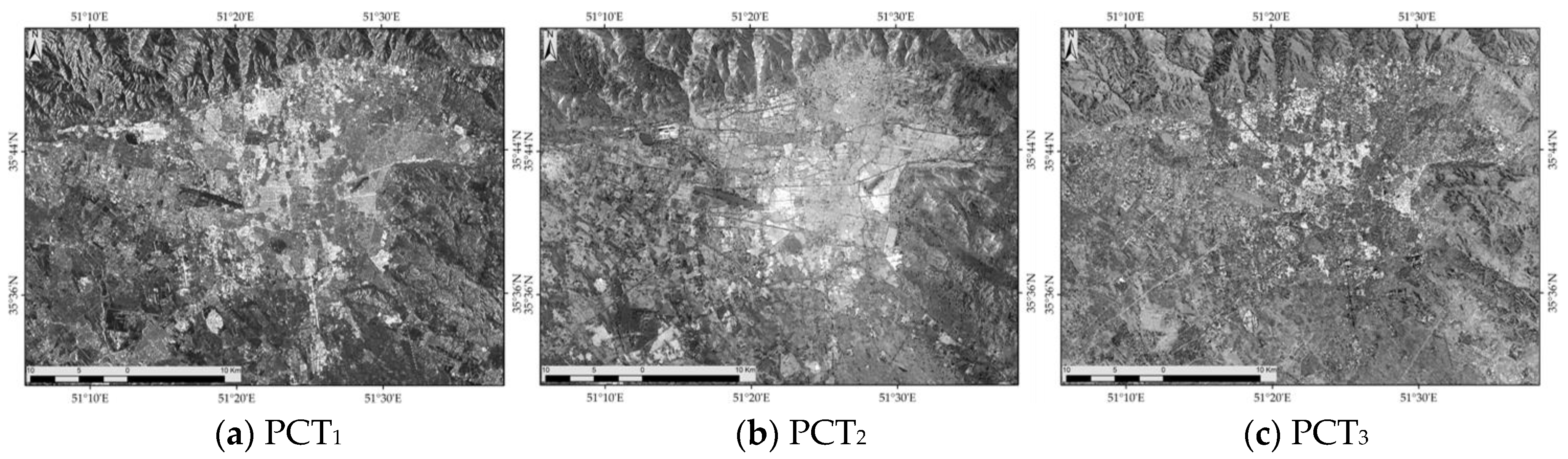

To select the appropriate number of principal components of the texture measures (PCTs) for classification, we examined the region shown by a yellow frame in Figure 8 using the HH and HV polarizations of ALOS-2. SVM classification was carried out on two datasets. The first dataset used the two backscatter images (HH and HV) and all of their PCTs (18 layers in total); the second used the two backscatter images and the first three PCTs of each (eight layers in total). In both datasets, the PCTs were calculated from texture features obtained with a 13 × 13 window. The first three components account for almost 99% of the variance of the input texture measures.
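The layer counts above follow from applying PCA separately to the eight texture measures of each polarization: keeping all components gives 2 + 2 × 8 = 18 layers, and keeping three gives 2 + 2 × 3 = 8 layers. The sketch below shows this reduction and stacking with NumPy. Standardizing each texture measure before PCA is an assumption (the measures have very different scales), and the random arrays are placeholders for real backscatter and texture images.

```python
import numpy as np

def texture_pcs(textures, n_keep=3):
    """PCA of a stack of texture layers.

    textures: array of shape (n_measures, rows, cols), e.g. the eight GLCM
    measures of one polarization. Returns the first n_keep component images
    and the fraction of total variance they explain.
    """
    n, rows, cols = textures.shape
    X = textures.reshape(n, -1).T              # pixels x measures
    X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize each measure (assumption)
    eigvals, eigvecs = np.linalg.eigh(np.cov(X, rowvar=False))
    order = np.argsort(eigvals)[::-1]          # largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    pcs = X @ eigvecs[:, :n_keep]              # project pixels onto leading components
    explained = eigvals[:n_keep].sum() / eigvals.sum()
    return pcs.T.reshape(n_keep, rows, cols), explained

# Placeholder data: two backscatter images and eight texture layers for each.
rng = np.random.default_rng(1)
rows, cols = 64, 64
hh, hv = rng.random((rows, cols)), rng.random((rows, cols))
hh_tex, hv_tex = rng.random((8, rows, cols)), rng.random((8, rows, cols))

hh_pcs, hh_frac = texture_pcs(hh_tex)
hv_pcs, hv_frac = texture_pcs(hv_tex)
stack = np.concatenate([hh[None], hv[None], hh_pcs, hv_pcs])
print(stack.shape)  # (8, 64, 64)
```

Because the placeholder layers are uncorrelated noise, the explained fraction here is far below the roughly 99% reported for real texture measures, which are strongly correlated with each other.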

Table 3 shows the overall accuracy and kappa coefficient obtained from classifying the two datasets. The two differ by only 1.26% in overall accuracy and 0.02 in kappa coefficient. Although the dataset with all PCTs achieved slightly higher accuracy, its processing time was significantly longer than that of the second dataset. Therefore, only the first three principal components were used in this study.

Figure 10 illustrates the first three PCTs of the HH polarization. The ML and SVM classifications were performed using the same fifteen training samples shown in Figure 4. As before, the results of both ML and SVM are compared against the truth data to determine which method produces the highest accuracy.

The window size is an important parameter of the texture measures. In this study, window sizes from 3 × 3 to 21 × 21 were evaluated to determine the most suitable one.
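A window-size sweep of this kind can be sketched as follows. Odd sizes are assumed so that each window has a centre pixel, giving ten candidates from 3 × 3 to 21 × 21. The `evaluate_kappa` callable is hypothetical: it stands in for the full pipeline (texture extraction, PCA, classification, accuracy assessment) and is not part of the study. Clipping windows at the image border is also an assumption.

```python
def candidate_windows(smallest=3, largest=21):
    """Odd square window sizes, so every window has a centre pixel."""
    return list(range(smallest, largest + 1, 2))

def window_at(image, row, col, size):
    """The size x size neighbourhood centred on (row, col).

    Windows are clipped at the image border (edge handling is an assumption).
    """
    half = size // 2
    return [r[max(col - half, 0): col + half + 1]
            for r in image[max(row - half, 0): row + half + 1]]

def sweep(evaluate_kappa, sizes=None):
    """Score every window size and return the best one with all scores.

    evaluate_kappa: hypothetical callable mapping a window size to the kappa
    coefficient of the resulting classification.
    """
    sizes = sizes or candidate_windows()
    scores = {w: evaluate_kappa(w) for w in sizes}
    return max(scores, key=scores.get), scores

# Synthetic stand-in scoring function, for illustration only.
best, scores = sweep(lambda w: -abs(w - 9))
print(best)  # 9
```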

Figure 11 shows the kappa coefficients for different window sizes, both for classification using only the backscatter values of ALOS-2 (HH, HV) and Sentinel-1 (VV, VH) and for classification using the layer stack of the backscatter values and their PCTs. Because the number of pixels in each land cover class in the truth data is not equal, the kappa coefficient rather than the overall accuracy is plotted in Figure 11. The solid lines represent SVM results and the dashed lines ML results, for both ALOS-2 and Sentinel-1. The graph shows that introducing the texture measures increases the kappa coefficient and that SVM outperforms ML. For ALOS-2, the gap between the two methods widens significantly as the texture window size increases. The highest kappa coefficient was obtained for ALOS-2 with SVM and a 13 × 13 window, as indicated by the green arrow in the graph. For Sentinel-1, the highest kappa coefficient was obtained with SVM and an 11 × 11 window. Overall, ALOS-2 performed better than Sentinel-1.
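Why kappa is preferred when class sizes differ can be shown with a deliberately poor classifier. In the hypothetical truth data below (not from this study), 90 pixels are built-up and 10 are vegetation; a classifier that labels every pixel as built-up reaches 90% overall accuracy, yet its kappa is zero because that agreement is exactly what chance predicts from the class totals. The matrix layout (rows = classified, columns = truth) is an assumption.

```python
def oa_and_kappa(matrix):
    """Overall accuracy and Cohen's kappa from a square confusion matrix
    (rows = classified class, columns = truth class)."""
    n = len(matrix)
    total = sum(map(sum, matrix))
    observed = sum(matrix[i][i] for i in range(n)) / total
    rows = [sum(r) for r in matrix]
    cols = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    chance = sum(r * c for r, c in zip(rows, cols)) / total ** 2
    return observed, (observed - chance) / (1 - chance)

# Every pixel labelled built-up: 90 correct built-up, 10 vegetation missed.
oa, kappa = oa_and_kappa([[90, 10], [0, 0]])
print(f"OA = {oa:.0%}, kappa = {kappa:.2f}")  # OA = 90%, kappa = 0.00
```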

Figure 12 illustrates the SVM classification of Tehran using the layer stack of backscatter values and their PCTs for both ALOS-2 and Sentinel-1. The PCTs were calculated using window sizes of 13 × 13 and 11 × 11 for ALOS-2 and Sentinel-1, respectively.

Further evaluation is performed only for the datasets that produced the highest kappa coefficient. For brevity, the classification result from the ALOS-2 backscatter values and their first three PCTs with a 13 × 13 window is referred to as ALOS-2-PCT. Similarly, the classification result from the Sentinel-1 backscatter values and their first three PCTs with an 11 × 11 window is referred to as Sentinel-1-PCT.

Tables 4, 5, and 6 present the confusion matrices for the Landsat 8 image, ALOS-2 (backscatter values only), and ALOS-2-PCT, respectively. Tables 7 and 8 present the confusion matrices for Sentinel-1 and Sentinel-1-PCT, respectively. The tables show a remarkable improvement in both overall accuracy and kappa coefficient for the datasets that include texture measures compared with the backscatter-only datasets and Landsat 8. Landsat 8 produced an overall accuracy of 41.1% and a kappa coefficient of 0.25.

Tables 5 and 6 show that the overall accuracy and kappa coefficient of ALOS-2-PCT are higher than those of ALOS-2: when the texture measures from a 13 × 13 window were included, the overall accuracy increased from 53.0% to 69.7% and the kappa coefficient from 0.38 to 0.58. Likewise, Sentinel-1-PCT shows a higher overall accuracy and kappa coefficient than Sentinel-1: Tables 7 and 8 show an increase in overall accuracy from 45.7% to 54.2% and in kappa coefficient from 0.29 to 0.41 when the texture measures from an 11 × 11 window were included.

The confusion matrices also include the producer and user accuracies. The diagonal elements of these tables give the correctly classified pixels in each land cover class. The comparison shows that the highest producer accuracies were obtained when the texture features were included (Tables 6 and 8). Tables 4 and 5 show that, when SAR backscatter values were used for classification instead of Landsat 8, the producer accuracy increased for the bare land, built-up 1, and built-up 2 classes but decreased for the built-up 3 and vegetation classes, from 27.1% and 63.8% to 15.6% and 59.6%, respectively. However, when texture features are included, all land cover classes improve. Thus, in our study area, classification using SAR backscatter and texture features is more accurate than classification using Landsat 8 or SAR backscatter alone. Furthermore, for ALOS-2-PCT the user accuracy increased in all classes except vegetation. For Sentinel-1, the user accuracy of the dataset including the texture measures improved in all classes compared with the backscatter-only dataset, but showed no improvement for bare land and vegetation compared with the Landsat 8 dataset.

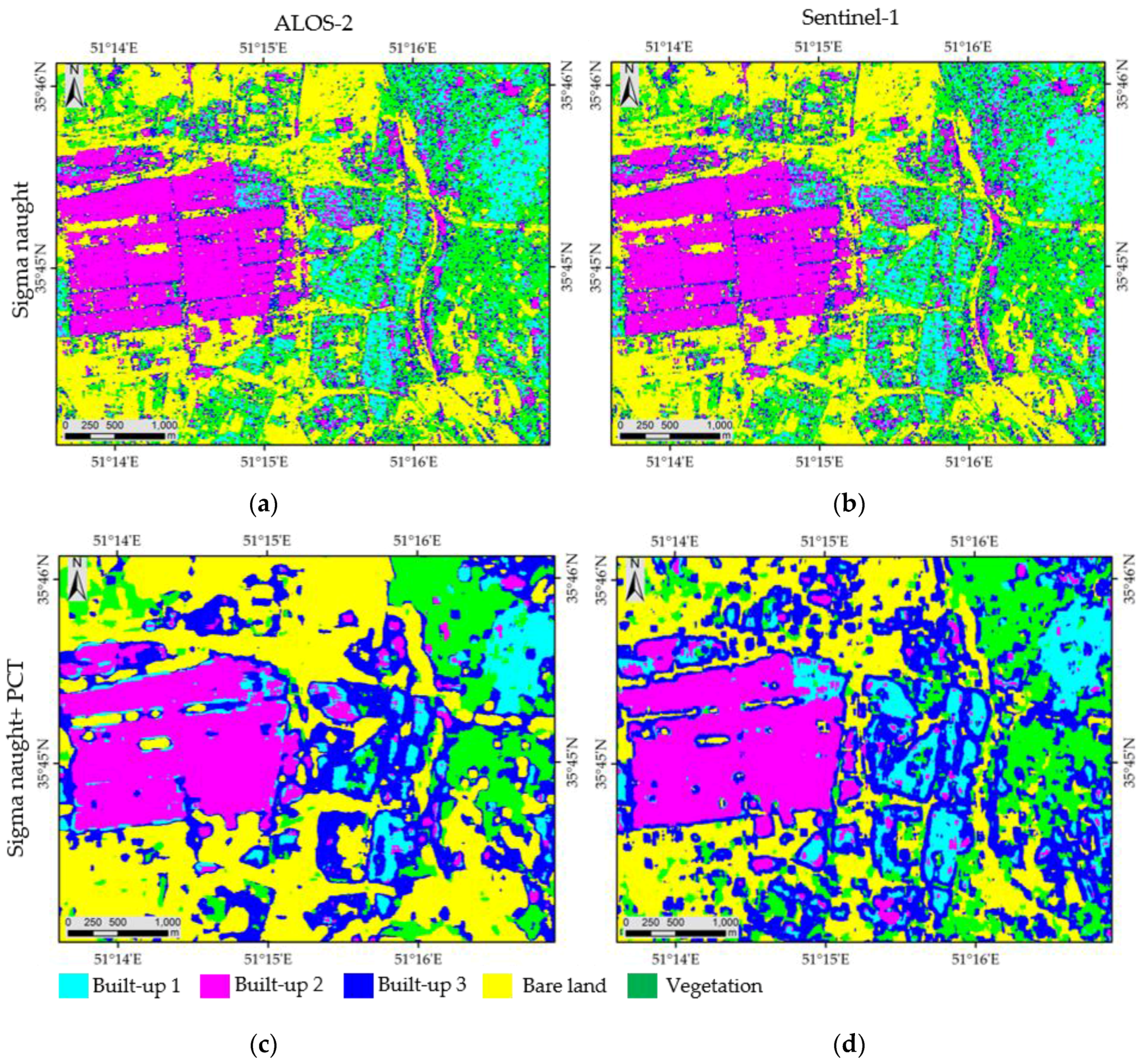

Figure 13 shows a closer view of the SVM classification for ALOS-2, Sentinel-1, ALOS-2-PCT, and Sentinel-1-PCT; the location of the area is shown in Figure 12. The accuracy improvement described above is visually apparent in this figure. Most notably, when texture measures of the SAR data are used for classification, the producer accuracy improves significantly; consequently, noise and misclassified pixels decrease for all classes, and the classes become more uniform than with SAR backscatter values alone or with optical data.