Design of a Huggable Social Robot with Affective Expressions Using Projected Images

Abstract

1. Introduction

2. Designing Pepita

2.1. The Form of Pepita

2.2. The Function of Pepita

2.2.1. Affective Expressions Using Projected Images

2.2.2. Sensing Tangible Affective Expressions

3. System Overview

3.1. Robot’s Components

3.2. Hug Detection Method

4. Performance Evaluation

4.1. Exploring the Design of Pepita

4.1.1. Questionnaire Overview

Based on your first impression, express using the following scale how acceptable you find the robot’s expressions of emotions?

4.1.2. Results

- Positive aspects (mentions): Shape (8), Projector (7), Color (7), Cute (5), Size (5), Flowers (5), Tail (4), Kind (3), Huggable (3), Interactive (2)

- Negative aspects (mentions): Scary eyes (9), Shape (7), Face (6), Texture (6), Artificial (4), Appearance (4), Tail (3), Hard (2), Not huggable (2), Quality (2)

4.2. Hug Detection Performance

4.2.1. Experiment Setup

- Hug,

- Press with both hands on the right and left sides,

- Press with both hands on the upper and lower areas.

4.2.2. Results

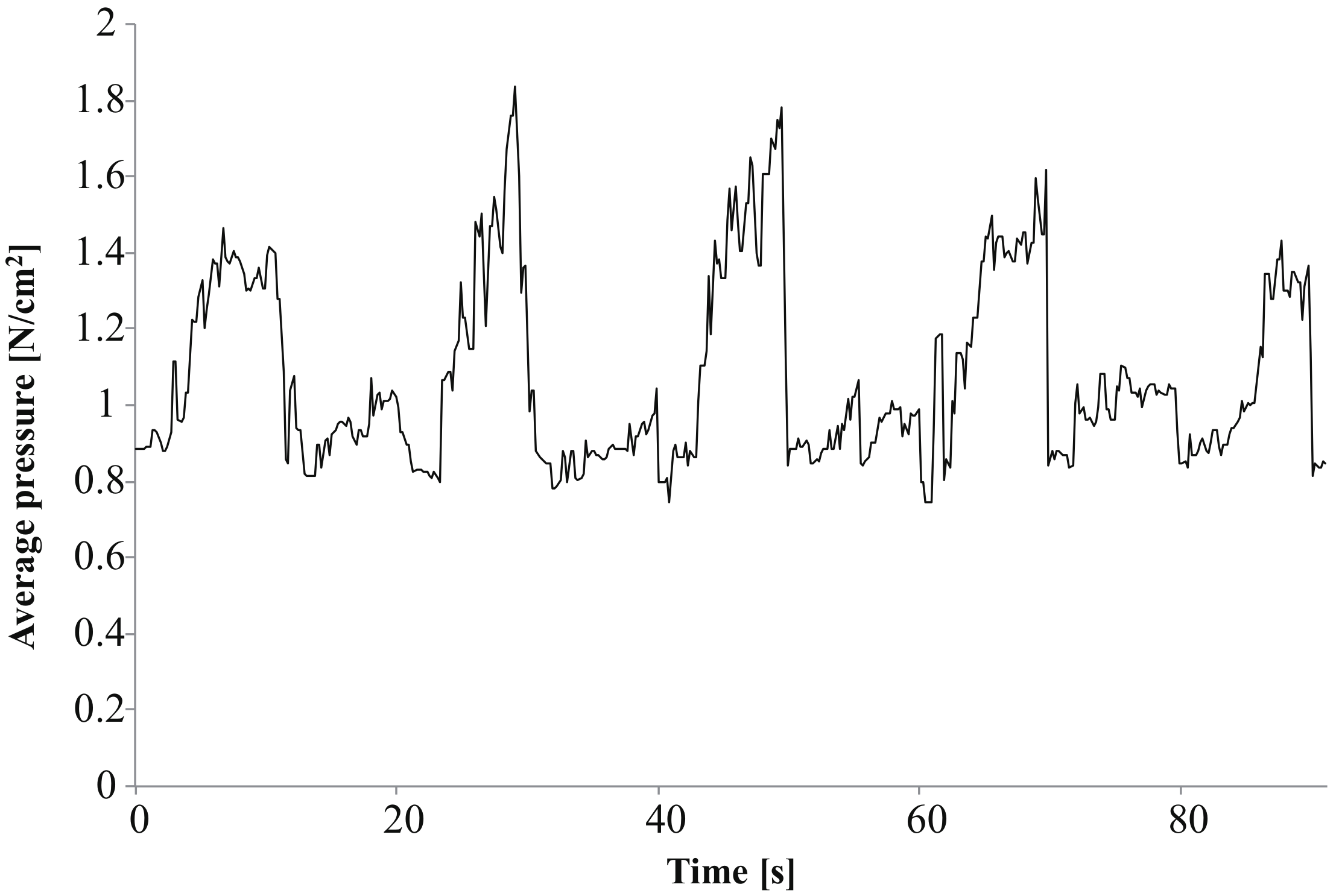

4.3. Force Test for the Hug Sensor

Results

4.4. Tail Pulling Detection Performance

4.4.1. Experiment Setup

- Pull,

- Shake,

- Grasp.

4.4.2. Results

4.5. Affective Feedback Using Projected Avatars

4.5.1. Questionnaire Overview

- Can each visual element displayed by the robotic device represent the intended affective expression?

- Comparing the LED and projected avatars, which is more efficient at representing the selected affective expression?

- When the robot is projecting avatars, is it perceived as one entity (the robot and its avatar) or two separate entities (a robot and an avatar)?

- In my general impression, I consider that the perceived behavior of the robot makes reference to a happy-like behavior.

- In my general impression, I consider that the perceived behavior of the robot makes reference to a sad-like behavior.

- I perceive the robot body interface as two entities: an avatar and a robot,

- I perceive the robot body interface as one entity: the robot and its avatar.

4.5.2. Results

5. Discussion

5.1. Exploring the Design of Pepita

5.2. Hug and Tail Pulling Detection Performance

5.3. Affective Feedback Using Projected Avatars

6. Application Scenarios

6.1. Description of the Social Context of Pepita

- Jane is a college student living away from her family. Every morning before going to the university, she leaves a message for her parents by hugging her robot. Jane observes her avatar displaying a happy expression and then sets the robot on the sofa. When she comes back home in the evening, she sees her parents’ avatar displaying a sad expression, which she understands as “I received a hug from my parents a long time ago”. She takes her Pepita and hugs it, observes her avatar appearing with a happy expression, and so conveys to her parents that she is now home.

- On the weekend, Jane is talking with her mother by phone, sharing stories of recent days. She takes her robot and hugs it, sending a message. Her father, who is in the living room watching TV, observes their robot reacting by showing the “happy” avatar of Jane’s robot. He then asks to speak to her to say hello and, while talking to her, hugs the robot back.

6.2. Combining Robots with Projectors

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Questionnaire for Exploring the Design of Pepita

- Page 1: The questionnaire consists of different photos and videos, and you will be asked to give your impressions. Before starting, please be aware of the following: (1) In case you do not understand an English word, please refer to a dictionary to be sure of the meaning before answering, (2) Look at the picture and answer based on your first impressions, (3) Read all the sentences and instructions, (4) The entire questionnaire will take approx. 15 min.

- Page 2: Consent to participate in the research.

- Page 3: Nationality, age, gender.

- Page 4: Part 1: This section will introduce different huggable robots. In other words, a robot that can sense hugging actions. These robots differ in size, appearance, and shape. You will be asked to rate them using a scale.

- Page 5: Please rate the following statements for each presented picture. (Photo of 1 of the 4 huggable robots, counterbalanced order) The robot looks huggable (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks easy to hug (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks appealing to hug (5 points scale, from “strongly disagree” to “strongly agree”).

- Page 6: (Photo of 1 of the 4 huggable robots, counterbalanced order) The robot looks huggable (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks easy to hug (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks appealing to hug (5 points scale, from “strongly disagree” to “strongly agree”).

- Page 7: (Photo of 1 of the 4 huggable robots, counterbalanced order) The robot looks huggable (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks easy to hug (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks appealing to hug (5 points scale, from “strongly disagree” to “strongly agree”).

- Page 8: (Photo of 1 of the 4 huggable robots, counterbalanced order) The robot looks huggable (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks easy to hug (5 points scale, from “strongly disagree” to “strongly agree”). The robot looks appealing to hug (5 points scale, from “strongly disagree” to “strongly agree”).

- Page 9: When you rated the different huggable robots, how relevant were these features for your answers? (5 points scale, from unimportant to extremely important) (1) The shape of the robot body, (2) Size of the robot body, (3) Weight of the robot body, (4) Texture of the skin, (5) Softness of the robot body, (6) Appearance of the robot.

- Page 10: Part 2: When interacting with people, robots need to understand and convey a representation of emotions. In this section, you will watch four different videos in succession displaying different robot’s expressions. Then, using some photos as reference, you will be asked to give your general impressions about them.

- Page 11: (video of 1 of the 4 robots displaying facial expression by a display or with a mechanical face, counterbalanced order).

- Page 12: (Photo showing the previous robot’s expressions) Based on your first impression, please express using the following scale how acceptable for you is the robot’s expressions of emotions? (5 points scale using emoticons from sad to happy).

- Page 13: (video of 1 of the 4 robots displaying facial expression by a display or with a mechanical face, counterbalanced order).

- Page 14: (Photo showing the previous robot’s expressions) Based on your first impression, express using the following scale how acceptable for you is the robot’s expressions of emotions? (5 points scale using emoticons from sad to happy).

- Page 15: (video of 1 of the 4 robots displaying facial expression by a display or with a mechanical face, counterbalanced order).

- Page 16: (Photo showing the previous robot’s expressions) Based on your first impression, express using the following scale how acceptable for you is the robot’s expressions of emotions? (5 points scale using emoticons from sad to happy).

- Page 17: (video of 1 of the 4 robots displaying facial expression by a display or with a mechanical face, counterbalanced order).

- Page 18: (Photo showing the previous robot’s expressions) Based on your first impression, express using the following scale how acceptable for you is the robot’s expressions of emotions? (5 points scale using emoticons from sad to happy).

- Page 19: Part 3: In this section, you will be asked to give your general impression about the social robot companion Pepita. This robotic device was designed to be placed at home and interact with people in everyday life. (Video of a person interacting with Pepita)

- Page 20: (Photo of Pepita) Please express your impressions of Pepita using the following scale: (7 points scale with 8 items, from Awful to Nice, from Machinelike to Humanlike, from Artificial to Lifelike, from Unpleasant to Pleasant, from Fake to Natural, from Unfriendly to Friendly, from Unconscious to Conscious, from Unkind to Kind). This question was followed by two blank spaces to collect the features of Pepita that positively and negatively impacted the answers.
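The questionnaire above presents the four huggable robots in "counterbalanced order" but does not say which scheme was used. As an illustration only, the following minimal sketch assumes a cyclic Latin square, in which each robot appears exactly once in each presentation position across four orders. The function names and the round-robin assignment of participants to orders are hypothetical and are not taken from the study.

```python
from typing import List


def cyclic_latin_square(items: List[str]) -> List[List[str]]:
    """Return len(items) orders; each item occupies each position exactly once."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for_participant(items: List[str], participant_index: int) -> List[str]:
    """Assign orders round-robin so every order is used about equally often."""
    square = cyclic_latin_square(items)
    return square[participant_index % len(items)]


# Robot names as listed in the results table; the scheme itself is an assumption.
ROBOTS = ["Pepita", "Probo", "The huggable", "The hug"]

if __name__ == "__main__":
    for participant in range(4):
        print(participant, order_for_participant(ROBOTS, participant))
```

A cyclic square balances position but not which robot precedes which; a design that also balances first-order carryover would need a different construction.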

Appendix B. Questionnaire for Exploring the Affective Feedback Using Projected Avatars

- Page 1: The questionnaire consists of two sets of two videos followed by some questions: (1) The videos display Pepita, a robotic device displaying different visual feedback; (2) Then, you will be asked about your perception and impressions, (3) The entire questionnaire will take approx. 10 min.

- Page 2: Consent to participate in the research.

- Page 3: Nationality, age, gender.

- Page 4: Task overview.

- Page 5: Case 1: In the following video, the robot is displaying light color patterns. (You can play this video multiple times) (Player showing Projector or LED condition, happy or sad. All the options are counterbalanced)

- Page 6: Case 2: In the following video, the robot is displaying light color patterns. (You can play this video multiple times) (Player showing Projector or LED condition, happy or sad. All the options are counterbalanced)

- Page 7: Please select the option that reflects your immediate response to each statement. Do not think too long about each statement. Make sure you answer every question. (Photo of case 1) As a total impression, I consider that the perceived behavior of the robot makes reference to the following statements: Happy-like behavior (5 points scale, from “strongly disagree” to “strongly agree”). (Photo of case 2) As a total impression, I consider that the perceived behavior of the robot makes reference to the following statements: Happy-like behavior (5 points scale, from “strongly disagree” to “strongly agree”).

- Page 8: Case 3: In the following video, the robot is displaying light color patterns. (You can play this video multiple times) (Player showing Projector or LED condition, happy or sad. All the options are counterbalanced)

- Page 9: Case 4: In the following video, the robot is displaying light color patterns. (You can play this video multiple times) (Player showing Projector or LED condition, happy or sad. All the options are counterbalanced)

- Page 11: Please select the option that reflects your immediate response to each statement. Do not think too long about each statement. Make sure you answer every question. (Photo of case 3) As a total impression, I consider that the perceived behavior of the robot makes reference to the following statements: Happy-like behavior (5 points scale, from “strongly disagree” to “strongly agree”). (Photo of case 4) As a total impression, I consider that the perceived behavior of the robot makes reference to the following statements: Happy-like behavior (5 points scale, from “strongly disagree” to “strongly agree”).

- Page 12: (Photo of Pepita projecting avatars) From the following statements, choose the one that most closely reflects your perception about the robot body interface: (1) I perceive the robot body interface as two entities: an avatar and a robot. (2) I perceive the robot body interface as one entity: the robot and its avatar.


| n = 52 | Looks Huggable (mean ± SD) | Looks Easy to Hug (mean ± SD) | Looks Appealing (mean ± SD) |
|---|---|---|---|
| Pepita | 3.21 ± 1.04 | 3.56 ± 1.05 | 2.77 ± 1.19 |
| Probo | 3.10 ± 1.23 | 3.31 ± 1.12 | 1.96 ± 0.96 |
| The huggable | 3.88 ± 0.95 | 3.83 ± 1.01 | 3.52 ± 1.18 |
| The hug | 2.83 ± 1.05 | 2.96 ± 1.14 | 2.42 ± 1.20 |
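The ratings above are reported as mean ± SD on a 5-point scale. As a rough illustration of how such a summary can be derived from raw responses, the sketch below uses Python's standard library. The response list is hypothetical and is not the study's data, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text here does not state which estimator was used.

```python
from statistics import mean, stdev
from typing import Sequence


def summarize_likert(responses: Sequence[int], low: int = 1, high: int = 5) -> str:
    """Format Likert responses as 'mean ± SD' using the sample standard deviation."""
    if len(responses) < 2:
        raise ValueError("need at least two responses to compute a sample SD")
    if any(r < low or r > high for r in responses):
        raise ValueError(f"responses must lie in [{low}, {high}]")
    return f"{mean(responses):.2f} ± {stdev(responses):.2f}"


if __name__ == "__main__":
    # Hypothetical ratings for one robot on one item (illustrative only).
    hypothetical_ratings = [3, 4, 2, 5, 3, 4, 3, 2]
    print(summarize_likert(hypothetical_ratings))
```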

| n = 26 | Projector, Happy | Projector, Sad | LED, Happy | LED, Sad |
|---|---|---|---|---|
| Score as “Happy” (mean ± SD) | | | | |
| Score as “Sad” (mean ± SD) | | | | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nunez, E.; Hirokawa, M.; Suzuki, K. Design of a Huggable Social Robot with Affective Expressions Using Projected Images. Appl. Sci. 2018, 8, 2298. https://doi.org/10.3390/app8112298