Within and Across-Language Comparison of Vocal Emotions in Mandarin and English

Abstract

1. Introduction

2. Methods

2.1. Speech Materials

2.2. Subjects

2.3. Recording Procedure

2.4. Listening Tests

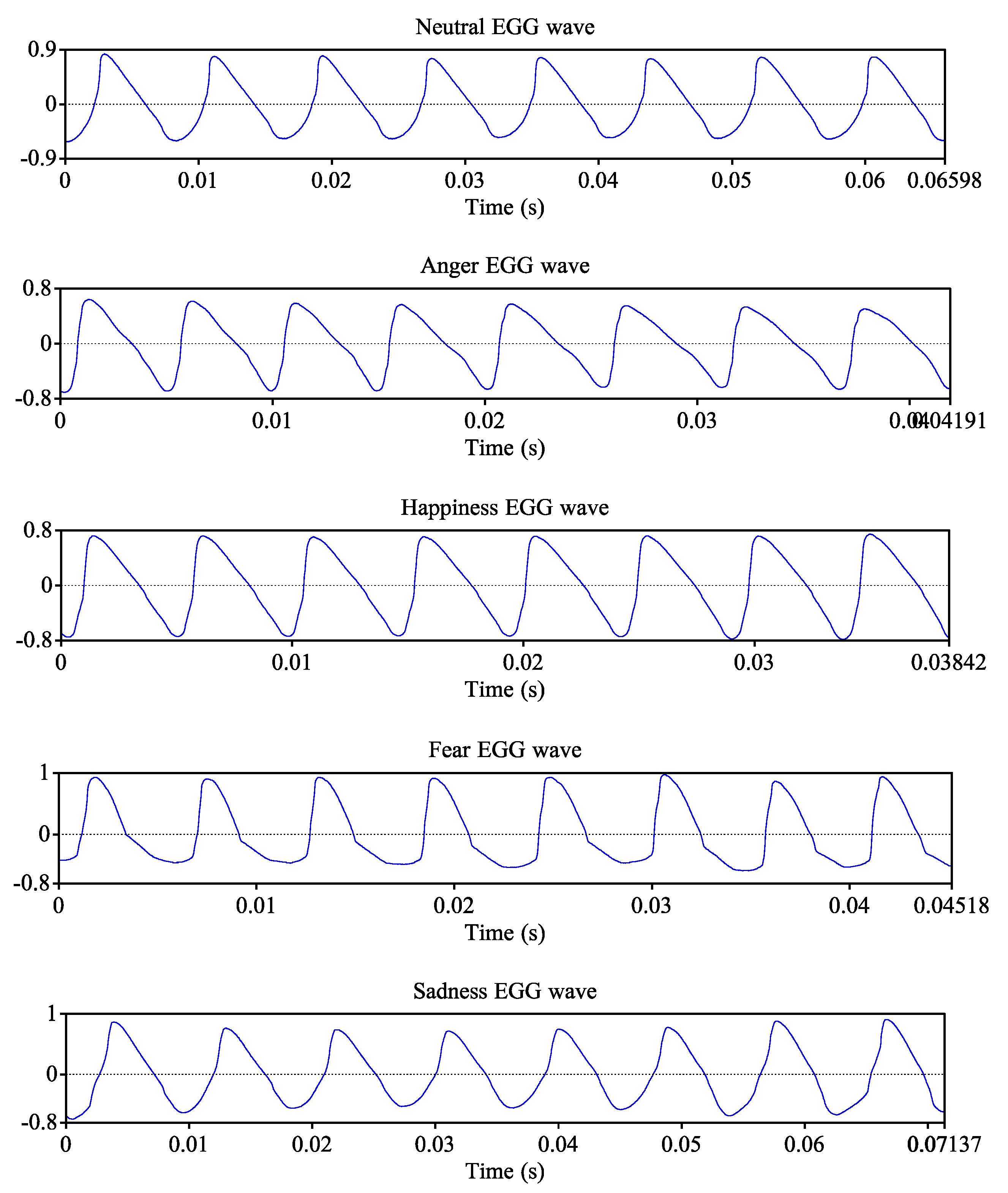

2.5. Measurements

3. Results

3.1. Within-Language Comparison of Vocal Emotions

3.1.1. Mandarin

3.1.2. English

3.2. Across-Language Comparison of Vocal Emotions: Mandarin versus English

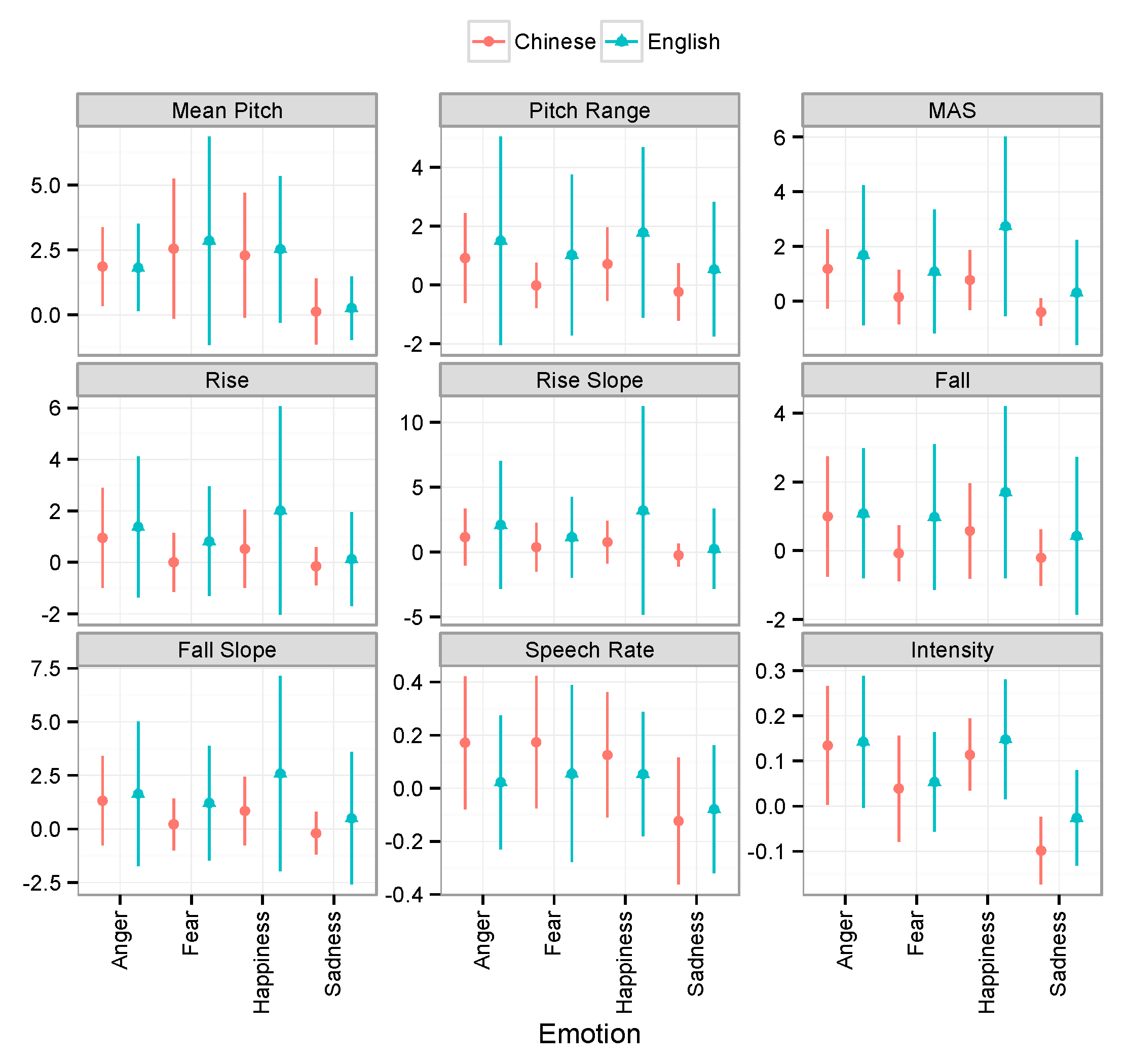

3.2.1. Prosodic Cues for Encoding Emotions in Mandarin and English

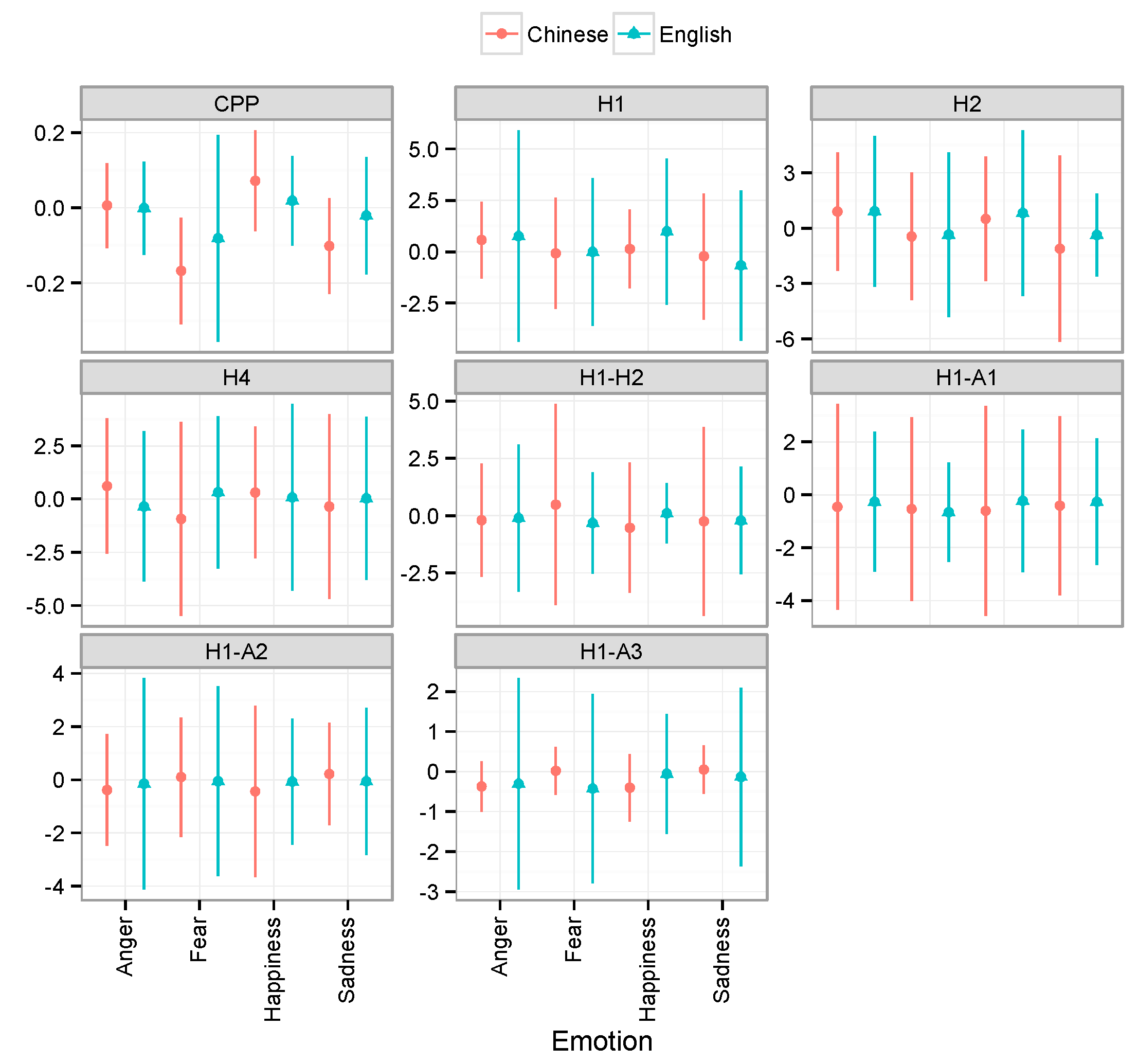

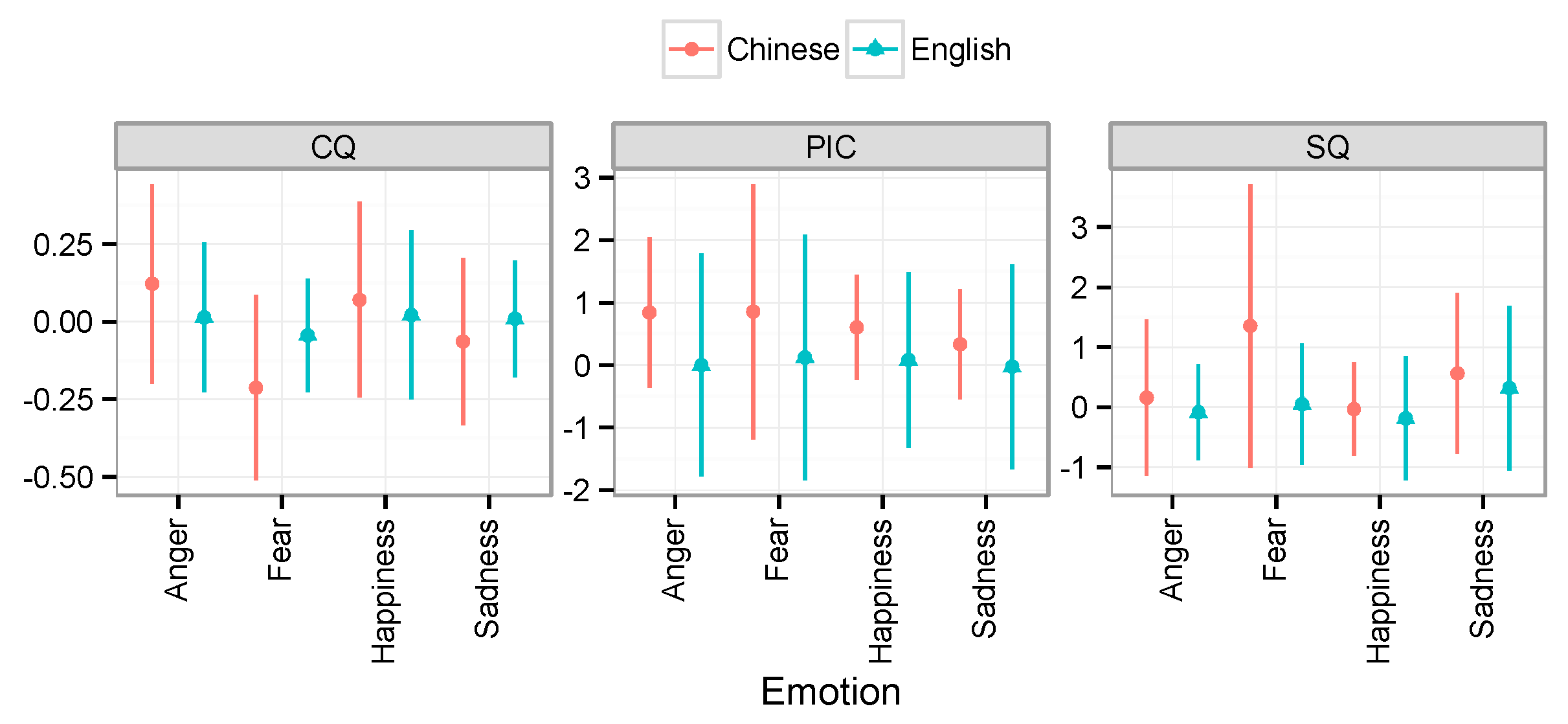

3.2.2. Phonation Cues for Encoding Emotions in Mandarin and English

4. Discussion and Conclusions

4.1. Acoustic and Physiological Patterns of Each Vocal Emotion in Mandarin and English

4.2. Multidimensionality of the Acoustic Realization of Vocal Emotions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Emotional Context | Mandarin | English |
|---|---|---|
| Happiness | A:你看起来很开心啊. | A: You look so happy. |
| | B:我刚收到学校的邮件.是好消息哦!(高兴地说)导师不来参加我的汇报了.他是系里最严厉的老师,他会指出学生报告中的任何一点儿小错误. | B: I just got an email from school. (In a happy mood) My advisor won’t come to my presentation. He is the toughest advisor in the department and points out every little mistake during presentations. |
| Anger | A:我听说你今天要做报告.你的导师也来参加吧? | A: I heard you are giving a presentation today. Your advisor is coming today. Right? |
| | B:他不来!(生气地说)导师不来参加我的汇报了.他都不关心我做什么! | B: NO! (In an angry mood) My advisor won’t come to my presentation. He never keeps his promises. |
| Sadness | A:你看起来很伤心,怎么了? | A: You seem sad. What’s the matter? |
| | B:我为了准备这次汇报很辛苦,就想在导师面前留个好印象.但是我刚收到导师的邮件说他有事.(伤心地说)导师不来参加我的汇报了. | B: I’ve been working so hard for this presentation but I just got an email from my advisor that he is not feeling well. (In a sad mood), My advisor won’t come to my presentation. |
| Fear | A:你怎么了? | A: What’s wrong? |
| | B:我今天要做汇报,本来导师在场,想着被问蒙了的时候他可以帮帮我.但是我收到导师的邮件说他儿子生病了.(害怕地说)导师不来参加我的汇报了.他不在,我汇报的时候更紧张啊. | B: I am giving a presentation today but I got an email from my advisor that his son is now in hospital. (In a fearful mood) My advisor won’t come to my presentation. I am so afraid of giving a talk without him today. |

| Language | Measurement | Anger | Fear | Happiness | Neutral | Sadness |
|---|---|---|---|---|---|---|
| Mandarin | Mean pitch | 0.474 | 0.889 | 0.664 | −1.111 | −0.952 |
| | Pitch range | 1.392 | −0.228 | 1.055 | −0.050 | −0.656 |
| | Mean absolute slope | 1.561 | −0.023 | 0.945 | −0.165 | −0.864 |
| | Rise | 1.363 | −0.206 | 0.648 | 0.006 | −0.417 |
| | Fall | −1.539 | 0.267 | −0.843 | −0.009 | 0.502 |
| | Rise slope | 1.231 | 0.070 | 0.669 | −0.206 | −0.716 |
| | Fall slope | −1.492 | 0.032 | −0.852 | 0.184 | 0.636 |
| | Speech rate | 1.059 | 1.098 | 0.742 | 0.034 | −0.670 |
| | Intensity | 1.157 | 0.056 | 0.954 | −0.308 | −1.466 |
| | CPP | 0.470 | −1.223 | 1.119 | 0.450 | −0.525 |
| | H1 | 0.902 | 0.376 | 0.757 | 0.063 | −1.352 |
| | H2 | 1.103 | −0.401 | 0.956 | 0.256 | −1.088 |
| | H4 | 1.011 | −0.387 | 1.038 | 0.539 | −1.100 |
| | H1-H2 | −0.694 | 1.380 | −0.580 | −0.325 | −0.110 |
| | H1-A1 | −0.864 | 0.872 | −0.668 | 0.065 | 0.084 |
| | H1-A2 | −0.686 | 0.619 | −0.814 | 0.252 | 0.584 |
| | H1-A3 | −0.536 | 0.615 | −0.589 | 0.619 | 0.732 |
| | CQ | 0.947 | −1.230 | 0.630 | 0.225 | −0.218 |
| | PIC | 0.710 | 0.537 | 0.365 | −0.920 | −0.394 |
| | SQ | −0.431 | 1.091 | −0.566 | −0.445 | 0.180 |
| English | Mean pitch | 0.350 | 0.903 | 0.785 | −1.189 | −0.849 |
| | Pitch range | 0.542 | −0.054 | 1.008 | −0.953 | −0.542 |
| | Mean absolute slope | 0.433 | −0.065 | 1.279 | −0.927 | −0.721 |
| | Rise | 0.179 | −0.170 | 0.430 | −0.220 | −0.219 |
| | Fall | −0.309 | −0.064 | −0.937 | 0.813 | 0.497 |
| | Rise slope | 0.475 | −0.090 | 0.905 | −0.702 | −0.587 |
| | Fall slope | −0.324 | −0.059 | −0.994 | 0.821 | 0.556 |
| | Speech rate | 0.058 | 0.254 | 0.233 | −0.039 | −0.506 |
| | Intensity | 0.898 | −0.009 | 0.993 | −0.787 | −1.094 |
| | CPP | 0.158 | −0.632 | 0.463 | 0.084 | −0.073 |
| | H1 | 0.416 | 0.085 | 0.743 | −0.651 | −0.593 |
| | H2 | 0.456 | −0.166 | 0.841 | −0.507 | −0.624 |
| | H4 | 0.494 | −0.323 | 0.769 | −0.359 | −0.581 |
| | H1-H2 | −0.198 | 0.761 | −0.567 | −0.288 | 0.292 |
| | H1-A1 | −0.447 | 0.623 | −0.714 | −0.085 | 0.623 |
| | H1-A2 | −0.408 | 0.240 | −0.513 | 0.030 | 0.651 |
| | H1-A3 | −0.589 | 0.101 | −0.274 | 0.148 | 0.613 |
| | CQ | 0.111 | −0.632 | 0.192 | 0.123 | 0.205 |
| | PIC | −0.158 | 0.153 | 0.162 | 0.230 | −0.388 |
| | SQ | −0.362 | 0.048 | −0.724 | 0.270 | 0.769 |

| Language | Measurement | F-A | H-A | N-A | S-A | H-F | N-F | S-F | N-H | S-H | S-N |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Mandarin | Mean pitch | *** | ** | *** | *** | *** | *** | *** | *** | *** | ** |

| Pitch range | *** | *** | *** | *** | *** | * | *** | *** | *** | *** | |

| Mean absolute slope | *** | *** | *** | *** | *** | . | *** | *** | *** | *** | |

| Rise | *** | *** | *** | *** | *** | . | . | *** | *** | *** | |

| Fall | *** | *** | *** | *** | *** | ** | * | *** | *** | *** | |

| Rise slope | *** | *** | *** | *** | *** | ** | *** | *** | *** | *** | |

| Fall slope | *** | *** | *** | *** | *** | *** | *** | *** | *** | ||

| Speech rate | *** | *** | *** | *** | *** | *** | *** | *** | *** | ||

| Intensity | *** | *** | *** | *** | *** | *** | *** | *** | *** | *** | |

| CPP | *** | *** | *** | *** | *** | *** | *** | *** | *** | ||

| H1 | *** | *** | *** | *** | *** | *** | *** | *** | *** | ||

| H2 | *** | . | *** | *** | *** | *** | *** | *** | *** | *** | |

| H4 | *** | *** | *** | *** | *** | *** | *** | *** | *** | ||

| H1-H2 | *** | *** | *** | *** | *** | *** | ** | *** | * | ||

| H1-A1 | *** | *** | *** | *** | *** | *** | *** | *** | |||

| H1-A2 | *** | *** | *** | *** | ** | *** | *** | ** | |||

| H1-A3 | *** | *** | *** | *** | *** | *** | |||||

| CQ | *** | *** | *** | *** | *** | *** | *** | *** | *** | *** | |

| PIC | ** | *** | *** | *** | *** | *** | *** | *** | |||

| SQ | *** | *** | *** | *** | *** | *** | *** | ||||

| English | Mean pitch | *** | *** | *** | *** | *** | *** | *** | *** | *** | |

| Pitch range | *** | *** | *** | *** | *** | *** | *** | *** | *** | ** | |

| Mean absolute slope | *** | *** | *** | *** | *** | *** | *** | *** | *** | . | |

| Rise | ** | ** | ** | ||||||||

| Fall | . | *** | *** | *** | *** | *** | *** | *** | *** | . | |

| Rise slope | *** | ** | *** | *** | *** | *** | ** | *** | *** | ||

| Fall slope | *** | *** | *** | *** | *** | *** | *** | *** | |||

| Speech rate | ** | *** | *** | . | |||||||

| Intensity | *** | *** | *** | *** | *** | *** | *** | *** | ** | ||

| CPP | *** | *** | *** | ** | ** | ||||||

| H1 | . | . | *** | *** | *** | *** | *** | *** | *** | ||

| H2 | *** | * | *** | *** | *** | * | ** | *** | *** | ||

| H4 | *** | *** | *** | *** | *** | *** | |||||

| H1-H2 | *** | . | * | *** | *** | * | *** | ** | |||

| H1-A1 | *** | * | *** | *** | *** | *** | *** | *** | |||

| H1-A2 | *** | * | *** | *** | * | ** | *** | *** | |||

| H1-A3 | *** | *** | *** | . | * | * | *** | * | |||

| CQ | *** | *** | *** | *** | |||||||

| PIC | * | * | ** | ||||||||

| SQ | * | * | *** | *** | *** | *** | *** | *** | ** |

| Measurement | Emotion (Within-Subject) F (3,24) | Emotion p | Emotion η² | Language (Between-Subject) F (1,8) | Language p | Language η² | Emotion × Language F (3,24) | Emotion × Language p | Emotion × Language η² |
|---|---|---|---|---|---|---|---|---|---|
| Mean pitch | 19.905 | 0.000 *** | 0.713 | 0.666 | 0.438 | 0.077 | 0.367 | 0.778 | 0.044 |
| Pitch range | 31.567 | 0.000 *** | 0.798 | 7.768 | 0.024 * | 0.493 | 1.285 | 0.302 | 0.138 |
| Mean absolute slope | 50.404 | 0.000 *** | 0.863 | 34.760 | 0.000 *** | 0.813 | 7.734 | 0.001 ** | 0.492 |
| Rise | 33.377 | 0.000 *** | 0.807 | 12.769 | 0.007 ** | 0.615 | 5.902 | 0.025 * | 0.425 |
| Fall | 23.507 | 0.000 *** | 0.746 | 30.419 | 0.001 ** | 0.792 | 5.158 | 0.007 ** | 0.392 |
| Rise slope | 16.980 | 0.001 *** | 0.680 | 22.950 | 0.001 ** | 0.742 | 3.210 | 0.098 | 0.286 |
| Fall slope | 27.416 | 0.000 *** | 0.774 | 22.086 | 0.002 ** | 0.734 | 5.095 | 0.023 * | 0.389 |
| Speech rate | 29.519 | 0.000 *** | 0.787 | 12.342 | 0.007 ** | 0.226 | 5.291 | 0.006 ** | 0.398 |
| Intensity | 47.668 | 0.000 *** | 0.856 | 0.851 | 0.383 | 0.096 | 2.157 | 0.119 | 0.212 |
| CPP | 18.852 | 0.000 *** | 0.702 | 2.006 | 0.194 | 0.200 | 5.101 | 0.007 ** | 0.389 |
| H1 | 3.595 | 0.051 | 0.310 | 0.004 | 0.953 | 0.000 | 0.465 | 0.637 | 0.055 |
| H2 | 7.918 | 0.010 * | 0.497 | 0.011 | 0.919 | 0.001 | 0.812 | 0.431 | 0.092 |
| H4 | 1.467 | 0.263 | 0.155 | 0.116 | 0.743 | 0.014 | 2.802 | 0.123 | 0.259 |
| H1-H2 | 0.993 | 0.413 | 0.110 | 0.048 | 0.832 | 0.006 | 2.460 | 0.087 | 0.235 |
| H1-A1 | 0.462 | 0.539 | 0.055 | 0.483 | 0.507 | 0.057 | 0.049 | 0.859 | 0.006 |
| H1-A2 | 1.368 | 0.282 | 0.146 | 0.021 | 0.889 | 0.003 | 0.695 | 0.470 | 0.080 |
| H1-A3 | 1.344 | 0.285 | 0.144 | 0.363 | 0.563 | 0.043 | 1.906 | 0.200 | 0.192 |
| CQ | 20.772 | 0.000 *** | 0.722 | 0.331 | 0.581 | 0.040 | 9.524 | 0.006 ** | 0.543 |
| PIC | 2.932 | 0.054 | 0.268 | 0.946 | 0.359 | 0.106 | 0.894 | 0.458 | 0.101 |
| SQ | 20.408 | 0.000 *** | 0.718 | 4.954 | 0.057 | 0.382 | 11.031 | 0.000 *** | 0.580 |
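
The effect sizes in the table above can be related to the F statistics through the standard identity for partial eta squared, η² = (F × df_effect) / (F × df_effect + df_error). The sketch below is only an illustration: it assumes that the η² column reports partial eta squared, which the table itself does not state, and the function name `partial_eta_squared` is hypothetical. The sample rows are copied from the table.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    effect_term = f_value * df_effect
    return effect_term / (effect_term + df_error)


# (label, F, df_effect, df_error, eta squared as printed in the table)
# Assumption: the printed eta squared values are partial eta squared.
sample_rows = [
    ("Mean pitch, Emotion", 19.905, 3, 24, 0.713),
    ("Pitch range, Emotion", 31.567, 3, 24, 0.798),
    ("Pitch range, Language", 7.768, 1, 8, 0.493),
    ("Mean absolute slope, Emotion x Language", 7.734, 3, 24, 0.492),
]

for label, f_value, df_effect, df_error, reported in sample_rows:
    recomputed = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: recomputed {recomputed:.3f}, reported {reported:.3f}")
```

For these rows the recomputed values agree with the printed ones to three decimals, which is consistent with the partial eta squared reading.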

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, T.; Lee, Y.-c.; Ma, Q. Within and Across-Language Comparison of Vocal Emotions in Mandarin and English. Appl. Sci. 2018, 8, 2629. https://doi.org/10.3390/app8122629