Healthcare Trust Evolution with Explainable Artificial Intelligence: Bibliometric Analysis

Abstract

1. Introduction

- Transparency: A model is said to be transparent if it is understandable on its own. Transparency is therefore the opposite of a black box [5].

- Interpretability: The term “interpretability” describes the capacity to comprehend and articulate how a complex system, such as a machine learning model or an algorithm, makes decisions. It entails understanding which variables affect the system’s outputs and how the system reaches its conclusions [6]. Explainability is an area within interpretability and is closely linked to the notion that explanations serve as an interface between human users and artificial intelligence systems: a good explanation is both an accurate proxy of the AI decision maker and comprehensible to human beings [6].

- Where an explanation is necessary in the interest of fairness and to help customers make informed decisions.

- Where the consequences of a wrong AI decision can be far-reaching (such as recommending unnecessary surgery).

- Where a mistake results in unnecessary financial costs, health risks, and trauma, such as the misclassification of a malignant tumor.

- Where domain or subject matter experts must validate a novel hypothesis generated by the AI.

- The EU’s General Data Protection Regulation (GDPR) [8] gives individuals the right to an explanation when decisions about them are made through automated processing of their data.

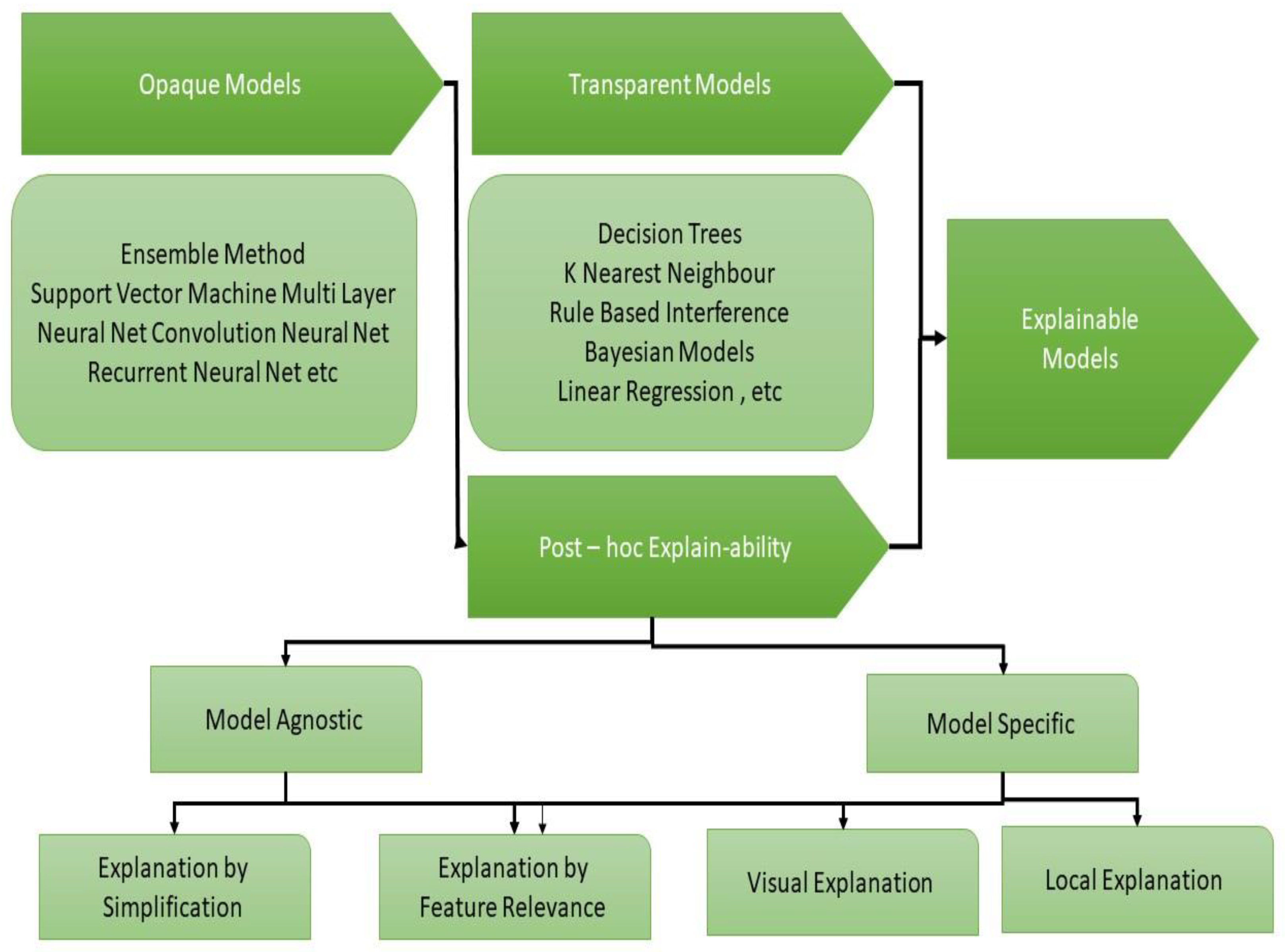

1.1. Taxonomy of XAI

1.1.1. Transparent Models

1.1.2. Opaque Models

1.1.3. Model-Agnostic Techniques

1.1.4. Model-Specific Techniques

1.1.5. Explanation by Simplification

1.1.6. Feature Relevance Explanation

1.1.7. Visual Explanation

1.1.8. Local Explanation

- It reviews recent papers investigating the integration of XAI into the healthcare domain.

- Based on research published between 2019 and 2022, it examines publishing patterns over time.

- It shows how much different countries and regions have contributed to this area of study.

- It identifies the authors who have contributed most to the integration of XAI in the healthcare industry.

- It analyzes publishing patterns by institutional affiliation (universities and organizations).

- It reports the citations received by each publication on the impact of XAI on clinical practice, where XAI increases the transparency of predictive analysis, which is essential in healthcare.

2. Literature Review

- Medical Imaging and Diagnosis

- Chronic Disease Detection

- COVID-19 Diagnosis

- Global Health Goals

- Pain Assessment

- Biometric Signal Analysis

- Stroke Recognition

2.1. Methodology

2.1.1. Planning

2.1.2. Data Collection

2.1.3. Search Strategy

(“XAI” OR “Explainable Artificial Intelligence”) AND (“Health Care” OR “Diagnosis” OR “Classification”).
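The Boolean logic of this search string can be illustrated with a minimal local sketch that evaluates it against a single record. It assumes simple case-insensitive substring matching over the concatenated title, abstract and keywords, a stand-in for Scopus's TITLE-ABS-KEY field whose own tokenization and phrase rules differ; the function names are hypothetical and this is not how the authors ran the search.

```python
# Hypothetical local re-implementation of the search string:
# ("XAI" OR "Explainable Artificial Intelligence")
#   AND ("Health Care" OR "Diagnosis" OR "Classification")
# Assumption: plain case-insensitive substring matching, not Scopus's rules.

XAI_TERMS = ["xai", "explainable artificial intelligence"]
DOMAIN_TERMS = ["health care", "diagnosis", "classification"]


def contains_any(text: str, terms: list[str]) -> bool:
    """OR group: True if any term occurs in the lower-cased text."""
    lowered = text.lower()
    return any(term in lowered for term in terms)


def matches_search(title: str, abstract: str, keywords: list[str]) -> bool:
    """Evaluate both OR groups over title, abstract and keywords (AND)."""
    text = " ".join([title, abstract, *keywords])
    return contains_any(text, XAI_TERMS) and contains_any(text, DOMAIN_TERMS)


if __name__ == "__main__":
    print(matches_search("Explainable artificial intelligence for stroke diagnosis", "", []))  # True
    print(matches_search("Deep learning for MRI segmentation", "", ["XAI"]))  # False: no domain term
```

Both parenthesized groups must be satisfied, so a record mentioning XAI but none of the three domain terms is not retrieved.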

2.1.4. Screening

TITLE-ABS-KEY (((“XAI” OR “explainable artificial intelligence”) AND (“health care” OR “diagnosis” OR “classification”))) AND (LIMIT-TO (OA, “all”)) AND (EXCLUDE (SUBJAREA, “MATH”) OR EXCLUDE (SUBJAREA, “PHYS”) OR EXCLUDE (SUBJAREA, “MATE”) OR EXCLUDE (SUBJAREA, “MULT”) OR EXCLUDE (SUBJAREA, “BUSI”) OR EXCLUDE (SUBJAREA, “SOCI”) OR EXCLUDE (SUBJAREA, “ARTS”) OR EXCLUDE (SUBJAREA, “EART”) OR EXCLUDE (SUBJAREA, “ENVI”) OR EXCLUDE (SUBJAREA, “ECON”) OR EXCLUDE (SUBJAREA, “ENER”)) AND (LIMIT-TO (DOCTYPE, “ar”) OR LIMIT-TO (DOCTYPE, “cp”)) AND (LIMIT-TO (LANGUAGE, “English”)) AND (LIMIT-TO (SRCTYPE, “j”) OR LIMIT-TO (SRCTYPE, “p”)) AND (EXCLUDE (SUBJAREA, “DECI”)) AND (EXCLUDE (SUBJAREA, “ENGI”)) AND (EXCLUDE (SUBJAREA, “AGRI”)) AND (EXCLUDE (PUBYEAR, 2023))
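The LIMIT-TO and EXCLUDE clauses act as record-level filters applied after the keyword match. The sketch below mirrors them on a hypothetical, simplified `Record` type (the field names are illustrative, not Scopus export columns). It assumes a record is discarded if any of its subject areas is on the excluded list, which may not match exactly how Scopus treats documents assigned to several areas.

```python
from dataclasses import dataclass, field

# Subject areas excluded in the screening query (Scopus SUBJAREA codes).
EXCLUDED_AREAS = {
    "MATH", "PHYS", "MATE", "MULT", "BUSI", "SOCI", "ARTS",
    "EART", "ENVI", "ECON", "ENER", "DECI", "ENGI", "AGRI",
}
ALLOWED_DOCTYPES = {"ar", "cp"}  # article, conference paper
ALLOWED_SRCTYPES = {"j", "p"}    # journal, conference proceedings
EXCLUDED_YEAR = 2023


@dataclass
class Record:
    """Hypothetical, simplified bibliographic record."""
    title: str
    year: int
    doctype: str
    srctype: str
    language: str
    open_access: bool
    subject_areas: set[str] = field(default_factory=set)


def passes_screening(rec: Record) -> bool:
    """Apply the LIMIT-TO / EXCLUDE filters of the screening query.

    Assumption: a record is dropped if ANY of its subject areas is excluded.
    """
    return (
        rec.open_access
        and rec.doctype in ALLOWED_DOCTYPES
        and rec.srctype in ALLOWED_SRCTYPES
        and rec.language == "English"
        and rec.year != EXCLUDED_YEAR
        and not (rec.subject_areas & EXCLUDED_AREAS)
    )


if __name__ == "__main__":
    records = [
        Record("XAI for COVID-19 diagnosis", 2022, "ar", "j", "English", True, {"COMP", "MEDI"}),
        Record("XAI in finance", 2021, "ar", "j", "English", True, {"COMP", "ECON"}),
        Record("XAI review", 2023, "ar", "j", "English", True, {"MEDI"}),
    ]
    print([r.title for r in records if passes_screening(r)])  # ['XAI for COVID-19 diagnosis']
```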

2.1.5. Performance Scrutiny

3. Data Analysis and Results

3.1. Overview of the Data Collected and Annual Scientific Production

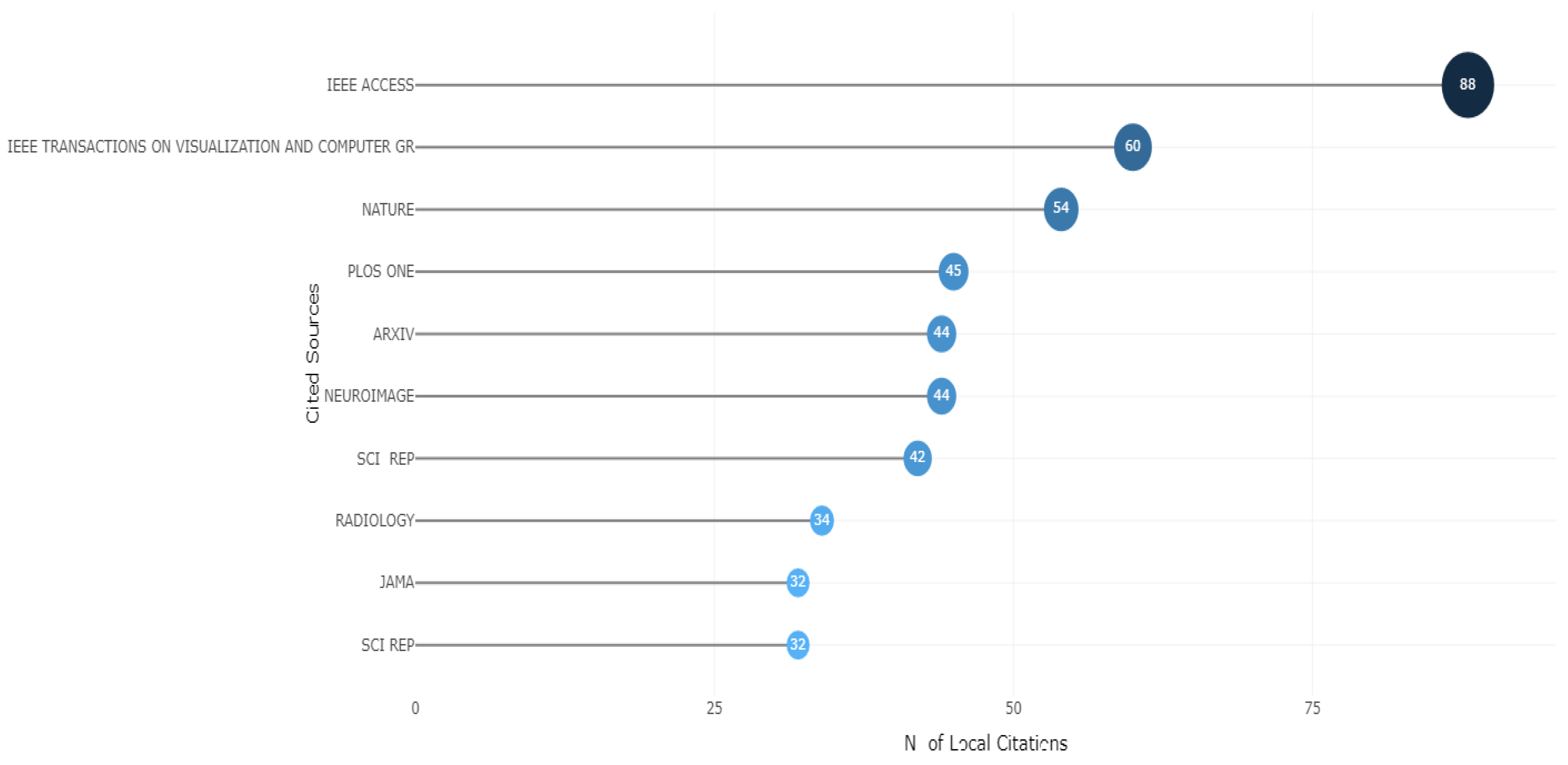

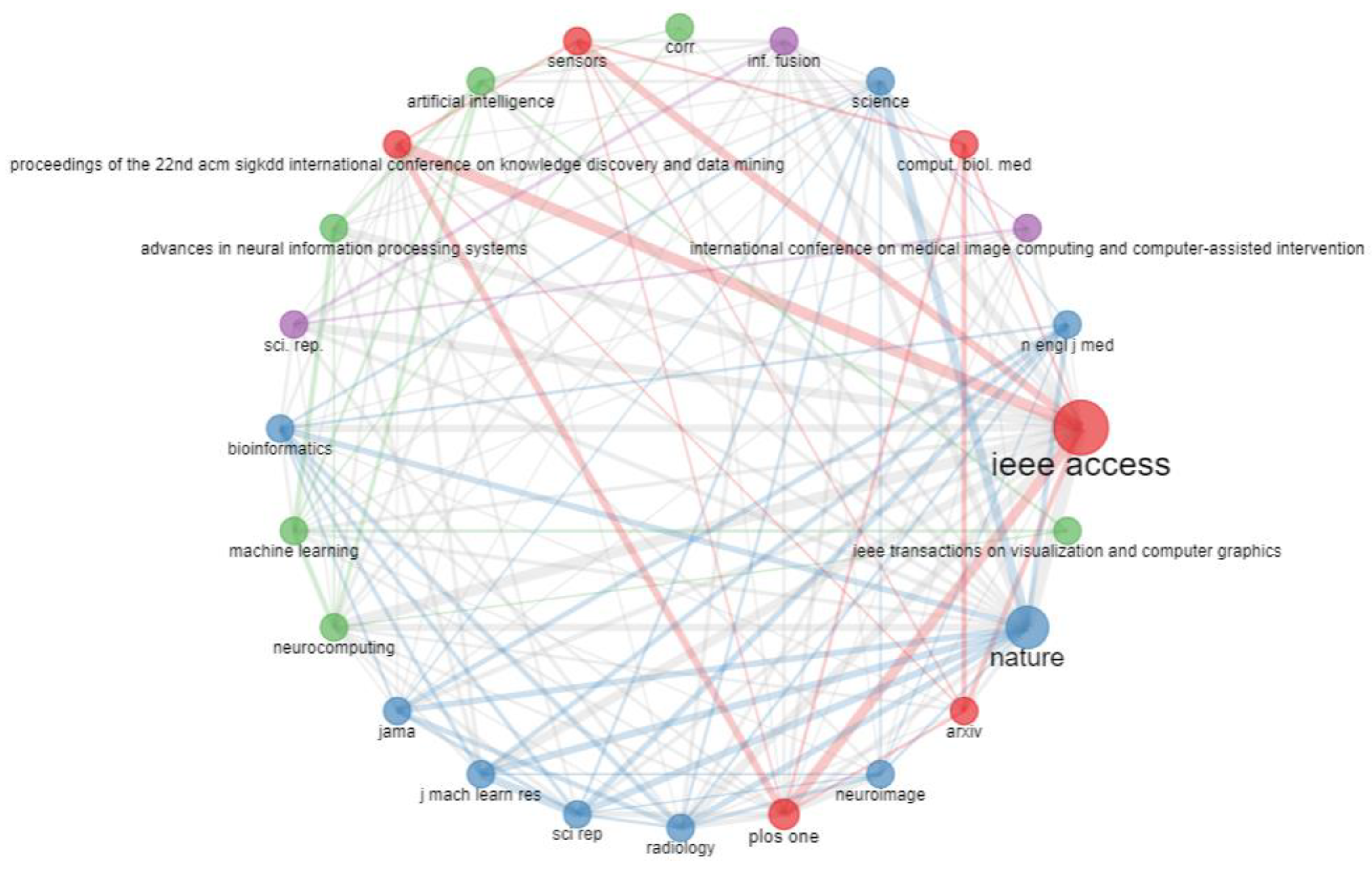

3.2. Most Relevant Sources

3.2.1. Most Locally Cited Sources

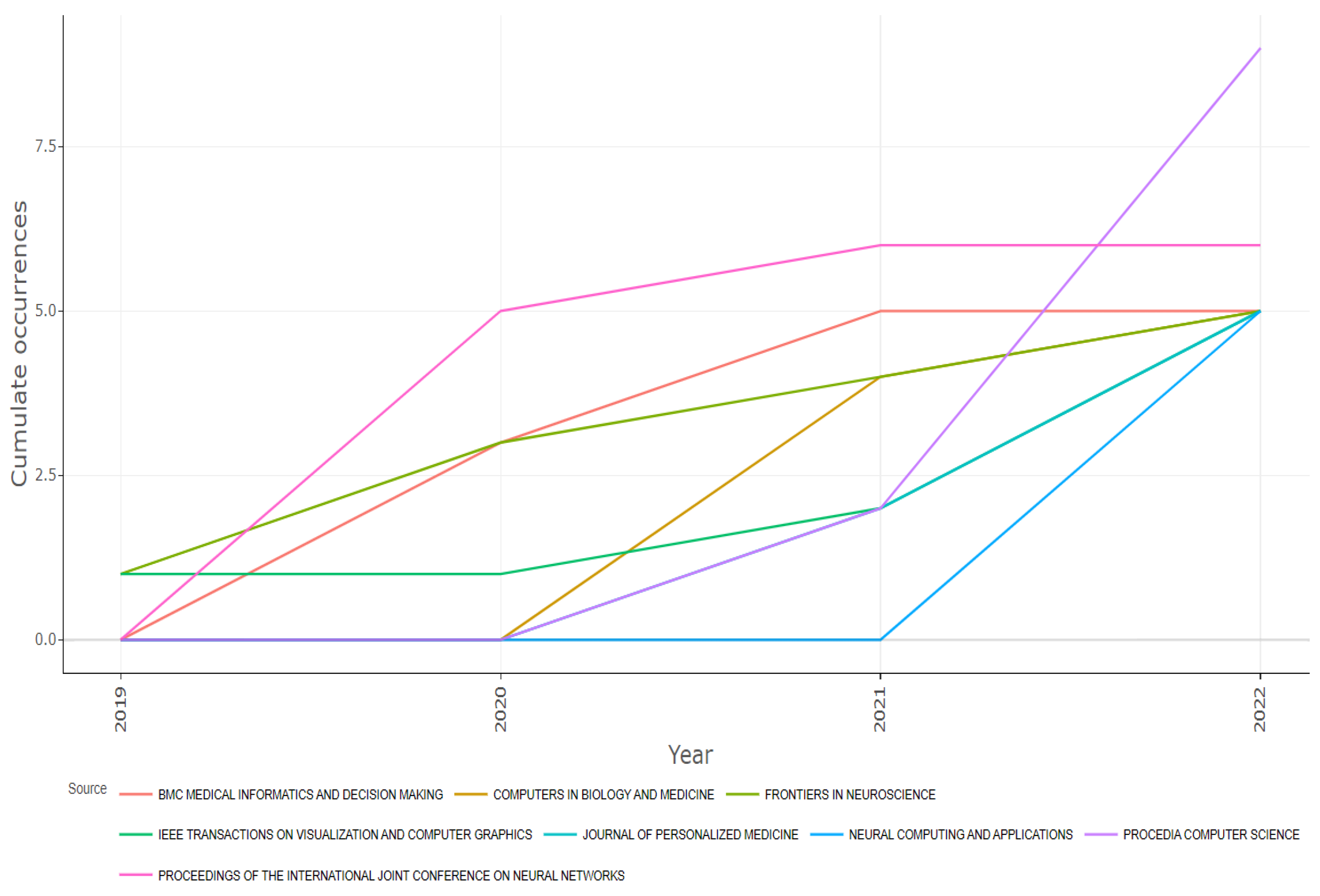

3.2.2. Source Dynamics

3.2.3. Most Relevant Authors

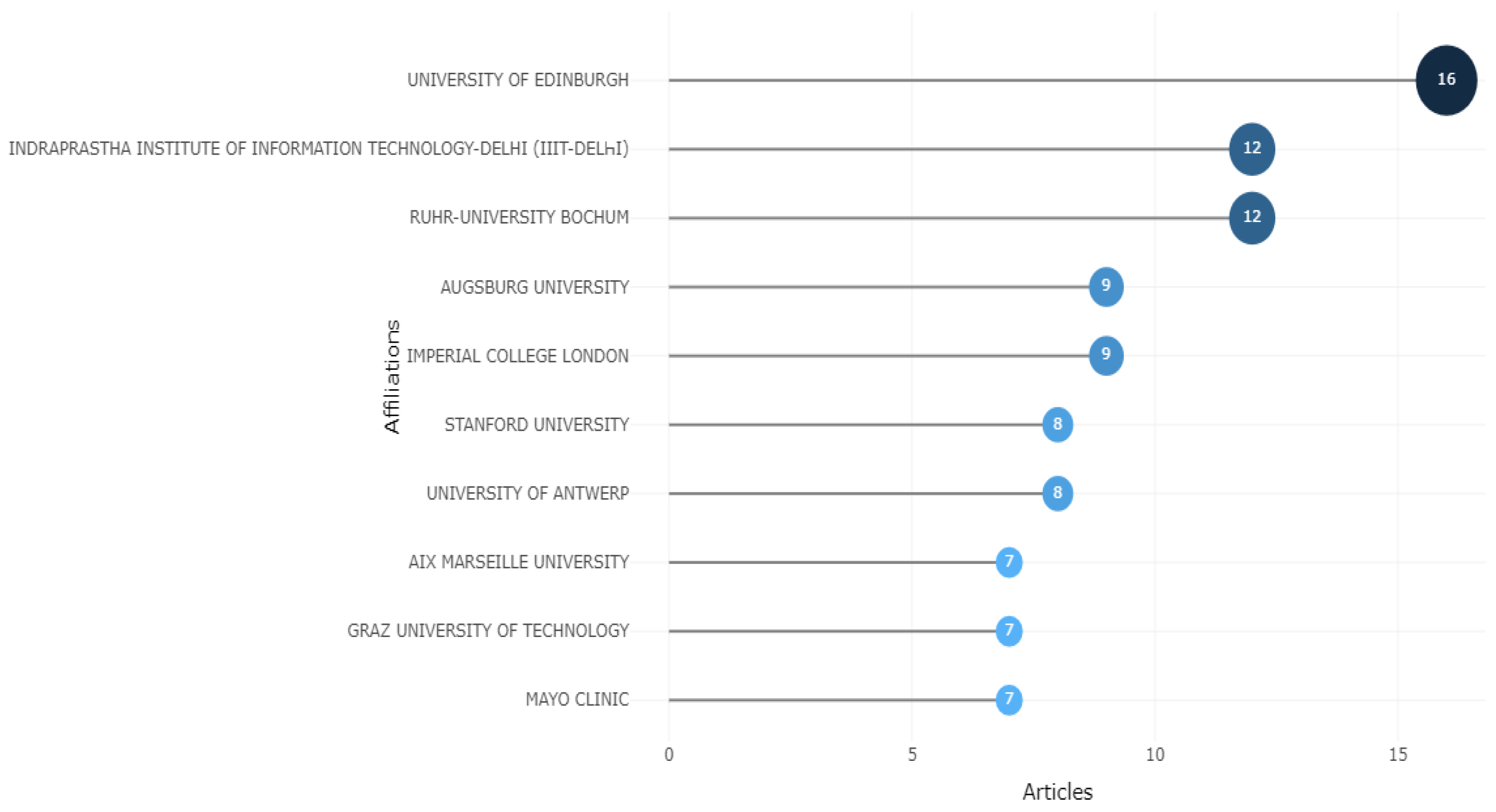

3.3. Analysis of Documents by Affiliation

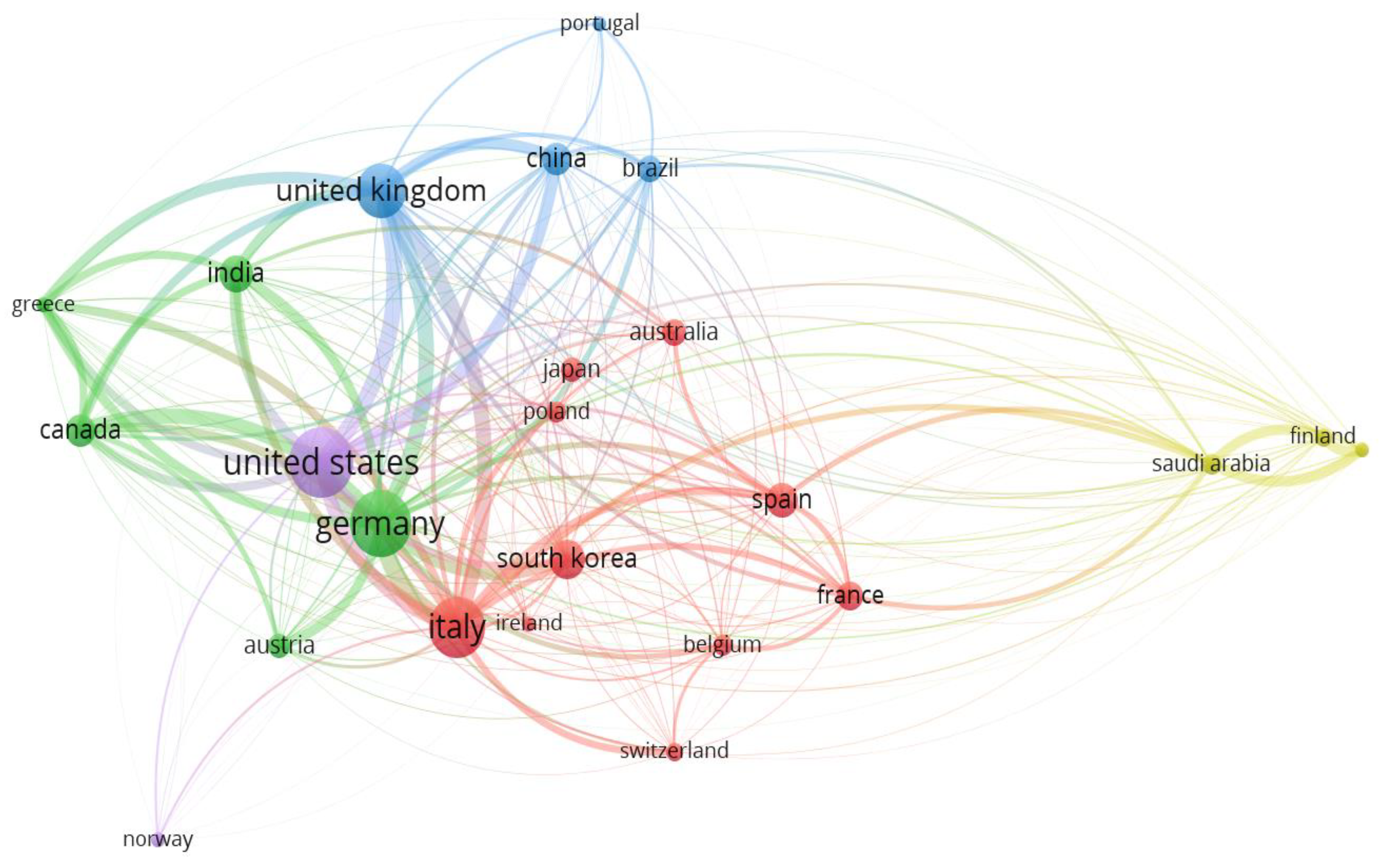

3.4. Most Relevant Countries

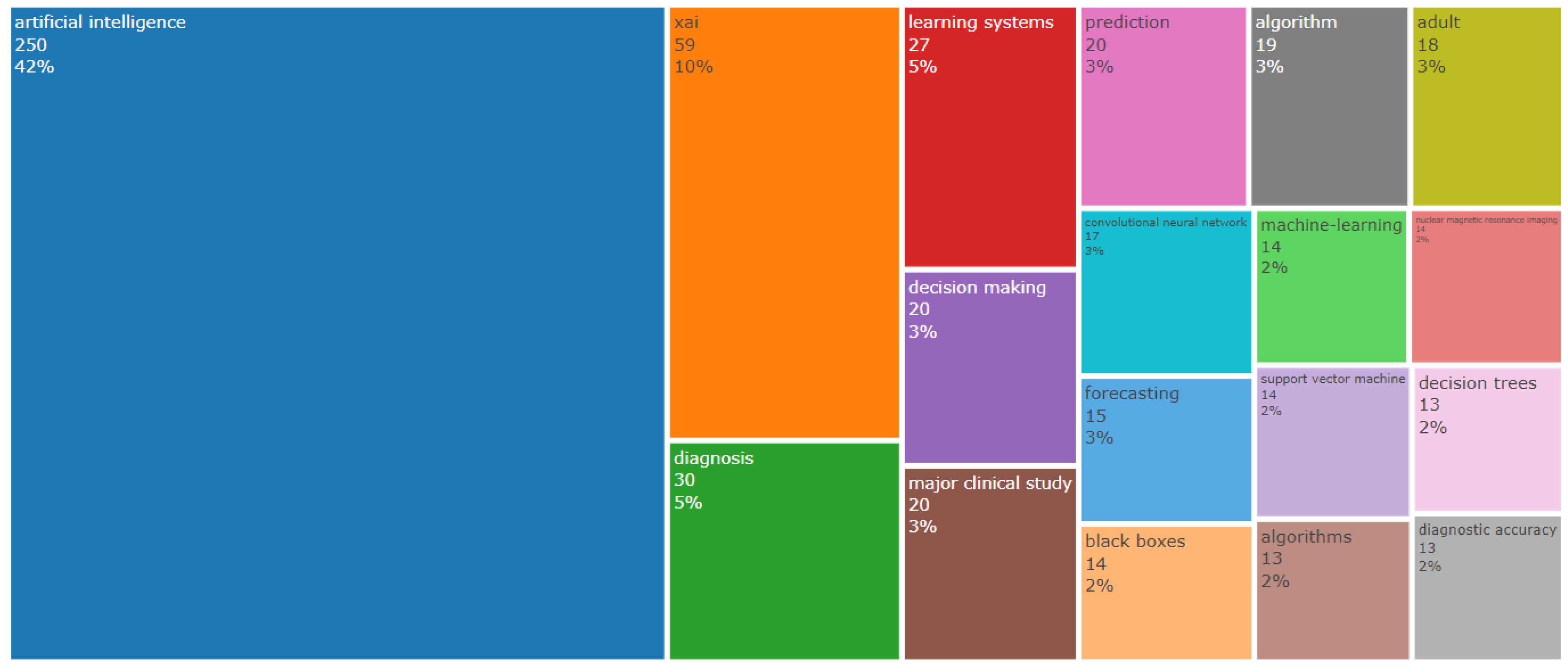

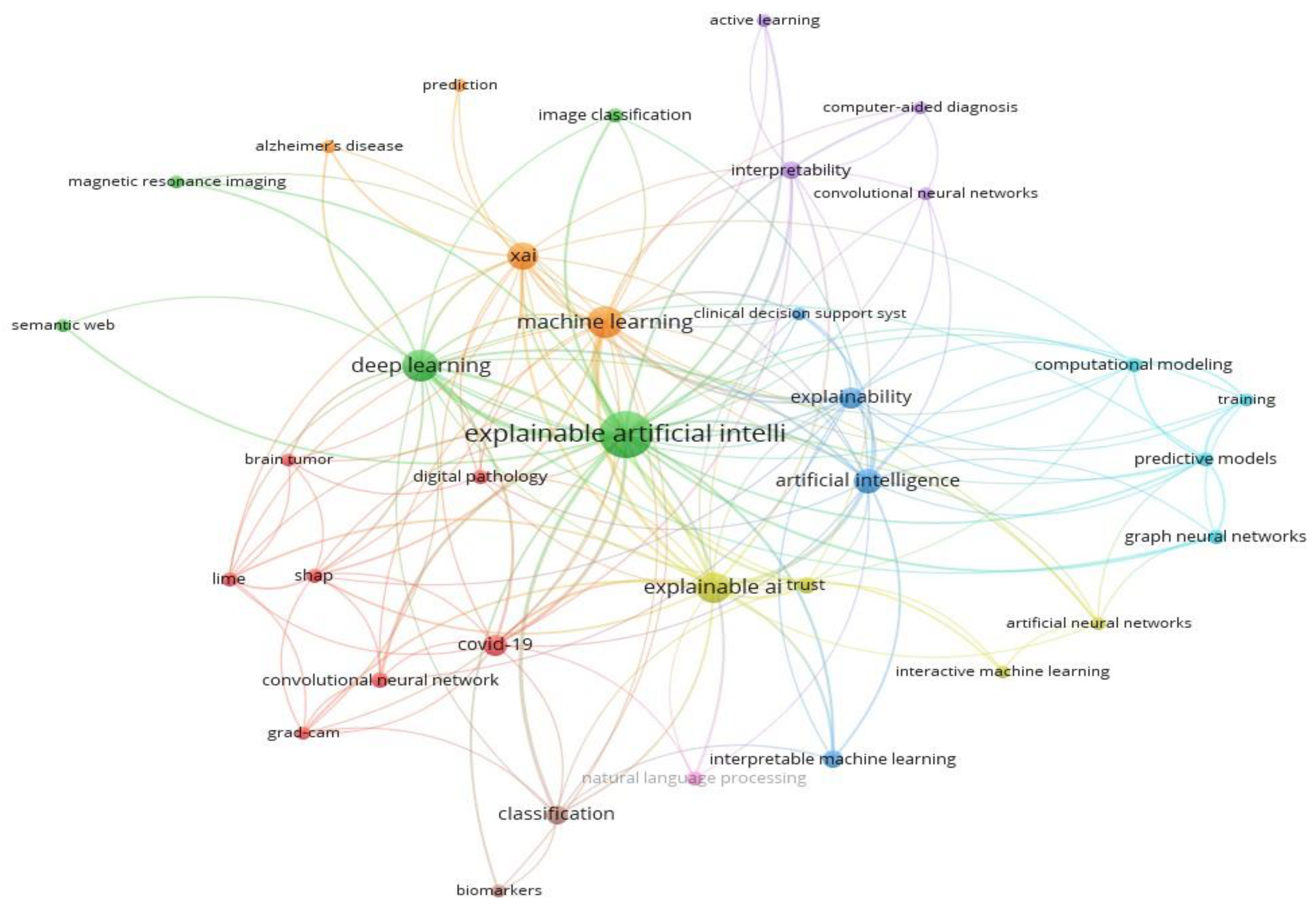

3.4.1. Co-Occurrence Analysis of All Keywords

- Can the accuracy and reliability of MRI diagnoses be enhanced by incorporating Explainable Artificial Intelligence (XAI) in conjunction with deep learning methods for image categorization, within the context of the Semantic Web framework?

- What ethical concerns arise when XAI (Explainable Artificial Intelligence) and deep learning techniques are used to classify MRI (Magnetic Resonance Imaging) images, and what strategies could mitigate them? In particular, how might Semantic Web principles support the efficient organization and retrieval of data while upholding patient privacy and informed consent?

- How does implementing XAI within Semantic Web-driven clinical decision support systems affect user trust and acceptance in AI-driven diagnostics, particularly for MRI image classification, and what cross-domain knowledge transfer opportunities exist to improve model performance [54]?

- How might XAI approaches, specifically LIME and SHAP, improve the interpretability of Convolutional Neural Networks (CNNs) in digital pathology and thereby improve the accuracy of disease detection?

- What are the potential biomarkers for the early diagnosis of Alzheimer’s disease utilizing machine learning (ML) and deep learning (DL) models, and how may XAI techniques enhance their interpretability?

- In what ways may active learning methodologies be utilized to train Artificial Neural Networks (ANNs) for MRI-based diagnoses, with the aim of enhancing user trust and confidence in AI-driven healthcare decisions?

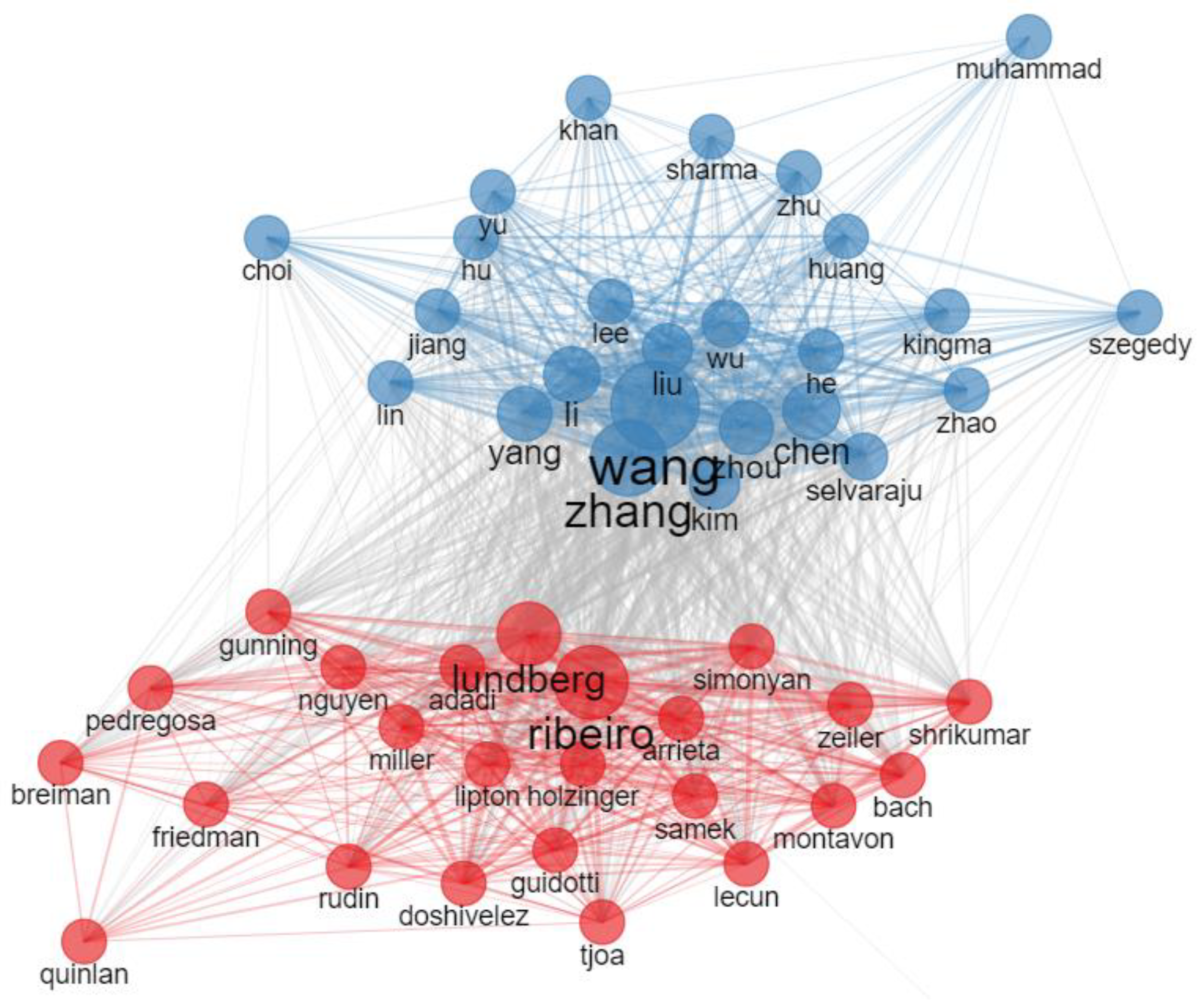

3.4.2. Co-Citation Network

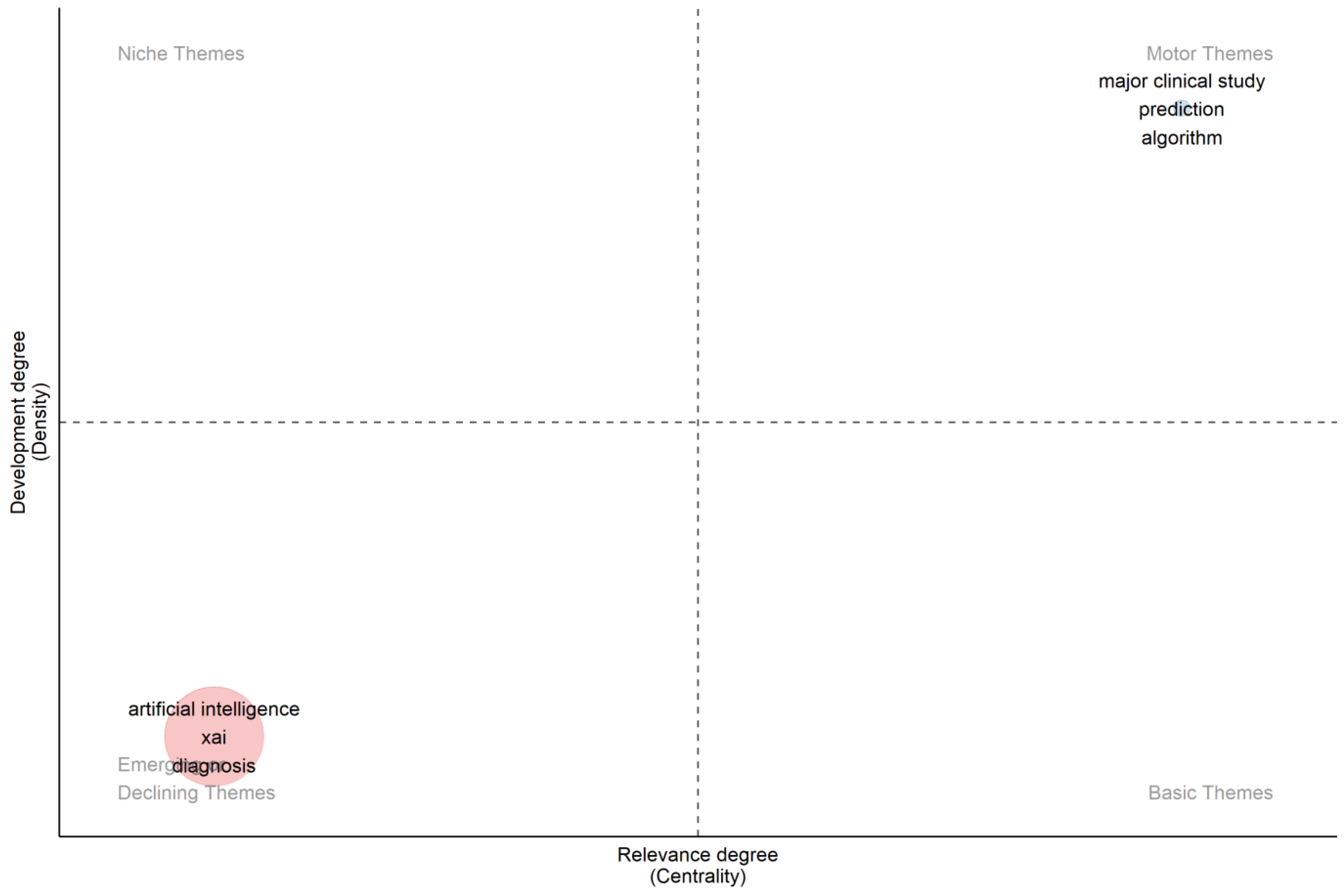

3.5. Conceptual Structure

Thematic Map

3.6. Social Structure

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bonkra, A.; Dhiman, P. IoT Security Challenges in Cloud Environment. In Proceedings of the 2021 2nd International Conference on Computational Methods in Science & Technology, Mohali, India, 17–18 December 2021; pp. 30–34.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Van Lent, M.; Fisher, W.; Mancuso, M. An explainable artificial intelligence system for small-unit tactical behavior. In Proceedings of the Nineteenth National Conference on Artificial Intelligence, Sixteenth Conference on Innovative Applications of Artificial Intelligence, San Jose, CA, USA, 25–29 July 2004; pp. 900–907.

- Mukhtar, M.; Bilal, M.; Rahdar, A.; Barani, M.; Arshad, R.; Behl, T.; Bungau, S. Nanomaterials for diagnosis and treatment of brain cancer: Recent updates. Chemosensors 2020, 8, 117.

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Gilpin, L.H.; Bau, D.; Yuan, B.Z.; Bajwa, A.; Specter, M.; Kagal, L. Explaining explanations: An overview of interpretability of machine learning. In Proceedings of the 5th IEEE International Conference on Data Science and Advanced Analytics, DSAA 2018, Turin, Italy, 1–3 October 2018; pp. 80–89.

- Ahmad, M.A.; Eckert, C.; Teredesai, A. Explainable AI in Healthcare. SSRN Electron. J. 2019.

- Sheu, R.K.; Pardeshi, M.S. A Survey on Medical Explainable AI (XAI): Recent Progress, Explainability Approach, Human Interaction and Scoring System. Sensors 2022, 22, 68.

- Dieber, J.; Kirrane, S. Why model why? Assessing the strengths and limitations of LIME. arXiv 2020, arXiv:2012.00093.

- Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.R.; Samek, W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 2015, 10, e0130140.

- Tritscher, J.; Ring, M.; Schlör, D.; Hettinger, L.; Hotho, A. Evaluation of Post-hoc XAI Approaches through Synthetic Tabular Data. In Foundations of Intelligent Systems, 25th International Symposium, ISMIS 2020, Graz, Austria, 23–25 September 2020; 2020; pp. 422–430.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018, Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Alsharif, A.H.; Md Salleh, N.Z.; Baharun, R.; A. Rami Hashem, E. Neuromarketing research in the last five years: A bibliometric analysis. Cogent Bus. Manag. 2021, 8, 1978620.

- Antoniadi, A.M.; Du, Y.; Guendouz, Y.; Wei, L.; Mazo, C.; Becker, B.A.; Mooney, C. Current challenges and future opportunities for xai in machine learning-based clinical decision support systems: A systematic review. Appl. Sci. 2021, 11, 5088.

- Kaur, H.; Koundal, D.; Kadyan, V. Image fusion techniques: A survey. Arch. Comput. Methods Eng. 2021, 28, 4425–4447.

- Kaushal, C.; Kaushal, K.; Singla, A. Firefly optimization-based segmentation technique to analyse medical images of breast cancer. Int. J. Comput. Math. 2021, 98, 1293–1308.

- Naik, H.; Goradia, P.; Desai, V.; Desai, Y.; Iyyanki, M. Explainable Artificial Intelligence (XAI) for Population Health Management—An Appraisal. Eur. J. Electr. Eng. Comput. Sci. 2021, 5, 64–76.

- Dash, S.C.; Agarwal, S.K. Incidence of chronic kidney disease in India. Nephrol. Dial. Transplant. 2006, 21, 232–233.

- Refat, M.A.R.; Al Amin, M.; Kaushal, C.; Yeasmin, M.N.; Islam, M.K. A Comparative Analysis of Early Stage Diabetes Prediction using Machine Learning and Deep Learning Approach. In Proceedings of the 2021 6th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 7–9 October 2021; pp. 654–659.

- Tiwari, S.; Kumar, S.; Guleria, K. Outbreak Trends of Coronavirus Disease-2019 in India: A Prediction. Disaster Med. Public Health Prep. 2020, 14, e33–e38.

- Pai, R.R.; Alathur, S. Bibliometric Analysis and Methodological Review of Mobile Health Services and Applications in India. Int. J. Med. Inform. 2021, 145, 104330.

- Madanu, R.; Abbod, M.F.; Hsiao, F.-J.; Chen, W.-T.; Shieh, J.-S. Explainable AI (XAI) Applied in Machine Learning for Pain Modeling: A Review. Technologies 2022, 10, 74.

- Li, H.; Lin, Z.; An, Z.; Zuo, S.; Zhu, W.; Zhang, Z.; Mu, Y.; Cao, L.; Garcia, J.D.P. Automatic electrocardiogram detection and classification using bidirectional long short-term memory network improved by Bayesian optimization. Biomed. Signal Process. Control 2022, 73, 103424.

- Merna Said, A.S.; Omaer, Y.; Safwat, S. Explainable Artificial Intelligence Powered Model for Explainable Detection of Stroke Disease. In Proceedings of the 8th International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 20–22 November 2022; pp. 211–223.

- Tasleem Nizam, S.Z. Explainable Artificial Intelligence (XAI): Conception, Visualization and Assessment Approaches towards Amenable XAI. In Explainable Edge AI: A Futuristic Computing Perspective; Springer International Publishing: Cham, Switzerland, 2023; pp. 35–52.

- Rajkomar, A.; Oren, E.; Chen, K.; Dai, A.M.; Hajaj, N.; Hardt, M.; Liu, P.J.; Dean, J. Scalable and accurate deep learning with electronic health records. NPJ Dig. Med. 2018, 1, 18.

- Praveen, S.; Joshi, K. Explainable Artificial Intelligence in Health Care: How XAI Improves User Trust in High-Risk Decisions. In Explainable Edge AI: A Futuristic Computing Perspective; Springer International Publishing: Cham, Switzerland, 2022; pp. 89–99.

- Albahri, A.S.; Duhaim, A.M.; Fadhel, M.A.; Alnoor, A.; Baqer, N.S.; Alzubaidi, L.; Deveci, M. A systematic review of trustworthy and explainable artificial intelligence in healthcare: Assessment of quality, bias risk, and data fusion. Inf. Fusion 2023, 96, 156–191.

- Loh, H.W.; Ooi, C.P.; Seoni, S.; Barua, P.D.; Molinari, F.; Acharya, U.R. Application of explainable artificial intelligence for healthcare: A systematic review of the last decade (2011–2022). Comput. Methods Programs Biomed. 2022, 226, 107161.

- Manresa-Yee, C.; Roig-Maimó, M.F.; Ramis, S.; Mas-Sansó, R. Advances in XAI: Explanation Interfaces in Healthcare. In Handbook of Artificial Intelligence in Healthcare: Vol 2: Practicalities and Prospects; Springer International Publishing: Cham, Switzerland, 2021; pp. 357–369.

- Narin, F.; Olivastro, D.; Stevens, K.A. Bibliometrics/Theory, Practice and Problems. Eval. Rev. 1994, 18, 65–76.

- Iqbal, Q. Scopus: Indexing and abstracting database. 2018.

- Pranckutė, R. Web of science (Wos) and scopus: The titans of bibliographic information in today’s academic world. Publications 2021, 9, 12.

- Alryalat, S.A.S.; Malkawi, L.W.; Momani, S.M. Comparing bibliometric analysis using pubmed, scopus, and web of science databases. J. Vis. Exp. 2019, 2019.

- Available online: https://drive.google.com/file/d/1CyXmpCAopvCz5or6tMKHdIesI3iFDu-1/view?usp=drive_link (accessed on 26 June 2023).

- Aria, M.; Cuccurullo, C. bibliometrix: An R-tool for comprehensive science mapping analysis. J. Informetr. 2017, 11, 959–975.

- van Eck, N.J.; Waltman, L. Visualizing Bibliometric Networks. In Measuring Scholarly Impact; Springer International Publishing: Cham, Switzerland, 2014.

- Moral-Muñoz, J.A.; Herrera-Viedma, E.; Santisteban-Espejo, A.; Cobo, M.J. Software tools for conducting bibliometric analysis in science: An up-to-date review. El Prof. De La Inf. 2020, 29, e290103.

- van Eck, N.J.; Waltman, L. VOSviewer Manual; Universiteit Leiden: Leiden, The Netherlands, 2013; Available online: http://www.vosviewer.com/documentation/Manual_VOSviewer_1.6.1.pdf (accessed on 26 June 2023).

- van Eck, N.J.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- Osinska, V.; Klimas, R. Mapping science: Tools for bibliometric and altmetric studies. Inf. Res. Int. Electron. J. 2021, 26, 1–18.

- Giuste, F.; Shi, W.; Zhu, Y.; Naren, T.; Isgut, M.; Sha, Y.; Tong, L.; Gupte, M.; Wang, M.D. Explainable Artificial Intelligence Methods in Combating Pandemics: A Systematic Review. IEEE Rev. Biomed. Eng. 2022, 16, 5–21.

- Bhatt, K.; Seabra, C.; Kabia, S.K.; Ashutosh, K.; Gangotia, A. COVID Crisis and Tourism Sustainability: An Insightful Bibliometric Analysis. Sustainability 2022, 14, 12151.

- Gupta, A.; Sukumaran, R.; John, K.; Teki, S. Hostility Detection and COVID-19 Fake News Detection in Social Media. arXiv 2021, arXiv:2101.05953.

- Kaushal, C.; Refat, M.A.R.; Islam, M.K. Comparative Micro Blogging News Analysis on the COVID-19 Pandemic Scenario. In Lecture Notes in Networks and Systems, Proceedings of the International Conference on Data Science and Applications, Virtual, 10–11 April 2021; Springer: Singapore, 2021; Volume 148, pp. 241–248.

- Dhiman, P.; Kaur, A.; Iwendi, C.; Mohan, S.K. A Scientometric Analysis of Deep Learning Approaches for Detecting Fake News. Electronics 2023, 12, 948.

- Bonkra, A.; Bhatt, P.K.; Rosak-Szyrocka, J.; Muduli, K.; Pilař, L.; Kaur, A.; Chahal, N.; Rana, A.K. Apple Leave Disease Detection Using Collaborative ML/DL and Artificial Intelligence Methods: Scientometric Analysis. Int. J. Environ. Res. Public Health 2023, 20, 3222.

- Zhang, J.; Yu, Q.; Zheng, F.; Long, C.; Lu, Z.; Duan, Z. Comparing keywords plus of WOS and author keywords: A case study of patient adherence research. J. Assoc. Inf. Sci. Technol. 2016, 67, 967–972.

- McNaught, C.; Lam, P. Using wordle as a supplementary research tool. Qual. Rep. 2010, 15, 630–643.

- Zeng, Z. Explainable Artificial Intelligence (XAI) for Healthcare Decision-Making. Doctoral Thesis, Nanyang Technological University, Singapore, 2022. Available online: https://hdl.handle.net/10356/155849 (accessed on 26 June 2023).

- Gong, H.; Wang, M.; Zhang, H.; Elahe, M.F.; Jin, M. An Explainable AI Approach for the Rapid Diagnosis of COVID-19 Using Ensemble Learning Algorithms. Front. Public Health 2022, 10, 874455.

- Khan, S.A.; Gulzar, Y.; Turaev, S.; Peng, Y.S. A Modified HSIFT Descriptor for Medical Image Classification of Anatomy Objects. Symmetry 2021, 13, 1987.

- Ali, J.; Jusoh, A.; Idris, N.; Abbas, A.F.; Alsharif, A.H. Nine Years of Mobile Healthcare Research: A Bibliometric Analysis. Int. J. Online Biomed. Eng. 2021, 17, 144–159.

- Surwase, G.; Sagar, A.; Kademani, B.S.; Bhanumurthy, K. Co-citation Analysis: An Overview. In Proceedings of the BOSLA National Conference Proceedings, CDAC, Mumbai, India, 16–17 September 2011; p. 9.

- Zavaraqi, R. Author Co-Citation Analysis (ACA): A powerful tool for representing implicit knowledge of scholar knowledge workers. In Proceedings of the Sixth International Conference on Webometrics, Informetrics and Scientometrics & Eleventh COLLNET Meeting, Mysore, India, 19–22 October 2010; pp. 871–883.

- Katz, J.S.; Martin, B.R. What is research collaboration? Res. Policy 1997, 26, 1–18.

- Bansal, S.; Mahendiratta, S.; Kumar, S.; Sarma, P.; Prakash, A.; Medhi, B. Collaborative research in modern era: Need and challenges. Indian J. Pharmacol. 2019, 51, 137–139.

| Description | Results |
|---|---|
| MAIN INFORMATION ABOUT DATA | |
| Timespan | 2019:2022 |
| Sources (journals, books, etc.) | 104 |
| Documents | 171 |
| Document average age | 0.725 |
| Average citations per doc | 8.947 |
| References | 8631 |
| DOCUMENT CONTENTS | |
| Keywords Plus (ID) | 1767 |
| Authors’ keywords (DE) | 551 |
| AUTHORS | |
| Authors | 863 |
| Authors of single-authored docs | 4 |
| AUTHOR COLLABORATION | |
| Single-authored docs | 4 |
| Co-authors per doc | 5.23 |
| International co-authorships % | 30.41 |
| DOCUMENT TYPES | |
| Articles | 134 |
| Conference papers | 37 |
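The summary figures in this table can be cross-checked with a few lines of arithmetic. The sketch below uses only values reported in the table; the derived totals (about 1530 citations and about 52 internationally co-authored documents) are back-calculated from a rounded average and a rounded percentage, so they are approximations rather than counts reported by the authors.

```python
# Values taken from the main-information table.
documents = 171
articles = 134
conference_papers = 37
avg_citations_per_doc = 8.947
international_coauthorship_pct = 30.41

# The two document types should account for every document.
assert articles + conference_papers == documents

# Back-calculated totals (approximate, because the inputs are rounded).
total_citations = round(avg_citations_per_doc * documents)
international_docs = round(international_coauthorship_pct / 100 * documents)

print(total_citations)     # 1530
print(international_docs)  # 52
```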

| Year | Documents |
|---|---|
| 2019 | 10 |
| 2020 | 25 |
| 2021 | 44 |
| 2022 | 92 |
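One common way to summarize a yearly production series like this is the compound annual growth rate between the first and last year; the table itself does not report a growth rate, so the figure below is an illustration computed from the listed counts, assuming growth over the three yearly steps from 2019 to 2022. The yearly counts also sum to the 171 documents in the dataset.

```python
# Documents per year, from the annual scientific production table.
production = {2019: 10, 2020: 25, 2021: 44, 2022: 92}


def compound_annual_growth_rate(counts: dict[int, int]) -> float:
    """Percentage growth per year between the first and last year."""
    years = sorted(counts)
    first, last = counts[years[0]], counts[years[-1]]
    periods = years[-1] - years[0]  # 3 yearly steps for 2019 to 2022
    return ((last / first) ** (1 / periods) - 1) * 100


rate = compound_annual_growth_rate(production)
print(f"{rate:.1f}%")            # 109.5%
print(sum(production.values()))  # 171
```

Under this measure, output roughly doubled each year over the period covered.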

| Year | Mean citations per article (MeanTCperArt) | Mean citations per year (MeanTCperYear) |
|---|---|---|
| 2019 | 60.50 | 20.17 |
| 2020 | 14.88 | 7.44 |
| 2021 | 6.75 | 6.75 |
| 2022 | 2.78 | |
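The table does not state how MeanTCperYear is derived. Dividing each reported MeanTCperArt by its MeanTCperYear gives whole numbers equal to 2022 minus the publication year, which suggests the per-year figure divides by the years elapsed up to 2022; under that reading the 2022 value would be undefined, consistent with the empty cell. The sketch below checks this inference and also checks that the yearly means, weighted by the annual document counts, reproduce the overall average of 8.947 citations per document. It is a consistency check on the published numbers, not a description of the authors' software.

```python
# Rows of the citation table: year -> (MeanTCperArt, MeanTCperYear).
# No MeanTCperYear value is reported for 2022.
table = {2019: (60.50, 20.17), 2020: (14.88, 7.44), 2021: (6.75, 6.75), 2022: (2.78, None)}
docs_per_year = {2019: 10, 2020: 25, 2021: 44, 2022: 92}

# Implied divisor for each row that reports both values.
implied_years = {
    y: round(per_art / per_year)
    for y, (per_art, per_year) in table.items()
    if per_year is not None
}
print(implied_years)  # {2019: 3, 2020: 2, 2021: 1}

# The divisors equal 2022 - year, which would be zero for 2022.
assert all(n == 2022 - y for y, n in implied_years.items())

# Weighting each year's mean by its document count should recover the
# overall average citations per document (8.947) up to rounding.
total = sum(docs_per_year[y] * per_art for y, (per_art, _) in table.items())
overall = total / sum(docs_per_year.values())
print(round(overall, 2))  # 8.95
```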

| Country | No. of Articles | Total Citations |
|---|---|---|
| USA | 109 | 280 |
| Germany | 105 | 63 |
| Italy | 94 | 123 |
| UK | 63 | 107 |
| India | 50 | 9 |
| Spain | 47 | 4 |
| China | 46 | 23 |
| South Korea | 42 | 182 |
| Japan | 37 | 13 |
| France | 33 | 164 |
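Ranking countries by volume and by citations gives different orders. The sketch below takes the two columns at face value and computes citations per listed article. Note that the article counts sum to 626, more than the 171 documents in the dataset, so they likely count a country once per contributing author or affiliation rather than once per document; the ratio is therefore indicative only.

```python
# (No. of Articles, Total Citations) as listed in the country table.
countries = {
    "USA": (109, 280),
    "Germany": (105, 63),
    "Italy": (94, 123),
    "UK": (63, 107),
    "India": (50, 9),
    "Spain": (47, 4),
    "China": (46, 23),
    "South Korea": (42, 182),
    "Japan": (37, 13),
    "France": (33, 164),
}

# The counts exceed the 171 documents in the dataset, so they are not
# one-per-document; treat the ratio below as indicative only.
print(sum(n for n, _ in countries.values()))  # 626

# Citations per listed article, highest first.
ratios = {country: tc / n for country, (n, tc) in countries.items()}
ranking = sorted(ratios, key=ratios.get, reverse=True)
for country in ranking[:3]:
    print(f"{country}: {ratios[country]:.2f}")
```

With these values, France (about 4.97) and South Korea (about 4.33) rank above the USA (about 2.57), even though the USA has the most listed articles.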

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dhiman, P.; Bonkra, A.; Kaur, A.; Gulzar, Y.; Hamid, Y.; Mir, M.S.; Soomro, A.B.; Elwasila, O. Healthcare Trust Evolution with Explainable Artificial Intelligence: Bibliometric Analysis. Information 2023, 14, 541. https://doi.org/10.3390/info14100541
