Effective Image Retrieval Using Texture Elements and Color Fuzzy Correlogram

Abstract

1. Introduction

2. Related Work

3. The Proposed Feature Extraction Methods

3.1. Color Layer-Based Texture Elements Histogram

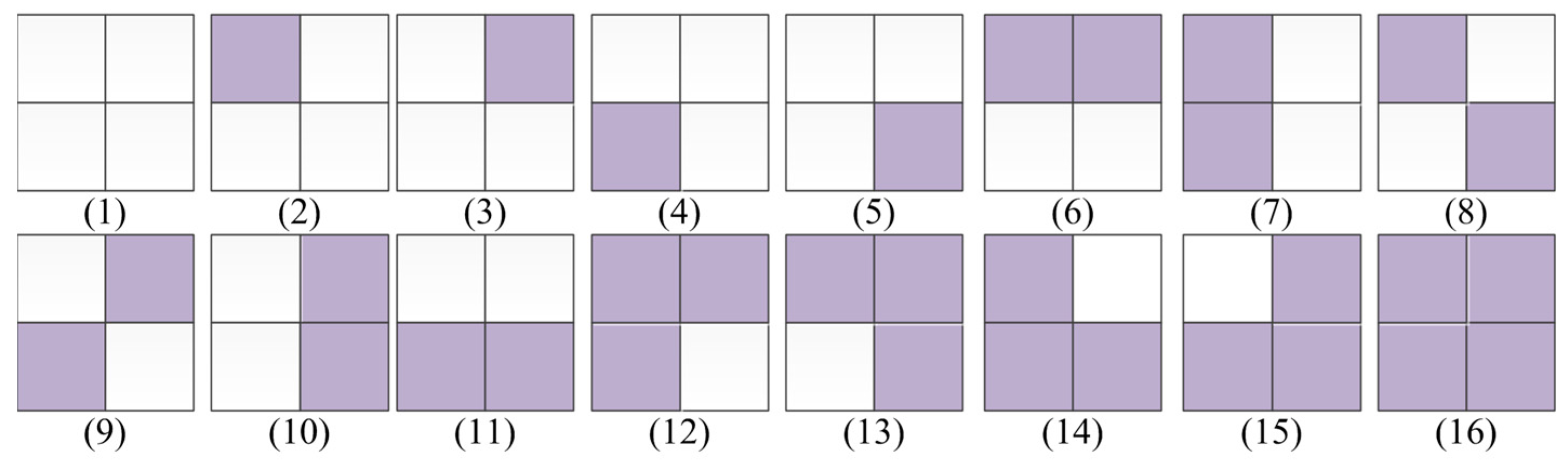

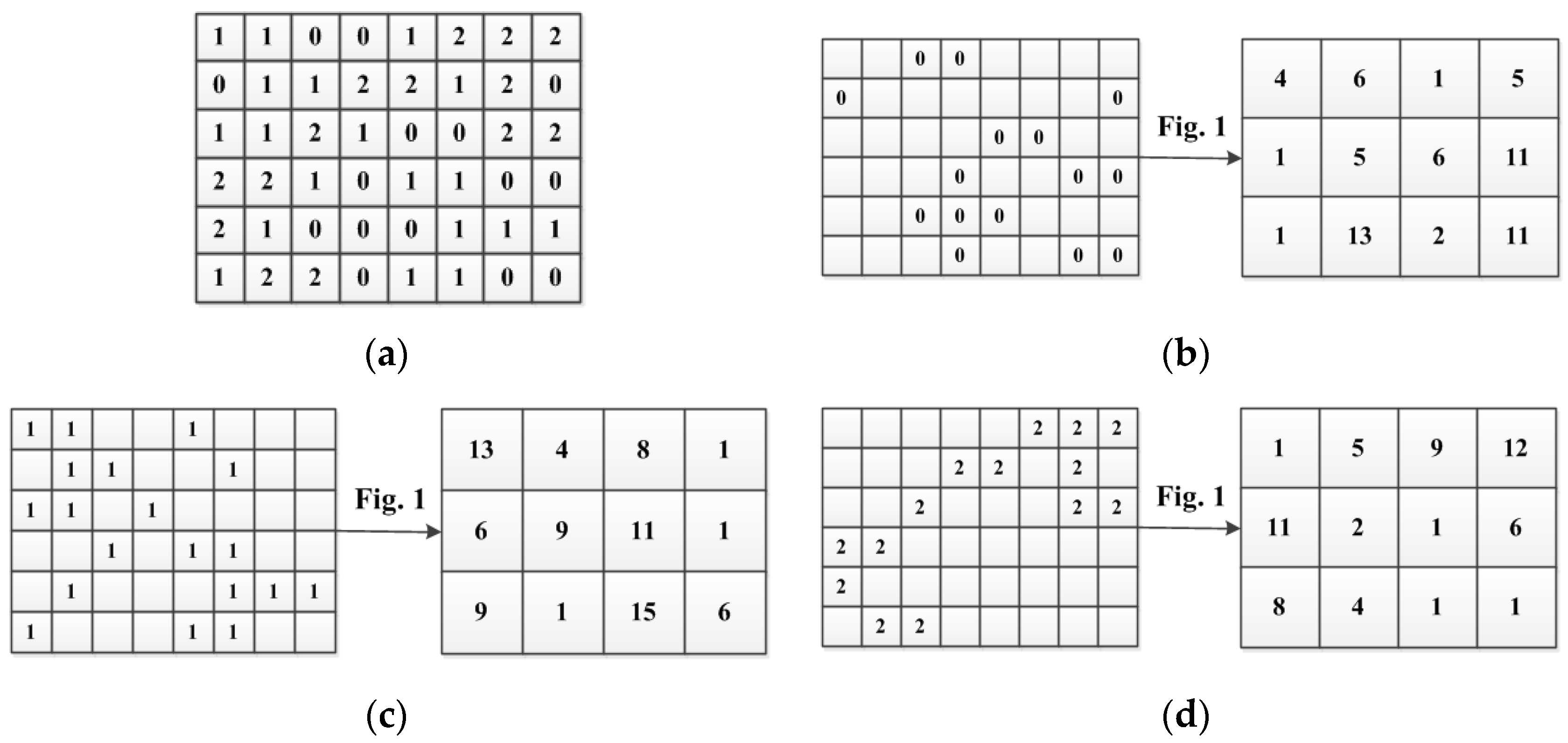

3.1.1. Texture Elements Definition

3.1.2. Feature Extraction

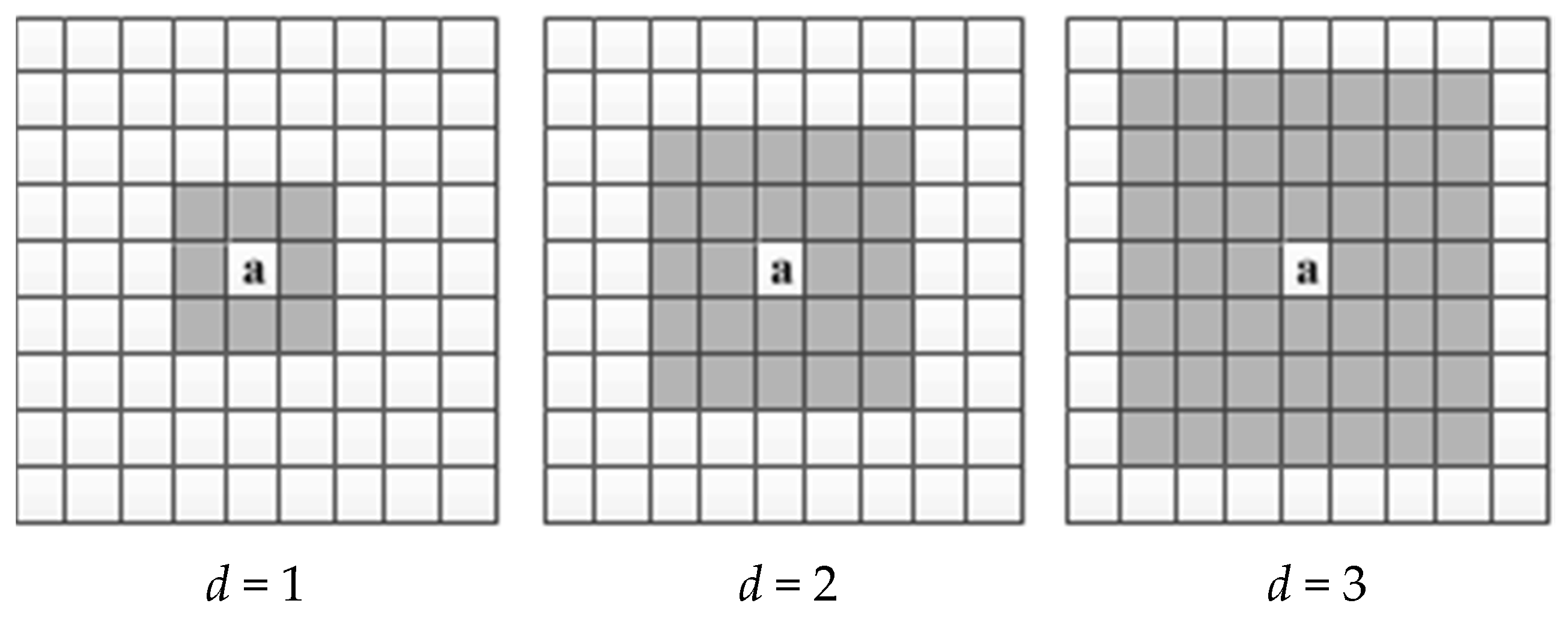

3.2. Color Fuzzy Correlogram

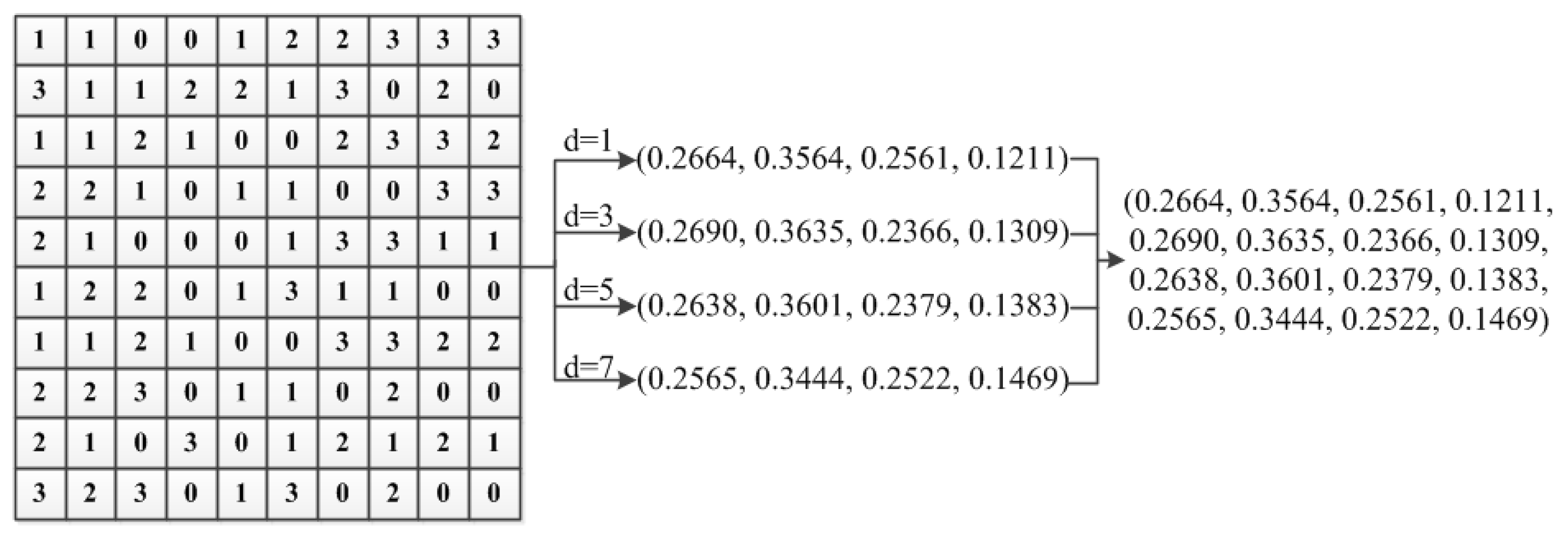

3.2.1. The Calculation of Color Fuzzy Correlogram

3.2.2. Color Feature Extraction

4. Experiments

4.1. Similarity Measurement between Images

4.2. Performance Evaluation

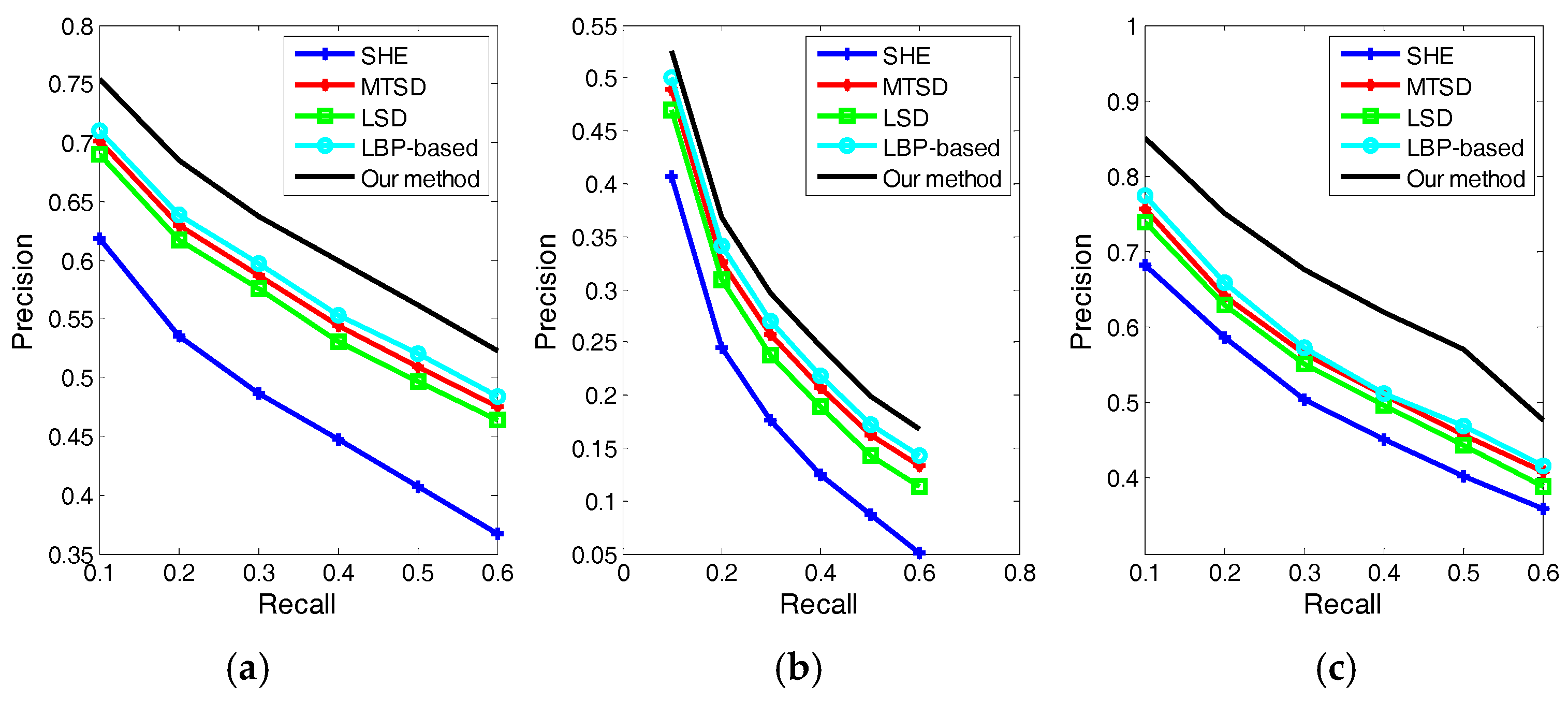

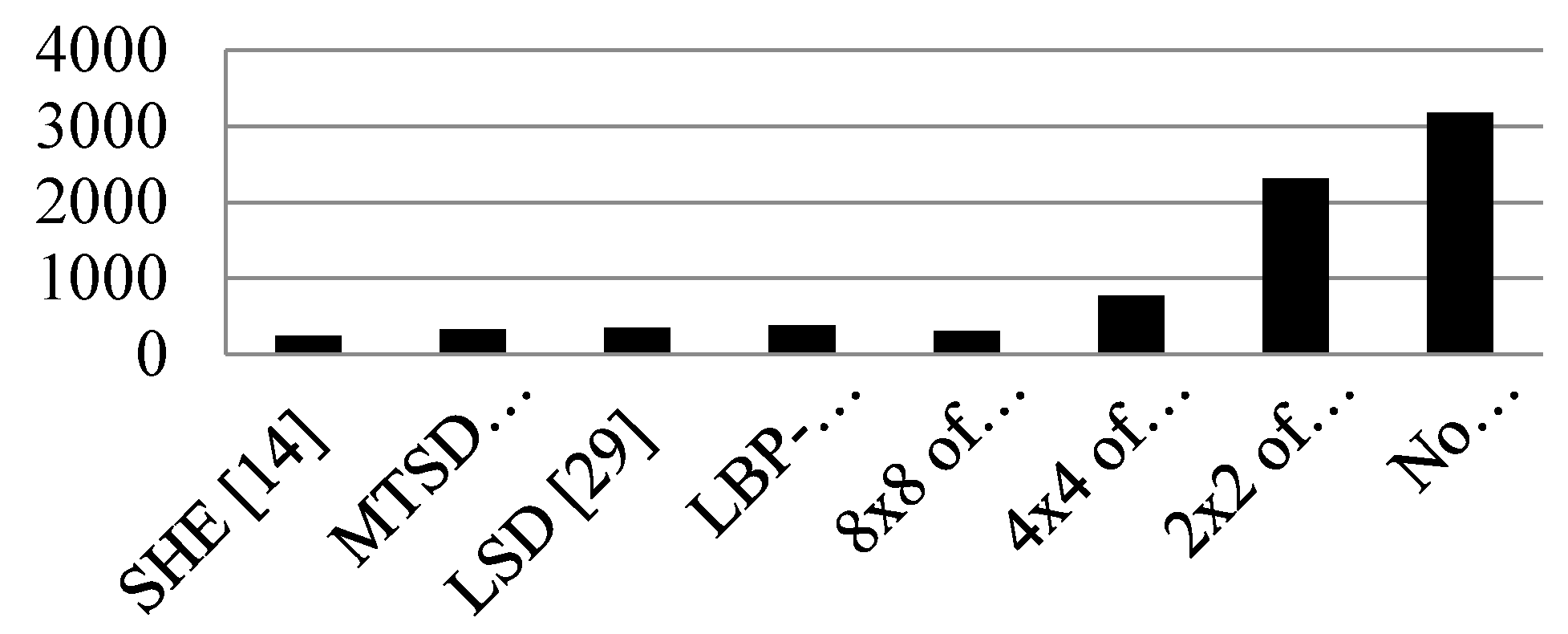

4.3. Experimental Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Block Size | Recall 0.1 | Recall 0.2 | Recall 0.3 | Recall 0.4 | Recall 0.5 | Recall 0.6 |
|---|---|---|---|---|---|---|
| No block | 0.6505 | 0.5694 | 0.5162 | 0.4701 | 0.4312 | 0.3850 |
| 2 × 2 | 0.6922 | 0.6075 | 0.5535 | 0.5088 | 0.4655 | 0.4202 |
| 4 × 4 | 0.6912 | 0.6070 | 0.5529 | 0.5109 | 0.4708 | 0.4306 |
| 8 × 8 | 0.6610 | 0.5750 | 0.5219 | 0.4777 | 0.4380 | 0.3997 |

| Recall Rates | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|---|---|
| Precision Rates | 0.6949 | 0.6313 | 0.5891 | 0.5510 | 0.5143 | 0.4795 |

| Block Size | Recall 0.1 | Recall 0.2 | Recall 0.3 | Recall 0.4 | Recall 0.5 | Recall 0.6 |
|---|---|---|---|---|---|---|
| No block | 0.7404 | 0.6683 | 0.6229 | 0.5817 | 0.5420 | 0.5041 |
| 2 × 2 | 0.7512 | 0.6834 | 0.6359 | 0.6005 | 0.5643 | 0.5250 |
| 4 × 4 | 0.7539 | 0.6848 | 0.6373 | 0.5993 | 0.5612 | 0.5228 |
| 8 × 8 | 0.7422 | 0.6726 | 0.6263 | 0.5854 | 0.5473 | 0.5084 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, F.-p.; Hao, M.-l. Effective Image Retrieval Using Texture Elements and Color Fuzzy Correlogram. Information 2017, 8, 27. https://doi.org/10.3390/info8010027
