Arabic Handwritten Alphanumeric Character Recognition Using Very Deep Neural Network

Abstract

1. Introduction

2. Arabic Handwriting Characteristics and Challenges

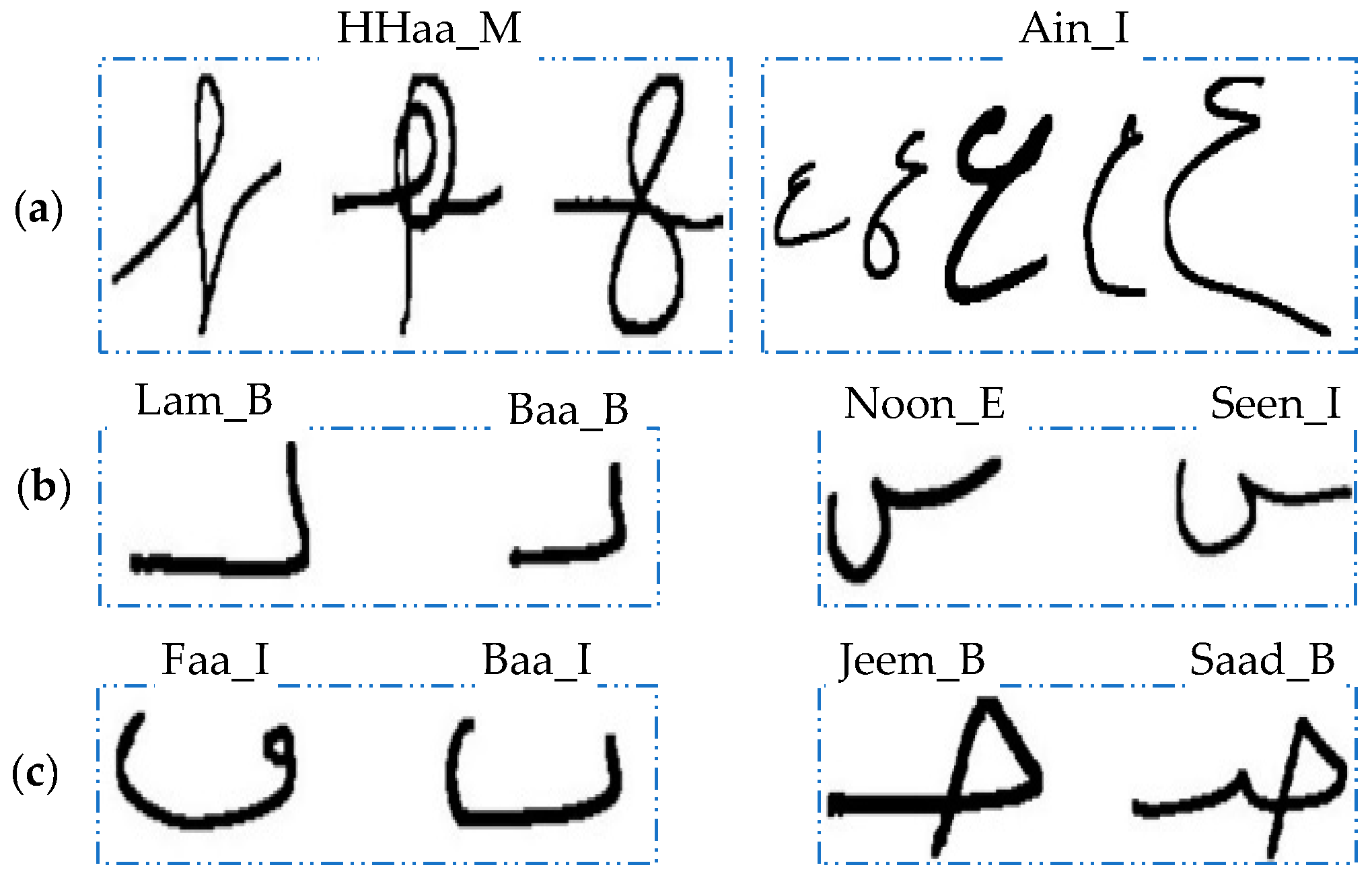

- The writing style is different for each writer. The same character can be written in various shapes as in Figure 1a.

- Certain characters are very similar in shape and are referred to as perplexing characters; Figure 1b shows a representative set of pairs of perplexing characters.

- Different characters have very similar shapes with a slight modification, as in Figure 1c.

3. Method

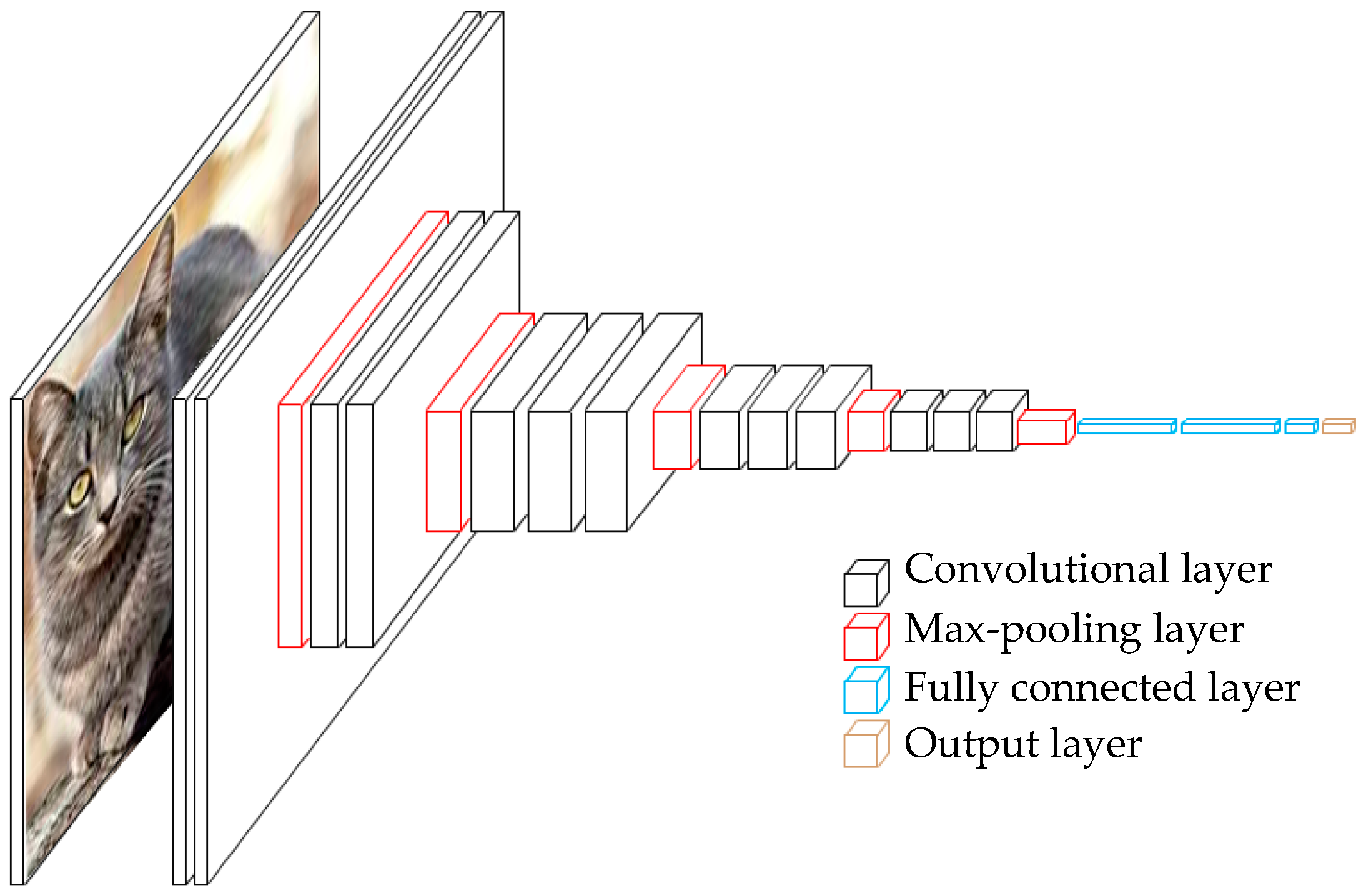

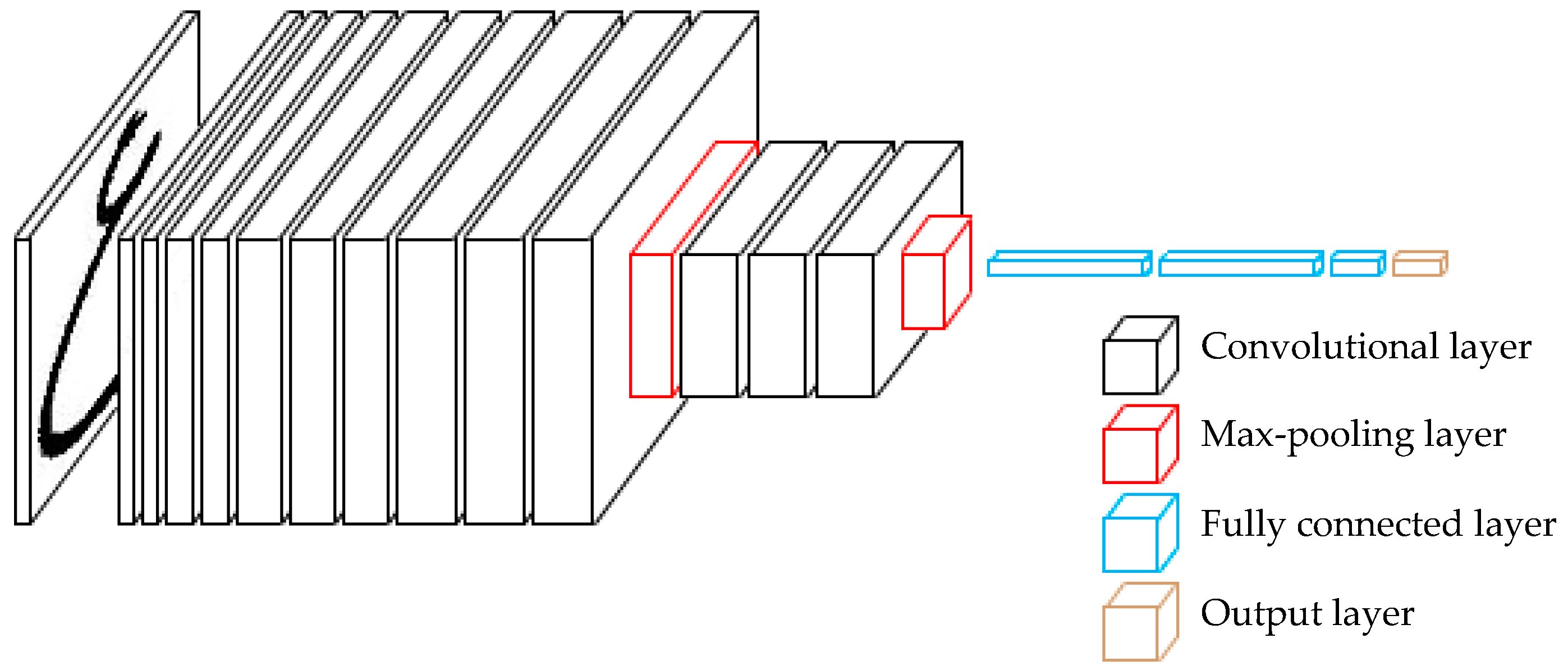

3.1. VGGNet

3.2. Alphanumeric VGG

3.3. Overfitting in Deep Network

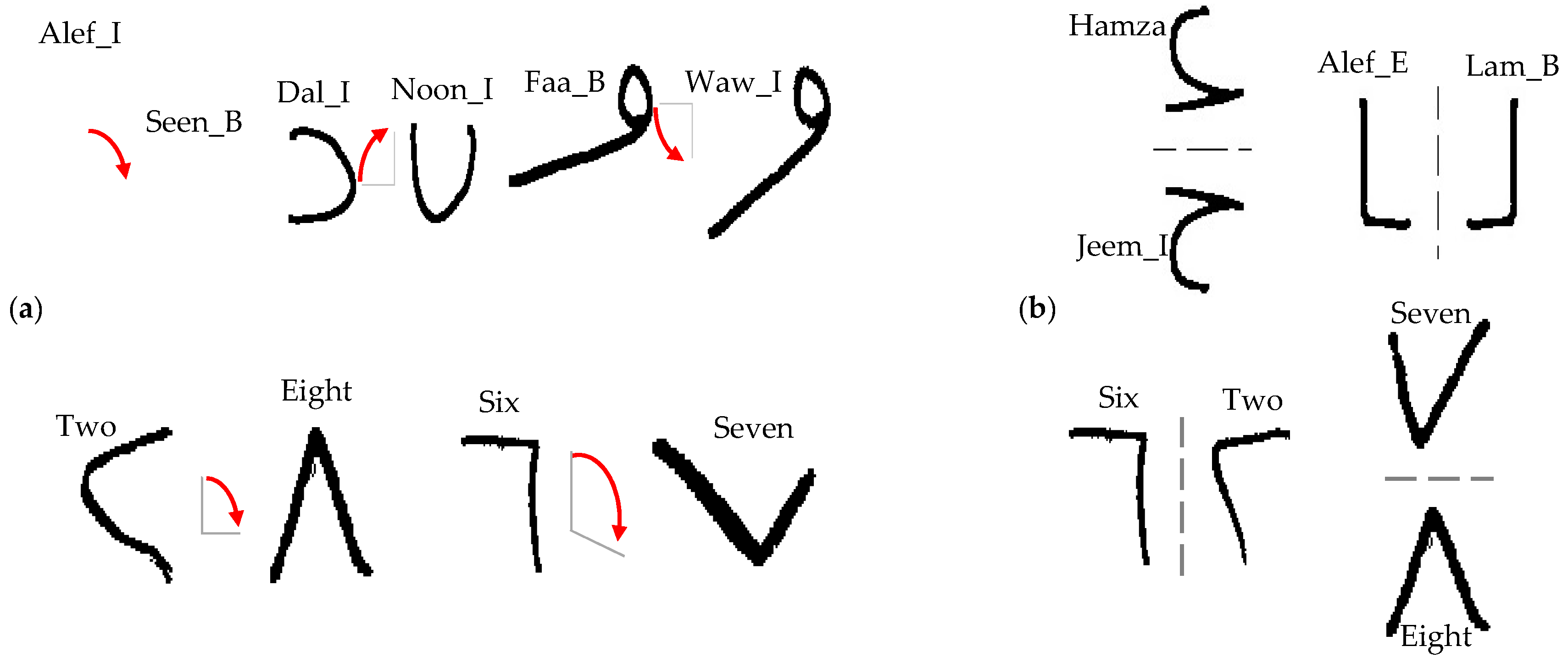

[…] “[glyph]” and “[glyph]” digits, and between “[glyph]” and “[glyph]”, as shown in Figure 4. If flipping is used, the network would be unable to distinguish between handwritten “[glyph]” and “[glyph]”. On HACDB (the Arabic handwritten characters database), the network may become incapable of discerning many characters if the training set is augmented by indiscriminate flipping or rotating of images. Figure 4 shows some characters that can be badly affected if augmentation is applied blindly. Figure 4a shows, for example, that the character Dal_Isolated can become the same as the character Noon_Isolated when rotation is applied. From Figure 4b, if flipping is used, the network would be unable to distinguish properly between handwritten Lam_beginning and Alef_End, and between handwritten Hamza and Jeem_Isolated [18]. However, the main data augmentation employed in this work is for the HACDB database, which contains 6600 shapes of handwritten characters.
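The restriction described above (no flipping, no large rotations) can be illustrated with a label-preserving augmentation routine. This is a minimal sketch, not the authors' pipeline: the function names, the use of small translations as the only transform, the 2-pixel shift limit, and the reading of "10-fold" as ten images per original (the original plus nine variants) are all assumptions made for illustration.

```python
import random


def shift_image(image, dy, dx):
    """Translate a 2-D image (list of rows) by (dy, dx), padding with 0 (background)."""
    h, w = len(image), len(image[0])
    shifted = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                shifted[ny][nx] = image[y][x]
    return shifted


def augment(images, labels, copies=10, max_shift=2, seed=0):
    """Return each original followed by (copies - 1) randomly shifted variants.

    Only small translations are applied (assumption): no flips and no
    rotations, so a shape cannot be mapped onto a different character class.
    """
    rng = random.Random(seed)
    out_images, out_labels = [], []
    for image, label in zip(images, labels):
        out_images.append(image)
        out_labels.append(label)
        for _ in range(copies - 1):
            dy = rng.randint(-max_shift, max_shift)
            dx = rng.randint(-max_shift, max_shift)
            out_images.append(shift_image(image, dy, dx))
            out_labels.append(label)
    return out_images, out_labels


if __name__ == "__main__":
    # Toy 28 x 28 binary image with an 8 x 8 square standing in for a character.
    img = [[0] * 28 for _ in range(28)]
    for y in range(10, 18):
        for x in range(10, 18):
            img[y][x] = 1
    aug_images, aug_labels = augment([img], ["Dal_Isolated"])
    print(len(aug_images), len(aug_labels))
```

Transforms that can change the class of a character, such as the flips and rotations discussed above, are simply left out of the transform set rather than filtered afterwards.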

3.4. Error Criteria
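The two error criteria compared in the experiments are the mean squared error (MSE) and the cross-entropy error (CEE). As a reference point, the sketch below implements the standard textbook forms of both for a single sample with a one-hot target. The function names and the softmax output layer are assumptions for illustration; the exact formulation used by the authors is not reproduced here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mse(probs, target):
    """Mean squared error between predicted probabilities and a one-hot target."""
    return sum((p - t) ** 2 for p, t in zip(probs, target)) / len(probs)


def cross_entropy(probs, target, eps=1e-12):
    """Categorical cross-entropy: -sum_k t_k * log(p_k), clipped to avoid log(0)."""
    return -sum(t * math.log(max(p, eps)) for p, t in zip(probs, target))


if __name__ == "__main__":
    target = [0.0, 1.0, 0.0]
    confident = softmax([0.0, 5.0, 0.0])   # most mass on the correct class
    wrong = softmax([5.0, 0.0, 0.0])       # most mass on a wrong class
    for name, probs in (("confident", confident), ("wrong", wrong)):
        print(name, round(mse(probs, target), 4), round(cross_entropy(probs, target), 4))
```

With probabilities as inputs, MSE is bounded, whereas cross-entropy grows without limit as the probability assigned to the correct class approaches zero, so confidently wrong predictions are penalised more heavily under CEE.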

4. Experimental Results and Performance Analysis

4.1. Training Method

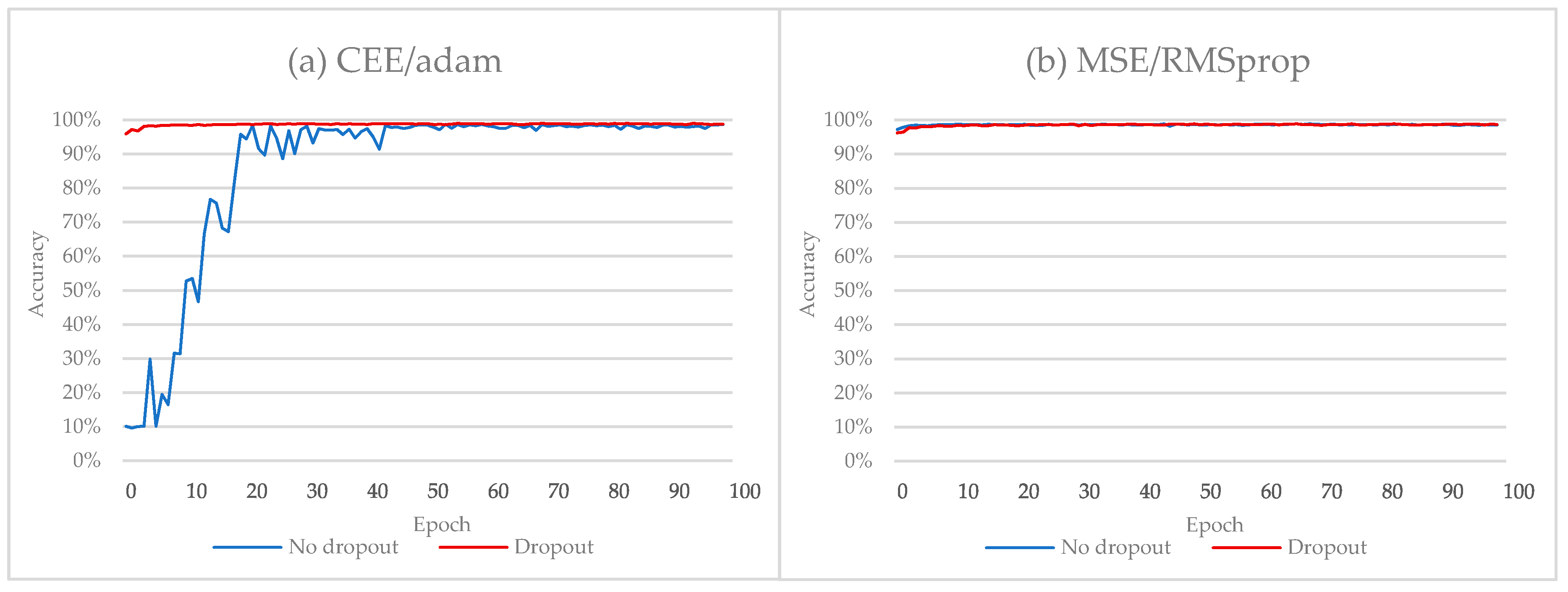

4.2. Overfitting

4.3. ADBase Database

4.3.1. The Impact of CEE Function

4.3.2. The Impact of MSE Function

4.3.3. Comparison with the State-of-the-Art

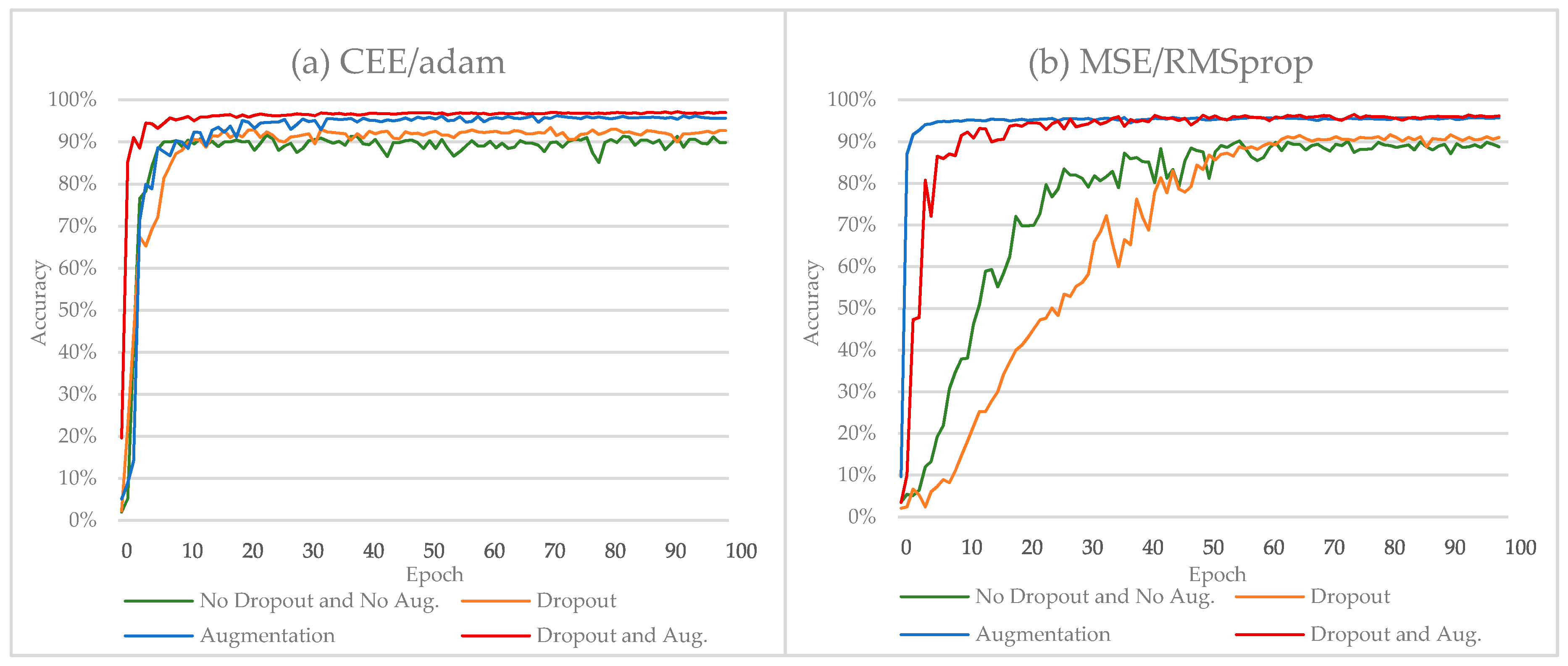

4.4. HACDB Database

4.4.1. The Impact of MSE Function

4.4.2. The Impact of CEE Function

4.4.3. Comparison with the State-of-the-Art

5. Conclusions

- Regarding the ADBase database: without dropout, we achieved classification accuracies of 98.83% using the MSE function and 98.75% using the CEE function on the validation set that does not hold on the test set. With dropout, we achieved classification accuracies of 99.57% and 99.66% using CEE and MSE, respectively. The MSE function achieved better results than the CEE function.

- Regarding the HACDB database: without dropout and augmentation, we achieved 90.61% and 91.97% classification accuracy using MSE and CEE, respectively, on the validation set that does not hold on the test set. With dropout, the classification accuracy improved slightly to 92.42% and 93.48% using MSE and CEE, respectively. We repeated the previous experiment, this time augmenting each original image 10-fold. Data augmentation alone increased the validation set accuracy substantially, from 90.61% to 95.95% using the MSE function and from 91.97% to 96.42% using CEE. The best results, however, were obtained when data augmentation and dropout were applied together, giving two state-of-the-art results: 96.58% using the MSE function and 97.32% using the CEE function.

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Ashiquzzaman, A.; Tushar, A.K. Handwritten Arabic numeral recognition using deep learning neural networks. In Proceedings of the 2017 IEEE International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Dhaka, Bangladesh, 13–14 February 2017; pp. 1–4.

2. Chergui, L.; Kef, M. SIFT descriptors for Arabic handwriting recognition. Int. J. Comput. Vis. Robot. 2015, 5, 441–461.

3. Assayony, M.O.; Mahmoud, S.A. An Enhanced Bag-of-Features Framework for Arabic Handwritten Sub-words and Digits Recognition. J. Pattern Recognit. Intell. Syst. 2016, 4, 27–38.

4. Tharwat, A.; Gaber, T.; Hassanien, A.E.; Shahin, M.; Refaat, B. Sift-based arabic sign language recognition system. In Afro-european Conference for Industrial Advancement; Springer: Berlin, Germany, 2015; pp. 359–370.

5. Chen, J.; Cao, H.; Prasad, R.; Bhardwaj, A.; Natarajan, P. Gabor features for offline Arabic handwriting recognition. In Proceedings of the 9th IAPR International Workshop on Document Analysis Systems, Cambridge, MA, USA, 9–11 June 2010; pp. 53–58.

6. Elzobi, M.; Al-Hamadi, A.; Saeed, A.; Dings, L. Arabic handwriting recognition using gabor wavelet transform and SVM. In Proceedings of the 2012 IEEE 11th International Conference on Signal Processing (ICSP), Beijing, China, 21–25 October 2012; pp. 2154–2158.

7. Elleuch, M.; Tagougui, N.; Kherallah, M. Towards Unsupervised Learning for Arabic Handwritten Recognition Using Deep Architectures. In Proceedings of the International Conference on Neural Information Processing, Istanbul, Turkey, 9–12 November 2015; pp. 363–372.

8. Zaiz, F.; Babahenini, M.C.; Djeffal, A. Puzzle based system for improving Arabic handwriting recognition. Eng. Appl. Artif. Intell. 2016, 56, 222–229.

9. Pechwitz, M.; Maddouri, S.S.; Märgner, V.; Ellouze, N.; Amiri, H. IFN/ENIT-database of handwritten Arabic words. In Proceedings of the 7th Colloque International Francophone sur l'Ecrit et le Document (CIFED), Hammamet, Tunis, 21–23 October 2002; pp. 127–136.

10. Amrouch, M.; Rabi, M. The Relevant CNNs Features Based HMM for Arabic Handwriting Recognition. Int. J. Imaging Robot. 2017, 17, 98–109.

11. Maalej, R.; Tagougui, N.; Kherallah, M. Online Arabic handwriting recognition with dropout applied in deep recurrent neural networks. In Proceedings of the 2016 IEEE 12th IAPR Workshop on Document Analysis Systems (DAS), Santorini, Greece, 11–14 April 2016; pp. 417–421.

12. Boubaker, H.; Elbaati, A.; Tagougui, N.; El Abed, H.; Kherallah, M.; Alimi, A.M. Online Arabic databases and applications. In Guide to OCR for Arabic Scripts; Springer: Berlin, Germany, 2012; pp. 541–557.

13. Al-Omari, F.A.; Al-Jarrah, O. Handwritten Indian numerals recognition system using probabilistic neural networks. Adv. Eng. Inform. 2004, 18, 9–16.

14. Abdleazeem, S.; El-Sherif, E. Arabic handwritten digit recognition. Int. J. Doc. Anal. Recognit. 2008, 11, 127–141.

15. Parvez, M.T.; Mahmoud, S.A. Arabic handwritten alphanumeric character recognition using fuzzy attributed turning functions. In Proceedings of the Workshop in Frontiers in Arabic Handwriting Recognition, 20th International Conference in Pattern Recognition (ICPR), Istanbul, Turkey, 22–26 August 2010.

16. Lawgali, A.; Angelova, M.; Bouridane, A. HACDB: Handwritten Arabic characters database for automatic character recognition. In Proceedings of the 4th European Workshop on Visual Information Processing, Paris, France, 10–12 June 2013; pp. 255–259.

17. Elleuch, M.; Maalej, R.; Kherallah, M. A New Design Based-SVM of the CNN Classifier Architecture with Dropout for Offline Arabic Handwritten Recognition. Procedia Comput. Sci. 2016, 80, 1712–1723.

18. Elleuch, M.; Kherallah, M. An Improved Arabic Handwritten Recognition System using Deep Support Vector Machines. Int. J. Multimed. Data Eng. Manag. 2016, 7, 1–20.

19. Lawgali, A. An Evaluation of Methods for Arabic Character Recognition. Int. J. Signal Process. Image Process. Pattern Recognit. 2014, 7, 211–220.

20. Zhong, Z.; Jin, L.; Xie, Z. High performance offline handwritten chinese character recognition using googlenet and directional feature maps. In Proceedings of the 2015 IEEE 13th International Conference on Document Analysis and Recognition (ICDAR), Nancy, France, 23–26 August 2015; pp. 846–850.

21. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv, 2014; arXiv:1409.1556.

22. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

23. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in neural information processing systems, Lake Tahoe, California, USA, 3–8 December 2012; pp. 1097–1105.

24. Fraser-Thomas, J.; Côté, J.; Deakin, J. Understanding dropout and prolonged engagement in adolescent competitive sport. Psychol. Sport Exerc. 2008, 9, 645–662.

25. Wu, H.; Gu, X. Towards dropout training for convolutional neural networks. Neural Netw. 2015, 71, 1–10.

26. Van Dyk, D.A.; Meng, X.-L. The art of data augmentation. J. Comput. Gr. Stat. 2001, 10, 1–50.

27. Lemley, J.; Bazrafkan, S.; Corcoran, P. Smart Augmentation-Learning an Optimal Data Augmentation Strategy. IEEE Access 2017, 5, 5858–5869.

28. Polson, N.G.; Willard, B.T.; Heidari, M. A statistical theory of deep learning via proximal splitting. arXiv, 2015; arXiv:1509.06061.

29. Kim, I.-J.; Xie, X. Handwritten Hangul recognition using deep convolutional neural networks. Int. J. Doc. Anal. Recognit. 2015, 18, 1–13.

30. Kline, D.M.; Berardi, V.L. Revisiting squared-error and cross-entropy functions for training neural network classifiers. Neural Comput. Appl. 2005, 14, 310–318.

31. Abdelazeem, S.; El-Sherif, E. The Arabic Handwritten Digits Databases ADBase & MADBase. Available online: http://datacenter.aucegypt.edu/shazeem/ (accessed on 24 August 2017).

| VGG_16 | Alphanumeric VGG_66 | Alphanumeric VGG_10 |
|---|---|---|
| Input 224 × 224 RGB image | Input 28 × 28 binary image | Input 28 × 28 binary image |
| Convolutional 3_64 | Convolutional 3_8 | Convolutional 3_8 |
| Convolutional 3_64 | Convolutional 3_8 | Convolutional 3_8 |
| Maxpooling | Maxpooling | Maxpooling |
| Convolutional 3_128 | Convolutional 3_16 | Convolutional 3_16 |
| Convolutional 3_128 | Convolutional 3_16 | Convolutional 3_16 |
| Maxpooling | Maxpooling | Maxpooling |
| Convolutional 3_256 | Convolutional 3_32 | Convolutional 3_32 |
| Convolutional 3_256 | Convolutional 3_32 | Convolutional 3_32 |
| Convolutional 3_256 | Convolutional 3_32 | Convolutional 3_32 |
| Maxpooling | Maxpooling | Maxpooling |
| Convolutional 3_512 | Convolutional 3_64 | Convolutional 3_64 |
| Convolutional 3_512 | Convolutional 3_64 | Convolutional 3_64 |
| Convolutional 3_512 | Convolutional 3_64 | Convolutional 3_64 |
| Maxpooling | Maxpooling | Maxpooling |
| Convolutional 3_512 | Convolutional 3_64 | Convolutional 3_64 |
| Convolutional 3_512 | Convolutional 3_64 | Convolutional 3_64 |
| Convolutional 3_512 | Convolutional 3_64 | Convolutional 3_64 |
| Maxpooling | Maxpooling | Maxpooling |
| FC-4096 | FC-512 | FC-512 |
| FC-4096 | FC-512 | FC-512 |
| FC-1000 | FC-66 | FC-10 |

| Network | VGG_16 | Alphanumeric VGG-66 | Alphanumeric VGG-10 |
|---|---|---|---|
| Number of parameters | 138,357,544 | 2,133,082 | 2,104,354 |
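The parameter counts above can be checked by summing weights and biases layer by layer. The sketch below is an illustrative calculation, not code from the paper. For the two Alphanumeric VGG networks it assumes a single input channel and a 7 × 7 × 64 feature map entering the first fully connected layer; that size is inferred because it reproduces the reported totals exactly, and it corresponds to a 28 × 28 input halved twice rather than five times, so the effective pooling configuration is an open assumption.

```python
def conv_params(in_ch, out_ch, kernel=3):
    """Weights plus biases of a kernel x kernel convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units, out_units):
    """Weights plus biases of a fully connected layer."""
    return in_units * out_units + out_units


def count_params(in_ch, conv_widths, flat_units, dense_widths):
    """Total trainable parameters of a plain conv stack followed by FC layers."""
    total, prev = 0, in_ch
    for width in conv_widths:
        total += conv_params(prev, width)
        prev = width
    prev = flat_units  # size of the flattened feature map fed to the first FC layer
    for width in dense_widths:
        total += dense_params(prev, width)
        prev = width
    return total


# 13 convolutional layers grouped 2-2-3-3-3, as in the architecture table.
VGG16_CONVS = [64] * 2 + [128] * 2 + [256] * 3 + [512] * 6
ALNUM_CONVS = [8] * 2 + [16] * 2 + [32] * 3 + [64] * 6

vgg16 = count_params(3, VGG16_CONVS, 7 * 7 * 512, [4096, 4096, 1000])
# Assumption: 1 input channel and a 7 x 7 x 64 map before the first FC layer.
vgg66 = count_params(1, ALNUM_CONVS, 7 * 7 * 64, [512, 512, 66])
vgg10 = count_params(1, ALNUM_CONVS, 7 * 7 * 64, [512, 512, 10])

print(vgg16, vgg66, vgg10)  # 138357544 2133082 2104354
```

Under these assumptions, roughly three quarters of the parameters in the reduced networks sit in the first fully connected layer (3136 × 512 weights), which is consistent with the two variants differing only by the size of the output layer.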

| Arabic digit | ١ | ٢ | ٣ | ٤ | ٥ | ٦ | ٧ | ٨ | ٩ | ٠ |
|---|---|---|---|---|---|---|---|---|---|---|
| English digit | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 0 |

| Learning Method | No Dropout | Dropout |
|---|---|---|
| CEE/Adam | 98.83% | 99.57% |
| MSE/RMSprop | 98.75% | 99.66% |

| Author(s) | Method | Accuracy |
|---|---|---|
| Proposed | Alphanumeric VGG/MSE | 99.66% |
| Proposed | Alphanumeric VGG/CEE | 99.57% |
| Abdelazeem et al. [14] | SVM with RBF kernel | 99.48% |
| Parvez et al. [15] | Fuzzy Turning Function | 97.17% |

| Character | Character |
|---|---|
| Ain (ع) | Raa (ر) |
| Alef (ا) | Saad (ص) |
| Baa (ب) | Seen (س) |
| Dal (د) | TTaa (ط) |
| Faa (ف) | Waw (و) |
| HHaa (ه) | Yaa (ى) |
| Hamza (ء) | Alef_Lam_Jeem (الحـ) |
| Jeem (ج) | Lam_Alef (لا) |
| Kaf (ك) | Lam_Jeem (لحـ) |
| Lam (ل) | Lam_Meem (لمـ) |
| Meem (م) | Meem_Jeem (محـ) |
| Noon (ن) | Lam_Meem_Jeem (لمحـ) |

| Learning Method | No Dropout and No Augmentation | Dropout | Augmentation | Dropout and Augmentation |
|---|---|---|---|---|
| CEE/Adam | 91.97% | 93.48% | 96.42% | 97.32% |
| MSE/RMSprop | 90.61% | 92.42% | 95.95% | 96.58% |
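Accuracy gains of a few points are easier to compare when expressed as a reduction in error rate. The short sketch below does that arithmetic for the HACDB accuracies in the table above; the helper names and the dictionary layout are illustrative choices, and only the accuracy values come from the source.

```python
def error_rate(accuracy_pct):
    """Error rate in percent for a given accuracy in percent."""
    return 100.0 - accuracy_pct


def relative_error_reduction(baseline_pct, improved_pct):
    """Fraction of the baseline errors removed by the improved setting."""
    base = error_rate(baseline_pct)
    return (base - error_rate(improved_pct)) / base


# Accuracies (%) copied from the HACDB results table.
HACDB = {
    "CEE": {"baseline": 91.97, "dropout": 93.48, "augmentation": 96.42, "both": 97.32},
    "MSE": {"baseline": 90.61, "dropout": 92.42, "augmentation": 95.95, "both": 96.58},
}

if __name__ == "__main__":
    for loss, row in HACDB.items():
        for setting in ("dropout", "augmentation", "both"):
            gain = row[setting] - row["baseline"]
            rer = relative_error_reduction(row["baseline"], row[setting])
            print(f"{loss:3s} {setting:12s} +{gain:.2f} points, {rer:.1%} fewer errors")
```

Read this way, combining dropout with augmentation removes close to two-thirds of the baseline errors for both loss functions, with augmentation accounting for most of that reduction.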

| Authors | Method | Accuracy |
|---|---|---|
| Proposed | Alphanumeric VGG/CEE | 97.32% |
| Proposed | Alphanumeric VGG/MSE | 96.58% |
| Elleuch et al. [7] | DBN | 96.36% |
| Elleuch et al. [17] | CNN + SVM | 94.17% |
| Elleuch et al. [18] | Deep SVM | 91.36% |
| Lawgali [19] | DCT + ANN | 75.31% |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mudhsh, M.; Almodfer, R. Arabic Handwritten Alphanumeric Character Recognition Using Very Deep Neural Network. Information 2017, 8, 105. https://doi.org/10.3390/info8030105
