1. Introduction

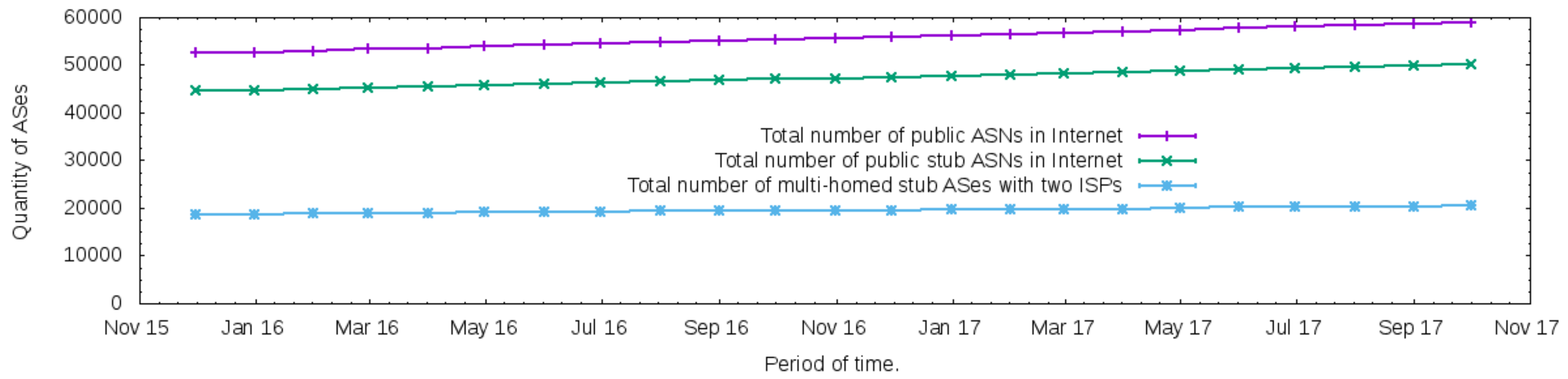

The Internet is a collection of tens of thousands of independently operated networks. In Internet jargon, these networks are called Autonomous Systems (ASes). An AS can be an Internet Service Provider (ISP), a campus, a content provider, or any other independently operated network. ASes interconnect by running an inter-domain routing protocol that exchanges network reachability information. The Border Gateway Protocol (BGP) [1] is the current standard for inter-domain routing on the Internet and provides reachability information among ASes.

BGP is one of the most successful protocols on the Internet. However, since its inception, many researchers have pointed out issues with BGP, e.g., the lack of end-to-end service guarantees, long convergence times, and security problems [2]. Furthermore, BGP is well known to suffer from “ossification”: the architecture becomes so dependent on the protocol that new features are inherently hard to introduce into the network.

In BGP’s case, ossification stems from economic reasons combined with the need to ensure backward compatibility, since there is no flag day on which to switch to a new architecture. Thus, all BGP appliances have to run the same version of the protocol to operate properly; otherwise, anomalies may occur in the network (e.g., BGP black holes). Hence, any new BGP feature must require minimal integration effort in heterogeneous networks and must interoperate across different administrative domains (e.g., the Internet). These requirements have frustrated new proposals to evolve BGP and the inter-domain routing ecosystem [3].

Although BGP has some mechanisms to control inbound traffic, such as the Multi-Exit Discriminator (MED), Communities, and AS-Path Prepending, the effectiveness of these mechanisms is unclear and not guaranteed, because they are indirect approaches: BGP inbound traffic control mechanisms try to influence other ASes to change their route preferences, and often the desired traffic distribution cannot be achieved. Another difficulty in manipulating inter-domain traffic is BGP’s destination-based forwarding paradigm [1]. This approach limits the granularity with which traffic can be selected, since the forwarding decision for an IP packet relies only on the destination address field of the IP header.
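To make the granularity limitation concrete, the following minimal Python sketch models destination-based forwarding as a longest-prefix match. The prefixes (documentation address ranges) and next-hop names are illustrative placeholders, not values from this work.

```python
import ipaddress

# Hypothetical forwarding table: destination prefix -> next hop.
# Destination-based forwarding keys ONLY on the destination prefix.
FIB = {
    ipaddress.ip_network("203.0.113.0/24"): "peer-A",
    ipaddress.ip_network("203.0.113.128/25"): "peer-B",
    ipaddress.ip_network("0.0.0.0/0"): "upstream",
}

def lookup(dst: str) -> str:
    """Longest-prefix match on the destination address only."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in FIB if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return FIB[best]

# Two packets from different sources toward the same destination
# necessarily get the same next hop: the source is never consulted.
packets = [("198.51.100.7", "203.0.113.200"), ("192.0.2.9", "203.0.113.200")]
for src, dst in packets:
    print(src, "->", dst, "via", lookup(dst))  # both lines end with "via peer-B"
```

Because `lookup` never receives the source address, no entry in such a table can steer traffic from different origins onto different paths.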

One promising approach to enabling network architectures to evolve is Software Defined Networking (SDN). SDN emerged as a new paradigm that allows network engineers to develop new network designs, and it includes features desirable for this evolution, such as open programmable interfaces on networking devices, the separation between the control and forwarding planes, and innovation through applications executed in the control plane [4,5,6,7].

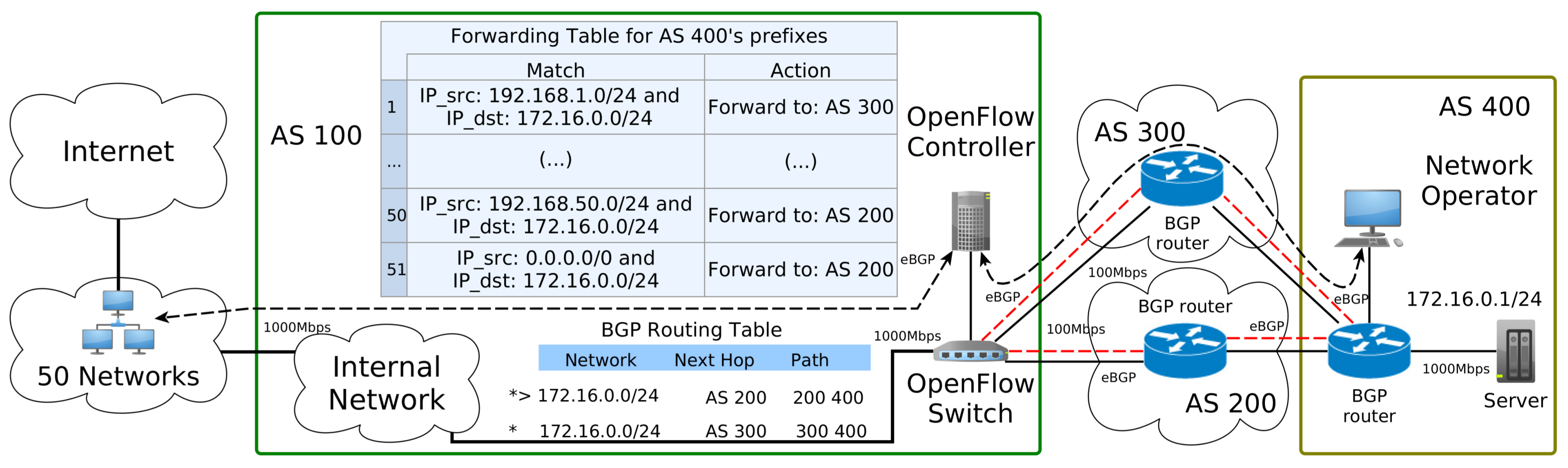

A notable SDN technology is the OpenFlow (OF) protocol. OF is an open programmable interface for SDN that has gained momentum in academia and industry over the last decade [8]. OF is flow-oriented, meaning that a sequence of packets identified by a common set of header field values follows a given path. Thus, OF can provide fine-grained forwarding capabilities to the network by matching multiple TCP/IP header fields. This work applies SDN concepts and uses OpenFlow capabilities to compose a new network architecture to evolve inter-domain routing systems.
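For contrast with destination-only forwarding, here is a minimal sketch of an OpenFlow-style flow table that matches on several header fields with priorities. It is a simplified Python model, not the OpenFlow wire format or any controller API; the field names mirror OpenFlow's `ipv4_src`, `ipv4_dst`, and `tcp_dst` match fields, and the entries are illustrative.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class FlowEntry:
    """Simplified flow entry; None in a match field means 'wildcard'."""
    priority: int
    ipv4_src: Optional[str]  # prefix, e.g. "198.51.100.0/24"
    ipv4_dst: Optional[str]
    tcp_dst: Optional[int]
    out_port: int

def field_matches(prefix: Optional[str], addr: str) -> bool:
    return prefix is None or ipaddress.ip_address(addr) in ipaddress.ip_network(prefix)

def flow_lookup(table: List[FlowEntry], src: str, dst: str, dport: int) -> Optional[int]:
    """Return the out_port of the highest-priority entry matching all fields."""
    candidates = [
        e for e in table
        if field_matches(e.ipv4_src, src)
        and field_matches(e.ipv4_dst, dst)
        and (e.tcp_dst is None or e.tcp_dst == dport)
    ]
    if not candidates:
        return None  # table miss; a real switch applies its table-miss behavior
    return max(candidates, key=lambda e: e.priority).out_port

table = [
    FlowEntry(10, None, "203.0.113.0/24", None, out_port=1),               # destination only
    FlowEntry(20, "198.51.100.0/24", "203.0.113.0/24", None, out_port=2),  # source + destination
    FlowEntry(30, "198.51.100.0/24", "203.0.113.0/24", 443, out_port=3),   # plus TCP port
]

print(flow_lookup(table, "192.0.2.1", "203.0.113.5", 80))     # 1
print(flow_lookup(table, "198.51.100.7", "203.0.113.5", 80))  # 2
print(flow_lookup(table, "198.51.100.7", "203.0.113.5", 443)) # 3
```

The same destination can therefore be reached through different output ports depending on the source prefix or transport port, which is the fine-grained control that destination-based forwarding lacks.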

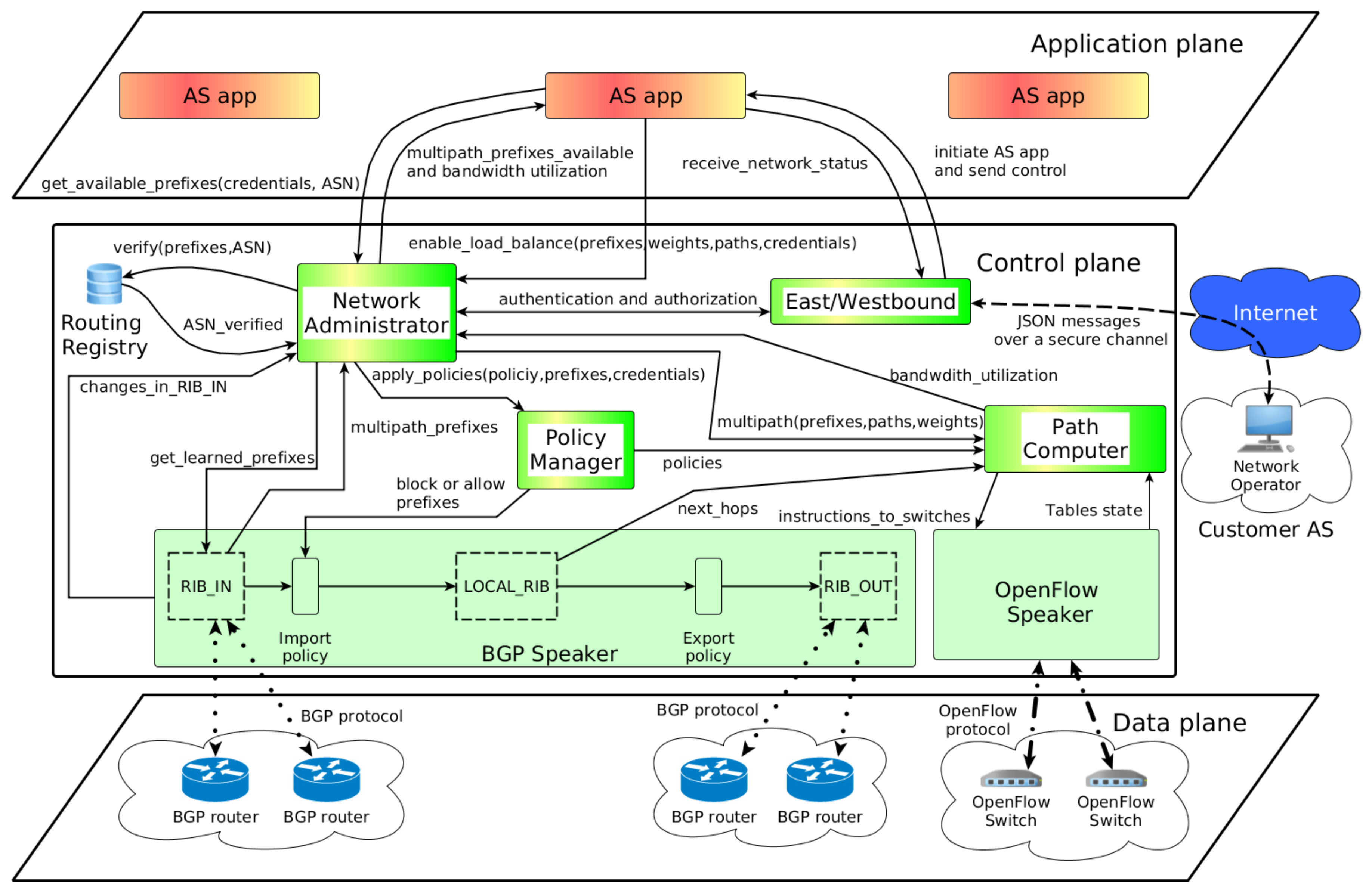

Here, the proposal is to investigate an architecture to manage inbound traffic in an inter-domain routing system using SDN technologies. The main idea is to design new mechanisms, deployed in transit ASes (often ISPs), that allow a customer AS to execute control tasks for managing how incoming traffic reaches its network. Accordingly, the main contributions of this work are:

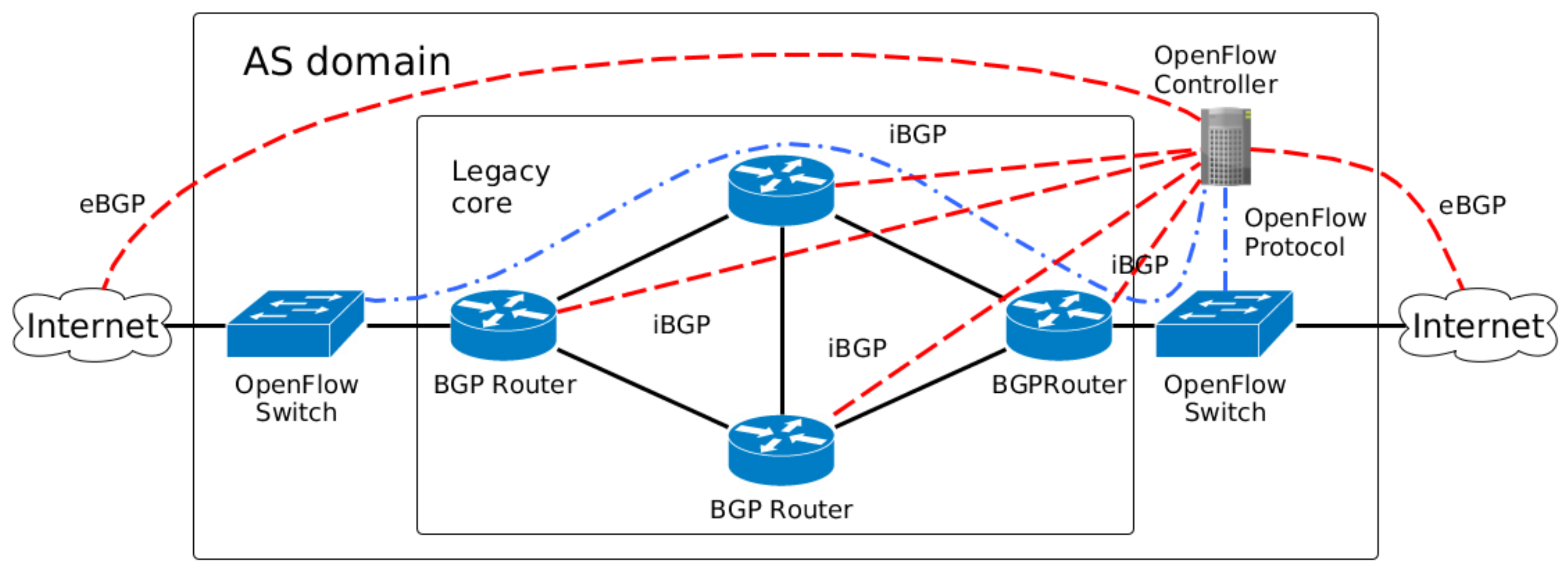

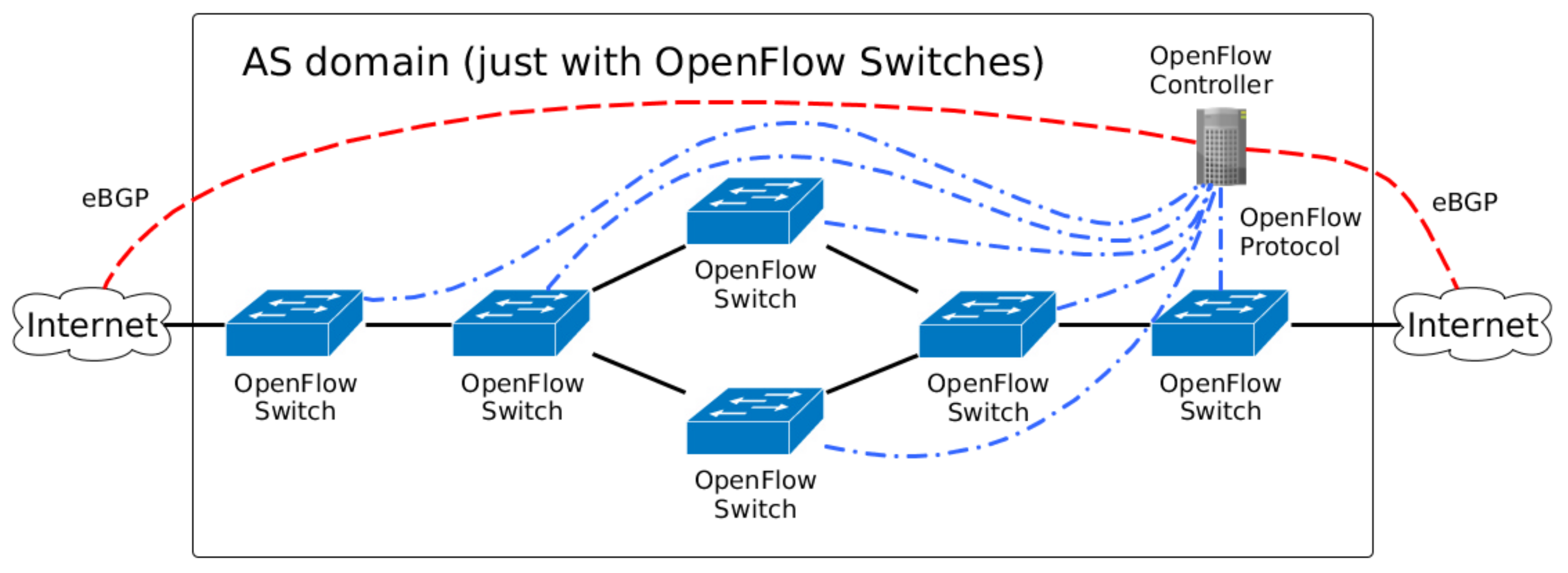

Presenting and describing an architecture that uses OF networks and is compatible with the current inter-domain routing protocol (BGP);

Exploring OF features for network traffic engineering tasks in inter-domain routing; and

Analyzing how the incoming traffic can be managed using the proposed architecture and BGP.

The remainder of the text is organized as follows. Section 2 discusses related works on SDN solutions for inter-domain routing, and Section 3 details the proposed architecture. Then, Section 4 describes how ASes can apply inbound control to manage network traffic toward their networks using SDN applications executed inside the architecture, and Section 5 evaluates the proposal with a proof of concept. Finally, Section 6 provides further discussion of the architecture, and Section 7 presents the final thoughts of this work.

2. Related Works

Applying BGP’s inbound traffic engineering techniques (such as Selective Advertisement or AS-Path Prepending) is a counterproductive approach, as these techniques do not guarantee their effectiveness. Moreover, these BGP techniques try to influence the routing decisions of external ASes to obtain the desired inbound traffic distribution; hence, network operators that use them are led into a trial-and-error process in which each AS manipulates its own routing system to fulfill its business goals. Indeed, inter-domain traffic engineering can be seen as a problem of conflicting interests, in which the interactions between ASes can be modeled using game theory and nonlinear programming [9].

Approaches that overcome BGP’s limitations in controlling inbound traffic within traditional networks, where all the network logic is embedded into network appliances distributed across the topology, can be classified into those using a type of overlay network [10,11,12], those using a new network protocol [13,14], and those combining both [15]. All these works share the drawbacks of requiring protocols embedded in network appliances or of adding overhead to the network management activities needed to support them. Medium and large networks usually have a myriad of network appliances, so deploying solutions based on traditional approaches can lead to high CAPEX (capital expenditure) and OPEX (operational expenditure). In contrast, SDN has desirable features with the potential to create solutions that evolve the inter-domain routing environment.

Exploring SDN concepts, the Multi-Dimension Link Vector (MLV) [16] presented a new mechanism to exchange network views. MLV enabled SDN networks to improve Internet routing flexibility in an inter-domain network federation by using a link vector algorithm to compose a new representation of the inter-domain network state. With a link vector data structure, the network operator could decide the inter-domain routes. Even though MLV was a proposal to evolve inter-domain routing among SDN networks, it was not compatible with BGP. This incompatibility imposed a practical limitation on the wide use of MLV, since SDN networks have not yet been broadly adopted for inter-domain routing.

Regarding integration between SDN controllers and BGP, almost every modern SDN controller has mechanisms to exchange BGP information with other BGP speakers (e.g., legacy routers). For example, the ONF ATRIUM project proposed a framework to support BGP using the ONOS controller [17] and Quagga [18]. Another widely used SDN controller, Ryu [19], supports interworking between OpenFlow and BGP: once its BGP speaker component is properly installed in the Ryu SDN framework, the controller can establish BGP sessions with other BGP speakers. Therefore, inter-domain proposals that are not backward compatible with BGP have little practical appeal. The architecture proposed in this paper is compatible with BGP and provides mechanisms to override BGP’s default behavior when required.

One proposal that allows BGP rules to be overridden by SDN rules is the Software-Defined Internet Exchange (SDX) [20], along with its extension, the Industrial-Scale Software Defined Internet Exchange Point (iSDX) [21]. These proposals brought the centralized control of SDN into an Internet Exchange Point (IXP), enabling more flexible forwarding policies, a smaller forwarding table size in the OpenFlow devices used by IXP participants, and end-to-end QoS enforcement. However, they were not designed for other types of network environments. In contrast, this paper proposes an architecture for transit ASes, especially ISPs, that gives other ASes management capabilities to control their inbound network traffic. Furthermore, the new routing logic operates directly between the customers and the transit AS that deploys the proposed architecture.

The Route Chaining System (RCS) [22] introduced the use of SDN networks to let ASes select routes that do not follow the standard BGP algorithm, exploring Internet path diversity. RCS focuses on controlling network traffic in transit ASes from the source AS to its destinations, and it requires an inter-domain communication layer between ASes. The main drawback of RCS is the propagation of new types of prefix messages across the inter-domain environment to represent the fine-grained rules. This new inter-domain routing system dramatically increases signaling, making the solution impractical because it consumes considerable bandwidth. In contrast, the architecture proposed here aims to reduce the bandwidth used by inter-domain control messages by applying compression techniques and by exchanging the network state in binary rather than plain-text format.
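The following Python sketch illustrates the general idea of encoding network state in a compact binary layout and then compressing it, compared with a plain-text (JSON) encoding. The record fields, their sizes, and the choice of zlib are assumptions made for illustration; the message format and compression algorithm of the proposed architecture are not specified here.

```python
import json
import socket
import struct
import zlib

# Hypothetical network-state records: (prefix, prefix length, path id, utilization %).
records = [("203.0.113.0", 24, 1, 42), ("198.51.100.0", 24, 2, 87), ("192.0.2.0", 25, 3, 5)] * 50

def encode_text(recs) -> bytes:
    """Plain-text (JSON) encoding of the state."""
    return json.dumps(
        [{"prefix": f"{p}/{plen}", "path": pid, "util": u} for p, plen, pid, u in recs]
    ).encode()

# Fixed-size binary record in network byte order (8 bytes total):
# 4-byte IPv4 address, 1-byte prefix length, 2-byte path id, 1-byte utilization.
RECORD = struct.Struct("!4sBHB")

def encode_binary(recs) -> bytes:
    return b"".join(RECORD.pack(socket.inet_aton(p), plen, pid, u) for p, plen, pid, u in recs)

def decode_binary(blob: bytes):
    return [(socket.inet_ntoa(a), plen, pid, u) for a, plen, pid, u in RECORD.iter_unpack(blob)]

text = encode_text(records)
binary = encode_binary(records)
compressed = zlib.compress(binary)

print("text:", len(text), "binary:", len(binary), "binary+zlib:", len(compressed))
# Round trip: decompress and decode recovers the original records.
assert decode_binary(zlib.decompress(compressed)) == records
```

The fixed 8-byte record avoids repeating field names and textual addresses in every message, and compression further removes redundancy across records.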

Furthermore, regarding routing logic, Kotronis et al. [23] proposed SIREN, a solution that offloads the routing functions of a customer AS to an external trusted contractor, which becomes responsible for optimizing and managing its customers’ network traffic. The main idea of SIREN is that, with multiple customers, the contractor would eventually obtain the global inter-domain network state, identify conflicts in inter-domain policies, and take actions to optimize customer traffic. SIREN goes in the opposite direction from this paper, which aims to give more control to the customer ASes that own and advertise their prefixes. With the architecture of this work, a customer AS can manage its network traffic on the Internet in ways that cannot be achieved with BGP alone. In other words, SIREN pushes more control to ISPs to manage their customers’ inter-domain routing; here, customers have more control over inter-domain routing, since applying the routing logic is the customer’s responsibility.

4. Controlling Inbound Traffic

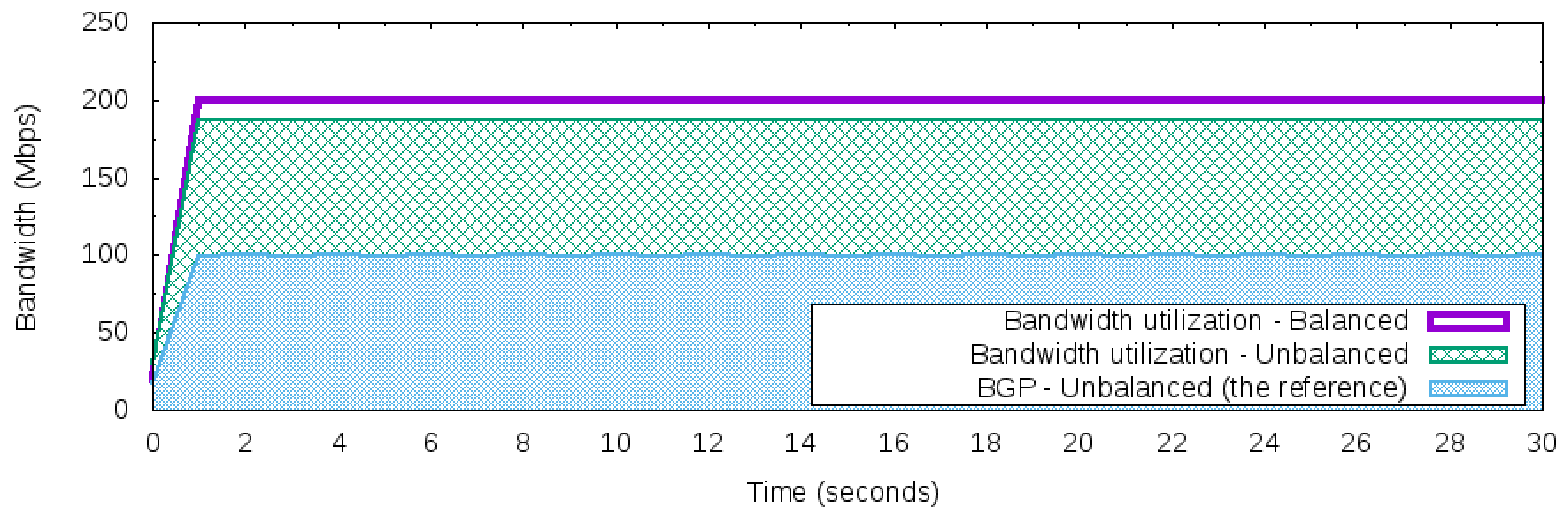

The control of inbound traffic is executed in the application plane of the proposed architecture. A customer AS initializes the AS app to manage its network prefixes at the transit AS, instructing how the traffic toward them will be handled. The AS app also receives the network status from the proposed architecture, such as the bandwidth utilization of each prefix owned by the Customer AS or the available paths to reach its networks. With that information, the network operator of the Customer AS can send control information that configures how much traffic each path will receive for each prefix in use.
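As an illustration of the information exchanged between the architecture and the AS app, the sketch below models the reported status and the control message as Python data classes. All names and fields (`PrefixStatus`, `TrafficSplit`, weights expressed as relative shares per path) are hypothetical; the message format itself is not defined in this section.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class PrefixStatus:
    """Status the architecture might report to the AS app (hypothetical fields)."""
    prefix: str
    utilization_mbps: float
    available_paths: List[str]

@dataclass
class TrafficSplit:
    """Control information from the customer AS: relative traffic share per path."""
    prefix: str
    weights: Dict[str, float] = field(default_factory=dict)

    def normalized(self) -> Dict[str, float]:
        """Convert relative weights into fractions that sum to 1."""
        total = sum(self.weights.values())
        if total <= 0:
            raise ValueError("at least one path needs a positive weight")
        return {path: w / total for path, w in self.weights.items()}

def build_split(status: PrefixStatus, weights: Dict[str, float]) -> TrafficSplit:
    """Reject weights for paths the architecture did not report as available."""
    unknown = set(weights) - set(status.available_paths)
    if unknown:
        raise ValueError(f"unknown paths for {status.prefix}: {sorted(unknown)}")
    return TrafficSplit(status.prefix, dict(weights))

status = PrefixStatus("203.0.113.0/24", 480.0, ["via-AS64500", "via-AS64501"])
split = build_split(status, {"via-AS64500": 3, "via-AS64501": 1})
print(split.normalized())  # {'via-AS64500': 0.75, 'via-AS64501': 0.25}
```

Validating the requested split against the reported paths keeps the customer from referencing paths that the BGP Speaker has not learned.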

The AS app is responsible for exploring the multiple paths available for a given prefix, if required. The information about those paths comes from the BGP Speaker component, which learns all the prefixes advertised by neighboring ASes through external BGP peering. With information about the multiple available paths, the Customer AS can indicate how its traffic will be treated and distributed across those paths.

BGP uses just one “best” next hop for each prefix, even if other valid paths to that prefix are available. BGP is not a multipath protocol, and one good reason for that is that using multiple paths on the Internet can affect the performance of Transmission Control Protocol (TCP) connections, TCP being the most widely used transport-layer protocol on the Internet [25].
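To contrast single best-path selection with the multipath view the AS app relies on, the sketch below keeps every route learned for a prefix but lets a heavily simplified BGP decision process pick only one. The two criteria shown are a small subset of the real BGP decision process, and the addresses and AS numbers are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Route:
    next_hop: str
    as_path: tuple
    local_pref: int = 100

# Routes learned via eBGP, all kept per prefix.
learned: Dict[str, List[Route]] = {
    "203.0.113.0/24": [
        Route("10.0.0.1", (64500, 64510)),
        Route("10.0.0.2", (64501, 64502, 64510)),
        Route("10.0.0.3", (64503, 64510), local_pref=90),
    ]
}

def best_route(routes: List[Route]) -> Route:
    """Simplified decision: highest LOCAL_PREF, then shortest AS_PATH.
    Real BGP applies further tie-breakers (origin, MED, eBGP over iBGP, IGP cost, ...)."""
    return min(routes, key=lambda r: (-r.local_pref, len(r.as_path)))

def all_paths(routes: List[Route]) -> List[str]:
    """What the AS app can be offered: every valid next hop, not just the best one."""
    return [r.next_hop for r in routes]

for prefix, routes in learned.items():
    print(prefix, "BGP best:", best_route(routes).next_hop, "| all:", all_paths(routes))
```

Standard BGP forwarding would send all traffic for the prefix to `10.0.0.1`, while the other two next hops remain valid but unused.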

Thus, distributing the packets of a single connection across multiple paths can degrade the performance of higher-layer protocols in the TCP/IP stack [26] (e.g., through TCP packet reordering [27]). This is often the case on the Internet, which interconnects heterogeneous network appliances and protocols and whose paths have different delays. To avoid this problem while still exploiting Internet path diversity, the proposed architecture guarantees that all IP packets sharing the same source and destination association are forwarded through the same path. Accordingly, a flow in the OpenFlow network is defined by a 2-tuple composed of the IP source prefix and the IP destination prefix. Therefore, a given TCP connection uses the same path throughout its lifetime (as long as the path remains valid and available).
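A minimal Python sketch of the 2-tuple flow definition: each packet is mapped to a (source prefix, destination prefix) key, and the first packet of each key fixes its path. The /24 prefix lengths, the class name `PathPinning`, and the path-selection callback are illustrative assumptions; in the architecture, the pinning is realized by OpenFlow flow entries rather than a Python dictionary.

```python
import ipaddress
from typing import Callable, Dict, Tuple

FlowKey = Tuple[ipaddress.IPv4Network, ipaddress.IPv4Network]

def flow_key(src_ip: str, dst_ip: str, src_len: int = 24, dst_len: int = 24) -> FlowKey:
    """Map a packet to its 2-tuple flow: (source prefix, destination prefix)."""
    src = ipaddress.ip_network(f"{src_ip}/{src_len}", strict=False)
    dst = ipaddress.ip_network(f"{dst_ip}/{dst_len}", strict=False)
    return (src, dst)

class PathPinning:
    """Pins each 2-tuple flow to a single path, so the packets of one TCP
    connection are never spread across paths with different delays."""

    def __init__(self, choose_path: Callable[[FlowKey], str]):
        self.choose_path = choose_path
        self.table: Dict[FlowKey, str] = {}

    def path_for(self, src_ip: str, dst_ip: str) -> str:
        key = flow_key(src_ip, dst_ip)
        if key not in self.table:  # first packet of this flow: choose a path once
            self.table[key] = self.choose_path(key)
        return self.table[key]

paths = iter(["path-1", "path-2"])
pinning = PathPinning(lambda key: next(paths))
a = pinning.path_for("198.51.100.7", "203.0.113.10")
b = pinning.path_for("198.51.100.99", "203.0.113.200")  # same /24s, so same flow
c = pinning.path_for("192.0.2.1", "203.0.113.10")      # different source prefix
print(a, b, c)  # path-1 path-1 path-2
```

Keying on prefixes instead of the full 5-tuple keeps the flow table small, at the cost of moving all connections between the same pair of prefixes together.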

Complementing the 2-tuple flow definition, there is also a probe test from the AS app to the customer AS that checks the health of each path in use. Thus, if an inter-domain path suffers a problem (such as a link failure) or a transient loop occurs, the AS app is notified so that it stops using the problematic path.
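The bookkeeping behind the probe test can be sketched as follows. How probes are sent and how many failures mark a path as unusable are not specified here; the rule "a path is excluded after N consecutive failed probes" and the class name `PathHealth` are assumptions for illustration.

```python
from collections import defaultdict
from typing import Iterable, List

class PathHealth:
    """Tracks probe results per path and exposes the paths that are safe to use.
    Assumed rule: a path is excluded after max_failures consecutive failed probes."""

    def __init__(self, paths: Iterable[str], max_failures: int = 3):
        self.paths = list(paths)
        self.max_failures = max_failures
        self.failures = defaultdict(int)

    def record_probe(self, path: str, ok: bool) -> None:
        # A successful probe resets the counter; a failed one increments it.
        self.failures[path] = 0 if ok else self.failures[path] + 1

    def usable_paths(self) -> List[str]:
        return [p for p in self.paths if self.failures[p] < self.max_failures]

health = PathHealth(["path-1", "path-2"], max_failures=2)
for ok in (False, False):
    health.record_probe("path-2", ok)
print(health.usable_paths())  # ['path-1']
health.record_probe("path-2", True)
print(health.usable_paths())  # ['path-1', 'path-2']
```

Requiring several consecutive failures avoids discarding a path because of a single lost probe packet.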

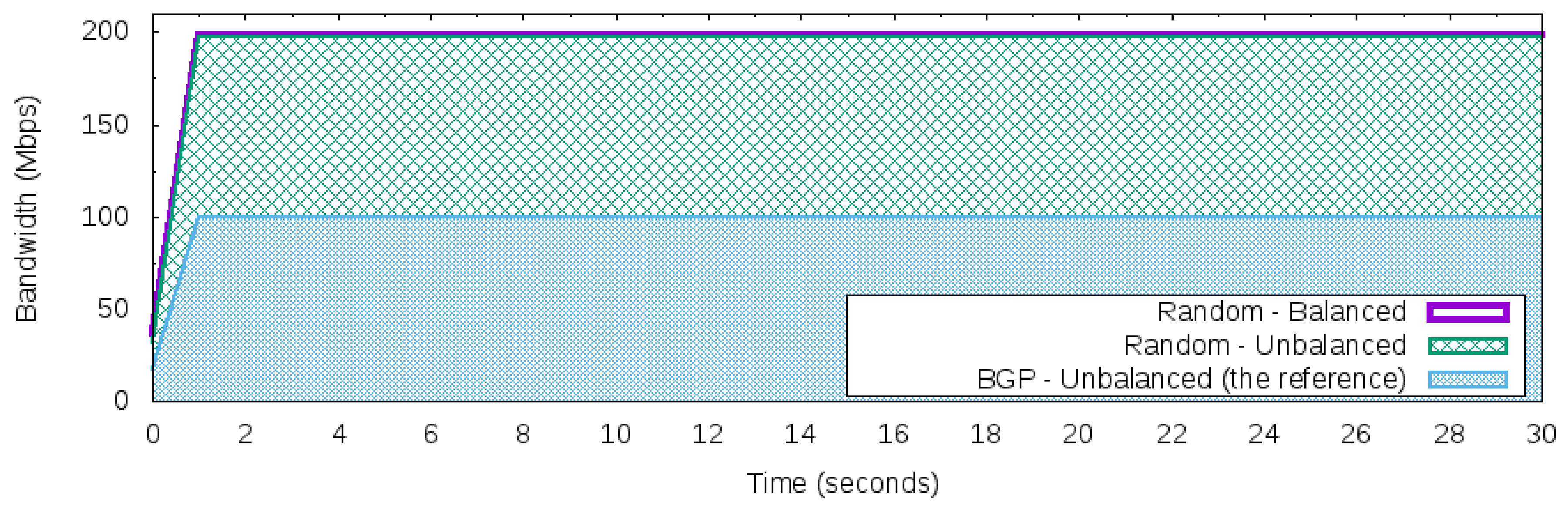

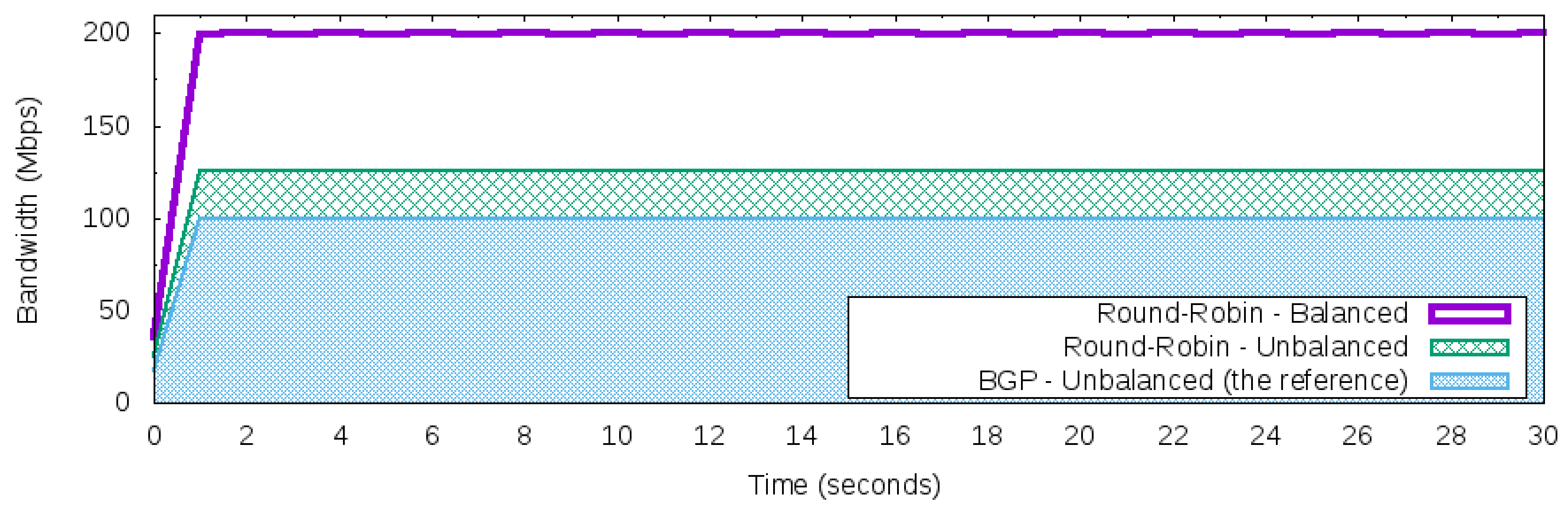

Regarding inbound traffic control, the proposed architecture allows any logic to be implemented in the AS app of a customer AS. As Silva [28] explains, there are different strategies for SDN load balancers. In this work, three load-balancing strategies were developed to be used as the control application in the AS app:

Reactive Random Load Balancer (Random)

Reactive Round-Robin Load Balancer (RR)

Reactive Round-Robin Load Balancer with Threshold (RRT)
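The three strategies can be sketched in Python as path selectors invoked once per new flow. Random and Round-Robin follow their usual definitions; since this section does not yet specify how RRT applies its threshold, the sketch assumes that paths whose utilization has reached the threshold are skipped. The class names and the utilization callback are hypothetical.

```python
import itertools
import random
from typing import Callable, List, Optional

class RandomLB:
    """Random: each new flow gets a uniformly random path."""
    def __init__(self, paths: List[str], rng: Optional[random.Random] = None):
        self.paths = paths
        self.rng = rng or random.Random()

    def pick(self) -> str:
        return self.rng.choice(self.paths)

class RoundRobinLB:
    """RR: new flows are assigned to paths in circular order."""
    def __init__(self, paths: List[str]):
        self.paths = paths
        self._cycle = itertools.cycle(paths)

    def pick(self) -> str:
        return next(self._cycle)

class RoundRobinThresholdLB(RoundRobinLB):
    """RRT (assumed interpretation): round robin that skips paths whose utilization
    has reached the threshold; falls back to plain RR if every path has."""
    def __init__(self, paths: List[str], utilization: Callable[[str], float], threshold: float):
        super().__init__(paths)
        self.utilization = utilization
        self.threshold = threshold

    def pick(self) -> str:
        for _ in range(len(self.paths)):
            path = next(self._cycle)
            if self.utilization(path) < self.threshold:
                return path
        return next(self._cycle)

paths = ["path-1", "path-2", "path-3"]
rr = RoundRobinLB(paths)
print([rr.pick() for _ in range(4)])   # ['path-1', 'path-2', 'path-3', 'path-1']
load = {"path-1": 0.2, "path-2": 0.95, "path-3": 0.4}
rrt = RoundRobinThresholdLB(paths, load.get, threshold=0.8)
print([rrt.pick() for _ in range(4)])  # ['path-1', 'path-3', 'path-1', 'path-3']
```

All three expose the same `pick()` method, so the AS app can swap strategies without changing the flow-creation logic.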

These three strategies use the reactive flow creation approach [29], in which flows are created on demand in response to a packet that requires a new flow. The baseline for comparison is BGP’s default behavior, which uses the “best” next hop to forward packets. The remainder of this section describes each approach.
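The reactive approach can be modeled as follows: the first packet of a flow that misses the flow table triggers a controller decision (one of the load-balancing strategies, or BGP’s single best next hop for the baseline), and later packets of that flow hit the installed entry. This is a toy Python model with hypothetical names; it does not use any real OpenFlow controller API and ignores flow timeouts.

```python
from itertools import cycle
from typing import Callable, Dict, Tuple

FlowKey = Tuple[str, str]  # (source prefix, destination prefix)

class ReactiveController:
    """Toy model of reactive flow creation: packets matching an installed entry are
    forwarded directly; a table miss goes to the controller (like a packet-in),
    which chooses a path and installs a new entry."""

    def __init__(self, pick_path: Callable[[], str]):
        self.pick_path = pick_path
        self.flow_table: Dict[FlowKey, str] = {}
        self.packet_ins = 0

    def handle_packet(self, key: FlowKey) -> str:
        if key in self.flow_table:   # entry already installed
            return self.flow_table[key]
        self.packet_ins += 1         # table miss: controller is involved
        path = self.pick_path()
        self.flow_table[key] = path  # install the flow on demand
        return path

def bgp_baseline(best_next_hop: str) -> Callable[[], str]:
    """Baseline: every new flow goes to BGP's single best next hop."""
    return lambda: best_next_hop

rr = cycle(["path-1", "path-2"])
ctrl = ReactiveController(lambda: next(rr))
flows = [
    ("198.51.100.0/24", "203.0.113.0/24"),
    ("192.0.2.0/24", "203.0.113.0/24"),
    ("198.51.100.0/24", "203.0.113.0/24"),  # repeat of the first flow
]
results = [ctrl.handle_packet(f) for f in flows]
print(results, ctrl.packet_ins)  # ['path-1', 'path-2', 'path-1'] 2
```

Only the first packet of each flow reaches the controller, which is what keeps reactive creation practical once a flow is established.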