A Maturity Model for Diagnosing the Capabilities of Smart Factory Solution Suppliers and Its Pilot Application

Abstract

1. Introduction

2. Theoretical Foundation and Related Models

2.1. Theoretical Foundation: Organizational Maturity and Maturity Models

2.2. Related Models

3. Methodology

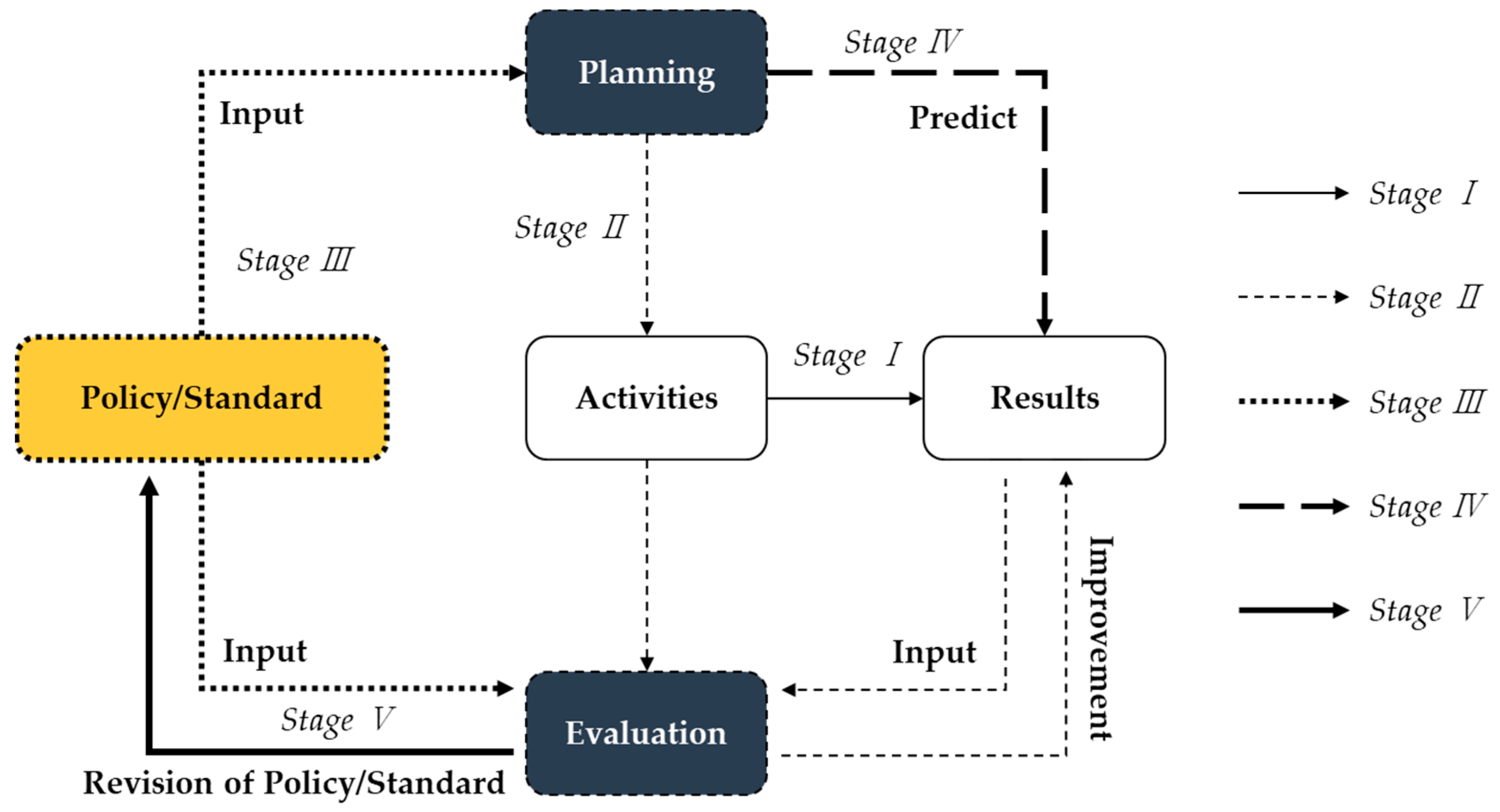

3.1. Framework to Build Our Maturity Model

- (1) Define the Scope and Objectives

- (2) Literature Review

- (3) Stakeholder Engagement

- (4) Identify Key Dimensions and Levels

- (5) Develop Assessment Criteria

- (6) Validation and Iteration

- (7) Documentation and Communication

- (8) Pilot Testing

- (9) Implementation Support

- (10) Continuous Improvement and Institutionalization

3.2. Nominal Group Technique (NGT)

3.3. Validation of the Proposed Model

4. Capability Diagnostic Model

4.1. Diagnosis Areas

4.2. Capability Maturity

4.3. Detailed Diagnostic Items and Criteria

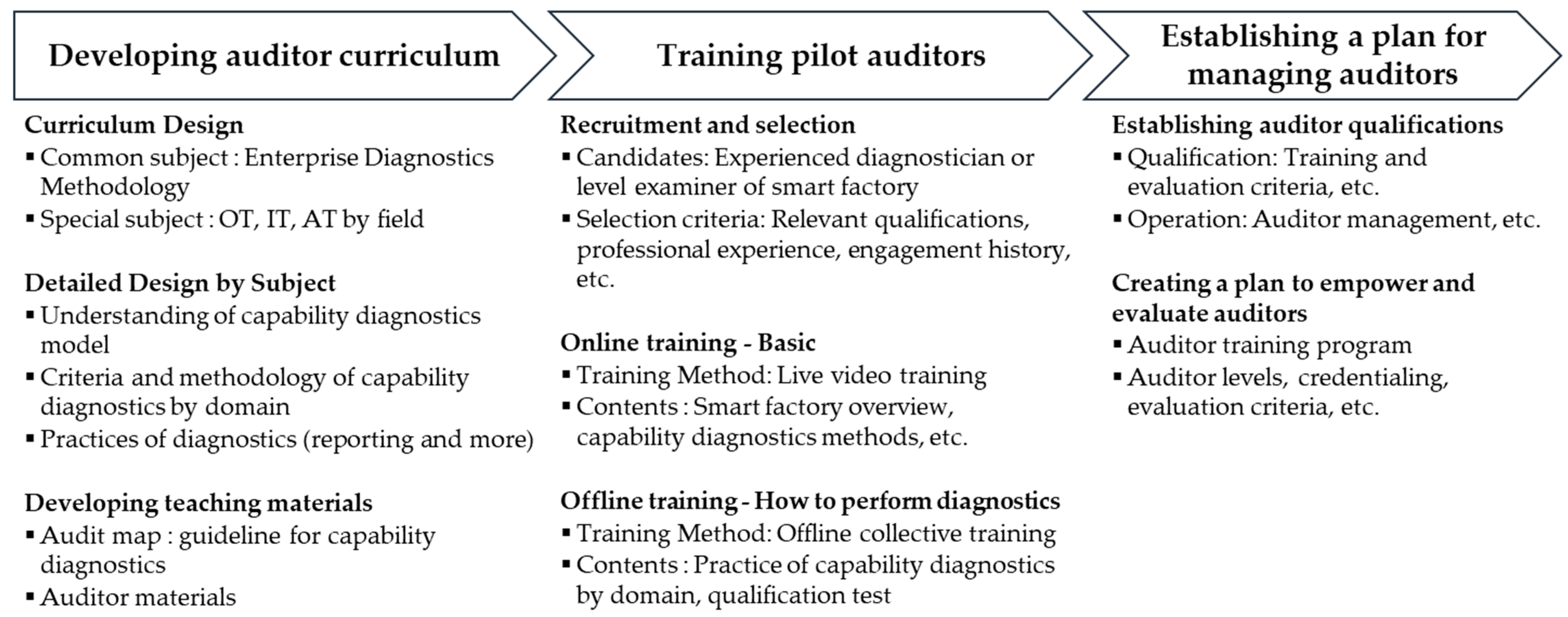

5. Evaluation Process: Auditor Training Operational Process

6. Pilot Diagnostics and the Results

6.1. Auditor Training and Supplier Selection

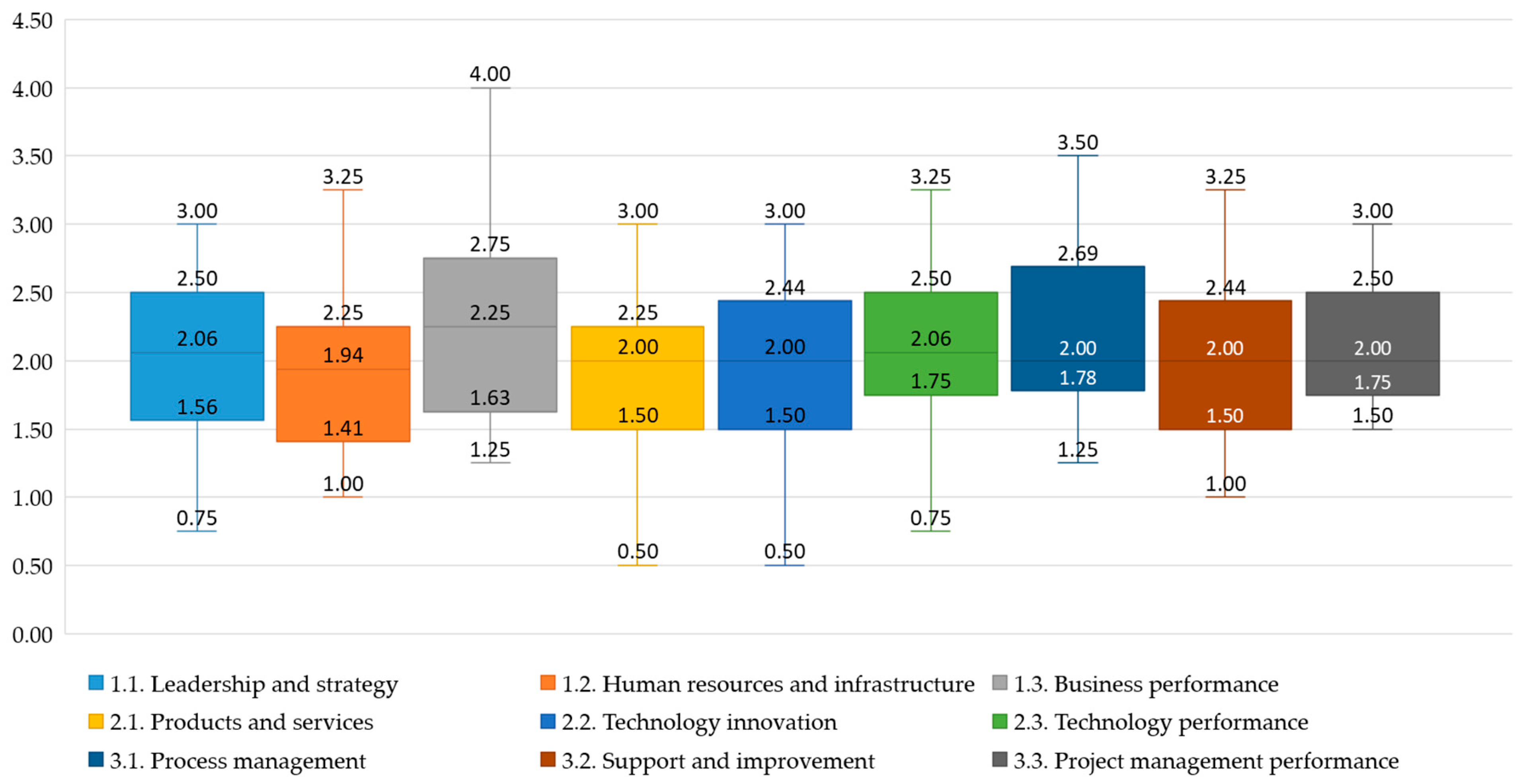

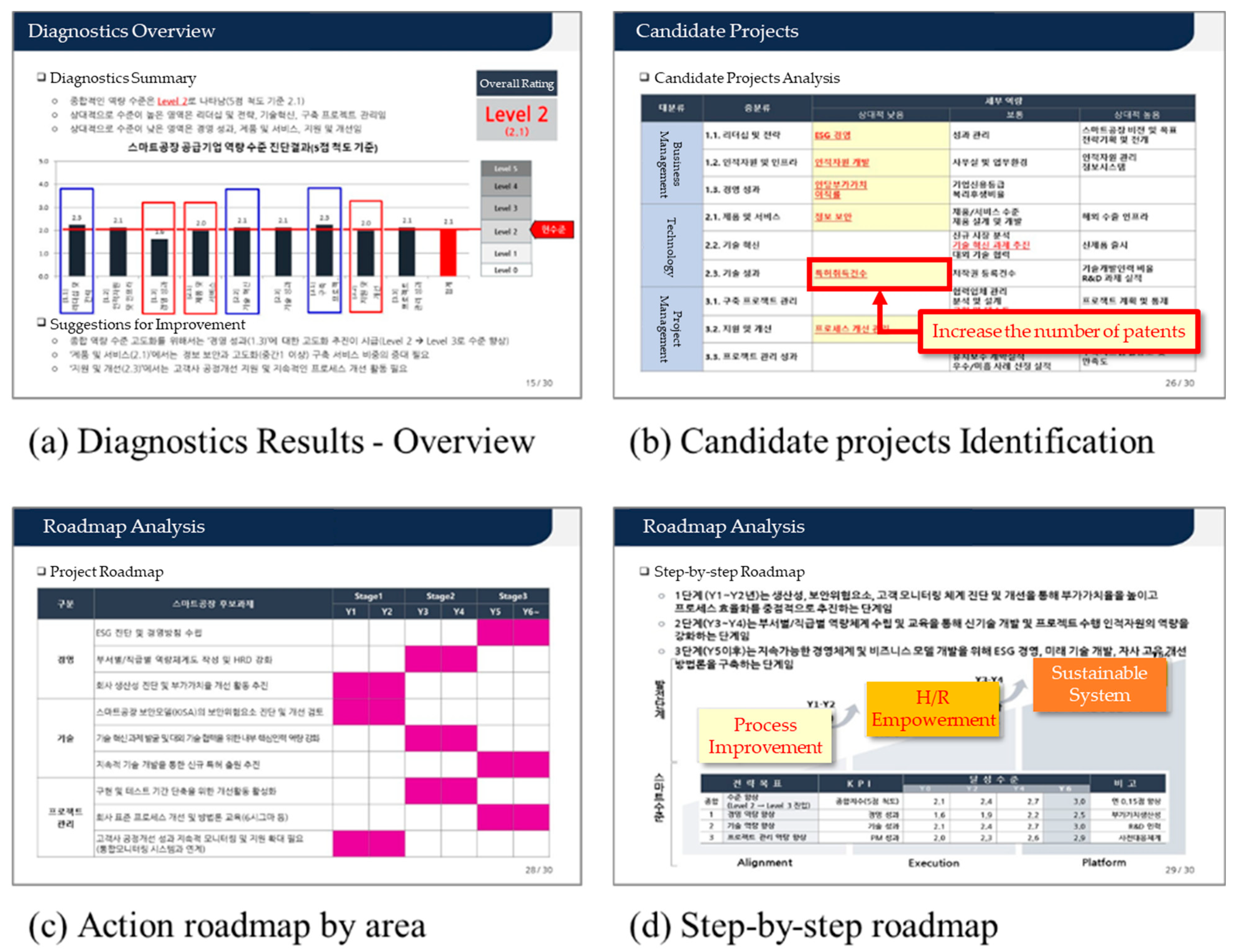

6.2. Pilot Diagnostic Results

7. Promotional Strategy and Institutionalization

7.1. Mid- to Long-Term Roadmap

7.2. Institutionalization

- Full or partial coverage of diagnostic costs, depending on the roadmap phase, until institutionalization

- Need for careful design to avoid socialization

- Spreading the system by providing preferential conditions for participation in government dissemination projects

- Information provision in the KOSMO system based on the strong areas of the supplier’s diagnostic results

- Use of basic information and diagnostic results for future research and statistical analysis after obtaining consent

8. Contributions and Limitations

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Vlasov, A.I.; Kurnosenko, A.E.; Juravleva, L.V.; Lysenko, O.A. Trend analysis in the development of factories of the future, taking into account digital transformation of active systems. In Proceedings of the IV International Scientific and Practical Conference, St. Petersburg, Russia, 18–19 March 2021; pp. 1–6.

- Acatech. Industrie 4.0 Maturity Index. 2020. Available online: https://en.acatech.de/publication/industrie-4-0-maturity-index-update-2020/ (accessed on 10 June 2023).

- Schuh, G.; Anderl, R.; Gausemeier, J.; ten Hompel, M.; Wahlster, W. Industrie 4.0 Maturity Index. In Managing the Digital Transformation of Companies (Acatech STUDY); Herbert Utz: Munich, Germany, 2017.

- EDB Singapore. The Smart Industry Readiness Index. 2023. Available online: https://www.edb.gov.sg/en/about-edb/media-releases-publications/advanced-manufacturing-release.html (accessed on 10 June 2023).

- TÜVSÜD. Smart Industry Readiness Index (SIRI). 2023. Available online: https://www.tuvsud.com/ko-kr/industries/manufacturing/machinery-and-robotics/smart-industry-readiness-index (accessed on 10 June 2023).

- VDMA. Guideline Industrie 4.0. 2023. Available online: https://vdma-verlag.com/home/artikel_72.html (accessed on 10 June 2023).

- NIST. Smart Manufacturing Systems Readiness Level (SMSRL) Tool. 2019. Available online: https://www.nist.gov/services-resources/software/smart-manufacturing-systems-readiness-level-smsrl-tool (accessed on 10 June 2023).

- Fraunhofer. Digital Maturity Assessment (DMA). 2023. Available online: https://interaktiv.ipa.fraunhofer.de/sme-active/how-digital-is-my-company/?lang=en (accessed on 10 June 2023).

- Korea Smart Manufacturing Office. Level Verification System of Smart Factory. 2023. Available online: https://www.smart-factory.kr/notice/read/855?page=1&bbsClCodeSe=00000002&bsnsClCodeSe=88888888 (accessed on 10 June 2023).

- Aagaard, A.; Presser, M.; Collins, T.; Beliatis, M.; Skou, A.K.; Jakobsen, E.M. The Role of Digital Maturity Assessment in Technology Interventions with Industrial Internet Playground. Electronics 2021, 10, 1134.

- BCG. Digital Acceleration Index. 2023. Available online: https://www.bcg.com/publications/2021/digital-acceleration-index (accessed on 10 June 2023).

- KPMG. Are You Ready for Digital Transformation? Measuring Your Digital Business Aptitude. 2015. Available online: https://assets.kpmg.com/content/dam/kpmg/pdf/2016/04/measuring-digital-business-aptitude.pdf (accessed on 10 June 2023).

- Ministry of SMEs and Startups. INNOBIZ Innovative SMEs. 2023. Available online: https://www.innobiz.net/ (accessed on 10 June 2023).

- Ministry of SMEs and Startups. MAINBIZ. 2023. Available online: https://mainbiz.go.kr/main.do (accessed on 10 June 2023).

- Korea Productivity Center. Productivity Management System (PMS) Certification Schemes. 2023. Available online: https://pms.kpc.or.kr/sub1_1_system_intro.asp (accessed on 10 June 2023).

- National IT Industry Promotion Agency. Software Process Quality Certification. 2023. Available online: http://www.sp-info.or.kr/portal/main/main.do (accessed on 10 June 2023).

- Benbasat, I.; Dexter, A.S.; Mantha, R.W. Impact of organizational maturity on information system skill needs. MIS Q. 1980, 4, 21–34.

- Saavedra, V.; Dávila, A.; Melendez, K.; Pessoa, M. Organizational maturity models architectures: A systematic literature review. In Trends and Applications in Software Engineering: Proceedings of CIMPS 2016; Springer: Cham, Switzerland, 2017; pp. 33–46.

- Proença, D.; Borbinha, J. Maturity models for information systems: A state of the art. Procedia Comput. Sci. 2016, 100, 1042–1049.

- CMMI. Capability Maturity Model Integration 2.0. 2018. Available online: https://cmmiinstitute.com/ (accessed on 10 June 2023).

- Tocto-Cano, E.; Paz Collado, S.; López-Gonzales, J.L.; Turpo-Chaparro, J.E. A Systematic Review of the Application of Maturity Models in Universities. Information 2020, 11, 466.

- Ferradaz, C.; Domingues, P.; Kucińska-Landwójtowicz, A.; Sampaio, P.; Arezes, P.M. Organizational maturity models: Trends for the future. In Occupational and Environmental Safety and Health II; Springer: Cham, Switzerland, 2020; pp. 667–675.

- Kucińska-Landwójtowicz, A.; Czabak-Górska, I.D.; Domingues, P.; Sampaio, P.; Ferradaz de Carvalho, C. Organizational maturity models: The leading research fields and opportunities for further studies. Int. J. Qual. Reliab. Manag. 2023.

- Becker, J.; Knackstedt, R.; Pöppelbuß, J. Developing maturity models for IT management: A procedure model and its application. Bus. Inf. Syst. Eng. 2009, 1, 213–222.

- Wendler, R. The maturity of maturity model research: A systematic mapping study. Inf. Softw. Technol. 2012, 54, 1317–1339.

- Facchini, F.; Oleśków-Szłapka, J.; Ranieri, L.; Urbinati, A. A maturity model for logistics 4.0: An empirical analysis and a roadmap for future research. Sustainability 2019, 12, 86.

- Correia, E.; Garrido-Azevedo, S.; Carvalho, H. Supply Chain Sustainability: A Model to Assess the Maturity Level. Systems 2023, 11, 98.

- Schumacher, A.; Erol, S.; Sihn, W. A maturity model for assessing Industry 4.0 readiness and maturity of manufacturing enterprises. Procedia CIRP 2016, 52, 161–166.

- Wagire, A.A.; Joshi, R.; Rathore, A.P.S.; Jain, R. Development of maturity model for assessing the implementation of Industry 4.0: Learning from theory and practice. Prod. Plan. Control 2021, 32, 603–622.

- Rockwell Automation. The Connected Enterprise Maturity Model. 2023. Available online: https://www.rockwellautomation.com/en-au/company/news/blogs/the-connected-enterprise-maturity-model--metrics-that-matter.html (accessed on 10 June 2023).

- IMPULS Foundation of VDMA. Industry 4.0 Readiness. 2023. Available online: https://www.industrie40-readiness.de/?lang=en (accessed on 10 June 2023).

- Brunner, M.; Jodlbauer, H.; Schagerl, M. Reifegradmodell Industrie 4.0. Industrie 4.0 Management. Digit. Transform. 2016, 32, 49–52. Available online: https://research.fh-ooe.at/data/files/publications/5376_Reifegradmodell_I40.pdf (accessed on 10 June 2023).

- PwC. Industry 4.0: Building the Digital Enterprise. 2016. Available online: https://www.pwc.com/gx/en/industries/industries-4.0/landing-page/industry-4.0-building-your-digital-enterprise-april-2016.pdf (accessed on 10 June 2023).

- Jung, K.; Kulvatunyou, B.; Choi, S.; Brundage, M.P. An Overview of a Smart Manufacturing System Readiness Assessment. In Proceedings of the IFIP International Conference on Advances in Production Management Systems, Cham, Switzerland, 3–7 September 2016; pp. 705–712.

- Boddy, C. The nominal group technique: An aid to brainstorming ideas in research. Qual. Mark. Res. Int. J. 2012, 15, 6–18.

- NIST. Baldrige Excellence Framework. 2023. Available online: https://www.nist.gov/baldrige/publications/baldrige-excellence-framework (accessed on 10 June 2023).

- ISO 56000:2020; Innovation Management—Fundamentals and Vocabulary. ISO: Geneva, Switzerland, 2020.

- Hyundai Motor Group. 5 Star System. 2023. Available online: http://winwin.hyundai.com/coportal/global/5star.html (accessed on 10 June 2023).

- ISO/IEC/IEEE 12207:2017; Systems and Software Engineering—Software Life Cycle Processes. ISO: Geneva, Switzerland, 2017.

- PMI. PMBOK® Guide. 2021. Available online: https://www.pmi.org/pmbok-guide-standards/foundational/pmbok (accessed on 10 June 2023).

| Organization | Model Name | Description |
|---|---|---|
| Acatech | Industrie 4.0 Maturity Index | Resources, Information Systems, Organization, and Culture are defined as the main core competencies for a company to become an agile organization capable of implementing a smart factory, and the level of each competency is divided into six stages: Computerization, Connectivity, Visibility, Transparency, Predictive Capacity, and Adaptability [2,3]. |
| EDB Singapore/TÜV SÜD | Smart Industry Readiness Index (SIRI) | This model consists of eight key areas (Operations, Supply Chains, Automation, Connectivity, etc.) that support three core components (Process, Technology, Organization). The final layer consists of 16 assessment dimensions, such as Vertical Integration, Shop Floor, Facility, and Leadership Competency, that should be referenced when assessing the maturity level of a manufacturing facility [4,5]. |
| VDMA | Guideline Industrie 4.0 | The Products section evaluates innovation in terms of product development, and the Production section assesses the overall production process and cost efficiency. In addition, the guidelines are categorized into five stages: Preparation, Analysis, Creativity, Evaluation, and Implementation, and detailed evaluation indicators are utilized for each stage [6]. |
| NIST | Smart Manufacturing System Readiness Level (SMSRL) | To diagnose the readiness to select and improve manufacturing technologies and implement smart factories, this model classifies maturity into four categories: Organization, Information Technology, Performance Management, and Information Connectivity [7]. |
| Fraunhofer | Digital Maturity Assessment (DMA) | This model measures the level of digital maturity of a company in the areas of market analysis (threat of new entrants, bargaining power of buyers, bargaining power of suppliers, competition with existing competitors, threat of substitutes), business (customer, culture/workforce, digital strategy, governance, digital market building, transition management, digital operations), and IT (interoperability, IT security, big data, connectivity) [8]. |
| Korea Smart Manufacturing Office (KOSMO) | Smart Factory Level Verification System | Based on KS X 9001, the level of a company’s smart factory is evaluated through 4 areas, 10 categories, and 44 detailed evaluation items, including promotion strategy, process, information system and automation, and performance. It diagnoses the level of each area from Level 0 (no ICT) to Level 5 (advanced) in five stages, providing a level confirmation certificate and presenting customized guidelines that can be used to build and advance smart factories [9]. |

| Organization | Model Name | Description |
|---|---|---|
| University of Aarhus | Digital Maturity Assessment Tool (DMAT) | This diagnostic tool provides a digital maturity assessment, a maturity overview, and a personalized mini-report. It consists of six dimensions (strategy, culture, organization, processes, technology, and customers and partners) comprising 30 sub-dimensions. It is an online questionnaire-based tool that measures digital maturity on a five-level scale from 1 to 5 [10]. |
| Boston Consulting Group (BCG) | Digital Acceleration Index | It allows you to assess your organization’s digital capabilities and compare them to peer industry averages, digital leaders, and other groups. The assessment dimensions are organized into eight areas: digitally enabled business strategy, customer delivery and go-to-market, operations, support functions, new digital growth, changing the way we work, leveraging data and technology capabilities, and integrated ecosystem [11]. |
| KPMG | Digital Business Aptitude | By assessing your digital business aptitude (DBA), you can answer the question of “how successful are you at digital transformation” and “identify gaps that need to be addressed”. It consists of five domains and four to six attributes for each domain that describe key capabilities related to an organization’s ability to successfully undertake digital business transformation [12]. |
| Ministry of SMEs and Startups | InnoBiz | It was developed and used as an index to evaluate the technological innovation system of SMEs based on the OECD’s “Oslo Manual”, a manual for evaluating technological innovation activities. It is divided into four categories: technology innovation ability, technology commercialization ability, technology innovation management ability, and technology innovation performance, and each category is composed of large items such as R&D activity indicators, technology innovation system, technology commercialization ability, marketing ability, management innovation ability, manager’s values, and technology management performance [13]. |
| Ministry of SMEs and Startups | MainBiz | The evaluation indicators are divided into three strategic directions: management innovation infrastructure, management innovation activities, and management innovation performance, and each strategic direction is subdivided into categories, evaluation items, and evaluation indicators. According to the evaluation score, it is divided into creative, growth, basic, and basic, and each focuses on preventing problems from occurring in advance, building a flexible system that can respond to changes, and establishing plans to improve capabilities for key processes [14]. |
| Korea Productivity Center | Productivity Management System (PMS) | After evaluating the current level of the management innovation system, improvement tasks are proposed to drive productivity innovation and achieve performance goals. Core values are reflected in seven categories: leadership; innovation; customers; measurement, analysis, and knowledge management; human resources; processes; and management performance. The PMS model thus consists of seven audit categories, 19 basic items, and 80 detailed items [15]. |
| National IT Industry Promotion Agency | Software Process Quality Certification | In order to improve quality and secure reliability while developing and managing software and information systems, we have developed a system to assess and grade the level of software process quality capabilities of software companies and development organizations. This model diagnoses the level of quality competence through a total of 70 questions based on five areas: project management, development, support, organization management, and process improvement [16]. |

| Area | Sub-Area | Evaluation Category | Score (Sub-Area) | Score (Area) |
|---|---|---|---|---|
| 1. Business management | 1.1. Leadership and strategy | 1.1.1. Leadership | 75 | 250 |
| | | 1.1.2. Strategies | | |
| | 1.2. Human resources and infrastructure | 1.2.1. Human resources | 75 | |
| | | 1.2.2. Business infrastructure | | |
| | 1.3. Business performance | 1.3.1. Financial performance | 100 | |
| | | 1.3.2. Non-financial performance | | |
| 2. Technology | 2.1. Products and services | 2.1.1. Product and service infrastructure | 150 | 500 |
| | | 2.1.2. Design and development | | |
| | 2.2. Technology innovation | 2.2.1. Creating new markets | 150 | |
| | | 2.2.2. Increase technology competitiveness | | |
| | 2.3. Technical performance | 2.3.1. R&D activity metrics | 200 | |
| | | 2.3.2. R&D performance metrics | | |
| 3. Project management | 3.1. Process management | 3.1.1. Plan and control | 75 | 250 |
| | | 3.1.2. Manage deliverables | | |
| | 3.2. Support and improvements | 3.2.1. Support and organization management | 75 | |
| | | 3.2.2. Process improvement | | |
| | 3.3. Project management performance | 3.3.1. Adopter performance | 100 | |
| | | 3.3.2. Follow-up performance | | |
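To make the point allocation concrete, the following is a minimal sketch of how item-level maturity ratings could be rolled up into sub-area and area scores. The weights come from the scoring table above; the aggregation rule (weight multiplied by the mean item level divided by 5) and the function names `sub_area_score` and `area_scores` are assumptions made for illustration, not the rule documented by the model.

```python
from statistics import mean

# Sub-area weights in points, as listed in the scoring table above.
WEIGHTS = {
    "1.1": 75, "1.2": 75, "1.3": 100,     # 1. Business management (250)
    "2.1": 150, "2.2": 150, "2.3": 200,   # 2. Technology (500)
    "3.1": 75, "3.2": 75, "3.3": 100,     # 3. Project management (250)
}
MAX_LEVEL = 5  # highest maturity level on the 0-5 scale


def sub_area_score(sub_area, item_levels):
    """Assumed rule: weight * (mean item level / MAX_LEVEL)."""
    if not item_levels:
        raise ValueError("at least one rated item is required")
    if any(not 0 <= level <= MAX_LEVEL for level in item_levels):
        raise ValueError("maturity levels must lie between 0 and 5")
    return WEIGHTS[sub_area] * mean(item_levels) / MAX_LEVEL


def area_scores(levels_by_sub_area):
    """Sum sub-area scores into their parent area ('1', '2', or '3')."""
    totals = {}
    for sub_area, levels in levels_by_sub_area.items():
        area = sub_area.split(".")[0]
        totals[area] = totals.get(area, 0.0) + sub_area_score(sub_area, levels)
    return totals


# Two items rated 2 and 3 in sub-area 2.3 (weight 200):
print(sub_area_score("2.3", [2, 3]))  # 100.0
```

Under this assumed rule, a supplier rated 5 on every item would reach the full 1000 points (250 + 500 + 250), which matches the totals in the table.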

| Maturity Level | Evaluation Criteria |
|---|---|
| 5. Optimizing | |
| 4. Predictable | |
| 3. Defined | |
| 2. Managed | |
| 1. Informal | |
| 0. None | |
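The six maturity levels can be represented as an ordered enumeration, which keeps level names and numeric values together when diagnostic results are recorded. The sketch below is illustrative only: the level names and numbers are taken from the table above, while the helper `level_from_average` and its round-down rule are assumptions, not part of the published model.

```python
from enum import IntEnum


class MaturityLevel(IntEnum):
    """Maturity levels 0-5 as named in the table above."""
    NONE = 0
    INFORMAL = 1
    MANAGED = 2
    DEFINED = 3
    PREDICTABLE = 4
    OPTIMIZING = 5


def level_from_average(average):
    """Map an averaged rating to a named level.

    Assumed rule: round down, so a level is granted only once it is
    fully reached (e.g. an average of 3.9 is still 'Defined').
    """
    if not 0 <= average <= 5:
        raise ValueError("average must lie between 0 and 5")
    return MaturityLevel(int(average))


print(level_from_average(3.9).name)  # DEFINED
```

Because `IntEnum` members compare as integers, levels can be ordered directly, for example `MaturityLevel.MANAGED < MaturityLevel.DEFINED`.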



| Area | Sub-Area | Evaluation Category | Diagnostic Item |
|---|---|---|---|
| 1. Business management | 1.1. Leadership and strategy | 1.1.1. Leadership | 1.1.1.1. Smart Factory Vision and Goals |

| Section | Level | Content |
|---|---|---|
| Item Description | | |
| Key Things to Look For | | |
| Evaluation Criteria | 5 | The organization’s smart factory vision system and operation activities are so excellent that they are becoming an example for other companies. |
| | 4 | The organization’s smart factory vision and goals are closely aligned with its strategy and business plan and are periodically evaluated and improved. |
| | 3 | The organization’s smart factory vision and goals are systematically established, and various systems and activities are implemented according to the strategies and plans associated with them. |
| | 2 | The organization’s smart factory vision and goals are systematically established, but they are underutilized for strategic and business planning. |
| | 1 | The organization has a smart factory vision and goals, but they are not specific or structured. |
| | 0 | No evidence of an organizational smart factory vision and goals. |
| Diagnostic Notes | | |

| Area | Sub-Area | Evaluation Category | Diagnostic Item |
|---|---|---|---|
| 3. Project management | 3.1. Process management | 3.1.1. Planning and control | 3.1.1.1. Project planning and control |

| Section | Level | Content |
|---|---|---|
| Item Description | | |
| Key Things to Look For | | |
| Evaluation Criteria | 5 | Environmental changes and exceptional situations are predicted in advance for an optimized response. |
| | 4 | Projects are planned and controlled, activities are quantitatively measured and managed, and outcomes are predicted. |
| | 3 | Project planning and control are based on enterprise-wide policies or standards to guide evaluation. |
| | 2 | Project planning and control result from activities and capabilities guided by a plan. |
| | 1 | Project planning and control result from the activities and capabilities of individuals without a plan. |
| | 0 | No activity or results for project planning and control. |
| Diagnostic Notes | | |

| Phase 1. Foundation | Phase 2. Diffusion | Phase 3. Stabilization |
|---|---|---|
| | | |

| | Supplier Eligibility Prerequisites | Supplier Qualification Preferences | Check Optional Capability Levels | Capability Build-Up Consulting Prerequisites | Etc. |
|---|---|---|---|---|---|
| Supplier | 29.0 | 45.4 | 21.3 | 4.3 | 0.0 |
| Adopter | 36.2 | 15.3 | 34.6 | 13.7 | 0.2 |

| | Offer a Small Incentive to All Participating Companies | Incentivize a Select Group of Highly Capable Companies | Penalize Some Companies with Low Capability Levels | Provide Support for Some Less Capable Organizations | Etc. |
|---|---|---|---|---|---|
| Supplier | 61.2 | 24.4 | 4.0 | 10.1 | 0.3 |
| Adopter | 33.2 | 29.1 | 24.9 | 12.6 | 0.2 |

| | Disclosures in Governmental Programs | Public Only for High-Level Companies | Disclose Only for Low-Level Companies | All Unpublished | Etc. |
|---|---|---|---|---|---|
| Supplier | 59.2 | 21.3 | 2.6 | 16.7 | 0.3 |
| Adopter | 77.6 | 13.3 | 6.2 | 3.0 | 0.2 |

| | New Regulations in Action | The Burden of Additional Work | Additional Cost and Time | The Burden of External Evaluation | Doubts about Effectiveness | Etc. |
|---|---|---|---|---|---|---|
| Supplier | 25.6 | 30.2 | 33.0 | 5.2 | 6.0 | 0.0 |

| | Formal Institutional Operations | Rising Supply Costs | Supply-Demand Imbalance | Etc. |
|---|---|---|---|---|
| Adopter | 53.1 | 36.6 | 10.1 | 0.2 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cha, C.-W.; Lee, J.; Jun, S.; Kang, K.; Chang, T.-W. A Maturity Model for Diagnosing the Capabilities of Smart Factory Solution Suppliers and Its Pilot Application. Systems 2023, 11, 569. https://doi.org/10.3390/systems11120569
