1. Introduction

Electronic sports (eSports) are video game-based competitive activities that run in a virtual environment of the ‘real world’ [1]. They have gained widespread social acceptance in recent years. From a traditionally prejudiced and skeptical standpoint, the hedonic effect of eSports is generally seen as harmful to public health, owing to addiction, violence, toxicity, and sexual harassment [2,3,4,5]. Ingrained generational disparities in the public’s perception and acceptance of eSports have further deepened concerns about engagement [6,7]. In recent years, however, attitudes have become more constructive, driven mainly by favorable conditions such as the promotion of cultural inclusiveness, commercial legitimization, and health-technical interventions [8,9,10]. Additionally, a positive mindset, spirit, and sense of cohesion within the community may foster social connectedness [11,12]. For example, the World Health Organization encouraged the global gaming industry to launch the ‘#PlayApartTogether’ campaign, which promoted gaming as a risk-free alternative space for competition to help curb the spread of COVID-19 [13]. Further, a growing volume of research supports the positive consequences of eSports, including psychological well-being, social connectedness, stress reduction, cognitive skills, and skill transferability [14,15,16,17,18].

Recently, the International Olympic Committee (IOC) placed eSports on the official agenda of Olympic events [19]. Seven recognized events (e.g., League of Legends, Dota, Three Kingdoms 2, and Street Fighter 5) were also approved as official medal sports at the Asian Games Hangzhou 2022 [20]. There are an estimated 223 million enthusiasts worldwide, and the largest prize pool, for the game Dota 2, exceeded USD 40 million [21,22]. The global eSports market is expected to generate USD 1.61 billion in 2024, representing a compound annual growth rate (CAGR) of 11.6% between 2021 and 2024 [23]. Although eSports contribute to digital culture and benefit the economy, systematic and sustainable development remains a challenge. One of the issues is a habitually negative gaming atmosphere, which causes user retention problems and gamer churn and is most evident in Multiplayer Online Battle Arena (MOBA) games [24,25,26].

MOBA games are recognized as a prominent social interaction-based eSports genre, and the gaming atmosphere is closely linked to players’ social experiences. Within a game, players collaboratively engage in competitive spaces structured by standard rules, immersive scenarios, and multifaceted performances, controlling individual digital avatars to pursue victory [27,28,29,30,31]. Socially, MOBA games are troubled by prolonged, inefficient team-based competition and by misunderstandings about teammates’ skill levels, which lead to antisocial and toxic behavior. The form of communication is limited yet overly expressive, generating a negative atmosphere that is strongly associated with lower levels of player well-being [27,32]. For example, 80% of US players believe co-players make prejudiced comments, while 74% report having experienced harassment [25]. Negative behavior among players is considered an integral and acceptable aspect of the competitive game experience [24]. Toxicity is fueled by the inherent competitiveness (i.e., killing each other) of MOBA games but is only weakly linked to success [33]. In the long run, negative player behavior substantially threatens the game’s balanced atmosphere [32]. Players who encounter negative behavior are up to 320% more likely to stop playing [34]. Therefore, it is crucial to provide channels through which players can release negative emotions and to address the negative gaming atmosphere of MOBAs during social interactions [35].

A positive gaming experience has profound effects on user retention, willingness to engage with content, and consumer loyalty, while avoiding unsustainable user behaviors [32,36,37]. One solution may be artificial intelligence (AI) teammates that are designed to collaborate with human teammates at different skill levels so as to enhance their performance [38,39,40,41]. However, the potential and effectiveness of helpful AI teammates to intervene in a negative atmosphere and to provide emotional support have not been investigated. If players can be successful despite being negative, they need a different incentive to stop insulting other players and to behave more pleasantly [33]. To counter a negative atmosphere, an AI that improves gamers’ competence should be combined with a medium for emotional disclosure when things go wrong.

Self-disclosure after a bad experience is better served by social robots than by other media such as writing or a WhatsApp (version 2.11.109) or WeChat (version 8.0.16) group [42,43]. Social robots can be perceived as the physical representation of AI teammates. They can serve as an intuitive supplement that addresses the limitations of AI teammates in fostering healing and enhancing positive emotions. Although the use of social robots has advanced quickly in the fields of health care [44] and education [45], their potential to improve a negative atmosphere in games (i.e., MOBAs) has been overlooked.

Our research questions, then, are as follows: Do AI teammates and social robots improve the gaming atmosphere in eSports? If so, how do they bring about this improvement?

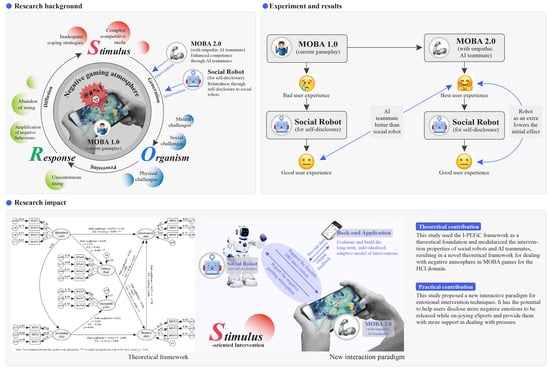

In this research, we first interpreted the genesis of the negative gaming atmosphere from the perspective of the Stimulus–Organism–Response (S-O-R) theory and established why the presence of AI teammates and social robots is needed. Then, we employed Interactively Perceiving and Experiencing Fictional Characters (I-PEFiC) as the foundational model of human–robot interaction (HRI) [46,47]. By combining these theories with gaming scenarios, we distilled and developed novel, verifiable components that capture the interventional characteristics of AI teammates and social robots. Our aim was to offer a theoretical explanation of current game experiences and an intervention scheme that helps the eSports industry avoid a negative gaming atmosphere and provide emotional support in competitive gaming environments.

3. Research Hypotheses

Following the hypothesized interactive paradigm (Figure 3), we proposed a number of research hypotheses with which to investigate and improve the negative gaming atmosphere (Figure 4). In general, we expect that the Anonymity (AN) and Invisibility (IN) of AI teammates will lead to greater performance and competence of players [113]. Further, if social robots are used to provide the Affordance (AF) of verbal self-disclosure, players’ emotional Valence (before–after intervention: Vb-Va) will be further improved [113]. The psychological Distance (DT) among players will decrease and Involvement (IO) will increase. More specifically, our hypotheses are as follows (see also Figure 4):

H1: The Affordance (AF) of social robots is significantly and positively correlated with players’ Involvement (IO).

H2: The AF of social robots is negatively correlated with players’ psychological Distance (DT).

H3: The AF of social robots is positively correlated with players’ emotional Valence (Val).

H4: Players’ Val is negatively correlated with DT.

H5: Players’ Val is positively correlated with IO.

H6: Players’ DT is negatively correlated with IO.

H7: Invisibility (IN) of helpful teammates is positively correlated with Anonymity (AN).

H8: IN of AI teammates is negatively correlated with IO.

H9: IN of AI teammates is positively correlated with DT.

H10: AN of AI teammates is negatively correlated with IO.

H11: AN of AI teammates is negatively correlated with DT.

Note: with respect to H3–H5, we used a Valence difference score (Val = MVb − MVa).

5. Data Analysis and Results

5.1. The Analysis of Samples

5.1.1. Sample Size

Of the 122 invited participants, we collected 111 valid questionnaires, a validity rate of 91% (Supplementary Material ‘Raw Dataset’). During the experiment, subjects communicated and cooperated with us remotely, and the average completion times of parts 1 and 2 of the survey were 3 min 50 s and 2 min 49 s, respectively.

We used the software G*Power 3.1 to analyze the sample size [123]. For the GLM (General Linear Model) repeated measures method, we presupposed a medium effect size f = 0.25, statistical test power 1 − β = 0.8, and significance level α = 0.05, and the G*Power results indicated that at least 74 subjects were needed. With N = 111 and n = 95, the test power should remain above 80%.

After the initial Cronbach analyses, we tested the discriminant validity of the items by means of Principal Component Analysis (PCA). After removing certain items that were scattered over components, the remainder loaded neatly on the expected components, showing that the measurement scales were divergent. After PCA, all measurement scales achieved good reliability (Cronbach’s α ≥ 0.74). Valences before and after (Vb and Va, four items each) and the difference scores (Val) achieved Cronbach’s α > 0.87. Affordance (four items) achieved Cronbach’s α = 0.88; Involvement (four items), Cronbach’s α = 0.86; Distance (three items, after deletion of one item based on poor discriminant validity), Cronbach’s α = 0.85; Invisibility (four items), Cronbach’s α = 0.75; and Anonymity (four contra-indicative items), Cronbach’s α = 0.79. The results of the reliability analyses are compiled in Table 1.
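For readers who wish to reproduce this kind of reliability check outside SPSS, the following is a minimal sketch of Cronbach's α using only the Python standard library. The function name `cronbach_alpha` and the demo scores are hypothetical illustrations and are not taken from the study's dataset.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of per-item score lists; every inner list holds one
    score per respondent, in the same respondent order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items need the same number of respondents")
    # Sum score of each respondent across the k items
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_var_sum = sum(variance(col) for col in items)  # sample variances
    total_var = variance(totals)
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical four-item scale answered by five respondents
demo_items = [
    [4, 5, 3, 2, 4],
    [4, 4, 3, 2, 5],
    [5, 5, 2, 3, 4],
    [4, 5, 3, 2, 4],
]
alpha = cronbach_alpha(demo_items)
print(round(alpha, 3))
```

Values near 1 indicate that the items vary together; the scales reported here (α between 0.75 and 0.88) would be computed in the same way from the raw item scores.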

We calculated scale means and performed an outlier analysis using boxplots, finding that participants 12, 17, 24, 47, 50, and 71 were outliers in Vb, and participants 5, 17, 59, and 91 were outliers in Va. Participants 1, 5, 10, 32, 33, and 108 were outliers in AF. Participants 5, 34, and 59 were outliers in DT, and there were no outliers in IO, IN, and AN. In the following steps, we performed effects analyses with (N = 111) and without outliers (n = 95).
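The boxplot screening can be approximated with Tukey's 1.5 × IQR fences. The sketch below is an illustration under that assumption; the quartile definition used by a given statistics package (e.g., Tukey's hinges) may differ slightly, and the function name and demo scores are hypothetical.

```python
from statistics import quantiles


def boxplot_outliers(scores, k=1.5):
    """Return 1-based participant positions whose score lies outside
    the fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, x in enumerate(scores, start=1) if x < low or x > high]


# Hypothetical scale means for ten participants
demo_scores = [3.5, 3.75, 4.0, 4.0, 4.25, 4.5, 3.75, 4.0, 1.0, 4.25]
flagged = boxplot_outliers(demo_scores)
print(flagged)
```

Running the same rule per variable and taking the union of flagged participants would yield the outlier subset that is analyzed separately from the no-outlier sample.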

5.1.2. Demographics

We examined whether age was associated with Valence-before-treatment (Vb), Valence-after-treatment (Va), Affordance (AF), Involvement (IO), Distance (DT), Invisibility (IN), and Anonymity (AN). We calculated Pearson’s bivariate correlations (two-tailed) and did not find any significant relationship with age (p > 0.05). It is worth noting that certain correlations did occur between variables. In line with I-PEFiC theory, Involvement was negatively correlated with Distance (r = −0.369, p < 0.001), indicating that distancing tendencies negatively impacted people’s engagement with the agency (Involvement-Distance trade-off).
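As an illustration of the correlation analysis, the sketch below computes Pearson's r and converts a correlation into its t statistic with n − 2 degrees of freedom. The helper names are hypothetical; the p-value lookup (which requires the t distribution) is deliberately left out.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den


def r_to_t(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r * r))


# Reported Involvement-Distance correlation with N = 111
t_reported = r_to_t(-0.369, 111)
print(round(t_reported, 2))
```

With 109 degrees of freedom, a t statistic of this magnitude is consistent with the very small two-tailed p-value reported for the Involvement-Distance correlation.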

We ran a MANOVA (Pillai’s Trace) to examine whether gender and educational level had an effect on the dependents and found a small multivariate effect (V = 0.346, F(21,297) = 1.847, p = 0.014) but no univariate effect of gender per se (p > 0.05). Thus, gender was removed from subsequent analyses.

Education level, however, had a significant univariate impact on mean Affordance (MAF) (V = 5.796, F(3, 297) = 6.634, p < 0.001, ηp² = 0.162). Education level also influenced mean Anonymity (MAN) to some extent (V = 2.169, F(3, 297) = 2.804, p = 0.044, ηp² = 0.075). In MAF, those with high school, secondary school, or technical school degrees experienced higher levels of Affordance than those with university bachelor’s degrees (M = 1.09, SD = 0.35, p = 0.012) and those with master’s degrees or above (M = 1.51, SD = 0.38, p = 0.001). In MAN, those with master’s degrees or above experienced more Anonymity than those with junior college degrees (M = 1.02, SD = 0.36, p = 0.028).

We then tested education level with two GLM repeated measures procedures, once for MOBA 1.0 (human teammates) and once for MOBA 2.0 (AI teammates), with the seven dependents as within-subjects measures. No significant interaction effects occurred, and no significant univariate effects were established when outliers were removed: F(3, 91) = 1.378, p = 0.255, ηp² = 0.043. We concluded that education level did not need to be included as a control variable to check for confounding effects.

We constructed three data sets, one containing all 111 participants, one containing 95 participants (no outliers), and one containing 16 participants (outliers only). We evaluated our hypotheses with these three sets.

5.1.3. Gaming Performance

A total of 56 players provided us with their detailed game data (Supplementary Materials ‘Data of Competitions’). For players who chose King of Glory, the average game duration was above the minimum set by the system (6 min for AI teammate mode and 15 min for human teammate mode). Players who chose League of Legends for their experiment took longer, 35–45 min in total. The average gold earned was 9893, with a median of 10,288, showing that they were fully engaged in the game.

Kills/Deaths/Assists (K/D/A) is a statistical measure used in MOBA games to track a player’s performance in terms of the number of kills, deaths, and assists during the game. In terms of competitive performance, their average K/D/A score in human team mode was 6.1/4.1/10.8, while in AI teammate mode it was 14.3/2.7/4.0, indicating their solid experience. Most Valuable Player (MVP) is an award given to the player who has made the greatest impact or contribution to a game or match. Their win rate in human teammate mode was 80% with an MVP rate of 28%, and in AI teammate mode it was 96% with an MVP rate of 88%. These data vividly show that these players have a certain level of skill and experience.

Further questioning revealed that many of them had previously achieved the “King” rank in King of Glory ranked games, while players with experience in League of Legends had achieved the “Gold” rank. This information indicates that many of them had more than a month of MOBA experience.

5.2. Manipulation Check

To determine whether KING-bot provoked any emotions at all and whether teammates (real people or AI) elicited any mood changes, we ran a GLM repeated measures procedure for N = 111, n = 95, and n = 16.

Table 2 shows that, except for the outlier group (n = 16), different types of teammates (N = 111 and n = 95) showed significant multivariate effects for Valence-before-treatment (Vb), Valence-after-treatment (Va), Affordance (AF), Involvement (IO), Distance (DT), Invisibility (IN), and Anonymity (AN).

The results of the univariate effects analysis are shown in Table 3. With or without outliers (N = 111 and n = 95), both types of teammates exerted significant effects. For the outliers (n = 16), the differences remained nonsignificant. Our manipulation was successful: compared to human teammates only, AI teammates brought positive changes to players’ emotions, and the different measures were sensitive to playing with or without AI teammates.

5.3. Effects of Social Robots and AI Teammates

With N = 111, we ran a paired-samples t-test for mean Valence before (MVb = 3.82, SD = 0.83) versus after talking to a robot (MVa = 4.27, SD = 0.97), irrespective of playing with an AI teammate (MOBA 1.0 and 2.0 combined). The difference was significant, with a considerable effect size (t(110) = −4.37, p < 0.001 (two-tailed), Cohen’s d = −0.42, CI [−0.608, −0.220]), underscoring that robot intervention significantly improved the mood of aggravated players. We repeated the analysis without the outliers (n = 95) and found comparable results (MVb = 3.96, SD = 0.71 vs. MVa = 4.35, SD = 0.87; t(94) = −3.91, p < 0.001, d = −0.40, CI [−0.610, −0.191]), so the effect is generally valid.
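The relation between the paired-samples t statistic and Cohen's d can be made explicit with a short sketch. It assumes that d is standardized by the standard deviation of the difference scores (often written d_z), in which case d = t / √n; the function name and demo ratings are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_and_d(before, after):
    """Paired-samples t statistic, Cohen's d of the differences, and df."""
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    m, s = mean(diffs), stdev(diffs)
    t = m / (s / sqrt(n))  # mean difference over its standard error
    d = m / s              # mean difference in SD-of-difference units
    return t, d, n - 1


# Hypothetical before/after mood ratings of four players
t_demo, d_demo, df_demo = paired_t_and_d([3, 4, 2, 5], [4, 5, 4, 5])

# Consistency check on the reported statistic: d = t / sqrt(n)
d_from_reported_t = -4.37 / sqrt(111)
print(round(d_from_reported_t, 3))
```

Under this assumption, the reported t(110) = −4.37 corresponds to d ≈ −0.41, close to the reported value of −0.42 and at the center of the reported confidence interval.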

To assess the interaction between game versions and robot intervention, we conducted a GLM repeated measures analysis for mean Valence before and after talking to a robot without being preceded by an AI teammate (MOBA 1.0) versus while being preceded by an AI teammate (MOBA 2.0). For N = 111, the multivariate effects were significant with quite a large effect size (Pillai’s Trace): V = 0.49, F(3, 108) = 34.45, p < 0.001, ηp² = 0.49. In addition, the within-subjects effect was significant with a decent effect size: F(1, 110) = 78.91, p < 0.001, ηp² = 0.42. An excerpt of Table 4 and Table 5 showing players’ mean Valence before and after talking to a robot, without (MOBA 1.0) and with an AI teammate (MOBA 2.0) (N = 111), is presented below (Table 6):

We scrutinized the significant interaction with paired-samples t-tests for N = 111 (Table 5). In MOBA 1.0, without an AI teammate, mean Valence before talking to a robot (MVb = 3.01) was significantly lower than after (MVa = 4.23): t(110) = −3.61, p < 0.001, Cohen’s d = −0.72, CI [−0.930, −0.512]; self-disclosure to a robot improved mood. The level of mean Valence after talking to a robot did not differ significantly between talking to a robot alone (MOBA 1.0, MVa = 4.23) and doing so with an AI helper included (MOBA 2.0, MVa = 4.31): t(110) = −0.77, p = 0.445.

However, the AI helper raised mood the most, more so than a robot on its own.

Table 5 shows that, in MOBA 2.0, which included an AI teammate, Valence before talking to the robot (MVb = 4.61) was significantly higher than after (MVa = 4.31); the AI helper had already improved the gameplay. After the happiness of being helped by an AI teammate, self-disclosure to the robot seemed to lessen that effect: t(110) = 2.55, p = 0.012, d = 0.24, CI [−0.052, 0.430]. Indeed, being helped by the AI teammate evoked the highest mean Valence scores (MOBA 2.0, MVb = 4.61), higher than talking to the robot alone (MOBA 1.0, MVa = 4.23): t(110) = −3.28, p = 0.001, d = −0.31, CI [−0.501, −0.120]. Without the outliers (n = 95), the pattern of these effects did not change, although the differences were more pronounced and the effect sizes were stronger.

5.4. Effects of AI Teammate

To further investigate the user experience of working with an AI teammate compared to with human teammates, we ran a GLM multivariate analysis on all dependents with MOBA version 1.0 vs. 2.0 as the fixed factor. For N = 111, the multivariate effects were significant with a considerable effect size (Pillai’s Trace): V = 0.435, F(6, 105) = 13.468, p < 0.001, ηp² = 0.44. Additionally, the within-subjects effect of teammate mode was significant with an acceptable effect size: F(1, 110) = 49.621, p < 0.001, ηp² = 0.31.

The following is based on Table 5 and Table 7. Three paired-samples t-tests showed significant results for N = 111, which did not change when removing the outliers. Regarding the teammates, we compared Valence towards the human teammate (HmVb) with Valence towards the AI teammate (AiVb) and found that positive feelings were significantly higher after playing with the AI helper: HmVb (M = 3.01, SD = 1.30) vs. AiVb (M = 4.62, SD = 1.04), t(110) = −10.18, p < 0.001. The AI teammate (AiAN) showed significantly higher levels of Anonymity than its human counterpart (HmAN): HmAN (M = 3.81, SD = 1.12) vs. AiAN (M = 4.41, SD = 1.27), t(110) = −4.02, p < 0.001. Due to being preceded by an AI helper, emotional distance towards the robot counselor was significantly higher in the AI mode (AiDT) than after playing with human teammates (HmDT): HmDT (M = 3.00, SD = 1.18) vs. AiDT (M = 3.26, SD = 1.46), t(110) = −2.00, p = 0.048 (with n = 95, p = 0.013).

Despite more Anonymity, AI teammates, on average, improved the gamers’ moods the most, more so than human teammates and even more so than talking to a robot afterward. With an AI helper preceding, the robot actually evoked more distancing tendencies.

5.5. The Model of MOBA Game Player’s Engagement Behavior

The data from the human teammate model (MOBA 1.0) and the AI teammate model (MOBA 2.0) were pooled and averaged to measure the interrelationships between the variables. We used G*Power software to estimate the sample size needed to build the linear multiple regression model.

We presupposed a medium effect size f² = 0.15, statistical test power 1 − β = 0.8, and significance level α = 0.05, and the G*Power results indicated that at least 98 subjects were needed. Under this a priori estimate, the test power would remain above 80%, so the valid sample collected in this study (N = 111) was sufficient to test our hypothesized model. In the following steps, we further verify the hypothesized model and the interrelationships among the variables with the IBM tool SPSS AMOS [126].

To investigate the relationships among variables, AMOS 26.0 was used to conduct Structural Equation Modeling (SEM). To ensure the validity of the model, we first removed the items with standardized factor loadings below 0.6 and squared multiple correlations (SMC) below 0.36. Removed items were MIN4i (std = 0.539), MAN6c (std = 0.586), and MIO2i (std = 0.598).
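The item-screening rule can be sketched as a simple filter. The sketch assumes that each item loads on a single factor, so that its SMC equals the squared standardized loading (0.6² = 0.36); the entry `EXAMPLE_KEEP` is a hypothetical retained item added for contrast, whereas the three other loadings are the reported ones.

```python
def flag_items(loadings, min_loading=0.6, min_smc=0.36):
    """Return the names of items that fall below the loading or SMC cut-off.

    loadings: dict mapping item name -> standardized factor loading.
    SMC is approximated as the squared loading (single-factor assumption).
    """
    return sorted(
        name
        for name, lam in loadings.items()
        if lam < min_loading or lam * lam < min_smc
    )


loadings = {
    "MIN4i": 0.539,         # reported
    "MAN6c": 0.586,         # reported
    "MIO2i": 0.598,         # reported
    "EXAMPLE_KEEP": 0.720,  # hypothetical item that passes both cut-offs
}
removed = flag_items(loadings)
print(removed)
```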

5.5.1. Reliability and Convergence

First, the reliability and validity of the hypothesized model were evaluated. As shown in Table 2, the values for Cronbach’s α were between 0.748 and 0.912, demonstrating a high level of internal consistency. Convergent validity was measured by item factor loadings (k), composite reliability (CR), and average variance extracted (AVE). The factor loadings (k) of all items were significant and exceeded 0.60. CR for all variables ranged from 0.755 to 0.885. All the AVE values also exceeded 0.50. In general, all coefficients exceeded the specified thresholds, indicating that the internal consistency of the variables in the model was high. Thus, the items in the questionnaire were reliable for the hypothesized model. For the relevant test results, please consult Table 8.
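Both convergent-validity indices can be derived from standardized loadings. The following minimal sketch uses the common formulas AVE = mean(λ²) and CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)); the three demo loadings are hypothetical and do not come from Table 8.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR from standardized loadings, with error variance 1 - lambda^2 per item."""
    total = sum(loadings)
    error = sum(1 - lam * lam for lam in loadings)
    return total * total / (total * total + error)


# Hypothetical standardized loadings of a three-item construct
demo_loadings = [0.70, 0.80, 0.75]
ave_demo = average_variance_extracted(demo_loadings)
cr_demo = composite_reliability(demo_loadings)
print(round(ave_demo, 3), round(cr_demo, 3))
```

In this hypothetical case, the construct would pass the usual cut-offs (AVE > 0.50, CR > 0.70), mirroring the thresholds applied in the text.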

5.5.2. Identifying Factors for Validity

The discriminant validity test requires that a measure does not reflect other variables. The square root of the average variance extracted (AVE) of a construct has to exceed the correlations between that construct and the other constructs. Table 9 shows that the square root of the AVE was always greater than the corresponding correlations, indicating that all variables have discriminant validity.
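This criterion (commonly attributed to Fornell and Larcker) can be checked programmatically. In the sketch below, the AVE values and correlations are hypothetical placeholders rather than the entries of Table 9, and the function name is an assumption for illustration.

```python
from math import sqrt


def discriminant_failures(ave_by_construct, correlations):
    """Return construct pairs whose |correlation| is not below the
    square root of the AVE of both constructs.

    correlations: dict mapping (construct_a, construct_b) -> r.
    """
    failures = []
    for (a, b), r in correlations.items():
        limit = min(sqrt(ave_by_construct[a]), sqrt(ave_by_construct[b]))
        if abs(r) >= limit:
            failures.append((a, b))
    return failures


# Hypothetical AVE values and inter-construct correlations
demo_ave = {"IO": 0.60, "DT": 0.65, "AF": 0.58}
demo_corr = {("IO", "DT"): -0.37, ("IO", "AF"): 0.55, ("DT", "AF"): -0.30}
failed_pairs = discriminant_failures(demo_ave, demo_corr)
print(failed_pairs)
```

An empty result means that every construct shares more variance with its own items than with any other construct.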

5.5.3. Model’s Degree of Fit

Structural validity measures the degree of fit, and the coefficients must meet the relevant requirements. We assessed the following indices, and the results are shown in Table 10. The RMSEA (Root Mean Square Error of Approximation) value was 0.082, the CFI (Comparative Fit Index) value was 0.896, and the IFI (Incremental Fit Index) value was 0.898. These values were at standard levels, which suggests that the model had a reasonable to good fit and that our hypotheses sufficiently fit the data collected.
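For reference, the three indices are commonly defined as follows, where the subscript M denotes the hypothesized model, B the baseline (independence) model, and N the sample size. These are the standard textbook definitions and are given as an aid to interpretation; the exact computation in a given software package may differ slightly (e.g., N instead of N − 1 in the RMSEA denominator), and the underlying χ² values are not reported in this section.

```latex
\begin{align}
\mathrm{RMSEA} &= \sqrt{\frac{\max\left(\chi^2_M - df_M,\ 0\right)}{df_M\,(N - 1)}} \\
\mathrm{CFI} &= 1 - \frac{\max\left(\chi^2_M - df_M,\ 0\right)}{\max\left(\chi^2_M - df_M,\ \chi^2_B - df_B,\ 0\right)} \\
\mathrm{IFI} &= \frac{\chi^2_B - \chi^2_M}{\chi^2_B - df_M}
\end{align}
```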

5.6. Hypotheses Test

The research hypotheses were validated by the experiment and data analysis (see Table 11). Each of the nine hypotheses was tested using SEM. The associated R² values and paths demonstrate the degree of support for the theoretical model found in the data; the results are shown in Figure 6. All paths are significant, so the nine hypotheses proposed in this study were confirmed.

Figure 6 shows the R² and path coefficients of the model, clearly reflecting the existence of specific influence relationships between the variables. According to Cohen’s evaluation standard, R² ≥ 0.01 has little explanatory power, R² ≥ 0.09 has medium explanatory power, and R² ≥ 0.25 has strong explanatory power [127]. In this research model, R² ranged from 0.169 to 0.573, indicating reasonably high explanatory power. In more detail, R² of Involvement (IO) was 0.573, which means that Affordance, Anonymity, and Distance explained 57.3% of the variance in Involvement. In addition, Valence and Invisibility accounted for 29.9% of the variance in feelings of Distance (DT). Distance and Involvement are the main dimensions of user engagement, so our model showed predictive factors influencing MOBA–player engagement.
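The thresholds above can be expressed as a small classification helper. This is only an illustrative sketch; the function name and the label "negligible" for values below 0.01 are assumptions that do not appear in the source.

```python
def explanatory_power(r2):
    """Classify R^2 using the cut-offs cited in the text (0.01, 0.09, 0.25)."""
    if r2 >= 0.25:
        return "strong"
    if r2 >= 0.09:
        return "medium"
    if r2 >= 0.01:
        return "little"
    return "negligible"  # label assumed for values below the lowest cut-off


# R^2 values mentioned in the text: lower bound, Distance, Involvement
labels = {r2: explanatory_power(r2) for r2 in (0.169, 0.299, 0.573)}
print(labels)
```

By these cut-offs, the lowest R² in the model (0.169) falls in the medium band, while Distance (0.299) and Involvement (0.573) fall in the strong band.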

6. Discussion

With respect to our research question, AI teammates and social robots both have the potential to improve the gaming atmosphere in eSports. The results were as expected: the Invisibility of the AI teammates helped players to participate more actively in the game. The anonymous environment raised the comfort level and enabled some users to express their thoughts with a greater willingness to engage in deeper dyadic participation. The AI teammates may have facilitated problem-oriented coping strategies, after which talking to a robot for negative-mood regulation seemed to have been superfluous [113].

Furthermore, as in other studies, players were satisfied with the robots’ affordance of intervening in their emotions, which improved their engagement and willingness to continue the interaction [46]. Social robots seem to positively affect the negative gaming atmosphere and may further emotion-based coping strategies [113]. In line with the research of Duan et al. and Luo et al. [42,43], the positive interventional effect of social robots on users’ negative emotions made users more willing to become friends with social robots and may stir enthusiasm to engage in the next game [43].

The low correlation we found between Involvement and Distance (H6: DT→IO) shows that the two concepts are not two ends of the same dimension, as suggested by the research of Konijn and Hoorn [111] and Konijn and Bushman [128]. Within the confines of our participants being from mainland China and Hong Kong, the four key findings of our study are as follows.

6.1. The Double-Edged Enhanced Competence Supported by AI Teammates

The Invisibility granted by AI teammates can reduce the psychological distance between players (H9: IN→DT). The results were extremely positive, as the inclusion of AI teammates significantly improved the overall level of gameplay and significantly increased the probability of winning. In addition, the effect of Invisibility on increasing the Anonymity of AI teammates (H7: IN→AN) was also significant.

AI teammates’ Invisibility was better able to meet users’ psychological needs, such as the enjoyment of aggression through smoother and faster kills and a greater sense of self-presentation and sustained pleasure [1]. This allowed players to maintain a superior gaming experience without the need to elicit negative stimuli.

However, we also found that the significant effect of the Anonymity of AI teammates did not fully satisfy our intentions, which is not consistent with our expectations (H10: AN→IO, H11: AN→DT).

In conversations with participants after the experiment, we learned that Anonymity might make it more difficult for human players to communicate effectively and form emotional relationships with their AI teammates. If human players are unable to communicate effectively with their AI teammates, they may still be frustrated with the game. Thus, the impact of AI teammates’ Anonymity on the gaming experience can be complex and context-dependent, depending on factors such as skill level and player needs. However, the current design of AI teammates is based primarily on the performance of the AI algorithms and rarely addresses the importance of bridging the communication gap between humans and agents to improve performance [39].

Therefore, when implementing AI teammates, it is important to consider not only whether they should be anonymous or take a more adaptive form based on player preferences (e.g., cultural and personal factors) but also how they communicate [94,95].

6.2. The Effective Self-Disclosure Process Conducted by Social Robots

Social robots as interaction partners have high affordance: they give players easy access to positive treatment and improve their involvement in the game (H1: AF→IO, H3: AF→Val).

For players who were willing to interact with a social robot, regardless of their human or artificial teammates, emotional Valence improved when they finished the game and self-disclosed to the robot (H3: AF→Val). Social robots had a greater impact on participants’ emotions than human teammates (Table 7). Players’ emotions were mimicked, emotional valence was enhanced, and this reduced the psychological distance between them and the other players (H4: Val→DT).

Through the feedback, we also received constructive suggestions that the appearance of the social robot is crucial during the intervention process, as it increases the affordances of the technology [129,130]. KING-bot possessed a strong upper body with a thin waist, symbolizing creativity and strength, consistent with the task of healing emotions [131]. KING-bot’s visual cues led to higher esthetic judgments, aroused emotions, and evoked perceptual expressions in users [132].

While the current experimental results have shown the importance of the visual appearance of social robots in enhancing human emotions, there is still much to be explored in terms of multisensory forms of interaction. Several multisensory factors need to be considered to improve the affordances of HRI, including user inputs (e.g., verbal communication, body movements, tactile buttons, and proximity) and social robot outputs (e.g., body contact, distance, and facial expressions) [102].

6.3. The Potential Conflicts of the AI Teammates and Social Robots

We have discovered that adding a social robot after already having played with a supportive AI teammate does not bolster the uplifting effect; it actually brings it down to about the level of talking to a robot without being helped by an AI teammate and adds some feelings of distance to it. Additionally, users are not receptive to overly extreme, flat emotion regulation techniques.

We started with the assumption that, after negative gameplay, social robots for self-disclosure may alleviate aggravation, which was supported by our results. We also assumed that adding an AI teammate to increase winning chances may do so, after which possible remaining frustration may be relieved by the robot. The latter did not occur. Although social robots improved the gamers’ mood to a high degree, AI teammates did more so, and adding a robot on top of an artificial teammate downregulated the (still) good mood. A graphical representation of these results is found in Figure 7.

7. Prototype Design and Vision

To express the practical implications and future research prospects more clearly, we created a prototype of MOBA Pro (Figure 8).

MOBA Pro helps players build real-time connections with social robots and AI teammates. At the same time, MOBA Pro can enhance its capabilities by collecting relevant data during interventions with social robots and AI peers. Using machine learning and deep learning techniques, it can then train highly personalized interaction models tailored to individual users. This approach enables a personalized and technologically comprehensive interactive experience that leads to greater overall effectiveness.

In future entertainment, removing negative emotions from users will no longer be limited to a single GUI channel. With changes in emotional computing, multimodal and physiological interfaces, material science, and other technologies, ubiquitous computer systems can also access human behavioral and perceptual data at a deeper level and interact seamlessly with humans.

This prototype is merely illustrative to show the practical significance of our theoretical model. Further efforts can be made by designers and engineers whose contributions will help to further develop and refine the theoretical model.

8. Limitations and Future Work

This study has the following research limitations:

- (a) Due to the spread of COVID-19 in China, we were unable to closely observe users’ immediate responses to HRI and collect more informative data, which deprived us of the opportunity to gain further research insights. Once the epidemic has subsided, we will conduct our next study in a fully offline physical environment.

- (b) We did a before and after comparison of the human teammate mode and then the AI teammate mode, and this order may have some impact on the results. This will need to be addressed in later studies to more thoroughly examine the separate and mixed effects of the human teammate mode and the AI teammate mode.

- (c) It is important to be aware that our measurement methods may have limitations. Although we have reported the design principles and effectiveness of the measurement metrics for all instruments, it is worth noting that these instruments are preliminary and should be refined when considering future proposals.

- (d) In terms of research assumptions and model design, our study could have considered more disaggregated emotion assessment factors. MOBA players’ emotions are multifaceted, and simplistic binary categorizations of emotions may be inappropriate. It could be argued that the AI teammates and social robots caused conflict for some participants, which could exacerbate the player’s negative emotions.

In addition, some participants described the phenomenon of a negative atmosphere in MOBA games, the novelty of the experiment once completed, and their mixed preferences for interacting with a social robot. On the positive side, one of the participants remarked: “This robot is so cute; will it appear on the market as a co-branded product shortly? It looks like it will be a good companion. Good luck with your experiment!” On the downside, another participant stated: “Must we have an AI match? Is this an insult to my skills? Why can’t I curse when playing MOBA? It is my nature to curse; I am the best player at BNU Zhuhai”. Additionally, another player said: “The robot in this video is honestly a bit scary. I think there is a bit of an uncanny valley effect, and I wonder whether non-video interaction can reduce the uncanny valley effect”. In other words, it would be wise to consider population segmentation, discerning those who focus on cuteness from those who focus on personal prowess, while avoiding uncanny effects by making robots tangible interfaces instead of screen-based avatars.

In view of our participants’ comments, we hope to conduct more contextual and task exploration work in the future, such as addressing the following questions:

- (a) Does a touch interface make users feel more comfortable during the interaction when the robot is created from e-textile materials?

- (b) Using sentiment analysis, can social robots sense the users’ diverse emotions during MOBA interactions?

- (c) To achieve natural interactions and user experiences, how can social robots further absorb existing technologies to improve usability in the physical interaction of MOBAs?