Holo3DGIS: Leveraging Microsoft HoloLens in 3D Geographic Information

Abstract

1. Introduction

2. Methods

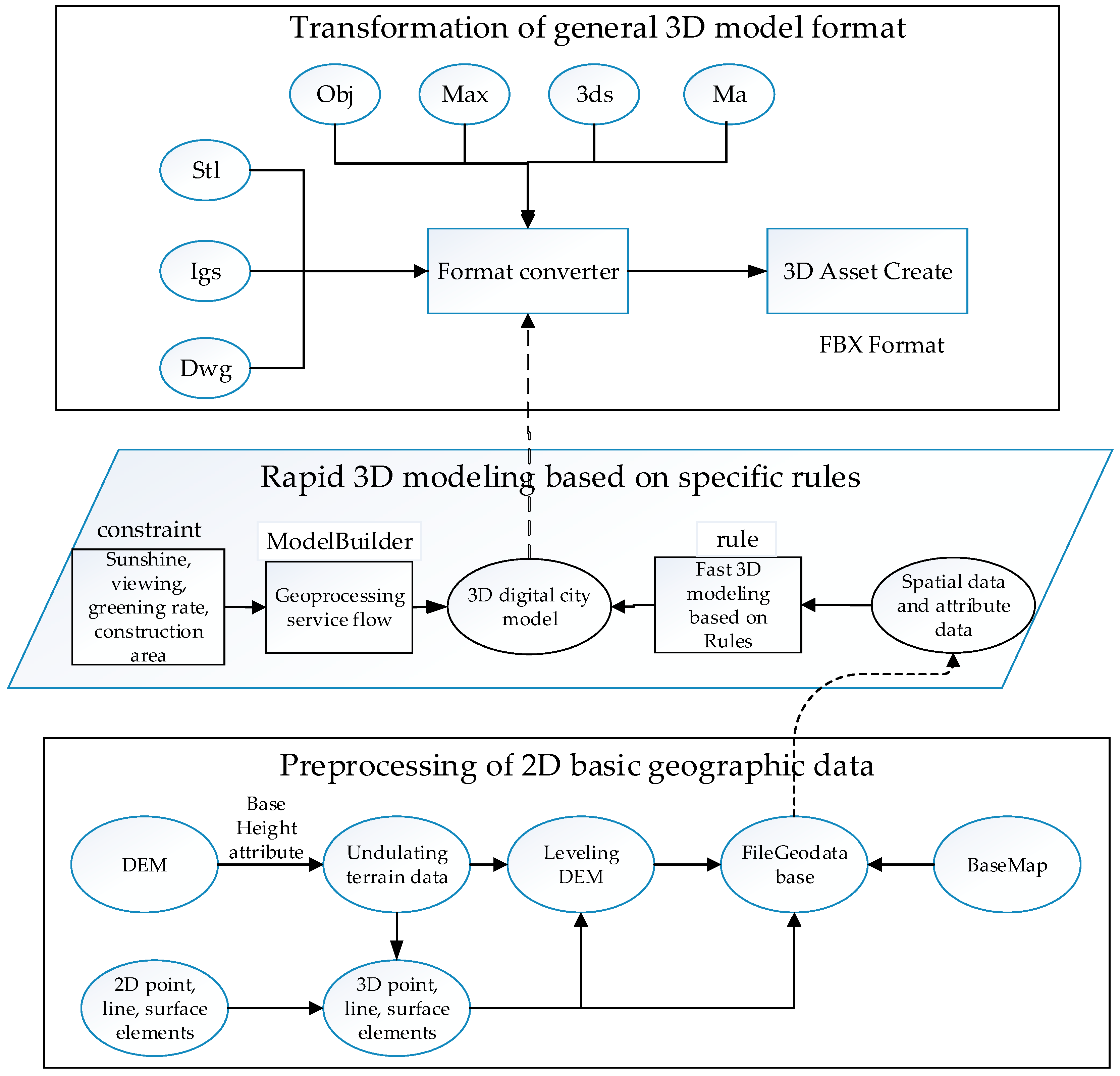

2.1. Creation of the 3D Asset

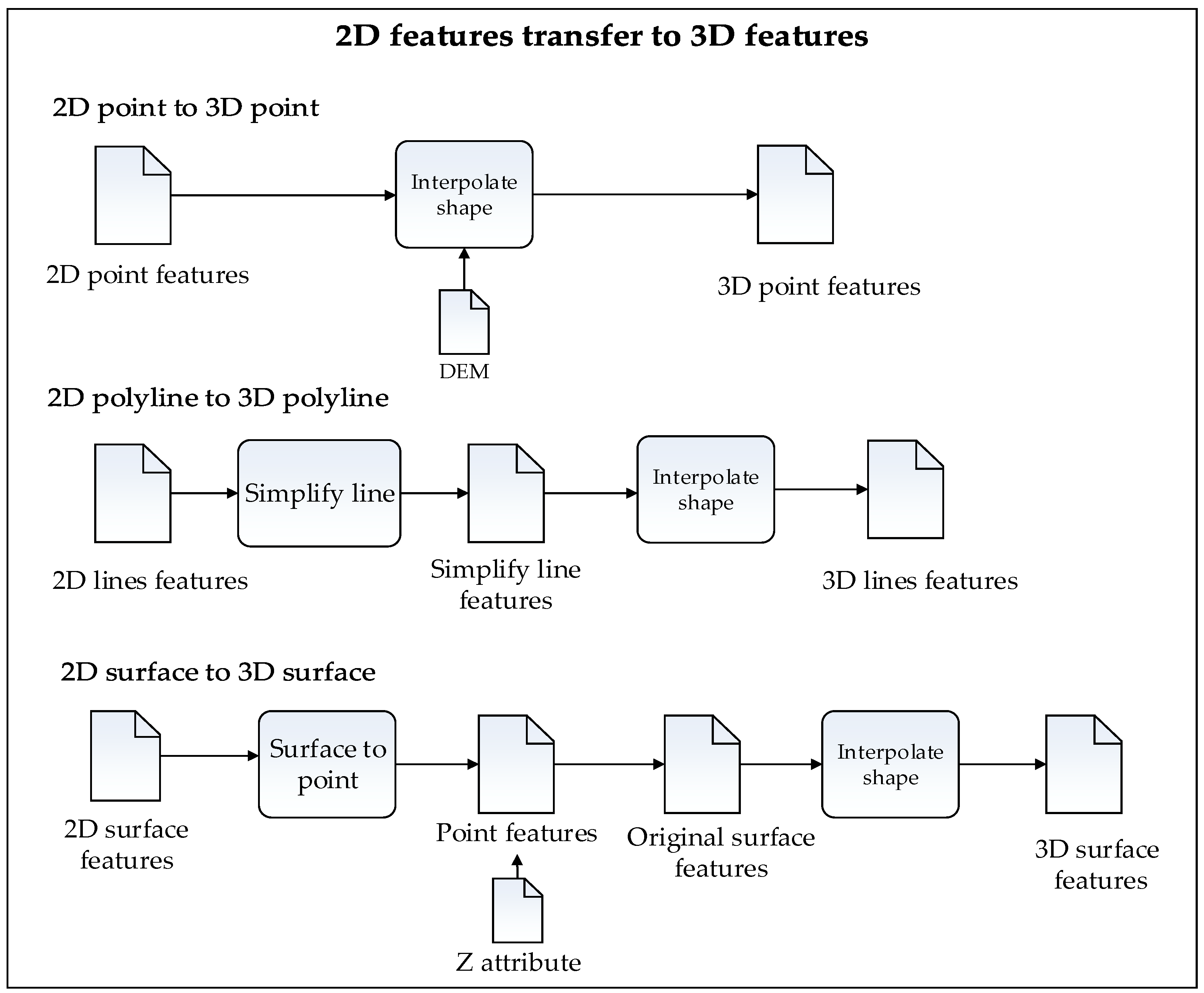

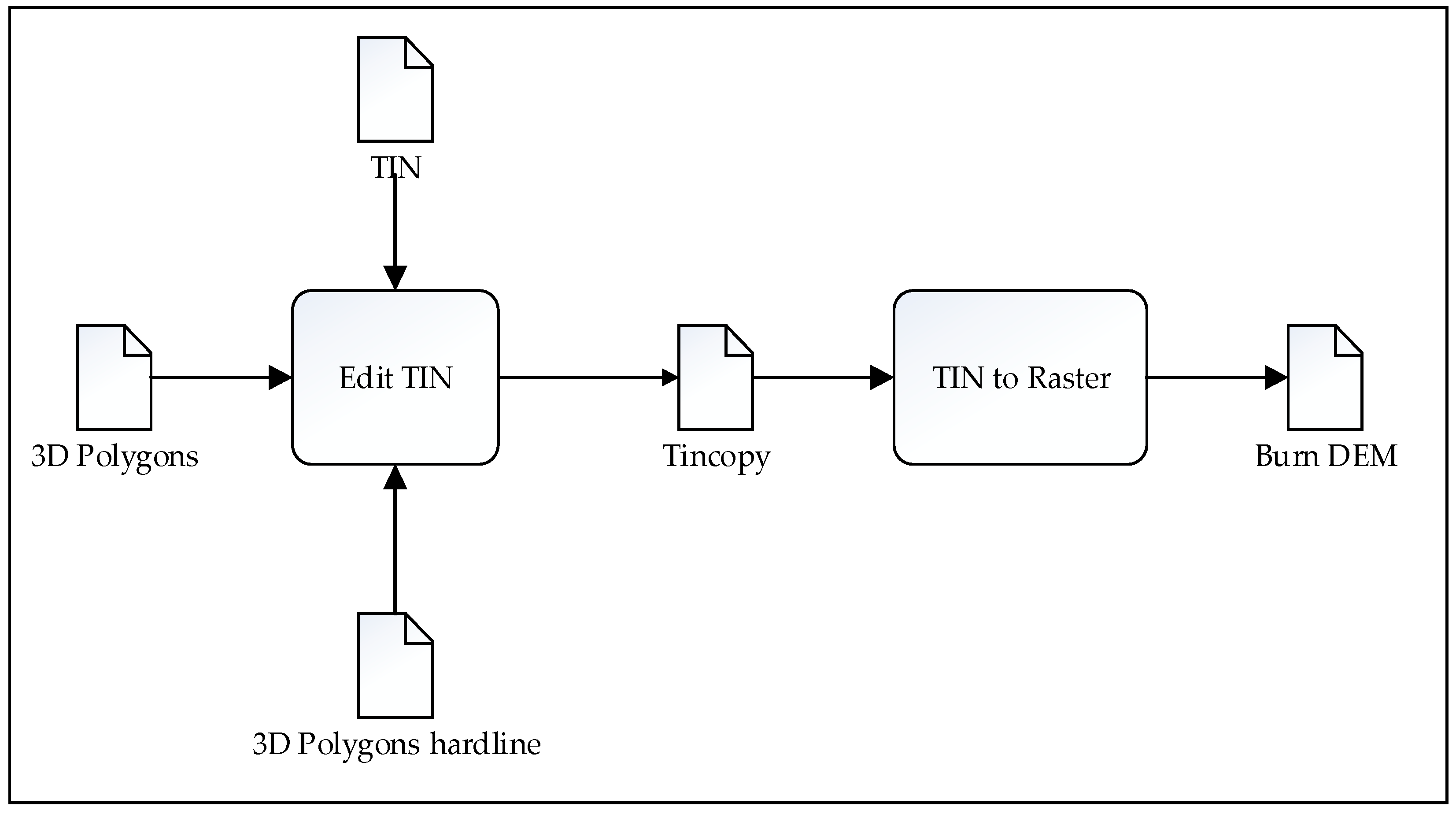

2.1.1. Preprocessing of 2D Basic Geographic Data

2.1.2. Rapid 3D Modelling Based on Specific Rules

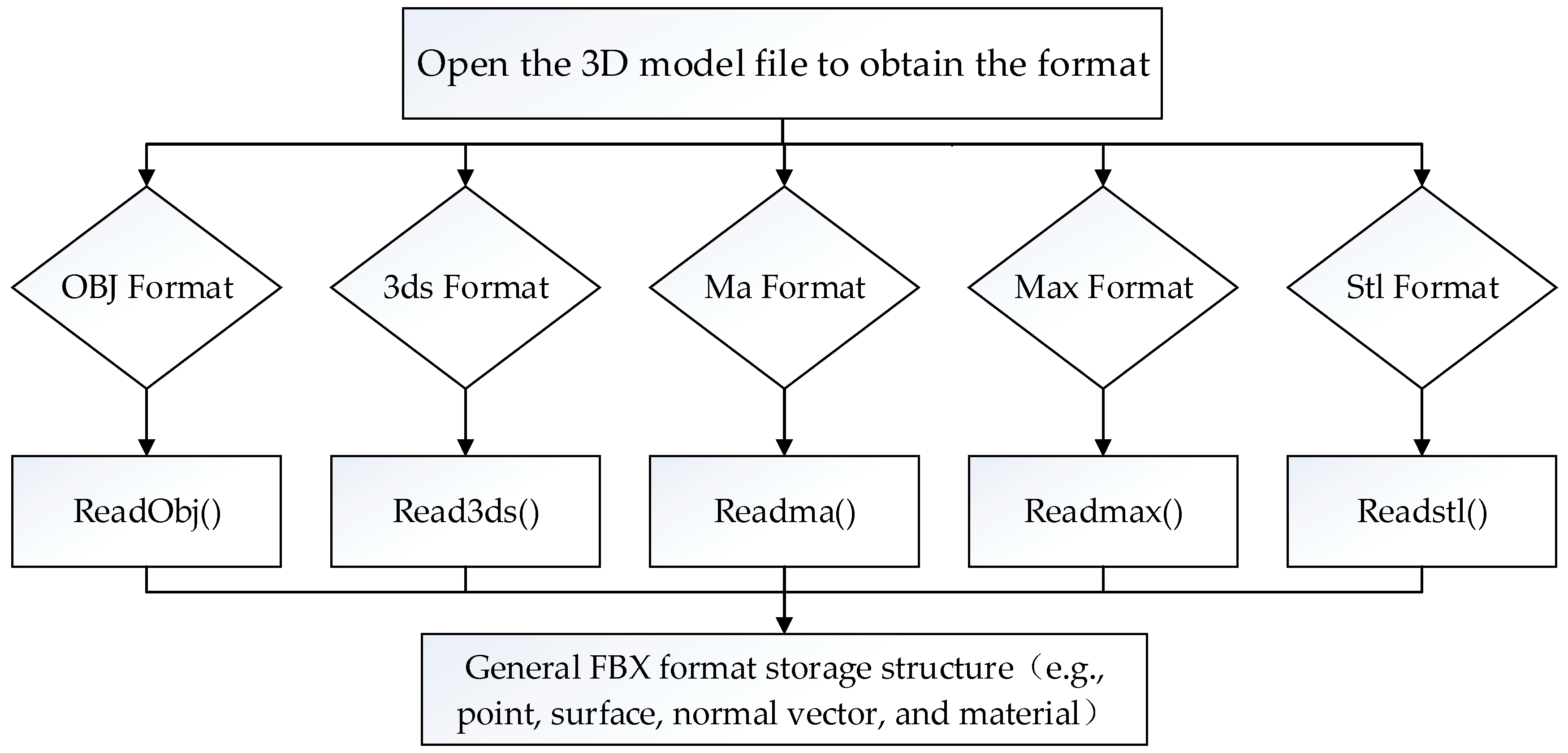

2.1.3. Transformation of the General 3D Model Format

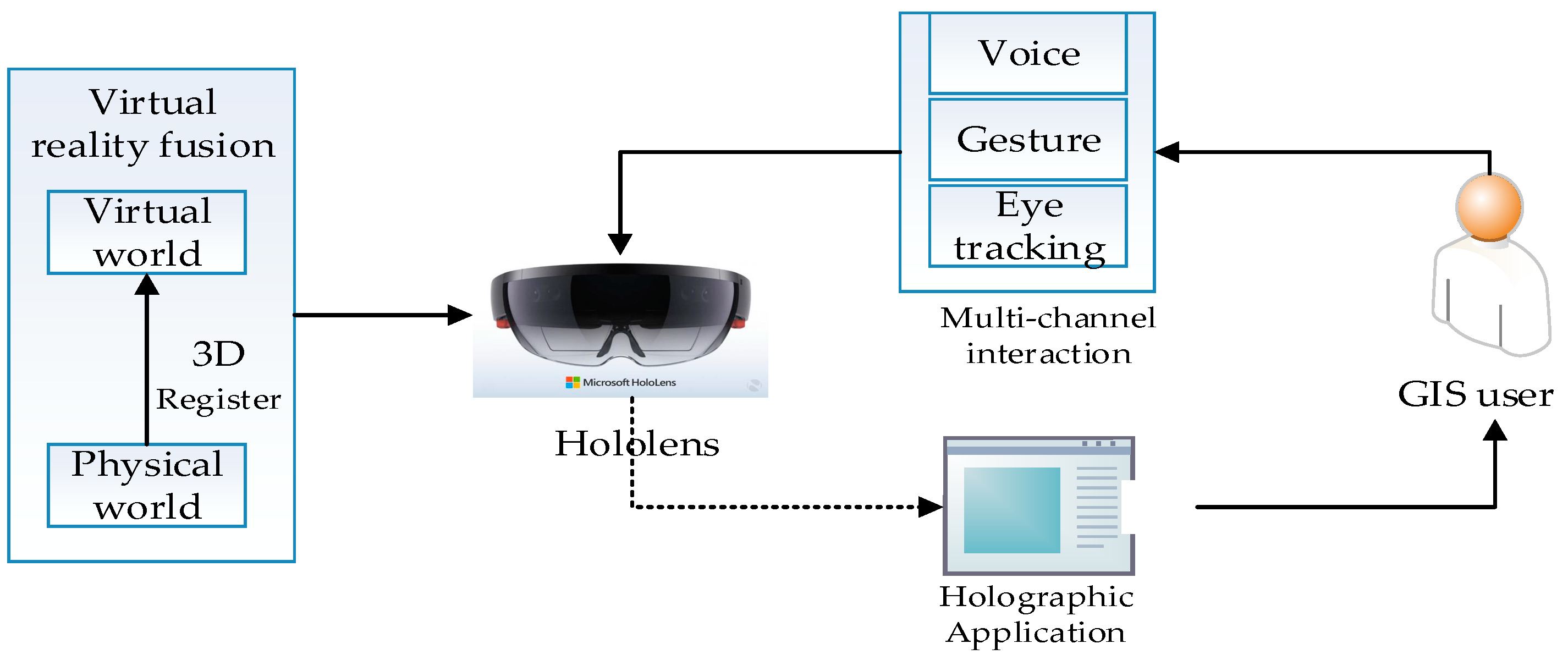

2.2. Holo3DGIS App Development

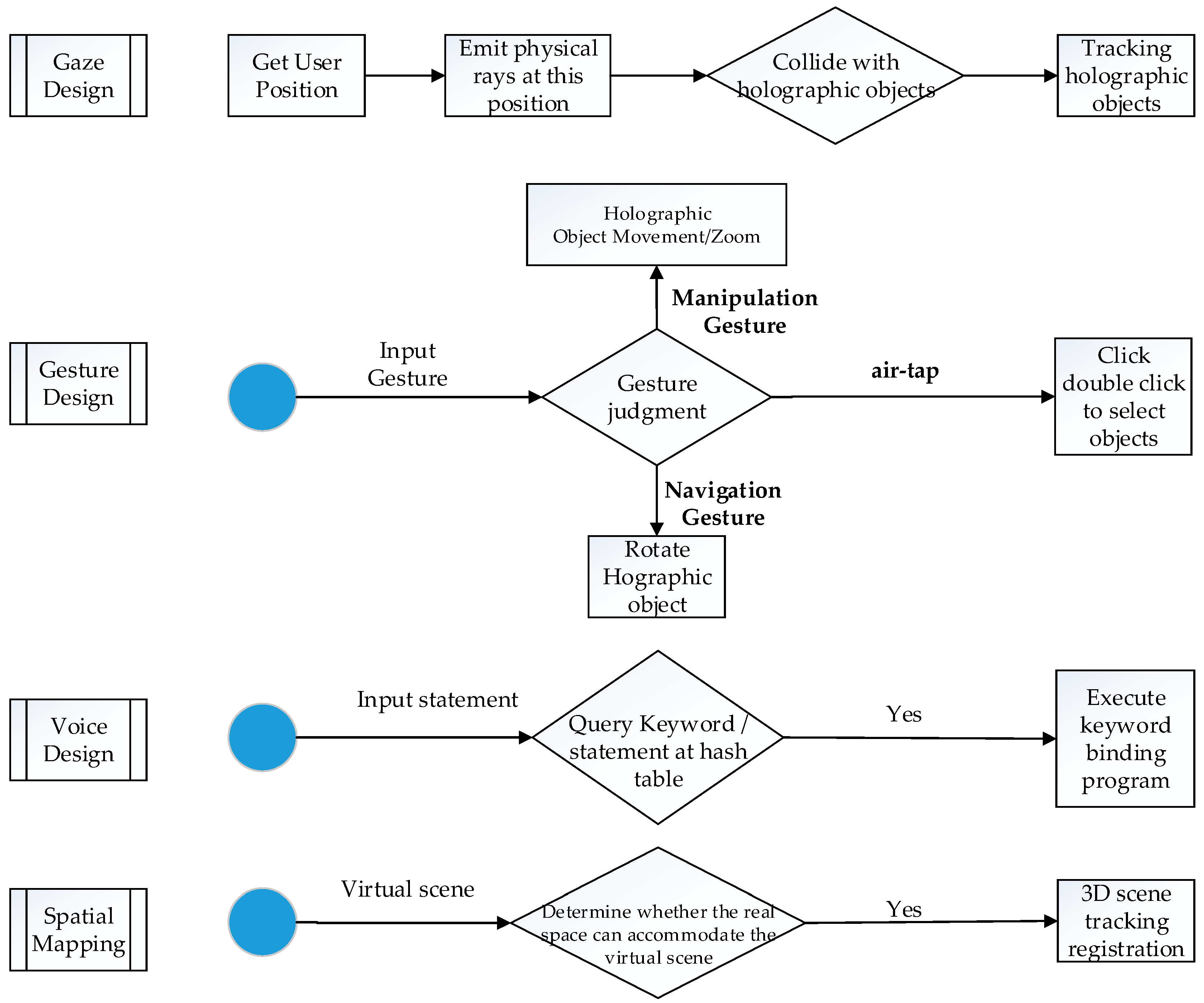

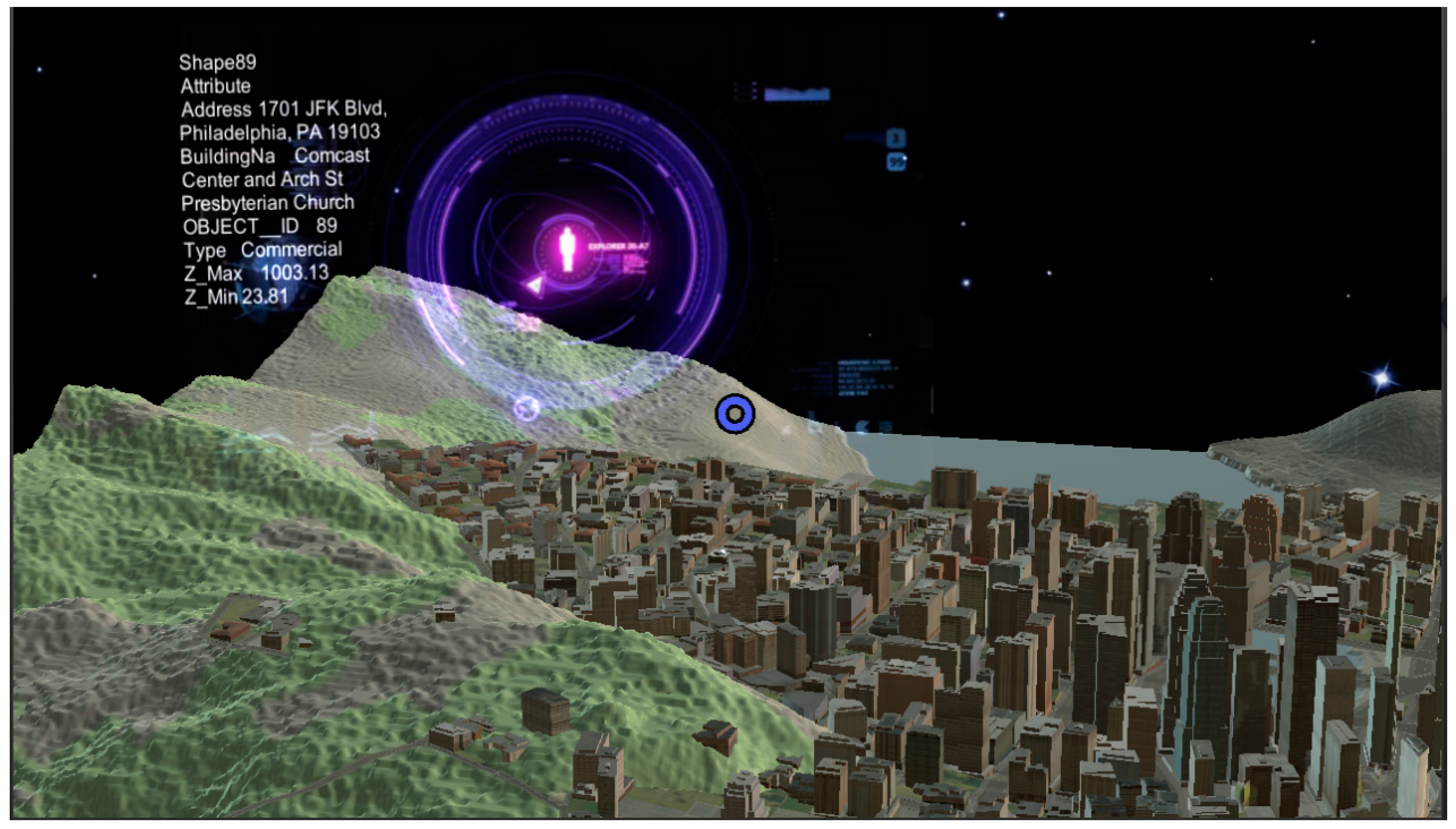

- Gaze design: Gaze is the fastest way for users to interact with Holo3DGIS applications. It is also the first form of user input and is used to target holographic objects. The gaze function must not only capture where the user is looking but also give the user audio or visual feedback. It is implemented from the location and direction of the user’s head by casting a physics ray in the Unity3D engine. When the ray collides with a holographic object, the collision result includes the location of the collision point and information about the object hit, which allows objects in the holographic geographical scene to be tracked.

- Gesture design: The function of the gesture design is to transform the user’s intention into actual action. By tracking and capturing the input gesture based on the location and state of the user’s hand, the system automatically creates the appropriate response to manipulate the objects in the holographic geographic scene. Holo3DGIS only recognizes three gestures: air-tap, navigation gesture, and manipulation. The air-tap triggers click and double-click events. The navigation gesture is used to realize the rotation of holographic objects. Manipulation controls the movement of the holographic objects.

- Voice design: Voice control lets the user interact with holographic objects without using their hands. The design of voice commands should follow four rules: (1) keep commands very concise, which makes them easier for the system to recognize and for the user to remember; (2) use simple vocabulary; (3) avoid single-syllable commands; and (4) avoid commands already used by the HoloLens system. Voice control is provided by defining keywords and their corresponding behaviors in the application: when the user speaks a keyword, the preset action is invoked. In the implementation, keywords and their behaviors are stored in a hash table, a keyword recognizer instance is initialized with the registered keywords, and a handler for recognized voice commands carries out the subsequent processing. Voice design and gesture design serve the same purpose, namely interaction with objects in the holographic geographic scene, but they realize it by different means.

- Spatial mapping: Spatial mapping brings the virtual world into the real world by placing the holographic 3D geographical scene at the most suitable location in the user’s physical space, thereby combining the real world with the virtual geographic world. The spatial mapping design consists of two main steps. First, the HoloLens depth camera scans the target physical space, and the scanned data are triangulated by the built-in pipeline to model and digitize the physical world. This ability to model the physical world with a 3D camera is one of the indicators that distinguish MR from AR. Second, the system calculates whether the digitized physical space can fully accommodate the virtual geographic scene. If it can, the virtual and real worlds are combined. If not, the system informs the user that the virtual and real worlds cannot be combined. Through spatial mapping, the HoloLens avoids placing images in obstructed positions and generates an experience that is contextualized to the user’s location.
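The gaze bullet above describes a ray cast from the user's head that reports the collision point and the object hit. The following Python sketch illustrates that idea outside Unity3D. It is not the authors' implementation: the `Box` class, the `gaze_hit` function, and the use of axis-aligned bounding boxes as stand-ins for colliders are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box standing in for a hologram's collider (assumption)."""
    name: str
    lo: tuple  # minimum corner (x, y, z)
    hi: tuple  # maximum corner (x, y, z)


def ray_box_distance(origin, direction, box):
    """Distance along the ray to the box, or None if the ray misses (slab method)."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def gaze_hit(head_position, head_forward, boxes):
    """Return the nearest object along the gaze ray with its hit point, or None.

    head_forward must be a non-zero vector; it is normalized here.
    """
    norm = math.sqrt(sum(c * c for c in head_forward))
    direction = tuple(c / norm for c in head_forward)
    best = None
    for box in boxes:
        t = ray_box_distance(head_position, direction, box)
        if t is not None and (best is None or t < best["distance"]):
            point = tuple(o + t * d for o, d in zip(head_position, direction))
            best = {"object": box.name, "point": point, "distance": t}
    return best
```

Calling `gaze_hit` once per frame with the current head pose would give the application both the targeted object and a point at which to draw a cursor or other visual feedback.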

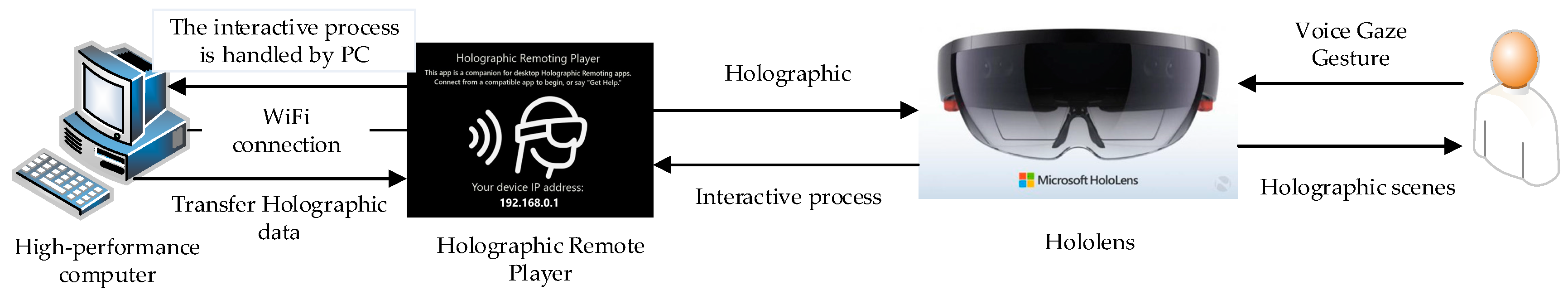
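The voice design described in this section stores keywords and their behaviors in a hash table and invokes the matching behavior when a keyword is recognized. A minimal Python sketch of that lookup follows. The `KeywordDispatcher` class and its method names are hypothetical, and speech recognition itself is not modelled: the recognized phrase is assumed to arrive as a string.

```python
class KeywordDispatcher:
    """Maps spoken keywords to actions, mirroring a keyword/behavior hash table."""

    def __init__(self):
        self._actions = {}  # normalized keyword -> callable taking no arguments

    def register(self, keyword, action):
        """Register one keyword; duplicates are rejected to keep commands unambiguous."""
        key = keyword.strip().lower()
        if not key:
            raise ValueError("keyword must not be empty")
        if key in self._actions:
            raise ValueError(f"keyword already registered: {keyword!r}")
        self._actions[key] = action

    def on_phrase_recognized(self, phrase):
        """Run the action for a recognized phrase; return False if it is unknown."""
        action = self._actions.get(phrase.strip().lower())
        if action is None:
            return False
        action()
        return True


# Usage: a hypothetical scene state changed by two voice commands.
scene = {"rotation_deg": 0}
dispatcher = KeywordDispatcher()
dispatcher.register("rotate scene", lambda: scene.update(rotation_deg=scene["rotation_deg"] + 90))
dispatcher.register("reset scene", lambda: scene.update(rotation_deg=0))
dispatcher.on_phrase_recognized("Rotate Scene")
```

Keeping the table separate from the recognizer means the same actions could also be triggered by gestures, which matches the point that voice and gesture serve the same purpose by different means.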

2.3. Holo3DGIS App Compiler Deployment

2.3.1. The Deployment Pattern of Small Scenes

2.3.2. The Deployment Pattern of Large Scenes

3. Experiments and Results

3.1. Experimental Preparation

- Data: 2D basic geographic information data of Philadelphia [23], including a digital elevation model (DEM), basemap, point features (e.g., infrastructure and trees), polyline features (e.g., streets), polygon features (e.g., buildings), and other data.

- Software: ArcGIS 10.2 (2D GIS data preprocessing), CityEngine 2014.1 (rapid 3D modelling based on specific rules), Unity3D 5.4.0f3 (core engine for the development of Holo3DGIS applications), Visual Studio Community 2015 (platform for Holo3DGIS application development, compilation, and deployment), and Windows 10 Pro (HoloLens development requires Windows 10 Pro, Enterprise, or Education).

- Hardware: Microsoft HoloLens Development Edition [24], PC (Dell, Intel(R) Xeon(R) CPU E3-1220 v3 at 3.10 GHz (4 CPUs), ~3.1 GHz).

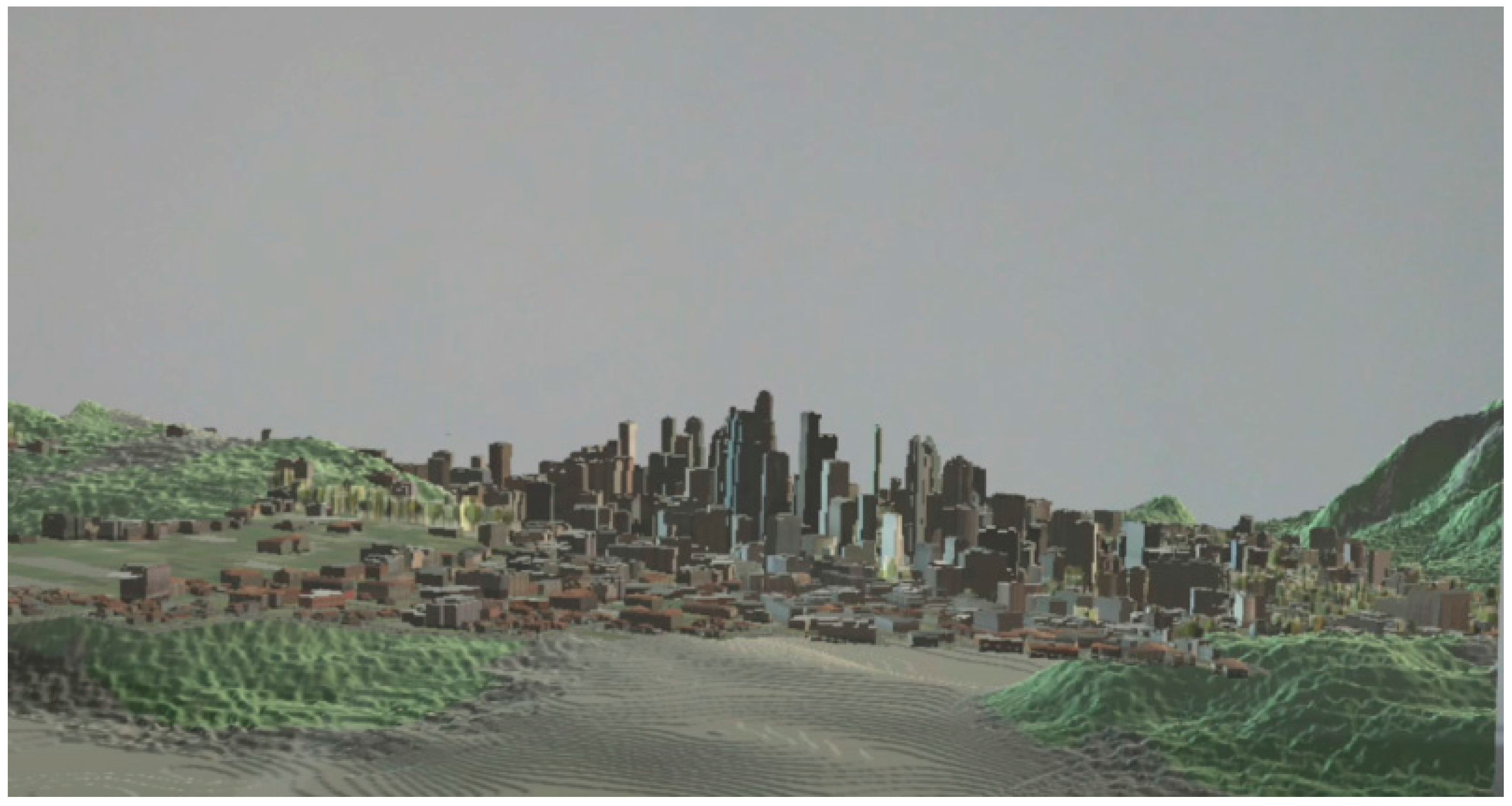

3.2. Experimental Results and Analyses

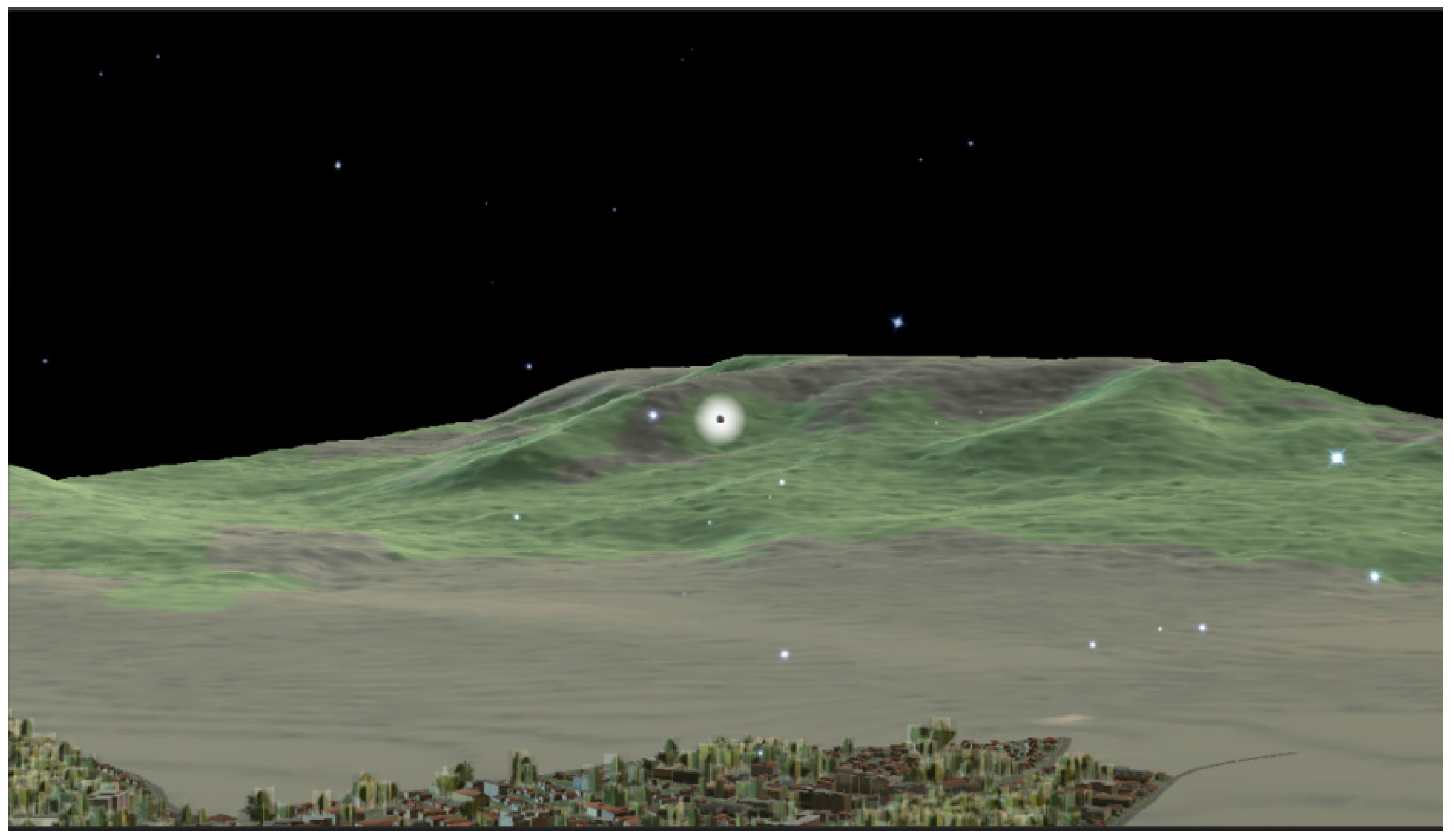

3.2.1. Introduction of the Virtual Geographic World into the Physical World

- In the Holo3DGIS application, geographic scenes and objects in the digital Philadelphia scene are rendered as light and sound in the user’s surroundings. The digital Philadelphia scene is therefore part of the physical world and has the characteristics of a real-time virtual object.

- Interactive features: the digital city in the Holo3DGIS application can respond to the gaze, gestures and voice instructions of the GIS user. In addition to interacting with people, the digital city, as a part of the physical world, can interact with the surface of the physical world.

- Depth and perspective information: when the digital city in the Holo3DGIS application is placed in the physical world, it carries a wealth of depth and perspective information; the distance and angle between users and digital city entities are measurable.

- Spatial persistence: the digital Philadelphia scene is inserted into the real world by an anchor in space; when a GIS user returns, the digital Philadelphia scene remains in its original position.

3.2.2. New Interactive Mode of the 3D GIS

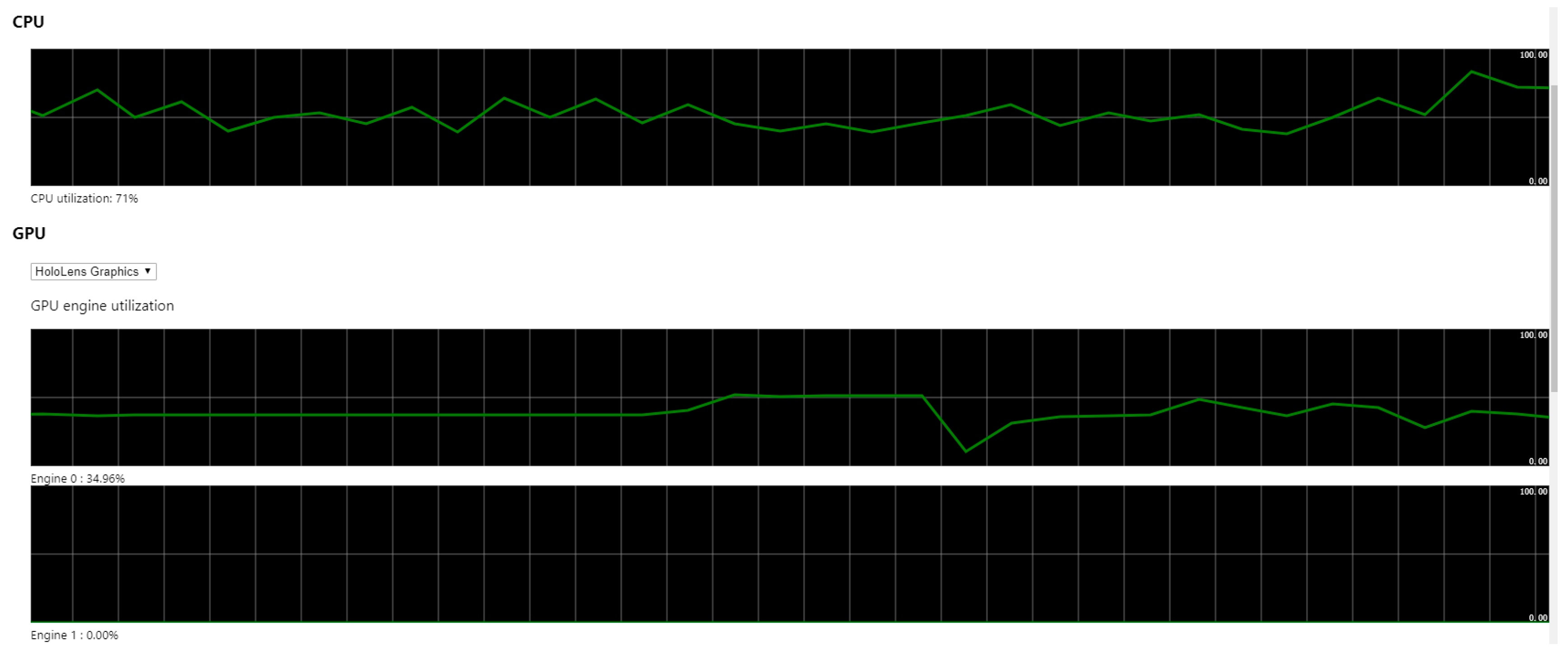

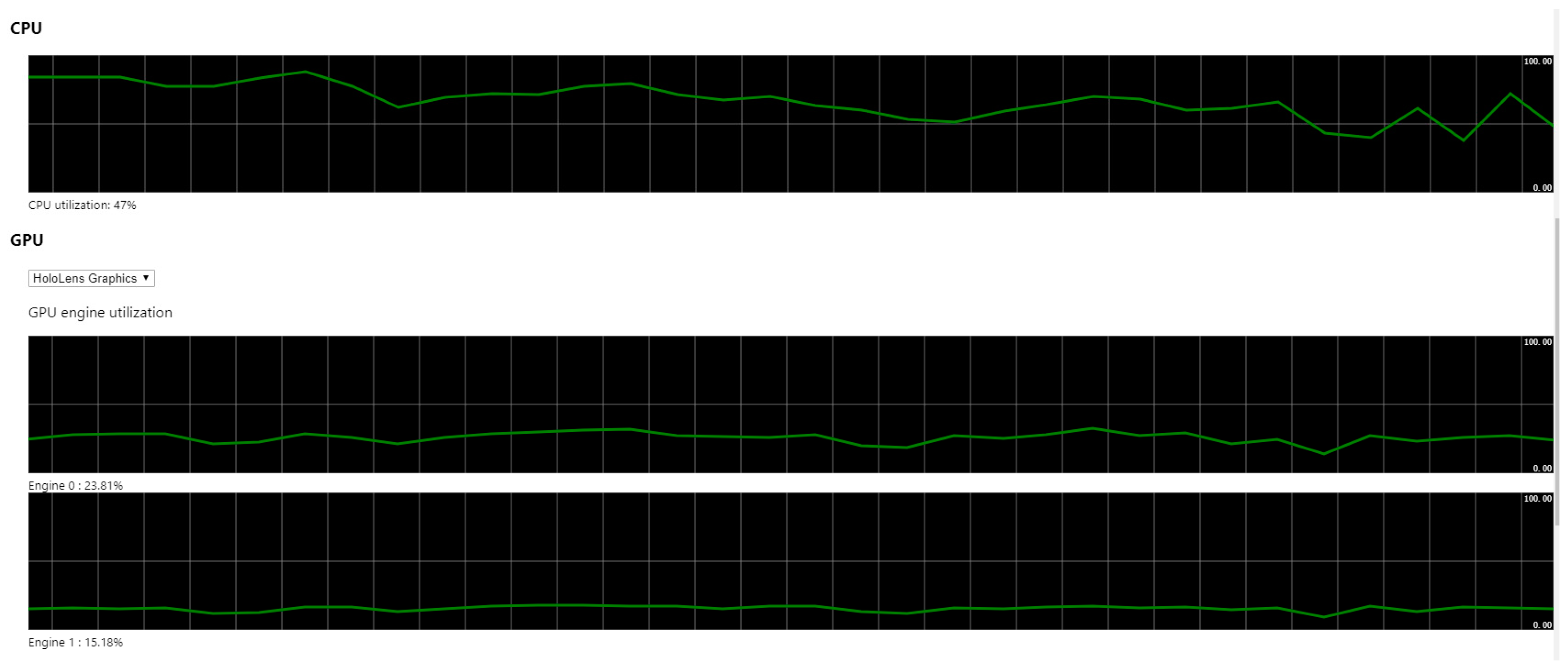

3.2.3. Performance Comparison

3.2.4. Human-Computer Interaction Test

3.2.5. Limitations and Deficiencies

- The Holo3DGIS application does not consider how to organize and schedule geographic scene data according to the display requirements. Therefore, using an MR device such as the HoloLens to visualize 3D geospatial data may introduce a delay when a geographic scene exceeds the rendering capabilities of the computer connected to the HoloLens. As a result of this delay, the user may suffer from nausea.

- Holo3DGIS applications can be developed with the Unity3D engine, but the development mode is then limited to that engine. Building an independent development engine requires the Holographic DirectX 11 application programming interface (API). DirectX 11 lacks a corresponding base library and requires designing from scratch; therefore, development is considerably more difficult.

- MR devices such as the HoloLens have a very limited field of view (approximately 30° by 17.5°), which may narrow the user’s visual space. The user may also suffer from fatigue because their eyes need to focus on the HoloLens display plane, within an inch of their eyes, for long periods. Finally, the weight of the HoloLens can cause neck strain after extended use. These three drawbacks may cause discomfort to the GIS user.

- Holo3DGIS applications are best suited to the first-person perspective, although they can also be used for third-person browsing, which is less effective. The main reason is that the third-person perspective relies on the mixed reality capture system in the HoloLens Device Portal. This system uses a 2-megapixel red-green-blue (RGB) camera to capture the video stream, so the image quality is unsatisfactory. All of the experimental screenshots in this article were obtained from the third-person mixed reality capture stream, so they look worse than the view seen when wearing the HoloLens directly. In addition, the spatial mapping screenshot captures both the real physical world and the holographic virtual image, whereas the gaze and gesture screenshots capture only the holographic virtual image. Improving the screenshots requires addressing the limited resolution of the third-person capture. Spectator view can be used to capture the mixed reality picture, with an output resolution of 1080p or even 4K. A single-lens reflex (SLR) camera or other recording equipment with a high-definition multimedia interface (HDMI) output can also provide a wider view. Currently, the third-person views shown at HoloLens conferences are produced through spectator view. This method requires two HoloLens devices, an HDMI-capable camcorder, and a high-performance computer.
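To make the field-of-view limitation concrete, the sketch below converts the approximate 30° by 17.5° field of view quoted above into the size of the visible window at a given viewing distance, using the relation extent = 2 · d · tan(FOV / 2). The function name and the 2 m viewing distance are illustrative assumptions, not values from the experiments.

```python
import math


def visible_extent(distance_m, h_fov_deg=30.0, v_fov_deg=17.5):
    """Width and height (in metres) of the region covered by the display
    at distance_m, for the given horizontal and vertical fields of view."""
    width = 2.0 * distance_m * math.tan(math.radians(h_fov_deg / 2.0))
    height = 2.0 * distance_m * math.tan(math.radians(v_fov_deg / 2.0))
    return width, height


# At an assumed viewing distance of 2 m the window is about 1.07 m by 0.62 m.
width, height = visible_extent(2.0)
print(f"{width:.2f} m x {height:.2f} m")
```

Under these assumptions, a city-scale holographic scene larger than roughly one metre across at 2 m cannot be seen all at once, so the user has to move their head or step back to take it in.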

4. Conclusions

- The real world is a complex and imperfect three-dimensional map. The integration and interaction of virtual geographic information scenes and real-world data through mixed reality technology will greatly improve the GIS user’s application experience. It changes the traditional modes of vision, bodily sensation, and interaction.

- Holo3DGIS has perspective information and depth information, which enables GIS users to experience real 3D GIS.

- MR provides a set of new input and output interactions for the GIS; it outputs an interactive feedback loop between the real world, the virtual geographic world, and the user to enhance the sense of authenticity throughout the user’s experience. In addition, it provides a new research direction and means for 3D GIS data visualization.

- GIS provides functions for the collection, processing, storage, and spatial analysis of geographic data for mixed reality. Moreover, GIS broadens the scope and applications of mixed reality.

- Commonly shared data structures and interfaces between MR and GIS need to be established. Currently, GIS access to the MR platform requires data format transformation. On the one hand, this could increase the cost of MR development. On the other hand, format transformation could degrade data quality, which would diminish the user’s experience.

- By adding a geospatial analysis model service to the Holo3DGIS application, changes in geographic processes could be displayed dynamically in holographic mixed reality.

- By adopting distributed computing technologies, holographic rendering calculations could be transferred from a single computer to multiple computers for parallel computing. This would overcome the limited computing capacity of a single computer and allow larger geographic scenes to be rendered.

- By designing a service-oriented Holo3DGIS framework, in which 3D geographic data are published as services with precise interfaces and contracts for communication, MR could gain access to virtual geographic scene services in a standard manner. The virtual geographic scene services could then be used by other MR users in the network, and MR geographic resources could be accessed on demand.

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Macatulad, E.G.; Blanco, A.C. 3DGIS-Based Multi-Agent Geosimulation and Visualization of Building Evacuation Using GAMA Platform. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-2, 87–91.

- Verbree, E.; Maren, G.V.; Germs, R.; Jansen, F.; Kraak, M.J. Interaction in virtual world views-linking 3D GIS with VR. Int. J. Geogr. Inf. Syst. 1999, 13, 385–396.

- Germs, R.; Maren, G.V.; Verbree, E.; Jansen, F.W. A multi-view VR interface for 3D GIS. Comput. Graph. 1999, 23, 497–506.

- Rebelo, F.; Noriega, P.; Duarte, E.; Soares, M. Using virtual reality to assess user experience. Hum. Factors 2012, 54, 964–982.

- Frank, L.A. Using the computer-driven VR environment to promote experiences of natural world immersion. In Proceedings of the SPIE Engineering Reality of Virtual Reality, Burlingame, CA, USA, 4–5 February 2013; Volume 8649, pp. 185–225.

- Tingoy, O.; Gulluoglu, S.; Yengin, D. The use of virtual reality as an educational tool. In Proceedings of the 3rd International Technology, Education and Development Conference, Valencia, Spain, 9–11 March 2009.

- Sabir, M.; Prakash, J. Virtual Reality: A Review. In Proceedings of the 2nd International Conference on Advance Trends in Engineering and Technology, Jaipur, India, 17–19 April 2014.

- Berryman, D.R. Augmented reality: A review. Med. Ref. Serv. Q. 2012, 31, 212–218.

- Uchiyama, H.; Saito, H.; Servières, M.; Moreau, G. AR City Representation System Based on Map Recognition Using Topological Information. Lect. Notes Comput. Sci. 2009, 5622, 128–135.

- Sielhorst, T.; Feuerstein, M.; Navab, N. Advanced Medical Displays: A Literature Review of Augmented Reality. J. Disp. Technol. 2008, 4, 451–467.

- Mackay, W.; Wellner, P. Computer augmented environments: Back to the real world. Haematologica 1993, 94, 170–172.

- Insley, S. Augmented Reality: Merging the Virtual and the Real. Eng. Technol. 2003, 8, 1–8.

- Wang, X.; Dunston, P.S. User perspectives on mixed reality tabletop visualization for face-to-face collaborative design review. Autom. Constr. 2008, 17, 399–412.

- Brigham, T.J. Reality Check: Basics of Augmented, Virtual, and Mixed Reality. Med. Ref. Serv. Q. 2017, 36, 171–178.

- HoloLens Hardware Details. Available online: https://developer.microsoft.com/en-us/windows/mixed-reality/hololens_hardware_details (accessed on 12 November 2017).

- Furlan, R. The future of augmented reality: Hololens-Microsoft’s AR headset shines despite rough edges [Resources_Tools and Toys]. IEEE Spectr. 2016, 53, 21.

- Holodisaster: Leveraging Microsoft HoloLens in Disaster and Emergency Management. Available online: https://www.researchgate.net/publication/312320915_Holodisaster_Leveraging_Microsoft_HoloLens_in_Disaster_and_Emergency_Management (accessed on 18 December 2017).

- Stark, E.; Bisták, P.; Kučera, E.; Haffner, O.; Kozák, Š. Virtual Laboratory Based on Node.js Technology and Visualized in Mixed Reality Using Microsoft HoloLens. Fed. Conf. Comput. Sci. Inf. Syst. 2017, 13, 315–322.

- NASA, Microsoft Collaboration will Allow Scientists to ‘Work on Mars’. Available online: https://www.jpl.nasa.gov/news/news.php?feature=4451 (accessed on 18 December 2017).

- Roth, D.; Yang, M.; Ahuja, N. Learning to recognize three-dimensional objects. Neural Comput. 2002, 14, 1071–1103.

- Müller, P.; Wonka, P.; Haegler, S.; Ulmer, A.; Van Gool, L. Procedural modeling of buildings. ACM Trans. Graph. 2006, 25, 614–623.

- Performance Recommendations for HoloLens Apps. Available online: https://developer.microsoft.com/en-us/windows/mixed-reality/performance_recommendations_for_hololens_apps (accessed on 20 December 2017).

- Geographic Information Data of Philadelphia. Rar. Available online: https://pan.baidu.com/s/1eSuAOCi (accessed on 20 December 2017).

- The Commercial Suite and the Development Edition are Fully Equipped for All HoloLens Developers, Whether You’re Working as an Individual or a Team. Available online: https://www.microsoft.com/en-us/hololens/buy#buyenterprisefeatures (accessed on 20 December 2017).

- Gaze. Available online: https://developer.microsoft.com/en-us/windows/mixed-reality/gaze (accessed on 20 December 2017).

| Data Preparation: 2D Building Vector, Contour Line, and Texture Map |
|---|
| Interpretative statement: the terms Lot, Building, Frontfacade, Floor, and (Wall \| Tile \| Window) are variables in the CGA rules file; extrude(), comp(), split(), Repeat(), and texture() are functions of the CGA rules; the symbol --> is similar to an assignment (=). The result of executing the CGA file is a textured 3D building model. |
| Lot --> extrude(height) Building // Create the 3D buildings |
| Building --> comp(f){front: Frontfacade \| side: Sidefacade \| top: roof} // Divide the building into three parts: the front, the sides, and the roof |
| Frontfacade --> split(y) Repeat{Floor} // Divide the front facade into the target floors |
| Floor --> split(x) Repeat{Wall \| Tile \| Wall} // Split the floors into walls, windows, doors, and floors |
| (Wall \| Tile \| Window) --> texture(texture_function) // Paste the texture onto each segmented part with the mapping function |
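The rule sequence in the table (extrude a lot, take the front facade, split it into floors, split each floor into tiles, then texture each part) can be mimicked in a few lines of Python to show how a single footprint expands into many textured parts. This is only an illustrative sketch, not CGA and not the authors' rule file: `repeat_split`, `build_front_facade`, the equal-size splitting policy, and the default texture name are all assumptions.

```python
def repeat_split(length, approx_size):
    """Divide `length` into equal parts whose size is close to `approx_size`.

    Always returns at least one part (assumed policy for the Repeat step).
    """
    count = max(1, round(length / approx_size))
    return [length / count] * count


def build_front_facade(lot_width, height, floor_height, tile_width,
                       texture_function=lambda level, index: "facade_texture"):
    """Expand one lot edge into floors and textured tiles.

    texture_function is a hypothetical callback that picks a texture name
    for the tile at (floor level, tile index).
    """
    # Lot --> extrude(height) Building, keeping only the front face.
    facade = {"width": lot_width, "height": height}
    floors = []
    # Frontfacade --> split(y) Repeat{Floor}
    for level, floor_h in enumerate(repeat_split(facade["height"], floor_height)):
        # Floor --> split(x) Repeat{...}, then texture each part.
        tiles = [
            {"width": tile_w, "texture": texture_function(level, index)}
            for index, tile_w in enumerate(repeat_split(facade["width"], tile_width))
        ]
        floors.append({"level": level, "height": floor_h, "tiles": tiles})
    return floors


# Usage: a 12 m wide, 9 m tall facade with 3 m floors and 4 m tiles.
floors = build_front_facade(12.0, 9.0, 3.0, 4.0)
print(len(floors), "floors,", len(floors[0]["tiles"]), "tiles per floor")
```

The point of the sketch is that the model is driven entirely by a handful of parameters (height, floor height, tile width), which is what makes rule-based modelling fast for large numbers of buildings.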

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, W.; Wu, X.; Chen, G.; Chen, Z. Holo3DGIS: Leveraging Microsoft HoloLens in 3D Geographic Information. ISPRS Int. J. Geo-Inf. 2018, 7, 60. https://doi.org/10.3390/ijgi7020060
