Assessing Biology Pre-Service Teachers’ Professional Vision of Teaching Scientific Inquiry

Abstract

1. Introduction

1.1. Professional Vision as a Part of Professional Development

1.2. Assessment of Professional Vision

1.3. Training Fostering Professional Vision

1.4. Challenges in the Teaching and Learning of Scientific Inquiry

1.5. The Role of Formative Assessment for Science Teaching

1.6. Aim of the Study

- Dimensionality: Based on the theoretical background, we assumed a four-dimensional structure of professional vision (perception, description, explanation, and prediction). Hence, RQ 1 asked: To what extent do the empirical data collected with our instrument fit this theoretically described structure?

- Scoring: Previous research has scored participants’ answers against different expert reference norms. Hence, RQ 2 asked: Is a strict (dichotomous) or a less strict (partial credit) expert reference norm more suitable for scoring?

- Sensitivity: Because a suitable measurement instrument should be able to detect change, RQ 3 asked whether our instrument is sensitive enough to measure changes in professional vision.
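RQ 3 asks whether the test can detect change. A simple way to make this concrete is to compare each participant's pre-test and post-test ability estimates. The following minimal sketch illustrates that idea only. It is not necessarily the analysis used in this study. The helper `paired_change` and all numbers are hypothetical, and the sketch assumes that pre-test and post-test estimates lie on a common scale.

```python
"""Sketch: paired pre/post comparison of person ability estimates.

Assumption: each participant has one pre-test and one post-test ability
estimate on the same scale. All values below are hypothetical.
"""
from math import sqrt
from statistics import mean, stdev


def paired_change(pre, post):
    """Return mean change, paired t statistic, and Cohen's d_z."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [b - a for a, b in zip(pre, post)]
    m = mean(diffs)
    s = stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    t = m / (s / sqrt(len(diffs)))  # paired t statistic, df = n - 1
    d_z = m / s  # standardized mean change
    return m, t, d_z


pre = [-0.4, 0.1, 0.3, -0.2, 0.0]
post = [0.1, 0.4, 0.5, 0.2, 0.1]
m, t, dz = paired_change(pre, post)
print(f"mean change = {m:.2f}, t = {t:.2f}, d_z = {dz:.2f}")
```

A sensitive instrument should yield clearly non-zero change when an intervention such as the training is expected to have an effect.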

2. Materials and Methods

2.1. Designing a Test Instrument

2.1.1. Development of the Text Vignettes

2.1.2. Development of the Items

2.1.3. Scoring

2.2. Structure of the Training

2.3. Participants and Research Design

2.4. Analyses of Data

3. Results

3.1. Assessing Preconditions for Using the Test to Assess Professional Vision

3.2. Test Scoring

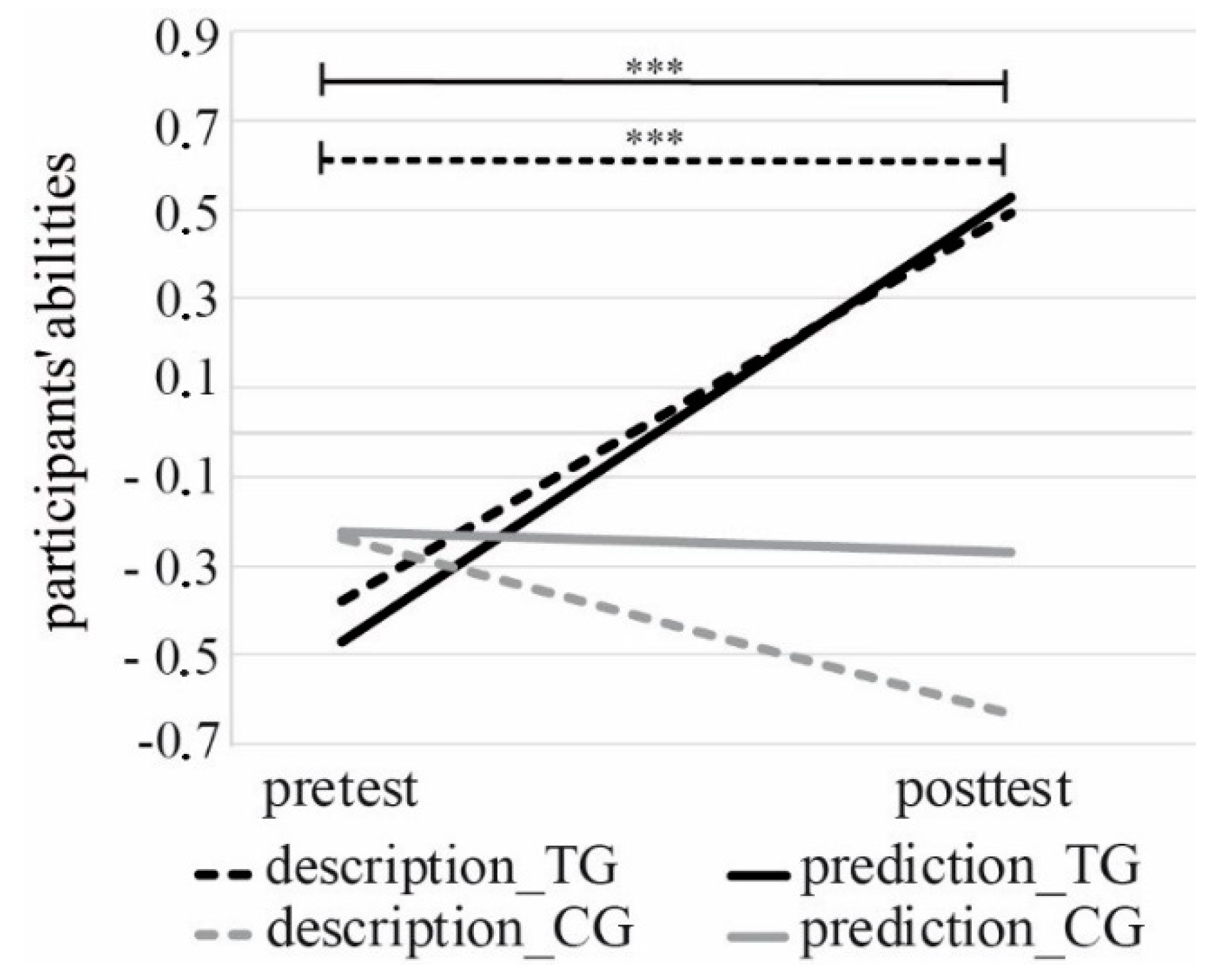

3.3. Demonstrating Sensitivity

4. Discussion

5. Limitations and Outlook

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


To what extent are the following stimuli present in the text vignette you just read? Please choose a plausible answer for each aspect:

| Ability | Example item(s) | Answer format |
|---|---|---|
| Perception | “Are responses considering the clarification of what a hypothesis is present?” | Dichotomous (yes/no) |
| Description | “The teacher explains what a hypothesis is”; “The teacher explains why a hypothesis is essential in an experiment”; “The teacher explains how a hypothesis is phrased” | Four-point Likert scale from 1 (disagree) to 4 (agree) |
| Explanation | “The teacher supports the understanding of what a hypothesis is.”; “The teacher supports the understanding regarding the hypothesis’ function within the process of experimenting.”; “The teacher supports the correct linguistic formulation of a hypothesis.” | Four-point Likert scale from 1 (disagree) to 4 (agree) |
| Prediction | “The students can transfer the hypothesis’ function to other experiments.”; “The students are able to understand what the hypothesis within further experiments is.”; “The students can utilize the preferred ‘If…, then…’ structure of formulating a hypothesis for further experiments.” | Four-point Likert scale from 1 (disagree) to 4 (agree) |
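The test combines dichotomous perception items with four-point Likert items. The study compares a strict expert reference norm (1 = hit, 0 = miss) with a less strict one (2 = hit, 1 = close, 0 = miss). The following minimal sketch shows one way such scoring could be coded. This excerpt does not define what counts as "close". Treating an answer exactly one scale point away from the expert rating as close is therefore an assumption. The function names and example ratings are also hypothetical.

```python
"""Sketch: scoring ratings against an expert reference norm.

Assumptions (hypothetical, for illustration only): each item has a single
expert rating on the four-point Likert scale (1 = disagree ... 4 = agree),
and a "close" answer is exactly one scale point away from that rating.
"""


def score_strict(answer: int, expert: int) -> int:
    """Strict (dichotomous) norm: 1 = hit (identical to expert), 0 = miss."""
    return 1 if answer == expert else 0


def score_partial(answer: int, expert: int) -> int:
    """Less strict (partial credit) norm for Likert items: 2/1/0."""
    distance = abs(answer - expert)
    if distance == 0:
        return 2  # hit
    if distance == 1:
        return 1  # close (assumed definition)
    return 0  # miss


expert_ratings = [4, 1, 3]  # hypothetical expert ratings for three items
answers = [4, 2, 1]  # one participant's ratings for the same items
strict = [score_strict(a, e) for a, e in zip(answers, expert_ratings)]
partial = [score_partial(a, e) for a, e in zip(answers, expert_ratings)]
print(strict, partial)
```

Under this assumption, the second answer counts as a miss under the strict norm but earns partial credit under the less strict norm. The partial-credit function is meant for the Likert items only, because a yes/no answer can only match or miss the expert rating.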

(a) Strict expert reference norm.

| Test | Dimensionality | Deviance | Parameters | Δ Deviance (vs. 4-D model) | AIC | BIC |
|---|---|---|---|---|---|---|
| Pre-test | 1-D model | 12,661 | 109 | 162 ** | 12,879 | 13,182 |
| | 2-D model | 12,604 | 111 | 104 ** | 12,826 | 13,134 |
| | 3-D model | 12,597 | 114 | 97 ** | 12,825 | 13,142 |
| | 4-D model | 12,499 | 118 | | 12,735 | 13,063 |
| Post-test | 1-D model | 13,612 | 109 | 259 ** | 13,830 | 14,133 |
| | 2-D model | 13,546 | 111 | 193 ** | 13,768 | 14,076 |
| | 3-D model | 13,533 | 114 | 180 ** | 13,761 | 14,077 |
| | 4-D model | 13,353 | 118 | | 13,589 | 13,917 |

(b) Less strict expert reference norm.

| Test | Dimensionality | Deviance | Parameters | Δ Deviance (vs. 4-D model) | AIC | BIC |
|---|---|---|---|---|---|---|
| Pre-test | 1-D model | 23,021 | 205 | 119 ** | 23,431 | 24,001 |
| | 2-D model | 22,958 | 207 | 55 ** | 23,372 | 23,947 |
| | 3-D model | 22,945 | 210 | 43 ** | 23,365 | 23,949 |
| | 4-D model | 22,902 | 214 | | 23,330 | 23,925 |
| Post-test | 1-D model | 23,377 | 205 | 171 ** | 23,787 | 24,357 |
| | 2-D model | 23,312 | 207 | 105 ** | 23,726 | 24,301 |
| | 3-D model | 23,300 | 210 | 93 ** | 23,720 | 24,303 |
| | 4-D model | 23,207 | 214 | | 23,635 | 24,230 |
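The fit statistics in these tables follow standard definitions, so they can be checked by hand. The following sketch assumes AIC = deviance + 2k and BIC = deviance + k·ln(N), where k is the number of estimated parameters. It uses the pre-test values for the strict norm. For each model it recomputes AIC and backs out the ln(N) implied by the reported BIC. For each lower-dimensional model it also forms the deviance difference to the 4-D model, together with its degrees of freedom (the difference in parameter counts). The helpers `aic` and `implied_log_n` are hypothetical.

```python
"""Sketch: recomputing model-comparison statistics from reported values.

Values: pre-test, strict expert reference norm, as reported in the table.
Assumed definitions: AIC = deviance + 2k, BIC = deviance + k * ln(N).
"""

# (deviance, number of parameters) per model
models = {
    "1-D": (12_661, 109),
    "2-D": (12_604, 111),
    "3-D": (12_597, 114),
    "4-D": (12_499, 118),
}
reported_bic = {"1-D": 13_182, "2-D": 13_134, "3-D": 13_142, "4-D": 13_063}


def aic(deviance: float, k: int) -> float:
    """Akaike information criterion from deviance and parameter count."""
    return deviance + 2 * k


def implied_log_n(deviance: float, k: int, bic: float) -> float:
    """Solve BIC = deviance + k * ln(N) for ln(N)."""
    return (bic - deviance) / k


ref_dev, ref_k = models["4-D"]
for name, (dev, k) in models.items():
    line = f"{name}: AIC = {aic(dev, k):,.0f}"
    line += f", implied ln(N) = {implied_log_n(dev, k, reported_bic[name]):.2f}"
    if name != "4-D":
        # Likelihood-ratio statistic of the nested model against the 4-D model
        line += f", delta deviance = {dev - ref_dev}, df = {ref_k - k}"
    print(line)
```

The recomputed AIC values match the reported ones. The implied ln(N) stays nearly constant across models, as expected if all models were fit to the same sample. The recomputed deviance differences for the 2-D and 3-D models (105 and 98) are one point above the reported 104 and 97. This small discrepancy presumably reflects rounding of the published deviances.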

| Abilities | Strict Expert-Referenced Norm (1 = Hit; 0 = Miss) | Less Strict Expert-Referenced Norm (2 = Hit; 1 = Close; 0 = Miss) |
|---|---|---|
| EAP/WLE reliability | | |
| Perception | 0.36/0.24 | 0.35/0.24 |
| Description | 0.91/0.85 | 0.91/0.89 |
| Explanation | 0.54/0.15 | 0.60/0.27 |
| Prediction | 0.85/0.64 | 0.80/0.67 |
| Variance | | |
| Perception | 0.20 | 0.20 |
| Description | 2.13 | 0.49 |
| Explanation | 0.11 | 0.08 |
| Prediction | 1.88 | 0.24 |
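The reliabilities above are reported as EAP/WLE pairs. The following sketch illustrates what these two indices express by computing them under two commonly used definitions. These definitions are assumptions here, and software such as the R package TAM may implement slightly different variants, for example in the variance denominator. All person estimates and standard errors are hypothetical.

```python
"""Sketch: two common reliability indices for Rasch person estimates.

Assumed definitions (implementations may differ in details):
  EAP reliability = Var(EAP) / (Var(EAP) + mean posterior variance)
  WLE reliability = 1 - mean(SE^2) / Var(WLE)
All inputs below are hypothetical.
"""
from statistics import mean, pvariance


def eap_reliability(eap, posterior_sd):
    """Share of EAP variance relative to EAP plus posterior variance."""
    v = pvariance(eap)
    return v / (v + mean(sd ** 2 for sd in posterior_sd))


def wle_reliability(wle, se):
    """One minus the ratio of mean error variance to observed WLE variance."""
    return 1 - mean(s ** 2 for s in se) / pvariance(wle)


eap = [-1.0, -0.5, 0.0, 0.5, 1.0]
posterior_sd = [0.5] * 5
wle = [-1.4, -0.6, 0.0, 0.6, 1.4]
se = [0.6] * 5
print(round(eap_reliability(eap, posterior_sd), 3),
      round(wle_reliability(wle, se), 3))
```

Both indices relate the spread of the person estimates to their measurement error. An ability with little true variance, or with large standard errors, therefore yields low reliability under either definition.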


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Vogt, F.; Schmiemann, P. Assessing Biology Pre-Service Teachers’ Professional Vision of Teaching Scientific Inquiry. Educ. Sci. 2020, 10, 332. https://doi.org/10.3390/educsci10110332