Assessing Teachers’ Capabilities to Work with Models and Evaluate Results in the Context of a Complex and Authentic STEM Problem

Abstract

1. Introduction

2. Educational Background

2.1. Problem-Solving in STEM Education

2.1.1. Teaching Problem-Solving

2.1.2. Complex Problems

2.1.3. Authenticity in STEM Education

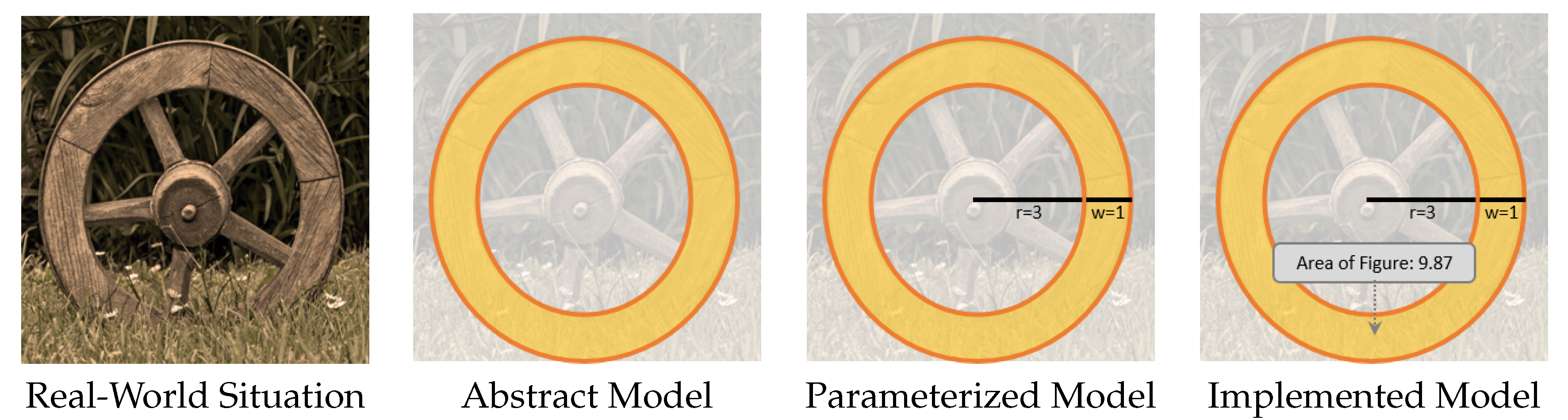

2.2. Models and Working with Models in Problem-Solving

2.2.1. Defining Models and Their Common Properties

2.2.2. Selection of Models

2.2.3. Working with Technological Models

2.2.4. Model and Result Evaluation

2.2.5. The SOLO Taxonomy to Measure Structural Complexity

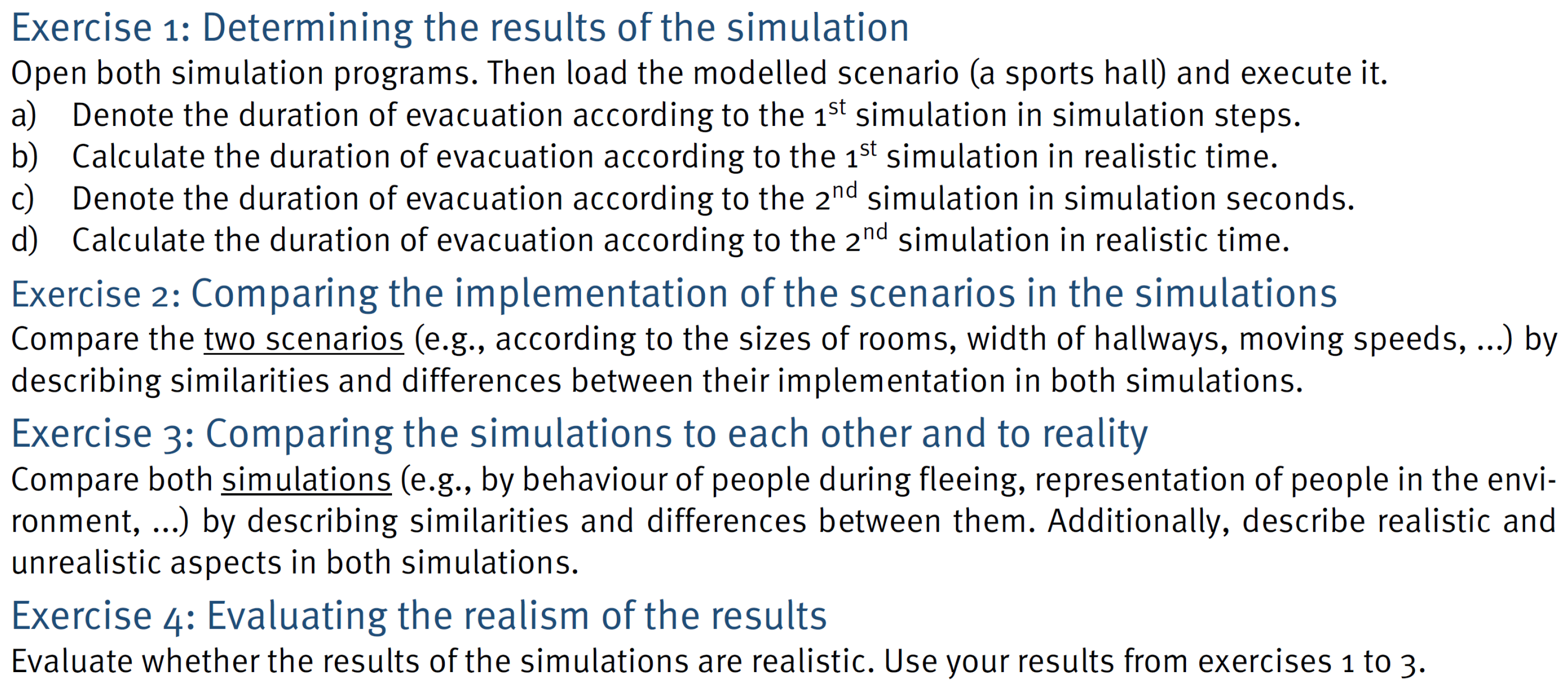

3. Simulating Building Evacuations as the Problem for our Study

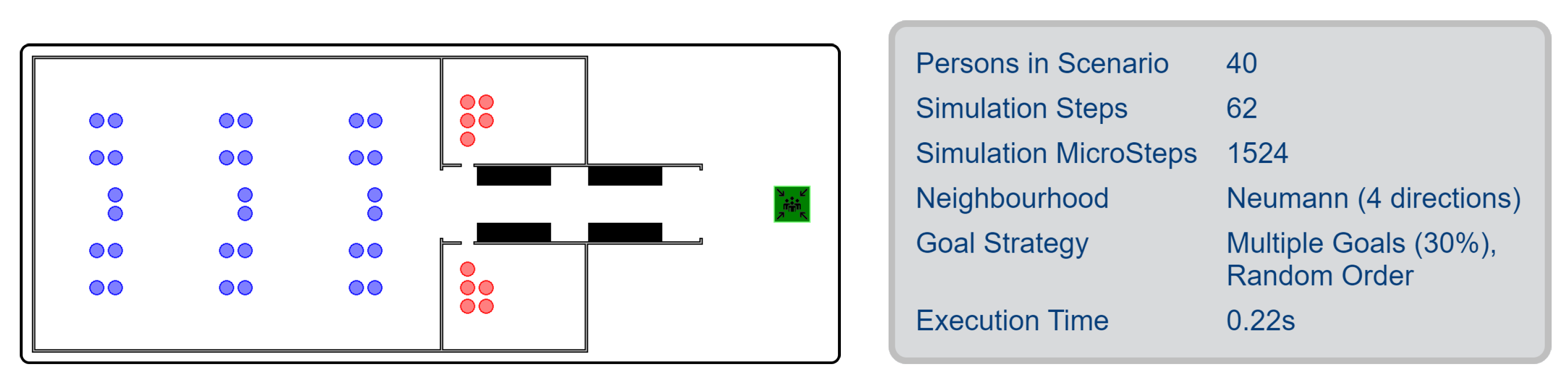

3.1. Simulating Building Evacuations with Grid Automata

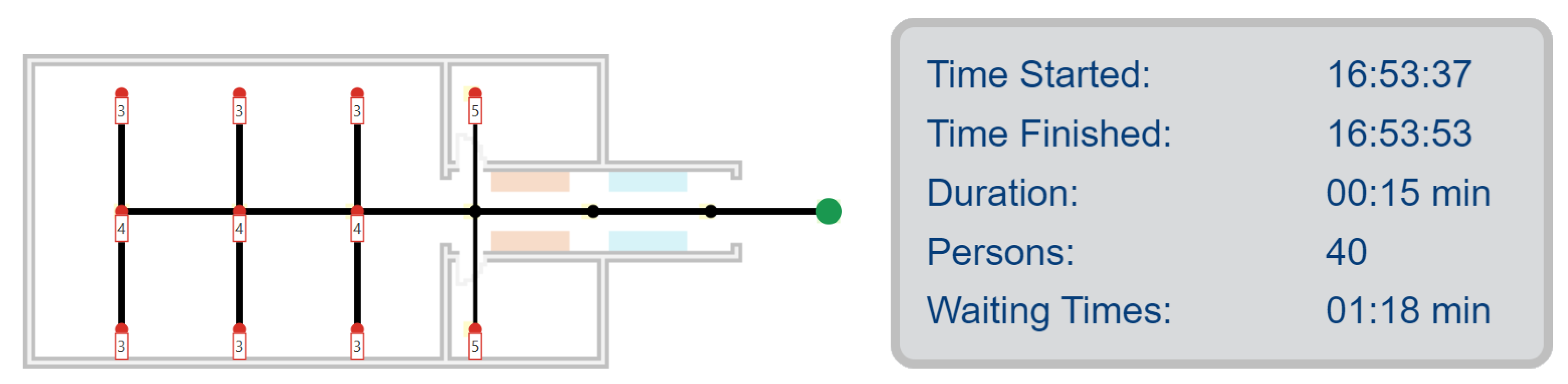

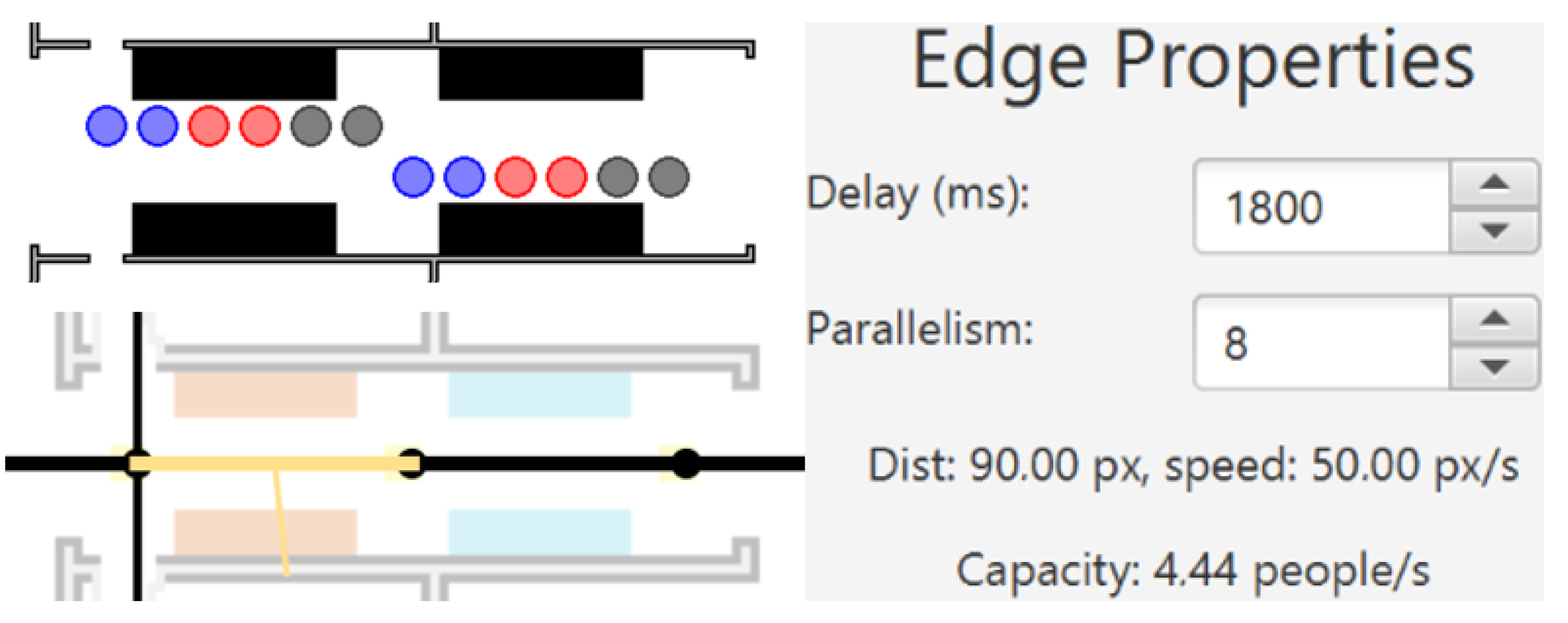
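To make the mechanics of a grid automaton concrete, the following Python sketch simulates a toy evacuation. It is not the simulation environment used in the study, and every rule in it is an assumption chosen for illustration. The floor is a grid of square cells (`#` marks a wall). Agents move at most one cell per step in the four cardinal directions and head for the nearest exit along a breadth-first-search distance field. Agents closer to an exit move first, a cell holds at most one agent, and an agent standing on an exit cell leaves in the next step. The names `distance_field` and `evacuate` are hypothetical.

```python
from collections import deque

# Von Neumann neighbourhood: down, up, right, left.
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def distance_field(grid, exits):
    """Breadth-first search: distance in cells from each walkable cell to the nearest exit.

    grid is a list of equally long strings; '#' marks a wall, any other character is floor.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {cell: 0 for cell in exits}
    queue = deque(exits)
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist


def evacuate(grid, agents, exits):
    """Return the number of simulation steps until all agents have left.

    Every agent must start on a floor cell from which an exit is reachable.
    """
    dist = distance_field(grid, exits)
    exit_cells = set(exits)
    occupied = set(agents)
    steps = 0
    while occupied:
        steps += 1
        # Sequential update: agents nearer to an exit move first, so a cell
        # vacated in this step can be entered by the agent queued behind it.
        for pos in sorted(occupied, key=lambda p: (dist[p], p)):
            occupied.remove(pos)
            if pos in exit_cells:
                continue  # an agent standing on an exit cell leaves the building
            r, c = pos
            target = pos
            for dr, dc in NEIGHBOURS:
                cell = (r + dr, c + dc)
                # Move to a free neighbour that is closer to an exit; otherwise stay.
                if dist.get(cell, float("inf")) < dist[target] and cell not in occupied:
                    target = cell
            occupied.add(target)
    return steps
```

For example, `evacuate(["....."], [(0, 0)], [(0, 4)])` returns 5: four moves to reach the exit cell plus one step to leave. The result is a number of simulation steps, not seconds; turning it into a real-world duration requires further assumptions about cell size and walking speed.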

3.2. Simulating Building Evacuations with Flow Networks
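Flow networks model a building as nodes (rooms, doors, corridors) connected by edges, each with a capacity (persons per time unit) and a transit time. For the special case of a single path from a room to the exit, the quickest evacuation time has a closed form: the throughput of the path is limited by its smallest capacity, and nobody can arrive before the sum of the transit times. The Python sketch below, with the hypothetical function name `path_evacuation_time`, assumes that people may wait at nodes and depart at integer time steps. General networks with several paths need time-expanded networks or quickest-transshipment algorithms instead.

```python
import math


def path_evacuation_time(people, capacities, transit_times):
    """Earliest time at which all people have arrived at the end of a single path.

    capacities[i]: persons that may enter edge i per time unit.
    transit_times[i]: time units needed to traverse edge i.
    Departures happen at integer times 0, 1, 2, ...; the result is the arrival
    time of the last person.
    """
    if people == 0:
        return 0
    bottleneck = min(capacities)    # throughput of the whole path
    total_transit = sum(transit_times)
    # ceil(people / bottleneck) departure slots are needed; the last one starts
    # one time unit before that count because the first slot is at time 0.
    last_departure = math.ceil(people / bottleneck) - 1
    return last_departure + total_transit
```

With 10 people, capacities `[3, 5]` and transit times `[2, 3]`, the result is 8: four departure slots (at times 0 to 3) plus a total transit time of 5.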

3.3. Description of the Problem Used for Our Study

3.4. Significance of the Problem

3.5. The Trap in the Problem

4. Formal Setup

4.1. Prospective Teacher Group

4.2. Teacher Group

5. Research Questions

Research Question 1:

To what extent were the participants able to correctly estimate the evacuation duration with the two simulation environments?

Research Question 2:

To what extent were the participants able to argue for or against the realism of their estimated evacuation durations?

Research Question 3:

To what extent do the teachers believe they are sufficiently educated to work on and teach using such problems?

Research Question 4:

To what extent was the self-assessment of the participants aligned with the capabilities identified by our assessment?

6. Methods

6.1. Method for Research Question 1

For each indicator, a solution was classified as either fulfilling or not fulfilling it. In special cases, a solution could be classified as partly fulfilling an indicator. For this evaluation, the whole solution was considered: if a participant denoted two different results in exercise 1b (steps and micro-steps) and stated in exercise 4 that the steps were the correct result to evaluate for realism, then the indicator “exactly one result per simulation is denoted” was fulfilled.

6.2. Method for Research Question 2

6.3. Method for Research Question 3

- I feel sufficiently technically educated to solve such problems (as a learner);

- I feel sufficiently technically educated to teach with such problems;

- I feel sufficiently didactically educated to teach with such problems.

6.4. Method for Research Question 4

7. Results

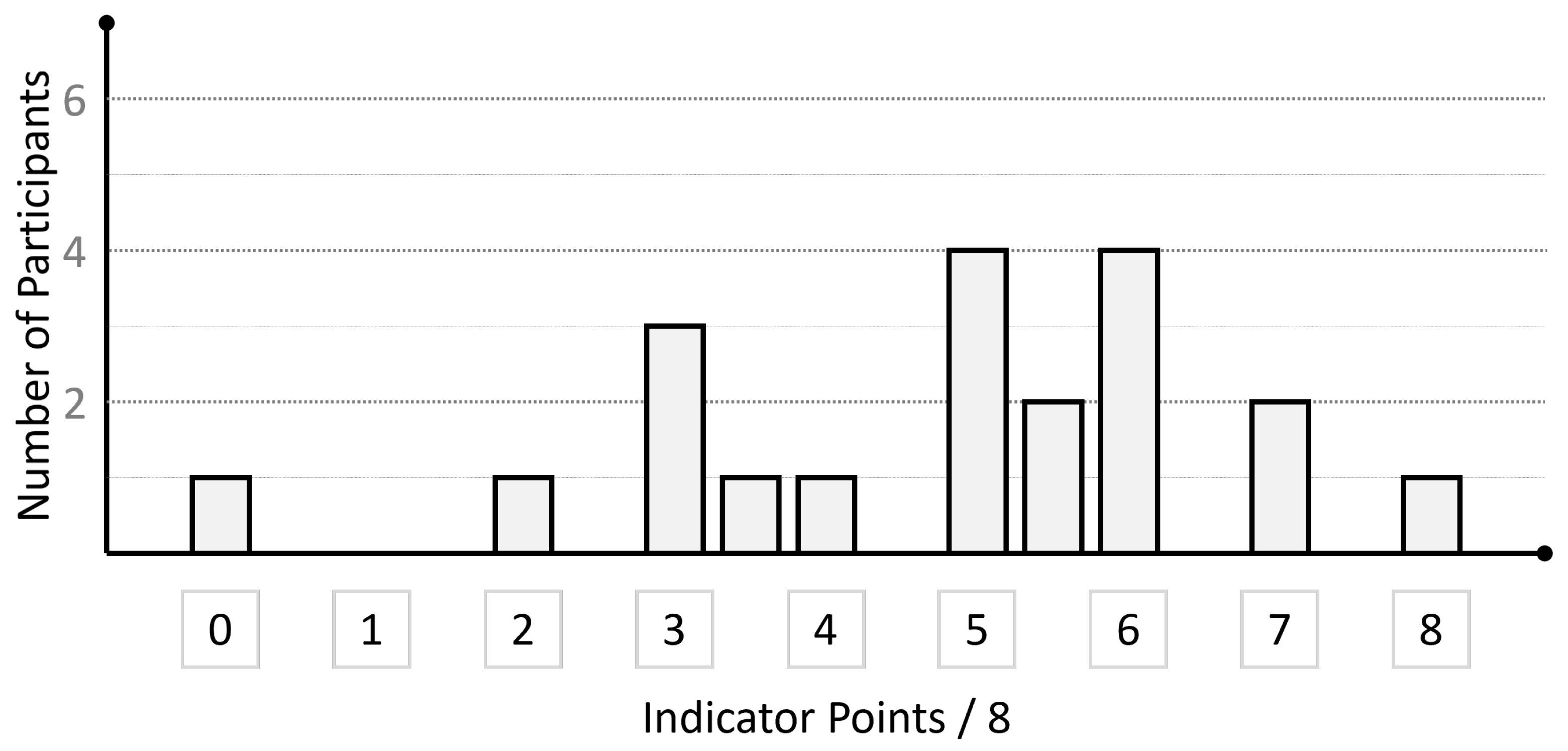

7.1. Research Question 1: Production of Estimates

Notably, this partially correct classification was given rather leniently.

Result Summary:

Only four pairs of estimates were mathematically correct and based on consistent assumptions. Seven participants fulfilled half or fewer of the criteria. Six participants did not recognize the required format of the answer.
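The second finding can be traced back to the Point Score column of the table in Appendix B. One reading of that column, assumed here rather than stated in this section, is that each of the eight indicators (E and E1 to E7) from Appendix A.2 contributes 1 point if fulfilled, 0.5 if partially fulfilled, and 0 otherwise, giving a maximum of 8. The Python sketch below encodes that assumption (`RATING_POINTS`, `point_score` and `at_most_half` are hypothetical names) and counts the participants at or below half of the maximum, using the point scores listed in the appendix table.

```python
# Assumed scoring rule: 1 point per fulfilled indicator, 0.5 per partially fulfilled one.
RATING_POINTS = {"fulfilled": 1.0, "partial": 0.5, "not fulfilled": 0.0}
INDICATORS = ("E", "E1", "E2", "E3", "E4", "E5", "E6", "E7")


def point_score(ratings):
    """Sum the points of one participant's ratings; unrated indicators count as 0."""
    return sum(RATING_POINTS[ratings.get(code, "not fulfilled")] for code in INDICATORS)


def at_most_half(scores, max_points=len(INDICATORS)):
    """IDs whose point score is half of the maximum or lower."""
    return [pid for pid, score in scores.items() if score <= max_points / 2]


# Point scores as listed in the table of Appendix B.
POINT_SCORES = {
    "S1": 6, "S2": 5, "S3": 0, "S4": 8, "S5": 5, "S6": 3,
    "P1": 4, "P2": 6, "P3": 5.5, "P4": 5, "P5": 5.5, "P6": 5, "P7": 3.5,
    "P8": 6, "P9": 3, "P10": 6, "P11": 7, "P12": 7, "P13": 3, "P14": 2,
}
```

Applied to the listed scores, `at_most_half(POINT_SCORES)` returns the seven IDs S3, S6, P1, P7, P9, P13 and P14.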

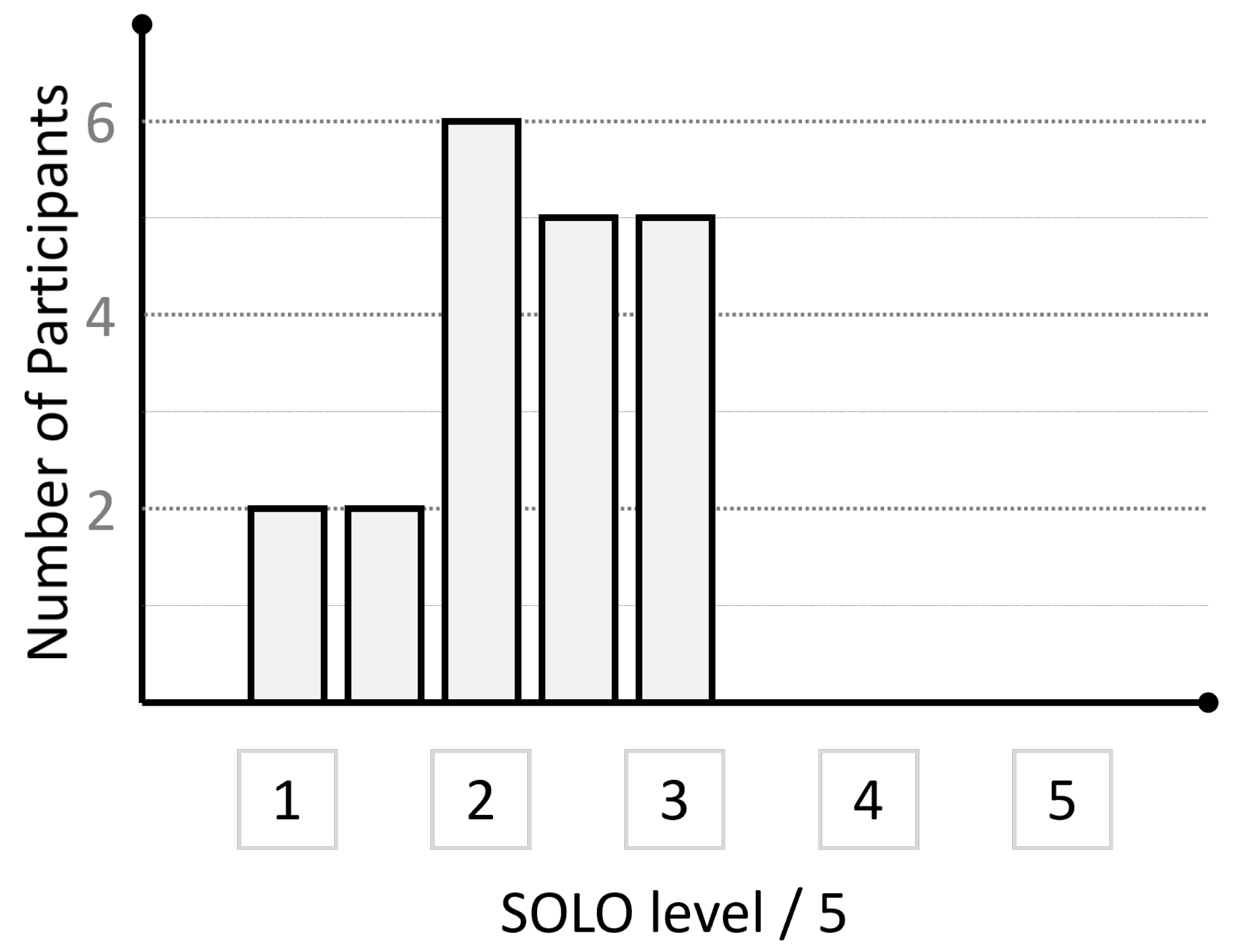

7.2. Research Question 2: Evaluation of Realism

Result Summary:

A substantial minority of participants reached no final conclusion on whether their estimates were realistic. Five evaluations reached SOLO level 3; none was scored higher.

7.3. Research Question 3: Participants’ Self-Assessment

Result Summary:

Most participants (15) felt sufficiently technically educated to solve such exercises. Six of them also felt sufficiently educated, both technically and didactically, to teach using such exercises.

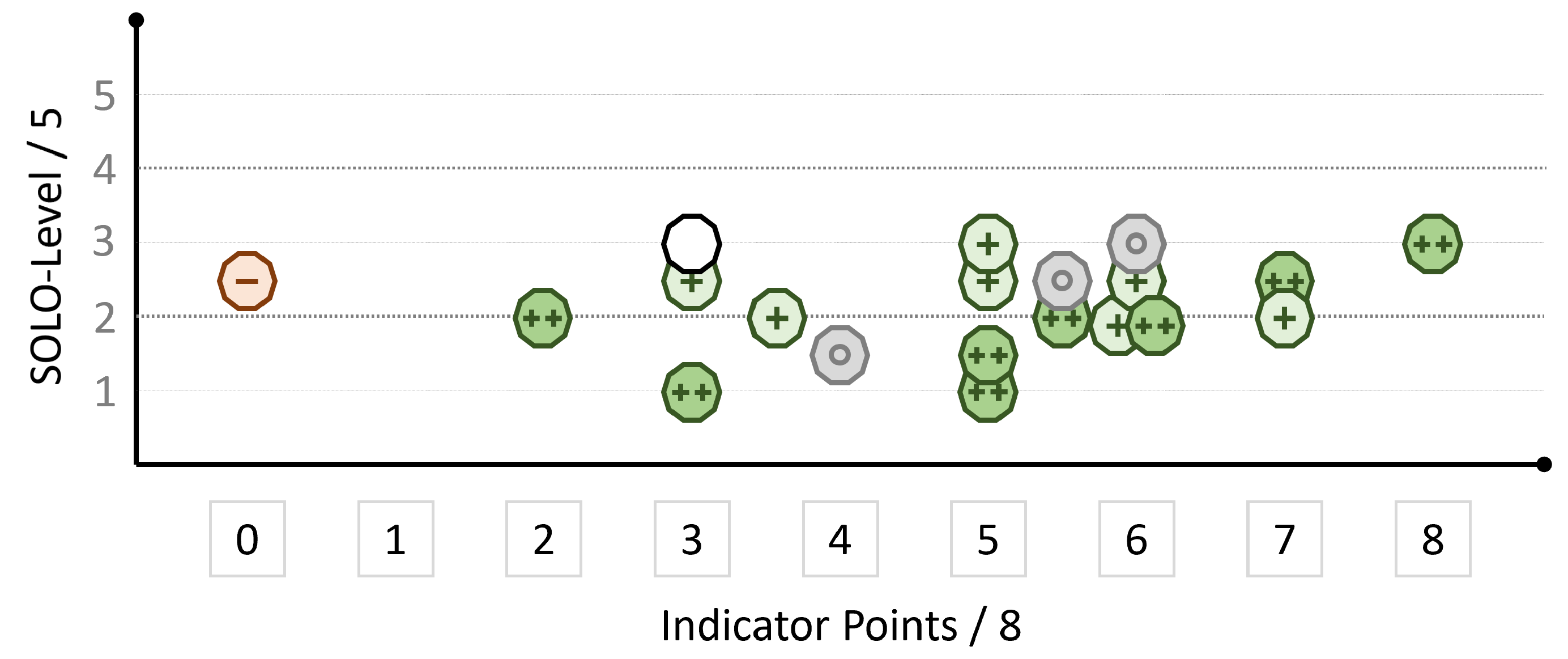

7.4. Research Question 4: Dependency between Self-Assessment and Our Assessment

Result Summary:

The quality of the simulation estimates and the quality of the evaluation were at least weakly correlated. The quality of the simulation estimates was likely uncorrelated with the self-assessment. The quality of the evaluation and the self-assessment were negatively correlated, with at least medium strength.
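This section does not state which correlation coefficient underlies these statements. For ordinal data such as point scores, SOLO levels and Likert-type self-assessments, Spearman's rank correlation is one common choice, and Cohen's conventional benchmarks (0.1 small, 0.3 medium, 0.5 large) are one way to express "weak" and "medium" strength. The following Python sketch implements both under those assumptions; the function names are hypothetical, and the study may have used a different (for example, robust) coefficient.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


def cohen_label(r):
    """Cohen's conventional benchmarks for the size of a correlation coefficient."""
    size = abs(r)
    if size >= 0.5:
        return "large"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "small"
    return "negligible"
```

Both input lists must have the same length and must not be constant: a constant list has zero rank variance, so the coefficient is undefined and this sketch raises a `ZeroDivisionError`.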

8. Interpretation

8.1. Lack of Competence in the Assessed Activities

8.2. Gap between Self-Assessment and External Assessment

8.3. Independence of Sub-Skills of Problem-Solving

9. Limitations

9.1. Sample Characteristics and Size

9.2. Task Validity and Specificity

9.3. Influence from the Setup

9.4. Focus on the Written Solutions

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Details on the Evaluative Qualitative Analysis of the Estimates

Appendix A.1. Sample Solution

Appendix A.2. Indicators

- E (One Estimate): Did the participant denote exactly one real-world estimate (possibly a range or distribution) per simulation environment for evaluation? Regardless of whether the problem was solved correctly, evaluating the realism of the estimates requires exactly one result per simulation environment as the object of analysis. Participants not fulfilling this criterion were therefore unable to perform any meaningful evaluation.

- E1 (Denote Grid Value): Did the participant denote (at least) the number of simulation steps as a key result of the grid automaton?

- E2 (Transform Grid): Did the participant correctly transform the result of the grid automaton into a real-world estimate of the evacuation duration?

- E3 (Denote Flow Value): Did the participant denote (at least) the simulation duration as a key result of the flow network?

- E4 (Transform Flow): Did the participant correctly transform the result of the flow network into a real-world estimate of the evacuation duration?

- E5 (Consistent Speed): Did the participant assume the same agent speed for both real-world estimates?

- E6 (Size Difference): Did the participant note that the implemented sports halls did not have the same size?

- E7 (Configuration Impact): Did the participant indicate that the grid automaton results vary with different configurations?

Participants who did not fulfill one of these criteria were unable to perform a fully correct evaluation in exercise 4.
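Indicators E2, E4 and E5 concern turning simulation results into real-world durations. A minimal Python sketch of such a transformation follows, assuming (hypothetically) that one simulation time unit corresponds to the time a person needs to walk a fixed distance, such as one cell width in the grid automaton. The distance covered is the simulation time multiplied by that distance, and dividing by the walking speed gives seconds. Passing one shared speed to both conversions makes indicator E5 hold by construction. The function names and all parameter values are illustrative, not taken from the study.

```python
def to_seconds(simulation_time, metres_per_time_unit, speed_mps):
    """Convert a simulation duration into real seconds.

    Assumption: one simulation time unit is the time a person needs to walk
    metres_per_time_unit metres (one cell width in the grid automaton).
    """
    walked_metres = simulation_time * metres_per_time_unit
    return walked_metres / speed_mps


def real_world_estimates(grid_steps, cell_size_m, flow_duration, flow_unit_m, speed_mps):
    """Both estimates from one shared walking speed, so E5 holds by construction."""
    grid_seconds = to_seconds(grid_steps, cell_size_m, speed_mps)
    flow_seconds = to_seconds(flow_duration, flow_unit_m, speed_mps)
    return grid_seconds, flow_seconds
```

For instance, with a hypothetical cell width of 0.4 m, a flow-network unit of 1 m and a walking speed of 1.25 m/s, `real_world_estimates(100, 0.4, 30, 1.0, 1.25)` gives 32 s for 100 grid steps and 24 s for a flow duration of 30 units. Using different speeds for the two environments would mix model differences with parameter differences when the estimates are compared.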

Appendix B. Details about the Quality of the Solutions

Appendix B.1. Denoting Exactly One Result

Appendix B.2. Denoting and Transforming the Results

Each indicator was rated as correct, incorrect, or partially correct. Partially correct ratings are justified in the text.

| Participant | E: One Estimate | E1: Denote Grid Value | E2: Transform Grid | E3: Denote Flow Value | E4: Transform Flow | E5: Consistent Speed | E6: Size Difference | E7: Config Impact | Mistakes | Point Score |
|---|---|---|---|---|---|---|---|---|---|---|
| S1 |  |  |  |  |  |  |  |  | R3 | 6 |
| S2 |  |  |  |  |  |  |  |  | WT | 5 |
| S3 |  |  |  |  |  |  |  |  |  | 0 |
| S4 |  |  |  |  |  |  |  |  |  | 8 |
| S5 |  |  |  |  |  |  |  |  | WT | 5 |
| S6 |  |  |  |  |  |  |  |  | R3, WT | 3 |
| P1 |  |  |  |  |  |  |  |  | WT | 4 |
| P2 |  |  |  |  |  |  |  |  |  | 6 |
| P3 |  |  |  |  |  |  |  |  | R3 | 5.5 |
| P4 |  |  |  |  |  |  |  |  | R3, WT | 5 |
| P5 |  |  |  |  |  |  |  |  |  | 5.5 |
| P6 |  |  |  |  |  |  |  |  | LW | 5 |
| P7 |  |  |  |  |  |  |  |  | LW | 3.5 |
| P8 |  |  |  |  |  |  |  |  | LW | 6 |
| P9 |  |  |  |  |  |  |  |  | MS, WT | 3 |
| P10 |  |  |  |  |  |  |  |  |  | 6 |
| P11 |  |  |  |  |  |  |  |  |  | 7 |
| P12 |  |  |  |  |  |  |  |  |  | 7 |
| P13 |  |  |  |  |  |  |  |  |  | 3 |
| P14 |  |  |  |  |  |  |  |  |  | 2 |
| Fulfilled (of 20) | 14 | 18 | 15 | 18 | 7 | 10 | 2 | 7 |  |  |

A solution was rated partially correct where a slightly different model (without congestion) was used but the solution of this model was calculated correctly.

Appendix B.3. Consistent Speed

Appendix B.4. Size Difference and Configuration Impact

| ID | Grid Realistic? | Flow Realistic? | SOLO Level | Arguments Used |
|---|---|---|---|---|
| S1 |  |  | 2+ | assertion about magnitude of results; assertion about assumptions |
| S2 | ? | ? | 1 | no clear argumentation given (denial) |
| S3 |  |  | 2+ | listed assumptions that must be fulfilled; argued why assumptions are fulfilled; visual representation of the simulations; comprehensibility by students |
| S4 |  |  | 3 | listed assumptions that must be fulfilled; cross-validation; usability of simulation |
| S5 | ? | ? | 2+ | assertion about magnitude of results; assertion about assumptions |
| S6 |  |  | 3 | listed assumptions that must be fulfilled; assertion about assumptions; assertion about magnitude of results |
| P1 |  |  | 1+ | listed assumptions that are not exactly fulfilled |
| P2 | ? | ? | 2 | cross-validation |
| P3 |  |  | 3 | cross-validation; listed assumptions that must be fulfilled |
| P4 |  |  | 3 | assertion about magnitude of results; listed assumptions that must be fulfilled; details of own solution |
| P5 |  |  | 2 | listed assumptions that must be fulfilled |
| P6 |  |  | 1+ | listed assumptions that are not exactly fulfilled |
| P7 |  |  | 2 | listed assumptions that must be fulfilled |
| P8 | ? | ? | 2 | cross-validation |
| P9 | ? | ? | 2+ | assertion about magnitude of results; usability of software; listed assumptions that are not exactly fulfilled |
| P10 |  |  | 3 | cross-validation; comparison with real-world event |
| P11 | ? | ? | 2+ | listed assumptions that must be fulfilled; analyzed properties of the model; details of own solution |
| P12 | ? | ? | 2 | listed assumptions that must be fulfilled |
| P13 | ? | ? | 1 | no clear argumentation given (tautologizing) |
| P14 |  |  | 2 | listed assumptions that must be fulfilled |

References

- Forman, E.A. The practice turn in learning theory and science education. In Constructivist Education in an Age of Accountability; Springer: Cham, Switzerland, 2018; pp. 97–111. [Google Scholar] [CrossRef]

- Engle, R.A.; Conant, F.R. Guiding principles for fostering productive disciplinary engagement: Explaining an emergent argument in a community of learners classroom. Cogn. Instr. 2002, 20, 399–483. [Google Scholar] [CrossRef]

- Kokotsaki, D.; Menzies, V.; Wiggins, A. Project-based learning: A review of the literature. Improv. Sch. 2016, 19, 267–277. [Google Scholar] [CrossRef]

- Krajcik, J. Three-dimensional instruction. Sci. Teach. 2015, 82, 50. [Google Scholar]

- Hursen, C.; Fasli, F.G. Investigating the Efficiency of Scenario Based Learning and Reflective Learning Approaches in Teacher Education. Eur. J. Contemp. Educ. 2017, 6, 264–279. [Google Scholar] [CrossRef]

- Misfeldt, M. Scenario based education as a framework for understanding students engagement and learning in a project management simulation game. Electron. J. E-Learn. 2015, 13, 181–191. [Google Scholar]

- Lave, J.; Wenger, E. Situated Learning: Legitimate Peripheral Participation; Cambridge University Press: Cambridge, UK, 1991. [Google Scholar] [CrossRef]

- Holbrook, J.; Rannikmae, M. The Nature of Science Education for Enhancing Scientific Literacy. Int. J. Sci. Educ. 2007, 29, 1347–1362. [Google Scholar] [CrossRef]

- Herrington, J.; Oliver, R. An instructional design framework for authentic learning environments. Educ. Technol. Res. Dev. 2000, 48, 23–48. [Google Scholar] [CrossRef]

- McKenzie, A.D.; Morgan, C.K.; Cochrane, K.W.; Watson, G.K.; Roberts, D.W. Authentic learning: What is it, and what are the ideal curriculum conditions to cultivate it in. In Quality Conversations, Proceedings of the 25th HERDSA Annual Conference, Perth, WA, Australia, 7–10 July 2002; Higher Education Research and Development Society of Australasia, Inc.: Milperra, Australia, 2002; pp. 426–433. Available online: https://citeseerx.ist.psu.edu/document?doi=f0ff25e610b51526b22860eb85192a603321aa30 (accessed on 7 January 2024).

- Kaiser, G.; Bracke, M.; Göttlich, S.; Kaland, C. Authentic Complex Modelling Problems in Mathematics Education. In Educational Interfaces between Mathematics and Industry: Report on an ICMI-ICIAM-Study; Springer International Publishing: Cham, Switzerland, 2013; pp. 287–297. [Google Scholar] [CrossRef]

- Merritt, J.; Lee, M.Y.; Rillero, P.; Kinach, B.M. Problem-based learning in K–8 mathematics and science education: A literature review. Interdiscip. J. Probl.-Based Learn. 2017, 11, 3. [Google Scholar] [CrossRef]

- Priemer, B.; Eilerts, K.; Filler, A.; Pinkwart, N.; Rösken-Winter, B.; Tiemann, R.; Belzen, A.U.Z. A framework to foster problem-solving in STEM and computing education. Res. Sci. Technol. Educ. 2020, 38, 105–130. [Google Scholar] [CrossRef]

- Moorthy, K.; Vincent, C.; Darzi, A. Simulation based training. BMJ 2005, 330, 493. [Google Scholar] [CrossRef]

- Gegenfurtner, A.; Quesada-Pallarès, C.; Knogler, M. Digital simulation-based training: A meta-analysis. Br. J. Educ. Technol. 2014, 45, 1097–1114. [Google Scholar] [CrossRef]

- Buchholtz, N.; Mesrogli, S. A whole week of modelling–examples and experiences of modelling for students in mathematics education. In Teaching Mathematical Modelling: Connecting to Research and Practice; Springer: Dordrecht, The Netherlands, 2013; pp. 307–316. [Google Scholar] [CrossRef]

- Greefrath, G.; Wess, R. Mathematical Modeling in Teacher Education—Developing Professional Competence of Pre-Service Teachers in a Teaching–Learning Lab. Proc. Singap. Natl. Acad. Sci. 2022, 16, 25–39. [Google Scholar] [CrossRef]

- Siller, H.S.; Greefrath, G.; Wess, R.; Klock, H. Pre-service Teachers’ Self-Efficacy for Teaching Mathematical Modelling. In Advancing and Consolidating Mathematical Modelling: Research from ICME-14; Springer: Cham, Switzerland, 2023; pp. 259–274. [Google Scholar] [CrossRef]

- Thompson, A.G. Teaching and Learning Mathematical Problem Solving. In Teaching and Learning Mathematical Problem Solving; Routledge: London, UK, 1985. [Google Scholar] [CrossRef]

- Liljedahl, P.; Santos-Trigo, M.; Malaspina, U.; Bruder, R. Problem Solving in Mathematics Education; Springer: Cham, Switzerland, 2016. [Google Scholar] [CrossRef]

- Pólya, G.; Conway, J.H. How to Solve It: A New Aspect of Mathematical Method; Princeton University Press: Princeton, NJ, USA, 1957. [Google Scholar]

- Hobbs, L.; Clark, J.C.; Plant, B. Successful students–STEM program: Teacher learning through a multifaceted vision for STEM education. In STEM Education in the Junior Secondary: The State of Play; Springer: Singapore, 2018; pp. 133–168. [Google Scholar] [CrossRef]

- Just, J.; Siller, H.S. The Role of Mathematics in STEM Secondary Classrooms: A Systematic Literature Review. Educ. Sci. 2022, 12, 629. [Google Scholar] [CrossRef]

- Moore, T.J.; Smith, K.A. Advancing the state of the art of STEM integration. J. STEM Educ. Innov. Res. 2014, 15, 5. Available online: https://karlsmithmn.org/wp-content/uploads/2017/08/Moore-Smith-JSTEMEd-GuestEditorialF.pdf (accessed on 7 January 2024).

- Chapman, O. Mathematics teachers’ knowledge for teaching problem solving. LUMAT Int. J. Math, Sci. Technol. Educ. 2015, 3, 19–36. [Google Scholar] [CrossRef]

- Chapman, O. Constructing Pedagogical Knowledge of Problem Solving: Preservice Mathematics Teachers. Int. Group Psychol. Math. Educ. 2005, 2, 225–232. [Google Scholar]

- Podkhodova, N.; Snegurova, V.; Stefanova, N.; Triapitsyna, A.; Pisareva, S. Assessment of Mathematics Teachers’ Professional Competence. J. Math. Educ. 2020, 11, 477–500. [Google Scholar] [CrossRef]

- Ramos-Rodríguez, E.; Fernández-Ahumada, E.; Morales-Soto, A. Effective Teacher Professional Development Programs. A Case Study Focusing on the Development of Mathematical Modeling Skills. Educ. Sci. 2022, 12, 2. [Google Scholar] [CrossRef]

- Kinay, I.; Bagceci, B. The Investigation of the Effects of Authentic Assessment Approach on Prospective Teachers’ Problem-Solving Skills. Int. Educ. Stud. 2016, 9, 51–59. [Google Scholar] [CrossRef]

- Koellner, K.; Jacobs, J.; Borko, H.; Schneider, C.; Pittman, M.E.; Eiteljorg, E.; Bunning, K.; Frykholm, J. The problem-solving cycle: A model to support the development of teachers’ professional knowledge. Math. Think. Learn. 2007, 9, 273–303. [Google Scholar] [CrossRef]

- Jasper, B.; Taube, S. Action research of elementary teachers’ problem-solving skills before and after focused professional development. Teach. Educ. Pract. 2005, 17, 299–310. [Google Scholar]

- Blömeke, S.; Delaney, S. Assessment of teacher knowledge across countries: A review of the state of research. ZDM 2012, 44, 223–247. [Google Scholar] [CrossRef]

- Doerr, H.M. What knowledge do teachers need for teaching mathematics through applications and modelling? In Modelling and Applications in Mathematics Education; Springer: New York, NY, USA, 2007; pp. 69–78. [Google Scholar] [CrossRef]

- Funke, J. Complex problem solving. Encyclopedia of the Sciences of Learning; Springer: Berlin/Heidelberg, Germany, 2012; pp. 682–685. [Google Scholar]

- Wess, R.; Klock, H.; Siller, H.S.; Greefrath, G. Mathematical Modelling. In Measuring Professional Competence for the Teaching of Mathematical Modelling: A Test Instrument; Springer International Publishing: Cham, Switzerland, 2021; pp. 3–20. [Google Scholar] [CrossRef]

- De Fruyt, F.; Wille, B.; John, O.P. Employability in the 21st Century: Complex (Interactive) Problem Solving and Other Essential Skills. Ind. Organ. Psychol. 2015, 8, 276–281. [Google Scholar] [CrossRef]

- Jang, H. Identifying 21st century STEM competencies using workplace data. J. Sci. Educ. Technol. 2016, 25, 284–301. [Google Scholar] [CrossRef]

- Geisinger, K.F. 21st Century Skills: What Are They and How Do We Assess Them? Appl. Meas. Educ. 2016, 29, 245–249. [Google Scholar] [CrossRef]

- Neubert, J.C.; Mainert, J.; Kretzschmar, A.; Greiff, S. The Assessment of 21st Century Skills in Industrial and Organizational Psychology: Complex and Collaborative Problem Solving. Ind. Organ. Psychol. 2015, 8, 238–268. [Google Scholar] [CrossRef]

- Greiff, S.; Wüstenberg, S.; Molnár, G.; Fischer, A.; Funke, J.; Csapó, B. Complex problem solving in educational contexts—Something beyond g: Concept, assessment, measurement invariance, and construct validity. J. Educ. Psychol. 2013, 105, 364. [Google Scholar] [CrossRef]

- Jacobson, M.J.; Wilensky, U. Complex systems in education: Scientific and educational importance and implications for the learning sciences. J. Learn. Sci. 2006, 15, 11–34. [Google Scholar] [CrossRef]

- Ciolan, L.; Ciolan, L.E. Two perspectives, same reality? How authentic is learning for students and for their teachers. Procedia-Soc. Behav. Sci. 2014, 142, 24–28. [Google Scholar] [CrossRef]

- Lombardi, M.M.; Oblinger, D.G. Authentic learning for the 21st century: An overview. Educ. Learn. Initiat. 2007, 1, 1–12. [Google Scholar]

- Reeves, T.C.; Herrington, J.; Oliver, R. Authentic activities and online learning. In Quality Conversations, Proceedings of the 25th HERDSA Annual Conference, Perth, WA, Australia, 7–10 July 2002; Higher Education Research and Development Society of Australasia, Inc.: Milperra, Australia, 2002; p. 562. Available online: https://ro.ecu.edu.au/cgi/viewcontent.cgi?article=4899&context=ecuworks (accessed on 7 January 2024).

- Roehrig, G.H.; Dare, E.A.; Ellis, J.A.; Ring-Whalen, E. Beyond the basics: A detailed conceptual framework of integrated STEM. Discip. Interdiscip. Sci. Educ. Res. 2021, 3, 1–18. [Google Scholar] [CrossRef]

- Roth, W.M. Authentic School Science: Knowing and Learning in Open-Inquiry Science Laboratories; Springer: Dordrecht, The Netherlands, 2012; Volume 1. [Google Scholar] [CrossRef]

- Anker-Hansen, J.; Andreé, M. In pursuit of authenticity in science education. Nord. Stud. Sci. Educ. 2019, 15, 54–66. [Google Scholar] [CrossRef]

- Vos, P. What is ‘authentic’ in the teaching and learning of mathematical modelling? In Trends in Teaching and Learning of Mathematical Modelling; Springer: Dordrecht, The Netherlands, 2011; pp. 713–722. [Google Scholar] [CrossRef]

- Heymann, H.W. Why Teach Mathematics?: A Focus on General Education; Springer: Dordrecht, The Netherlands, 2003; Volume 33. [Google Scholar] [CrossRef]

- Har, L.B. Authentic Learning. The Active Classroom The Hong Kong Institute of Education. 2013. Available online: https://www.eduhk.hk/aclass/Theories/AuthenticLearning_28June.pdf (accessed on 7 January 2024).

- Bhagat, K.K.; Huang, R. Improving Learners’ Experiences through Authentic Learning in a Technology-Rich Classroom. In Authentic Learning Through Advances in Technologies; Springer: Singapore, 2018; pp. 3–15. [Google Scholar] [CrossRef]

- Chin, K.Y.; Lee, K.F.; Chen, Y.L. Impact on student motivation by using a QR-based U-learning material production system to create authentic learning experiences. IEEE Trans. Learn. Technol. 2015, 8, 367–382. [Google Scholar] [CrossRef]

- Somyürek, S. An effective educational tool: Construction kits for fun and meaningful learning. Int. J. Technol. Des. Educ. 2015, 25, 25–41. [Google Scholar] [CrossRef]

- Chen, G.D.; Nurkhamid; Wang, C.Y.; Yang, S.H.; Lu, W.Y.; Chang, C.K. Digital learning playground: Supporting authentic learning experiences in the classroom. Interact. Learn. Environ. 2013, 21, 172–183. [Google Scholar] [CrossRef]

- Sadik, A. Digital storytelling: A meaningful technology-integrated approach for engaged student learning. Educ. Technol. Res. Dev. 2008, 56, 487–506. [Google Scholar] [CrossRef]

- Pu, Y.H.; Wu, T.T.; Chiu, P.S.; Huang, Y.M. The design and implementation of authentic learning with mobile technology in vocational nursing practice course. Br. J. Educ. Technol. 2016, 47, 494–509. [Google Scholar] [CrossRef]

- Hallström, J.; Schönborn, K.J. Models and modelling for authentic STEM education: Reinforcing the argument. Int. J. STEM Educ. 2019, 6, 1–10. [Google Scholar] [CrossRef]

- Gilbert, J.K. Models and modelling: Routes to more authentic science education. Int. J. Sci. Math. Educ. 2004, 2, 115–130. [Google Scholar] [CrossRef]

- Gilbert, S.W. Model Building and Definition of Science. J. Res. Sci. Teach. 1991, 28, 73–79. [Google Scholar] [CrossRef]

- Blum, W.; Niss, M. Applied mathematical problem solving, modelling, applications, and links to other subjects—State, trends and issues in mathematics instruction. Educ. Stud. Math. 1991, 22, 37–68. [Google Scholar] [CrossRef]

- Tomasi, J. Models and modeling in theoretical chemistry. J. Mol. Struct. THEOCHEM 1988, 179, 273–292. [Google Scholar] [CrossRef]

- Bevana, N.; Kirakowskib, J.; Maissela, J. What is usability. In Proceedings of the 4th International Conference on HCI 1991, Stuttgart, Germany, 1–6 September 1991; pp. 1–6. [Google Scholar]

- Pluta, W.J.; Chinn, C.A.; Duncan, R.G. Learners’ epistemic criteria for good scientific models. J. Res. Sci. Teach. 2011, 48, 486–511. [Google Scholar] [CrossRef]

- Apostel, L. Towards the formal study of models in the non-formal sciences. In The Concept and the Role of the Model in Mathematics and Natural and Social Sciences; Springer: Dordrecht, The Netherlands, 1961; pp. 1–37. [Google Scholar] [CrossRef]

- Brinda, T.; Fothe, M.; Friedrich, S.; Koerber, B.; Puhlmann, H.; Röhner, G.; Schulte, C. Grundsätze und Standards für die Informatik in der Schule-Bildungsstandards Informatik für die Sekundarstufe I. 2008. Available online: https://informatikstandards.de/fileadmin/GI/Projekte/Informatikstandards/Dokumente/bildungsstandards_2008.pdf (accessed on 7 January 2024).

- Greefrath, G.; Siller, H.S.; Weitendorf, J. Modelling considering the influence of technology. In Trends in Teaching and Learning of Mathematical Modelling; Springer: Dordrecht, The Netherlands, 2011; pp. 315–329. [Google Scholar] [CrossRef]

- Geiger, V. Factors affecting teachers’ adoption of innovative practices with technology and mathematical modelling. In Trends in Teaching and Learning of Mathematical Modelling; Springer: Dordrecht, The Netherlands, 2011; pp. 305–314. [Google Scholar] [CrossRef]

- Kaiser, G. Mathematical Modelling and Applications in Education. In Encyclopedia of Mathematics Education; Springer: Dordrecht, The Netherlands, 2014; pp. 396–404. [Google Scholar] [CrossRef]

- Banks, J. Discrete Event System Simulation; Pearson Education: Delhi, India, 2005. [Google Scholar]

- Kaiser, G.; Stender, P. Complex modelling problems in co-operative, self-directed learning environments. In Teaching Mathematical Modelling: Connecting to Research and Practice; Springer: Dordrecht, The Netherlands, 2013; pp. 277–293. [Google Scholar] [CrossRef]

- Ortlieb, C.P. Mathematische Modelle und Naturerkenntnis; Universität Hamburg, Fachbereich Mathematik: Hamburg, Germany, 2001. [Google Scholar] [CrossRef]

- Blum, W.; Leiß, D. Deal with modelling problems. Math. Model. Educ. Eng. Econ.-ICTMA 2007, 12, 222. [Google Scholar]

- Ferri, R.B. 5.5—Modelling Problems from a Cognitive Perspective. In Mathematical Modelling; Haines, C., Galbraith, P., Blum, W., Khan, S., Eds.; Woodhead Publishing: Sawston, UK, 2007; pp. 260–270. [Google Scholar] [CrossRef]

- Doerr, H.M.; Ärlebäck, J.B.; Misfeldt, M. Representations of modelling in mathematics education. In Mathematical Modelling and Applications; Springer: Cham, Switzerland, 2017; pp. 71–81. [Google Scholar] [CrossRef]

- Czocher, J.; Stillman, G.; Brown, J. Verification and Validation: What Do We Mean? In Making Waves, Opening Spaces; Mathematics Education Research Group of Australasia: Adelaide, Australia, 2018; pp. 250–257. Available online: http://files.eric.ed.gov/fulltext/ED592478.pdf (accessed on 7 January 2024).

- Carson, J. Model verification and validation. In Proceedings of the Winter Simulation Conference, San Diego, CA, USA, 8–11 December 2002; Volume 1, pp. 52–58. [Google Scholar] [CrossRef]

- Whitner, R.B.; Balci, O. Guidelines for Selecting and Using Simulation Model Verification Techniques. In Proceedings of the 21st Conference on Winter Simulation, Washington, DC, USA, 4–6 December 1989; pp. 559–568. [Google Scholar] [CrossRef]

- Kleijnen, J.P. Verification and validation of simulation models. Eur. J. Oper. Res. 1995, 82, 145–162. [Google Scholar] [CrossRef]

- Schlesinger, S. Terminology for model credibility. Simulation 1979, 32, 103–104. [Google Scholar] [CrossRef]

- Brown, J.P.; Stillman, G.A. Developing the roots of modelling conceptions: ‘Mathematical modelling is the life of the world’. Int. J. Math. Educ. Sci. Technol. 2017, 48, 353–373. [Google Scholar] [CrossRef]

- Stillman, G. Impact of prior knowledge of task context on approaches to applications tasks. J. Math. Behav. 2000, 19, 333–361. [Google Scholar] [CrossRef]

- Czocher, J.A.; Moss, D.L. Mathematical modeling: Are prior experiences important? Math. Teach. 2017, 110, 654–660. [Google Scholar] [CrossRef]

- Browne, M.W. Cross-validation methods. J. Math. Psychol. 2000, 44, 108–132. [Google Scholar] [CrossRef] [PubMed]

- Sargent, R.G. Simulation model verification and validation. In Proceedings of the 1991 Winter Simulation Conference Proceedings, Phoenix, AZ, USA, 8–11 December 1991. [Google Scholar] [CrossRef]

- Ling, Y.; Mahadevan, S. Quantitative model validation techniques: New insights. Reliab. Eng. Syst. Saf. 2013, 111, 217–231. [Google Scholar] [CrossRef]

- Redish, E. Using Math in Physics: 4. Toy Models. Phys. Teach. 2021, 59, 683–688. [Google Scholar] [CrossRef]

- Biggs, J.B.; Collis, K.F. Evaluating the Quality of Learning: The SOLO Taxonomy (Structure of the Observed Learning Outcome); Academic Press: Cambridge, MA, USA, 2014. [Google Scholar]

- Collis, K.F. A Study of Concrete and Formal Operations in School Mathematics: A Piagetian Viewpoint; Australian Council for Educational Research: Melbourne, Australia, 1975. [Google Scholar] [CrossRef]

- Greubel, A.; Siller, H.S.; Hennecke, M. Teaching Simulation Literacy with Evacuations. In Proceedings of the European Conference on Technology Enhanced Learning, Heidelberg, Germany, 14–18 September 2020; pp. 200–214. [Google Scholar] [CrossRef]

- Greubel, A.; Siller, H.S.; Ruzika, S.; Knippertz, L. Teaching Mathematical Modeling with Computing Technology: Presentation of a Course based on Evacuations. In Proceedings of the 17th Workshop in Primary and Secondary Computing Education, Morschach, Switzerland, 31 October–2 November 2022. [Google Scholar] [CrossRef]

- Li, Y.; Chen, M.; Dou, Z.; Zheng, X.; Cheng, Y.; Mebarki, A. A review of cellular automata models for crowd evacuation. Phys. A Stat. Mech. Appl. 2019, 526, 120752. [Google Scholar] [CrossRef]

- Cova, T.J.; Johnson, J.P. A network flow model for lane-based evacuation routing. Transp. Res. Part A Policy Pract. 2003, 37, 579–604. [Google Scholar] [CrossRef]

- Yamada, T. A network flow approach to a city emergency evacuation planning. Int. J. Syst. Sci. 1996, 27, 931–936. [Google Scholar] [CrossRef]

- Kari, J. Theory of cellular automata: A survey. Theor. Comput. Sci. 2005, 334, 3–33. [Google Scholar] [CrossRef]

- Greubel, A.; Siller, H.S.; Hennecke, M. EvaWeb: A Web App for Simulating the Evacuation of Buildings with a Grid Automaton. In Proceedings of the 16th European Conference on Technology Enhanced Learning, EC-TEL 2021, Bolzano, Italy, 20–24 September 2021. [Google Scholar] [CrossRef]

- Shen, T.S. ESM: A building evacuation simulation model. Build. Environ. 2005, 40, 671–680. [Google Scholar] [CrossRef]

- Tan, L.; Hu, M.; Lin, H. Agent-based simulation of building evacuation: Combining human behavior with predictable spatial accessibility in a fire emergency. Inf. Sci. 2015, 295, 53–66. [Google Scholar] [CrossRef]

- Pelechano, N.; Malkawi, A. Evacuation simulation models: Challenges in modeling high rise building evacuation with cellular automata approaches. Autom. Constr. 2008, 17, 377–385. [Google Scholar] [CrossRef]

- Dimakis, N.; Filippoupolitis, A.; Gelenbe, E. Distributed building evacuation simulator for smart emergency management. Comput. J. 2010, 53, 1384–1400. [Google Scholar] [CrossRef]

- National Research Council. A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas; National Academies Press: Washington, DC, USA, 2012. [CrossRef]

- Monnet, J. Informatics Education at School in Europe; Publications Office of the European Union: Luxembourg, 2022. [Google Scholar] [CrossRef]

- Bocconi, S.; Chioccariello, A.; Dettori, G.; Ferrari, A.; Engelhardt, K. Developing Computational Thinking in Compulsory Education: Joint Research Center (European Commission); Publications Office of the European Union: Luxembourg, 2016. [Google Scholar] [CrossRef]

- Van Someren, M.; Barnard, Y.; Sandberg, J. The Think Aloud Method: A Practical Approach to Modelling Cognitive Processes; Academic Press: London, UK, 1994; Available online: https://pure.uva.nl/ws/files/716505/149552_Think_aloud_method.pdf (accessed on 7 January 2024).

- Alias, M.; Masek, A.; Salleh, H. Self, Peer and Teacher Assessments in Problem Based Learning: Are They in Agreements? Procedia-Soc. Behav. Sci. 2015, 204, 309–317. [Google Scholar] [CrossRef]

- Baars, M.; Vink, S.; Van Gog, T.; De Bruin, A.; Paas, F. Effects of training self-assessment and using assessment standards on retrospective and prospective monitoring of problem solving. Learn. Instr. 2014, 33, 92–107. [Google Scholar] [CrossRef]

- Chen, P. Relationship between Students’ Self-Assessment of Their Capabilities and Their Teachers’ Judgments of Students’ Capabilities in Mathematics Problem-Solving. Psychol. Rep. 2006, 98, 765–778. [Google Scholar] [CrossRef] [PubMed]

- Falchikov, N.; Goldfinch, J. Student Peer Assessment in Higher Education: A Meta-Analysis Comparing Peer and Teacher Marks. Rev. Educ. Res. 2000, 70, 287–322.

- Ross, J.; Bruce, C. Teacher self-assessment: A mechanism for facilitating professional growth. Teach. Teach. Educ. 2007, 23, 146–159.

- Brouwers, A.; Tomic, W. A longitudinal study of teacher burnout and perceived self-efficacy in classroom management. Teach. Teach. Educ. 2000, 16, 239–253.

- Cancro, G. The Interrelationship of Organizational Climate, Teacher Self-Efficacy, and Perceived Teacher Autonomy. Ph.D. Thesis, Fordham University, New York, NY, USA, 1992; p. 146. Available online: https://www.proquest.com/dissertations-theses/interrelationship-organizational-climate-teacher/docview/303980667/se-2 (accessed on 7 January 2024).

- Ross, J. The reliability, validity, and utility of self-assessment. Pract. Assess. Res. Eval. 2019, 11, 10.

- Kuckartz, U. Qualitative text analysis: A systematic approach. In Compendium for Early Career Researchers in Mathematics Education; Springer: Cham, Switzerland, 2019; pp. 181–197.

- Forehand, M. Bloom’s taxonomy: Original and revised. Emerg. Perspect. Learn. Teach. Technol. 2005, 8, 41–44.

- Pernet, C.R.; Wilcox, R.; Rousselet, G.A. Robust Correlation Analyses: False Positive and Power Validation Using a New Open Source Matlab Toolbox. Front. Psychol. 2013, 3, 606.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Academic Press: Cambridge, MA, USA, 2013.

- Furnham, A.; Grover, S. Correlates of Self-Estimated Intelligence. J. Intell. 2020, 8, 6.

- Dunning, D. The Dunning–Kruger Effect: On Being Ignorant of One’s Own Ignorance. In Advances in Experimental Social Psychology; Elsevier: Amsterdam, The Netherlands, 2011; Volume 44, pp. 247–296.

Legend: the icons denote agree, rather agree, neutral, and rather disagree. The participant with the empty icon did not answer the question.

Legend: the icons denote agree, rather agree, neutral, and rather disagree. The participant with the empty icon did not answer the question.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Greubel, A.; Siller, H.-S.; Hennecke, M. Assessing Teachers’ Capabilities to Work with Models and Evaluate Results in the Context of a Complex and Authentic STEM Problem. Educ. Sci. 2024, 14, 104. https://doi.org/10.3390/educsci14010104
