Practical Programming Exams with Automated Assessment Improve Student Engagement and Learning Outcomes

Abstract

1. Introduction

2. Study Aim and Research Questions

2.1. Scalable Feedback Systems

2.2. Scope of the Study

2.3. Research Questions

- RQ1: Does the introduction of a mandatory practical exam improve student learning outcomes?

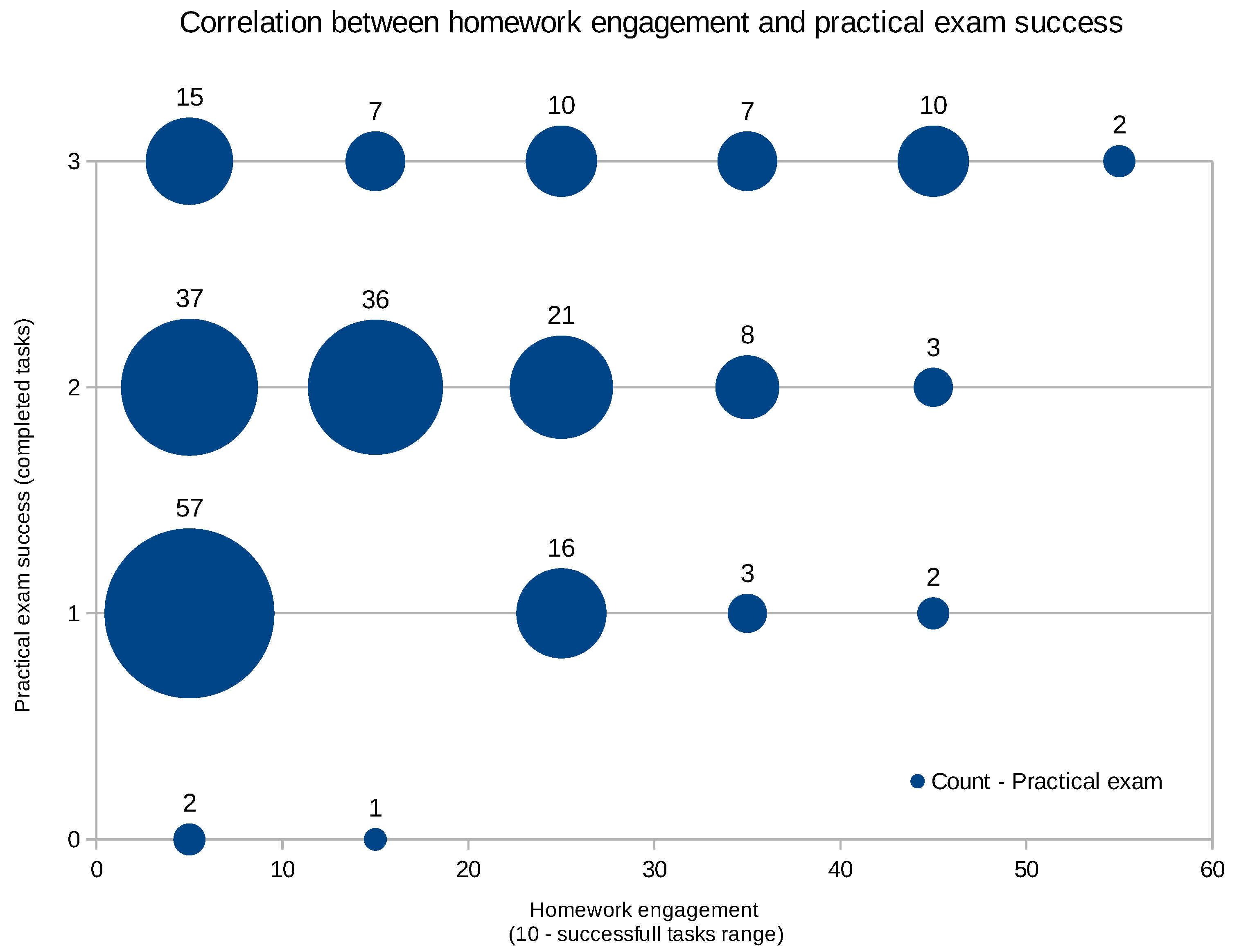

- RQ2: Does engagement in non-mandatory programming assignments correlate with student performance in written and practical exams?

- Evaluate the impact of a mandatory practical exam on student performance by comparing success rates before and after its implementation.

- Analyze the relationship between voluntary programming practice and exam performance by measuring correlations between engagement in non-mandatory assignments and results in both theoretical (written) and applied (practical) assessments.

- Assess the effectiveness of self-directed learning opportunities by investigating whether students who voluntarily engage in additional exercises demonstrate stronger problem-solving abilities.

- H1: The introduction of a mandatory practical exam is expected to be associated with improved learning outcomes.

- H2: There exists a positive correlation between engagement in non-mandatory programming assignments and exam performance, suggesting that students who practice more tend to achieve higher scores.
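The hypotheses do not state which correlation coefficient is used. Assuming Pearson's product-moment coefficient r, the computation behind H2 can be sketched as follows. The function name pearson and the toy values below are hypothetical illustrations, not study data.

```javascript
// Pearson's product-moment correlation r between two equally long numeric arrays.
// Returns NaN when either array is constant (zero variance).
function pearson(x, y) {
  if (x.length !== y.length || x.length < 2) {
    throw new Error("need two arrays of equal length >= 2");
  }
  const n = x.length;
  const meanX = x.reduce((s, v) => s + v, 0) / n;
  const meanY = y.reduce((s, v) => s + v, 0) / n;
  let sxy = 0;
  let sxx = 0;
  let syy = 0;
  for (let i = 0; i < n; i++) {
    const dx = x[i] - meanX;
    const dy = y[i] - meanY;
    sxy += dx * dy; // co-deviation
    sxx += dx * dx; // squared deviation of x
    syy += dy * dy; // squared deviation of y
  }
  return sxy / Math.sqrt(sxx * syy);
}

// Hypothetical toy data (NOT study data): optional tasks solved vs. exam score.
const solvedTasks = [0, 5, 10, 20];
const examScores = [40, 50, 60, 80];
console.log(pearson(solvedTasks, examScores).toFixed(3)); // "1.000": the toy points lie on a line
```

A value of r near +1 would support H2; a value near 0 would indicate no linear relationship. Note that r measures association only and says nothing about causation.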

3. Materials and Methods

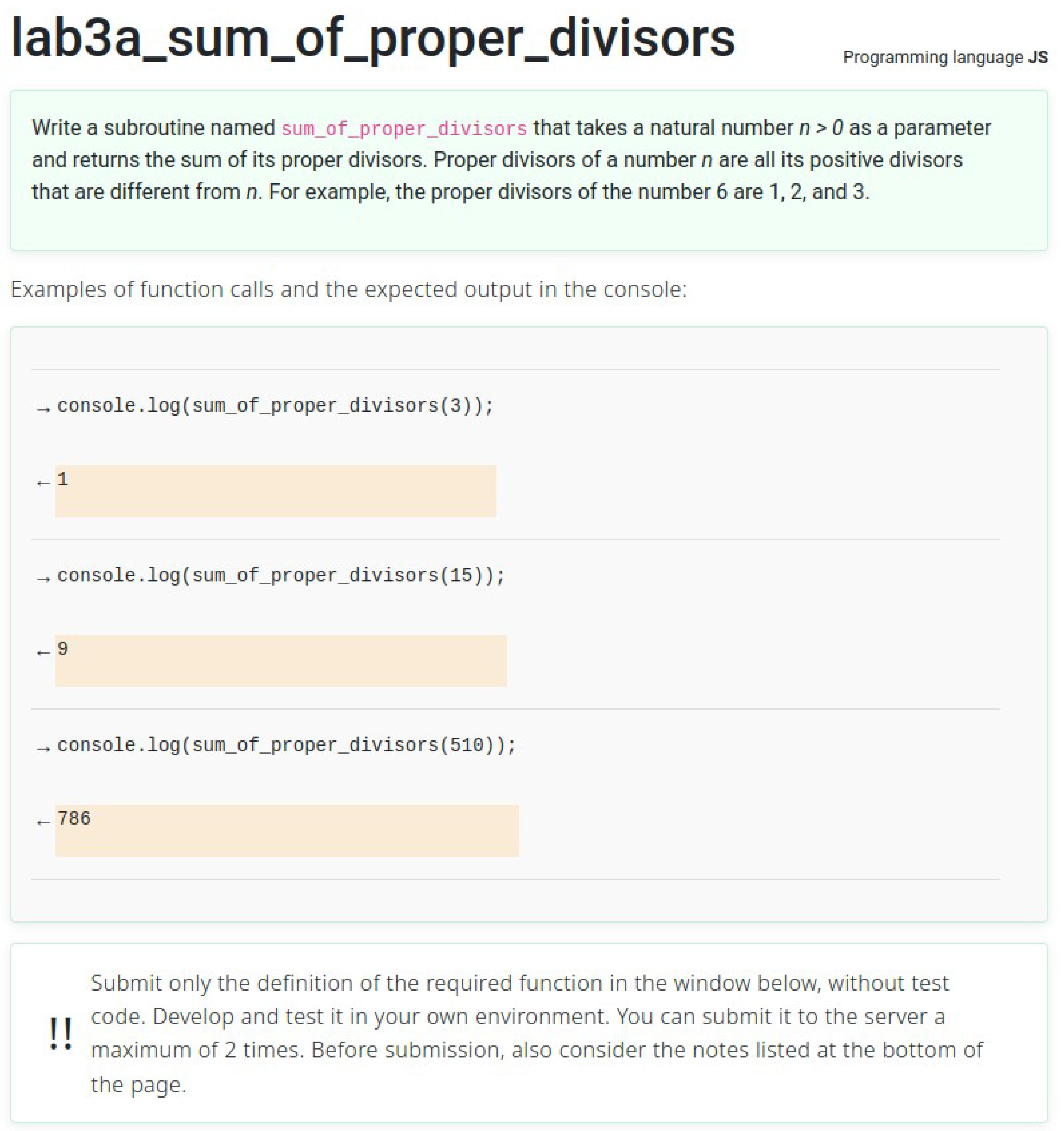

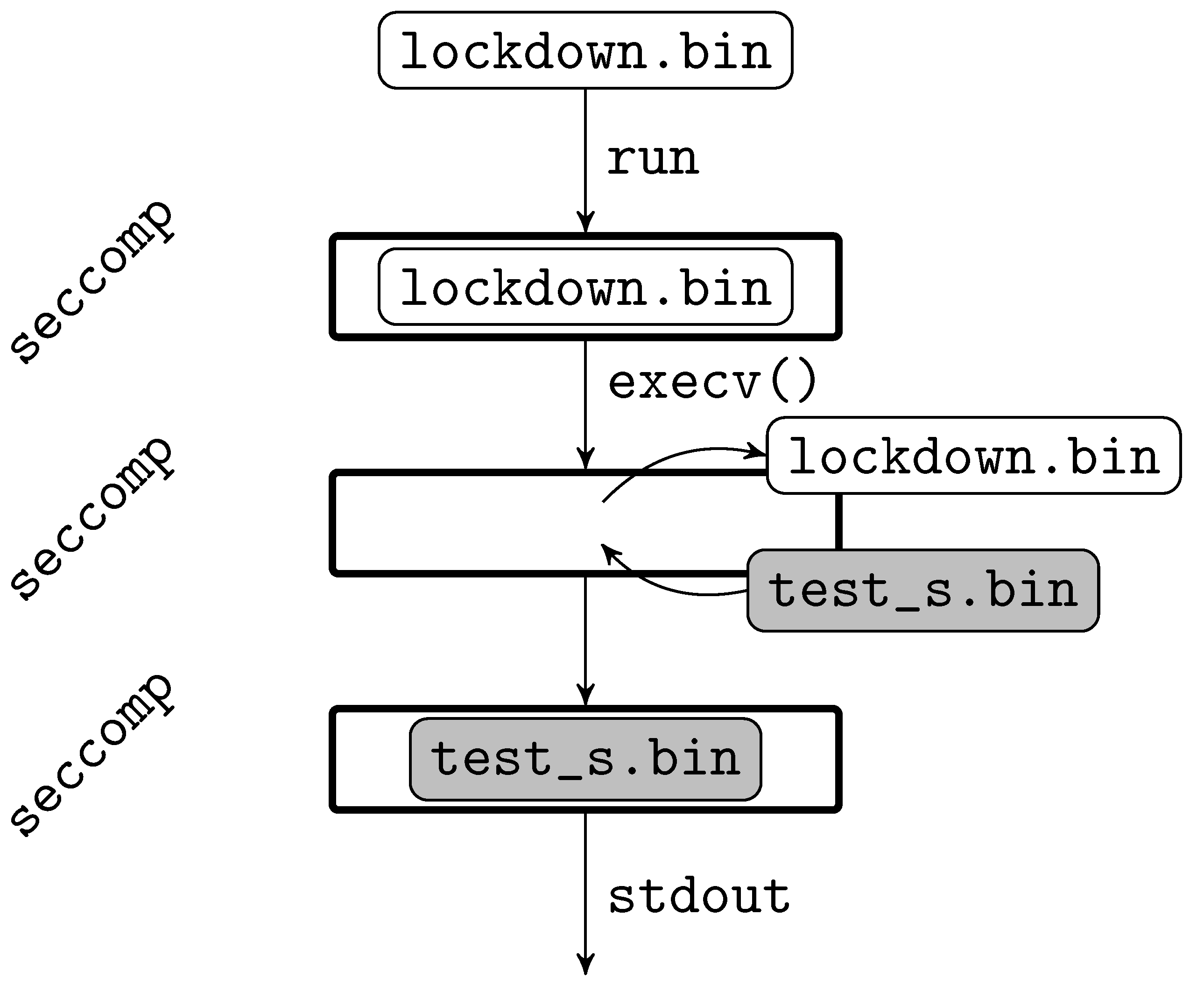

3.1. Programmers’ Interactive Virtual Onboarding (PIVO)

3.2. Curriculum

- two hours of lectures,

- one hour of tutorial sessions, and

- one hour of lab sessions (conducted as two-hour sessions every two weeks).

3.2.1. Exam Enrollment Requirements

Laboratory Component

- Attendance at all five laboratory sessions (5/5), and

- Successful submission of assignments for all five sessions (5/5) via the PIVO system.

- Lab assignments were standardized across all students.

Homework Component

- Completion of 12 multiple-choice (MCQ) theoretical questions, and

- Completion of five introductory programming tasks, submitted to the PIVO system.

- Homework assignments were individualized for each student. To pass the homework component, students were required to obtain a minimum of 12 out of 20 available points.
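The enrollment requirements above amount to a single predicate. The following is a minimal sketch, assuming that the 12-of-20 point threshold is the only homework criterion and that lab attendance and accepted PIVO submissions are counted per session; isEligibleForExam and its field names are hypothetical and not part of PIVO.

```javascript
// Hypothetical encoding of the exam enrollment rules (illustrative names only).
const LAB_SESSIONS = 5;         // all five laboratory sessions are required
const HOMEWORK_MIN_POINTS = 12; // out of 20 available homework points

function isEligibleForExam({ labsAttended, labsSubmitted, homeworkPoints }) {
  // Laboratory component: 5/5 attendance AND 5/5 successful submissions.
  const labOk = labsAttended === LAB_SESSIONS && labsSubmitted === LAB_SESSIONS;
  // Homework component: at least 12 of the 20 available points.
  const homeworkOk = homeworkPoints >= HOMEWORK_MIN_POINTS;
  return labOk && homeworkOk;
}

console.log(isEligibleForExam({ labsAttended: 5, labsSubmitted: 5, homeworkPoints: 12 })); // true
console.log(isEligibleForExam({ labsAttended: 4, labsSubmitted: 5, homeworkPoints: 20 })); // false
```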

3.3. Exercising and Mentoring Environment

- Laboratory sessions: Hands-on practice sessions where students worked on practical exercises under supervision.

- Obligatory homework assignments: Four structured assignments comprising easy to moderately difficult tasks, forming part of the required coursework.

- Non-mandatory homework assignments: A set of over 50 programming tasks ranging from moderate to high difficulty, aimed at reinforcing students’ understanding of advanced concepts.

3.4. Exam Format Semester 2022/2023

- Probing Exam (Optional): Students completed two simple programming assignments in the PIVO system, offline and without any aids. Successfully completing these assignments earned extra points, which raised the final score if the student passed the written exam.

- Written Exam: The main component of the final exam, consisting of 15 multiple-choice questions focused on code analysis (strictly on paper). The exam lasted at most 50 min. Students scoring more than 50% on this component passed; in that case, any points earned in the probing exam were added on top.

3.5. Exam Format Semester 2023/2024

- Part I (Written Exam): The first part consisted of 15 multiple-choice questions focused on code analysis (strictly on paper), with a maximum duration of 50 min. Students scoring more than 50% on this component were eligible to proceed to the practical exam.

- Part II (Practical Exam): The second part consisted of a unique set of three programming assignments for each student, one at each difficulty level (easy, medium, and hard). To pass, students had to score more than 50% on the written exam and, in addition, successfully complete at least one of the practical assignments. Completing more assignments resulted in a higher final score.
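The essential change between the 2022/2023 and 2023/2024 formats is the pass condition. The sketch below models only pass/fail; how probing-exam points and practical assignments were converted into a final score is not specified here, so it is left out. Both function names are hypothetical.

```javascript
// 2022/2023 (hypothetical name): passing depended only on the written exam;
// probing-exam points were merely a bonus for students who passed.
function passed2022(writtenPercent) {
  return writtenPercent > 50;
}

// 2023/2024 (hypothetical name): written exam above 50% AND at least one of the
// three practical assignments solved.
function passed2023(writtenPercent, practicalSolved) {
  return writtenPercent > 50 && practicalSolved >= 1;
}

console.log(passed2022(60));    // true
console.log(passed2023(60, 0)); // false: the same written score no longer suffices
console.log(passed2023(60, 1)); // true
```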

(Easy assignment) Write a subroutine named binaryRepresentation that takes an array b as a parameter, which contains the binary representation of a non-negative integer. The array b consists of a sequence of ones (1) and zeros (0), ending with a sentinel value of −1. The subroutine should return the decimal value of the number represented in the array b. Examples:

```javascript
let bin = [1, 0, 0, -1];
console.log(binaryRepresentation(bin)); // expected: 4

bin = [1, 0, 0, 1, 1, 0, -1];
console.log(binaryRepresentation(bin)); // expected: 38

bin = [0, 0, -1];
console.log(binaryRepresentation(bin)); // expected: 0

bin = [1, 1, 0, 1, -1];
console.log(binaryRepresentation(bin)); // expected: 13
```
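One possible solution, a sketch rather than the accepted answer used in the course, reads the bits most-significant first and applies Horner's scheme (value = 2 × value + bit) until it reaches the −1 sentinel.

```javascript
// Possible solution sketch for the easy assignment (not the official accepted answer).
function binaryRepresentation(b) {
  let value = 0;
  // Stop at the -1 sentinel; the array length is deliberately not used.
  for (let i = 0; b[i] !== -1; i++) {
    value = value * 2 + b[i]; // shift left by one bit, then add the current bit
  }
  return value;
}

console.log(binaryRepresentation([1, 0, 0, 1, 1, 0, -1])); // 38
```

Horner's scheme avoids computing powers of two explicitly and needs only one pass over the array.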

(Intermediate assignment) Write a subroutine named search, which takes two arrays of natural numbers, haystack and needle, as parameters. Both arrays are terminated with a sentinel value of 0. The subroutine should search the haystack array for a sequence of elements that match the needle array and return the index of the first element of the found sequence. If there are multiple such sequences, the subroutine should return the smallest index among them. If no such sequence exists in the haystack array, the subroutine should return the value −1. Examples:

```javascript
// sn is passed as haystack, ig as needle (both terminated by the sentinel 0)
let sn = [2, 3, 4, 5, 6, 7, 2, 3, 4, 0];
let ig = [2, 3, 4, 0];
console.log(search(sn, ig)); // expected: 0

sn = [1, 2, 3, 4, 5, 6, 0];
ig = [3, 4, 5, 0];
console.log(search(sn, ig)); // expected: 2

sn = [1, 2, 3, 4, 5, 6, 0];
ig = [5, 6, 7, 0];
console.log(search(sn, ig)); // expected: -1

sn = [2, 3, 4, 5, 6, 0];
ig = [1, 2, 3, 0];
console.log(search(sn, ig)); // expected: -1

sn = [2, 3, 4, 5, 6, 0];
ig = [2, 3, 4, 5, 6, 0];
console.log(search(sn, ig)); // expected: 0
```
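A straightforward solution, again a sketch and not the course's accepted answer, tries every start position in the haystack from left to right and compares element by element until the needle's sentinel is reached. Because the scan runs left to right, the first match found is automatically the one with the smallest index.

```javascript
// Possible solution sketch for the intermediate assignment (naive O(n * m) scan).
function search(haystack, needle) {
  for (let start = 0; haystack[start] !== 0; start++) {
    let j = 0;
    // Advance while the needle continues and the elements agree. Since needle
    // elements are natural numbers (> 0), the haystack sentinel 0 never matches,
    // so the scan cannot run past the end of the haystack.
    while (needle[j] !== 0 && haystack[start + j] === needle[j]) {
      j++;
    }
    // Reaching the needle's sentinel means every needle element matched.
    if (needle[j] === 0) {
      return start;
    }
  }
  return -1; // no occurrence
}

console.log(search([1, 2, 3, 4, 5, 6, 0], [3, 4, 5, 0])); // 2
```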

(Hard assignment) Write a subprogram named extractsnake that takes as parameters a two-dimensional array m, its number of rows and columns, and an empty array snake. The array m contains only zeros and a single continuous sequence of ones running either vertically or horizontally. The subprogram should record the row and column indices of the start and end of this sequence of ones in the array snake, then count and return the number of ones in the sequence. The array snake should be a two-dimensional array whose first row stores the start indices and whose second row stores the end indices of the sequence of ones in m. The start of the sequence is the end with the smaller row index (vertical sequence) or the smaller column index (horizontal sequence). Assume that m always contains at least one 1. Examples:

```javascript
const m1 = [[0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 0],
            [0, 0, 1, 1, 1, 1],
            [0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 0]];
const k1 = [];
const d1 = extractsnake(m1, 5, 6, k1);
console.log(d1, JSON.stringify(k1)); // expected: 4 [[2,2],[2,5]]

const m2 = [[0, 0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 1, 0]];
const k2 = [];
const d2 = extractsnake(m2, 3, 7, k2);
console.log(d2, JSON.stringify(k2)); // expected: 1 [[2,5],[2,5]]

const m3 = [[0],
            [1],
            [1],
            [1],
            [0],
            [0]];
const k3 = [];
const d3 = extractsnake(m3, 6, 1, k3);
console.log(d3, JSON.stringify(k3)); // expected: 3 [[1,0],[3,0]]
```
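One possible solution, a sketch rather than the accepted answer, assumes each row of snake is stored as [row index, column index]. A row-major scan finds the first 1, which is necessarily the start of the sequence (its topmost or leftmost cell). The direction is then decided by the right-hand neighbor, and the sequence is followed to its end.

```javascript
// Possible solution sketch for the hard assignment (not the official accepted answer).
// Assumption: each row of `snake` is stored as [rowIndex, columnIndex].
function extractsnake(m, rows, cols, snake) {
  // Row-major scan: the first 1 found is the start of the sequence.
  let r0 = -1;
  let c0 = -1;
  for (let r = 0; r < rows && r0 === -1; r++) {
    for (let c = 0; c < cols; c++) {
      if (m[r][c] === 1) {
        r0 = r;
        c0 = c;
        break;
      }
    }
  }

  // Walk right if the right neighbor is a 1; otherwise walk down
  // (this also covers a sequence consisting of a single 1).
  const horizontal = c0 + 1 < cols && m[r0][c0 + 1] === 1;
  const dr = horizontal ? 0 : 1;
  const dc = horizontal ? 1 : 0;

  let r1 = r0;
  let c1 = c0;
  let count = 1;
  while (r1 + dr < rows && c1 + dc < cols && m[r1 + dr][c1 + dc] === 1) {
    r1 += dr;
    c1 += dc;
    count++;
  }

  // The caller passes an empty array, so fill it in place instead of reassigning it.
  snake.push([r0, c0]);
  snake.push([r1, c1]);
  return count;
}

const k = [];
console.log(extractsnake([[0], [1], [1], [1], [0], [0]], 6, 1, k), JSON.stringify(k)); // 3 [[1,0],[3,0]]
```

Filling snake with push is essential: reassigning the parameter would leave the caller's array empty, which is a plausible pitfall for students in this assignment.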

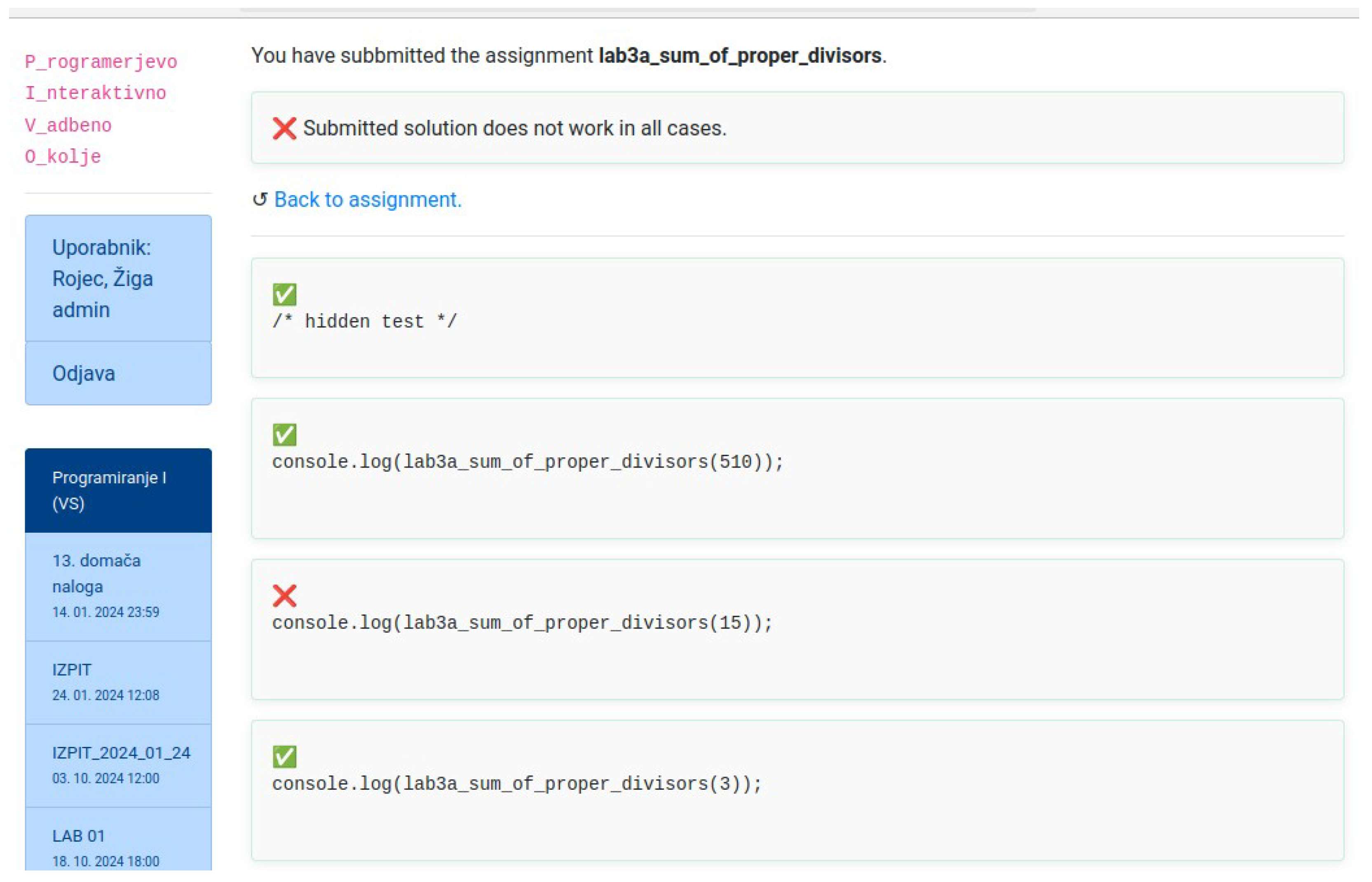

3.6. Accepted Answers

3.7. Threats to Validity and Limitations

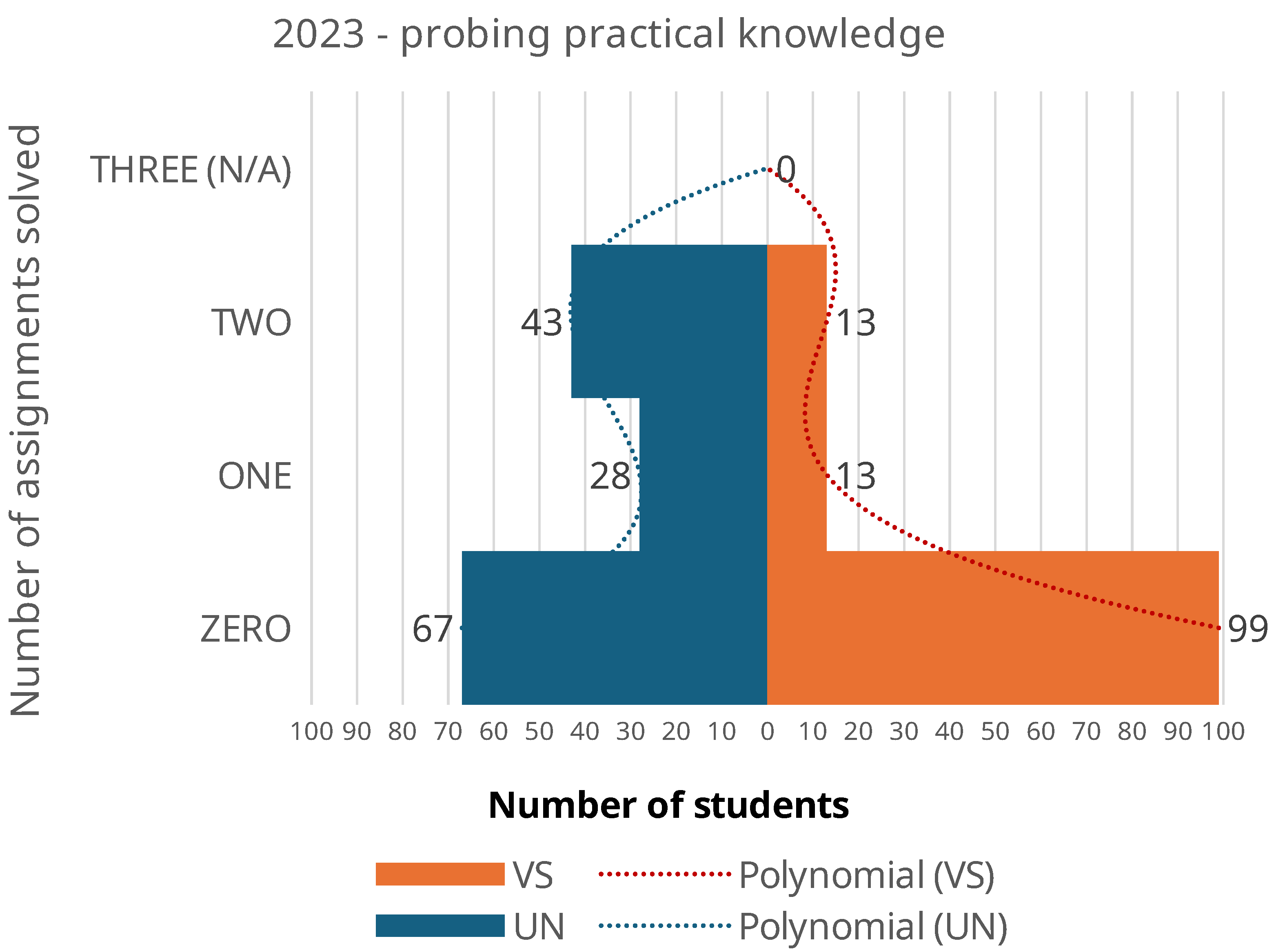

4. Results

- the curriculum format was identical (same basic study resources and references, same timetable, same physical location…),

- the teaching staff were identical,

- the staff-to-student ratio was identical,

- the written exam was identical,

- the number of students was high, and

- no student cohort was known to be socially affected (the special circumstances of the COVID-19 epidemic had already faded out).

4.1. Correlation Between Engagement and Success

5. Discussion

5.1. Student Feedback (Qualitative Observations)

5.2. Implications for Practice/Transferability

5.3. Cost–Benefit and Scalability Considerations

5.4. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| APAS | Automated Programming Assessment System |
| PIVO | Programmers’ Interactive Virtual Onboarding |


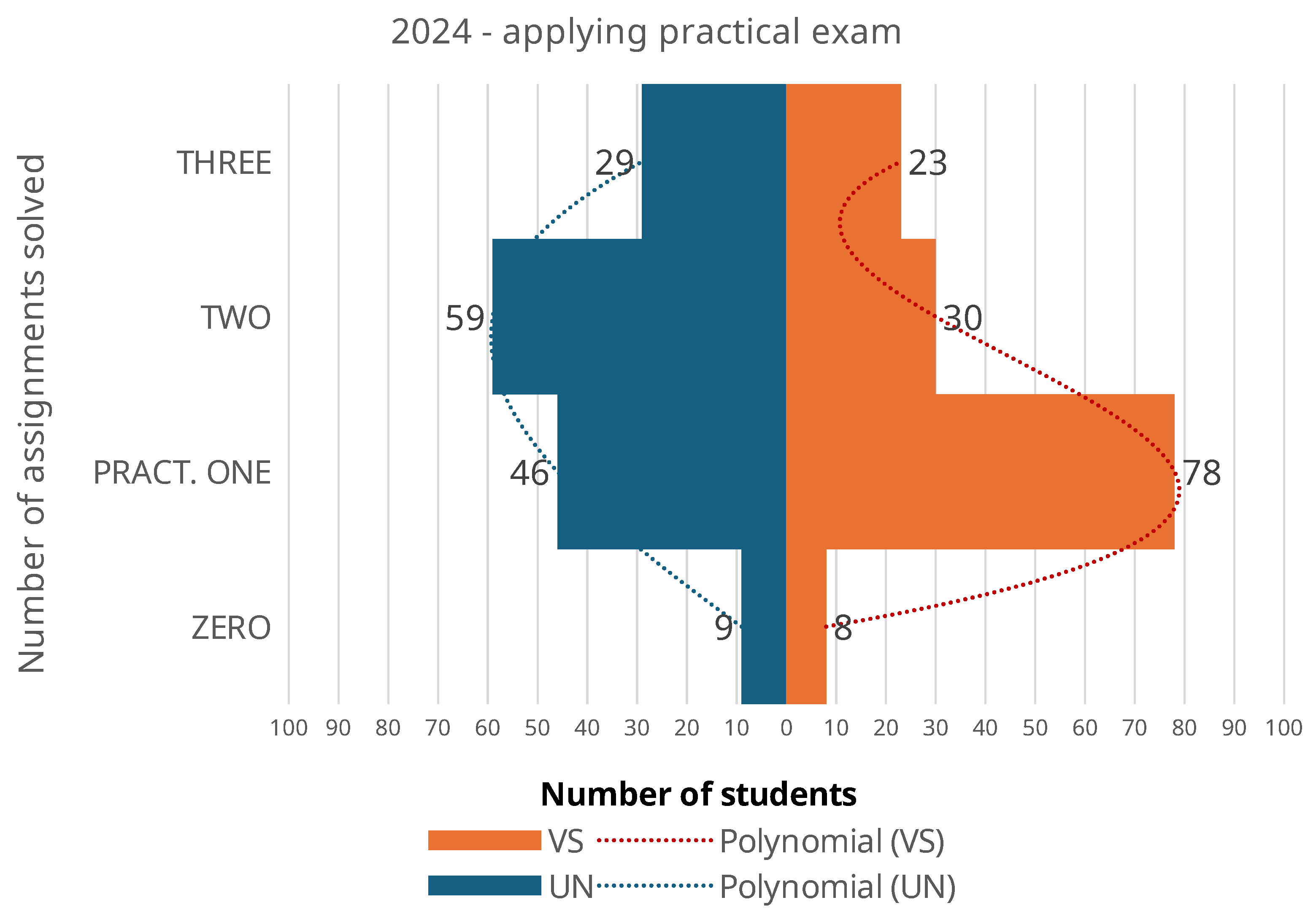

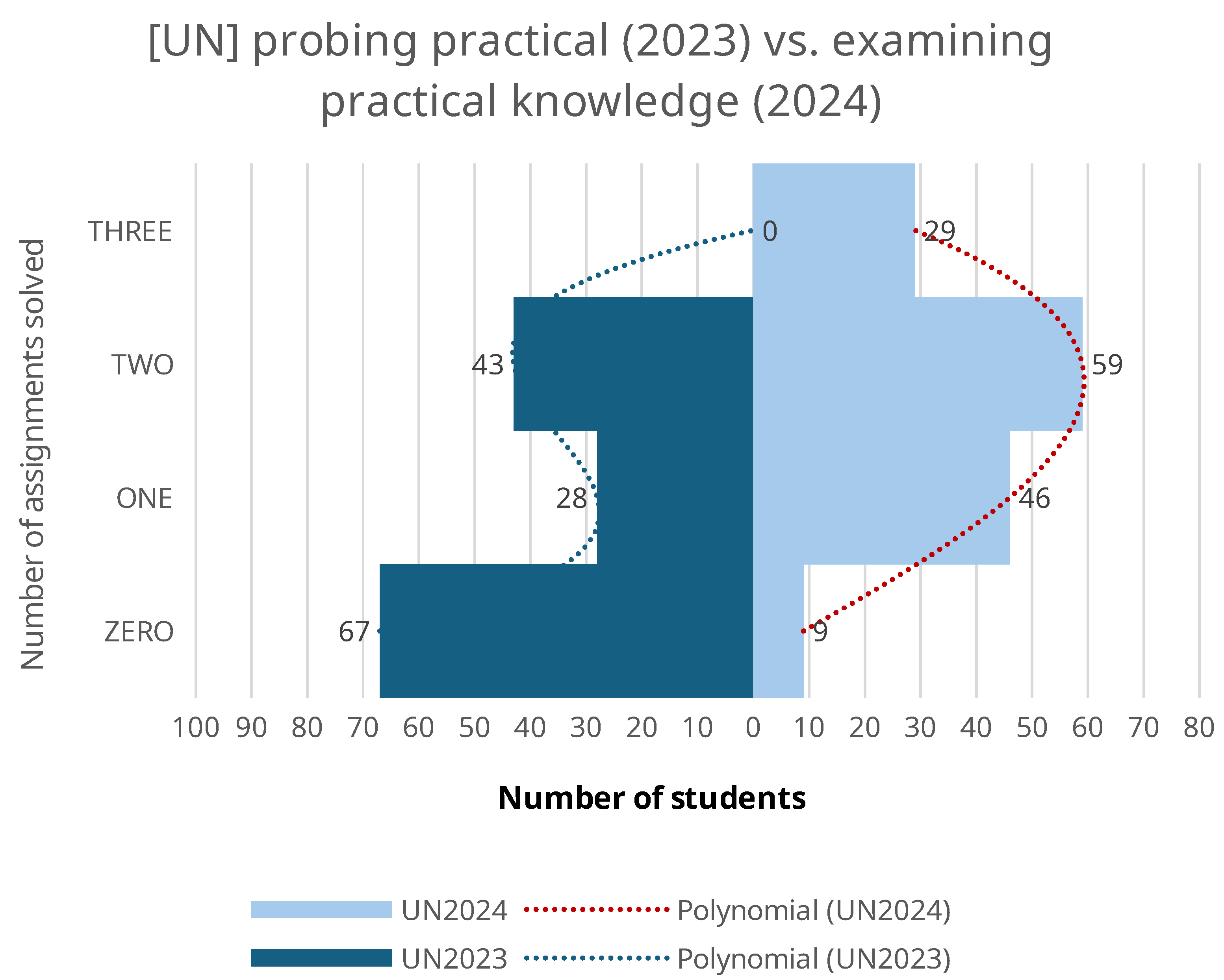

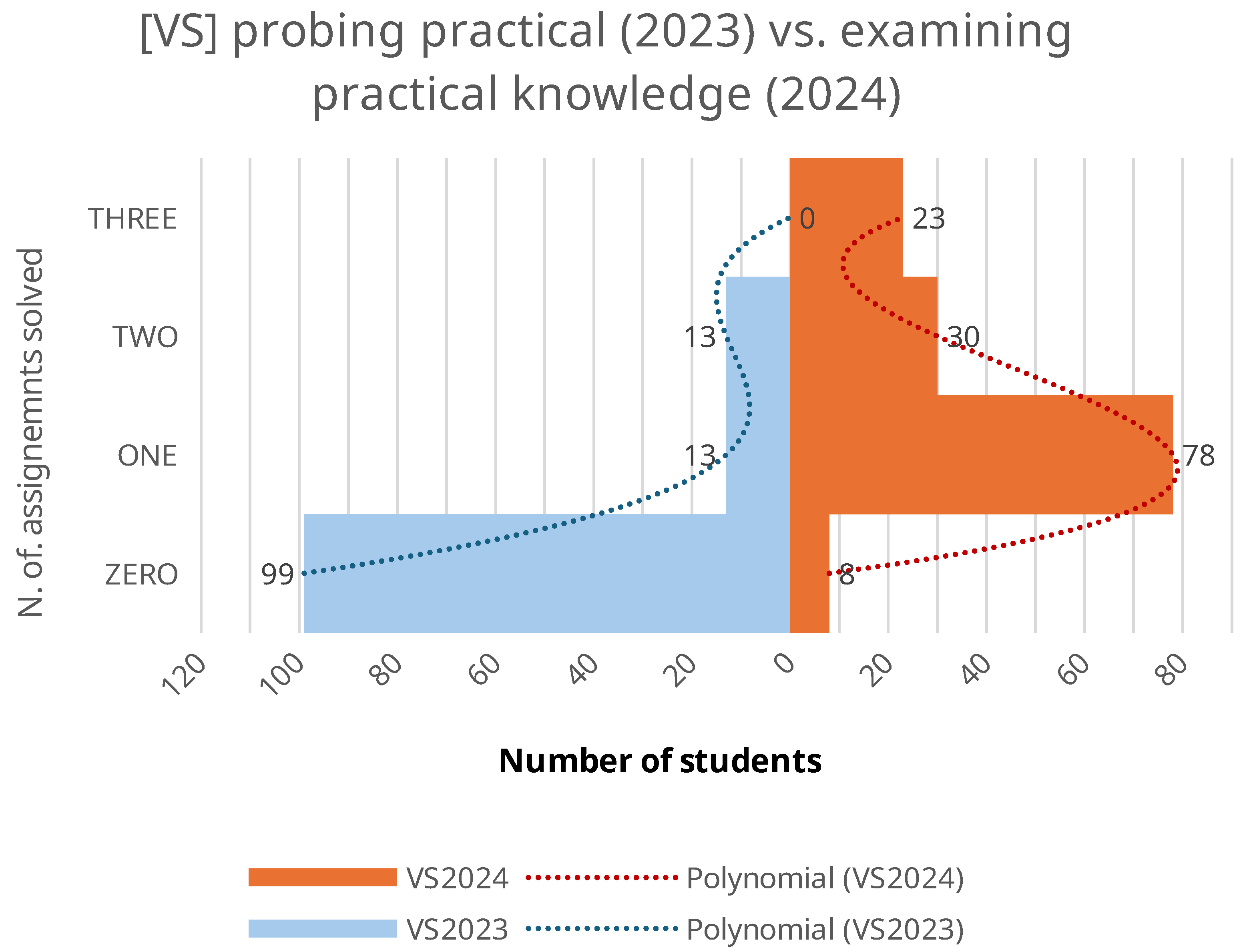

| Study program & year | UN 2023 | UN 2024 | VS 2023 | VS 2024 | MERGED 2023 | MERGED 2024 |
|---|---|---|---|---|---|---|
| No. of students | 138 | 143 | 125 | 139 | 263 | 282 |
| ZERO | 67 (49%) | 9 (6%) | 99 (79%) | 8 (6%) | 166 (63%) | 17 (6%) |
| ONE | 28 (20%) | 46 (32%) | 13 (10%) | 78 (56%) | 41 (16%) | 124 (44%) |
| TWO | 43 (31%) | 59 (41%) | 13 (10%) | 30 (22%) | 56 (21%) | 89 (32%) |
| THREE | N/A | 29 (20%) | N/A | 23 (17%) | N/A | 52 (18%) |
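In the table, each percentage is the share of that column's number of students, rounded to a whole percent, and each MERGED column equals the sum of the UN and VS columns for the same year. The sketch below recomputes the merged 2024 column from the counts copied from the table; the helper names merge and shares are hypothetical.

```javascript
// Category counts copied from the table above (N/A categories omitted).
const counts = {
  UN2023: { ZERO: 67, ONE: 28, TWO: 43 },
  VS2023: { ZERO: 99, ONE: 13, TWO: 13 },
  UN2024: { ZERO: 9, ONE: 46, TWO: 59, THREE: 29 },
  VS2024: { ZERO: 8, ONE: 78, TWO: 30, THREE: 23 },
};

// Add two columns category by category.
function merge(a, b) {
  const out = {};
  for (const key of Object.keys(a)) out[key] = a[key] + b[key];
  return out;
}

// Total of a column and each category's share, rounded to a whole percent.
function shares(column) {
  const total = Object.values(column).reduce((s, v) => s + v, 0);
  const pct = {};
  for (const [key, v] of Object.entries(column)) pct[key] = Math.round((100 * v) / total);
  return { total, pct };
}

const merged2024 = merge(counts.UN2024, counts.VS2024);
console.log(merged2024, shares(merged2024));
```

The computed totals match the "No. of students" row, which confirms that the categories in each column are exhaustive and mutually exclusive.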

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rojec, Ž.; Puhan, J.; Fajfar, I. Practical Programming Exams with Automated Assessment Improve Student Engagement and Learning Outcomes. Educ. Sci. 2025, 15, 1486. https://doi.org/10.3390/educsci15111486
