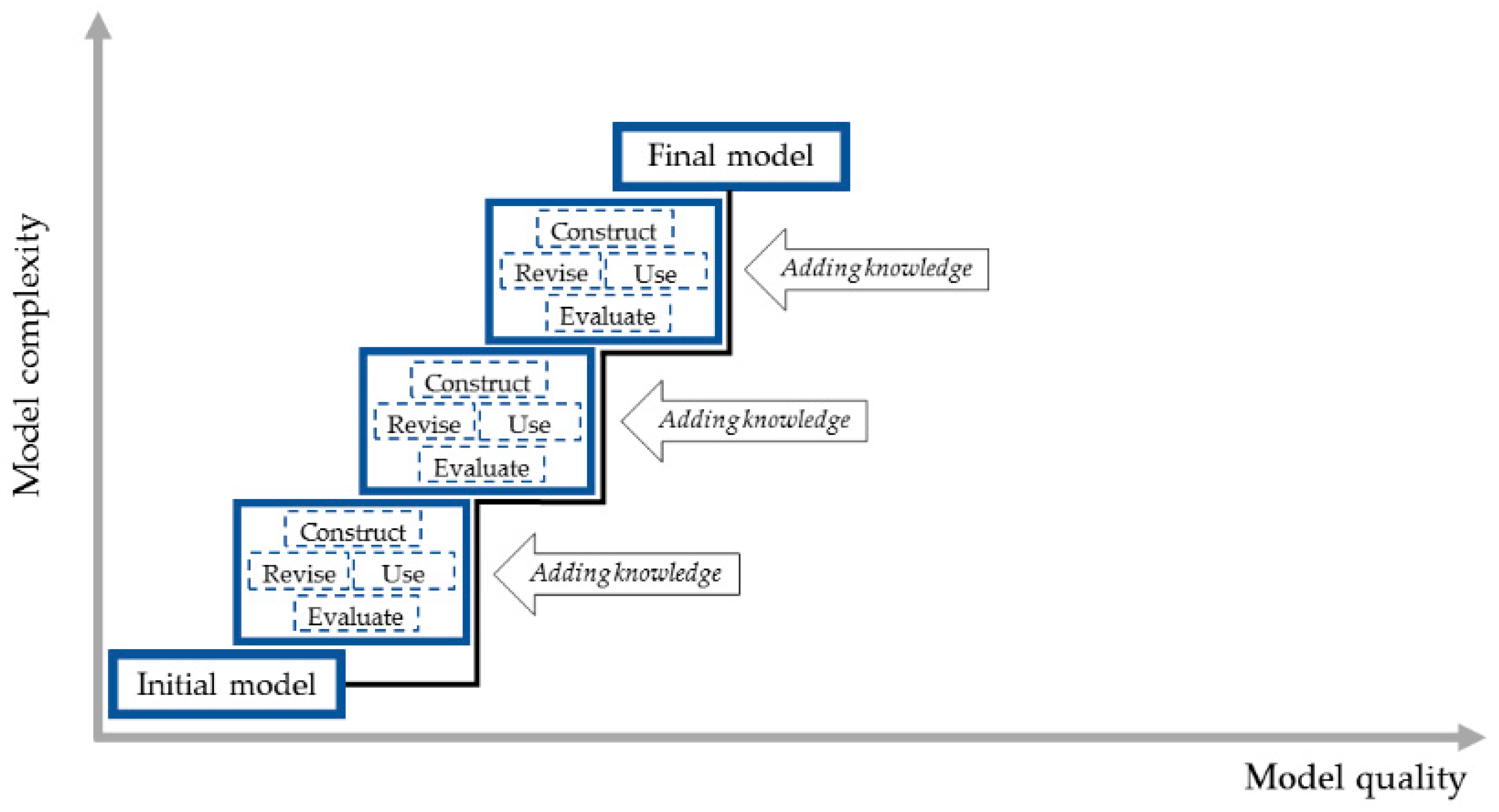

Supporting Students in Building and Using Models: Development on the Quality and Complexity Dimensions

Abstract

1. Introduction

2. Theoretical Background

2.1. Elements of the Modeling Practice

“Science often involves the construction and use of a wide variety of models and simulations to help develop explanations about natural phenomena. Models make it possible to go beyond observables and imagine a world not yet seen. Models enable predictions of the form ‘if … then … therefore’ to be made in order to test hypothetical explanations.” (p. 50)

- Constructing models: Models are built to represent students’ current understanding of phenomena. Students are expected not just to use models provided in the classroom, but to engage in the process of building their own models. This should promote their ownership of, and agency in, knowledge building;

- Using models: Models can be used for different purposes, including communicating current understanding of ideas and explaining and predicting phenomena. Students are expected to use models by testing them against data sources;

- Evaluating models: At the heart of the scientific process stands the constant evaluation of competing models. This is what drives the consensus knowledge building of the scientific community. Students should be able to share their models with peers and critique each other’s models by testing them against logical reasoning and empirical evidence;

- Revising models: Models are generative tools that represent the current understanding of scientific ideas. It lies in the nature of models that they are revised when new ideas (e.g., additional variables) or more accurate insights (e.g., advanced measurements) become available. When new knowledge and understanding accumulates or changes, students should revise their models to better represent their latest ideas. In this article, we use a broad definition of the term revise that includes any change students make to their models, thus also encompassing the simple addition of model elements. Where we refer to revision in the narrow sense, we point this out; in these cases, we mean changing elements of the model that had been added in an earlier model version.

2.2. Progression in Students’ Metamodeling Knowledge

2.3. Rationale and Research Questions

- How were the elements of the modeling practice used in the unit integrating the modeling tool?

- How did students’ modeling practice develop throughout the unit with regard to constructing, using, evaluating, and revising models?

- What was the change in students’ metamodeling knowledge about the nature of models and modeling across the unit?

3. Methodology

3.1. Context

3.1.1. SageModeler Software

- Visualizing variables and relationships in diagrams in a way that students can customize;

- Using drag-and-drop functionality for constructing models and preparing diagrams;

- Defining relationships between variables without the dependence on equations;

- Performing exploratory data analysis through an environment designed for students and integrated into SageModeler.

3.1.2. Water Quality Unit for Middle School

3.2. Population

3.3. Data Collection and Analyses

3.3.1. Classroom Observations, Video Recordings and Teacher Reflection Notes

3.3.2. Student Models and Connected Prompts about Reasons for Specifying Relationships

- pH: Expected to vary in its effect on water quality, since a neutral pH is preferable for most living organisms. The relationship first increases from acidic to neutral conditions and then decreases from neutral to basic conditions. This variable was added in the initial model;

- Temperature: Expected to have a decreasing effect on water quality: As the temperature increases, the water quality should decrease. This variable was added in the initial model;

- Conductivity: Expected to have a decreasing effect on water quality. This variable was added in the first model revision;

- Dissolved oxygen: Expected to have an increasing effect on water quality. This variable was added in the second model revision.
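The four expected relationships above can be written down as simple curve shapes. The following is a minimal Python sketch for illustration only, not SageModeler's implementation: the normalized 0 to 1 scale, the function names, and the averaging rule in `water_quality` are all assumptions that do not come from the study.

```python
from typing import Callable, Dict

# Assumption: every variable is rescaled to [0, 1], and so is each effect.


def increasing(x: float) -> float:
    """Higher input, higher water quality."""
    return x


def decreasing(x: float) -> float:
    """Higher input, lower water quality."""
    return 1.0 - x


def vary(x: float) -> float:
    """Rises up to the midpoint (neutral pH in this sketch), then falls."""
    return 1.0 - abs(2.0 * x - 1.0)


# Expected relationship of each variable to water quality, as listed in the text.
EXPECTED_SHAPES: Dict[str, Callable[[float], float]] = {
    "pH": vary,
    "temperature": decreasing,
    "conductivity": decreasing,
    "dissolved oxygen": increasing,
}


def water_quality(settings: Dict[str, float]) -> float:
    """Hypothetical combination rule: the mean of the individual effects."""
    effects = [EXPECTED_SHAPES[name](value) for name, value in settings.items()]
    return sum(effects) / len(effects)
```

With every variable at its most favorable setting (`{"pH": 0.5, "temperature": 0.0, "conductivity": 0.0, "dissolved oxygen": 1.0}`), this sketch returns 1.0; moving pH to either extreme while keeping the rest fixed lowers the result to 0.75.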

- Models that included all the required variables and accurate relationships were categorized as fully complete models;

- Models that did not include the above-mentioned variables or accurate relationships were categorized as incomplete and classified into several types of incompleteness.

- Undefined relationships: Variables are connected, but relationship type not determined;

- Inaccurate relationships: Relationship types between variables are not accurately defined;

- Missing variables: Required variables do not appear in the model;

- Unconnected variables: Variables appear in the model, but are not connected to any other variables;

- Unrelated variables: Additional variables are directly connected to the dependent variable;

- Inaccurate labels: Labels of variables were not accurately named.
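The coding scheme above can be expressed as a small checking routine. The sketch below is a hypothetical illustration rather than the procedure the authors used: the `StudentModel` structure, the relationship names, and the `KNOWN_MISLABELS` table (seeded with the ‘Acidity’ label reported in the study) are assumptions, and only links to the dependent variable are considered.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Expected relationship type of each required variable to water quality.
EXPECTED: Dict[str, str] = {
    "pH": "vary",
    "temperature": "decreasing",
    "conductivity": "decreasing",
    "dissolved oxygen": "increasing",
}

# Assumption: labels that are treated as inaccurate names for a required variable.
KNOWN_MISLABELS: Dict[str, str] = {"acidity": "pH"}


@dataclass
class StudentModel:
    variables: Set[str]
    # label -> relationship type to water quality; None = linked but type not set
    links: Dict[str, Optional[str]] = field(default_factory=dict)


def incompleteness(model: StudentModel, required: List[str]) -> List[str]:
    """Return the types of incompleteness found; an empty list means complete."""
    issues: List[str] = []
    # Map each label to the required variable it most likely stands for.
    canonical = {KNOWN_MISLABELS.get(label, label): label for label in model.variables}
    for name in required:
        label = canonical.get(name)
        if label is None:
            issues.append(f"missing variable: {name}")
            continue
        if label != name:
            issues.append(f"inaccurate label: {label}")
        if label not in model.links:
            issues.append(f"unconnected variable: {name}")
        elif model.links[label] is None:
            issues.append(f"undefined relationship: {name}")
        elif model.links[label] != EXPECTED[name]:
            issues.append(f"inaccurate relationship: {name}")
    # Additional variables linked directly to the dependent variable.
    for name, label in sorted(canonical.items()):
        if name not in required and label in model.links:
            issues.append(f"unrelated variable: {label}")
    return issues
```

For example, an initial model in which both pH and temperature are set to ‘vary’ yields `["inaccurate relationship: temperature"]` when checked against `["pH", "temperature"]`. The list of required variables is passed in explicitly because it grows with each modeling iteration.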

3.3.3. Selection of Focus Case Groups

3.3.4. Pre- and Post-Modeling Surveys

- Q1: Your friend has never heard about scientific models. How would you describe to him what a scientific model is?

- Q2: Describe how you or your classmates would use a scientific model;

- Q3: Describe how a scientist uses a scientific model;

- Q4: What do you need to think about when creating, using, and revising a scientific model?

3.3.5. Teacher and Unit Developer Reflections

4. Results

4.1. Modeling Practice Elements Integrated in the Water Quality Unit

“What we’ve been doing now, we’ve been planning [points at the word ‘plan’ on the board]. That’s the first part of thinking about a model, to plan what should be in the model. And we want to think about the various variables. What is it that we want to model? What is it that we want to use to be able to predict or to explain?”

- Students were instructed to set each of their independent variables to the best possible conditions of a healthy stream and then run a simulation of their models by producing an output graph to determine whether the water quality (the dependent variable) reached its highest possible value;

- Students were instructed to test portions of their models (pH and water quality or temperature and water quality, for example) to systematically verify if those parts of the model ‘worked’. This meant students checked if the relationship between these variables was scientifically appropriate in that it matched the data the students had collected or the information they had learnt about in class beforehand;

- Students were instructed to select and set one independent variable in their models while keeping all other variables set at the best condition for water quality and then predict the impact of the selected variable on the overall water quality. They then had to test their model to see if their prediction and the results matched.
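The three testing procedures above amount to a baseline check followed by one-variable-at-a-time sweeps. The following Python sketch illustrates that logic under stated assumptions: `toy_model`, the 0 to 1 scale, and the helper names are hypothetical stand-ins and do not reflect how SageModeler runs simulations.

```python
from typing import Callable, Dict, List

Model = Callable[[Dict[str, float]], float]


def toy_model(settings: Dict[str, float]) -> float:
    """Hypothetical student model: mean of two effects on a 0-1 scale."""
    ph_effect = 1.0 - abs(2.0 * settings["pH"] - 1.0)  # best at the neutral midpoint
    temp_effect = 1.0 - settings["temperature"]         # warmer water, lower quality
    return (ph_effect + temp_effect) / 2.0


# Assumed best conditions for a healthy stream on the normalized scale.
BEST: Dict[str, float] = {"pH": 0.5, "temperature": 0.0}


def sweep(model: Model, best: Dict[str, float], name: str, values: List[float]) -> List[float]:
    """Vary one variable while all others stay at their best setting."""
    return [model({**best, name: value}) for value in values]


def matches_prediction(outputs: List[float], predicted: str) -> bool:
    """Compare a predicted trend with the simulated outputs."""
    steps = [later - earlier for earlier, later in zip(outputs, outputs[1:])]
    if predicted == "increasing":
        return all(s >= 0 for s in steps) and any(s > 0 for s in steps)
    if predicted == "decreasing":
        return all(s <= 0 for s in steps) and any(s < 0 for s in steps)
    raise ValueError(f"unknown prediction: {predicted}")
```

Here `toy_model(BEST)` gives the maximum of 1.0 (the first check), and `sweep(toy_model, BEST, "temperature", [0.0, 0.5, 1.0])` gives `[1.0, 0.75, 0.5]`, which `matches_prediction` confirms as "decreasing" (the third check). Testing one portion of a model, as in the second check, corresponds to sweeping a single variable in the same way.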

- Do I need to evaluate and revise the relationships in my model by evaluating the relationship type setting (more and more, less and less, etc.)? Are my relationships accurate?

- Do I need to rethink my science ideas?

“How many of you finished? [several students raise hands] … so what we want to do today, finish that first... Now today, if we get finished with this, and remember, so we plan, we build, we test...you remember we test it, how many of you tested it? [several students raise hands]. OK, you played around with the stuff, you got a data table. OK, so we make sure we test those today and revise it if it doesn’t quite work the way we want it to work, right?”

“So we have to ask ourselves, Does this work? If you get to the way that it works, the way you think it works, then now we can expand our model ... you are going to plan, and build it, you are going to test it, you are going to see if it runs the way you think and then you will revise it.”

“So we have been using this modeling program ... we’re trying to model relationships between various water quality measures, right? And the health of the stream and its organisms. And you have in there right now pH, temperature, and conductivity and what we are going to do today is add dissolved oxygen.”

“You have created a very complex model of a very complex system, right? The water quality is a really complex system. And for lots of you, you have your four water quality measures all connected to here [points at water quality variable in an example model projected on the screen], which is very important, it affects our relationships. But then there is also some of these kinds of relationships [cross referencing with her hands] that even make the model more complex. Right? So if we are really going to model the complexity of this phenomenon we also have to look for relationships in between.”

4.2. Development of Students’ Models and Metamodeling Knowledge

4.2.1. Case 1: A Complete Model (Group E)

Quote 1: “Scientists use models to show/describe phenomena that their peers may need to see.” [Sandy, pre-survey, question 3]

Quote 2: “A scientific model should be clear with no unneeded parts. It should also be up to date on all current information.” [Kara, pre-survey, question 4]

Quote 3: “You need to think about all of the scientific ideas and concepts involved, our model should be accurate, and the best models are simple and don’t have unnecessary parts.” [Kara, post-survey, question 4]

Quote 4: “I think about how […] not everything will be exact.” [Sandy, pre-survey, question 4]

Quote 5: “I think about how there may be a need for a key, the scale […]” [Sandy, pre-survey, question 4]

Quote 6: “We could use scientific models to model how things affect our stream.” [Sandy, post-survey, question 2]

4.2.2. Case 2: An Incomplete Model Revised in Later Modeling Iterations (Group C)

Quote 7: “[…] All models should be simplistic. All models have right and wrong factors.” [Rick, pre-survey, question 1]

Quote 8: “They [models] are simple, and there can be many correct models about one phenomenon.” [Rick, post-survey, question 1]

Quote 9: “[…] They are constantly changing, so if you have to change it, you’re ok. […].” [Rick, pre-survey, question 4]

Quote 10: “You might have to change it, if your theory doesn’t work, that’s when you revise it […].” [Rick, post-survey, question 4]

Quotes 11: [Rick, pre-survey, questions 2&3]; Students: “[…] to scale something.” Scientists: “[…] represent and test things.”

Quotes 12: [Ron, post-survey, questions 2&3]; Students: “To see what happens in a smaller version.” Scientists: “[…] show other people what will happen in a smaller version.”

4.2.3. Case 3: A Complete Model with Additional Variables (Group A)

Quote 13: “If we can’t see something from the naked eye, you can make a model to see what it looks like up close.” [Dave, pre-survey, question 2]

Quote 14: “[...] a model of something that is being studied. It can be visualized and created to model almost anything.” [Zack, post-survey, question 1]

Quote 15: “So that they are always correct.” [Dave, post-survey, question 4]

Quote 16: “What is [going to] represent what.” [Zack, pre/post-surveys, question 4]

(Note: This likely refers to pictures students chose for variables in their models).

Quote 17: “They [scientists] use models to explain a theory.” [Dave, pre-survey, question 3]

Quote 18: “Scientists use scientific models to predict what things could turn out as.” [Zack, pre-survey, question 3]

Quote 19: “To hypothesize on how somethings works.” [Zack, post-survey, question 3]

5. Discussions

5.1. Incorporating the Elements of the Modeling Practice

5.2. Development of Students’ Models and Related Explanations

5.3. Development of Students’ Metamodeling Knowledge

5.4. Implications: Integrating the Modeling Practice Elements to Develop Quality and Complexity of Models

- Only two out of five groups with incomplete models in the initial model and the first model revision revised (parts of) earlier versions of their model they had submitted in previous lessons (revision in the narrow sense). We were only able to observe students making revisions to elements of their model they had added right before in the same lesson;

- Students’ answers to the meta-modeling questions:

  - often focused on the requirement to have complete or up-to-date models, suggesting a focus on having included all the required elements (for similar findings, see, e.g., Ref. [35]). A related finding by Sins and colleagues [18] points to the relationship between students’ epistemological knowledge and their modeling practice, indicating that students with low epistemic beliefs tended to focus on surface features of models rather than on the models’ predictive or explanatory features;

  - devoted little attention to aspects of model revision in the narrow sense, i.e., going back and changing elements that had been specified earlier. For future research, it may be useful to determine if differences in performance can be found quantitatively between the elements of modeling practice, such as those in students’ conceptualization of aspects of metamodeling knowledge described by Krell and colleagues [35].

5.5. Limitations of Methods

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. National Research Council [NRC]. A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas; The National Academies Press: Washington, DC, USA, 2012; ISBN 978-0-309-21742-2.

2. Schwarz, C.V.; Reiser, B.J.; Davis, E.A.; Kenyon, L.; Achér, A.; Fortus, D.; Krajcik, J. Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. J. Res. Sci. Teach. 2009, 46, 632–654.

3. Stachowiak, H. Allgemeine Modelltheorie (General Model Theory); Springer: Vienna, Austria, 1973.

4. Gouvea, J.; Passmore, C. ‘Models of’ versus ‘Models for’. Sci. Educ. 2017, 26, 49–63.

5. Lehrer, R.; Schauble, L. Cultivating model-based reasoning in science education. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; Cambridge University Press: New York, NY, USA, 2005; pp. 371–387.

6. Schwarz, C.V.; White, B.Y. Metamodeling knowledge: Developing students’ understanding of scientific modeling. Cogn. Instr. 2005, 23, 165–205.

7. Schwarz, C.; Reiser, B.J.; Acher, A.; Kenyon, L.; Fortus, D. MoDeLS: Challenges for defining a learning progression for scientific modeling. In Learning Progressions in Science; Alonzo, A., Gotwals, A.W., Eds.; Sense Publishers: Rotterdam, The Netherlands, 2012; pp. 101–138. ISBN 978-9460918223.

8. Pierson, A.E.; Clark, D.B.; Sherard, M.K. Learning progressions in context: Tensions and insights from a semester-long middle school modeling curriculum. Sci. Educ. 2017, 101, 1061–1088.

9. Fretz, E.B.; Wu, H.K.; Zhang, B.; Davis, E.A.; Krajcik, J.S.; Soloway, E. An investigation of software scaffolds supporting modeling practices. Res. Sci. Educ. 2002, 32, 567–589.

10. Quintana, C.; Reiser, B.J.; Davis, E.A.; Krajcik, J.; Fretz, E.; Duncan, R.G.; Kyza, E.; Edelson, D.; Soloway, E. A scaffolding design framework for software to support science inquiry. J. Learn. Sci. 2004, 13, 337–386.

11. Harrison, A.G.; Treagust, D.F. A typology of school science models. Int. J. Sci. Educ. 2000, 22, 1011–1026.

12. Clement, J. Learning via model construction and criticism. In Handbook of Creativity; Sternberg, R., Ed.; Springer: Boston, MA, USA, 1989; pp. 341–381. ISBN 978-0521576048.

13. Bamberger, Y.M.; Davis, E.A. Middle-school science students’ scientific modelling performances across content areas and within a learning progression. Int. J. Sci. Educ. 2013, 35, 213–238.

14. Fortus, D.; Shwartz, Y.; Rosenfeld, S. High school students’ meta-modeling knowledge. Res. Sci. Educ. 2016, 46, 787–810.

15. Gilbert, J.K. Models and modelling: Routes to more authentic science education. Int. J. Sci. Math. Educ. 2004, 2, 115–130.

16. Gobert, J.D.; Pallant, A. Fostering students’ epistemologies of models via authentic model-based tasks. J. Sci. Educ. Technol. 2004, 13, 7–22.

17. Krell, M.; Krüger, D. University students’ meta-modelling knowledge. Res. Sci. Tech. Educ. 2017, 35, 261–273.

18. Sins, P.H.; Savelsbergh, E.R.; van Joolingen, W.R.; van Hout-Wolters, B.H. The relation between students’ epistemological understanding of computer models and their cognitive processing on a modelling task. Int. J. Sci. Educ. 2009, 31, 1205–1229.

19. Pluta, W.J.; Chinn, C.A.; Duncan, R.G. Learners’ epistemic criteria for good scientific models. J. Res. Sci. Teach. 2011, 48, 486–511.

20. Zangori, L.; Peel, A.; Kinslow, A.; Friedrichsen, P.; Sadler, T.D. Student development of model-based reasoning about carbon cycling and climate change in a socio-scientific issues unit. J. Res. Sci. Teach. 2017, 54, 1249–1273.

21. Passmore, C.; Gouvea, J.S.; Giere, R. Models in science and in learning science: Focusing scientific practice on sense-making. In International Handbook of Research in History, Philosophy and Science Teaching; Matthews, M., Ed.; Springer: Dordrecht, The Netherlands, 2014; pp. 1171–1202. ISBN 978-94-007-7654-8.

22. Grosslight, L.; Unger, C.; Jay, E.; Smith, C. Understanding models and their use in science: Conceptions of middle and high school students and experts. J. Res. Sci. Teach. 1991, 28, 799–822.

23. Edelson, D. Design research: What we learn when we engage in design. J. Learn. Sci. 2002, 11, 105–121.

24. Concord Consortium, Building Models Project. Available online: https://learn.concord.org/building-models (accessed on 14 September 2018).

25. Concord Consortium, Common Online Data Analysis Platform Project (CODAP). Available online: https://codap.concord.org/ (accessed on 14 September 2018).

26. Finzer, W.; Damelin, D. Design perspective on the Common Online Data Analysis Platform. In Proceedings of the 2016 Annual Meeting of the American Educational Research Association (AERA), Washington, DC, USA, 8–12 April 2016.

27. Jackson, S.; Krajcik, J.; Soloway, E. Model-It: A design retrospective. In Innovations in Science and Mathematics Education: Advanced Designs for Technologies of Learning; Jacobsen, M., Kozma, R., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2000; pp. 77–115. ISBN 978-0805828467.

28. Bielik, T.; Opitz, S. Supporting secondary students’ modeling practice using a web-based modeling tool. In Proceedings of the 2017 Annual Meeting of the National Association for Research in Science Teaching (NARST), San Antonio, TX, USA, 22–25 April 2017.

29. Damelin, D. Technical and conceptual challenges for students designing systems models. In Proceedings of the 2017 Annual Meeting of the National Association for Research in Science Teaching (NARST), San Antonio, TX, USA, 22–25 April 2017.

30. Damelin, D.; Krajcik, J.; McIntyre, C.; Bielik, T. Students making system models: An accessible approach. Sci. Scope 2017, 40, 78–82.

31. Stephens, L.; Ke, L. Explanations and relationships in students’ mental and external models. In Proceedings of the 2017 Annual Meeting of the National Association for Research in Science Teaching (NARST), San Antonio, TX, USA, 22–25 April 2017.

32. Novak, A.M. Using technologies to support middle school students in building models of stream water quality. In Proceedings of the 2017 Annual Meeting of the National Association for Research in Science Teaching (NARST), San Antonio, TX, USA, 22–25 April 2017.

33. Krajcik, J.; Shin, N. Project-based learning. In The Cambridge Handbook of the Learning Sciences, 2nd ed.; Sawyer, R., Ed.; Cambridge University Press: New York, NY, USA, 2015; pp. 275–297.

34. Novak, A.M.; Treagust, D.F. Adjusting claims as new evidence emerges: Do students incorporate new evidence into their scientific explanations? J. Res. Sci. Teach. 2018, 55, 526–549.

35. Krell, M.; Upmeier zu Belzen, A.; Krüger, D. Students’ levels of understanding models and modelling in Biology: Global or aspect-dependent? Res. Sci. Educ. 2014, 44, 109–132.

36. Justi, R.; van Driel, J. A case study of the development of a beginning chemistry teacher’s knowledge about models and modelling. Res. Sci. Educ. 2005, 35, 197–219.

37. Upmeier zu Belzen, A.; Krüger, D. Modellkompetenz im Biologieunterricht (Model competence in biology education). Zeitschr. Did. Naturw. 2010, 16, 41–57.

38. Crawford, B.A.; Cullin, M.J. Supporting prospective teachers’ conceptions of modelling in science. Int. J. Sci. Educ. 2004, 26, 1379–1401.

39. Clement, J.; Rea-Ramirez, M. Model Based Learning and Instruction in Science; Springer: Dordrecht, The Netherlands, 2008; ISBN 9781402064937.

| Type of Incompleteness in the Model | Description | Group | Changed in Next Modeling Lesson? |
|---|---|---|---|
| Undefined relationship | Relationship between ‘water quality’ and ‘conductivity’ not defined in first model revision. | B, F, K | Groups B, K: no; Group F: yes |
| Undefined relationship | Relationship between ‘water quality’ and ‘dissolved oxygen’ not defined in second model revision. | I | Occurred in final model revision |
| Inaccurate relationship | Relationship between ‘temperature’ and ‘water quality’ defined as ‘vary’ in initial model. | C | Yes |
| Missing variable | ‘Dissolved oxygen’ missing in second model revision. | K | Occurred in final model revision |
| Unconnected variable | ‘Dissolved oxygen’ not connected to any other variable in second model revision. | F | Occurred in final model revision |
| Inaccurate label | ‘Acidity’ label instead of ‘pH’ in initial model. | I | No |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bielik, T.; Opitz, S.T.; Novak, A.M. Supporting Students in Building and Using Models: Development on the Quality and Complexity Dimensions. Educ. Sci. 2018, 8, 149. https://doi.org/10.3390/educsci8030149
