Image Reconstruction and Investigation of Factors Affecting the Hue and Wavelength Relation Using Different Interpolation Algorithms with Raw Data from a CMOS Sensor

Abstract

1. Introduction

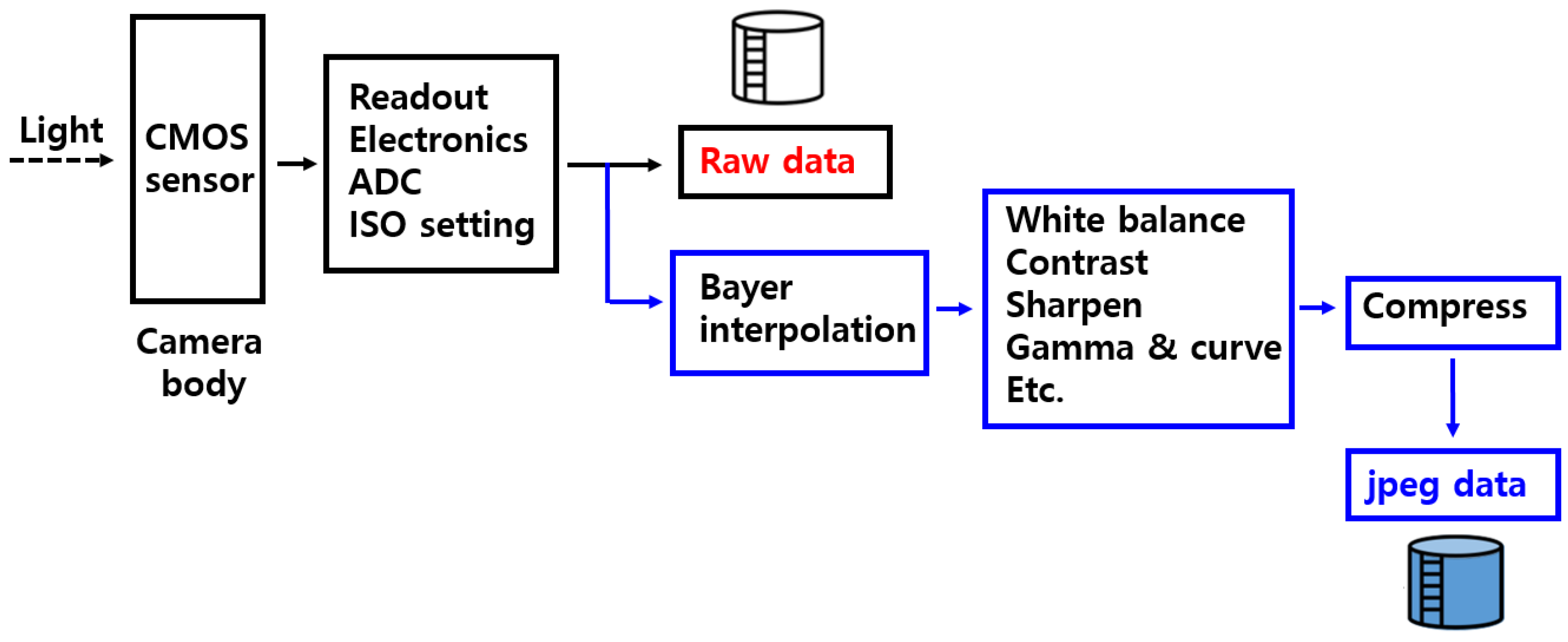

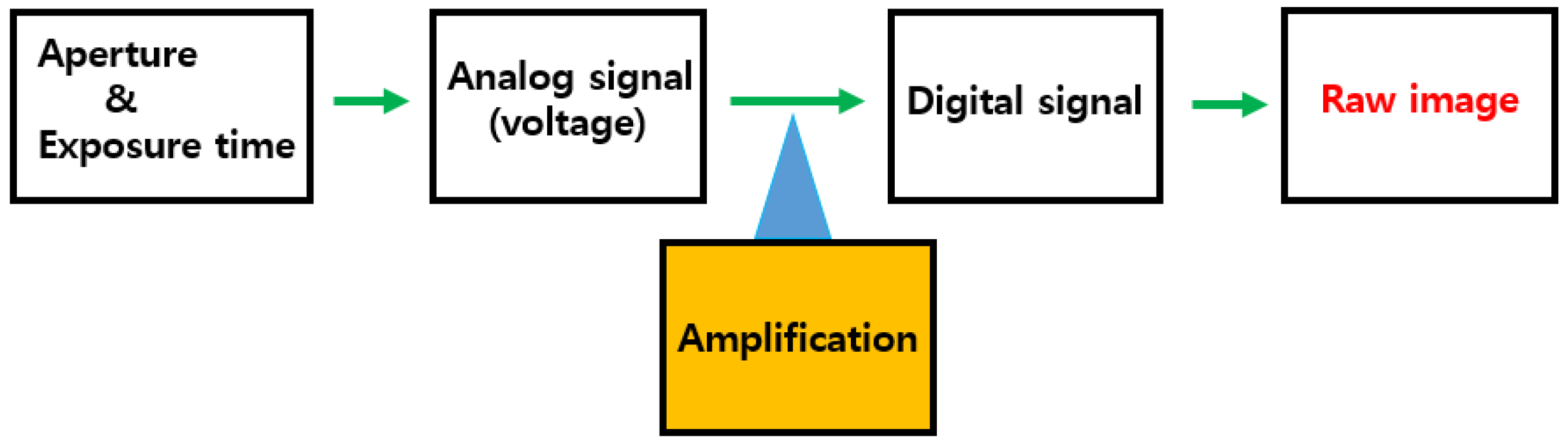

2. Production of a Digital Image with CMOS Sensor Technology

2.1. Experimental Setup

2.2. Reconstruction of Raw Image Data

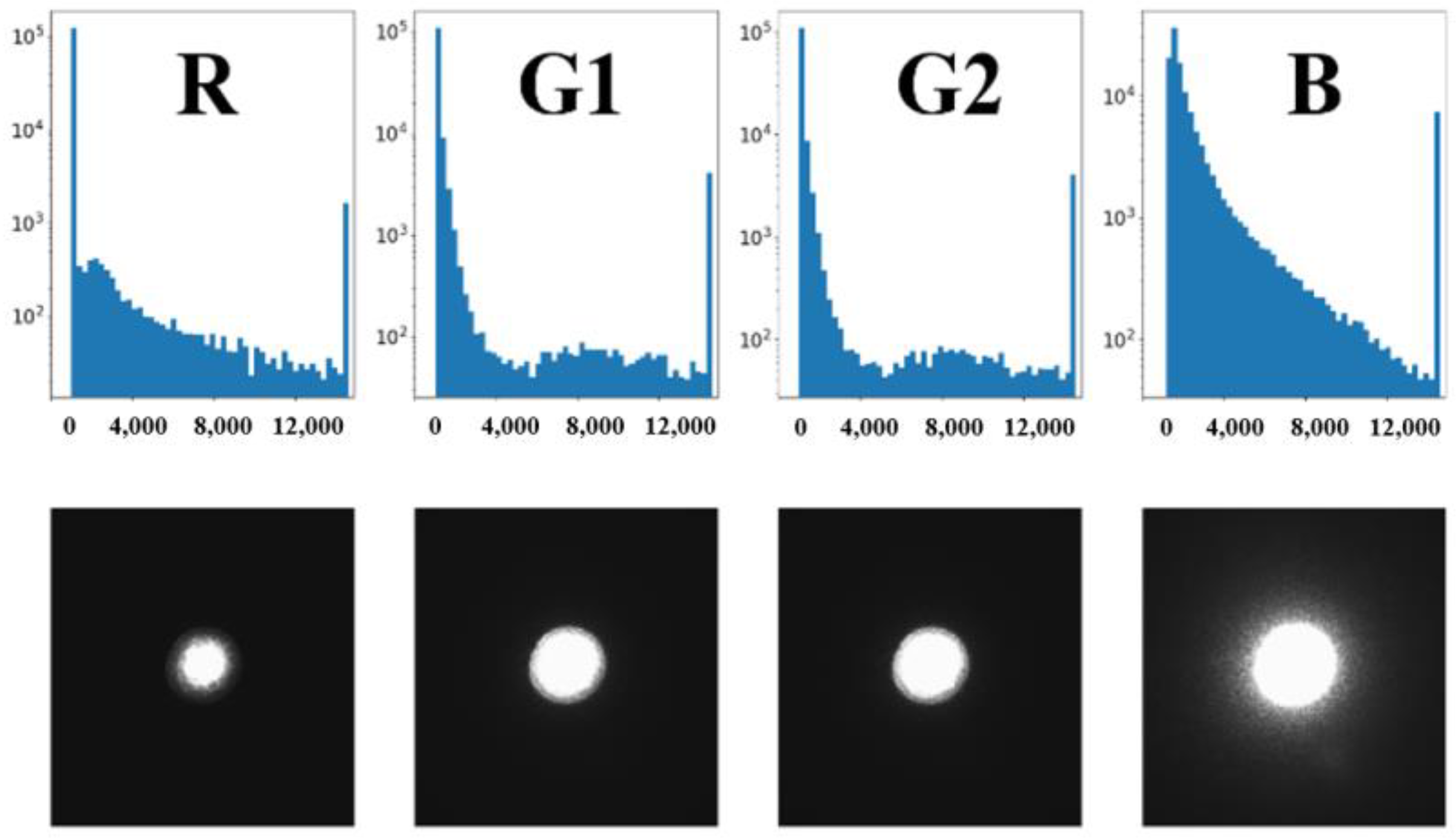

2.2.1. Raw Image

2.2.2. Raw Image Data Processing

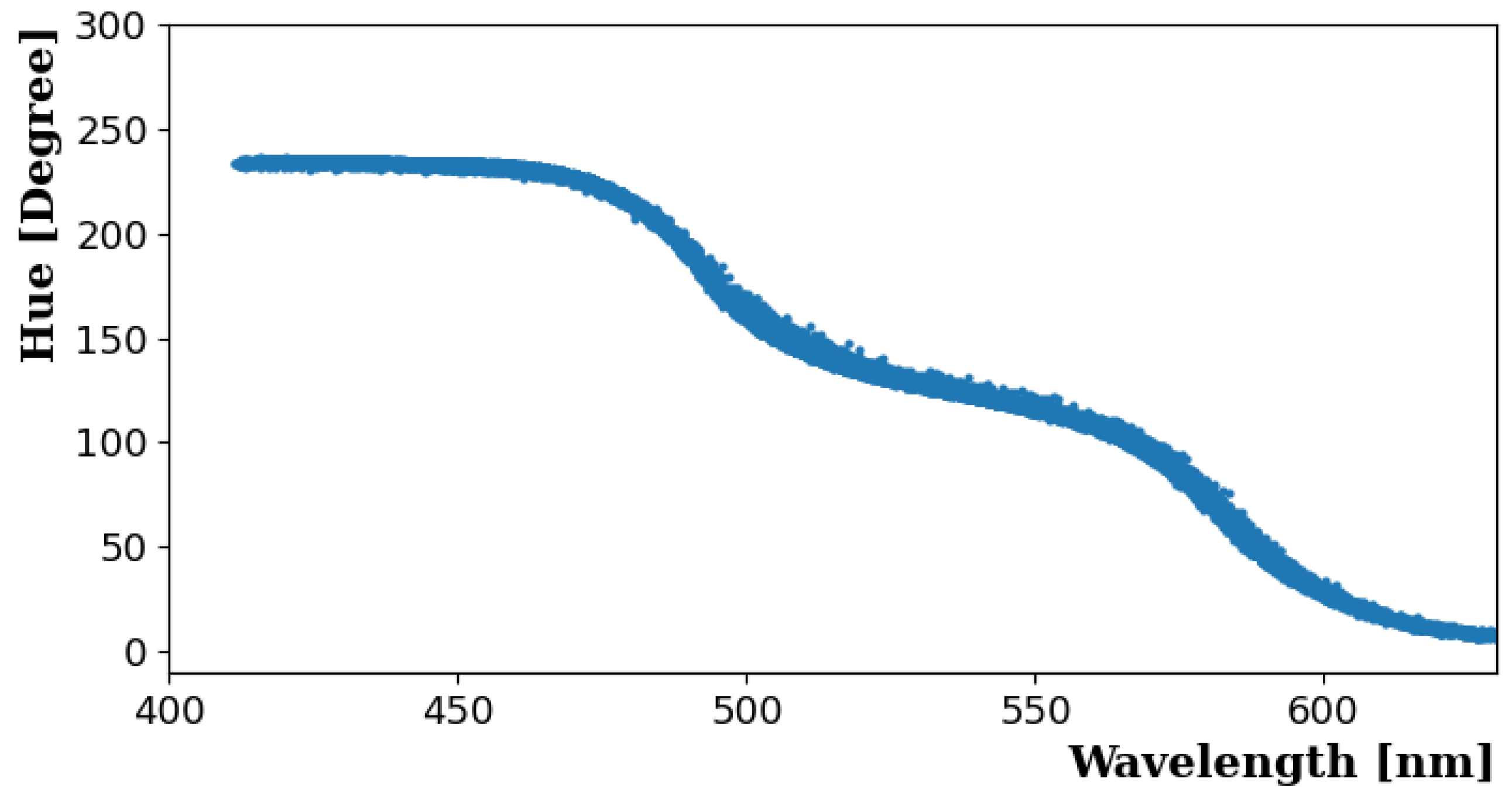

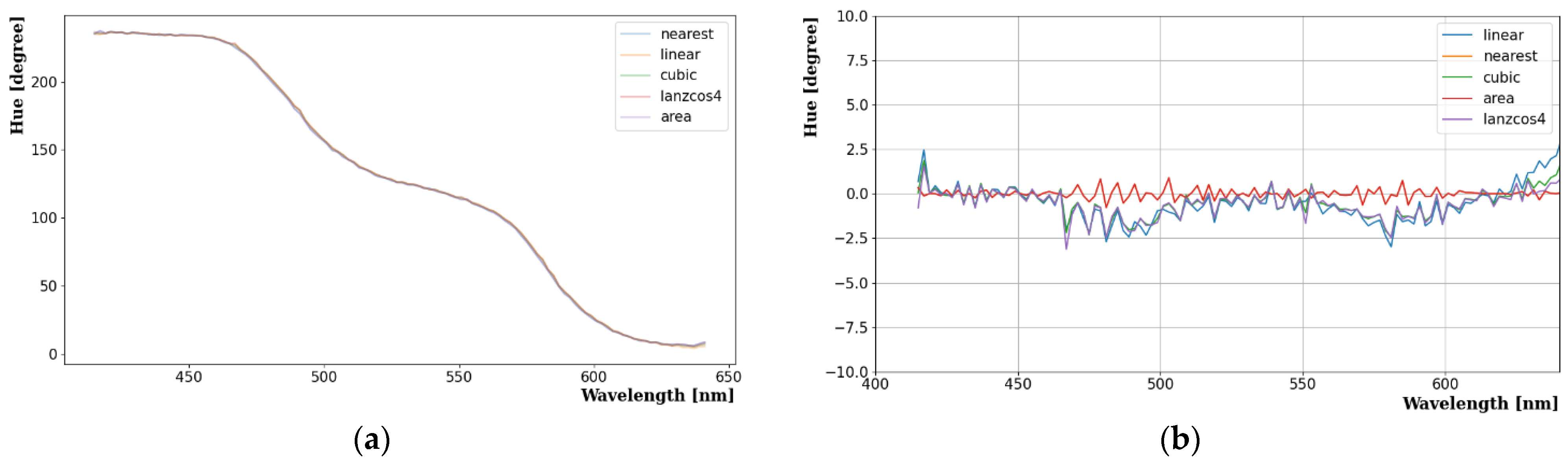
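A raw file stores one intensity per photosite, and each photosite sits behind a single color filter, so every pixel carries only one of R, G or B. The sketch below splits such a mosaic into three sparse color planes. It assumes an RGGB Bayer layout, which is common but is not stated here for this sensor. `bayer_color` and `split_channels` are hypothetical helper names, and the 4x4 `raw` array is made-up data.

```python
# Assumed RGGB Bayer layout: even rows read R G R G ..., odd rows read G B G B ...
def bayer_color(row, col):
    """Return the CFA color ('R', 'G' or 'B') at (row, col) for an RGGB mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def split_channels(mosaic):
    """Split a 2D raw mosaic into three sparse planes; None marks a missing sample."""
    h, w = len(mosaic), len(mosaic[0])
    planes = {c: [[None] * w for _ in range(h)] for c in "RGB"}
    for r in range(h):
        for c in range(w):
            planes[bayer_color(r, c)][r][c] = mosaic[r][c]
    return planes


raw = [  # made-up 4x4 raw values
    [10, 20, 11, 21],
    [22, 30, 23, 31],
    [12, 24, 13, 25],
    [26, 32, 27, 33],
]
planes = split_channels(raw)
print(planes["R"][0])  # [10, None, 11, None]
```

The `None` entries are the missing samples that an interpolation step has to fill in. Half of all photosites are green in this layout, so the green plane is twice as dense as the red or blue one.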

3. H-W Curves with Several Different Interpolation Algorithms

3.1. H-W Curve Result

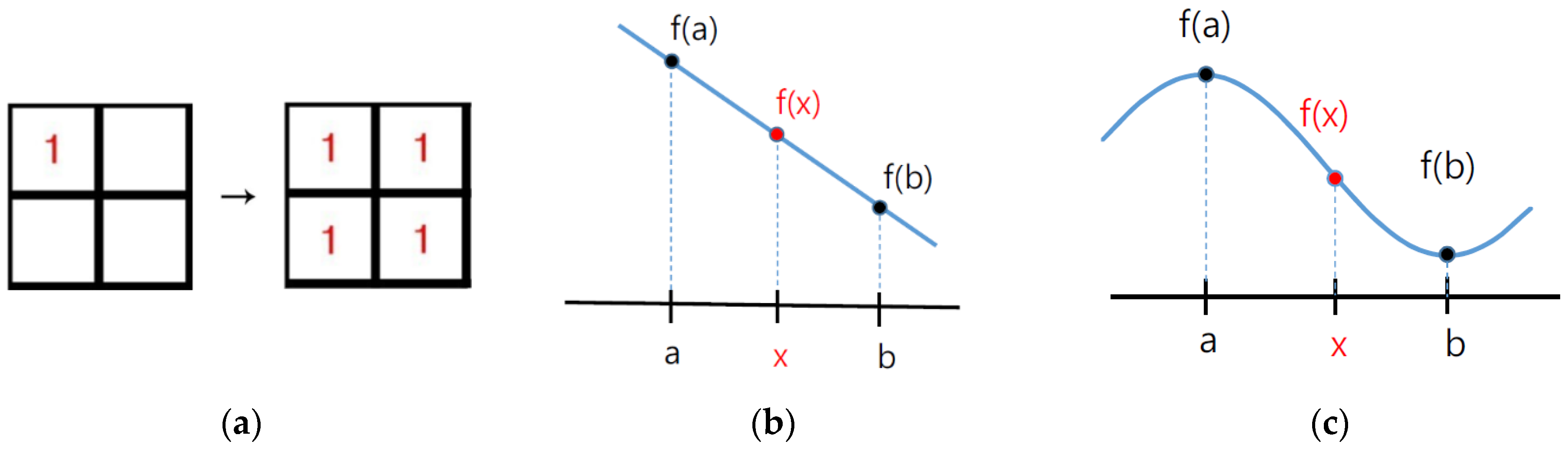
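An H-W curve pairs each known wavelength of the incoming light with the hue read from the reconstructed image. A minimal sketch of the hue step follows, assuming the standard hexcone RGB-to-HSV definition (the one Python's `colorsys` module also implements). `hue_degrees` is a hypothetical helper, and no calibration data from this work is reproduced.

```python
def hue_degrees(r, g, b):
    """Hexcone hue in degrees, range [0, 360), for non-negative R, G, B."""
    mx, mn = max(r, g, b), min(r, g, b)
    delta = mx - mn
    if delta == 0:
        return 0.0  # gray pixel: hue is undefined, 0 by convention
    if mx == r:
        h = ((g - b) / delta) % 6  # red sector; modulo wraps magenta-reds to 300-360
    elif mx == g:
        h = (b - r) / delta + 2    # green sector
    else:
        h = (r - g) / delta + 4    # blue sector
    return 60.0 * h


print(hue_degrees(255, 0, 0), hue_degrees(0, 255, 0), hue_degrees(0, 0, 255))  # 0.0 120.0 240.0
```

Hue does not depend on overall brightness, which makes it a natural quantity to relate to wavelength; note, however, that it is undefined for gray pixels and wraps around between 360° and 0° in the red region.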

3.2. Several Interpolation Algorithms: Nearest Neighbor, Linear, and Cubic

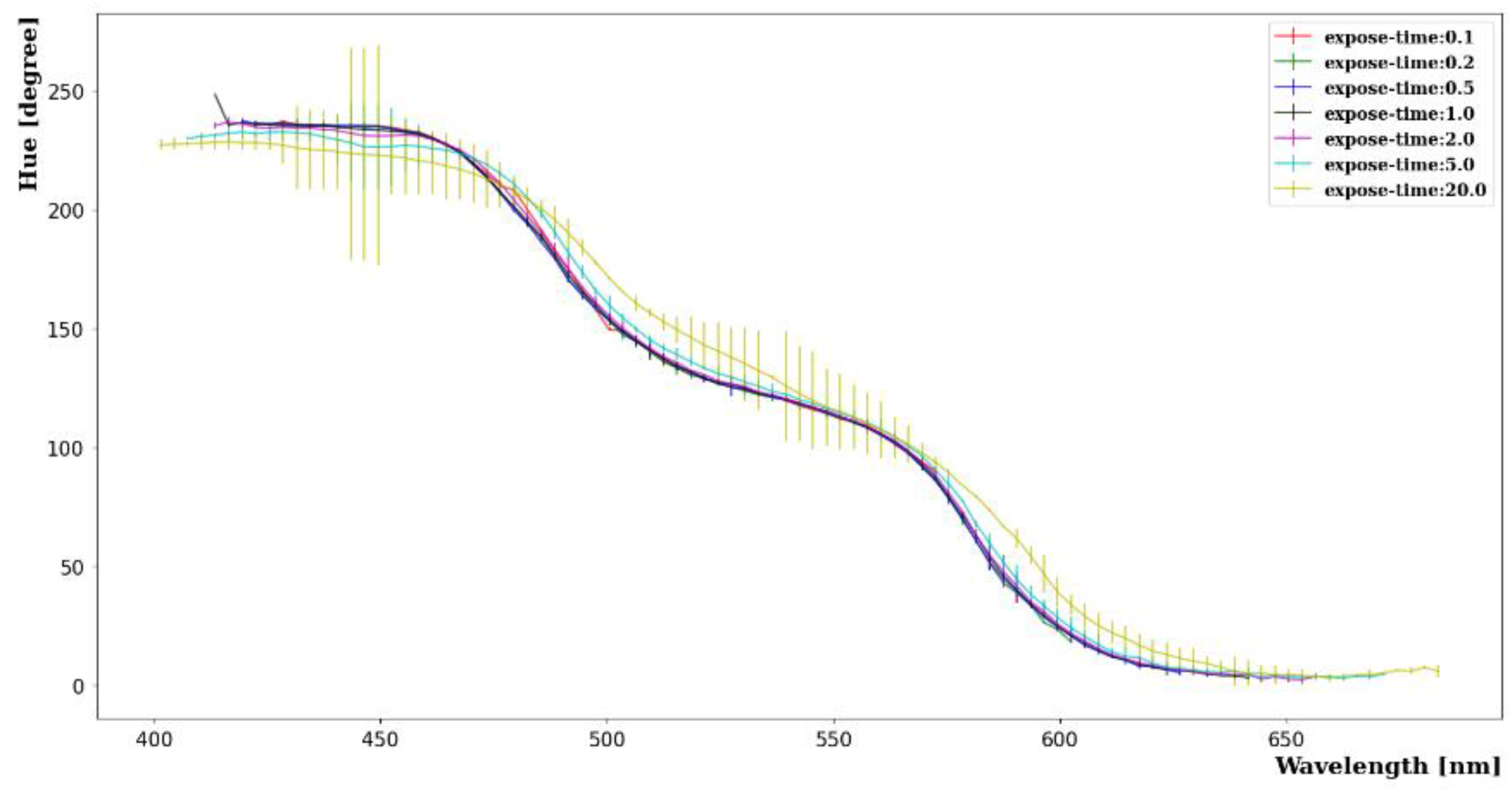
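The three interpolation schemes differ only in the kernel used to estimate a missing sample from its neighbors. The one-dimensional sketch below applies each kernel to a row of known same-color samples at integer positions; two-dimensional versions apply the same kernels along both axes. The cubic kernel shown is Keys' cubic convolution with a = -0.5. This coefficient is an assumption, since other values (for example a = -0.75) are also in common use. All function names are hypothetical.

```python
import math


def _at(samples, k):
    """Return samples[k], clamping k to the valid range (edge replication)."""
    return samples[min(max(k, 0), len(samples) - 1)]


def nearest(samples, x):
    """Nearest neighbor: copy the closest known sample (ties go to the right)."""
    return _at(samples, math.floor(x + 0.5))


def linear(samples, x):
    """Linear: weight the two bracketing samples by their distance to x."""
    i = math.floor(x)
    t = x - i
    return (1 - t) * _at(samples, i) + t * _at(samples, i + 1)


def keys_weight(s, a=-0.5):
    """Keys cubic-convolution kernel W(s); nonzero only for |s| < 2."""
    s = abs(s)
    if s <= 1:
        return (a + 2) * s**3 - (a + 3) * s**2 + 1
    if s < 2:
        return a * s**3 - 5 * a * s**2 + 8 * a * s - 4 * a
    return 0.0


def cubic(samples, x, a=-0.5):
    """Cubic: kernel-weighted sum of the four nearest samples."""
    i = math.floor(x)
    return sum(_at(samples, k) * keys_weight(x - k, a) for k in range(i - 1, i + 3))


row = [0.0, 10.0, 20.0, 10.0, 0.0]
print(nearest(row, 1.5), linear(row, 1.5), cubic(row, 1.5))  # 20.0 15.0 16.25
```

Nearest neighbor keeps original values but produces blocky edges, linear is smooth but softens detail, and cubic follows curvature and can overshoot (16.25 here versus 15.0 for linear). Any such difference between channels shifts their ratios and therefore the computed hue.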

4. Several Intensity Variables Affecting Raw Images

4.1. Exposure Time (or Camera Shutter Speed)

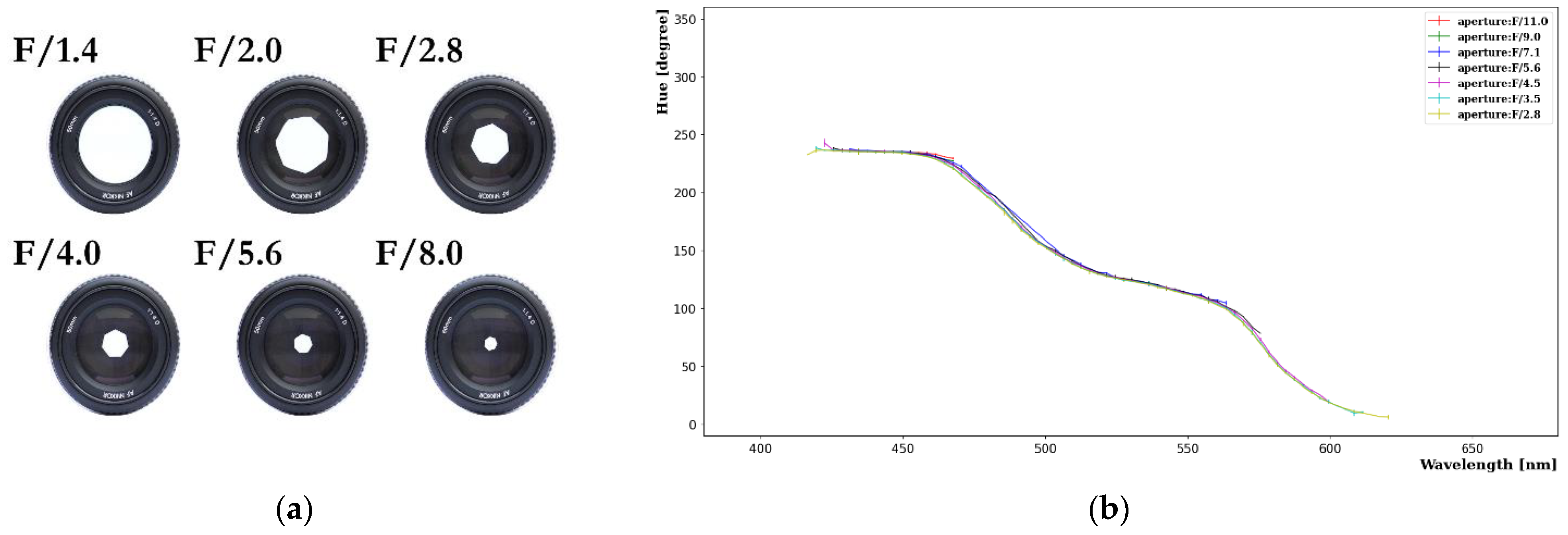
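Because hue depends only on the ratios between R, G and B, scaling all three channels by the same factor, as a longer exposure time does on a linear sensor, should leave hue unchanged until a channel saturates. The sketch below illustrates this under stated assumptions: a perfectly linear, noise-free sensor with a hypothetical 12-bit ceiling (`FULL_SCALE = 4095`) and made-up per-channel signal rates.

```python
import colorsys

FULL_SCALE = 4095  # hypothetical 12-bit raw ceiling


def expose(rates, exposure_time):
    """Idealized linear sensor: counts = rate * time, clipped at full scale."""
    return tuple(min(FULL_SCALE, rate * exposure_time) for rate in rates)


def hue_deg(rgb):
    """Hue angle in degrees of a raw RGB triple, via the standard library."""
    h, _, _ = colorsys.rgb_to_hsv(*(c / FULL_SCALE for c in rgb))
    return 360.0 * h


rates = (300, 120, 30)  # made-up counts per unit exposure time for R, G, B
for t in (1, 10, 20):
    rgb = expose(rates, t)
    print(t, rgb, round(hue_deg(rgb), 1))
# 1 (300, 120, 30) 20.0
# 10 (3000, 1200, 300) 20.0
# 20 (4095, 2400, 600) 30.9
```

At t = 20 the red channel clips while green and blue keep growing, so the hue drifts by roughly 11°. Real sensors add noise and slight nonlinearity near saturation, which this idealized model ignores.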

4.2. Aperture

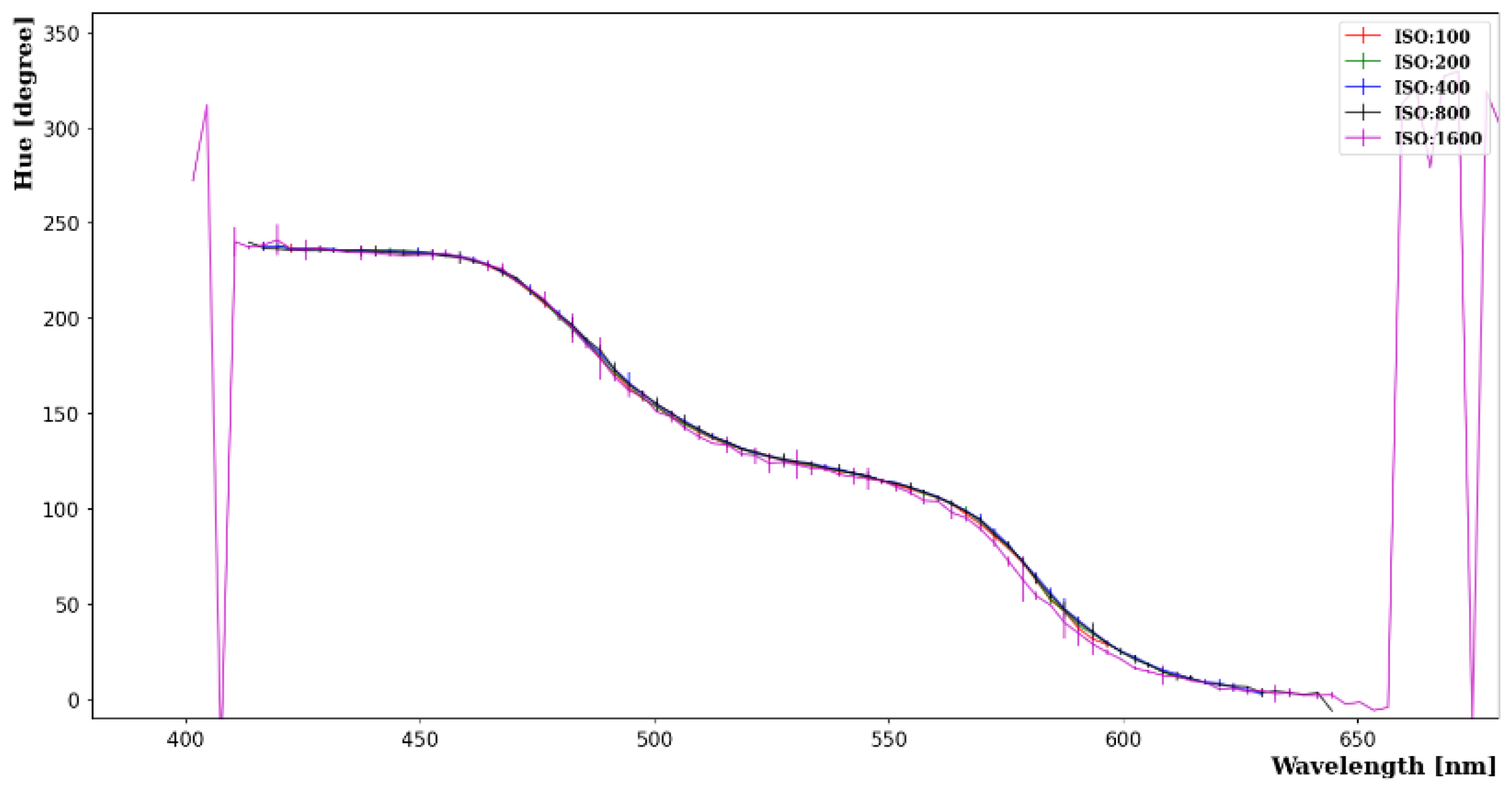
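The f-number N sets how much light reaches the sensor. For a fixed scene the exposure is proportional to t/N², so stopping down by one full stop (N multiplied by √2) halves the light, just as halving the exposure time does. The usual exposure value EV = log2(N²/t) puts both controls on one scale. A short sketch, with hypothetical helper names:

```python
import math


def exposure_value(f_number, shutter_s):
    """EV = log2(N^2 / t); a higher EV means less light reaches the sensor."""
    return math.log2(f_number ** 2 / shutter_s)


def relative_exposure(f_number, shutter_s):
    """Sensor exposure up to a scene-dependent constant: proportional to t / N^2."""
    return shutter_s / f_number ** 2


# One full stop (N * sqrt(2)) at the same shutter speed halves the light.
ratio = relative_exposure(2 * math.sqrt(2), 1 / 60) / relative_exposure(2.0, 1 / 60)
print(round(ratio, 6))                                     # 0.5
print(exposure_value(1.0, 1.0), exposure_value(2.0, 1.0))  # 0.0 2.0
```

Marked f-numbers such as 2.8 and 5.6 are rounded values of powers of √2, so nominal one-stop steps differ slightly from an exact factor of two.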

4.3. ISO Number
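The ISO number enters the standard reflected-light exposure equation of ISO 2720, N²/t = L·S/K, where L is the scene luminance in cd/m², S the ISO speed and K a meter calibration constant (ISO 2720 recommends values between about 10.6 and 13.4). Solving for t shows that doubling S allows half the exposure time at the same aperture. The sketch assumes K = 12.5, a common choice, and a made-up scene luminance; `metered_shutter_time` is a hypothetical name.

```python
def metered_shutter_time(f_number, luminance_cd_m2, iso_speed, k=12.5):
    """Solve N^2 / t = L * S / K for the exposure time t in seconds."""
    return f_number ** 2 * k / (luminance_cd_m2 * iso_speed)


# f/8 with a made-up scene luminance of 400 cd/m^2
for iso in (100, 200, 400):
    print(iso, metered_shutter_time(8.0, 400.0, iso))
# 100 0.02
# 200 0.01
# 400 0.005
```

On the sensor side, a higher ISO setting is typically realized as extra gain applied to the same collected charge, so it raises raw values (and noise) without adding light.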

5. Summary and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, E.-M.; Joo, K.-K.; Park, H.-W. Image Reconstruction and Investigation of Factors Affecting the Hue and Wavelength Relation Using Different Interpolation Algorithms with Raw Data from a CMOS Sensor. Photonics 2023, 10, 1216. https://doi.org/10.3390/photonics10111216
