Media Forensic Considerations of the Usage of Artificial Intelligence Using the Example of DeepFake Detection

Abstract

1. Introduction

- A literature review of regulatory documents on the usage of AI technology;

- Identification of 15 requirements for the use of high-risk AI systems and their implications in the context of DeepFake detection;

- Discussion of the possibilities and challenges of explainability in AI applications, taking into account different audiences of the explanations.

2. The State of the Art

2.1. DeepFakes

2.2. Regulatory Requirements for the Usage of AI

2.3. Explainability in Artificial Intelligence

3. Derived Requirements for the Context of DeepFake Detection

3.1. Selected Definitions from the Context of the AIA

3.1.1. Data Protection (incl. Privacy Protection)

3.1.2. Reliability, Safety, and Run-Time Constraints

3.1.3. Accountability, Autonomy, and Control

3.1.4. Transparency of Algorithms

3.1.5. Algorithm Auditability and Explainability

3.1.6. Usability, User Interface Design and Fairness (Accessibility)

3.1.7. Accuracy, Decision Confidence, and Reproducibility

3.1.8. Fairness (Non-Biased Decisions)

3.1.9. Decision Interpretability and Explainability

3.1.10. Transparency and Contestability of Decisions

3.1.11. Legal Framework Conditions

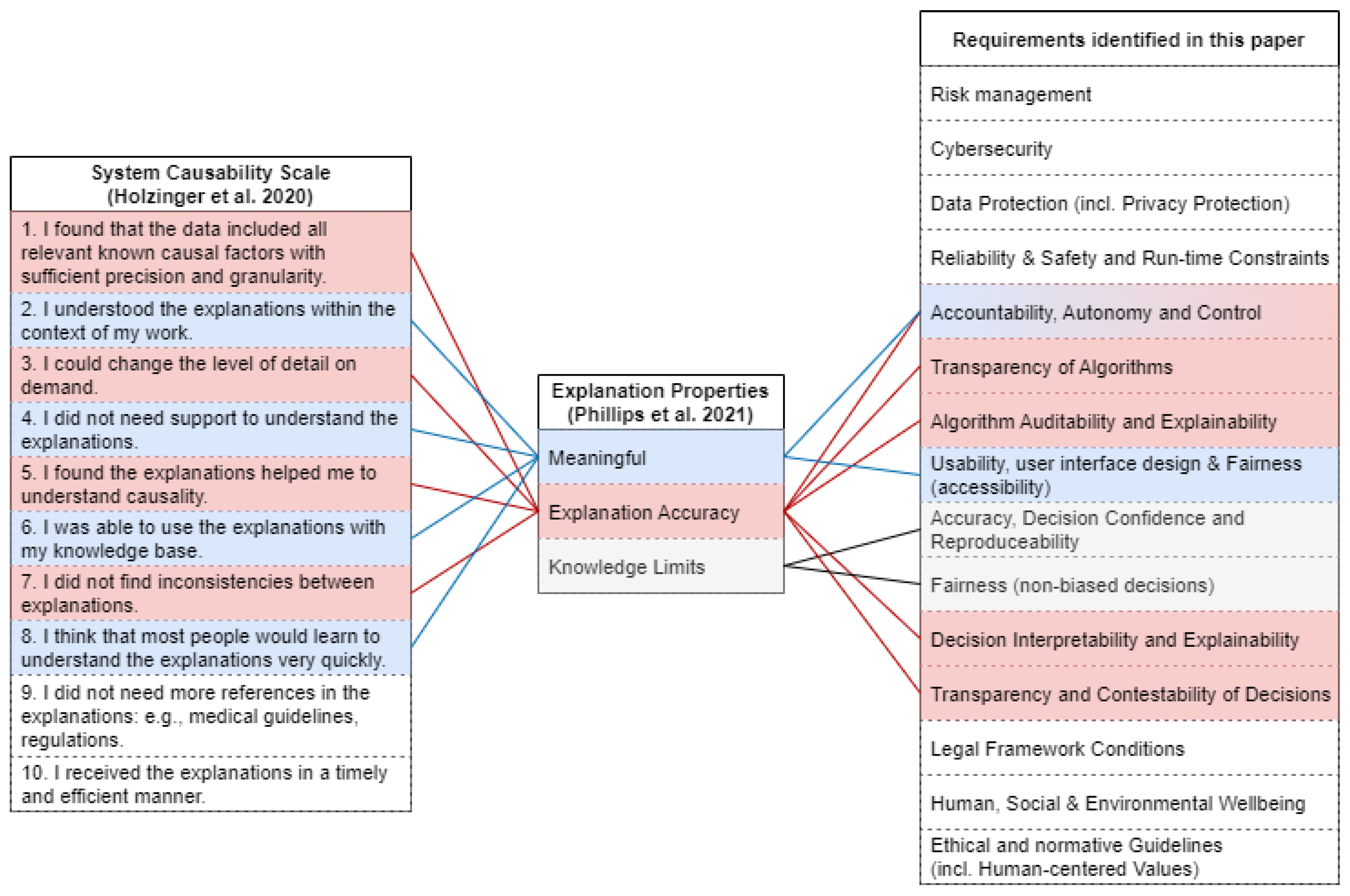

3.2. Projection of the Derived Requirements in the Existing Literature

4. Challenges in AI Requirements for DeepFake Detection

5. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. European Commission. Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts. COM(2021) 206 Final. 2021. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206 (accessed on 14 September 2021).

2. European Parliament. Amendments Adopted by the European Parliament on 14 June 2023 on the Proposal for a Regulation of the European Parliament and of the Council on Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts. COM(2021)0206–C9-0146/2021–2021/0106(COD). 2023. Available online: https://www.europarl.europa.eu/doceo/document/TA-9-2023-0236_EN.html (accessed on 12 September 2023).

3. Rathgeb, C.; Tolosana, R.; Vera-Rodriguez, R.; Busch, C. (Eds.) Handbook of Digital Face Manipulation and Detection: From DeepFakes to Morphing Attacks; Springer: Berlin/Heidelberg, Germany, 2022.

4. Siegel, D.; Krätzer, C.; Seidlitz, S.; Dittmann, J. Media Forensics Considerations on DeepFake Detection with Hand-Crafted Features. J. Imaging 2021, 7, 108.

5. Siegel, D.; Krätzer, C.; Seidlitz, S.; Dittmann, J. Forensic data model for artificial intelligence based media forensics - Illustrated on the example of DeepFake detection. Electron. Imaging 2022, 34, 324-1–324-6.

6. U.S. Congress. Federal Rules of Evidence; Amended by the United States Supreme Court in 2021; Supreme Court of the United States: Washington, DC, USA, 2021.

7. Legal Information Institute. Rule 702. Testimony by Expert Witnesses. 2019. Available online: https://www.law.cornell.edu/rules/fre/rule_702 (accessed on 15 November 2023).

8. Champod, C.; Vuille, J. Scientific Evidence in Europe–Admissibility, Evaluation and Equality of Arms. Int. Comment. Evid. 2011, 9.

9. BSI. Leitfaden IT-Forensik; German Federal Office for Information Security: Bonn, Germany, 2011.

10. Kiltz, S. Data-Centric Examination Approach (DCEA) for a Qualitative Determination of Error, Loss and Uncertainty in Digital and Digitised Forensics. Ph.D. Thesis, Otto-von-Guericke-Universität Magdeburg, Fakultät für Informatik, Magdeburg, Germany, 2020.

11. European Network of Forensic Science Institutes. Best Practice Manual for Digital Image Authentication. ENFSI-BPM-DI-03. 2021. Available online: https://enfsi.eu/wp-content/uploads/2022/12/1.-BPM_Image-Authentication_ENFSI-BPM-DI-03-1.pdf (accessed on 12 January 2023).

12. Siegel, D.; Kraetzer, C.; Dittmann, J. Joining of Data-driven Forensics and Multimedia Forensics for Deepfake Detection on the Example of Image and Video Data. In Proceedings of the SECURWARE 2023, The Seventeenth International Conference on Emerging Security Information, Systems and Technologies, Porto, Portugal, 25–29 September 2023; IARIA: Wilmington, DE, USA, 2023; pp. 43–51.

13. European Union Agency For Cybersecurity. Remote Identity Proofing: Attacks & Countermeasures. Technical Report. 2022. Available online: https://www.enisa.europa.eu/publications/remote-identity-proofing-attacks-countermeasures (accessed on 20 November 2023).

14. European Commission. Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-Assessment. 2020. Available online: https://digital-strategy.ec.europa.eu/en/library/assessment-list-trustworthy-artificial-intelligence-altai-self-assessment (accessed on 9 March 2021).

15. Wing, J.M. Trustworthy AI. Commun. ACM 2021, 64, 64–71.

16. Phillips, P.J.; Hahn, C.A.; Fontana, P.C.; Yates, A.N.; Greene, K.; Broniatowski, D.A.; Przybocki, M.A. Four principles of Explainable Artificial Intelligence; National Institute of Standards and Technology (NIST): Gaithersburg, MD, USA, 2021.

17. UNICRI, INTERPOL. Toolkit for Responsible AI Innovation in Law Enforcement: Principles for Responsible AI Innovation. Technical Report. 2023. Available online: https://unicri.it/sites/default/files/2023-06/02_Principles%20for%20Responding%20AI%20Innovation.pdf (accessed on 8 February 2024).

18. Wahlster, W.; Winterhalter, C. German Standardization Roadmap on Artificial Intelligence; DIN DKE: Frankfurt am Main, Germany, 2020.

19. Berghoff, C.; Biggio, B.; Brummel, E.; Danos, V.; Doms, T.; Ehrich, H.; Gantevoort, T.; Hammer, B.; Iden, J.; Jacob, S.; et al. Towards Auditable AI Systems–Current Status and Future Directions; German Federal Office for Information Security: Bonn, Germany, 2021. Available online: https://www.bsi.bund.de/SharedDocs/Downloads/EN/BSI/KI/Towards_Auditable_AI_Systems.html (accessed on 14 September 2021).

20. European Commission. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation). 2016. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:02016R0679-20160504 (accessed on 12 January 2023).

21. Kraetzer, C.; Siegel, D.; Seidlitz, S.; Dittmann, J. Process-Driven Modelling of Media Forensic Investigations-Considerations on the Example of DeepFake Detection. Sensors 2022, 22, 3137.

22. Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2021, 23, 18.

23. Holzinger, A.; Carrington, A.; Müller, H. Measuring the Quality of Explanations: The System Causability Scale (SCS). KI-Künstliche Intell. 2020, 34, 193–198.

24. Likert, R. A technique for the measurement of attitudes. Arch. Psychol. 1932, 22, 55.

25. Rosenfeld, A. Better Metrics for Evaluating Explainable Artificial Intelligence. In Proceedings of the AAMAS ’21: 20th International Conference on Autonomous Agents and Multiagent Systems, Virtual Event, UK, 3–7 May 2021; Dignum, F., Lomuscio, A., Endriss, U., Nowé, A., Eds.; ACM: New York, NY, USA, 2021; pp. 45–50.

26. Jüttner, V.; Grimmer, M.; Buchmann, E. ChatIDS: Explainable Cybersecurity Using Generative AI. In Proceedings of the SECURWARE 2023, The Seventeenth International Conference on Emerging Security Information, Systems and Technologies, Porto, Portugal, 25–29 September 2023; IARIA: Wilmington, DE, USA, 2023; pp. 7–10.

27. Lapuschkin, S.; Binder, A.; Montavon, G.; Müller, K.R.; Samek, W. The LRP Toolbox for Artificial Neural Networks. J. Mach. Learn. Res. 2016, 17, 1–5.

28. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

29. Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

30. Chattopadhyay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized Gradient-Based Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018, Lake Tahoe, NV, USA, 12–15 March 2018; IEEE Computer Society: Washington, DC, USA, 2018; pp. 839–847.

31. Gossen, F.; Margaria, T.; Steffen, B. Towards Explainability in Machine Learning: The Formal Methods Way. IT Prof. 2020, 22, 8–12.

32. Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

33. European Commission. Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Regions: Building Trust in Human-Centric Artificial Intelligence. COM(2019) 168 Final. 2019. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52019DC0168&qid=1707400044663 (accessed on 8 February 2024).

34. European Commission. Independent High-Level Expert Group on Artificial Intelligence Set Up by the European Commission. Ethics Guidelines for Trustworthy AI. 2019. Available online: https://ec.europa.eu/newsroom/dae/redirection/document/60419 (accessed on 9 March 2021).

35. Krätzer, C.; Siegel, D.; Seidlitz, S.; Dittmann, J. Human-in-control and quality assurance aspects for a benchmarking framework for DeepFake detection models. Electron. Imaging 2023, 35, 379-1–379-6.

36. Unesco. Draft Text of the Recommendation on the Ethics of Artificial Intelligence. In Proceedings of the Intergovernmental Meeting of Experts (Category II) Related to a Draft Recommendation on the Ethics of Artificial Intelligence, Online, 21–25 June 2021. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000377897 (accessed on 23 November 2021).

37. Roccetti, M.; Delnevo, G.; Casini, L.; Salomoni, P. A Cautionary Tale for Machine Learning Design: Why we Still Need Human-Assisted Big Data Analysis. Mob. Networks Appl. 2020, 25, 1075–1083.

38. Cui, Y.; Koppol, P.; Admoni, H.; Niekum, S.; Simmons, R.; Steinfeld, A.; Fitzgerald, T. Understanding the Relationship between Interactions and Outcomes in Human-in-the-Loop Machine Learning. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI-21, Montreal, QC, Canada, 19–27 August 2021; Zhou, Z.H., Ed.; International Joint Conferences on Artificial Intelligence Organization: Online, 2021; pp. 4382–4391.

39. Jena, S.; Sundarrajan, S.; Meena, A.; Chandavarkar, B.R. Human-in-the-Loop Control and Security for Intelligent Cyber-Physical Systems (CPSs) and IoT. In Proceedings of the Advances in Data Science and Artificial Intelligence, Patna, India, 23–24 April 2022; Misra, R., Kesswani, N., Rajarajan, M., Veeravalli, B., Brigui, I., Patel, A., Singh, T.N., Eds.; Springer: Cham, Switzerland, 2023; pp. 393–403.

40. Chen, C.; Li, O.; Tao, D.; Barnett, A.; Rudin, C.; Su, J. This Looks Like That: Deep Learning for Interpretable Image Recognition. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R., Eds.; 2019; pp. 8928–8939.

41. Leventi-Peetz, A.; Östreich, T. Deep Learning Reproducibility and Explainable AI (XAI); Federal Office for Information Security (BSI) Germany: Bonn, Germany, 2022. Available online: https://www.bsi.bund.de/SharedDocs/Downloads/EN/BSI/KI/Deep_Learning_Reproducibility_and_Explainable_AI.html (accessed on 29 November 2023).

42. Liao, Q.V.; Gruen, D.; Miller, S. Questioning the AI: Informing Design Practices for Explainable AI User Experiences. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–15.

| Requirements | Descriptions |
|---|---|
| Risk management | The risk management system shall consist of a continuous iterative process run throughout the entire life cycle of a high-risk AI system, requiring regular systematic updating. It shall identify, estimate and evaluate current and arising risks. |
| Cybersecurity | High-risk AI systems shall be designed, implemented and configured in such a way that they achieve an appropriate level of cybersecurity (i.e., resilience against targeted attacks) and perform consistently in this respect throughout their life cycle. |
| Data Protection (incl. Privacy Protection) | AI systems should respect and uphold privacy rights and corresponding data protection regulation and ensure the confidentiality, integrity, authenticity and non-repudiation of the data. This particularly includes training, validation and testing data sets containing person-related information. A more detailed description is given in Section 3.1.1. |
| Reliability and Safety and Run-time Constraints | AI systems should reliably operate in accordance with their intended purpose throughout their life cycle. The defined run-time constraints should be kept throughout the whole life cycle, even under potentially significantly growing workloads. A more detailed description is given in Section 3.1.2. |
| Accountability, Autonomy and Control | Those responsible for the various phases of the AI system life cycle should be identifiable and accountable for the outcomes of the system, and human oversight of AI systems should be enabled. A more detailed description is given in Section 3.1.3. |
| Transparency of Algorithms | The decision algorithm of the AI system and its evaluation criteria and results should be disclosed, either to the general public or to a specific audience (e.g., certified auditors). A more detailed description is given in Section 3.1.4. |
| Algorithm Auditability and Explainability | There should exist methods that enable third parties to examine and review the behavior of an algorithm and thereby allow them to identify what the AI system is doing and why. This may include detailed descriptions of the system’s architecture and processes, trained models (incl. the training data) and input data. A more detailed description is given in Section 3.1.5. |
| Usability, User Interface Design and Fairness (accessibility) | The user interface design for an AI system should enable understandability of decisions and explanations. AI systems should in their usage be inclusive and accessible (independent of disabilities), and should not involve or result in unfair discrimination against individuals, communities, or groups. A more detailed description is given in Section 3.1.6. |
| Accuracy, Decision Confidence and Reproducibility | AI systems must have a known (or potential) and acceptable error rate. For each decision, a level of decision confidence in its result should be communicated. A more detailed description is given in Section 3.1.7. |
| Fairness (non-biased decisions) | Results of AI system usage (i.e., decisions) should not involve or result in unfair discrimination against individuals, communities, or groups. A more detailed description is given in Section 3.1.8. |
| Decision Interpretability and Explainability | Every decision made by the AI system must be interpretable and explainable, together with a confidence estimate for this decision. A more detailed description is given in Section 3.1.9. |
| Transparency and Contestability of Decisions | There should be transparency and responsible disclosure to ensure people know when they are being significantly impacted by an AI system and can find out when an AI system is engaging with them. When an AI system significantly impacts a person, community, group, or environment, there should be a timely process to allow people to challenge the use or output of the system. A more detailed description is given in Section 3.1.10. |
| Legal Framework Conditions | The usage of AI systems is governed by national and international legislation. For each AI application, the corresponding legal situation has to be assessed and considered in the design, implementation, configuration, and operation of the system. Adherence to these requirements has to be documented. A more detailed description is given in Section 3.1.11. |
| Human, Social and Environmental Well-being | AI systems should benefit individuals, society, and the environment. |
| Ethical and Normative Guidelines (incl. human-centered values) | Amongst other issues, AI systems should respect human rights, diversity, and the autonomy of individuals. |
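The accuracy requirement in the table above asks for a known error rate and for a confidence level communicated with each decision. The following is a minimal sketch of how a detector output could carry both. It assumes a hypothetical binary DeepFake detector whose score is the estimated probability that a sample is fake; the names `Decision`, `decide`, and `error_rate`, as well as the default threshold of 0.5, are illustrative assumptions and do not come from the source.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Decision:
    """One detector decision together with its communicated confidence."""
    label: str         # "fake" or "real"
    confidence: float  # confidence in the reported label


def decide(score: float, threshold: float = 0.5) -> Decision:
    """Map a detector score (assumed: probability of 'fake') to a decision."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if score >= threshold:
        return Decision("fake", score)
    # For a 'real' decision, report the complementary probability.
    return Decision("real", 1.0 - score)


def error_rate(decisions: Sequence[Decision], truth: Sequence[str]) -> float:
    """Fraction of decisions whose label disagrees with the ground truth."""
    if not decisions or len(decisions) != len(truth):
        raise ValueError("need equally long, non-empty sequences")
    wrong = sum(d.label != t for d, t in zip(decisions, truth))
    return wrong / len(decisions)


if __name__ == "__main__":
    scores = [0.9, 0.2, 0.6]            # hypothetical detector outputs
    truth = ["fake", "real", "real"]    # hypothetical ground truth
    decisions = [decide(s) for s in scores]
    for d in decisions:
        print(d.label, round(d.confidence, 2))
    print("error rate:", error_rate(decisions, truth))
```

Reporting the error rate on a labelled evaluation set and attaching a confidence to each single decision keeps the two parts of the requirement separate: the first describes the system, the second describes one result.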

| Category | Requirements | AIA [1,2] | BSI [18,19] | Unesco [36] | NIST [16] | Interpol [17] |
|---|---|---|---|---|---|---|
| IT Systems, Protocols and Compliance | Risk management | Risk management system (Art. 9) | - | Proportionality and Do No Harm | - | - |
| | Cybersecurity | Accuracy, robustness and cybersecurity (Art. 15) | Security | - | Security (resilience) | - |
| | Data Protection (incl. Privacy Protection) | Data and data governance (Art. 10) | Data protection | Right to Privacy, and Data Protection | Privacy | Privacy |
| | Reliability and Safety and Run-time Constraints | - | Reliability; Safety | - | Reliability; Robustness; Safety | Robustness and Safety |
| | Accountability, Autonomy and Control | Human Oversight (Art. 14) | Autonomy and control | Responsibility and accountability | Accountability | Human autonomy (i.e., Human Control and Oversight, Human Agency); Accountability |
| Algorithms and their training | Transparency of Algorithms | Technical documentation (Art. 11) | Transparency and interpretability | - | Transparency | Transparency |
| | Algorithm Auditability and Explainability | Technical documentation (Art. 11) | - | - | Explainability | Traceability and Auditability |
| UI Usability | Usability, User Interface Design and Fairness (accessibility) | - | - | Fairness and non-discrimination | - | Fairness (i.e., Diversity and Accessibility) |
| Decisions | Accuracy, Decision Confidence and Reproducibility | Accuracy, robustness and cybersecurity (Art. 15) | - | - | Accuracy | Accuracy |
| | Fairness (non-biased decisions) | Data and data governance (Art. 10) | Fairness | Fairness and non-discrimination | Fairness; Mitigation of harmful bias | Fairness (i.e., Equality and Non-discrimination, Protecting Vulnerable Groups) |
| | Decision Interpretability and Explainability | Human Oversight (Art. 14) | - | Human oversight and determination; Transparency and explainability | Explainability; Interpretability | Explainability |
| | Transparency and Contestability of Decisions | Transparency and provision of information to users (Art. 13) | - | Transparency and explainability | Transparency | Fairness (i.e., Contestability and Redress) |
| External Influences | Legal Framework Conditions | The document itself states legal conditions for EU. Technical documentation (Art. 11) and record keeping (Art. 12) | Legal framework conditions | - | - | Lawfulness (i.e., Legitimacy, Necessity, Proportionality) |
| | Human, Social and Environmental Well-being | - | Social requirements | Sustainability; Awareness and literacy | - | Minimization of Harm (i.e., Human and Environmental Well-being, Efficiency) |
| | Ethical and Normative Guidelines (incl. Human-centered Values) | - | Ethical and normative guidelines | Multi-stakeholder and adaptive governance and collaboration | - | - |
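The projection table above can also be read as a lookup structure: for each requirement, which regulatory document addresses it and under which term. The following is a minimal sketch of that idea, using only three rows of the table as sample data. The names `SOURCES`, `PROJECTION`, `missing_sources`, and `requirements_covered_by` are hypothetical, and entries marked "-" in the table are represented by simply leaving the key out.

```python
from typing import Dict, List

# Column order of the regulatory documents in the projection table.
SOURCES = ("AIA", "BSI", "Unesco", "NIST", "Interpol")

# Three sample rows; a "-" entry in the table is modelled as a missing key.
PROJECTION: Dict[str, Dict[str, str]] = {
    "Risk management": {
        "AIA": "Risk management system (Art. 9)",
        "Unesco": "Proportionality and Do No Harm",
    },
    "Cybersecurity": {
        "AIA": "Accuracy, robustness and cybersecurity (Art. 15)",
        "BSI": "Security",
        "NIST": "Security (resilience)",
    },
    "Transparency of Algorithms": {
        "AIA": "Technical documentation (Art. 11)",
        "BSI": "Transparency and interpretability",
        "NIST": "Transparency",
        "Interpol": "Transparency",
    },
}


def missing_sources(requirement: str) -> List[str]:
    """Documents that have no matching term for the given requirement."""
    covered = PROJECTION[requirement]
    return [source for source in SOURCES if source not in covered]


def requirements_covered_by(source: str) -> List[str]:
    """Requirements for which the given document provides a matching term."""
    return [req for req, terms in PROJECTION.items() if source in terms]


if __name__ == "__main__":
    print(missing_sources("Risk management"))
    print(requirements_covered_by("Unesco"))
```

A structure like this makes coverage gaps easy to query, for example when checking which requirements rely on a single document only.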

| Requirement | New ID / SCS ID | SCS Aspects as Discussed in Holzinger et al. (2020) [23] or New Criteria |
|---|---|---|
| Risk management | | |
| Cybersecurity | | |
| Data Protection (incl. Privacy Protection) | | |
| Reliability and Safety and Run-time Constraints | 10 | I received the explanations in a timely and efficient manner. |
| Accountability, Autonomy and Control | 2 | I understood the explanations within the context of my work. |
| | 3 | I could change the level of detail on demand. |
| | 4 | I did not need support to understand the explanations. |
| | 5 | I found the explanations helped me to understand causality. |
| | 7 | I did not find inconsistencies between explanations. |
| | 10 | I received the explanations in a timely and efficient manner. |
| Transparency of Algorithms | new | Is the algorithm published? |
| | new | Is the algorithm accepted by the corresponding community? |
| Algorithm Auditability and Explainability | 4 | I did not need support to understand the explanations. |
| Usability, User Interface Design, and Fairness (accessibility) | 2 | I understood the explanations within the context of my work. |
| | 3 | I could change the level of detail on demand. |
| | 4 | I did not need support to understand the explanations. |
| | 8 | I think that most people would learn to understand the explanations very quickly. |
| | 9 | I did not need more references in the explanations: e.g., medical guidelines, regulations. |
| Accuracy, Decision Confidence, and Reproducibility | new | I repeated the processing on the same data and came to the same results and conclusions. |
| Fairness (non-biased decisions) | | |
| Decision Interpretability and Explainability (operator’s perspective) | 1 | I found that the data included all relevant known causal factors with sufficient precision and granularity. |
| | 2 | I understood the explanations within the context of my work. |
| | 3 | I could change the level of detail on demand. |
| | 4 | I did not need support to understand the explanations. |
| | 5 | I found the explanations helped me to understand causality. |
| | 6 | I was able to use the explanations with my knowledge base. |
| | 7 | I did not find inconsistencies between explanations. |
| | 8 | I think that most people would learn to understand the explanations very quickly. |
| | 10 | I received the explanations in a timely and efficient manner. |
| | new | I would be able to explain the decision (and its reason(s)) to another operator. |
| | new | I would be able to explain the decision (and its reason(s)) to an affected entity. |
| Transparency and Contestability of Decisions (affected entity/-ies’ perspective) | 1 | I found that the data included all relevant known causal factors with sufficient precision and granularity. |
| | 3 | I could change the level of detail on demand. |
| | 4 | I did not need support to understand the explanations. |
| | 5 | I found the explanations helped me to understand causality. |
| | 8 | I think that most people would learn to understand the explanations very quickly. |
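The statements in the table above are questionnaire items of the kind rated on a Likert scale [24]. As a sketch of how the ratings for one requirement could be aggregated, the example below sums the ratings and divides by the maximum attainable sum. It assumes a five-point scale (1 = strongly disagree, 5 = strongly agree) and treats the criteria marked "new" like any other item; both points, as well as the name `normalized_score` and the sample ratings, are assumptions made for illustration and not a procedure stated in the source.

```python
from typing import Mapping

LIKERT_MIN = 1  # assumed: "strongly disagree"
LIKERT_MAX = 5  # assumed: "strongly agree"


def normalized_score(ratings: Mapping[str, int]) -> float:
    """Sum of ratings divided by the maximum attainable sum.

    With a five-point scale the result lies in [0.2, 1.0].
    """
    if not ratings:
        raise ValueError("at least one rated item is required")
    for item, rating in ratings.items():
        if not LIKERT_MIN <= rating <= LIKERT_MAX:
            raise ValueError(f"rating for {item!r} is outside the scale")
    return sum(ratings.values()) / (LIKERT_MAX * len(ratings))


if __name__ == "__main__":
    # Hypothetical ratings for the items listed under
    # "Accountability, Autonomy and Control" (IDs 2, 3, 4, 5, 7, 10).
    accountability = {
        "SCS-2": 5,
        "SCS-3": 3,
        "SCS-4": 4,
        "SCS-5": 4,
        "SCS-7": 5,
        "SCS-10": 3,
    }
    print(round(normalized_score(accountability), 2))
```

Normalizing by the number of items keeps scores comparable between requirements that are covered by different numbers of statements.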

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Siegel, D.; Kraetzer, C.; Seidlitz, S.; Dittmann, J. Media Forensic Considerations of the Usage of Artificial Intelligence Using the Example of DeepFake Detection. J. Imaging 2024, 10, 46. https://doi.org/10.3390/jimaging10020046
