Analytical Study of Colour Spaces for Plant Pixel Detection

Abstract

1. Introduction

2. Methods

2.1. Colour Representation

2.1.1.

2.1.2. Normalized
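This sketch assumes the heading refers to normalized (chromaticity) rgb. In that representation, each channel is divided by the pixel's intensity sum, so r = R/(R+G+B), g = G/(R+G+B) and b = B/(R+G+B). The sketch uses NumPy. The function name `normalized_rgb` and the small `eps` guard for black pixels are illustrative choices, not details taken from the study.

```python
import numpy as np


def normalized_rgb(image: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Convert an H x W x 3 RGB array to normalized (chromaticity) rgb.

    Each channel is divided by the pixel's sum R + G + B, so r + g + b = 1
    for every non-black pixel. `eps` is an assumed guard that maps pure-black
    pixels to (0, 0, 0) instead of dividing by zero.
    """
    rgb = image.astype(np.float64)
    total = rgb.sum(axis=-1, keepdims=True)
    return rgb / np.maximum(total, eps)


# Hypothetical pixels: a leaf green and a darker pixel of the same hue.
pixels = np.array([[[40, 120, 40], [20, 60, 20]]], dtype=np.uint8)
chroma = normalized_rgb(pixels)
print(chroma)  # both pixels map to the same (r, g, b)
```

Dividing by the sum removes the overall intensity scale, so a shaded and a brighter pixel of the same hue map to the same (r, g, b). The trade-off is that very dark, noisy pixels become unstable, and the `eps` guard only partly mitigates this.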

2.1.3.

2.1.4.

2.1.5. CIE-Lab
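Below is a minimal sketch of the standard sRGB to CIE-Lab conversion. It assumes 8-bit sRGB input and the D65 reference white; the conversion settings actually used in the study are not given in this excerpt. The code first linearizes the gamma-encoded values, then maps them to CIE XYZ, and finally applies the CIE-Lab nonlinearity. The function names are illustrative.

```python
import numpy as np

# Standard linear-sRGB -> CIE XYZ matrix and D65 reference white.
SRGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
D65_WHITE = np.array([0.95047, 1.00000, 1.08883])


def lab_f(t: np.ndarray) -> np.ndarray:
    """CIE-Lab companding: cube root above (6/29)^3, linear segment below."""
    delta = 6.0 / 29.0
    return np.where(t > delta**3, np.cbrt(t), t / (3.0 * delta**2) + 4.0 / 29.0)


def srgb_to_lab(image: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 array of 8-bit sRGB values to CIE-Lab (D65)."""
    c = image.astype(np.float64) / 255.0
    # Undo the sRGB gamma encoding to get linear-light RGB.
    linear = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    xyz = linear @ SRGB_TO_XYZ.T  # per-pixel matrix product
    fx, fy, fz = np.moveaxis(lab_f(xyz / D65_WHITE), -1, 0)
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return np.stack([L, a, b], axis=-1)


# White, black and a hypothetical "forest green" pixel.
pixels = np.array([[[255, 255, 255], [0, 0, 0], [34, 139, 34]]], dtype=np.uint8)
lab = srgb_to_lab(pixels)
print(np.round(lab, 2))
```

In practice, a library routine such as `skimage.color.rgb2lab` (which defaults to a D65 illuminant) performs the same conversion. The hand-written version simply makes each step explicit.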

2.2. Evaluation of Colour Space Representations

2.2.1. EMD on GMMs
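This section heading refers to the Earth Mover's Distance (EMD) between Gaussian mixture models (GMMs) of plant and background pixel colours. The sketch below makes two assumptions. First, each mixture component is treated as a point mass at its mean, carrying its mixture weight. Second, the ground distance is the Euclidean distance between component means, and covariances are ignored. The ground distance actually used in the study is not specified in this excerpt, and a covariance-aware distance would be a natural alternative. Under these assumptions, the EMD is the optimal value of a small transportation linear program, solved here with SciPy's `linprog`. The function name `gmm_emd` and the example mixtures are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def gmm_emd(weights_p, means_p, weights_q, means_q) -> float:
    """EMD between two GMMs, each reduced to weighted point masses at its means.

    weights_*: shape (k,) mixture weights; means_*: shape (k, d) component means.
    Assumption: the ground distance is Euclidean between component means, and
    covariances are ignored in this sketch.
    """
    wp = np.asarray(weights_p, dtype=float)
    wq = np.asarray(weights_q, dtype=float)
    mp = np.asarray(means_p, dtype=float)
    mq = np.asarray(means_q, dtype=float)
    wp, wq = wp / wp.sum(), wq / wq.sum()  # both mixtures carry unit total mass
    m, n = len(wp), len(wq)

    # Ground-distance matrix d[i, j] between component means.
    cost = np.linalg.norm(mp[:, None, :] - mq[None, :, :], axis=-1)

    # Transportation LP over flows f[i, j] >= 0, flattened row-major:
    #   sum_j f[i, j] = wp[i]  (component i of P ships all its weight)
    #   sum_i f[i, j] = wq[j]  (component j of Q receives all its weight)
    a_eq = np.zeros((m + n, m * n))
    for i in range(m):
        a_eq[i, i * n:(i + 1) * n] = 1.0
    for j in range(n):
        a_eq[m + j, j::n] = 1.0
    b_eq = np.concatenate([wp, wq])

    res = linprog(cost.ravel(), A_eq=a_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    # Total flow equals 1, so the minimal cost is already the normalized EMD.
    return float(res.fun)


# Hypothetical mixtures in RGB space (values are illustrative, not study data).
plant = ([0.7, 0.3], [[60, 140, 50], [90, 170, 70]])
background = ([1.0], [[120, 110, 100]])
print(gmm_emd(*plant, *background))
```

A larger EMD means that more "mass" must travel further to turn one distribution into the other. Under this sketch's assumptions, that corresponds to better-separated plant and background colour distributions in the chosen colour space.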

2.2.2. Two-Class Variance Ratio
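Below is a minimal sketch of a two-class variance ratio for one colour channel. It follows the formulation of Collins, Liu and Leordeanu (2005) for selecting discriminative tracking features, and it is not necessarily the exact variant used in this study. The procedure has three steps:

1. Build normalized histograms p (plant) and q (background).
2. Form the per-bin log-likelihood ratio L = log(max(p, δ) / max(q, δ)).
3. Compute VR = var(L; (p+q)/2) / (var(L; p) + var(L; q)), where var(L; a) = Σ a_i L_i² − (Σ a_i L_i)².

Larger values indicate a channel that separates the two classes better. The bin count, value range and δ below are assumed defaults, not settings reported in this excerpt.

```python
import numpy as np


def variance_ratio(fg_values, bg_values, bins=32, value_range=(0, 256), delta=1e-3):
    """Two-class variance ratio of one colour channel.

    fg_values / bg_values: 1-D arrays of that channel's values for plant
    (foreground) and background pixels. bins, value_range and delta are
    assumed defaults, not settings from the study.
    """
    p, _ = np.histogram(fg_values, bins=bins, range=value_range)
    q, _ = np.histogram(bg_values, bins=bins, range=value_range)
    p = p / p.sum()
    q = q / q.sum()
    # Per-bin log-likelihood ratio; delta keeps empty bins finite.
    llr = np.log(np.maximum(p, delta) / np.maximum(q, delta))

    def var(weights):
        # Variance of llr under the discrete distribution `weights`.
        mean = np.sum(weights * llr)
        return np.sum(weights * llr**2) - mean**2

    # Tiny constant guards the degenerate case where both class variances are zero.
    return float(var(0.5 * (p + q)) / (var(p) + var(q) + 1e-12))


rng = np.random.default_rng(0)
plant = rng.normal(80, 10, 5000)    # synthetic plant-pixel values
far_bg = rng.normal(180, 10, 5000)  # well-separated background
near_bg = rng.normal(90, 10, 5000)  # heavily overlapping background
print(variance_ratio(plant, far_bg), variance_ratio(plant, near_bg))
```

With these synthetic samples, the well-separated background yields a much higher ratio than the overlapping one. The numerator rewards a log-likelihood ratio that differs strongly between the classes, while the denominator penalizes spread within each class.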

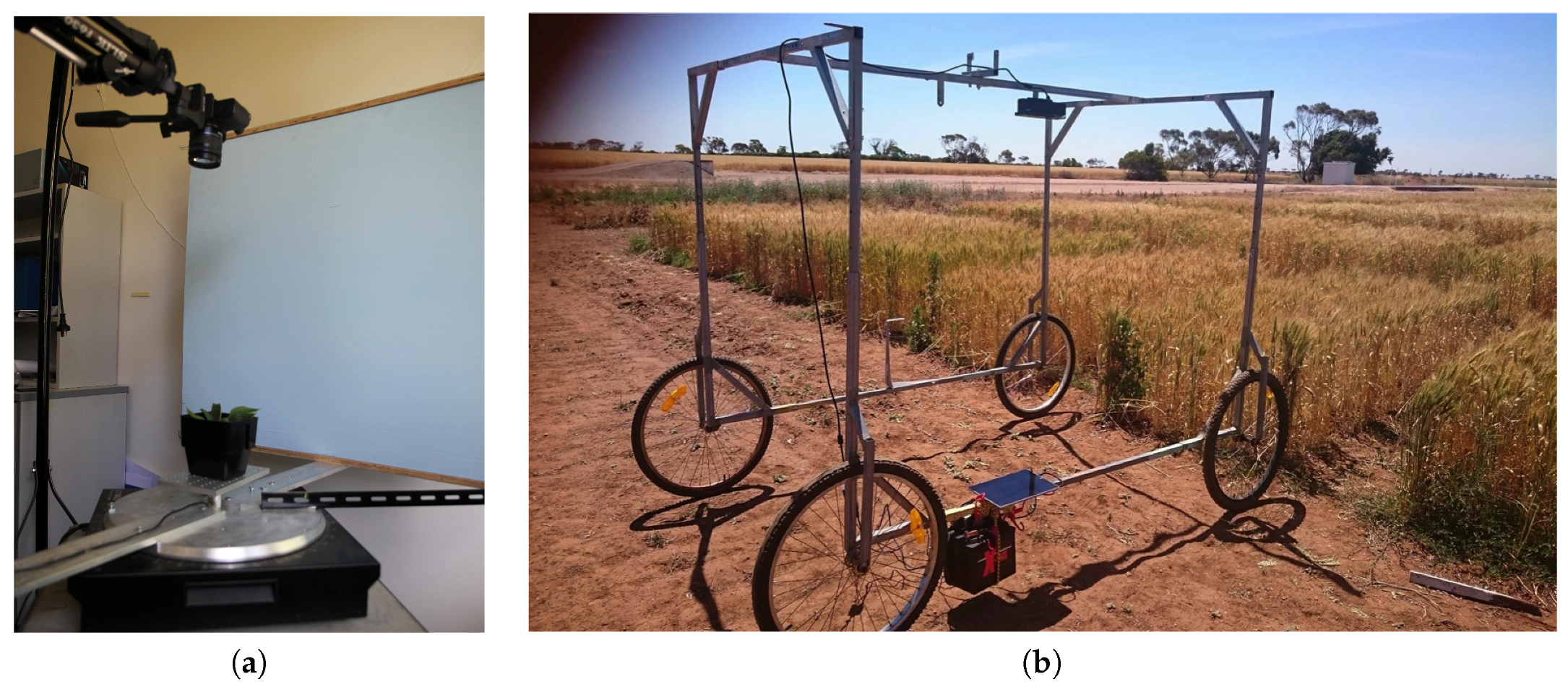

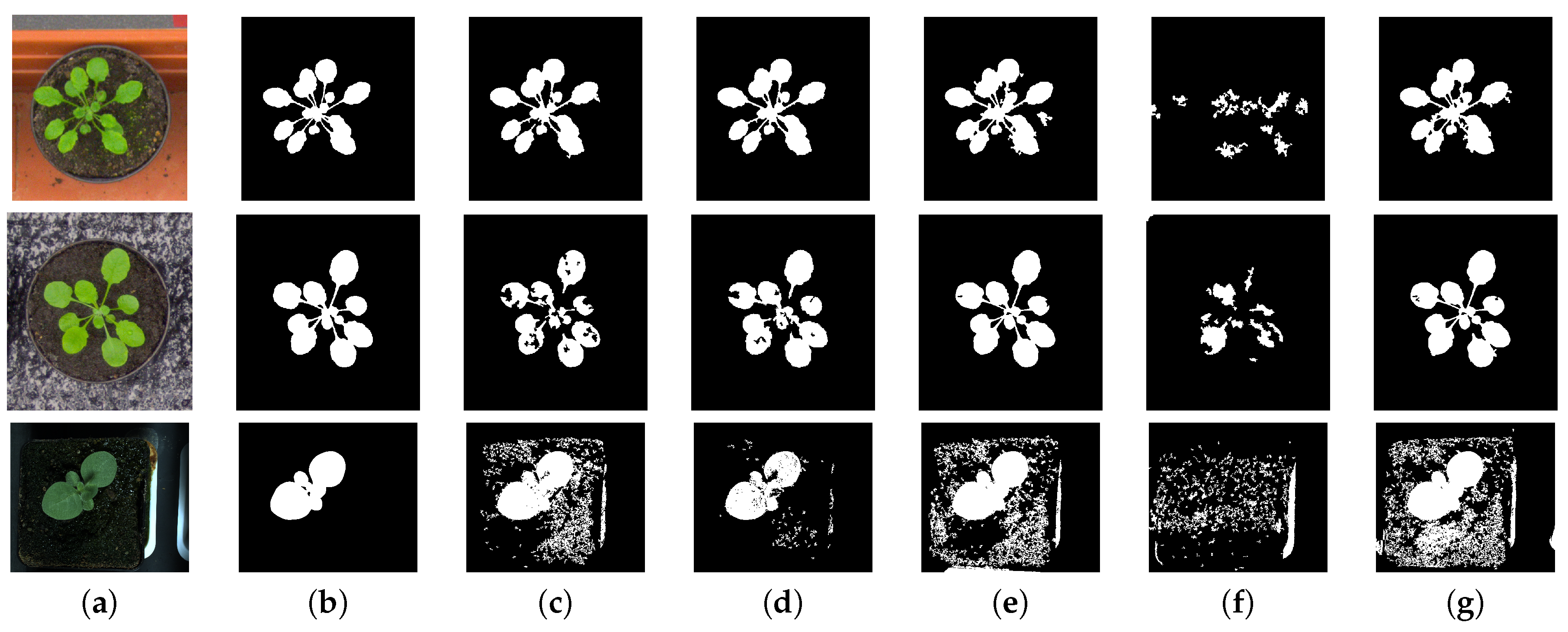

2.3. Dataset and Experiments

3. Results

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Plant Type | Colour Space | EMD Distance | Variance Ratio |
|---|---|---|---|
| Arabidopsis | | 282.46 | 1.17 |
| | | 847.24 | 1.09 |
| | | 246.14 | 2.19 |
| | | 264.67 | 2.23 |
| | | 155.01 | 1.67 |
| Tobacco | | 228.65 | 0.76 |
| | | 1389.56 | 0.99 |
| | | 415.17 | 0.43 |
| | | 347.63 | 1.11 |
| | | 235.15 | 0.47 |

| Background Type | Colour Space | EMD Distance | Variance Ratio |
|---|---|---|---|
| Contrasting Green-Red | | 230.36 | 1.07 |
| | | 401.81 | 0.78 |
| | | 182.77 | 1.77 |
| | | 39.43 | 1.88 |
| | | 178.47 | 1.57 |
| Green-Black | | 282.16 | 1.27 |
| | | 404.70 | 2.13 |
| | | 272.88 | 9.17 |
| | | 257.87 | 2.24 |
| | | 181.57 | 3.62 |

| Plant Type | Foreground/Background Segmentation (%) | | | | |
|---|---|---|---|---|---|
| A1 | 96.32% | 96.67% | 93.40% | 10.87% | 94.25% |
| A2 | 90.53% | 98.51% | 95.54% | 49.69% | 97.83% |
| A3 | 64.8% | 89.6% | 57.23% | 19.56% | 51.79% |

| Plant Type | Plant Segmentation Mean | Plant Segmentation Std | Leaf Segmentation Mean | Leaf Segmentation Std |
|---|---|---|---|---|
| A1 | 92.14% | 2.82% | 47.14% | 11.14% |
| A2 | 93.31% | 2.41% | 55.16% | 13.15% |
| A3 | 76.52% | 35.32% | 34.03% | 22.35% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kumar, P.; Miklavcic, S.J. Analytical Study of Colour Spaces for Plant Pixel Detection. J. Imaging 2018, 4, 42. https://doi.org/10.3390/jimaging4020042
