Adaptive Multi-Scale Entropy Fusion De-Hazing Based on Fractional Order

Abstract

1. Introduction

2. Materials and Methods

2.1. Underwater Image Processing Algorithms

2.2. Hazy Image Processing Algorithms

2.3. Key Contributions and Features of the Proposed Scheme

- A modified global contrast enhancement operator and a multi-scale illumination/reflectance model-based algorithm built on fractional-order calculus kernels.

- A relatively low-complexity underwater image enhancement algorithm that uses color correction and contrast operators.

- A frequency-based approach to image de-hazing and underwater image enhancement that successively and simultaneously augments high-frequency components and reduces low-frequency components.

- A hazy and underwater image enhancement algorithm that is comparatively easy to implement in hardware.

- Avoidance of dark channel prior-based stages and iterative schemes through combined multi-level convolution with fractional derivatives.

3. Proposed Algorithms

3.1. Selection and Modification of Global Contrast Operator

3.1.1. Gain Offset Correction-Based Stretching (GOCS)

3.2. Proposed Multi-Scale Local Contrast Operator

3.2.1. Multi-Scale Fractional Order-Based Illumination/Reflectance Contrast Enhancement (Multi-fractional-IRCES)

3.2.2. Preliminary Results

3.3. Problems and Solutions

4. Results

4.1. Underwater Images

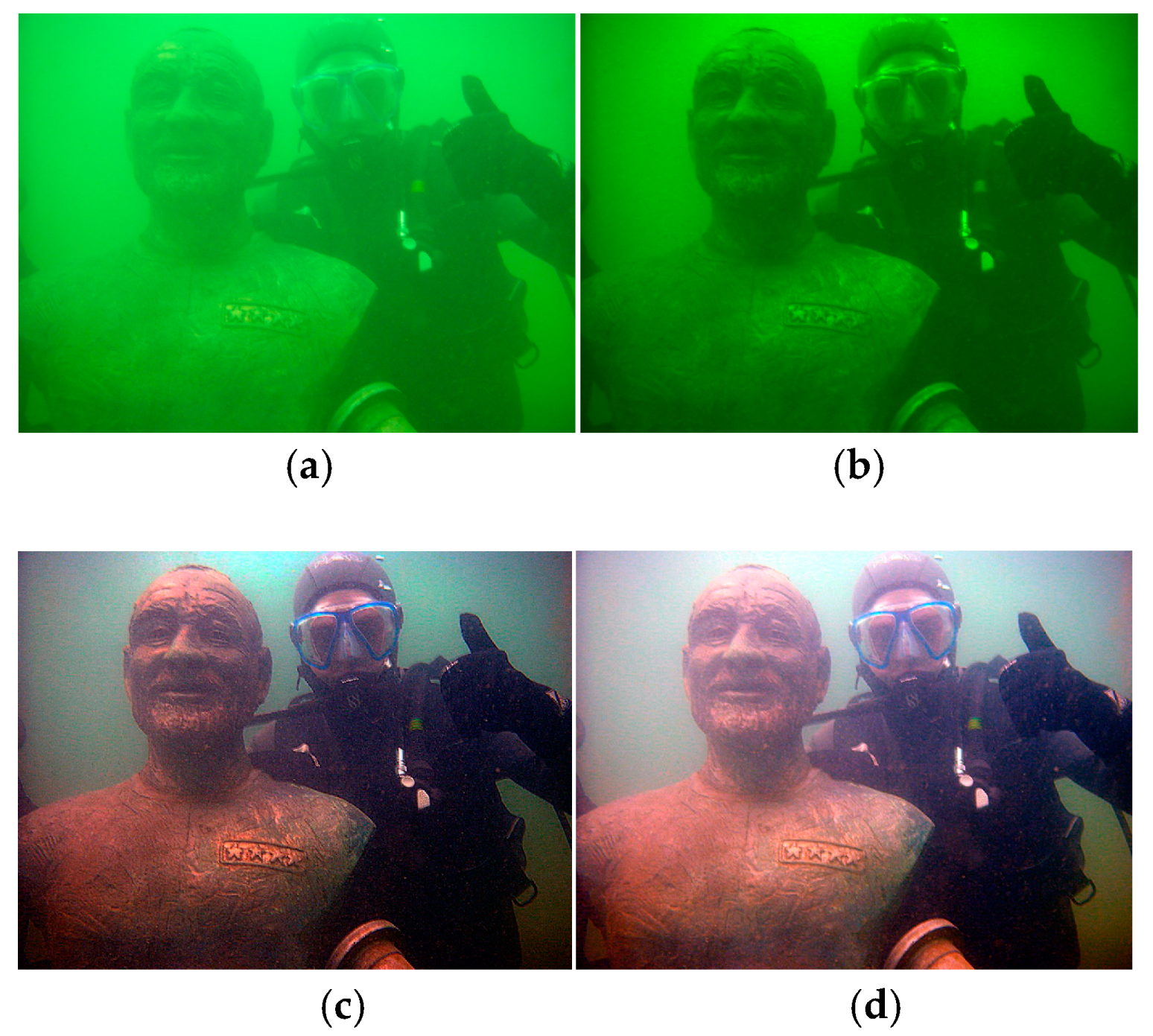

4.1.1. Subjective Evaluation

4.1.2. Comparison with Other Algorithms

4.2. Hazy Image Enhancement Results

- DCP (standard and fast versions) by He et al. [32]: constant coefficient, 0.95; patch size, Ω = 15; regularization parameter (standard version); and radius of guided filter, r = 24 (fast version).

- Color Attenuation Prior (CAP) by Zhu et al. [91]: scattering coefficient, β = 0.95 or 1; linear coefficients: θ0 = 0.1893, θ1 = 1.0267, θ2 = −1.2966; transmission lower and upper bounds: t0 = 0.05, t1 = 1; regularization parameter, ε = 0.001.

- Multi-Scale Convolutional Neural Network by Ren et al. [46]: γ = 1.3 for the canyon image and 0.8 ≤ γ ≤ 1.5 for the other images.

- Artificial Multi-Exposure-based Fusion (AMEF) by Galdran [54]: clip limit value, c, as specified.

- PDE-Retinex set to the default parameters for minimal run-time: Δt = 0.25, ksat = 1.5.

- PDE-IRCES set to the default parameters for minimal run-time: Δt = 0.25.
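To show where the DCP settings listed above enter the computation, the sketch below implements the core dark channel and transmission step of the baseline by He et al. [32] in its commonly published form. It is an illustrative sketch, not the code used for the comparison: the function names, the edge padding, and the assumption of a floating-point RGB image scaled to [0, 1] are hypothetical, and the refinement stage (soft matting or guided filtering) is omitted.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image, patch=15):
    """Minimum over colour channels, then over a patch x patch neighbourhood."""
    min_rgb = image.min(axis=2)
    pad = patch // 2
    # Edge padding keeps the output the same size as the input.
    padded = np.pad(min_rgb, pad, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))


def estimate_transmission(image, airlight, omega=0.95, patch=15):
    """Coarse transmission map t = 1 - omega * dark_channel(I / A)."""
    normalized = image / np.asarray(airlight, dtype=float)  # broadcast over channels
    return 1.0 - omega * dark_channel(normalized, patch)
```

Here `patch=15` corresponds to the patch size and `omega=0.95` to the constant coefficient of the DCP baseline. The proposed scheme is stated to avoid these dark channel prior-based stages, so the sketch describes only the comparison method.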

5. Visual Comparison of AMEF and PA and Discussion

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

1. Lee, S.; Yun, S.; Nam, J.H.; Won, C.S.; Jung, S.W. A review on dark channel prior based image dehazing algorithms. EURASIP J. Image Video Process. 2016, 2016, 1–23.

2. Schettini, R.; Corchs, S. Underwater Image Processing: State of the Art of Restoration and Image Enhancement Methods. EURASIP J. Adv. Signal Process. 2010, 2010, 1–14.

3. Gibson, K.; Nguyen, T. Fast single image fog removal using the adaptive Wiener Filter. In Proceedings of the 2013 20th IEEE International Conference on Image Processing (ICIP), Melbourne, Australia, 15–18 September 2013.

4. Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image R. 2015, 26, 132–145.

5. Li, C.; Guo, J.; Wang, B.; Cong, R.; Zhang, Y.; Wang, J. Single underwater image enhancement based on color cast removal and visibility restoration. J. Electron. Imaging 2016, 25, 1–15.

6. Li, C.; Guo, J. Underwater image enhancement by dehazing and color correction. SPIE J. Electron. Imaging 2015, 24, 033023.

7. Zhao, X.; Jin, T.; Qu, S. Deriving inherent optical properties from background color and underwater image enhancement. Ocean Eng. 2015, 94, 163–172.

8. Chiang, J.; Chen, Y. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2012, 21, 1756–1769.

9. Wen, H.; Tian, Y.; Huang, T.; Gao, W. Single underwater image enhancement with a new optical model. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Beijing, China, 19–23 May 2013.

10. Serikawa, S.; Lu, H. Underwater image dehazing using joint trilateral filter. Comput. Electr. Eng. 2014, 40, 41–50.

11. Carlevaris-Bianco, N.; Mohan, A.; Eustice, R.M. Initial results in underwater single image dehazing. In Proceedings of the IEEE International Conference on Oceans, Seattle, WA, USA, 20–23 September 2010.

12. Chiang, J.Y.; Chen, Y.C.; Chen, Y.F. Underwater Image Enhancement: Using Wavelength Compensation and Image Dehazing (WCID). In International Conference on Advanced Concepts for Intelligent Vision Systems; Springer: Berlin/Heidelberg, Germany; Ghent, Belgium, 22–25 August 2011; pp. 372–383.

13. Iqbal, K.; Salam, R.A.; Osman, A.; Talib, A.Z. Underwater image enhancement using an integrated color model. IAENG Int. J. Comput. Sci. 2007, 34, 529–534.

14. Ghani, A.S.A.; Isa, N.A.M. Underwater image quality enhancement through integrated color model with Rayleigh distribution. Appl. Soft Comput. 2015, 27, 219–230.

15. Fu, X.; Zhuang, P.; Huang, Y.; Liao, Y.; Zhang, X.P.; Ding, X. A retinex-based enhancing approach for single underwater image. In Proceedings of the International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014.

16. Gouinaud, H.; Gavet, Y.; Debayle, J.; Pinoli, J.C. Color Correction in the Framework of Color Logarithmic Image Processing. In Proceedings of the IEEE 7th International Symposium on Image and Signal Processing and Analysis (ISPA), Dubrovnik, Croatia, 4–6 September 2011.

17. Bazeille, S.; Quidu, I.; Jaulin, L.; Malkasse, J.P. Automatic underwater image pre-processing. In Proceedings of the Characterisation du Milieu Marin, CMM, Brest, France, 16–19 October 2006.

18. Chambah, M.; Semani, D.; Renouf, A.; Coutellemont, P.; Rizzi, A. Underwater Color Constancy: Enhancement of Automatic Live Fish Recognition. In Proceedings of the 16th Annual Symposium on Electronic Imaging, San Jose, CA, USA, 18–22 January 2004.

19. Torres-Mendez, L.A.; Dudek, G. Color correction of underwater images for aquatic robot inspection. In Proceedings of the 5th International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition (EMMCVPR '05), Saint Augustine, FL, USA, 9–11 November 2005.

20. Ahlen, J.; Sundgren, D.; Bengtsson, E. Application of underwater hyperspectral data for color correction purposes. Pattern Recognit. Image Anal. 2007, 17, 170–173.

21. Ahlen, J. Colour Correction of Underwater Images Using Spectral Data. Ph.D. Thesis, Uppsala University, Uppsala, Sweden, 2005.

22. Petit, F.; Capelle-Laizé, A.S.; Carré, P. Underwater image enhancement by attenuation inversion with quaternions. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Taipei, Taiwan, 19–24 April 2009.

23. Bianco, G.; Muzzupappa, M.; Bruno, F.; Garcia, R.; Neumann, L. A New Colour Correction Method For Underwater Imaging. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Science, Piano di Sorrento, Italy, 16 April 2015.

24. Prabhakar, C.; Praveen, P.K. An Image Based Technique for Enhancement of Underwater Images. Int. J. Mach. Intel. 2011, 3, 217–224.

25. Lu, H.; Li, Y.; Zhang, L.; Serikawa, S. Contrast enhancement for images in turbid water. J. Opt. Soc. Am. 2015, 32, 886–893.

26. Nnolim, U.A. Smoothing and enhancement algorithms for underwater images based on partial differential equations. SPIE J. Electron. Imaging 2017, 26, 1–21.

27. Nnolim, U.A. Improved partial differential equation (PDE)-based enhancement for underwater images using local-global contrast operators and fuzzy homomorphic processes. IET Image Process. 2017, 11, 1059–1067.

28. Garcia, R.; Nicosevici, T.; Cufi, X. On the way to solve lighting problems in underwater imaging. In Proceedings of the IEEE Oceans Conference Record, Biloxi, MS, USA, 29–31 October 2002.

29. Rzhanov, Y.; Linnett, L.M.; Forbes, R. Underwater video mosaicing for seabed mapping. In Proceedings of the IEEE International Conference on Image Processing, Vancouver, BC, Canada, 10–13 September 2000.

30. Singh, H.; Howland, J.; Yoerger, D.; Whitcomb, L. Quantitative photomosaicing of underwater imagery. In Proceedings of the IEEE Oceans Conference, Nice, France, 28 September–1 October 1998.

31. Fattal, R. Dehazing using Colour Lines. ACM Trans. Graphic. 2009, 28, 1–14.

32. He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intel. (PAMI) 2010, 33, 2341–2353.

33. Fang, S.; Zhan, J.; Cao, Y.; Rao, R. Improved single image dehazing using segmentation. In Proceedings of the 17th IEEE International Conference on Image Processing (ICIP), Hong Kong, China, 26–29 September 2010.

34. Cui, T.; Tian, J.; Wang, E.; Tang, Y. Single image dehazing by latent region-segmentation based transmission estimation and weighted L1-norm regularisation. IET Image Process. 2017, 11, 145–154.

35. Senthamilarasu, V.; Baskaran, A.; Kutty, K. A New Approach for Removing Haze from Images. In Proceedings of the International Conference on Image Processing, Computer Vision, and Pattern Recognition (IPCV), Brasov, Romania, 4–16 August 2014.

36. Ancuti, C.O.; Ancuti, C.; Bekaert, P. Effective single image dehazing by fusion. In Proceedings of the 17th IEEE International Conference on Image Processing (ICIP), Hong Kong, China, 26–29 September 2010.

37. Galdran, A.; Vazquez-Corral, J.; Pardo, D.; Bertalmio, M. Fusion-based Variational Image Dehazing. IEEE Signal Process. Lett. 2017, 24, 151–155.

38. Carr, P.; Hartley, R. Improved Single Image Dehazing using Geometry. In Proceedings of the IEEE Digital Image Computing: Techniques and Applications, Melbourne, Australia, 1 December 2009.

39. Park, D.; Han, D.K.; Ko, H. Single image haze removal with WLS-based edge-preserving smoothing filter. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013.

40. Galdran, A.; Vazquez-Corral, J.; Pardo, D.; Bertalmio, M. A Variational Framework for Single Image Dehazing. In Computer Vision - ECCV 2014 Workshops; Springer: Zurich, Switzerland, 6–12 September 2014.

41. Galdran, A.; Vazquez-Corral, J.; Pardo, D.; Bertalmio, M. Enhanced Variational Image Dehazing. SIAM J. Imaging Sci. 2015, 8, 1519–1546.

42. Liu, X.; Zeng, F.; Huang, Z.; Ji, Y. Single color image dehazing based on digital total variation filter with color transfer. In Proceedings of the 20th IEEE International Conference on Image Processing (ICIP), Melbourne, VIC, Australia, 15–18 September 2013.

43. Dong, X.M.; Hu, X.Y.; Peng, S.L.; Wang, D.C. Single color image dehazing using sparse priors. In Proceedings of the 17th IEEE International Conference on Image Processing (ICIP), Hong Kong, China, 26–29 September 2010.

44. Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient Image Dehazing with Boundary Constraint and Contextual Regularization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013.

45. Zhang, X.S.; Gao, S.B.; Li, C.Y.; Li, Y.J. A Retina Inspired Model for Enhancing Visibility of Hazy Images. Front. Comput. Sci. 2015, 9, 1–13.

46. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single Image Dehazing via Multi-Scale Convolutional Neural Networks. In Proceedings of the European Conference on Computer Vision 2016, Amsterdam, The Netherlands, 8 October 2016.

47. Yang, S.; Zhu, Q.; Wang, J.; Wu, D.; Xie, Y. An Improved Single Image Haze Removal Algorithm Based on Dark Channel Prior and Histogram Specification. In Proceedings of the 3rd International Conference on Multimedia Technology (ICMT), Brno, Czech Republic, 22 November 2013.

48. Guo, F.; Cai, Z.; Xie, B.; Tang, J. Automatic Image Haze Removal Based on Luminance Component. In Proceedings of the 6th International Conference on Wireless Communications Networking and Mobile Computing (WiCOM), Chengdu, China, 23–25 September 2010.

49. Nair, D.; Kumar, P.A.; Sankaran, P. An Effective Surround Filter for Image Dehazing. In Proceedings of the ICONIAAC 14, Amritapuri, India, 10–11 October 2014.

50. Xie, B.; Guo, F.; Cai, Z. Improved Single Image Dehazing Using Dark Channel Prior and Multi-scale Retinex. In Proceedings of the International Conference on Intelligent System Design and Engineering Application (ISDEA), Denver, CO, USA, 13–14 October 2010.

51. Nnolim, U.A. Sky Detection and Log Illumination Refinement for PDE-Based Hazy Image Contrast Enhancement. 2017. Available online: http://arxiv.org/pdf/1712.09775.pdf (accessed on December 2017).

52. Nnolim, U.A. Partial differential equation-based hazy image contrast enhancement. Comput. Electr. Eng. 2018, in press.

53. Nnolim, U.A. Image de-hazing via gradient optimized adaptive forward-reverse flow-based partial differential equation. J. Circuit. Syst. Comp. 2018, accepted.

54. Galdran, A. Artificial Multiple Exposure Image Dehazing. Signal Process. 2018, 149, 135–147.

55. Zhu, M.; He, B.; Wu, Q. Single Image Dehazing Based on Dark Channel Prior and Energy Minimization. IEEE Signal Process. Lett. 2018, 25, 174–178.

56. Shi, Z.; Zhu, M.; Xia, Z.; Zhao, M. Fast single-image dehazing method based on luminance dark prior. Int. J. Pattern Recognit. 2017, 31, 1754003.

57. Yuan, X.; Ju, M.; Gu, Z.; Wang, S. An Effective and Robust Single Image Dehazing Method Using the Dark Channel Prior. Information 2017, 8, 57.

58. Zhu, Y.; Tang, G.; Zhang, X.; Jiang, J.; Tian, Q. Haze removal method for natural restoration of images with sky. Neurocomputing 2018, 275, 499–510.

59. Wang, X.; Ju, M.; Zhang, D. Automatic hazy image enhancement via haze distribution estimation. Adv. Mech. Eng. 2018, 10, 1687814018769485.

60. Du, Y.; Li, X. Recursive Deep Residual Learning for Single Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2018, Salt Lake City, UT, USA, 18–22 June 2018.

61. Jiang, H.; Lu, N.; Yao, L.; Zhang, X. Single image dehazing for visible remote sensing based on tagged haze thickness maps. Remote Sens. Lett. 2018, 9, 627–635.

62. Ju, M.Y.; Ding, C.; Zhang, D.Y.; Guo, Y.J. Gamma-Correction-Based Visibility Restoration for Single Hazy Images. IEEE Signal Process. Lett. 2018, 25, 1084–1088.

63. Ki, S.; Sim, H.; Choi, J.S.; Seo, S.; Kim, S.; Kim, M. Fully End-to-End learning based Conditional Boundary Equilibrium GAN with Receptive Field Sizes Enlarged for Single Ultra-High Resolution Image Dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2018, Salt Lake City, UT, USA, 18–22 June 2018.

64. Li, C.; Guo, J.; Porikli, F.; Fu, H.; Pang, Y. A Cascaded Convolutional Neural Network for Single Image Dehazing. IEEE Access 2018, 6, 24877–24887.

65. Li, R.; Pan, J.; Li, Z.; Tang, J. Single Image Dehazing via Conditional Generative Adversarial Network. Methods 2018, 3, 24.

66. Luan, Z.; Zeng, H.; Shang, Y.; Shao, Z.; Ding, H. Fast Video Dehazing Using Per-Pixel Minimum Adjustment. Math. Probl. Eng. 2018, 2018, 9241629.

67. Mondal, R.; Santra, S.; Chanda, B. Image Dehazing by Joint Estimation of Transmittance and Airlight using Bi-Directional Consistency Loss Minimized FCN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2018, Salt Lake City, UT, USA, 18–22 June 2018.

68. Qin, M.; Xie, F.; Li, W.; Shi, Z.; Zhang, H. Dehazing for Multispectral Remote Sensing Images Based on a Convolutional Neural Network with the Residual Architecture. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 1645–1655.

69. Santra, S.; Mondal, R.; Chanda, B. Learning a Patch Quality Comparator for Single Image Dehazing. IEEE Trans. Image Process. 2018, 27, 4598–4607.

70. Song, Y.; Li, J.; Wang, X.; Chen, X. Single Image Dehazing Using Ranking Convolutional Neural Network. IEEE Trans. Multimedia 2018, 20, 1548–1560.

71. Zhang, H.; Sindagi, V.; Patel, V.M. Multi-scale Single Image Dehazing using Perceptual Pyramid Deep Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2018, Salt Lake City, UT, USA, 18–22 June 2018.

72. Li, J.; Li, G.; Fan, H. Image Dehazing using Residual-based Deep CNN. IEEE Access 2018, 6, 26831–26842.

73. Lu, J.; Li, N.; Zhang, S.; Yu, Z.; Zheng, H.; Zheng, B. Multi-scale adversarial network for underwater image restoration. Opt. Laser Technol. 2018, in press.

74. Yang, Q.; Chen, D.; Zhao, T.; Chen, Y. Fractional calculus in image processing: A review. Fract. Calc. Appl. Anal. 2016, 19, 1222–1249.

75. Patrascu, V. Image enhancement method using piecewise linear transforms. In Proceedings of the European Signal Processing Conference (EUSIPCO-2004), Vienna, Austria, 6–10 September 2004.

76. Baliga, A.B. Face Illumination Normalization with Shadow Consideration. Master's Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, May 2004.

77. Nnolim, U.A. An adaptive RGB colour enhancement formulation for Logarithmic Image Processing-based algorithms. Optik 2018, 154, 192–215.

78. Laboratory for Image & Video Engineering (LIVE). Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging. Available online: http://live.ece.utexas.edu/research/fog/fade_defade.html (accessed on 30 June 2018).

79. Shen, X.; Li, Q.; Tan, Y.; Shen, L. An Uneven Illumination Correction Algorithm for Optical Remote Sensing Images Covered with Thin Clouds. Remote Sens. 2015, 7, 11848–11862.

80. Matkovic, K.; Neumann, L.; Neumann, A.; Psik, T.; Purgathofer, W. Global Contrast Factor-a New Approach to Image Contrast. In Proceedings of the 1st Eurographics Conference on Computational Aesthetics in Graphics, Visualization and Imaging, Girona, Spain, 18–20 May 2005.

81. Susstrunk, S.; Hasler, D. Measuring Colourfulness in Natural Images. In Proceedings of the IS&T/SPIE Electronic Imaging 2003: Human Vision and Electronic Imaging VIII, Santa Clara, CA, USA, 21–24 January 2003.

82. Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

83. Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean Eng. 2016, 41, 541–551.

84. El Khoury, J.; Le Moan, S.; Thomas, J.B.; Mansouri, A. Color and sharpness assessment of single image dehazing. Multimed. Tools Appl. 2018, 77, 15409–15430.

85. Changhau, I. Underwater Image Enhance via Fusion (IsaacChanghau/ImageEnhanceViaFusion). Available online: https://github.com/IsaacChanghau/ImageEnhanceViaFusion (accessed on 30 July 2018).

86. Emberton, S.; Chittka, L.; Cavallaro, A. Underwater image and video dehazing with pure haze region segmentation. Comput. Vis. Image Und. 2018, 168, 145–156.

87. Ancuti, C.; Ancuti, C.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16 June 2012.

88. Li, Z.; Tan, P.; Tan, R.T.; Zou, D.; Zhou, S.Z.; Cheong, L.F. Simultaneous video defogging and stereo reconstruction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

89. Emberton, S.; Chittka, L.; Cavallaro, A. Hierarchical rank-based veiling light estimation for underwater dehazing. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015.

90. Drews-Jr, P.; Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission estimation in underwater single images. In Proceedings of the IEEE International Conference on Computer Vision Workshop, Sydney, NSW, Australia, 2–8 December 2013.

91. Zhu, Q.; Mai, J.; Shao, L. A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

92. Caraffa, L.; Tarel, J.P. Stereo Reconstruction and Contrast Restoration in Daytime Fog. In Proceedings of the 11th IEEE Asian Conference on Computer Vision (ACCV'12), Daejeon, Korea, 5–9 November 2012.

93. Tarel, J.P.; Cord, A.; Halmaoui, H.; Gruyer, D.; Hautiere, N. Improved Visibility of Road Scene Images under Heterogeneous Fog. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV'10), San Diego, CA, USA, 21–24 June 2010.

94. Tarel, J.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009.

95. Dai, S.K.; Tarel, J.P. Adaptive Sky Detection and Preservation in Dehazing Algorithm. In Proceedings of the IEEE International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Nusa Dua, Bali, Indonesia, 9–12 November 2015.

96. Nishino, K.; Kratz, L.; Lombardi, S. Bayesian Defogging. Int. J. Comput. Vis. 2012, 98, 263–278.

97. Wang, W.; He, C. Depth and Reflection Total Variation for Single Image Dehazing. arXiv 2016, arXiv:1601.05994.

98. Ju, M.; Zhang, D.; Wang, X. Single image dehazing via an improved atmospheric scattering model. Vis. Comput. 2017, 33, 1613–1625.

99. Nnolim, U.A. Adaptive multi-scale entropy fusion de-hazing based on fractional order. Preprints 2018.

100. Nnolim, U.A. Fractional Multiscale Fusion-based De-hazing. Available online: https://arxiv.org/abs/1808.09697 (accessed on 29 August 2018).

| Image | Ancuti et al. [87] σc | Ancuti et al. [87] UCIQE | Li et al. [88] σc | Li et al. [88] UCIQE | Emberton et al. [86] σc | Emberton et al. [86] UCIQE | PA σc | PA UCIQE |
|---|---|---|---|---|---|---|---|---|
| S1 | 0.44 | 0.69 | 0.36 | 0.61 | 0.44 | 0.66 | 0.15 | 0.58 |
| S2 | 0.44 | 0.67 | 0.33 | 0.57 | 0.42 | 0.61 | 0.26 | 0.88 |
| S3 | 0.49 | 0.69 | 0.34 | 0.59 | 0.40 | 0.68 | 0.59 | 1.31 |
| S4 | 0.23 | 0.63 | 0.22 | 0.50 | 0.29 | 0.61 | 0.38 | 0.99 |
| S5 | 0.23 | 0.60 | 0.18 | 0.46 | 0.26 | 0.55 | 0.28 | 0.90 |
| S6 | 0.19 | 0.58 | 0.11 | 0.39 | 0.25 | 0.54 | 0.19 | 0.68 |
| Mean | 0.34 | 0.65 | 0.27 | 0.53 | 0.35 | 0.61 | 0.31 | 0.89 |

| Image | He et al. [32] | Zhu et al. [91] | Ren et al. [46] | PDE-GOC-SSR-CLAHE [52] | PDE-IRCES [53] | PA |
|---|---|---|---|---|---|---|
| Parameters | Ω = 0.95, w = 15, A = 240, r = 24 | β = 0.95, 1; θ0 = 0.1893; θ1 = 1.0267; θ2 = −1.2966; guided filter: r = 60; t0 = 0.05; t1 = 1; ε = 0.001 | γ = 1.3 (canyon image); 0.8 ≤ γ ≤ 1.5 (others) | Δt = 0.25; ksat = 1.5 | Δt = 0.25 | |
| Tiananmen | 1.8455 / 0.9606 / 0.1879 | 1.1866 / 1.0041 / 0.0814 | 1.5649 / 0.8734 / 0.1288 | 2.8225 / 1.0386 / 0.0625 | 2.3219 / 1.1614 / 0 | 4.4410 / 1.4514 / 0.1688 |
| Cones | 1.4977 / 1.1478 / 0.3878 | 0.9704 / 1.0873 / 0.2499 | 1.3818 / 1.1042 / 0.2956 | 2.7516 / 1.1999 / 0.2733 | 2.5881 / 1.2064 / 0 | 4.9702 / 1.4620 / 0.3142 |
| City1 | 1.1914 / 1.0332 / 0.1336 | 0.9303 / 1.0075 / 0.2002 | 1.2989 / 1.0232 / 0.2002 | 1.7762 / 1.1164 / 0.0562 | 2.4080 / 1.3458 / 0.00375 | 3.8282 / 1.4898 / 0.1712 |
| Canyon | 1.7481 / 1.1057 / 0.3796 | 1.2880 / 1.0679 / 0.2412 | 1.4564 / 1.0319 / 0.0446 | 2.5408 / 1.2070 / 0.3103 | 2.5224 / 1.19684 / 0.00019 | 3.9892 / 1.7903 / 0.2412 |
| Canon | 3.2903 / 1.0857 / 0.3947 | 1.7127 / 0.9089 / 0.3198 | 2.6871 / 1.0832 / 0.3831 | 2.8059 / 1.1188 / 0.3947 | 2.8783 / 1.3450 / 0.00004 | 8.0224 / 1.5785 / 0.3942 |
| Mountain | 1.7105 / 0.9348 / 0.0787 | 1.2092 / 0.9307 / 0.0984 | 1.6005 / 0.9784 / 0.0074 | 2.7275 / 1.0202 / 0.0074 | 2.9827 / 1.2977 / 0.00007 | 6.5399 / 1.5503 / 0.0244 |
| Brick House | 1.2006 / 0.9747 / 0.1172 | 0.8597 / 1.1395 / 0.0730 | 1.2118 / 1.0030 / 0.1288 | 1.0836 / 1.1135 / 0.1021 | 1.4105 / 1.2789 / 0 | 3.0014 / 1.3563 / 0.0983 |
| Pumpkins | 1.5927 / 0.9501 / 0.1581 | 0.9311 / 0.6726 / 0.1333 | 1.4753 / 0.9511 / 0.1764 | 2.4539 / 1.0361 / 0.1516 | 2.2777 / 1.1626 / 0.0001 | 3.3553 / 1.6469 / 0.2329 |
| Train | 1.5206 / 1.0090 / 0.1664 | 0.9797 / 1.0509 / 0.3265 | 1.2036 / 1.0203 / 0.2412 | 1.5190 / 1.1106 / 0.3005 | 2.2569 / 1.3589 / 0.0038 | 4.3014 / 1.5151 / 0.2594 |
| Toys | 2.2566 / 0.9712 / 0.3840 | 1.6711 / 1.0117 / 0.2865 | 2.1568 / 0.9576 / 0.2827 | 2.9813 / 1.1095 / 0.3379 | 2.1367 / 1.2887 / 0.00002 | 4.2837 / 1.5937 / 0.3736 |

| Image (size) | He et al. [32] | Zhu et al. [91] | Ren et al. [46] | AMEF [54] | PDE-GOC-SSR-CLAHE [52] | PDE-IRCES [53] | PA |
|---|---|---|---|---|---|---|---|
| Tiananmen (450 × 600) | 1.253494 | 0.991586 | 2.362754 | 1.4088 | 3.530989 | 2.330879 | 0.480659 |
| Cones (384 × 465) | 0.850155 | 0.661314 | 1.651447 | 1.0506 | 2.381621 | 1.555098 | 0.268909 |
| City1 (600 × 400) | 1.094910 | 0.875287 | 2.070620 | 1.2709 | 3.203117 | 2.183417 | 0.283372 |
| Canyon (600 × 450) | 1.237655 | 0.972741 | 2.529734 | 1.5066 | 3.821395 | 2.306129 | 0.309343 |
| Canon (525 × 600) | 1.431257 | 1.135376 | 2.890541 | 1.6958 | 4.187972 | 2.717652 | 0.374638 |
| Mountain (400 × 600) | 1.129231 | 0.880835 | 2.358143 | 1.2985 | 3.158335 | 2.055685 | 0.360240 |
| Brick house (711 × 693) | 2.230871 | 1.667610 | 5.234674 | 2.3618 | 6.395965 | 4.385789 | 1.102332 |
| Pumpkins (400 × 600) | 1.125475 | 0.901815 | 2.253179 | 1.5018 | 3.152969 | 2.143529 | 0.407310 |
| Train (400 × 600) | 1.105757 | 0.849072 | 2.075004 | 1.2935 | 3.178277 | 1.995436 | 0.365481 |
| Toys (360 × 500) | 0.844945 | 0.657376 | 1.578068 | 1.0387 | 2.429651 | 1.545031 | 0.260878 |

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nnolim, U.A. Adaptive Multi-Scale Entropy Fusion De-Hazing Based on Fractional Order. J. Imaging 2018, 4, 108. https://doi.org/10.3390/jimaging4090108
