User Perception on Key Performance Indicators in an In-Service Office Building

Abstract

1. Introduction

- The use of demand and supply languages: (i) the necessary requirements for good performance (demand) and (ii) the ability to satisfy these requirements (supply);

- The need to verify and validate results, taking into account the desired performance.

2. Methodology

2.1. Evaluation Techniques and Classification

- Analysis and consultation of documentation;

- Visual inspection;

- User surveys;

- Surveys for building management entities;

- Tests (laboratory or in situ).

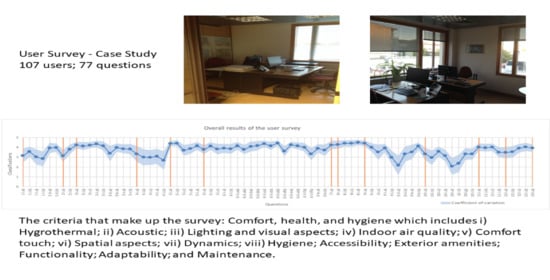

2.2. User Survey

- Comfort, health, and hygiene, which include (i) Hygrothermal; (ii) Acoustic; (iii) Lighting and visual aspects; (iv) Indoor air quality; (v) Comfort touch; (vi) Spatial aspects; (vii) Dynamics; (viii) Hygiene;

- Accessibility;

- Exterior amenities;

- Functionality;

- Adaptability;

- Maintenance.

2.3. Case Study

3. Results and Discussion

3.1. Hygrothermal Comfort

3.2. Acoustic Comfort

3.3. Lighting and Visual Aspects

3.4. Indoor Air Quality

3.5. Comfort Touch

3.6. Spatial Features

3.7. Dynamics

3.8. Hygiene

3.9. Accessibility

3.10. Exterior Amenities

3.11. Functionality

3.12. Adaptability

3.13. Maintenance Actions

3.14. Synthesis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. De Wilde, P. Ten questions concerning building performance analysis. Build. Environ. 2019, 110–117.

2. Foliente, G. Developments in performance-based building codes and standards. Forest Prod. J. 2000, 50, 12–21.

3. Gross, J. Developments in the application of the performance concept in building. In Proceedings of the CIB-ASTM-RILEM 3rd International Symposium: Applications of the Performance Concept in Building, Tel Aviv, Israel, 9–12 December 1996; pp. 1–11.

4. ASTM. Building Performance: Functional Preservation and Rehabilitation; ASTM Special Publication 901; American Society for Testing and Materials: Philadelphia, PA, USA, 1986.

5. VTTProP. Eco Prop Software and VTT ProP Classification. 2004. Available online: http://cic.vtt.fi/eco/e_index.htm (accessed on 11 March 2021).

6. ISO. Performance Standards in Building: Principles for Their Preparation and Factors to be Considered; ISO 6241; International Organization for Standardization: Geneva, Switzerland, 1984.

7. Silva, V. Sustainability indicators for buildings: State of the art and challenges for development in Brazil. Ambiente Construído (Built Environ. J.) 2008, 7, 1. (In Portuguese)

8. Neely, A. Business Performance Measurement: Theory and Practice; Cambridge University Press: Cambridge, UK, 2002.

9. Szigeti, F.; Davis, G. Performance Based Building: Conceptual Framework, Final Report; Performance Based Building Thematic Network: Rotterdam, The Netherlands, 2005.

10. Ang, G.; Wyatt, D.; Hermans, M. A systematic approach to define client expectation to total building performance during the pre-design stage. In Proceedings of the CIB 2001 Triennial World Congress, Performance-Based (PeBBu) Network, Toronto, ON, Canada, October 2001.

11. Blyth, A.; Worthing, J. Managing the Brief for Better Design; Spon Press: London, UK, 2001.

12. Gibson, E. (Coordinator), CIB Working Commission W060. Working with the Performance Approach in Building; CIB W060, CIB State of the Art Report No. 64; CIB: Rotterdam, The Netherlands, 1982.

13. McGregor, W.; Then, D. Facilities Management and the Business of Space; Arnold (a member of the Hodder Headline Group): London, UK, 1999.

14. Nilsson, N.; Cole, R.J. Context, History and Structure. In Proceedings of the Green Building Challenge '98 (GBC '98), Vancouver, BC, Canada, 26–28 October 1998; pp. 15–25.

15. LEED. LEED Commercial Interiors. 2009. Available online: http://www.leeduser.com/ (accessed on 11 March 2021).

16. Pinheiro, M. LiderA: Leading the Environment in the Search for Sustainability. Presentation Summary of the Voluntary Sustainability Assessment System for Construction, Version for Built Environments (V2.00b). Available online: http://www.lidera.info (accessed on 11 March 2021).

17. Larsson, N. User Guide to the SBTool Assessment Framework; iiSBE, 2015. Available online: http://www.iisbe.org/system/files/SBTool%20Overview%2004May15.pdf (accessed on 11 March 2021).

18. Mateus, R.; Bragança, L. Sustainability assessment of an affordable residential building. In Proceedings of Portugal SB10: Sustainable Building, Coimbra, Portugal, 17–19 March 2010; pp. 581–589.

19. Lay, M.C.; Reis, A.T. The role of communal open spaces in the performance evaluation of housing. Built Environ. 2002, 2, 25–39.

20. Cooper, I. Post-occupancy evaluation: Where are you? Build. Res. Inf. (Special Issue on Post-Occupancy Evaluation) 2001, 29, 158–163.

21. EN 15341. Maintenance: Maintenance Key Performance Indicators; European Committee for Standardization: Brussels, Belgium, 2019.

22. Maslesa, E.; Jensen, P.; Birkved, M. Indicators for quantifying environmental building performance: A systematic literature review. J. Build. Eng. 2018, 552–560.

23. Marmo, R.; Nicolella, M.; Polverino, F.; Tibaut, A. A methodology for a performance information model to support facility management. Sustainability 2019, 11, 7007.

24. Bortolini, R.; Forcada, N. Operational performance indicators and causality analysis for non-residential buildings. Informes Constr. 2020, 72.

25. Antoniadou, P.; Kyriaki, E.; Manoloudis, A.; Papadopoulos, A. Evaluation of thermal sensation in office buildings: A case study in the Mediterranean. Procedia Environ. Sci. 2017, 38, 28–35.

26. Naspi, F.; Arnesano, M.; Zampetti, L.; Stazi, F.; Revel, G.M.; D'Orazio, M. Experimental study on occupants' interaction with windows and lights in Mediterranean offices during the non-heating season. Build. Environ. 2018, 127, 221–238.

27. Wang, W.; Arya, C. Effect of high-performance facades on heating/cooling loads in London, UK office buildings. Proceedings of the Institution of Civil Engineers: Engineering Sustainability 2020, 173, 135–150.

28. EN 15221-7. Facility Management, Part 7: Guidelines for Performance Benchmarking; European Committee for Standardization: Brussels, Belgium, 2012.

29. ISO 15686-5. Buildings and Constructed Assets: Service Life Planning, Part 5: Life-Cycle Costing; International Organization for Standardization: Geneva, Switzerland, 2017.

30. CIB. Usability of Workplaces: Report on Case Studies; CIB Report, Publication 306; CIB: Rotterdam, The Netherlands, 2005.

31. Hartkopf, V.; Loftness, V.; Drake, P.; Dubin, F.; Mill, P.; Ziga, G. Designing the Office of the Future: The Japanese Approach to Tomorrow's Workplace; John Wiley & Sons, Inc.: New York, NY, USA, 1993.

32. Flores-Colen, I. Methodology for Assessing the In-Service Performance of Rendered Façades from the Perspective of Predictive Maintenance. Ph.D. Thesis, University of Lisbon, IST, Lisbon, Portugal, 2009. Volume 1, 487p. (In Portuguese).

33. BREEAM. BRE Environmental & Sustainability: BREEAM Offices 2008 Assessor Manual. BRE Global Ltd. Available online: http://www.breeam.org/ (accessed on 20 April 2008).

34. Maurício, F. The Application of Facility Management Tools to Office Buildings' Technical Maintenance. Master's Thesis, University of Lisbon, IST, Lisbon, Portugal, 2011; 105p. (In Portuguese).

35. France, J.R. Final Report of the Task Group 4: Life Cycle Costs in Construction; BATIMAT European Expert Workshop; ACE (Architects' Council of Europe): Paris, France, 2005; pp. 4–6.

36. Hakovirta, M.; Denuwara, N. How COVID-19 Redefines the Concept of Sustainability. Sustainability 2020, 12, 3727.

37. Ribeiro, C.; Ramos, N.; Flores-Colen, I. A review of balcony impacts on the indoor environmental quality of dwellings. Sustainability 2020, 12, 6453.

| Criterion | [4] | [5] | [6] | [14] | [15] | [16] | [17] | [18] | [30] | [31] | [33] |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Technical properties | | | | | | | | | | | |
| Mechanical and structural | x | x | x | x | | | | | | | |
| Physical and chemical | x | x | x | x | x | | | | | | |
| Ventilation | x | x | x | x | x | x | x | x | x | x | |
| Durability | x | x | x | x | x | x | | | | | |
| Fire safety | x | x | x | x | x | x | | | | | |
| Structural safety | x | x | x | x | | | | | | | |
| Maintenance | | | | | | | | | | | |
| Operation and services | x | x | x | x | x | | | | | | |
| Usage properties | | | | | | | | | | | |
| Hygrothermal | x | x | x | x | x | x | x | x | x | x | x |
| Acoustic | x | x | x | x | x | x | x | x | x | x | |
| Lighting | x | x | x | x | x | x | x | x | x | x | x |
| Indoor air quality | x | x | x | x | x | x | x | x | x | x | |
| Comfort touch and visual | x | x | x | x | x | | | | | | |
| Spatial aspects | x | x | x | | | | | | | | |
| Dynamic | x | x | x | x | | | | | | | |
| Hygiene | x | x | x | x | x | x | x | | | | |
| Accessibility | x | x | x | x | | | | | | | |
| Outdoor amenities | x | x | x | x | x | x | | | | | |
| Functionality | x | x | | | | | | | | | |
| Adaptability | x | x | x | x | x | x | x | x | x | | |
| Usage security | x | x | x | x | x | x | | | | | |
| Environment | | | | | | | | | | | |
| Biodiversity | x | x | x | x | x | | | | | | |
| Resources | x | x | x | x | x | x | x | | | | |
| Pollution | x | x | x | x | x | x | x | x | | | |
| Economic | | | | | | | | | | | |
| Costs/LCC | x | x | x | x | x | | | | | | |
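Each row of the comparison matrix can be read as a count of how many of the eleven compared sources address a criterion: hygrothermal and lighting aspects are marked for all eleven, while functionality is marked for only two. A minimal sketch, assuming the rows are kept as plain markdown table rows as above; `coverage` is a hypothetical helper written for illustration only.

```python
def coverage(table_row):
    """Return (criterion, number of reference columns marked with x) for one markdown row."""
    # Drop the outer pipes, split into cells and trim whitespace.
    cells = [cell.strip() for cell in table_row.strip().strip("|").split("|")]
    name, marks = cells[0], cells[1:]
    # Marks are compared case-insensitively ("x" or "X").
    return name, sum(1 for cell in marks if cell.lower() == "x")


rows = [
    "| Hygrothermal | x | x | x | x | x | x | x | x | x | x | x |",
    "| Spatial aspects | x | x | x | | | | | | | | |",
    "| Functionality | x | x | | | | | | | | | |",
]

for row in rows:
    name, count = coverage(row)
    print(f"{name}: {count} of 11 references")
```

Ranking all rows this way separates the criteria that almost every framework covers from those addressed by only a few.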

A.1.7. DYNAMICS

Question i. “How Do You Rate the Intensity of Factors of Existing Vibration?”

| | br | i | na/dk | 1 | 2 | 3 | 4 | 5 | ni | nic | m | | | | cv | tstd | Significance p (tstd < tcrit) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a) | 1 | - | 4 | 0 | 4 | 10 | 23 | 35 | 77 | 72 | 4.2 | 4.0 | 5 | 0.9 | 21% | 0.21 | 0.0 | [4.0; 4.4] |
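The summary columns of this table can be recomputed from the answer counts. A minimal sketch, assuming the three unlabeled columns after m hold the median, the mode and the sample standard deviation, and that the bracketed interval is a two-sided 95% t-interval on the mean. The helper `likert_summary` and the critical value `t_crit` ≈ 1.994 (71 degrees of freedom) are assumptions for illustration, not the authors' procedure. With the 72 valid answers (nic) to question a), it reproduces m = 4.2, s ≈ 0.9, cv = 21% and [4.0; 4.4].

```python
from math import sqrt
from statistics import mean, median, mode, stdev


def likert_summary(counts, t_crit):
    """Summarize valid Likert answers given as {rating: number of answers}.

    Hypothetical helper; t_crit is the two-sided t critical value for nic - 1
    degrees of freedom, supplied by the caller.
    """
    # Expand the frequency table into one value per valid respondent.
    values = [rating for rating, n in sorted(counts.items()) for _ in range(n)]
    n = len(values)
    m = mean(values)
    s = stdev(values)  # sample standard deviation (divides by n - 1)
    margin = t_crit * s / sqrt(n)  # half-width of the confidence interval
    return {
        "nic": n,
        "m": m,
        "median": median(values),
        "mode": mode(values),
        "s": s,
        "cv": s / m,  # coefficient of variation
        "margin": margin,
        "ci": (m - margin, m + margin),
    }


# Dynamics, question a): valid answers per rating 1..5 (table A.1.7).
dynamics = likert_summary({1: 0, 2: 4, 3: 10, 4: 23, 5: 35}, t_crit=1.994)
print(f"m={dynamics['m']:.1f} s={dynamics['s']:.1f} cv={dynamics['cv']:.0%}")
print("CI = [{:.1f}; {:.1f}]".format(*dynamics["ci"]))
```

Blank, invalid and na/dk answers are left out of `counts`, which matches the table, where nic (72) rather than ni (77) is the sample size behind m and cv.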

A.5. ADAPTABILITY

Question i. “How Do You Rate the Building in Terms of Usage Satisfaction?”

| | br | i | na/dk | 1 | 2 | 3 | 4 | 5 | ni | nic | m | | | | cv | tstd | Significance p (tstd < tcrit) | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a) | - | - | - | 0 | 1 | 20 | 40 | 16 | 77 | 77 | 3.9 | 3.4 | 4 | 0.72 | 18% | 0.16 | 0.0 | [3.8; 4.1] |

| | Criterion | Average of Results |
|---|---|---|
| 1 | Hygrothermal | 3.93 |
| 2 | Acoustic | 4.19 |
| 3 | Lighting and visual aspects | 3.93 |
| 4 | Indoor air quality | 2.99 |
| 5 | Touch | 4.12 |
| 6 | Space | 3.91 |
| 7 | Dynamics | 4.24 |
| 8 | Hygiene | 4.39 |
| 9 | Accessibility | 3.50 |
| 10 | Exterior amenities | 3.01 |
| 11 | Functionality | 3.88 |
| 12 | Adaptability | 3.92 |
| 13 | Maintenance operations | 3.73 |
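Sorting the synthesis averages makes the weakest criteria explicit: indoor air quality (2.99) and exterior amenities (3.01) are the only criteria scoring close to the midpoint of the 1 to 5 scale. A minimal sketch that ranks the values from the table; the unweighted mean across criteria is an illustrative figure computed here, not a result reported in the study.

```python
# Average user rating per criterion (1-5 scale), copied from the synthesis table.
averages = {
    "Hygrothermal": 3.93,
    "Acoustic": 4.19,
    "Lighting and visual aspects": 3.93,
    "Indoor air quality": 2.99,
    "Touch": 4.12,
    "Space": 3.91,
    "Dynamics": 4.24,
    "Hygiene": 4.39,
    "Accessibility": 3.50,
    "Exterior amenities": 3.01,
    "Functionality": 3.88,
    "Adaptability": 3.92,
    "Maintenance operations": 3.73,
}

# Rank criteria from lowest to highest rating (least satisfied first).
ranked = sorted(averages.items(), key=lambda item: item[1])

# Simple unweighted mean across the 13 criteria (illustrative only).
overall = sum(averages.values()) / len(averages)

for name, score in ranked[:3]:
    print(f"{name}: {score:.2f}")
print(f"Unweighted mean of criteria: {overall:.2f}")
```

An unweighted mean treats every criterion as equally important; weighting by user-assigned importance would be a different, equally valid design choice.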

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pestana, T.; Flores-Colen, I.; Pinheiro, M.D.; Sajjadian, S.M. User Perception on Key Performance Indicators in an In-Service Office Building. Infrastructures 2021, 6, 45. https://doi.org/10.3390/infrastructures6030045
