A Survey on the Procedural Generation of Virtual Worlds

Abstract

1. Introduction

- to create a realistic and credible environment within an adequate period of time,

- to keep the project within budget so that the result remains affordable for the end user.

2. History of PCG in Digital Games

- turn-based gameplay,

- procedurally generated levels,

- permanent death (no load/save functionality).

3. Definition of PCG

Procedural content generation is the automatic creation of digital assets for games, simulations or movies based on predefined algorithms and patterns that require minimal user input.

- grammars,

- L-Systems,

- shape grammars,

- programming languages.

3.1. Theoretical Considerations

3.2. Random Number Generators

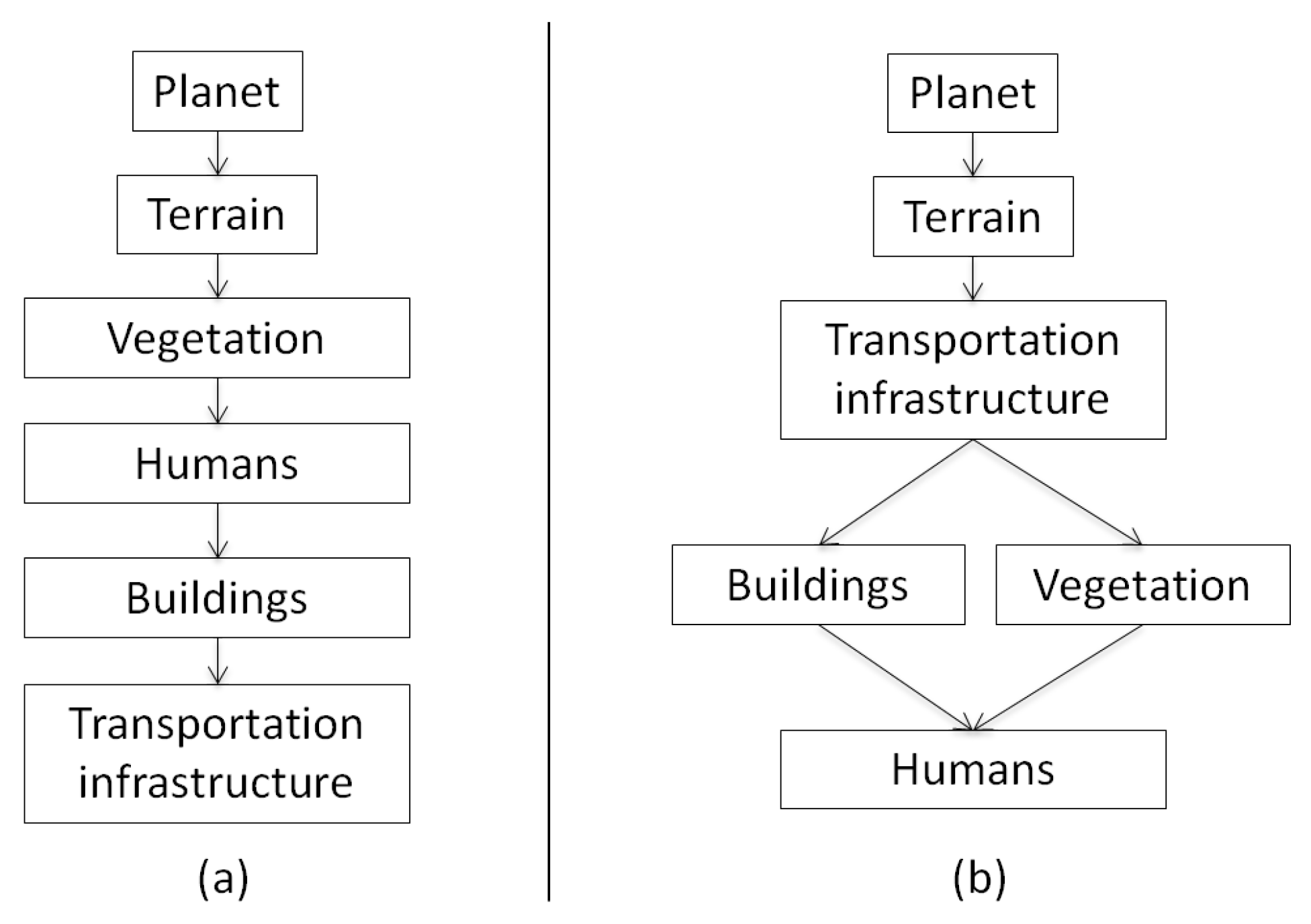

4. Classification

4.1. Vegetation and Landscape

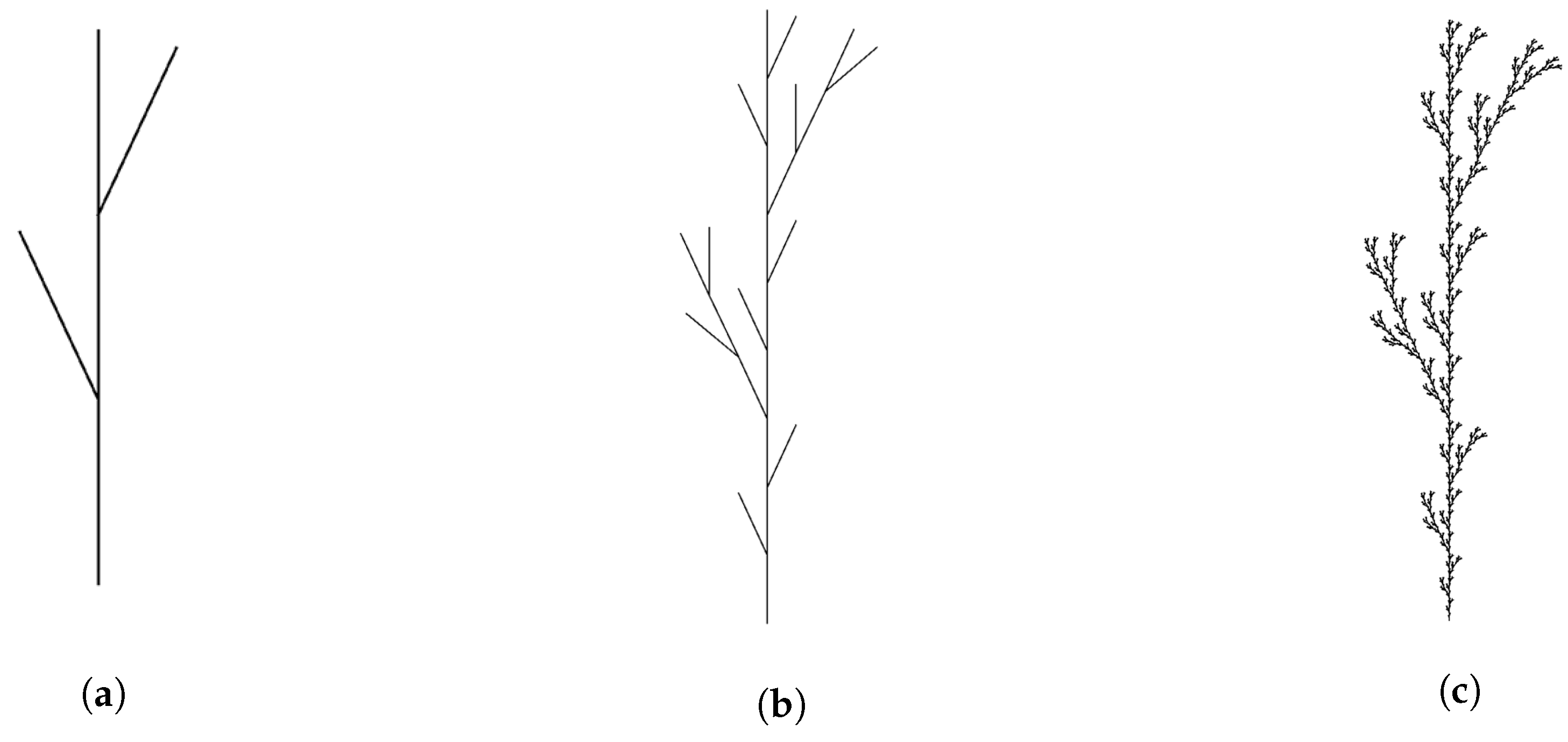

4.1.1. Generation of Vegetation

- F: move one step forward, drawing a line,

- f: move one step forward without drawing a line,

- +: turn right by δ degrees,

- −: turn left by δ degrees.
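These four symbols are the turtle interpretation of an L-system string. The following minimal Python sketch assumes a single hypothetical production rule F → F+F−F−F+F and a turn angle δ of 90°. It expands an axiom by parallel rewriting and converts the resulting string into line segments, with the ASCII hyphen `-` standing in for the minus sign −. Branching symbols such as `[` and `]`, which plant L-systems usually add, are deliberately left out.

```python
import math

Segment = tuple[tuple[float, float], tuple[float, float]]


def expand(axiom: str, rules: dict[str, str], iterations: int) -> str:
    """Rewrite every symbol in parallel; symbols without a rule are copied unchanged."""
    word = axiom
    for _ in range(iterations):
        word = "".join(rules.get(symbol, symbol) for symbol in word)
    return word


def interpret(word: str, step: float = 1.0, delta: float = 90.0) -> list[Segment]:
    """Turtle interpretation of F, f, + and - (ASCII hyphen for the minus sign)."""
    x, y, heading = 0.0, 0.0, 90.0  # start at the origin, facing up (+y)
    segments: list[Segment] = []
    for symbol in word:
        if symbol in ("F", "f"):
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            if symbol == "F":  # only F leaves a visible line
                segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif symbol == "+":
            heading -= delta  # turn right (clockwise) by delta degrees
        elif symbol == "-":
            heading += delta  # turn left (counter-clockwise) by delta degrees
    return segments


rules = {"F": "F+F-F-F+F"}  # hypothetical example rule
word = expand("F", rules, 2)
lines = interpret(word, step=1.0, delta=90.0)
print(len(lines))
```

Each F produces five new F symbols per iteration, so the number of drawn segments grows geometrically with the iteration count. Because every symbol is rewritten at once, the model captures simultaneous growth across the whole plant.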

4.1.2. Landscapes

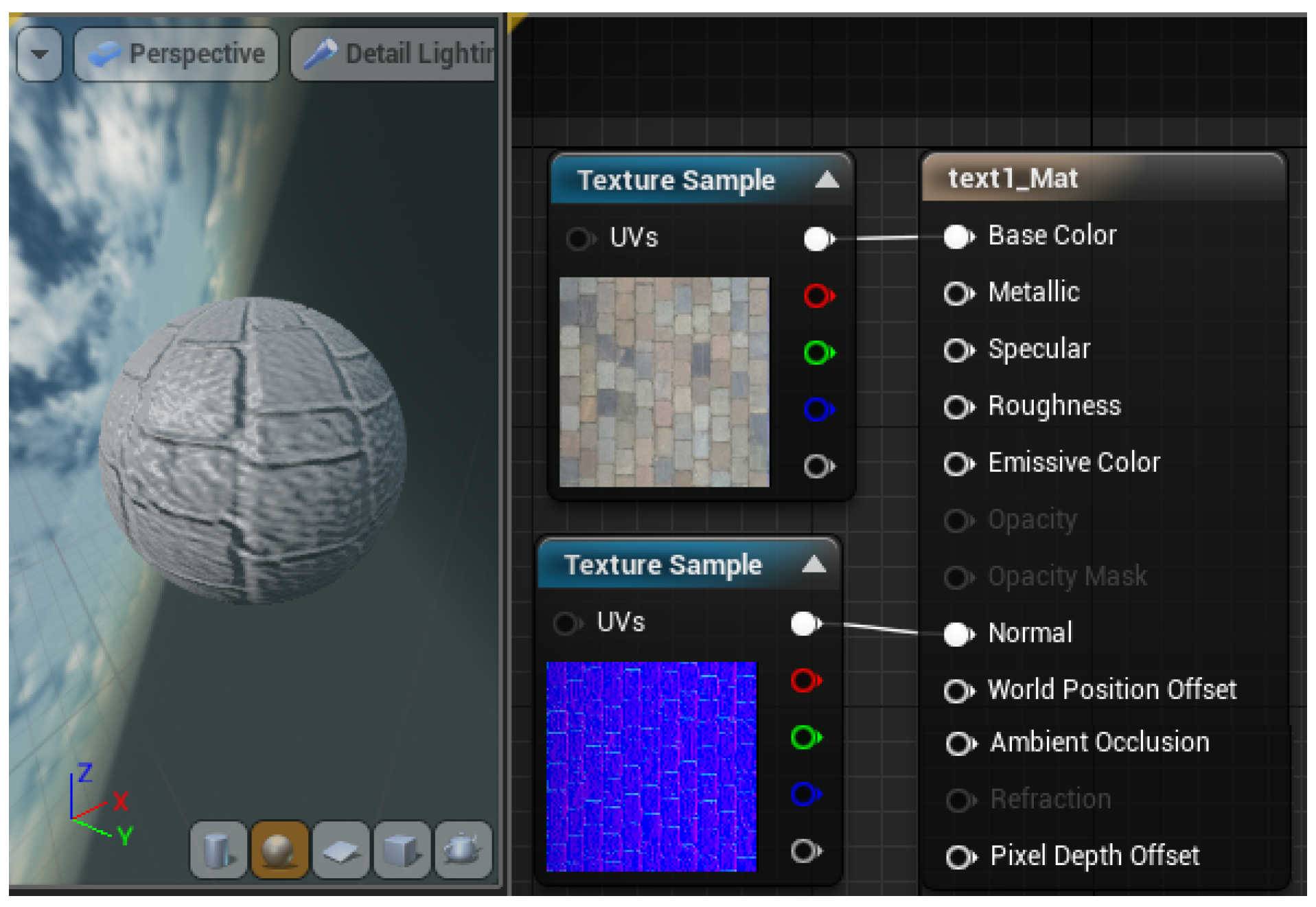

- manually drawing a texture,

- using a manually created color map to project textures to specific regions,

- generating a texture by analyzing slopes and heights of the terrain’s mesh.
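The third approach can be sketched as a rule-based classifier over a height map. In this Python sketch, the thresholds (sand at or below 5 units, snow at or above 80, rock on slopes steeper than 35°) and the texture names are hypothetical. The slope is estimated from central differences of neighboring heights. A production terrain shader would typically blend several textures using weights rather than assigning exactly one texture per cell.

```python
import math

Heightmap = list[list[float]]


def slope_degrees(height: Heightmap, x: int, z: int, cell_size: float = 1.0) -> float:
    """Slope at cell (x, z) from central differences (one-sided at the borders)."""
    rows, cols = len(height), len(height[0])
    x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
    z0, z1 = max(z - 1, 0), min(z + 1, rows - 1)
    # A single row or column has no neighbor in that direction: treat it as flat.
    dh_dx = (height[z][x1] - height[z][x0]) / ((x1 - x0) * cell_size) if x1 != x0 else 0.0
    dh_dz = (height[z1][x] - height[z0][x]) / ((z1 - z0) * cell_size) if z1 != z0 else 0.0
    return math.degrees(math.atan(math.hypot(dh_dx, dh_dz)))


def choose_texture(h: float, slope: float, beach_line: float = 5.0,
                   snow_line: float = 80.0, max_grass_slope: float = 35.0) -> str:
    """Hypothetical rule set: steep cells get rock first, then height bands decide."""
    if slope > max_grass_slope:
        return "rock"
    if h >= snow_line:
        return "snow"
    if h <= beach_line:
        return "sand"
    return "grass"


def texture_map(height: Heightmap, cell_size: float = 1.0) -> list[list[str]]:
    """Assign one texture name to every cell of the height map."""
    return [[choose_texture(height[z][x], slope_degrees(height, x, z, cell_size))
             for x in range(len(height[0]))]
            for z in range(len(height))]
```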

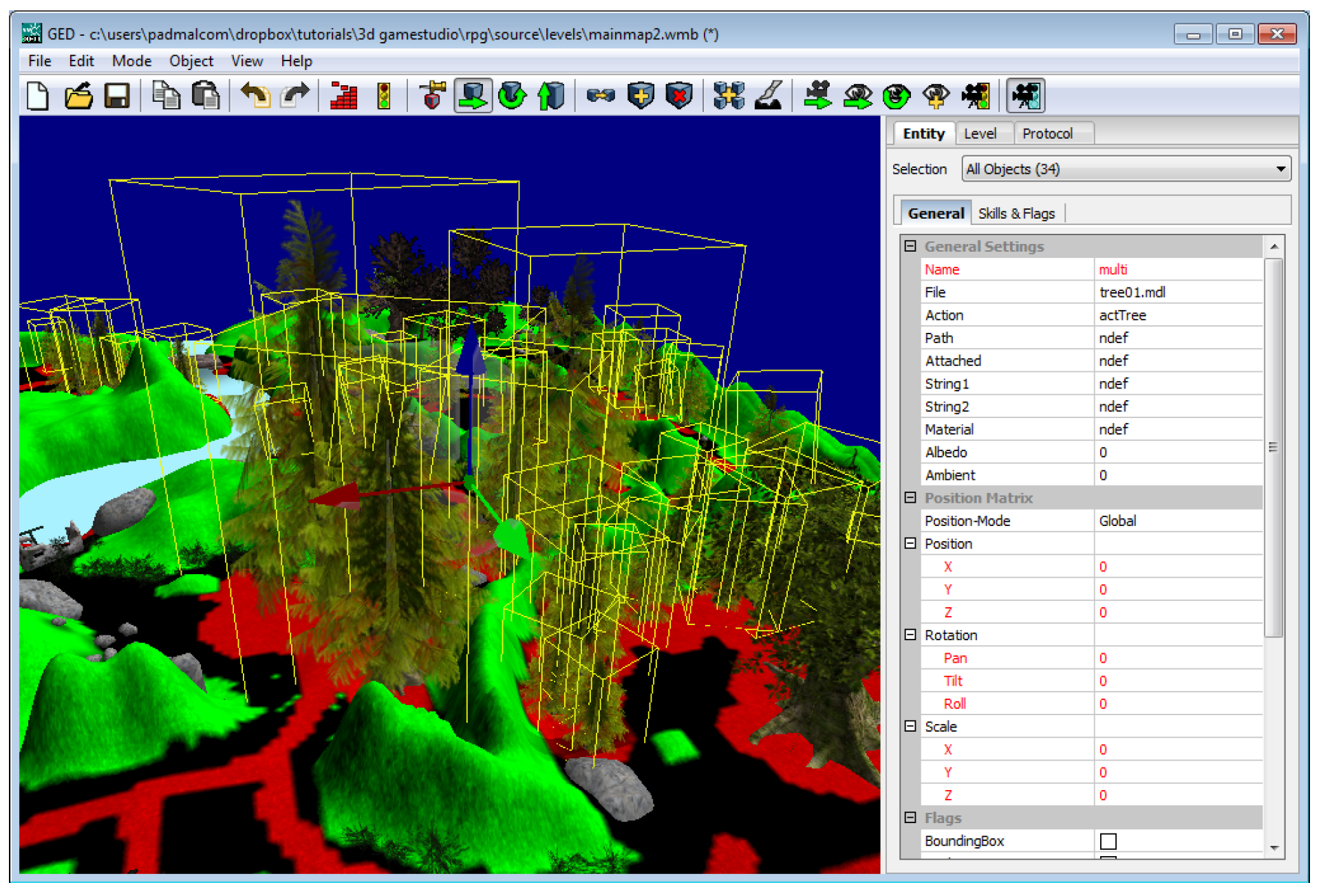

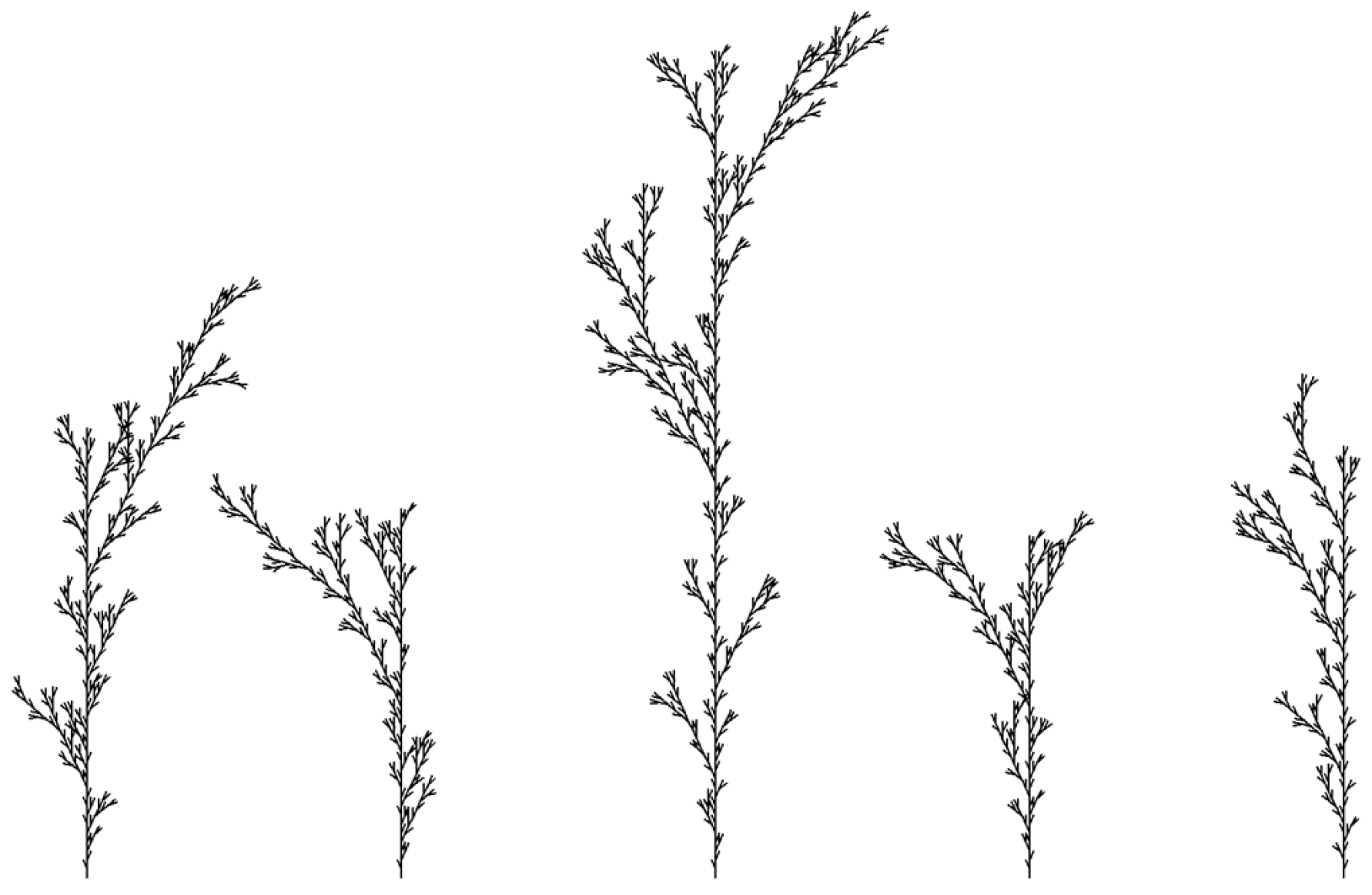

4.1.3. Placing Vegetation in a Landscape

4.1.4. Water

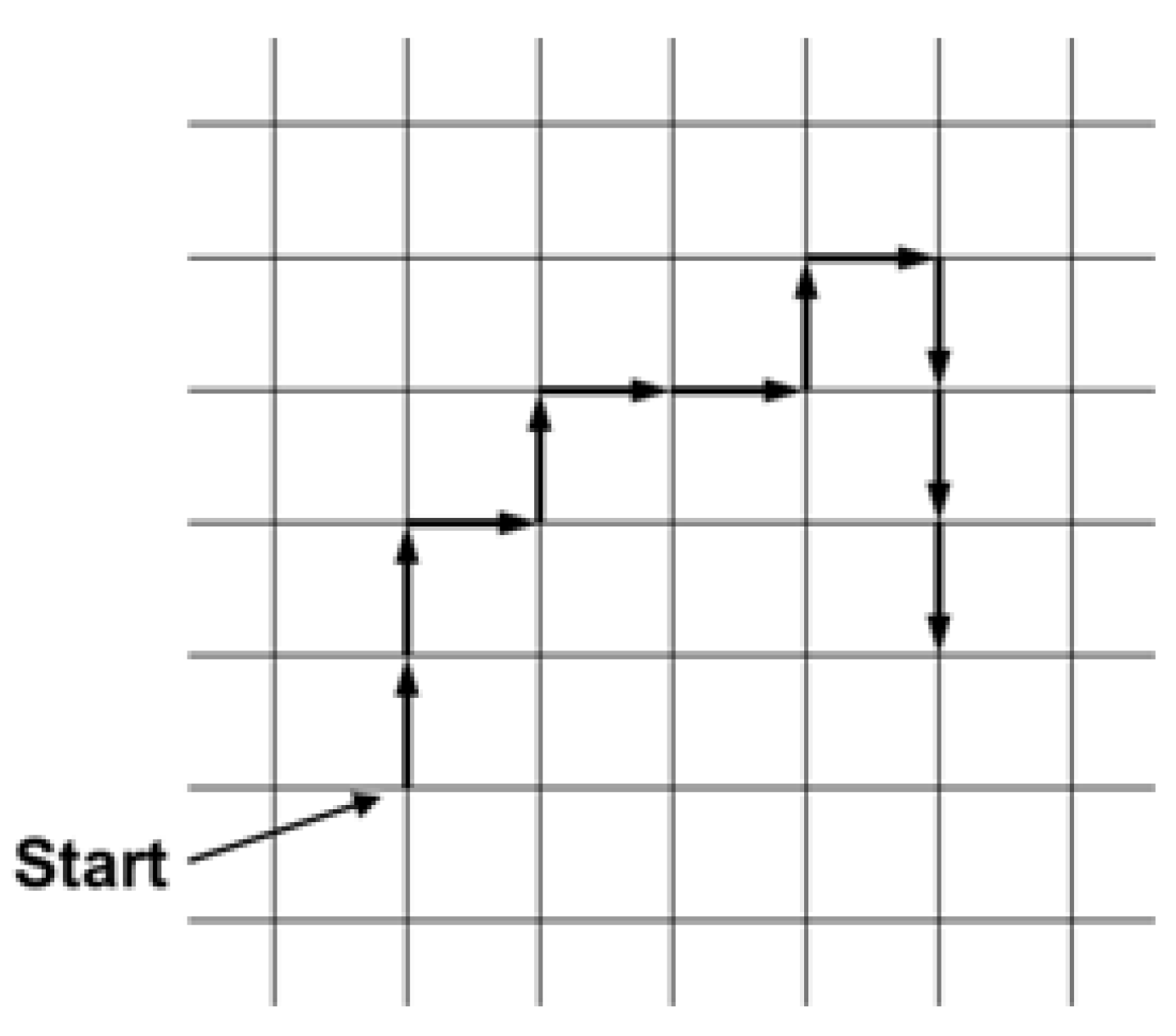

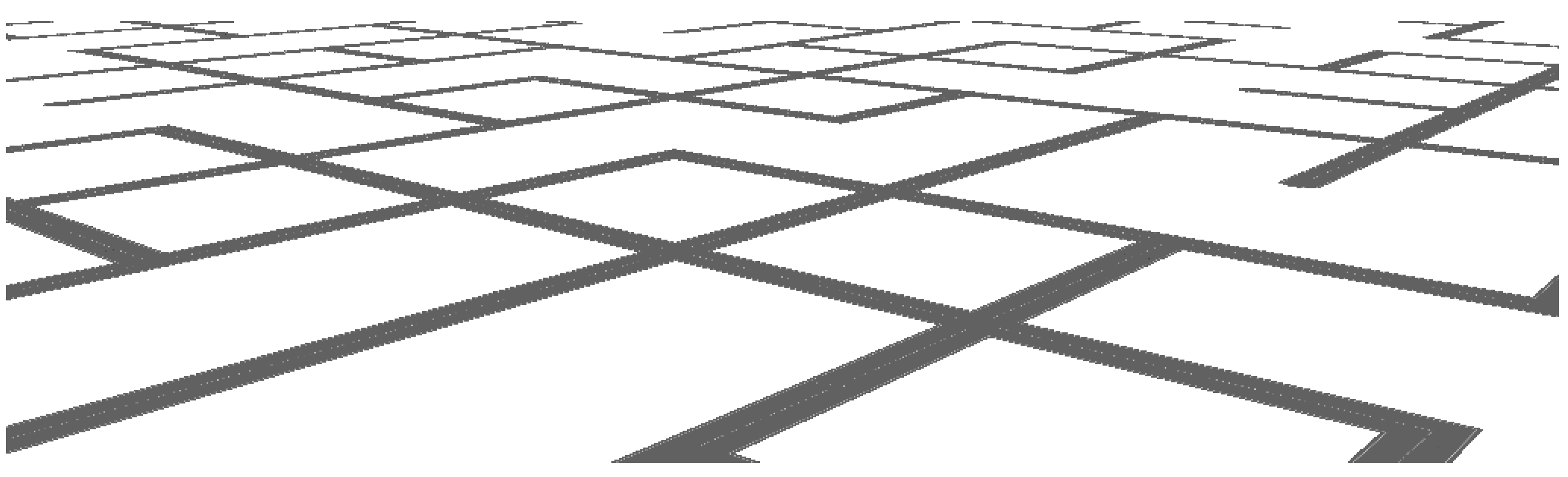

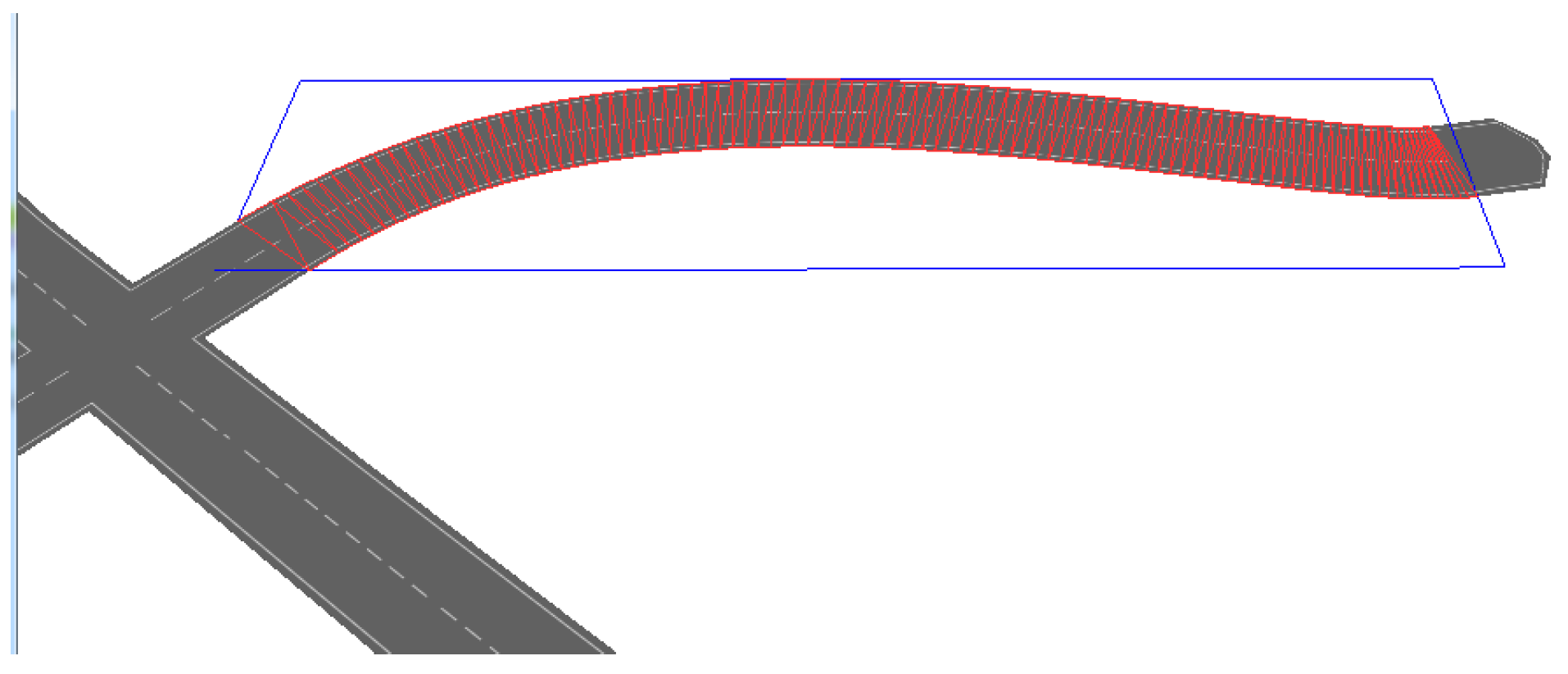

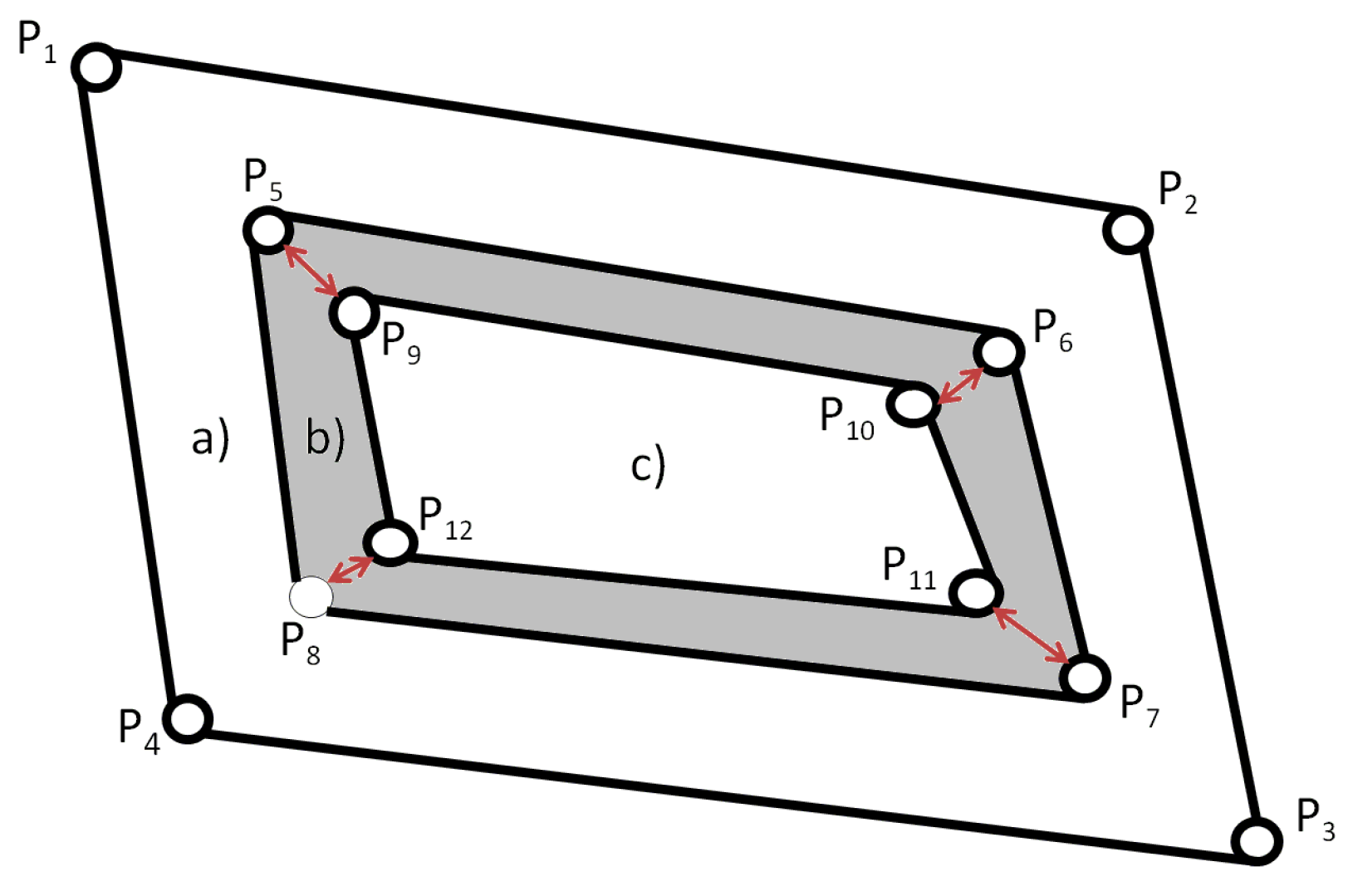

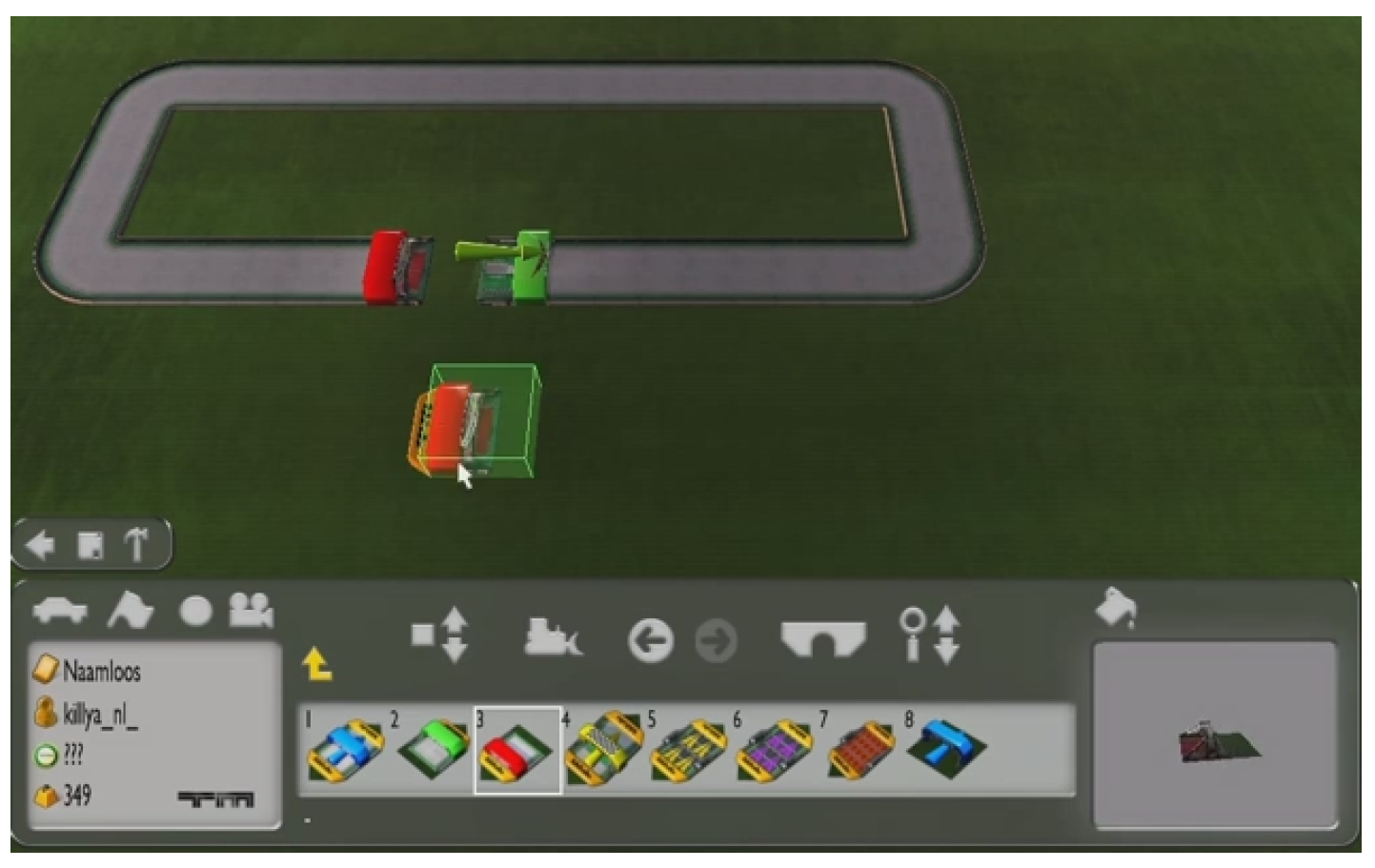

4.2. Road Networks

4.2.1. Intersecting Streets

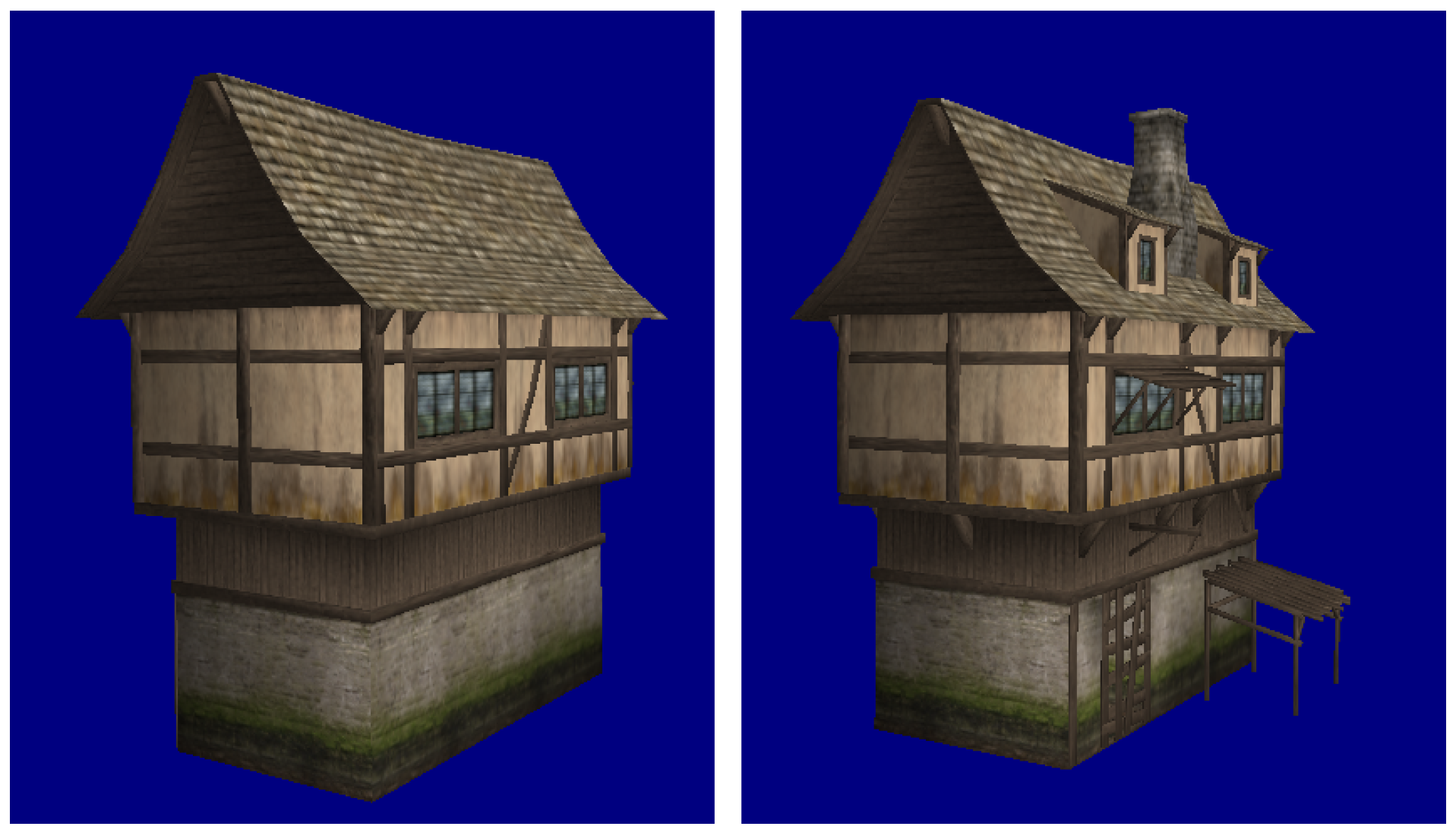

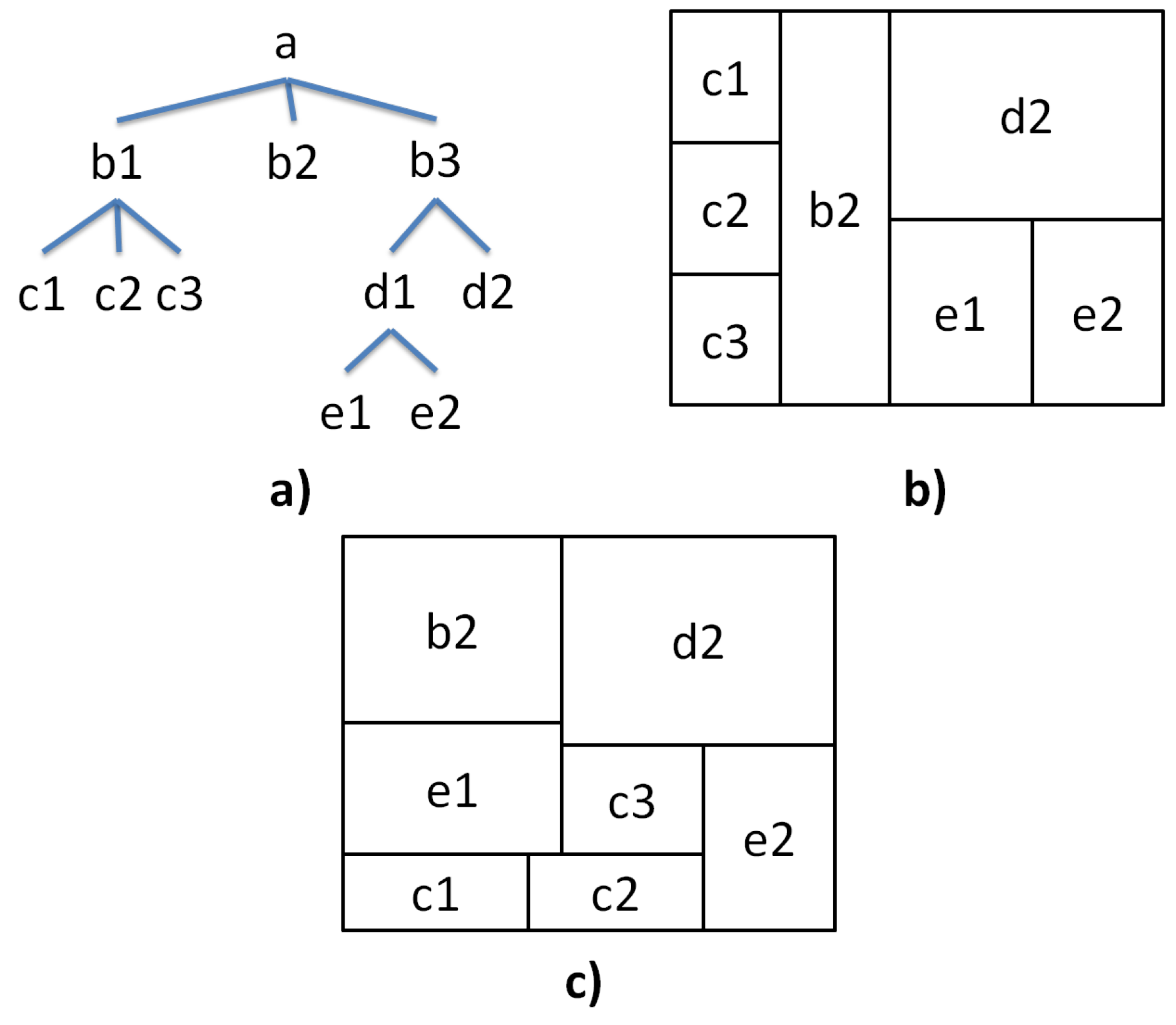

4.3. Buildings

- room arrangement on a fixed floor,

- shape and facade creation of the outer appearance of the buildings.

- the age (construction time, inhabited, renovation, decay),

- time of day or time of the year (illuminated windows and switched-on exterior lights at night or in winter, a smoking chimney in colder times of the year and open windows in summer).

- LOD0: regional, landscape,

- LOD1: city, region,

- LOD2: city districts,

- LOD3: architectural models (exterior), landmarks,

- LOD4: architectural models (including interior features).

4.3.1. Residential Buildings

4.3.2. Other Buildings

4.4. Living Beings

4.4.1. Humans

4.4.2. Creatures

- a user defines a set of variables for the creature generation (referred to as genes),

- a tool then translates these genes into a visual model,

- the model is rigged to have a skeleton ready for animation.
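The following small Python sketch mirrors these three steps. The gene set (torso segments, leg pairs, segment and leg lengths), the part naming scheme and the parent-map rig are all hypothetical simplifications. A real tool would build meshes and skin weights; here, the "visual model" is simply a list of body parts, and the rig is a joint hierarchy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Genes:
    """Step 1: user-defined variables (a hypothetical gene set)."""
    torso_segments: int
    leg_pairs: int
    segment_length: float
    leg_length: float


@dataclass
class Part:
    """Step 2: a crude visual model, one body part per entry."""
    name: str
    length: float
    attached_to: Optional[str]


def genes_to_model(genes: Genes) -> list[Part]:
    if genes.torso_segments < 1:
        raise ValueError("a creature needs at least one torso segment")
    parts: list[Part] = []
    previous: Optional[str] = None
    for i in range(genes.torso_segments):  # chain the torso segments
        parts.append(Part(f"torso_{i}", genes.segment_length, previous))
        previous = f"torso_{i}"
    for pair in range(genes.leg_pairs):  # distribute leg pairs along the torso
        host = f"torso_{pair % genes.torso_segments}"
        for side in ("left", "right"):
            parts.append(Part(f"leg_{pair}_{side}", genes.leg_length, host))
    return parts


def rig(parts: list[Part]) -> dict[str, Optional[str]]:
    """Step 3: one joint per part, mapped to its parent joint (None for the root)."""
    return {part.name: part.attached_to for part in parts}


skeleton = rig(genes_to_model(Genes(torso_segments=3, leg_pairs=2,
                                    segment_length=1.0, leg_length=0.6)))
```

Keeping the genes separate from the model means that a generator (for example, a genetic algorithm) only needs to vary a few numbers, while the translation and rigging steps remain deterministic.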

4.4.3. Simulated Motion

- goal selection,

- spatial queries,

- plan computation,

- plan adaptation.

- credibility,

- possibilities to interact.
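The four motion stages (goal selection, spatial queries, plan computation, plan adaptation) can be illustrated with a deliberately simple crowd update. This Python sketch is a toy model and does not represent any specific framework's API; all names are hypothetical. In it, goals are picked at random from a fixed list, neighbors are found by brute force, the plan is a straight-line preferred velocity, and the adaptation step adds a linear repulsion from nearby agents.

```python
import math
import random
from typing import Optional

Point = tuple[float, float]


class Agent:
    def __init__(self, x: float, y: float, speed: float = 1.0):
        self.pos: Point = (x, y)
        self.speed = speed
        self.goal: Optional[Point] = None


def select_goal(agent: Agent, goals: list[Point], rng: random.Random) -> None:
    """Stage 1: goal selection, pick a new random goal once the old one is reached."""
    if agent.goal is None or math.dist(agent.pos, agent.goal) < 1e-6:
        agent.goal = rng.choice(goals)


def spatial_query(agent: Agent, agents: list[Agent], radius: float) -> list[Agent]:
    """Stage 2: spatial query, find neighbors within `radius` (brute force)."""
    return [o for o in agents if o is not agent and math.dist(agent.pos, o.pos) < radius]


def compute_plan(agent: Agent) -> Point:
    """Stage 3: plan computation, preferred velocity straight towards the goal."""
    dx, dy = agent.goal[0] - agent.pos[0], agent.goal[1] - agent.pos[1]
    d = math.hypot(dx, dy)
    if d == 0.0:
        return (0.0, 0.0)
    s = min(agent.speed, d)  # do not overshoot the goal
    return (dx / d * s, dy / d * s)


def adapt_plan(agent: Agent, velocity: Point, neighbors: list[Agent], radius: float) -> Point:
    """Stage 4: plan adaptation, push away from each neighbor, harder when closer."""
    vx, vy = velocity
    for other in neighbors:
        dx, dy = agent.pos[0] - other.pos[0], agent.pos[1] - other.pos[1]
        d = math.hypot(dx, dy)
        if d == 0.0:
            continue  # coincident agents: no defined direction to push
        push = (radius - d) / radius
        vx, vy = vx + dx / d * push, vy + dy / d * push
    return (vx, vy)


def simulate_step(agents: list[Agent], goals: list[Point], rng: random.Random,
                  dt: float = 1.0, radius: float = 1.0) -> None:
    velocities = []
    for agent in agents:
        select_goal(agent, goals, rng)
        neighbors = spatial_query(agent, agents, radius)
        plan = compute_plan(agent)
        velocities.append(adapt_plan(agent, plan, neighbors, radius))
    for agent, (vx, vy) in zip(agents, velocities):  # update all agents synchronously
        agent.pos = (agent.pos[0] + vx * dt, agent.pos[1] + vy * dt)


rng = random.Random(42)
crowd = [Agent(0.0, 0.0), Agent(0.5, 0.0), Agent(5.0, 5.0)]
targets = [(10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
for _ in range(20):
    simulate_step(crowd, targets, rng)
```

Velocities are first computed for every agent and then applied together, so the order of agents in the list does not affect the result.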

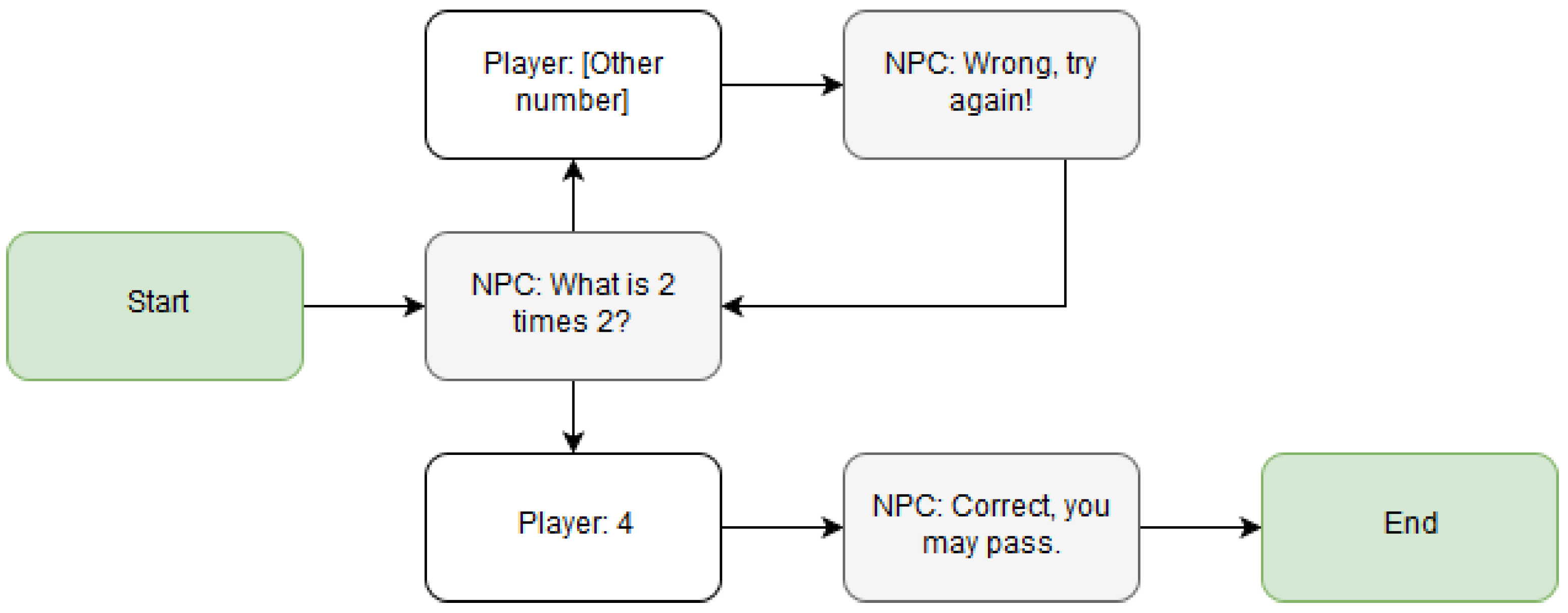

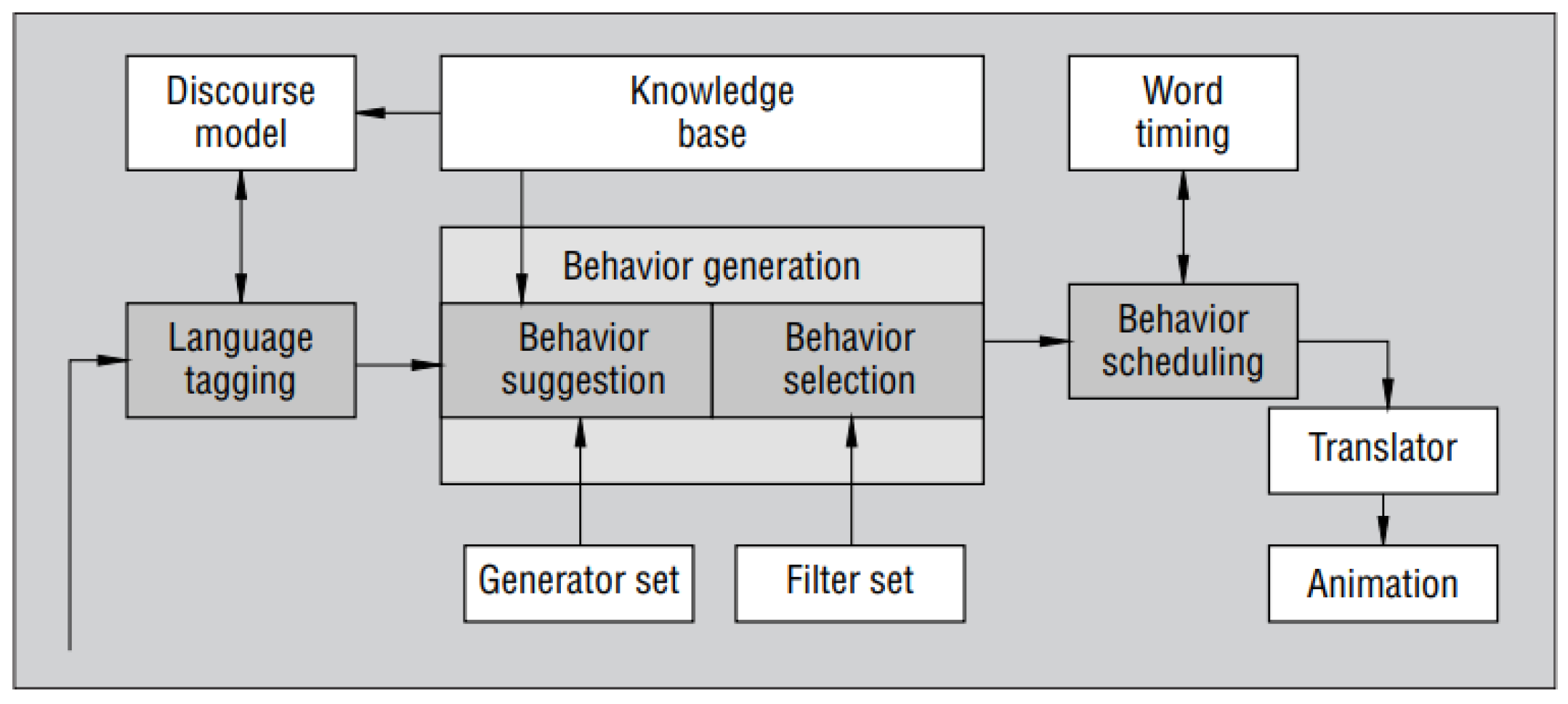

4.4.4. NPC Interaction

4.5. Procedurally Generated Stories

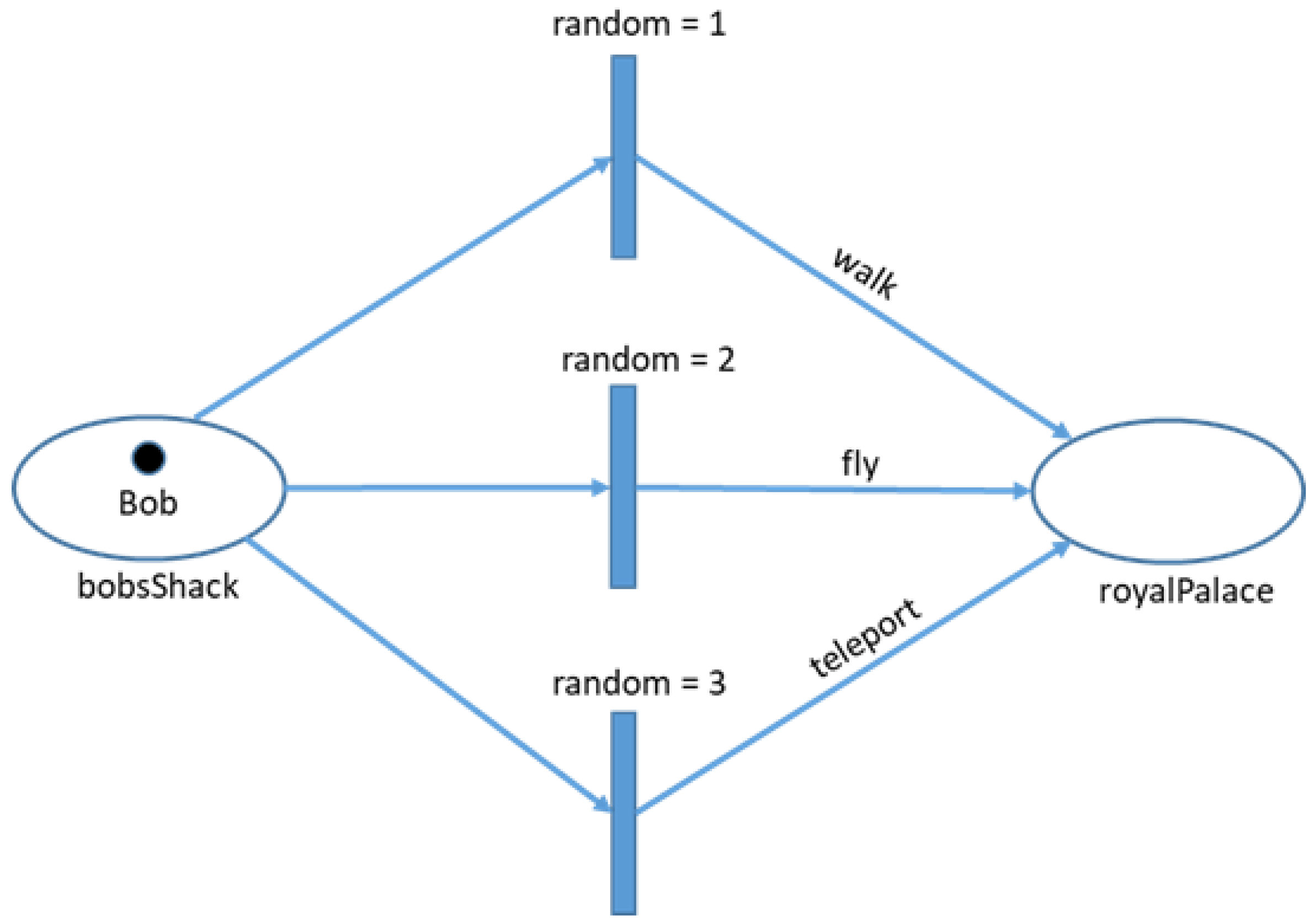

4.5.1. Planning Algorithms

- Action(walk(p: person, from: location, to: location), Precondition: At(p, from) ∧ random = 1, Effect: ¬At(p, from) ∧ At(p, to)),

- Action(fly(p: person, from: location, to: location), Precondition: At(p, from) ∧ random = 2, Effect: ¬At(p, from) ∧ At(p, to)),

- Action(telePort(p: person, from: location, to: location), Precondition: At(p, from) ∧ random = 3, Effect: ¬At(p, from) ∧ At(p, to)).
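The three schemas share the precondition At(p, from) and the effect ¬At(p, from) ∧ At(p, to). They differ only in the required value of `random`, so a randomly drawn value determines how a character travels. The following minimal Python sketch applies these STRIPS-style schemas to a world state. It makes two assumptions: facts are stored as tuples in a frozenset, and `random` is drawn uniformly from {1, 2, 3}; the distribution itself is not stated here. Note that this is not a full planner: it executes a single move rather than searching for a plan.

```python
import random

State = frozenset  # set of ground facts such as ("At", "hero", "village")

# action name -> value the random variable must take (from the schemas above)
ACTIONS = {"walk": 1, "fly": 2, "telePort": 3}


def applicable(state: State, action: str, p: str, frm: str, roll: int) -> bool:
    """Precondition: At(p, from) ∧ random = n."""
    return ("At", p, frm) in state and roll == ACTIONS[action]


def apply_effect(state: State, p: str, frm: str, to: str) -> State:
    """Effect: ¬At(p, from) ∧ At(p, to), identical for all three actions."""
    return (state - {("At", p, frm)}) | {("At", p, to)}


def travel(state: State, p: str, frm: str, to: str, rng: random.Random):
    """Draw the random value, then apply the one action whose precondition holds."""
    roll = rng.randint(1, 3)
    for action in ACTIONS:
        if applicable(state, action, p, frm, roll):
            return action, apply_effect(state, p, frm, to)
    return None, state  # At(p, from) does not hold: no action applies


start = frozenset({("At", "hero", "village")})
action, after = travel(start, "hero", "village", "castle", random.Random(7))
print(action, sorted(after))
```

Seeding the generator makes the same story reproducible, which helps with debugging and testing. Different seeds produce different ways of reaching the same narrative state.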

4.5.2. Petri Nets

- S is a set of places, marked graphically by circles,

- T is a set of transitions, marked graphically by bars,

- W: (S × T) ∪ (T × S) → ℕ is a multiset of arcs, i.e., W assigns to each arc a non-negative integer arc multiplicity (or weight); note that no arc may connect two places or two transitions. The elements of W are indicated graphically by arrows,

- M0 is an initial marking, consisting of tokens, indicated graphically by dots.
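A Petri net evolves through transition firing. A transition is enabled when each of its input places holds at least as many tokens as the corresponding arc weight, and firing it consumes those tokens and produces tokens in its output places. The Python sketch below implements this standard rule for the tuple (S, T, W, M0). The class name and the small quest fragment (key, locked door, entering a room) are hypothetical illustrations of how a story generator might track which events are currently possible.

```python
from collections import Counter


class PetriNet:
    """N = (S, T, W, M0) with W given as {(source, target): weight}."""

    def __init__(self, places, transitions, weights, initial_marking):
        self.S, self.T = set(places), set(transitions)
        for (src, dst), w in weights.items():
            # every arc must connect a place with a transition, never two of a kind
            place_to_transition = src in self.S and dst in self.T
            transition_to_place = src in self.T and dst in self.S
            if not (place_to_transition or transition_to_place) or w < 0:
                raise ValueError(f"invalid arc {src!r} -> {dst!r} (weight {w})")
        self.W = dict(weights)
        self.M = Counter(initial_marking)  # tokens per place; unlisted places hold 0

    def pre(self, t):
        """Input places of t with the number of tokens each arc consumes."""
        return {s: w for (s, d), w in self.W.items() if d == t}

    def post(self, t):
        """Output places of t with the number of tokens each arc produces."""
        return {d: w for (s, d), w in self.W.items() if s == t}

    def enabled(self, t):
        return all(self.M[s] >= w for s, w in self.pre(t).items())

    def fire(self, t):
        if not self.enabled(t):
            raise RuntimeError(f"transition {t!r} is not enabled")
        for s, w in self.pre(t).items():
            self.M[s] -= w
        for s, w in self.post(t).items():
            self.M[s] += w


quest = PetriNet(
    places={"has_key", "door_locked", "door_open", "inside"},
    transitions={"unlock", "enter"},
    weights={("has_key", "unlock"): 1, ("door_locked", "unlock"): 1,
             ("unlock", "door_open"): 1,
             ("door_open", "enter"): 1, ("enter", "door_open"): 1,
             ("enter", "inside"): 1},
    initial_marking={"has_key": 1, "door_locked": 1},
)
quest.fire("unlock")
quest.fire("enter")
```

The arc from `enter` back to `door_open` returns the consumed token, so the door stays open once the player has passed through it.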

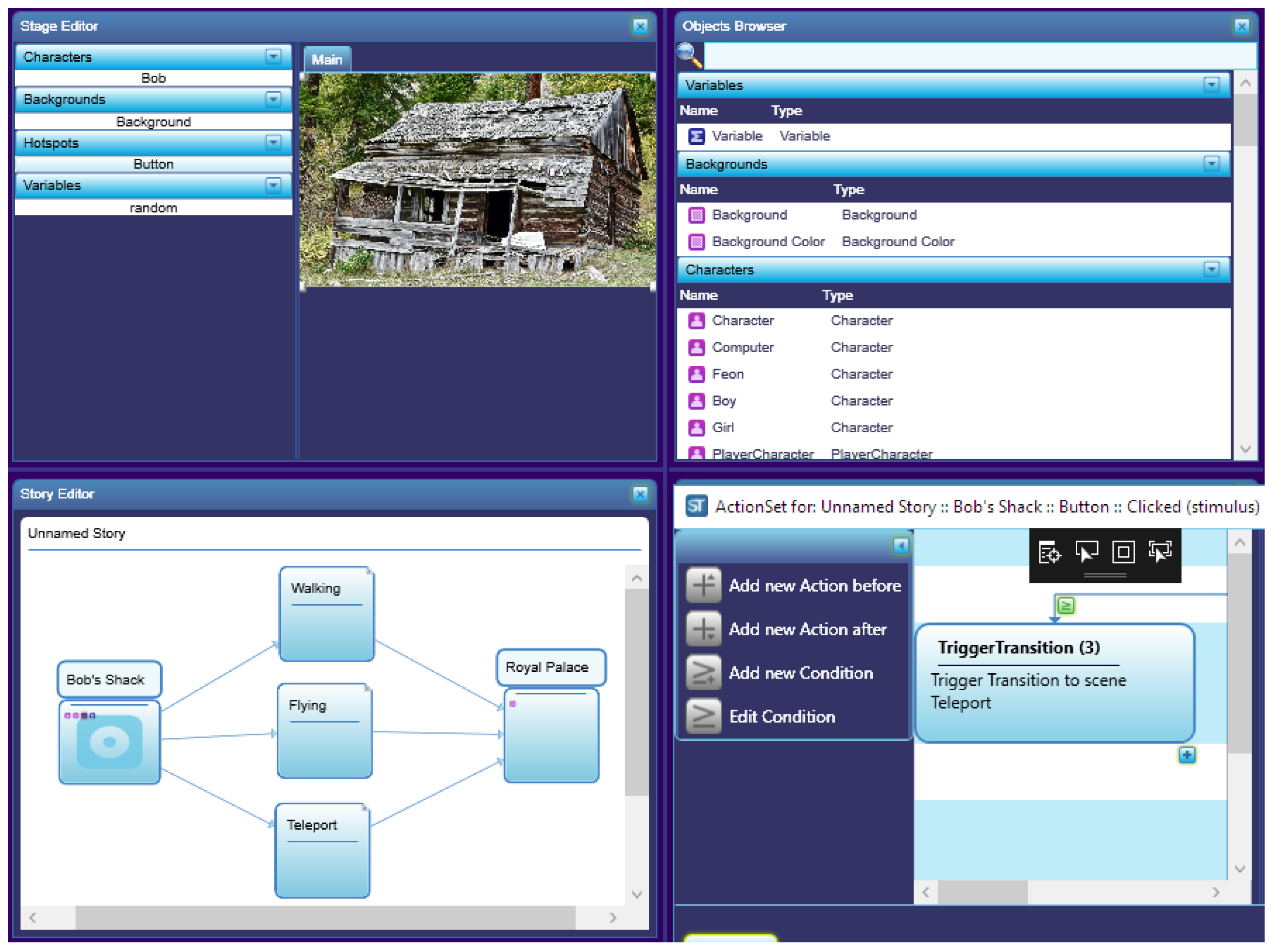

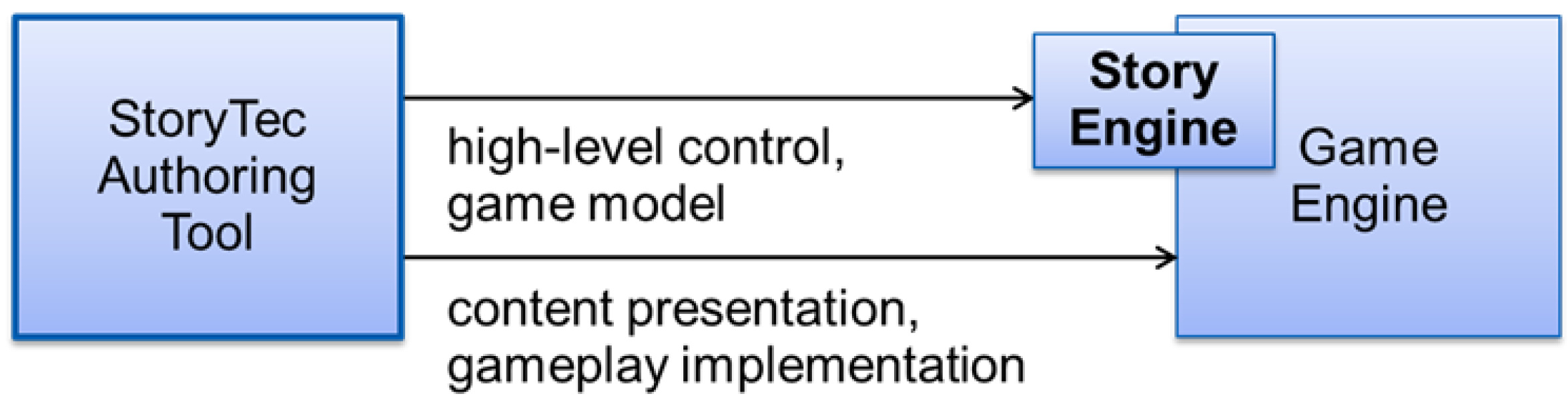

4.5.3. StoryTec

Game Structure Model

Game Logic Model

The StoryTec Editor and the StoryTec Runtime

4.5.4. The Procedural Generation of Game Content from a PCG Story

5. Conclusions

- PCG Wiki: a wiki presenting PCG in theory and practice, collecting games that use content generation algorithms, and listing related links,

- procjam.com: an online contest inviting developers to create graphical demos that explicitly make use of PCG,

- Reddit Procedural Generation: a topic on Reddit that serves as a forum for discussing and presenting projects.

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Bethesda Game Studios. The Elder Scrolls V: Skyrim; Bethesda Game Studios: Rockville, MD, USA, 2011. [Google Scholar]

- Burgess, J. Modular Level Design for Skyrim. 2013. Available online: http://blog.joelburgess.com/2013/04/skyrims-modular-level-design-gdc-2013.html (accessed on 2 October 2017).

- Maxis. The Sims 1; Maxis: Redwood Shores, CA, USA, 2000. [Google Scholar]

- Maxis. Sim City; Maxis: Redwood Shores, CA, USA, 1989. [Google Scholar]

- Bach, E.; Madsen, A. Procedural Character Generation: Implementing Reference Fitting and Principal Components Analysis. Master’s Thesis, Aalborg University, Aalborg, Denmark, 2007. [Google Scholar]

- Frontier Developments. Elite: Dangerous; Video Game; Frontier Developments: Cambridge, UK, 2014. [Google Scholar]

- Mojang. Minecraft; Video Game; Mojang: Stockholm, Sweden, 2009. [Google Scholar]

- Teuber, K. The Settlers of Catan; Franckh-Kosmos Verlags-GmbH & Co.: Stuttgart, Germany, 1995. [Google Scholar]

- Wang, M. Java Settlers Intelligente agentenbasierte Spielsysteme für intuitive Multi-Touch-Umgebungen. Ph.D. Thesis, Free University of Berlin, Berlin, Germany, 2008. [Google Scholar]

- Dörner, R.; Göbel, S.; Effelsberg, W.; Wiemeyer, J. Serious Games: Foundations, Concepts and Practice; Springer International Publishing: Gewerbestrasse, Switzerland, 2016. [Google Scholar]

- Worth, D. Beneath Apple Manor; The Software Factory/Quality Software: Los Angeles, CA, USA, 1978. [Google Scholar]

- Carreker, D. The Game Developer’s Dictionary: A Multidisciplinary Lexicon for Professionals and Students; Cengage Learning: Boston, MA, USA, 2012. [Google Scholar]

- Michael Toy, G.W. Rogue; Epyx: San Francisco, CA, USA, 1980. [Google Scholar]

- Olivetti, J. The Game Archaeologist: A Brief History of Roguelikes. 2014. Available online: https://www.engadget.com/2014/01/18/the-game-archaeologist-a-brief-history-of-roguelikes/ (accessed on 2 October 2017).

- Lee-Urban, S. Procedural Content Generation. 2016. Available online: https://www.cc.gatech.edu/~surban6/2016-cs4731/lectures/2016_06_30-ProceduralContentGeneration_intro.pdf (accessed on 2 October 2017).

- Peachey, D.R. Solid Texturing of Complex Surfaces. In Proceedings of the 12th Annual Conference on Computer Graphics and Interactive Techniques, San Francisco, CA, USA, 22–26 July 1985; ACM: New York, NY, USA, 1985; pp. 279–286. [Google Scholar]

- Musgrave, F.K.; Kolb, C.E.; Mace, R.S. The Synthesis and Rendering of Eroded Fractal Terrains. In Proceedings of the 16th Annual Conference on Computer Graphics and Interactive Techniques, Boston, MA, USA, 31 July–4 August 1989; ACM: New York, NY, USA, 1989; Volume 23, pp. 41–50. [Google Scholar]

- Oppenheimer, P.E. Real Time Design and Animation of Fractal Plants and Trees. In Proceedings of the 13th Annual Conference on Computer Graphics and Interactive Techniques, Dallas, TX, USA, 18–22 August 1986; ACM: New York, NY, USA, 1986; Volume 20, pp. 55–64. [Google Scholar]

- Ebert, D.; Musgrave, F.; Peachey, D.; Perlin, K.; Worley, S. Texturing and Modeling: A Procedural Approach; (The Morgan Kaufmann Series in Computer Graphics); Elsevier Science: Amsterdam, The Netherlands, 2002. [Google Scholar]

- Westwood Pacific. Command and Conquer: Red Alert 2; Westwood Pacific: Las Vegas, NV, USA, 2000. [Google Scholar]

- Blizzard North. Diablo; Blizzard North: San Mateo, CA, USA, 1996. [Google Scholar]

- Bethesda Softworks. The Elder Scrolls II: Daggerfall; Bethesda Softworks: Bethesda, MD, USA, 1996. [Google Scholar]

- Blender Foundation. Blender. 2017. Available online: https://www.blender.org/ (accessed on 7 October 2017).

- Autodesk. Character Generator. 2014. Available online: https://charactergenerator.autodesk.com/ (accessed on 2 October 2017).

- Mixamo. Fuse. 2014. Available online: https://www.mixamo.com/ (accessed on 7 October 2017).

- MakeHuman team. MakeHuman. 2017. Available online: http://www.makehuman.org/ (accessed on 2 October 2017).

- Farbrausch. .kkrieger. 2004. Available online: https://web.archive.org/web/20120204065621/http://www.theprodukkt.com/kkrieger (accessed on 28 October 2017).

- Digitalekultur e.V. Die Demoszene—Neue Welten im Computer. 2003. Available online: https://www.digitalekultur.org/files/dk_wasistdiedemoszene.pdf (accessed on 2 October 2017).

- Farbrausch. Werkkzeug3. 2011. Available online: https://github.com/farbrausch/fr_public (accessed on 27 October 2017).

- Compton, K.; Osborn, J.C.; Mateas, M. Generative methods. In Proceedings of the Fourth Procedural Content Generation in Games Workshop, Chania, Greece, 14–17 May 2013. [Google Scholar]

- Macri, D.; Pallister, K. Procedural 3D Content Generation. Available online: http://web.archive.org/web/20060719005301/www.intel.com/cd/ids/developer/asmo-na/eng/20247.htm (accessed on 28 October 2017).

- Smith, G. An Analog History of Procedural Content Generation. 2015. Available online: http://sokath.com/main/files/1/smith-fdg15.pdf (accessed on 29 August 2017).

- Pixologic. ZBrush. 1999. Available online: http://pixologic.com/ (accessed on 29 August 2017).

- Epic Games. Unreal Engine 4. 2014. Available online: https://www.unrealengine.com (accessed on 7 October 2017).

- Unity Technologies. Unity 2017. Available online: https://unity3d.com (accessed on 7 October 2017).

- Crytec. Cry Engine V. 2016. Available online: https://www.cryengine.com/ (accessed on 7 October 2017).

- Epic Games. Procedural Buildings. 2012. Available online: https://docs.unrealengine.com/udk/Three/ProceduralBuildings.html (accessed on 27 October 2017).

- Side Effects Software. Houdini. 2017. Available online: https://www.sidefx.com/products/houdini-core/ (accessed on 7 October 2017).

- Esri R&D Center Zurich. Esri City Engine. 2014. Available online: http://www.esri.com/software/cityengine (accessed on 7 October 2017).

- Smelik, R.M.; Tutenel, T.; de Kraker, K.J.; Bidarra, R. A declarative approach to procedural modeling of virtual worlds. Comput. Graph. 2011, 35, 352–363. [Google Scholar] [CrossRef]

- Roden, T.; Parberry, I. From artistry to automation: A structured methodology for procedural content creation. In Proceedings of the International Conference on Entertainment Computing (ICEC 2004), Eindhoven, The Netherlands, 1–3 September 2004; pp. 301–304. [Google Scholar]

- Togelius, J.; Kastbjerg, E.; Schedl, D.; Yannakakis, G.N. What is procedural content generation?: Mario on the borderline. In Proceedings of the 2nd International Workshop on Procedural Content Generation in Games, Bordeaux, France, 28 June 2011; ACM: New York, NY, USA, 2011. [Google Scholar]

- Hendrikx, M.; Meijer, S.; Van Der Velden, J.; Iosup, A. Procedural Content Generation for Games: A Survey. ACM Trans. Multimedia Comput. Commun. Appl. 2013, 9. [Google Scholar] [CrossRef]

- Togelius, J.; Shaker, N.; Nelson, M.J. Procedural Content Generation in Games: A Textbook and an Overview of Current Research/J; Togelius, J., Shaker, N., Nelson, M.J., Eds.; Springer: Berlin, Germany, 2014. [Google Scholar]

- Prusinkiewicz, P.; Lindenmayer, A. The Algorithmic Beauty of Plants; Springer Science & Business Media: Berlin, Germany, 2012. [Google Scholar]

- Finkenzeller, D. Modellierung Komplexer Gebäudefassaden in der Computergraphik. Ph.D. Thesis, Universität Karlsruhe (TH), Karlsruhe, Germany, 2008. [Google Scholar]

- Pixar Animation Studios. RenderMan. 2014. Available online: https://renderman.pixar.com/ (accessed on 8 October 2017).

- Bradbury, G.A.; Choi, I.; Amati, C.; Mitchell, K.; Weyrich, T. Frequency-based Controls for Terrain Editing. In Proceedings of the 11th European Conference on Visual Media Production, London, UK, 13–14 November 2014; ACM: New York, NY, USA. [Google Scholar] [CrossRef]

- Soto, J. Statistical testing of random number generators. In Proceedings of the 22nd National Information Systems Security Conference, Arlington, VA, USA, 18–21 October 1999; Volume 10, p. 12. [Google Scholar]

- L’Ecuyer, P. Uniform random number generators: A review. In Proceedings of the 29th Conference on Winter simulation, Atlanta, GA, USA, 7–10 December 1997; IEEE Computer Society: Washington, DC, USA, 1997; pp. 127–134. [Google Scholar]

- Gerhard, G.; Thomas, H.K.; Claus, N.; Karl-Heinz, H. OGC City Geography Markup Language (CityGML) En-coding Standard. 2012. Available online: https://portal.opengeospatial.org/files/?artifact_id=47842 (accessed on 2 October 2017).

- Chomsky, N. Three models for the description of language. IRE Trans. Inf. Theory 1956, 2, 113–124. [Google Scholar] [CrossRef]

- Dapper, T. Practical Procedural Modeling of Plants. 2003. Available online: http://www.td-grafik.de/artic/talk20030122/overview.html (accessed on 2 October 2017).

- Deussen, O.; Hanrahan, P.; Lintermann, B.; Měch, R.; Pharr, M.; Prusinkiewicz, P. Realistic Modeling and Rendering of Plant Ecosystems. In Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques, Orlando, FL, USA, 19–24 July 1998; ACM: New York, NY, USA, 1998; pp. 275–286. [Google Scholar]

- Prusinkiewicz, P.; Hanan, J.; Hammel, M.; Mech, R.; Room, P.; Remphrey, W. Plants to Ecosystems: Advances in Computational Life Sciences; CSIRO: Clayton, Australia, 1997; pp. 1–134. [Google Scholar]

- Měch, R.; Prusinkiewicz, P. Visual models of plants interacting with their environment. In Proceedings of the 23rd Annual Conference on Computer Graphics And Interactive Techniques, New Orleans, LA, USA, 4–9 August 1996; ACM: New York, NY, USA, 1996; pp. 397–410. [Google Scholar]

- Sorrensen-Cothern, K.A.; Ford, E.D.; Sprugel, D.G. A model of competition incorporating plasticity through modular foliage and crown development. Ecol. Monogr. 1993, 63, 277–304. [Google Scholar] [CrossRef]

- Chen, X.; Neubert, B.; Xu, Y.Q.; Deussen, O.; Kang, S.B. Sketch-based Tree Modeling Using Markov Random Field. ACM Trans. Graph. 2008, 27. [Google Scholar] [CrossRef]

- Okabe, M.; Owada, S.; Igarash, T. Interactive Design of Botanical Trees using Freehand Sketches and Example-based Editing. Comput. Graph. Forum. 2005, 24, 487–496. [Google Scholar] [CrossRef]

- Reche-Martinez, A.; Martin, I.; Drettakis, G. Volumetric reconstruction and interactive rendering of trees from photographs. In Proceedings of the ACM SIGGRAPH 2004, Los Angeles, CA, USA, 8–12 August 2004; ACM: New York, NY, USA, 2004; Volume 23, pp. 720–727. [Google Scholar]

- Shlyakhter, I.; Rozenoer, M.; Dorsey, J.; Teller, S. Reconstructing 3D tree models from instrumented photographs. IEEE Comput. Graph. Appl. 2001, 21, 53–61. [Google Scholar] [CrossRef]

- Tan, P.; Zeng, G.; Wang, J.; Kang, S.B.; Quan, L. Image-based tree modeling. In Proceedings of the ACM SIGGRAPH 2007, San Diego, CA, USA, 5–9 August 2007; ACM: New York, NY, USA, 2007; Volume 26, p. 87. [Google Scholar]

- Mandelbrot, B.B.; Pignoni, R. The Fractal Geometry of Nature; WH Freeman: New York, NY, USA, 1983; Volume 173. [Google Scholar]

- Greenworks Organic Software. Xfrog. 2017. Available online: http://xfrog.com/ (accessed on 2 October 2017).

- Interactive Data Visualization. Speed Tree. 2015. Available online: https://speedtree.com (accessed on 2 October 2017).

- Kim, J. Modeling and optimization of a tree based on virtual reality for immersive virtual landscape generation. Symmetry 2016, 8. [Google Scholar] [CrossRef]

- Wong, S.K.; Chen, K.C. A Procedural Approach to Modelling Virtual Climbing Plants with Tendrils. Comput. Graph. Forum. 2016, 35, 5–18. [Google Scholar] [CrossRef]

- Stava, O.; Pirk, S.; Kratt, J.; Chen, B.; Mźch, R.; Deussen, O.; Benes, B. Inverse Procedural Modelling of Trees. Comput. Graph. Forum 2014, 33, 118–131. [Google Scholar] [CrossRef]

- Smelik, R.M.; De Kraker, K.J.; Tutenel, T.; Bidarra, R.; Groenewegen, S.A. A Survey of Procedural Methods for Terrain Modelling. Available online: http://cg.its.tudelft.nl/~rafa/myPapers/bidarra.3AMIGAS.RS.pdf (accessed on 2 October 2017).

- Freiknecht, J. Terrain Tutorial Using Shade-C. 2011. Available online: http://www.jofre.de/Transport/Terrain%20Tutorial%20using%20ShadeC.pdf (accessed on 2 October 2017).

- Matthews, E.A.; Malloy, B.A. Incorporating Coherent Terrain Types into Story-Driven Procedural Maps. Available online: http://meaningfulplay.msu.edu/proceedings2012/mp2012_submission_41.pdf (accessed on 2 October 2017).

- Perlin, K. Improving noise. In Proceedings of the ACM SIGGRAPH 2002, San Antonio, TX, USA, 23–26 July 2002; ACM: New York, NY, USA, 2002; Volume 21, pp. 681–682. [Google Scholar]

- Prusinkiewicz, P.; Hammel, M. A Fractal Model of Mountains and Rivers. Available online: http://algorithmicbotany.org/papers/mountains.gi93.pdf (accessed on 2 October 2017).

- Belhadj, F.; Audibert, P. Modeling Landscapes with Ridges and Rivers: Bottom Up Approach. In Proceedings of the 3rd International Conference on Computer Graphics and Interactive Techniques in Australasia and South East Asia, Dunedin, New Zealand, 30 November–2 December 2005; ACM: New York, NY, USA, 2005; pp. 447–450. [Google Scholar]

- Miller, G.S. The definition and rendering of terrain maps. In Proceedings of the 13th Annual Conference on Computer Graphics and Interactive Techniques, Dallas, TX, USA, 18–22 August 1986; ACM: New York, NY, USA, 1986; Volume 20, pp. 39–48. [Google Scholar]

- Olsen, J. Realtime Procedural Terrain Generation. 2004. Available online: http://web.mit.edu/cesium/Public/terrain.pdf (accessed on 2 October 2017).

- Kahoun, M. Realtime Library for Procedural Generation and Rendering of Terrains. Ph.D. Thesis, Charles University, Prague, Czech Republic, 2013. [Google Scholar]

- Duchaineau, M.; Wolinsky, M.; Sigeti, D.E.; Miller, M.C.; Aldrich, C.; Mineev-Weinstein, M.B. ROAMing terrain: Real-time Optimally Adapting Meshes. In Proceedings of the Visualization ’97, Phoenix, AZ, USA, 18–24 October 1997; pp. 81–88. [Google Scholar]

- Lee, J.; Jeong, K.; Kim, J. MAVE: Maze-based immersive virtual environment for new presence and experience. Comput. Animat. Virtual Worlds 2017, 28. [Google Scholar] [CrossRef]

- Benes, B.; Forsbach, R. Layered Data Representation for Visual Simulation of Terrain Erosion. In Proceedings of the 17th Spring Conference on Computer Graphics (SCCG ’01), Budmerice, Slovakia, 25–28 April 2001; IEEE Computer Society: Washington, DC, USA, 2001; p. 80. [Google Scholar]

- Santamaría-Ibirika, A.; Cantero, X.; Salazar, M.; Devesa, J.; Santos, I.; Huerta, S.; Bringas, P.G. Procedural approach to volumetric terrain generation. Vis. Comput. 2014, 30, 997–1007. [Google Scholar] [CrossRef]

- Cui, J.; Chow, Y.W.; Zhang, M. A Voxel-Based Octree Construction Approach for Procedural Cave Generation. 2011. Available online: http://ro.uow.edu.au/cgi/viewcontent.cgi?article=10948&context=infopapers (accessed on 2 October 2017).

- Boggus, M.; Crawfis, R. Procedural Creation of 3D Solution Cave Models. 2009. Available online: ftp://ftp.cse.ohio-state.edu/pub/tech-report/2009/TR19.pdf (accessed on 2 October 2017).

- Hammes, J. Modeling of Ecosystems as a Data Source for Real-Time Terrain Rendering. In Proceedings of the Digital Earth Moving: First International Symposium, DEM 2001, Manno, Switzerland, 5–7 September 2001; Westort, C.Y., Ed.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 98–111. [Google Scholar]

- Alsweis, M.; Deussen, O. Wang-tiles for the simulation and visualization of plant competition. In Advances in Computer Graphics; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–11. [Google Scholar]

- Berger, U.; Hildenbrandt, H.; Grimm, V. Towards a standard for the individual-based modeling of plant populations: self-thinning and the field-of-neighborhood approach. Nat. Resour. Model. 2002, 15, 39–54. [Google Scholar] [CrossRef]

- Wang, H. Proving theorems by pattern recognition I. Commun. ACM. 1960, 3, 220–234. [Google Scholar] [CrossRef]

- Deussen, O.; Lintermann, B. Digital Design of Nature: Computer Generated Plants and Organics, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Huijser, R.; Dobbe, J.; Bronsvoort, W.F.; Bidarra, R. Procedural Natural Systems for Game Level Design. Available online: https://graphics.tudelft.nl/Publications-new/2010/HDBB10a/HDBB10a.pdf (accessed on 2 October 2017).

- Derzapf, E.; Ganster, B.; Guthe, M.; Klein, R. River Networks for Instant Procedural Planets. Comput. Graph. Forum 2011, 30, 2031–2040. [Google Scholar] [CrossRef]

- Doran, J.; Parberry, I. Controlled procedural terrain generation using software agents. IEEE Trans. Comput. Intell. AI Games 2010, 2, 111–119. [Google Scholar] [CrossRef]

- Loopix. Mystymood. 2016. Available online: http://www.loopix-project.com/ (accessed on 27 October 2017).

- Ilangovan, P.K. Procedural City Generator. Master’s Thesis, Bournemouth University, Bournemouth, Dorset, England, 2009. [Google Scholar]

- Felix Queißner, M.K.; Freiknecht, J. TUST Scripting Library. 2013. Available online: https://github.com/MasterQ32/TUST (accessed on 3 October 2017).

- Banf, M.; Barth, M.; Schulze, H.; Koch, J.; Pritzkau, A.; Schmidt, M.; Daraban, A.; Meister, S.; Sandhöfer, R.; Sotke, V.; et al. On-demand creation of procedural cities. In Game and Entertainment Technologies; IADIS Press: Freiburg, Germany, 2010. [Google Scholar]

- Kelly, G.; McCabe, H. Citygen: An Interactive System for Procedural City Generation. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.688.7603&rep=rep1&type=pdf (accessed on 3 October 2017).

- Parish, Y.I.; Müller, P. Procedural modeling of cities. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 12–17 August 2001; ACM: New York, NY, USA, 2001; pp. 301–308. [Google Scholar]

- Nadeo. TrackMania Original; Nadeo: Issy-les-Moulineaux, France, 2003. [Google Scholar]

- Namco. Ridge Racer 64; Namco: Tokio, Japan, 1999. [Google Scholar]

- Acclaim Entertainment. Re-Volt; Acclaim Entertainment: Glen Cove, NY, USA, 1999. [Google Scholar]

- Taplin, J. Simulation Models of Traffic Flow. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.113.1933&rep=rep1&type=pdf (accessed on 3 October 2017).

- Fritzsche, H.T. A model for traffic simulation. Traffic Eng. Control 1994, 35, 317–321. [Google Scholar]

- Sewall, J.; Wilkie, D.; Merrell, P.; Lin, M.C. Continuum Traffic Simulation. Comput. Graph. Forum 2010, 27, 439–448. [Google Scholar] [CrossRef]

- Brenner, C. Towards Fully Automatic Generation of City Models. 2000. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.17.4549&rep=rep1&type=pdf (accessed on 3 October 2017).

- Saldana, M.; Johanson, C. Procedural modeling for rapid-prototyping of multiple building phases. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 5. [Google Scholar] [CrossRef]

- Birch, P.J.; Browne, S.P.; Jennings, V.J.; Day, A.M.; Arnold, D.B. Rapid Procedural-modelling of Architectural Structures. In Proceedings of the 2001 Conference on Virtual Reality, Archeology, and Cultural Heritage, Athens, Greece, 28–30 November 2001; ACM: New York, NY, USA, 2001; pp. 187–196. [Google Scholar]

- Martin, J. Algorithmic Beauty of Buildings Methods for Procedural Building Generation. Available online: http://digitalcommons.trinity.edu/cgi/viewcontent.cgi?article=1003&context=compsci_honors (accessed on 3 October 2017).

- Stocker, J. BuildR2. 2003. Available online: http://support.jasperstocker.com/buildr2/ (accessed on 3 October 2017).

- Ibele, T. Building Generator. 2009. Available online: http://tysonibele.com/Main/BuildingGenerator/buildingGen.htm (accessed on 3 October 2017).

- Podevyn, M. Developing an Organisational Framework for Sustaining Virtual City Models. Ph.D. Thesis, Northumbria University, Newcastle upon Tyne, England, 2013. [Google Scholar]

- Merrell, P.; Schkufza, E.; Koltun, V. Computer-generated residential building layouts. In Proceedings of the ACM SIGGRAPH Asia 2010, Seoul, Korea, 15–18 December 2010; ACM: New York, NY, USA, 2010; Volume 29, p. 181. [Google Scholar]

- Müller, P.; Wonka, P.; Haegler, S.; Ulmer, A.; Van Gool, L. Procedural modeling of buildings. In Proceedings of the ACM SIGGRAPH 2006, Boston, MA, USA, 30 July–3 August 2006; ACM: New York, NY, USA, 2006; Volume 25, pp. 614–623. [Google Scholar]

- Bruls, M.; Huizing, K.; van Wijk, J.J. Squarified Treemaps. In Data Visualization 2000, Proceedings of the Joint EUROGRAPHICS and IEEE TCVG Symposium on Visualization in Amsterdam, The Netherlands, 29–30 May 2000; de Leeuw, W.C., van Liere, R., Eds.; Springer: Vienna, Australia, 2000; pp. 33–42. [Google Scholar]

- Johnson, B.; Shneiderman, B. Tree-maps: A space-filling approach to the visualization of hierarchical information structures. In Proceedings of the 2nd Conference on Visualization’91, San Diego, CA, USA, 22–25 October 1991; pp. 284–291. [Google Scholar]

- Mirahmadi, M.; Shami, A. A Novel Algorithm for Real-Time Procedural Generation of Building Floor Plans. arXiv. 2012. Available online: https://arxiv.org/abs/1211.5842 (accessed on 3 October 2017).

- Lopes, R.; Tutenel, T.; Smelik, R.M.; De Kraker, K.J.; Bidarra, R. A Constrained Growth Method for Procedural Floor Plan Generation. Available online: https://graphics.tudelft.nl/Publications-new/2010/LTSDB10a/LTSDB10a.pdf (accessed on 3 October 2017).

- Merrell, P.; Schkufza, E.; Li, Z.; Agrawala, M.; Koltun, V. Interactive furniture layout using interior design guidelines. In Proceedings of the ACM SIGGRAPH 2011, Vancouver, BC, Canada, 7–11 August 2011; ACM: New York, NY, USA, 2011; Volume 30, p. 87. [Google Scholar]

- Wonka, P.; Wimmer, M.; Sillion, F.; Ribarsky, W. Instant Architecture; ACM: New York, NY, USA, 2003; Volume 22. [Google Scholar]

- Whitehead, J. Toward proccedural decorative ornamentation in games. In Proceedings of the 2010 Workshop on Procedural Content Generation in Games, Monterey, CA, USA, 19–21 June 2010; ACM: New York, NY, USA, 2010. [Google Scholar]

- Barreto, N.; Roque, L. A Survey of Procedural Content Generation tools in Video Game Creature Design. In Proceedings of the Second Conference on Computation Communication Aesthetics and X, Porto, Portugal, 26–27 June 2014. [Google Scholar]

- UMA Steering Group. UMA—Unity Multipurpose Avatar. 2017. Available online: https://www.assetstore.unity3d.com/en/#!/content/13930 (accessed on 3 October 2017).

- Maxis. Spore; Maxis: Redwood Shores, CA, USA, 2008. [Google Scholar]

- Hecker, C.; Raabe, B.; Enslow, R.W.; DeWeese, J.; Maynard, J.; van Prooijen, K. Real-time Motion Retargeting to Highly Varied User-created Morphologies. Available online: http://chrishecker.com/images/c/cb/Sporeanim-siggraph08.pdf (accessed on 3 October 2017).

- Hudson, J. Creature Generation Using Genetic Algorithms and Auto-Rigging. Ph.D. Thesis, Bournemouth University, Poole, Dorset, UK, 2013. [Google Scholar]

- Anderson, E.F. Playing Smart-Artificial Intelligence in Computer Games. 2003. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.108.8170&rep=rep1&type=pdf (accessed on 3 October 2017).

- Curtis, S.; Best, A.; Manocha, D. Menge: A modular framework for simulating crowd movement. Collect. Dyn. 2016, 1, 1–40. [Google Scholar] [CrossRef]

- Rockstar Games. Grand Theft Auto III; Rockstar Games: New York, NY, USA, 2001. [Google Scholar]

- Szymanezyk, O.; Dickinson, P.; Duckett, T. From Individual Characters to Large Crowds: Augmenting the Believability of Open-World Games through Exploring Social Emotion in Pedestrian Groups. In Proceedings of the DiGRA 2011 Conference: Think Design Play, Hilversum, The Netherlands, 14–17 September 2011. [Google Scholar]

- Lee, S. The Effect of RPG Newness, Rating, and Character Evilness on The NPC Believability; Michigan State University: East Lansing, MI, USA, 2009. [Google Scholar]

- Horswill, I.D. Lightweight Procedural Animation With Believable Physical Interactions. IEEE Trans. Comput. Intell. AI Games 2009, 1, 39–49. [Google Scholar] [CrossRef]

- Karim, A.A.; Gaudin, T.; Meyer, A.; Buendia, A.; Bouakaz, S. Procedural locomotion of multilegged characters in dynamic environments. Comput. Anim. Virtual Worlds 2013, 24, 3–15. [Google Scholar] [CrossRef]

- Traum, D. Computational Approaches to Dialogue. In The Routledge Handbook of Language and Dialogue; Taylor & Francis: Abingdon, UK, 2017; p. 143. [Google Scholar]

- Strong, C.R.; Mateas, M. Talking with NPCs: Towards Dynamic Generation of Discourse Structures. Available online: http://www.aaai.org/Papers/AIIDE/2008/AIIDE08-019.pdf (accessed on 3 October 2017).

- MacNamee, B.; Cunningham, P. Creating socially interactive no-player characters: The μ-SIV system. Int. J. Intell. Games Simul. 2003, 2, 28–35. [Google Scholar]

- Nevigo. Articy:draft. 2017. Available online: https://www.nevigo.com/en/articydraft/overview/ (accessed on 27 October 2017).

- Brom, C.; Poch, T.; Sery, O. AI level of detail for really large worlds. Game Programm. Gems 2010, 8, 213–231. [Google Scholar]

- Cassell, J.; Vilhjálmsson, H.H.; Bickmore, T. Beat: The behavior expression animation toolkit. In Proceedings of the 28th Annual Conference on Computer Graphics And Interactive Techniques, Los Angeles, CA, USA, 12–17 August 2001; ACM: New York, NY, USA, 2001; pp. 477–486. [Google Scholar]

- Gratch, J.; Rickel, J.; André, E.; Cassell, J.; Petajan, E.; Badler, N. Creating interactive virtual humans: Some assembly required. IEEE Intell. Syst. 2002, 17, 54–63. [Google Scholar] [CrossRef]

- De Carolis, B.; Pelachaud, C.; Poggi, I.; Steedman, M. APML, a markup language for believable behavior generation. In Life-Like Characters; Springer: Berlin/Heidelberg, Germany, 2004; pp. 65–85. [Google Scholar]

- Bickmore, T.W.; Picard, R.W. Establishing and maintaining long-term human-computer relationships. ACM Trans. Comput.-Hum. Interact. (TOCHI) 2005, 12, 293–327. [Google Scholar] [CrossRef]

- Miyamoto, S. The Legend of Zelda; Nintendo: Kyoto, Japan, 1986. [Google Scholar]

- Shaker, N.; Togelius, J.; Nelson, M.J. Procedural Content Generation in Games; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Mehm, F. Authoring of Adaptive Single-Player Educational Games. In PIK-Praxis der Informationsverarbeitung und Kommunikation; De Gruyter: Berlin, Germany, 2014; Volume 37, pp. 157–160. [Google Scholar]

- Ashmore, C.; Nitsche, M. The Quest in a Generated World. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.70.9841&rep=rep1&type=pdf (accessed on 3 October 2017).

- Hartsook, K.; Zook, A.; Das, S.; Riedl, M.O. Toward supporting stories with procedurally generated game worlds. In Proceedings of the 2011 IEEE Conference on Computational Intelligence and Games (CIG), Seoul, Korea, 31 August–3 September 2011; pp. 297–304. [Google Scholar]

- Sullivan, A.; Mateas, M.; Wardrip-Fruin, N. Making quests playable: Choices, CRPGs, and the Grail framework. Leonardo Electron. Almanac 2012, 17. [Google Scholar] [CrossRef]

- Dormans, J.; Bakkes, S. Generating missions and spaces for adaptable play experiences. IEEE Trans. Comput. Intell. AI Games 2011, 3, 216–228. [Google Scholar] [CrossRef]

- Colton, S.; Wiggins, G.A. Computational creativity: The final frontier? In Proceedings of the 20th European Conference on Artificial Intelligence, Montpellier, France, 27–31 August 2012; pp. 21–26. [Google Scholar]

- Liapis, A.; Yannakakis, G.N.; Togelius, J. Computational Game Creativity. Available online: http://julian.togelius.com/Liapis2014Computational.pdf (accessed on 3 October 2017).

- Pednault, E.P. Formulating multiagent, dynamic-world problems in the classical planning framework. In Reasoning about Actions and Plans; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1987; pp. 47–82. [Google Scholar]

- Riedl, M.; Young, R. Character-focused narrative generation for execution in virtual worlds. In Proceedings of the International Conference on Virtual Storytelling. Using Virtual Reality Technologies for Storytelling, Toulouse, France, 20–21 November 2003; pp. 47–56.

- Riedl, M.O.; Young, R.M. Narrative planning: Balancing plot and character. J. Artif. Intell. Res. 2010, 39, 217–268.

- Gervás, P. Story Generator Algorithms. In The Living Handbook of Narratology; Hamburg University: Hamburg, Germany, 2013.

- Beaudouin-Lafon, M.; Mackay, W.; Andersen, P.; Janecek, P.; Jensen, M.; Lassen, M.; Lund, K.; Mortensen, K.; Munck, S.; Ratzer, A.; et al. CPN/Tools: A post-WIMP interface for editing and simulating coloured Petri nets. In Proceedings of the International Conference on Applications and Theory of Petri Nets, Newcastle upon Tyne, UK, 25–29 June 2001; pp. 71–80.

- Reuter, C. Authoring Collaborative Multiplayer Games: Game Design Patterns, Structural Verification, Collaborative Balancing and Rapid Prototyping. Ph.D. Thesis, Technische Universität Darmstadt, Darmstadt, Germany, 2016.

- Lee, Y.S.; Cho, S.B. Context-aware Petri net for dynamic procedural content generation in role-playing game. IEEE Comput. Intell. Mag. 2011, 6, 16–25.

- BioWare. Neverwinter Nights; BioWare: Edmonton, AB, Canada, 2002.

- Krajzewicz, D.; Hertkorn, G.; Rössel, C.; Wagner, P. SUMO (Simulation of Urban MObility)—An Open-Source Traffic Simulation. Available online: http://sumo.dlr.de/pdf/dkrajzew_MESM2002_SUMO.pdf (accessed on 3 October 2017).

- Horni, A.; Nagel, K.; Axhausen, K.W. The multi-agent transport simulation MATSim; Ubiquity Press: London, UK, 2016.

- Munty Engine Team. Munty Engine. 2014. Available online: https://sourceforge.net/projects/muntyengine/ (accessed on 12 October 2017).

- Savage, S. Seventh Sanctum. 2013. Available online: http://www.seventhsanctum.com (accessed on 2 October 2017).

- Guard, D. OpenSimulator. 2007. Available online: http://opensimulator.org (accessed on 2 October 2017).

- Gloor, C. PEDSIM. 2016. Available online: http://pedsim.silmaril.org (accessed on 2 October 2017).

- Smelik, R.M.; Tutenel, T.; Bidarra, R.; Benes, B. A Survey on Procedural Modelling for Virtual Worlds. Comput. Graph. Forum 2014, 33, 31–50.

- Ubisoft Montreal. Watch Dogs; Ubisoft: Montreal, QC, Canada, 2014.

- Team Bondi. L.A. Noire; Rockstar Games: New York, NY, USA, 2011.

- Markuz. IGN’s “Making of”: The Hidden Secrets. 2014. Available online: http://www.accesstheanimus.com/Making_of_hidden_secrets_part2.html (accessed on 3 October 2017).

- Savage, R. VisitorVille. 2003. Available online: http://www.visitorville.com/ (accessed on 2 October 2017).

| Topic | Research | Implementation | Implementation Examples |
|---|---|---|---|
| Creation of textures and materials | ++ | ++ | .werkkzeug, Unreal Engine 4 |
| Generation of floor plans | ++ | - | |
| Creation of buildings without interior | ++ | + | BuildR, Building Generator |
| Creation of multi-story buildings | -- | -- | |
| Creation of buildings with interior | o | -- | |
| Creation of public buildings | -- | -- | |
| Creation of public places | -- | -- | |
| Generation of road networks | ++ | ++ | Road Network Generator, SUMO [158] |
| Multi-track road network generation | o | ++ | Road Network Generator, SUMO |
| Traffic simulation | ++ | ++ | SUMO, MATSim [159] |
| Humanoid model generation | o | + | UMA, MakeHuman |
| Animation of humanoid models | -- | - | Munty Engine [160] |
| Artificial personality generation | - | + | Seventh Sanctum [161] |
| Simulation of NPCs with individual daily routines | -- | - | OpenSimulator [162] |
| Simultaneous simulation of many NPCs | ++ | + | PEDSIM [163] |
| Generation of plants and trees | ++ | + | SpeedTree, Xfrog |
| Generation of stories and quests | ++ | o | Rogue, Skyrim, Zelda |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Freiknecht, J.; Effelsberg, W. A Survey on the Procedural Generation of Virtual Worlds. Multimodal Technol. Interact. 2017, 1, 27. https://doi.org/10.3390/mti1040027
