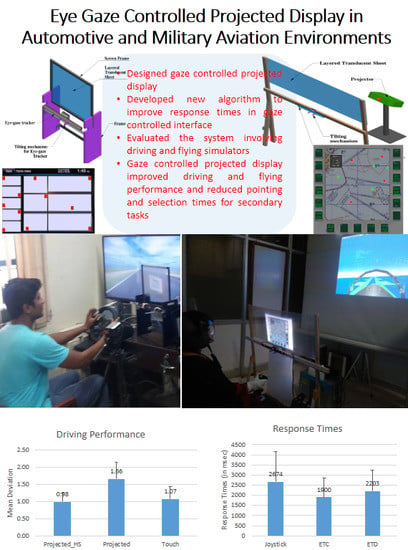

Eye Gaze Controlled Projected Display in Automotive and Military Aviation Environments

Abstract

1. Introduction

- Integrating and evaluating eye gaze controlled interaction for a projected display;

- Proposing an algorithm to facilitate interaction with a gaze controlled interface;

- Evaluating the gaze controlled interface in automotive and military aviation environments and comparing its performance with existing interaction techniques.

2. Related Work

2.1. Eye Gaze Tracking

2.2. Gaze Controlled Interface

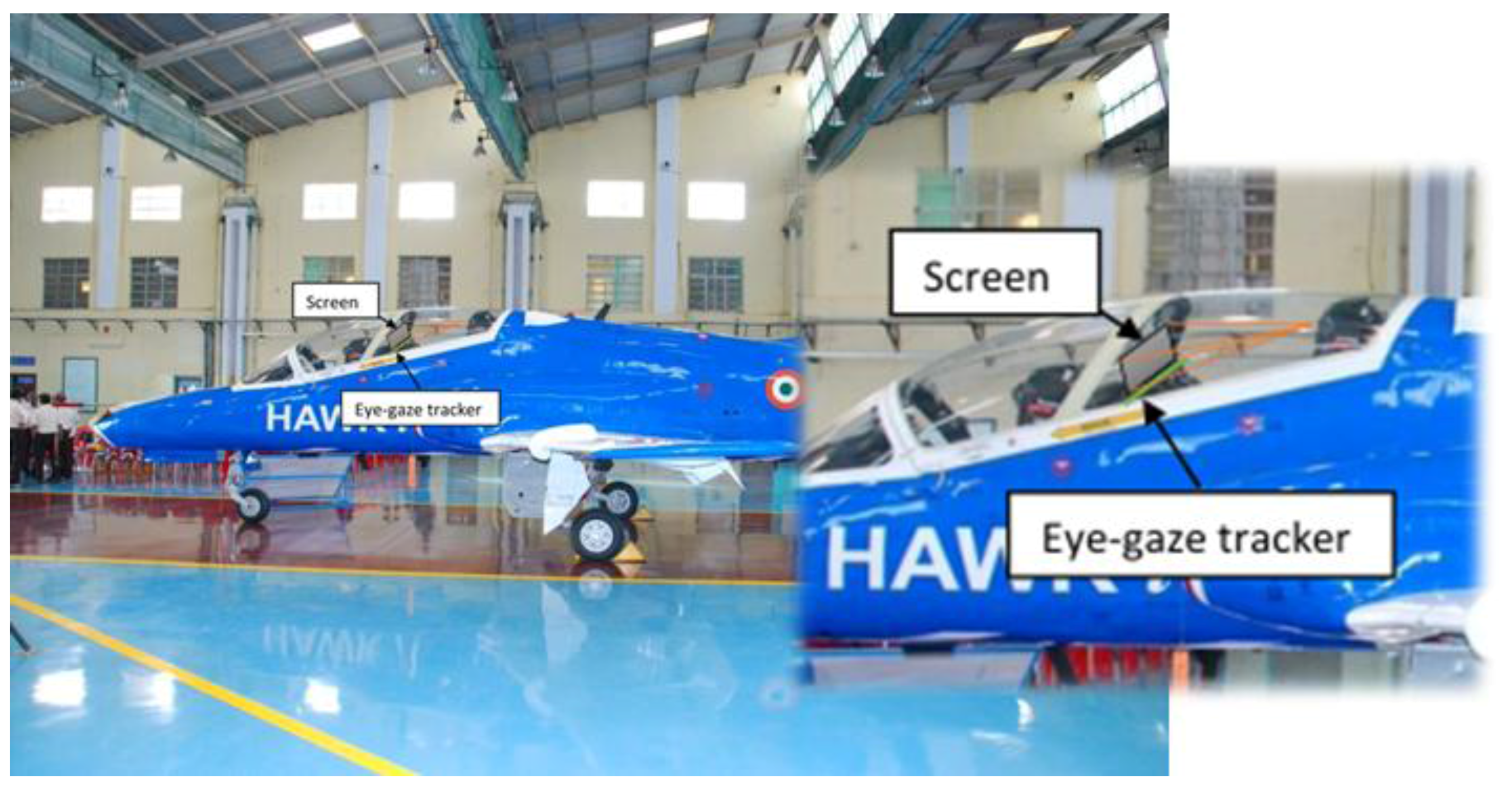

2.3. Aviation Environment

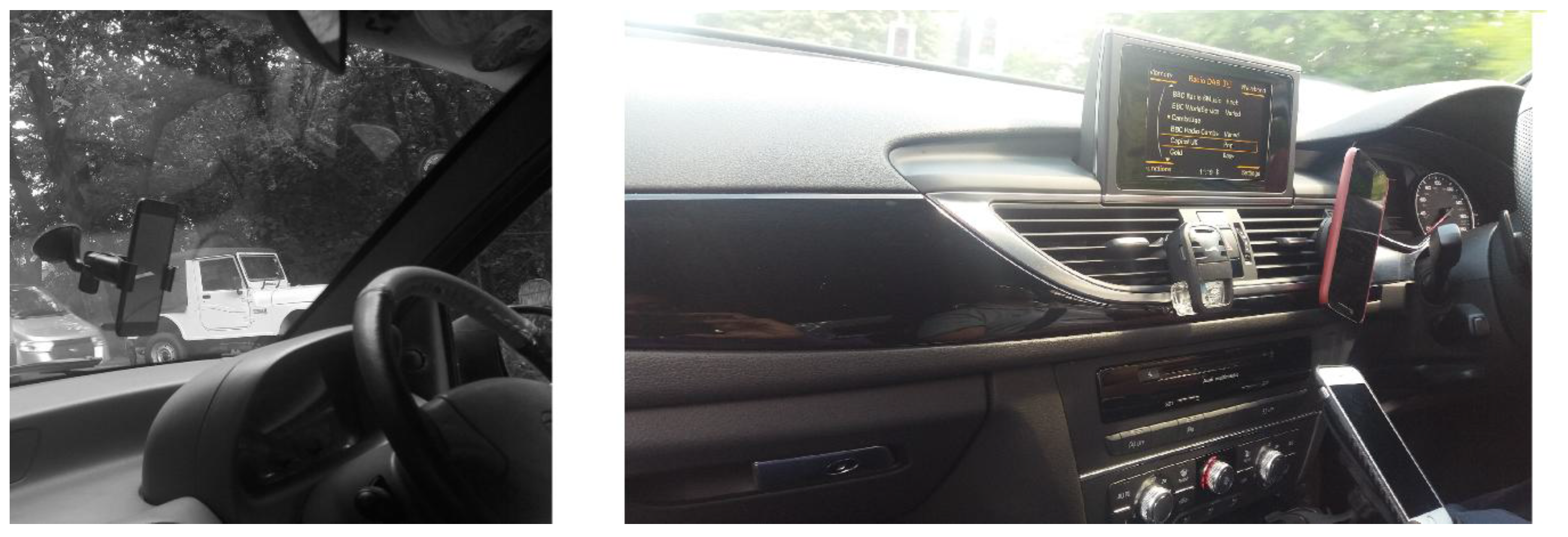

2.4. Automotive Environment

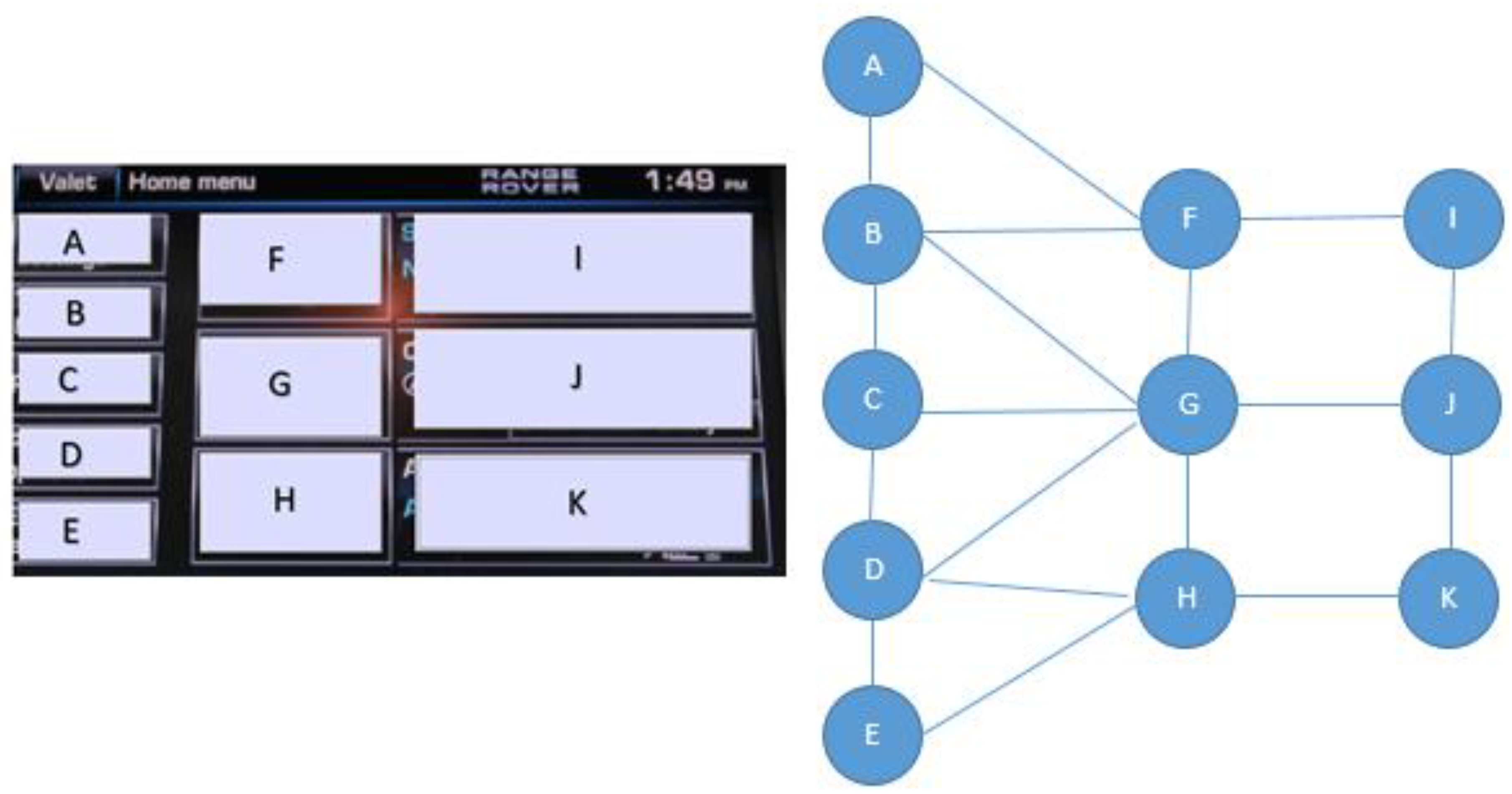

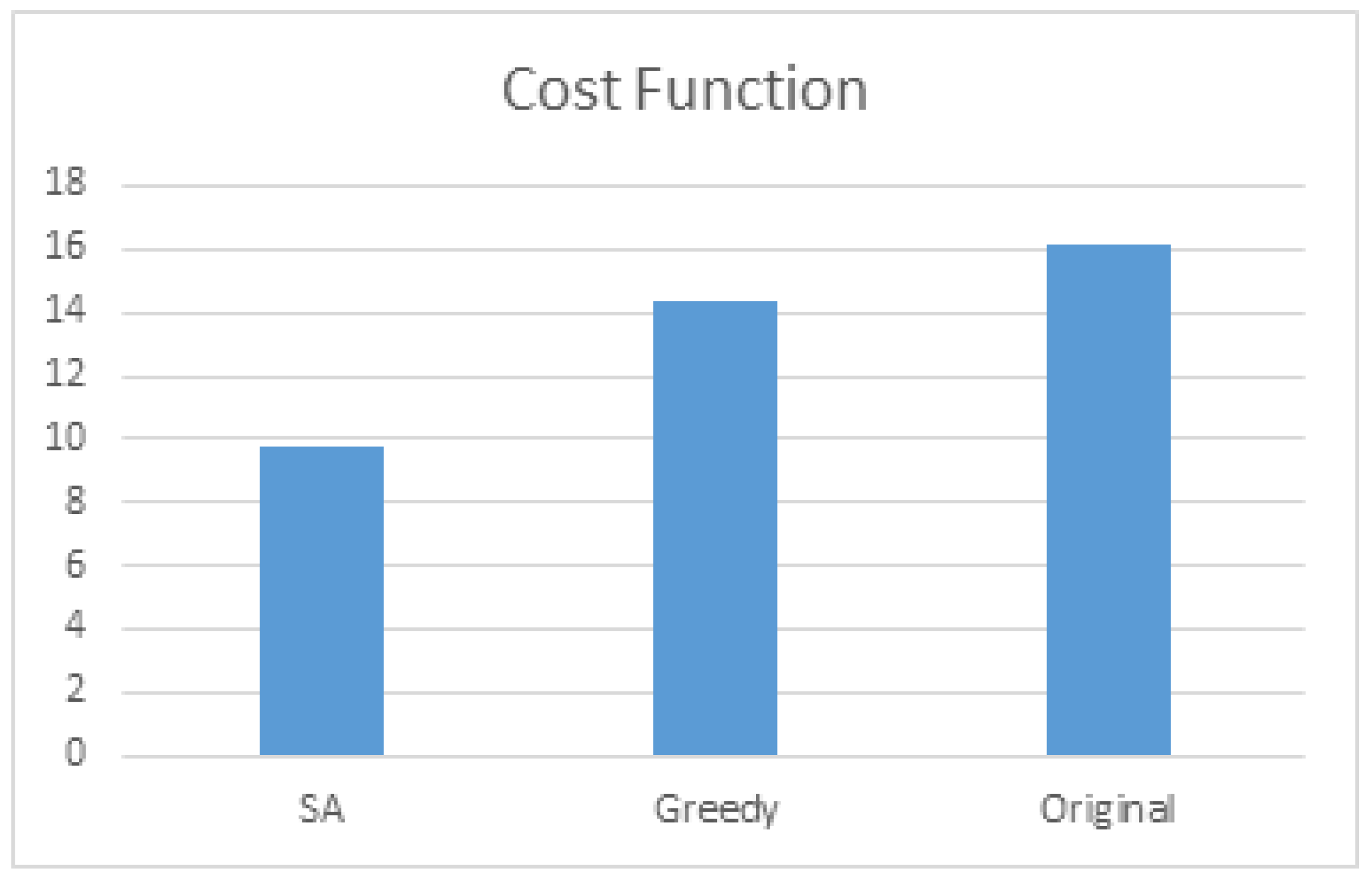

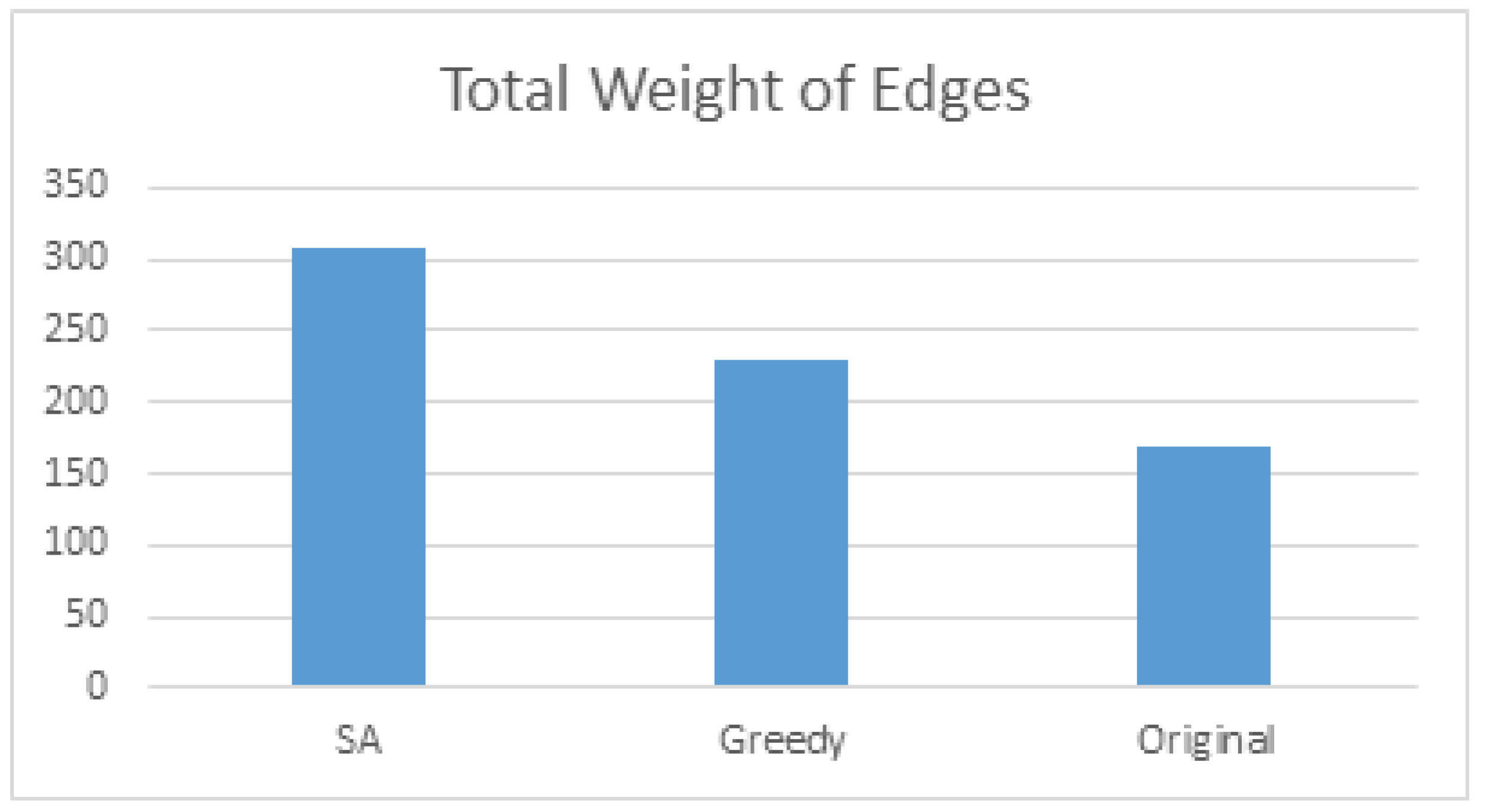

3. Gaze Controlled Projected Display

3.1. Existing Problem

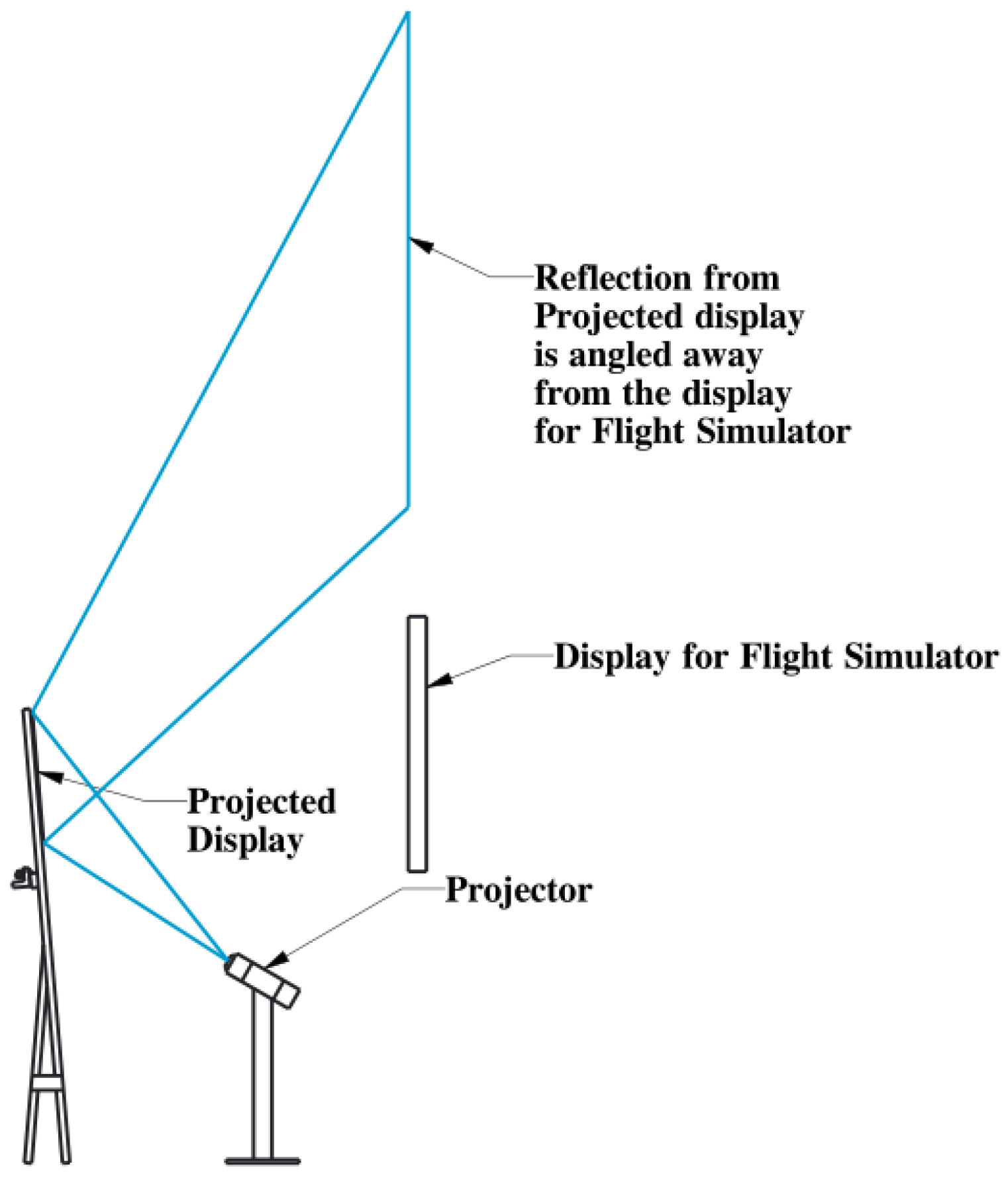

3.2. Proposed Solution

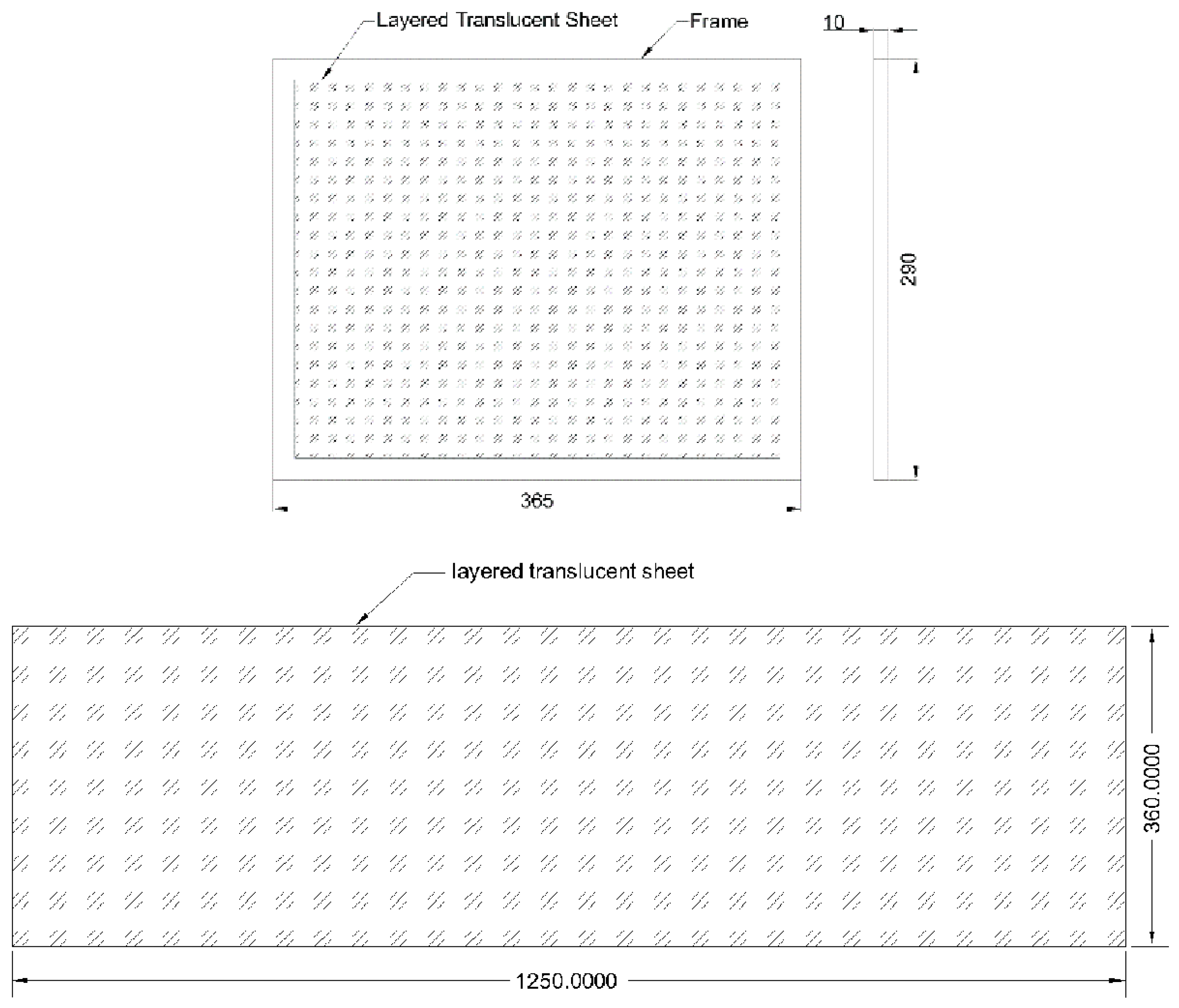

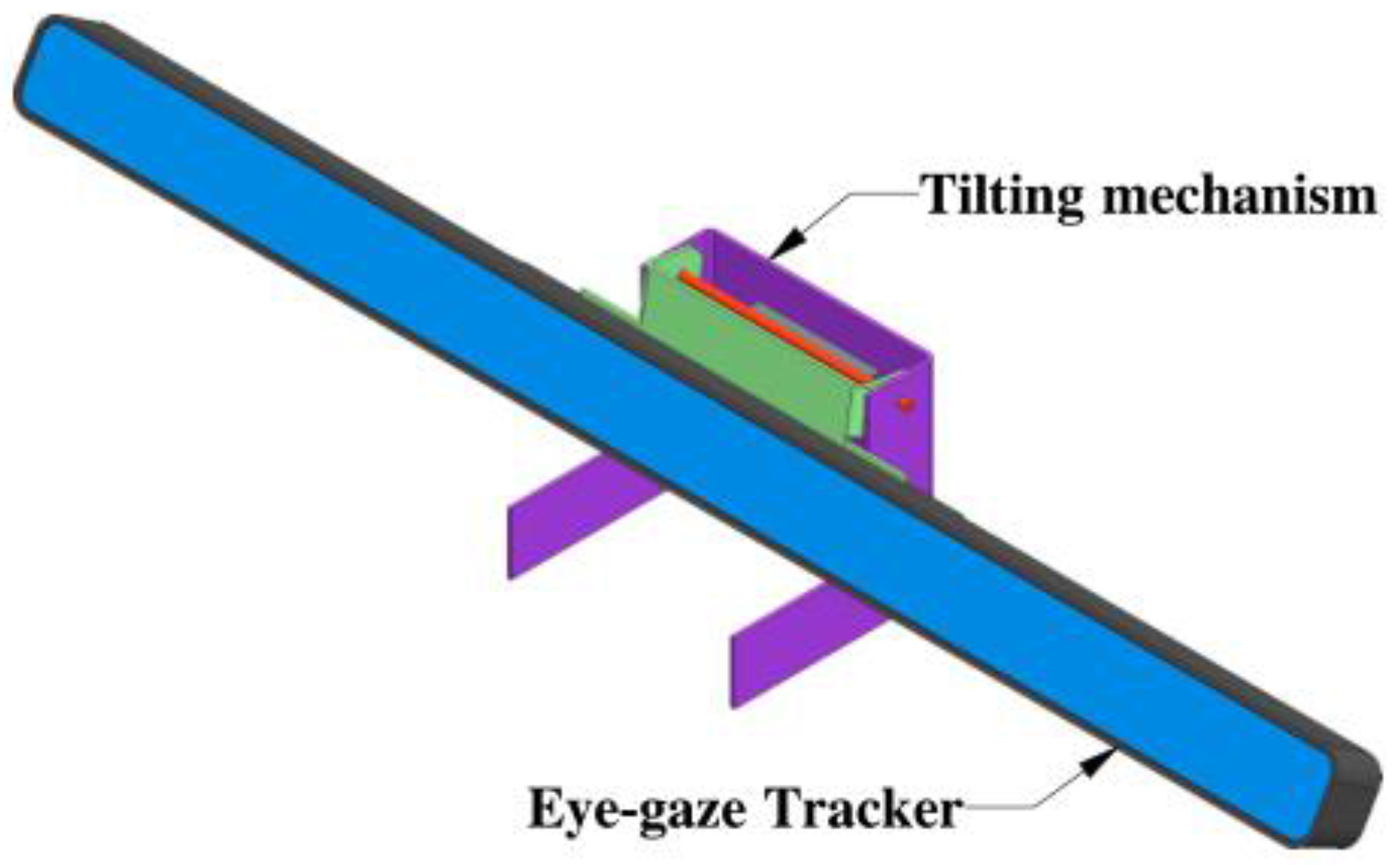

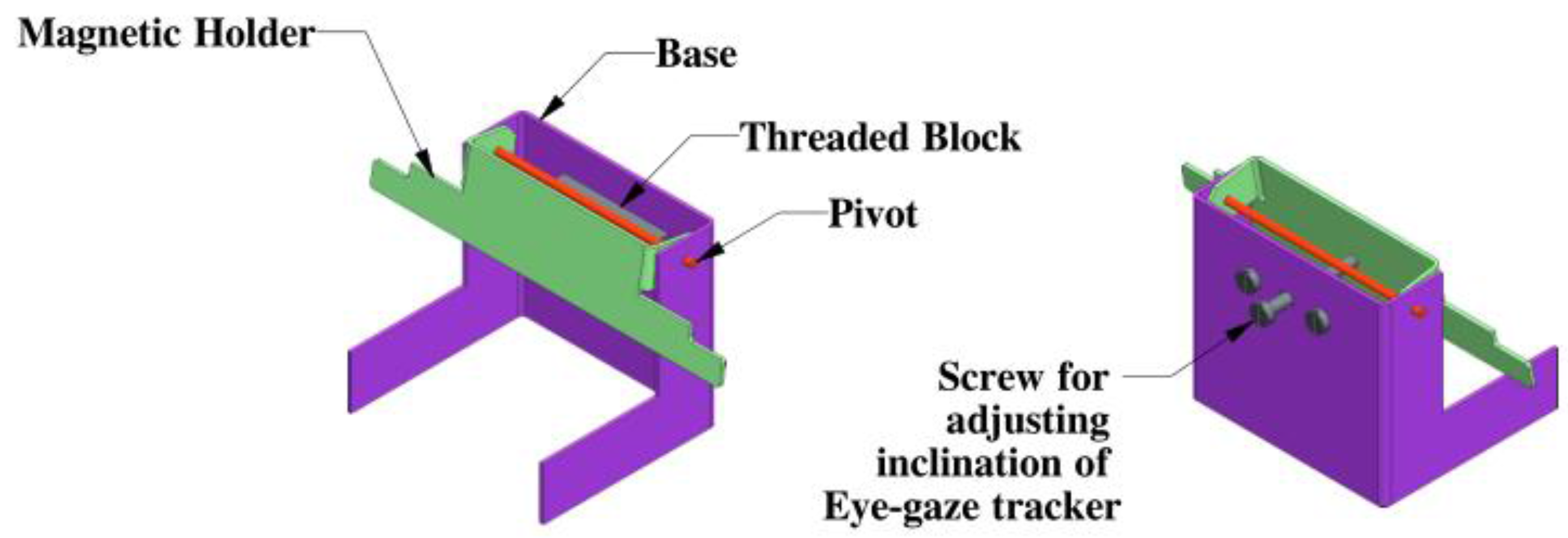

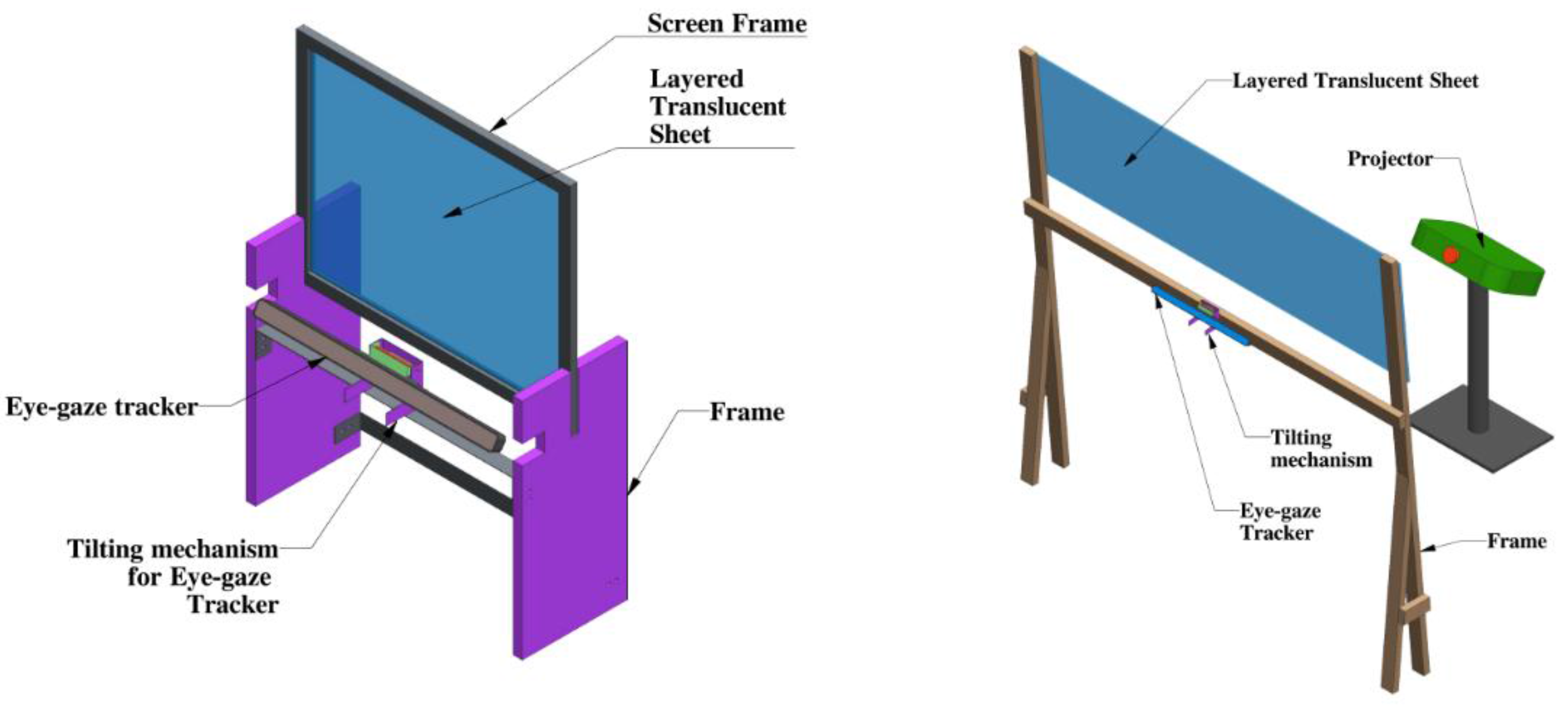

- The hardware part consists of designing a semi-transparent sheet and its holder. We used off-the-shelf computers, projectors, and eye gaze and finger movement trackers.

- The software part consists of designing and implementing algorithms to control an on-screen pointer using eye gaze and finger movement trackers to operate the projected display.
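The description above does not say how the two trackers share control of the pointer. The following is a minimal sketch under stated assumptions, not the authors' implementation: the gaze tracker is assumed to report absolute screen coordinates, which are smoothed with a moving average over the last few samples, and the finger tracker is assumed to report relative displacements that move the pointer from its current position. The class `MultimodalPointer`, the five-sample window, and the rule that a finger movement clears the gaze history are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class MultimodalPointer:
    """Hypothetical pointer driven by gaze (absolute) or finger (relative) input."""
    width: int = 1024      # projected resolution, as in the display table
    height: int = 768
    window: int = 5        # assumed gaze smoothing window, in samples
    x: float = 512.0
    y: float = 384.0
    _gaze: deque = field(default_factory=deque, repr=False)

    def _clamp(self) -> None:
        # Keep the pointer inside the projected display.
        self.x = min(max(self.x, 0.0), self.width - 1)
        self.y = min(max(self.y, 0.0), self.height - 1)

    def on_gaze(self, gx: float, gy: float) -> tuple[float, float]:
        # Moving average over the last `window` gaze samples damps fixation jitter.
        self._gaze.append((gx, gy))
        if len(self._gaze) > self.window:
            self._gaze.popleft()
        self.x = sum(p[0] for p in self._gaze) / len(self._gaze)
        self.y = sum(p[1] for p in self._gaze) / len(self._gaze)
        self._clamp()
        return self.x, self.y

    def on_finger(self, dx: float, dy: float) -> tuple[float, float]:
        # Finger movement nudges the pointer relative to its current position;
        # clearing the gaze history lets the next gaze sample take over cleanly.
        self._gaze.clear()
        self.x += dx
        self.y += dy
        self._clamp()
        return self.x, self.y
```

For example, two gaze samples at (100, 100) and (200, 100) place the pointer at (150, 100), and a finger movement of (10, -5) then moves it to (160, 95). A moving average is only the simplest jitter filter; a fixation-based filter would be an equally plausible choice.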

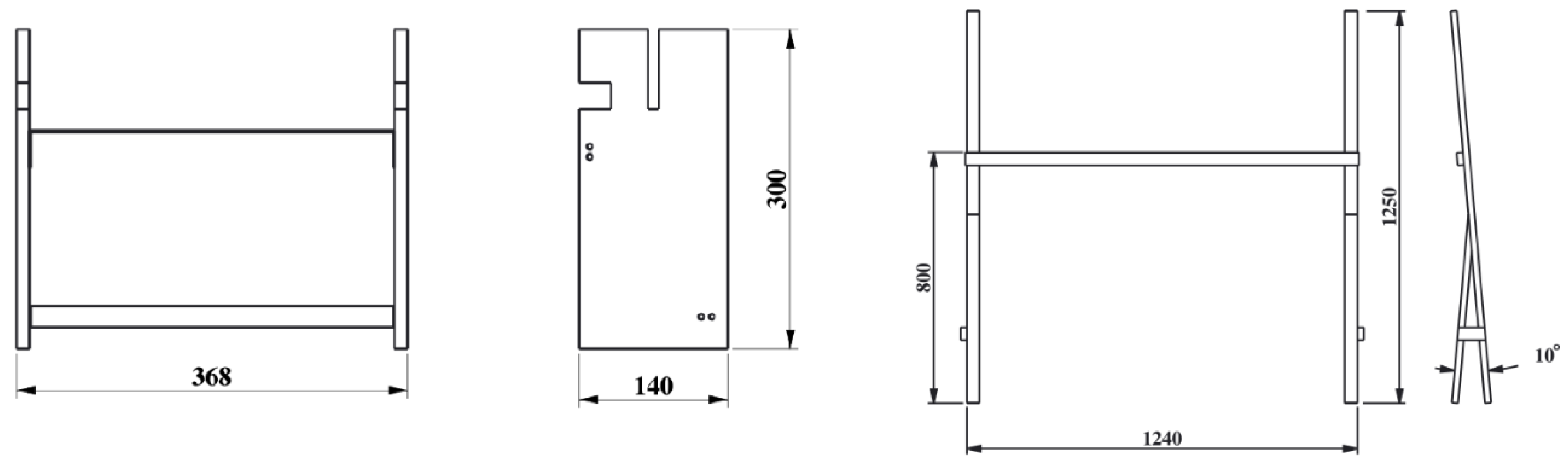

3.3. Hardware

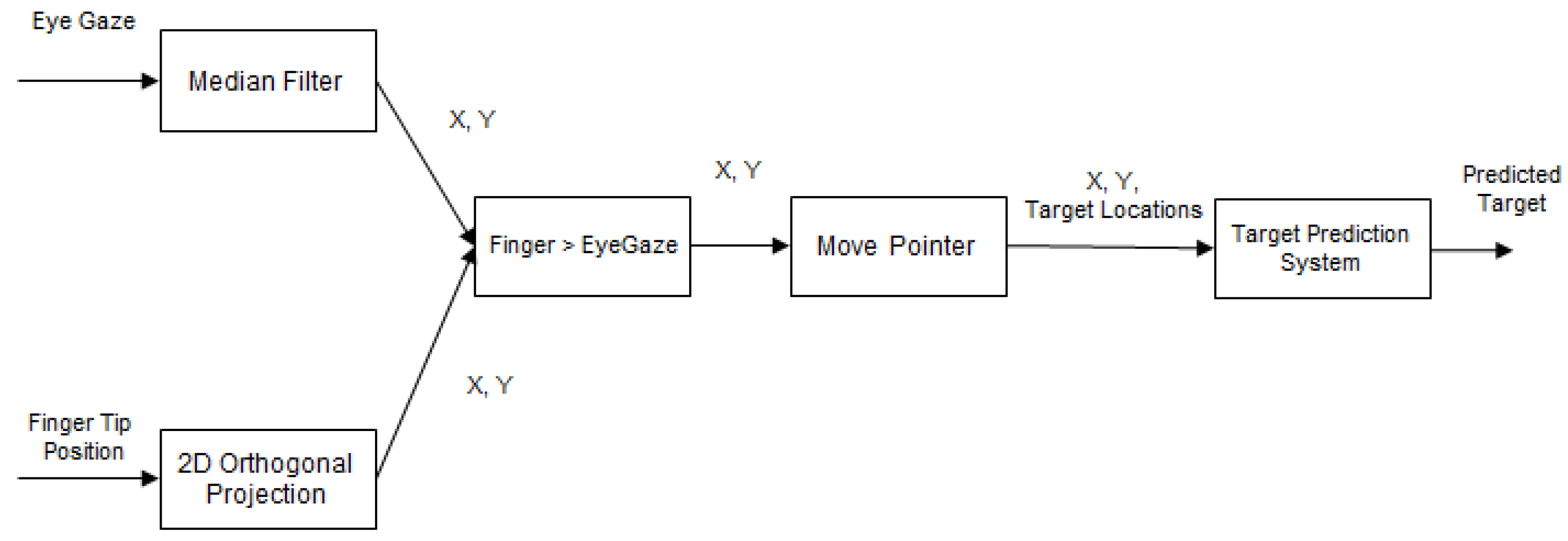

3.4. Software

- We integrated a finger movement tracker as an alternative modality that can move a pointer on screen following finger movement.

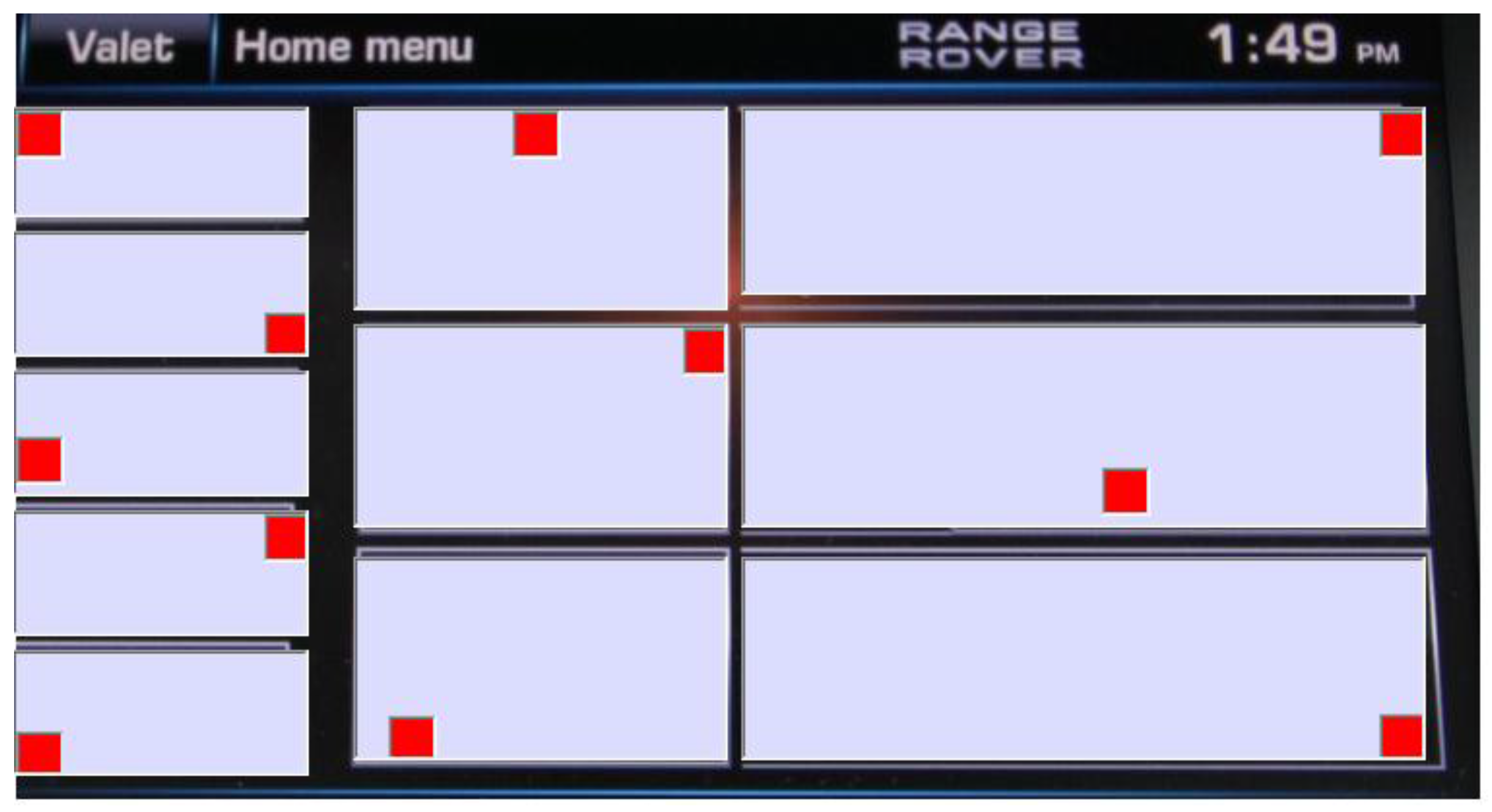

- We proposed using a set of hotspots that leverage the pop-out effect of visual attention to reduce the probability of wrong selections in a gaze controlled interface.
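The text does not specify how a gaze sample is resolved to a button once hotspots are shown. One plausible reading, sketched here purely as an assumption, is that each button carries one hotspot at a known screen position and the interface picks the button whose hotspot lies closest to the gaze point, ignoring gaze samples that are far from every hotspot. The `Button` type, the `nearest_hotspot` function, and the 60-pixel acceptance radius are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Button:
    name: str
    hotspot: tuple[float, float]   # assumed hotspot position in screen pixels


def nearest_hotspot(gaze: tuple[float, float],
                    buttons: Sequence[Button],
                    radius: float = 60.0) -> Optional[Button]:
    """Return the button whose hotspot is closest to the gaze point.

    Gaze samples farther than `radius` pixels (hypothetical threshold) from
    every hotspot select nothing, so looking between buttons does not
    trigger a wrong selection.
    """
    best, best_dist = None, math.inf
    for b in buttons:
        d = math.dist(gaze, b.hotspot)
        if d < best_dist:
            best, best_dist = b, d
    return best if best_dist <= radius else None
```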

3.4.1. Multimodal Software

3.4.2. Hotspots

4. User Study

- Proposing an eye gaze controlled projected display;

- Developing an algorithm to improve pointing performance in a gaze controlled display.

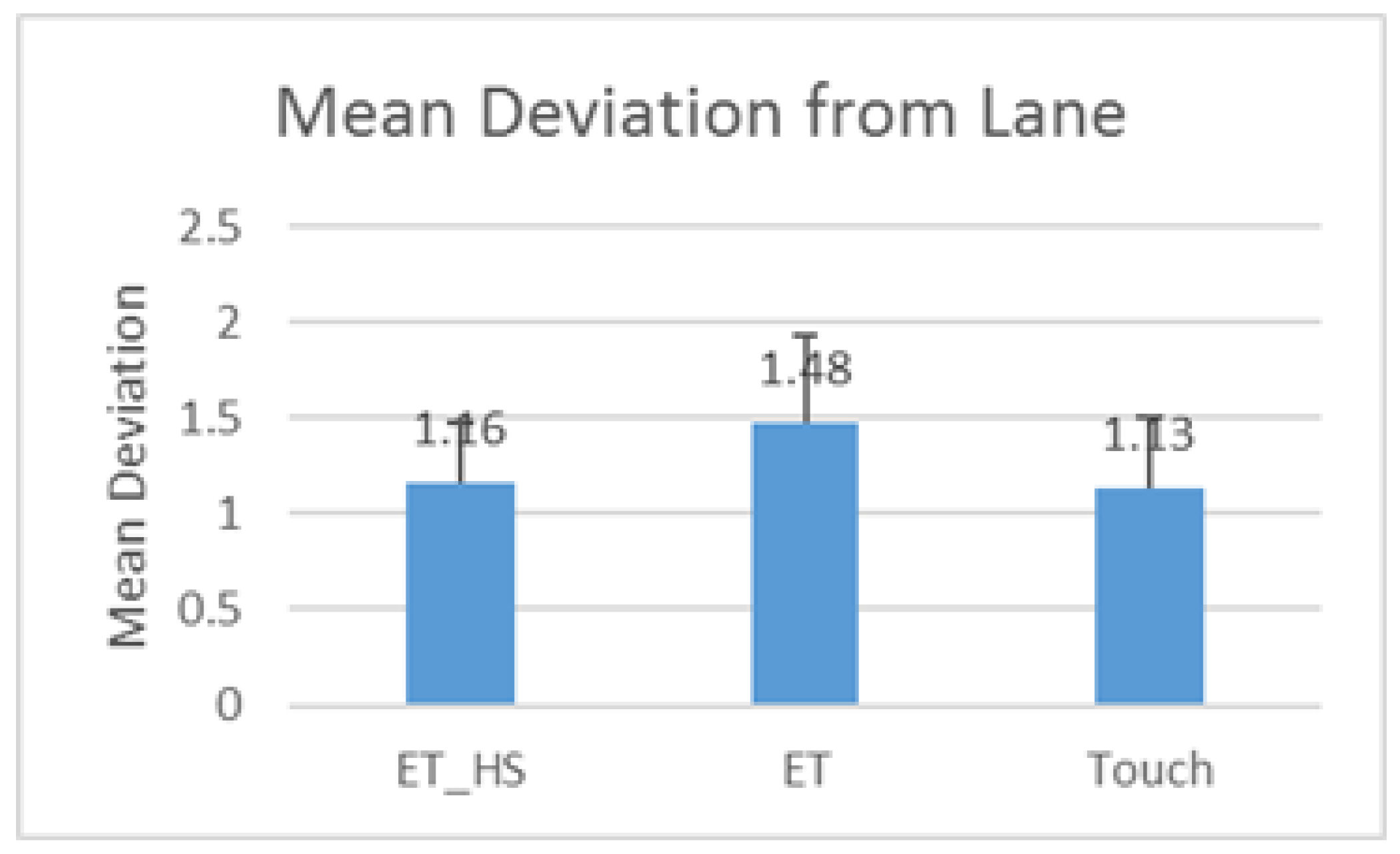

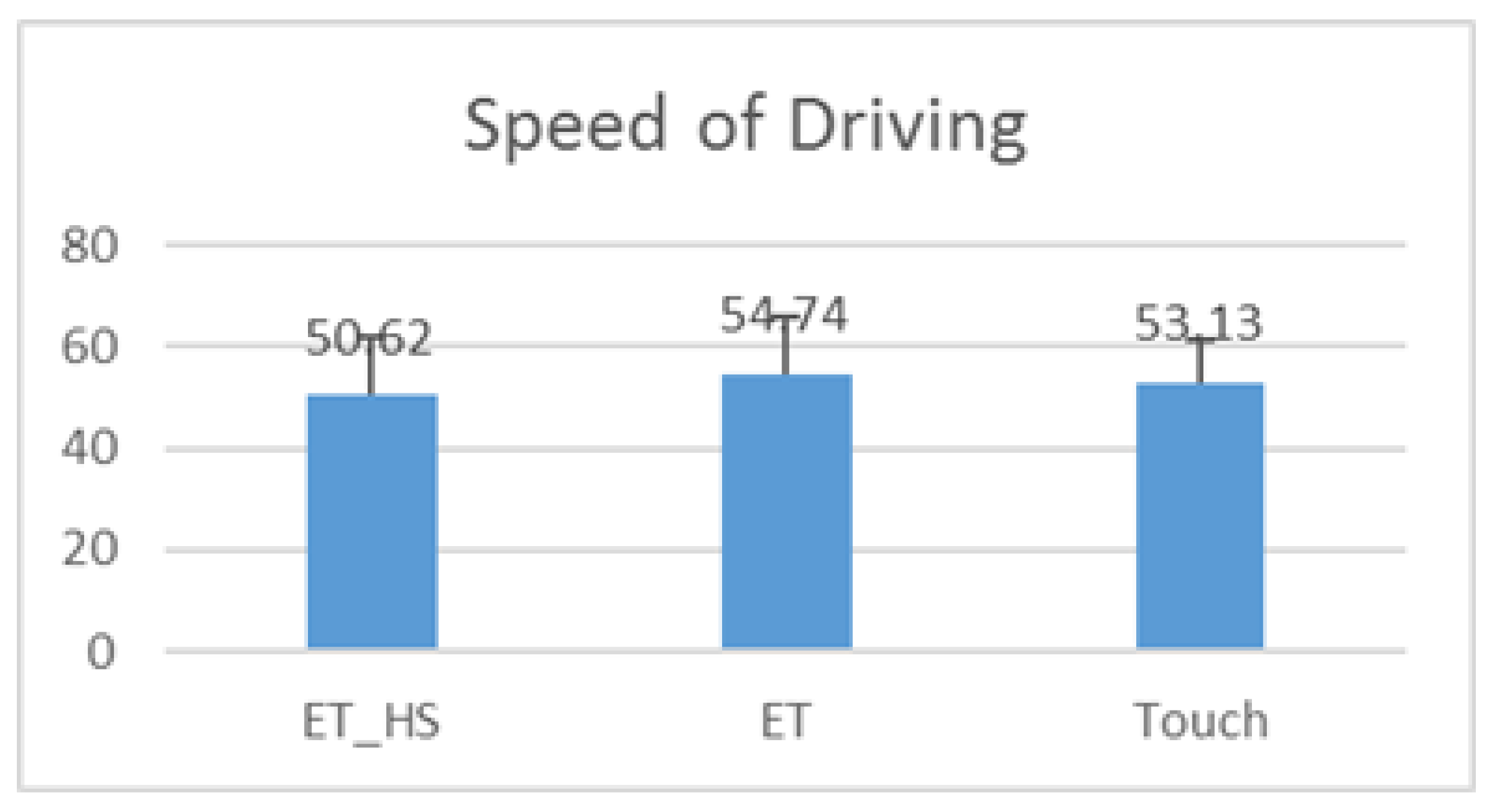

- The first study evaluated the utility of hotspots for an eye gaze controlled interface in an automotive environment. This study evaluated hotspots on a computer screen and did not use the projected screen.

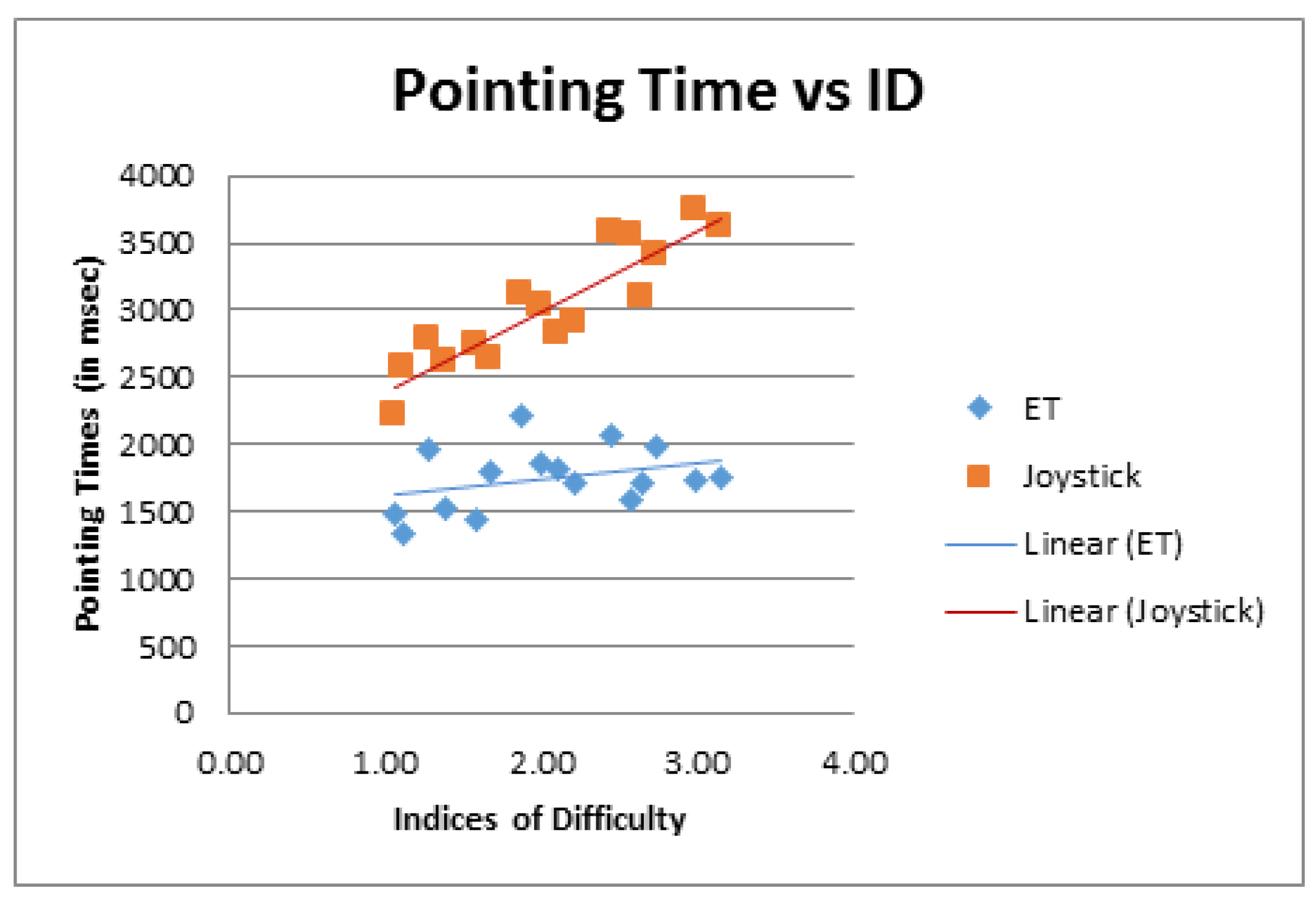

- The second study evaluated the projected display against a HOTAS (Hands-On-Throttle-And-Stick [56]) joystick, the standard interaction device in military aviation environments, using an ISO 9241 pointing task.

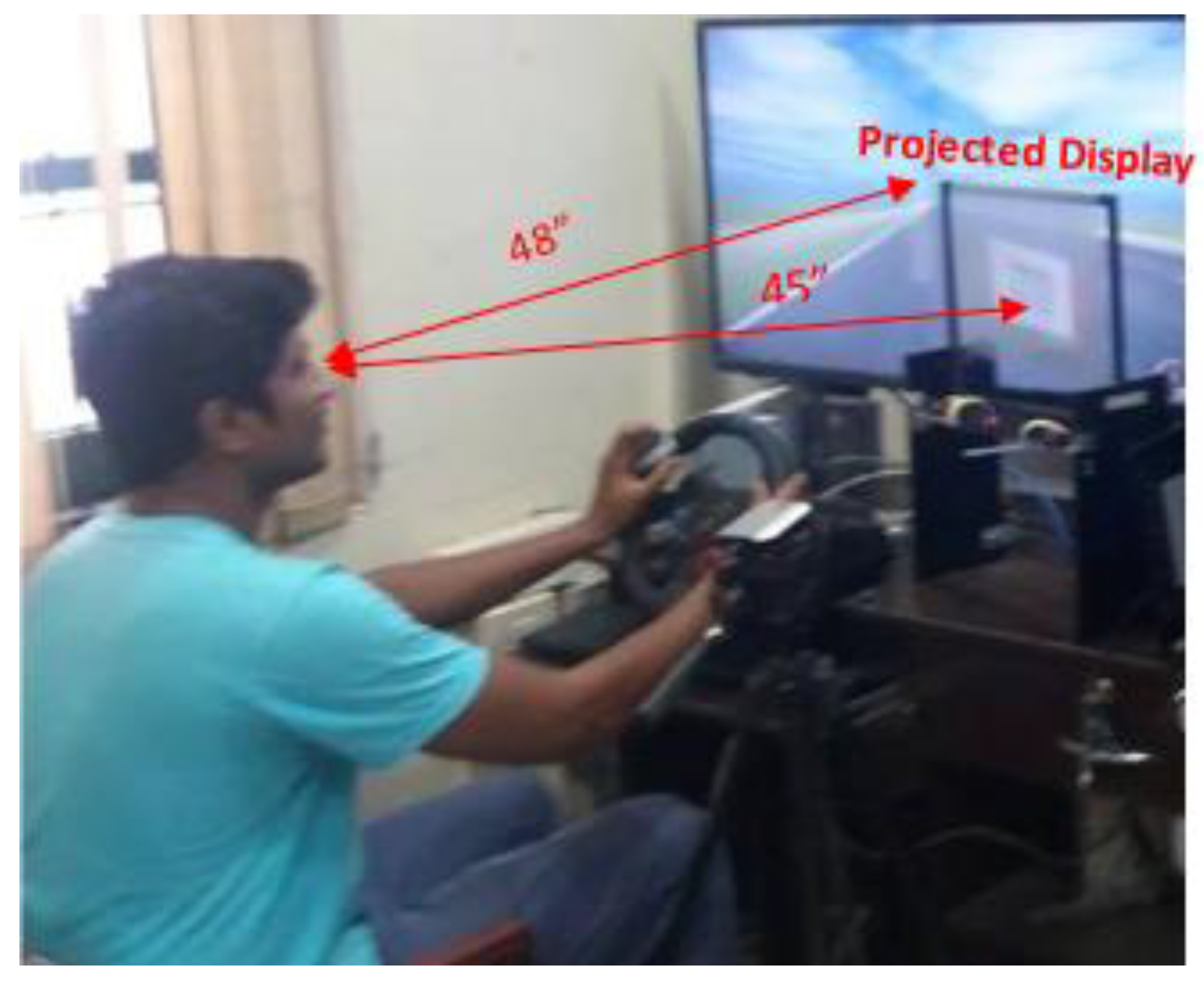

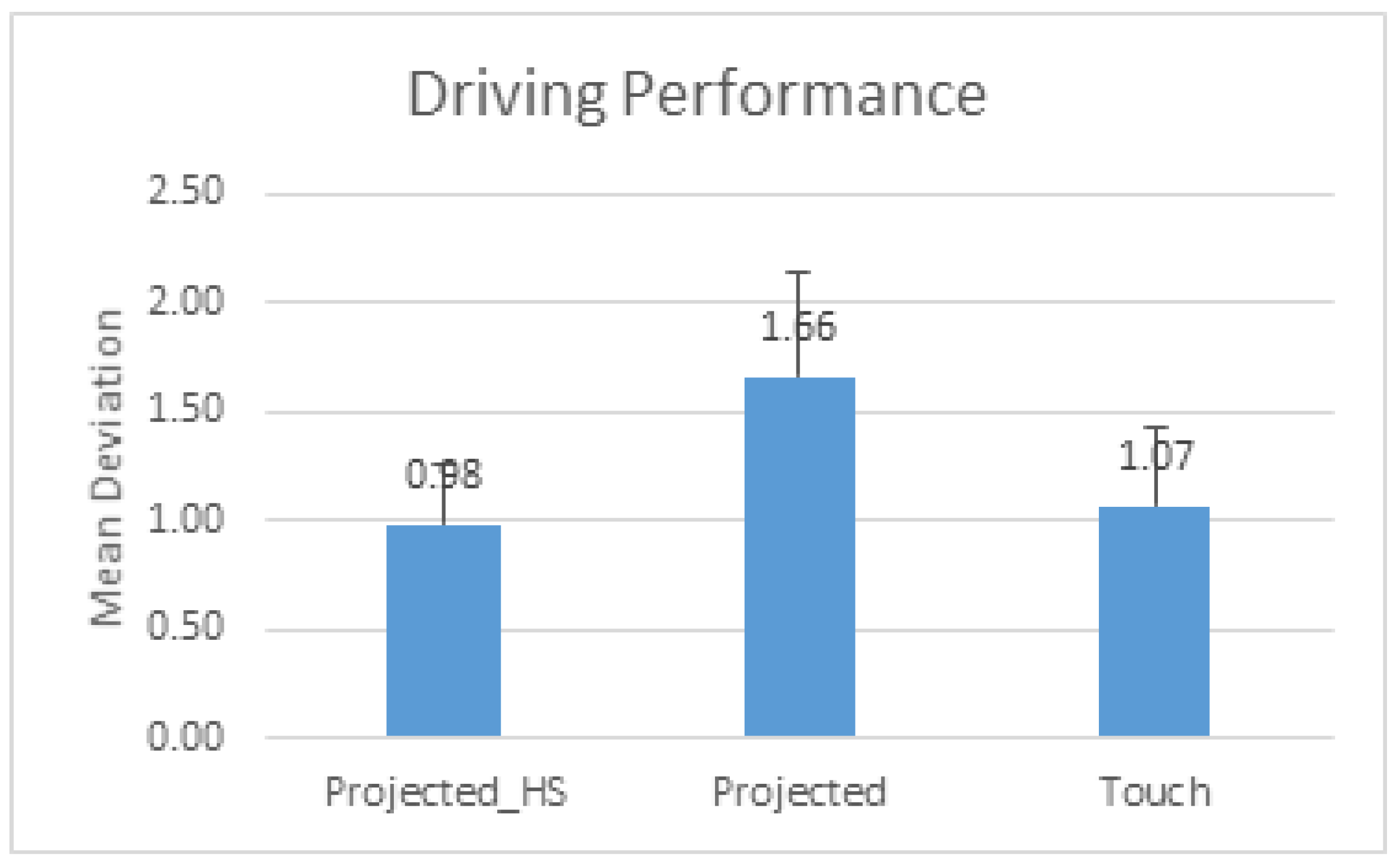

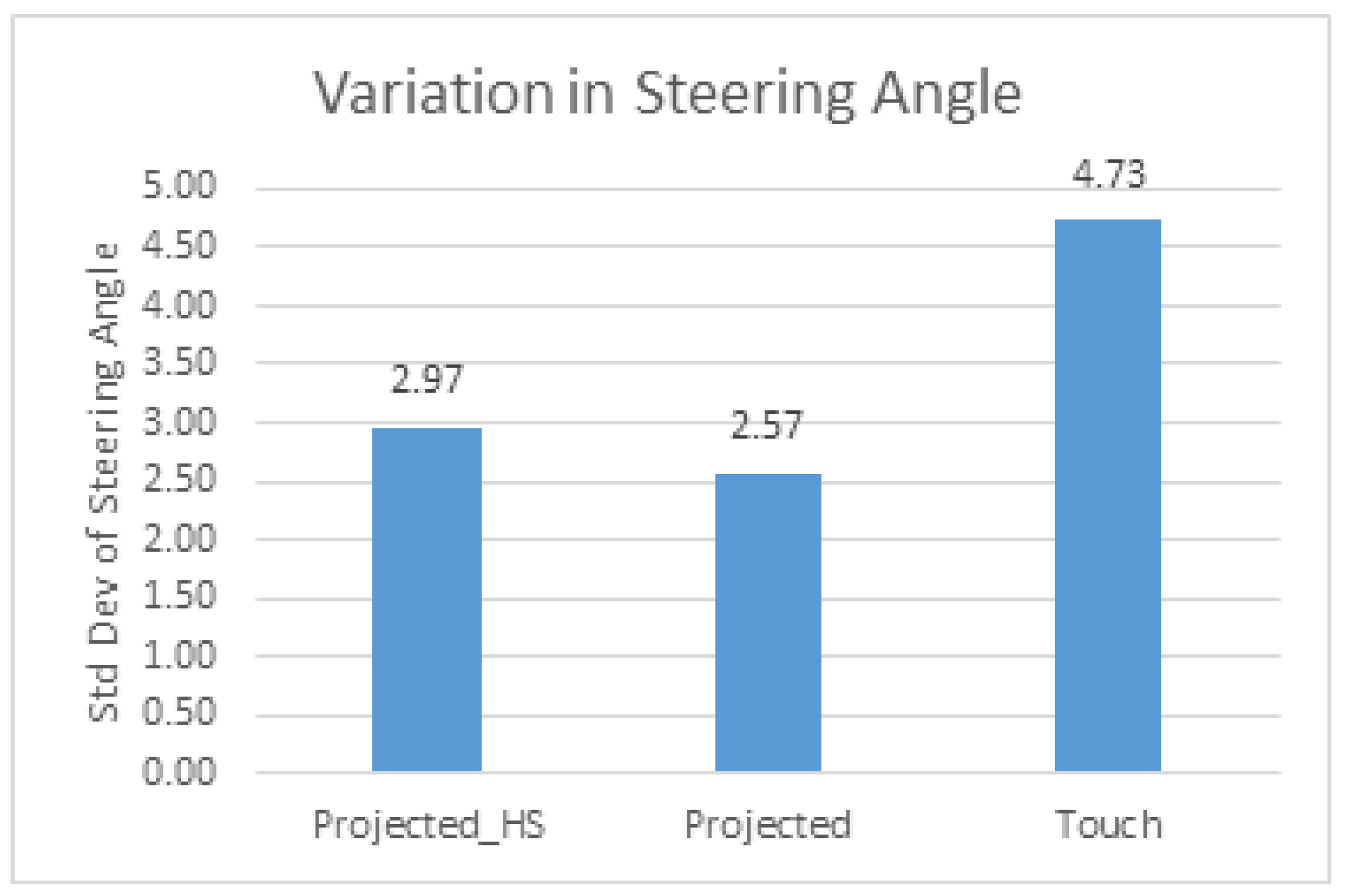

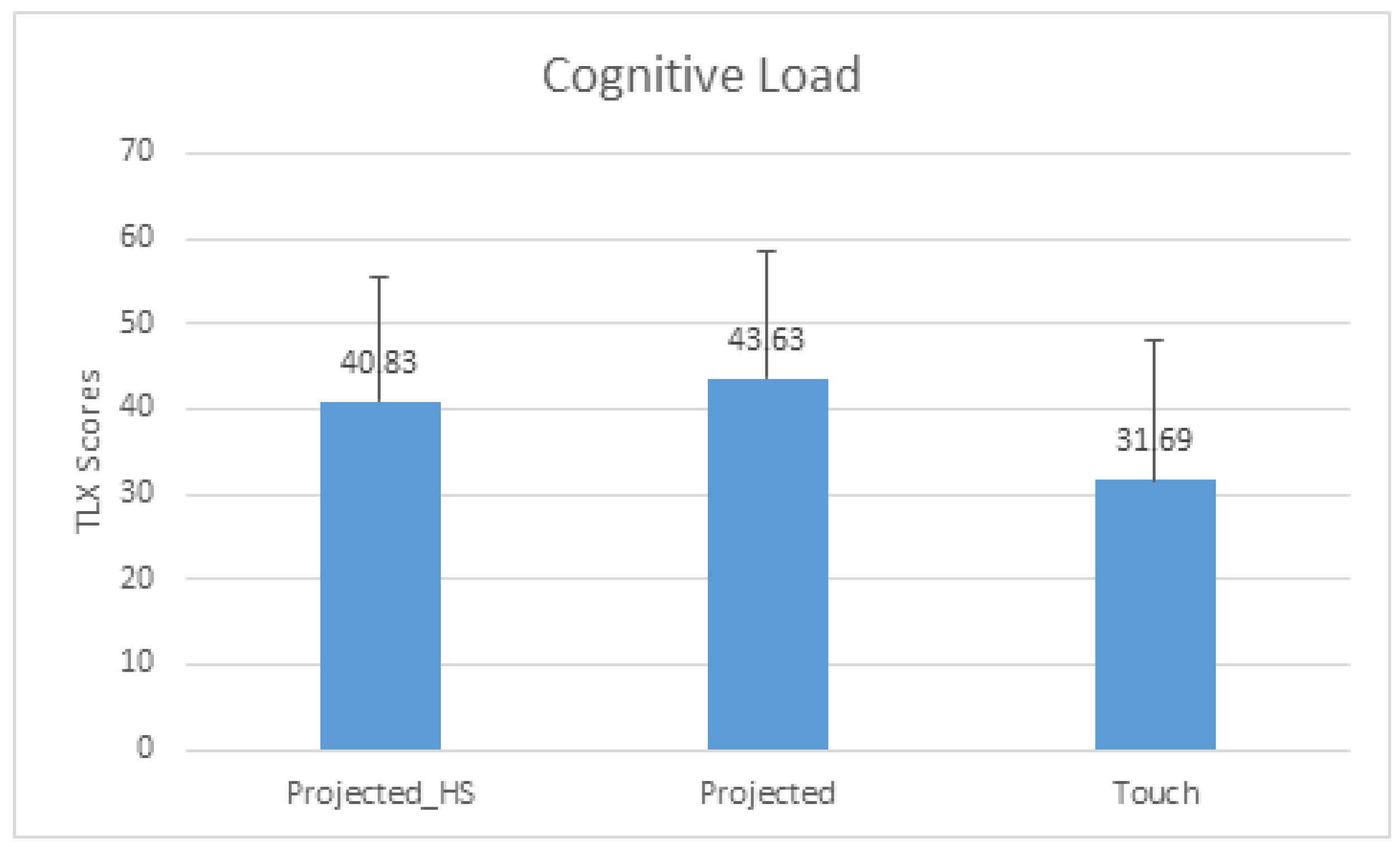

- The third user study compared the projected gaze controlled system, with and without hotspots, against a touch screen display in an automotive environment.

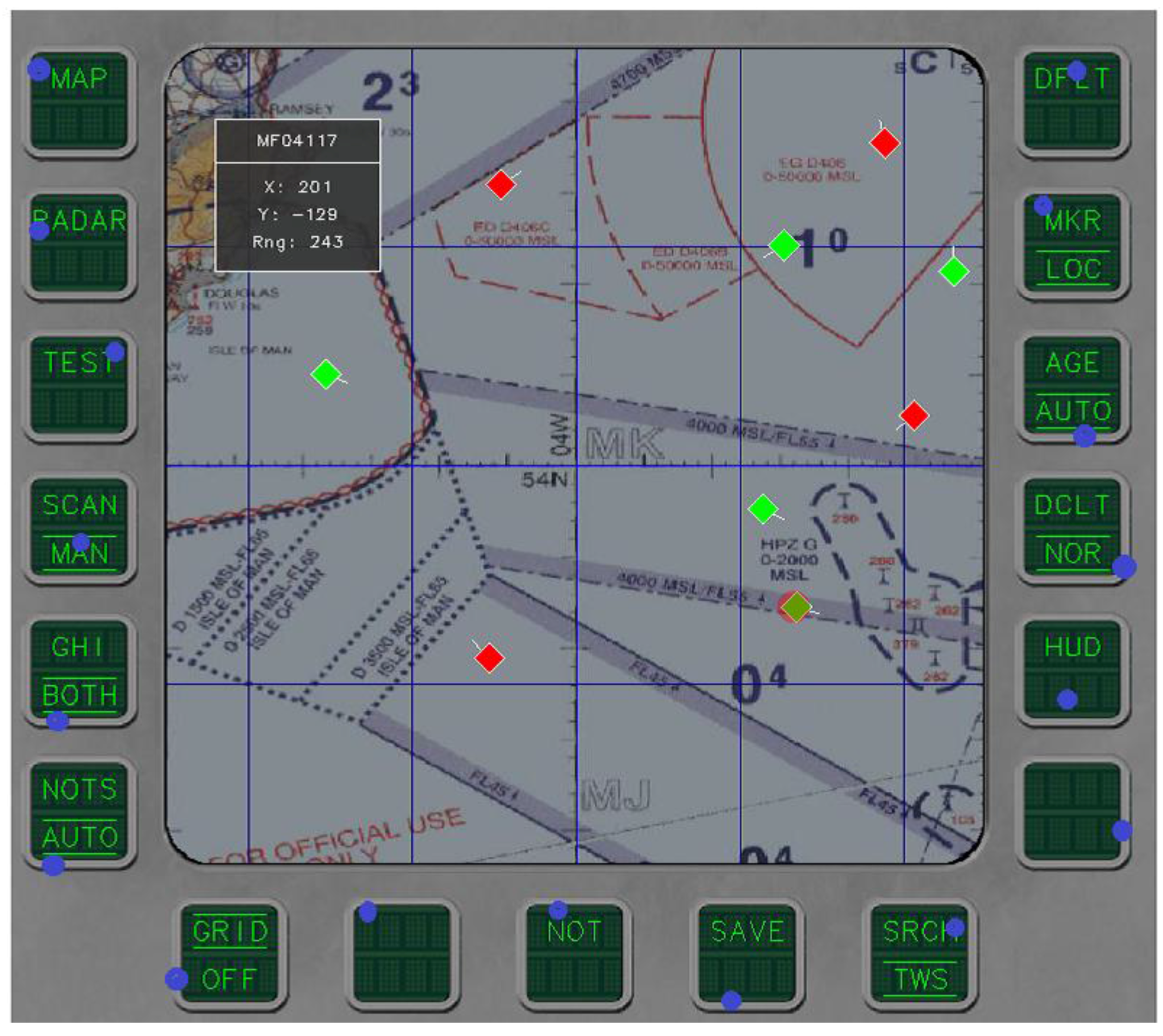

- The last study undertook trials with a sample multi-function display (MFD) rendered on the gaze controlled projected display and compared its performance with that of the HOTAS joystick.

4.1. Pilot Studies

- Eye gaze tracking with hotspots,

- Eye gaze tracking without hotspots,

- Touching.

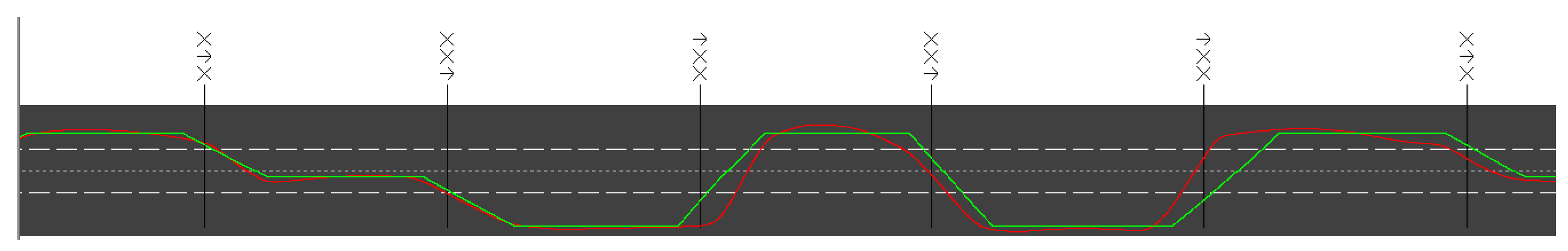

- Driving performance was measured as:

- Mean deviation from the designated lane, calculated according to Annex E of the ISO 26022 standard.

- Average driving speed; in particular, we investigated whether the new modality significantly affected driving speed.

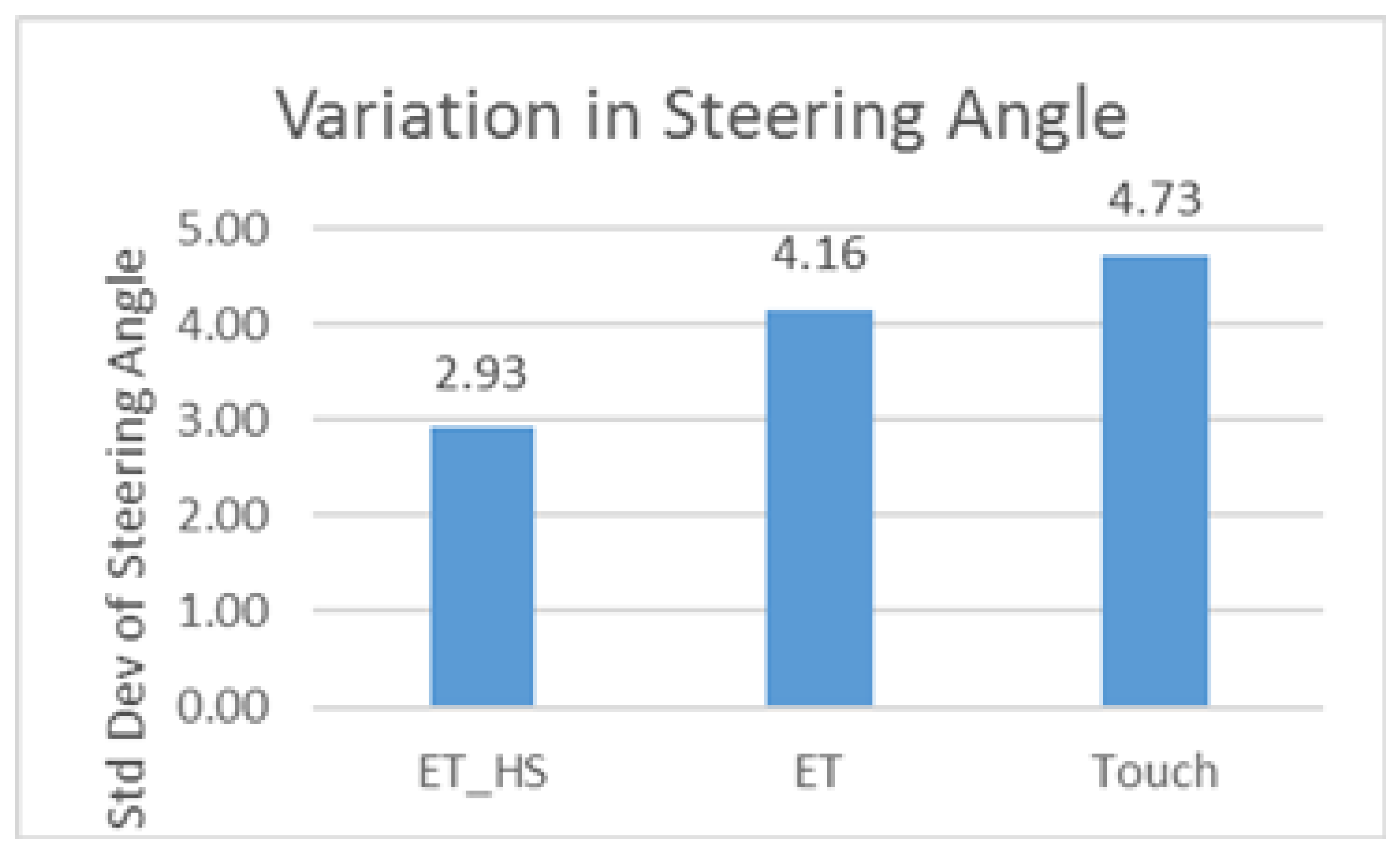

- Standard deviation of steering angle; a large standard deviation means drivers made sharp turns to change lanes.

- Pointing and clicking performance was measured as:

- Error in the secondary task, as the number of wrong buttons selected, reported as a percentage of the total number of button selections.

- Response time, as the time difference between the auditory cue and the instant the target button was selected. This duration adds up the time to react to the auditory cue, the time to switch from the primary to the secondary task, and the pointing and selection time in the secondary task.

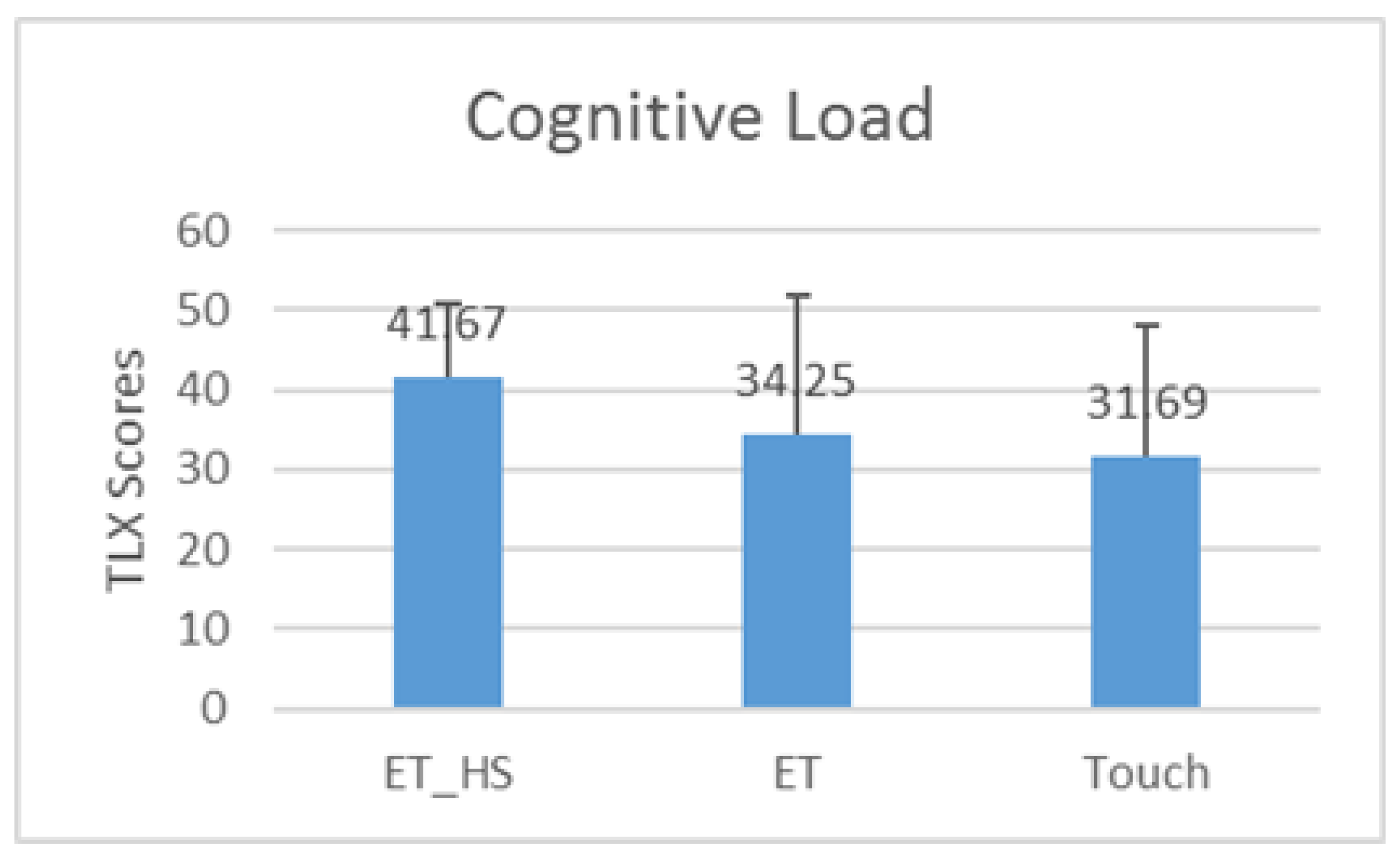

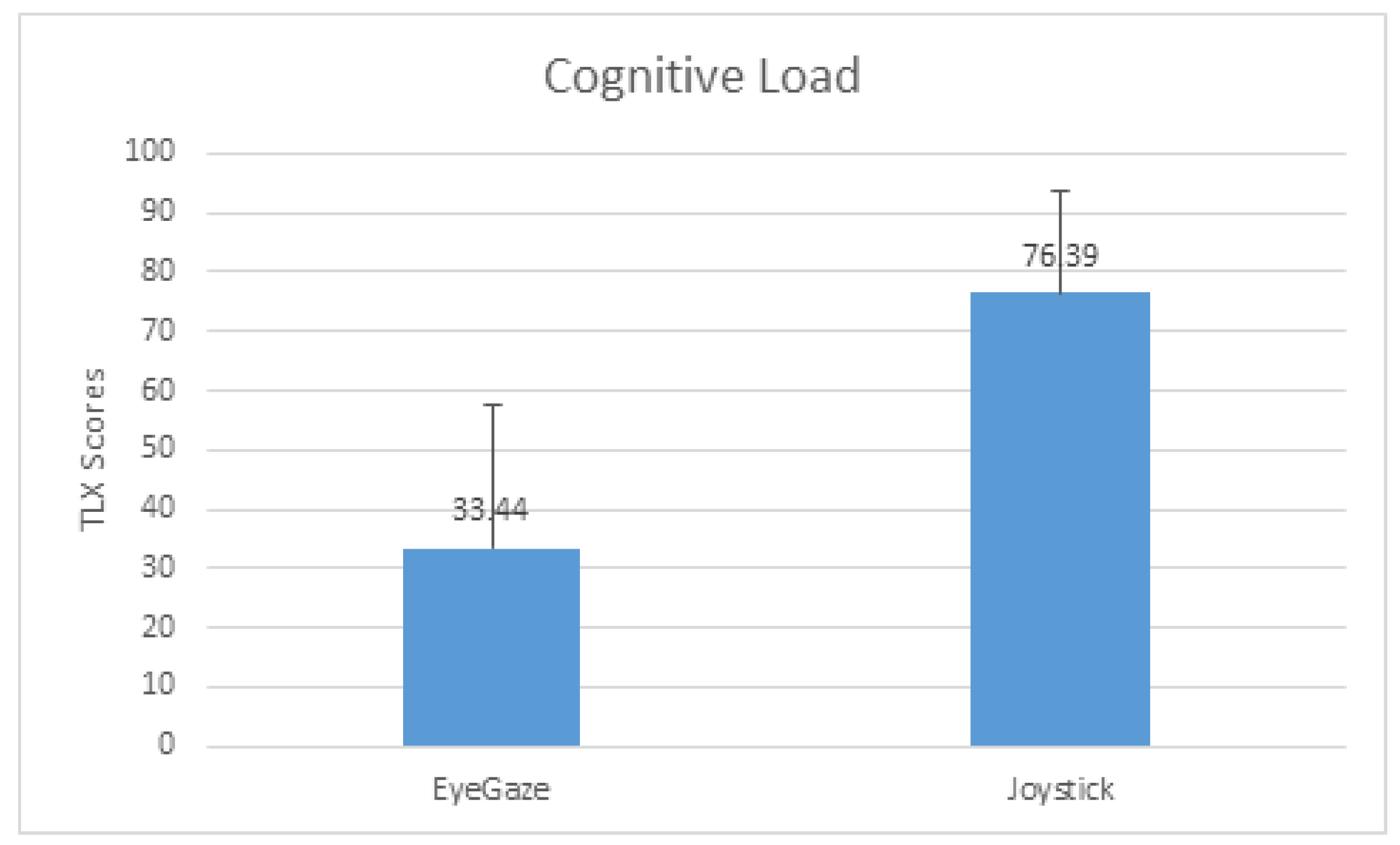

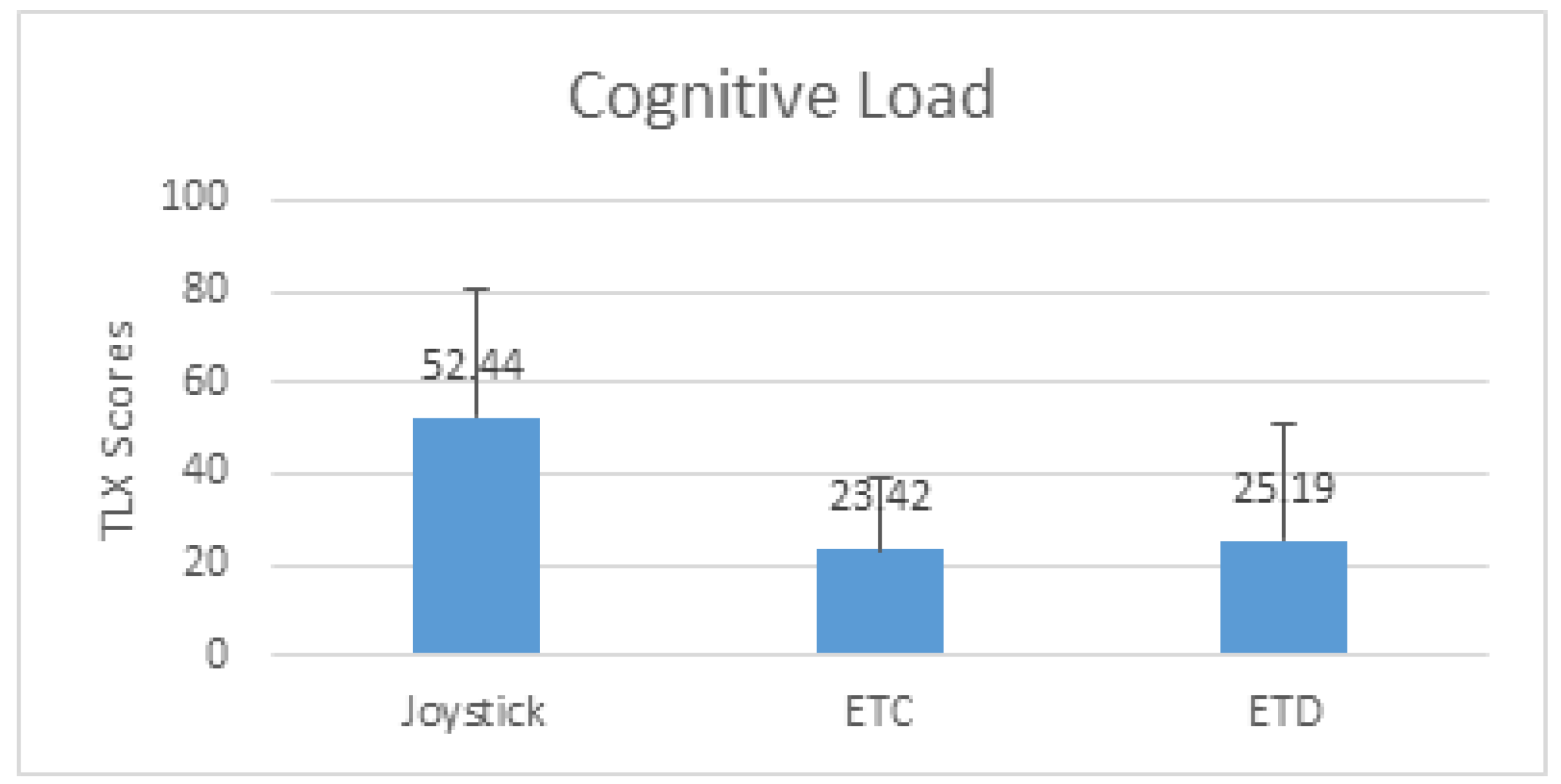

- Cognitive load, measured as the NASA Task Load Index (TLX) score.

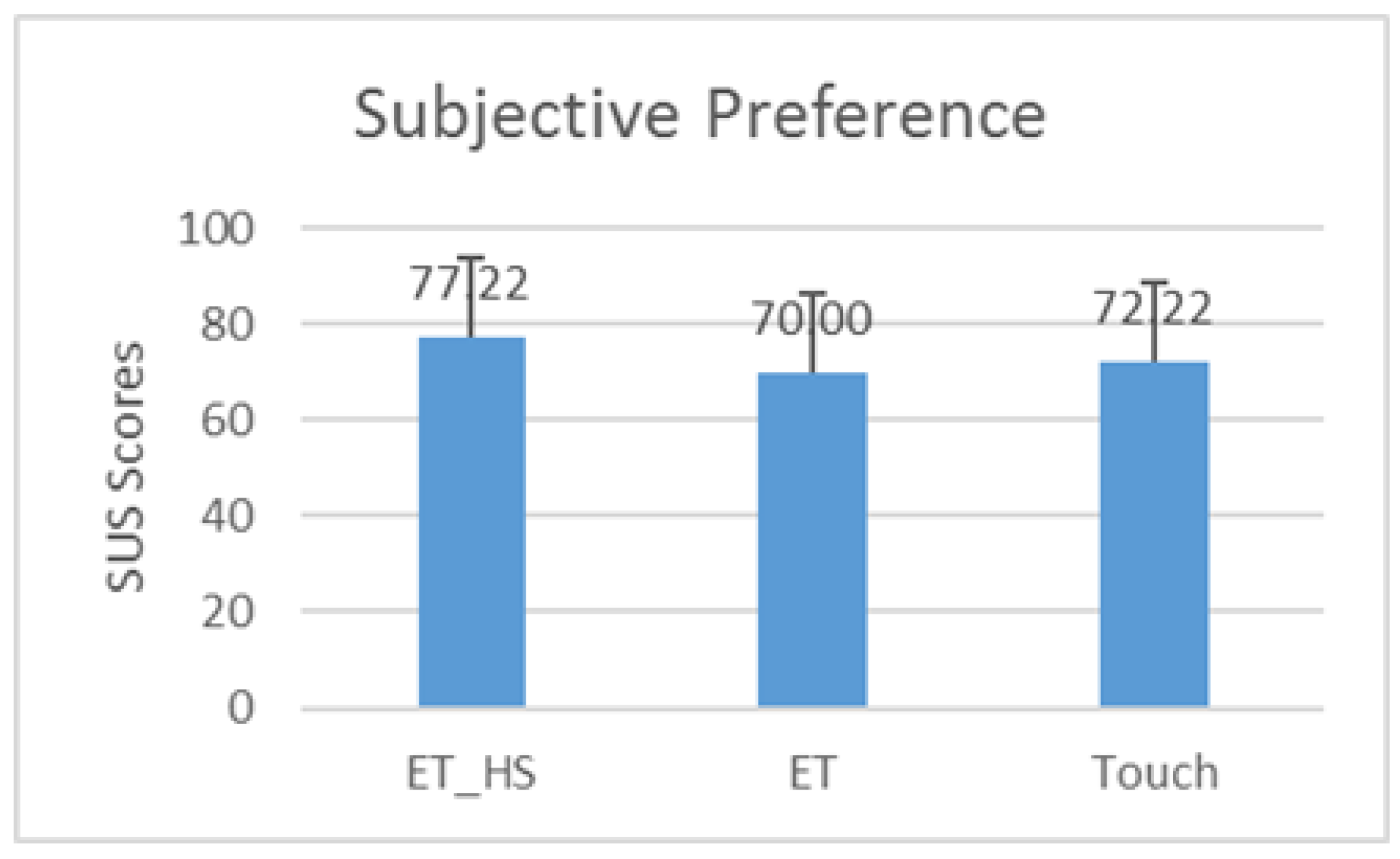

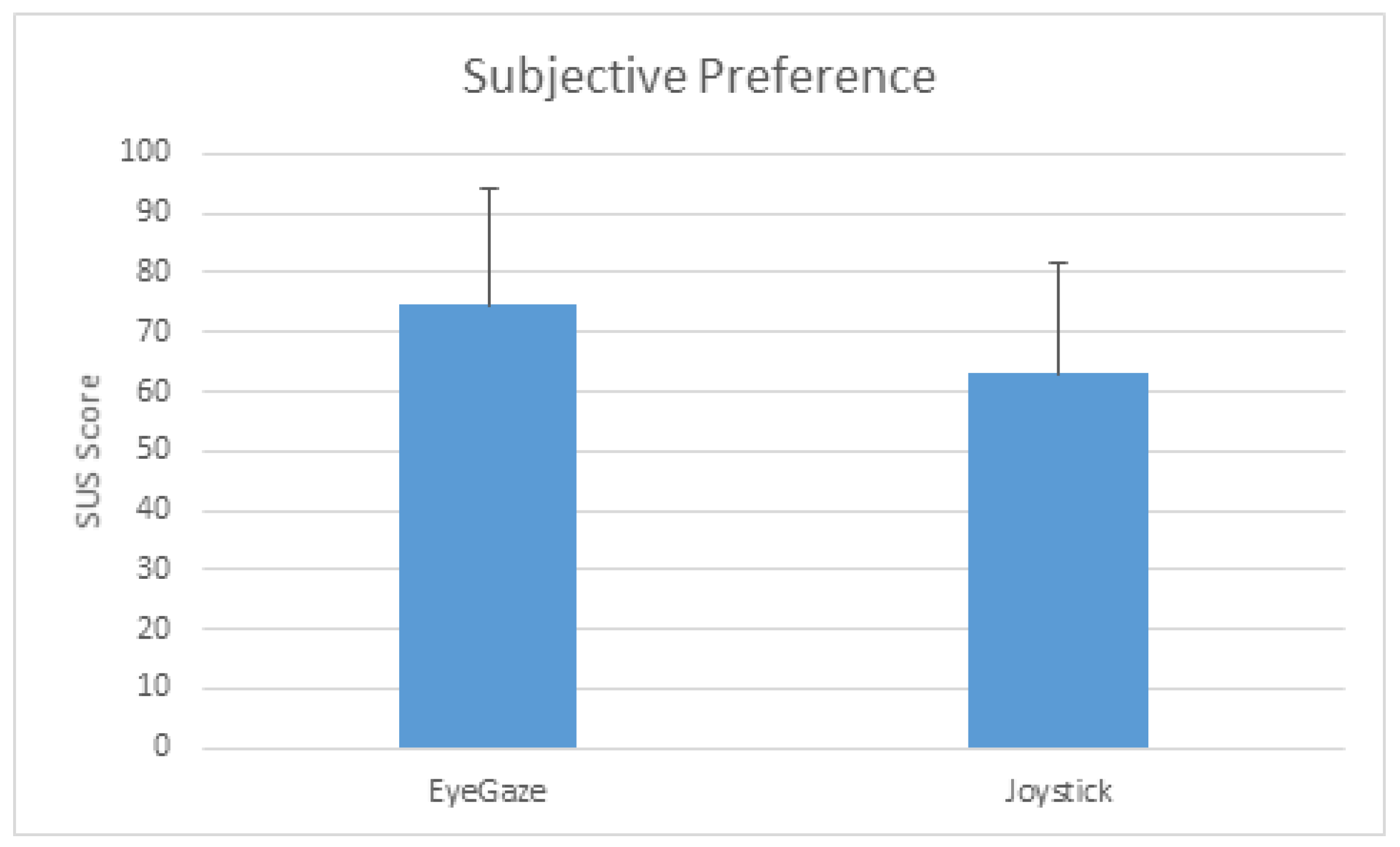

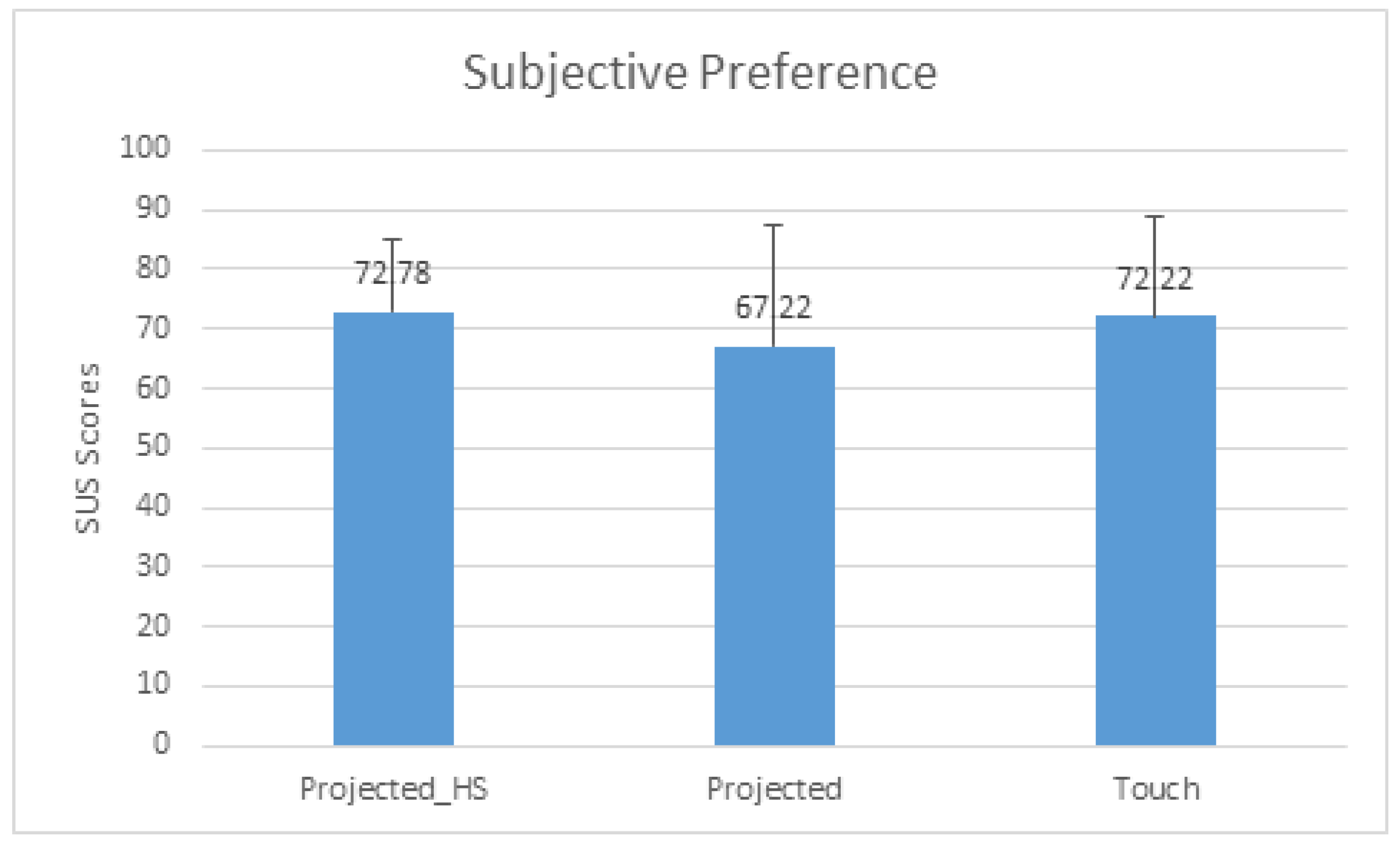

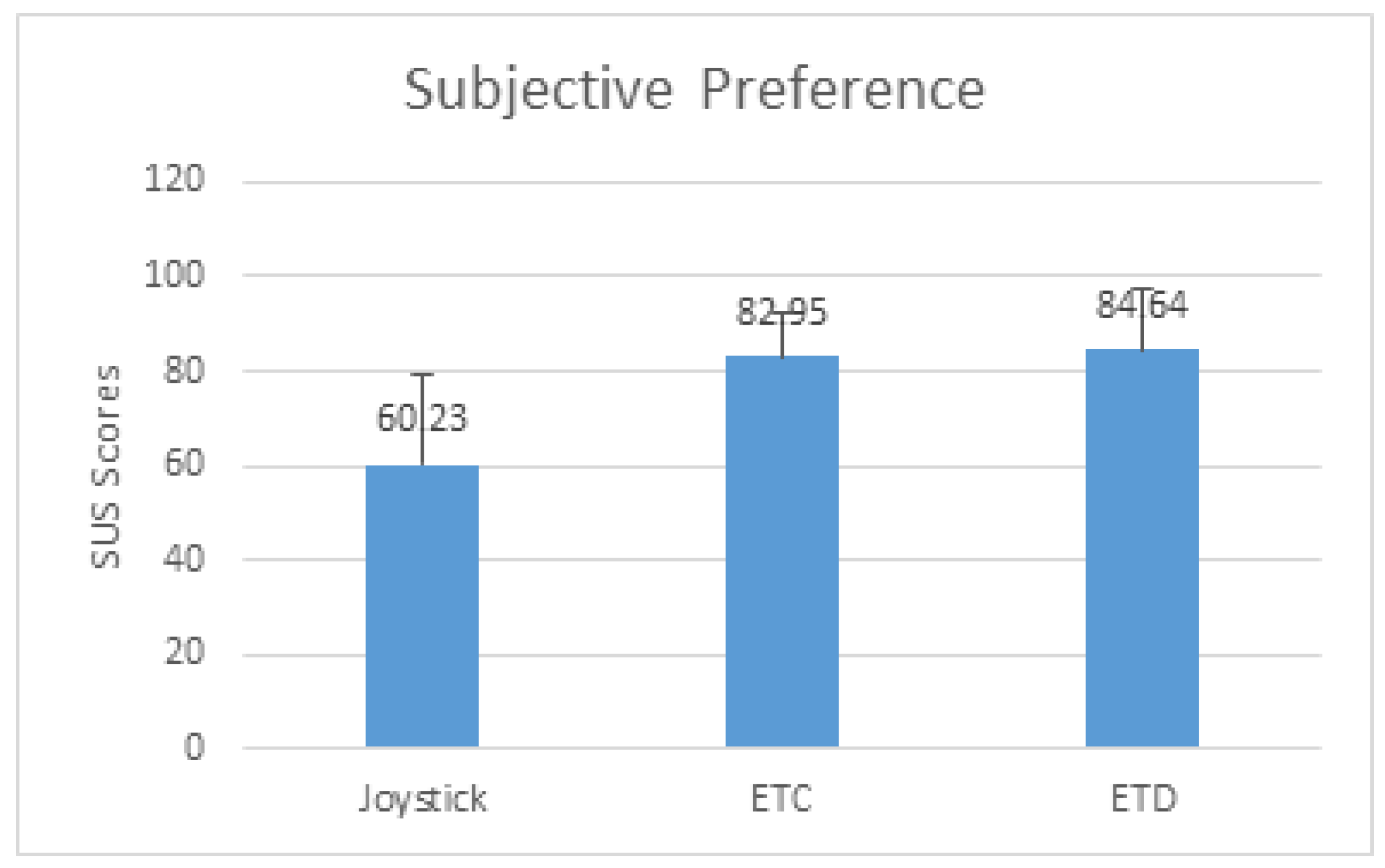

- Subjective preference, measured as the System Usability Scale (SUS) score.
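The driving measures listed above can be computed from per-sample simulator logs. This is a minimal sketch assuming equally spaced samples of lateral position, designated lane centre, speed, and steering angle; the function name and inputs are hypothetical. In particular, it substitutes a simplified lane deviation (mean absolute distance from the lane centre) for the reference-path computation specified in Annex E of ISO 26022, so its values should not be taken as equivalent to results computed according to the standard.

```python
import statistics
from typing import Sequence


def driving_metrics(lateral_pos: Sequence[float],
                    lane_centre: Sequence[float],
                    speed: Sequence[float],
                    steering_deg: Sequence[float]) -> dict[str, float]:
    """Simplified driving measures from equally spaced simulator samples."""
    if not (len(lateral_pos) == len(lane_centre) == len(speed) == len(steering_deg)):
        raise ValueError("all signals must have the same number of samples")
    # Mean absolute deviation from the designated lane centre (simplified;
    # not the ISO 26022 Annex E reference-path computation).
    mean_dev = statistics.fmean(abs(p - c) for p, c in zip(lateral_pos, lane_centre))
    return {
        "mean_lane_deviation": mean_dev,
        "average_speed": statistics.fmean(speed),
        # Sample standard deviation; large values indicate sharp lane changes.
        "sd_steering_angle": statistics.stdev(steering_deg),
    }
```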

- The primary task consisted of a take-off manoeuvre followed by a straight-and-level manoeuvre without trim control. After take-off, participants were instructed to level the flight at an altitude between 1000 ft and 2000 ft.

- A pointing and selection task complying with the ISO 9241-9 standard was developed as the secondary task, with the target sizes and distances listed in Table 2.

- The flow of the secondary task is listed below:

- An auditory cue (beep sound) was played to the participant. This mimicked a situation in which the pilot needs to look at the MFD to obtain some information.

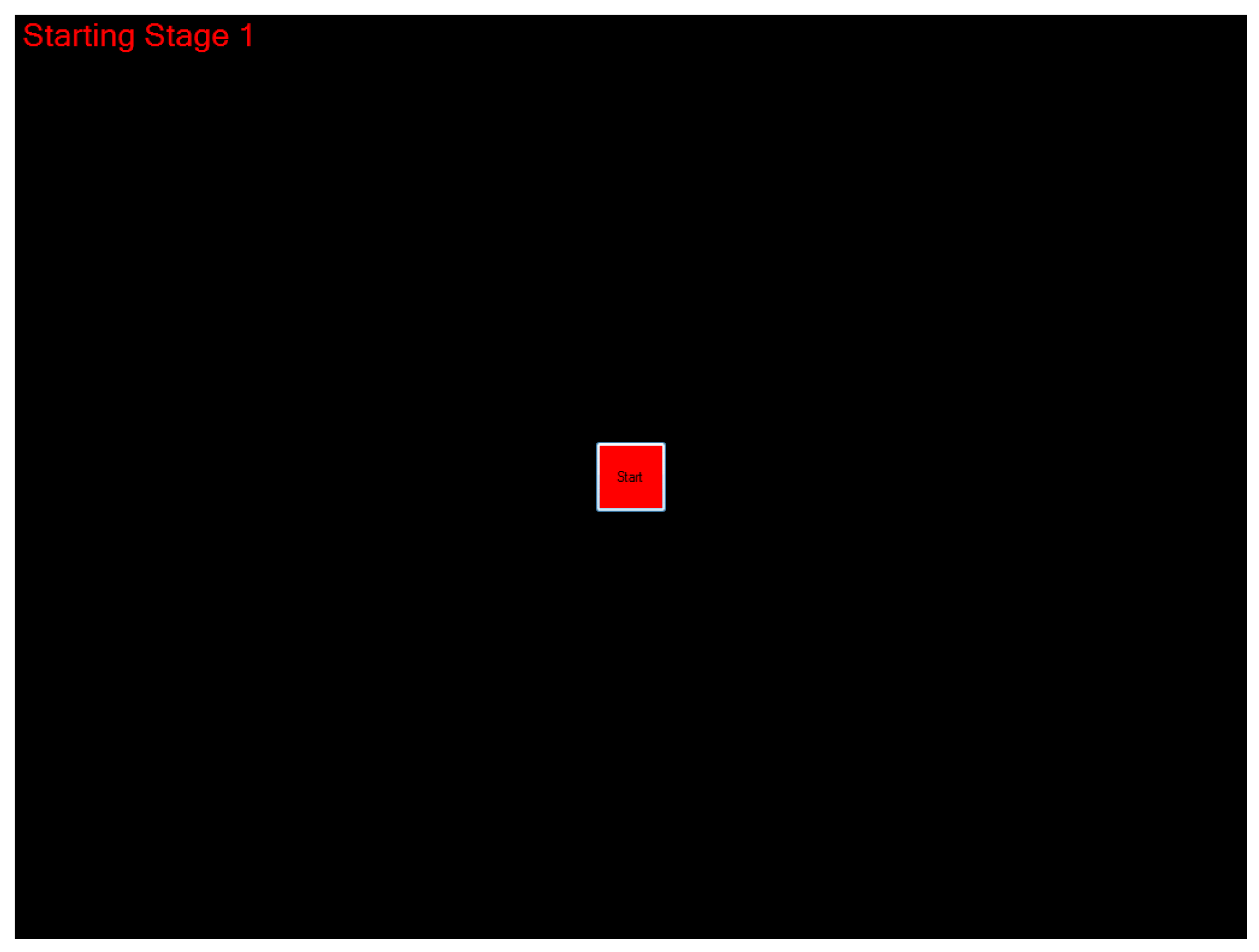

- The participant shifted his head and gaze to the MFD in search of the cursor. For this task, the cursor was always placed at the center of the screen on a red button (Figure 20). The eye tracker was used to determine whether the pilot gazed at the button. The time the participant took to shift his head and gaze to the cursor on the MFD after hearing the auditory cue was measured and logged.

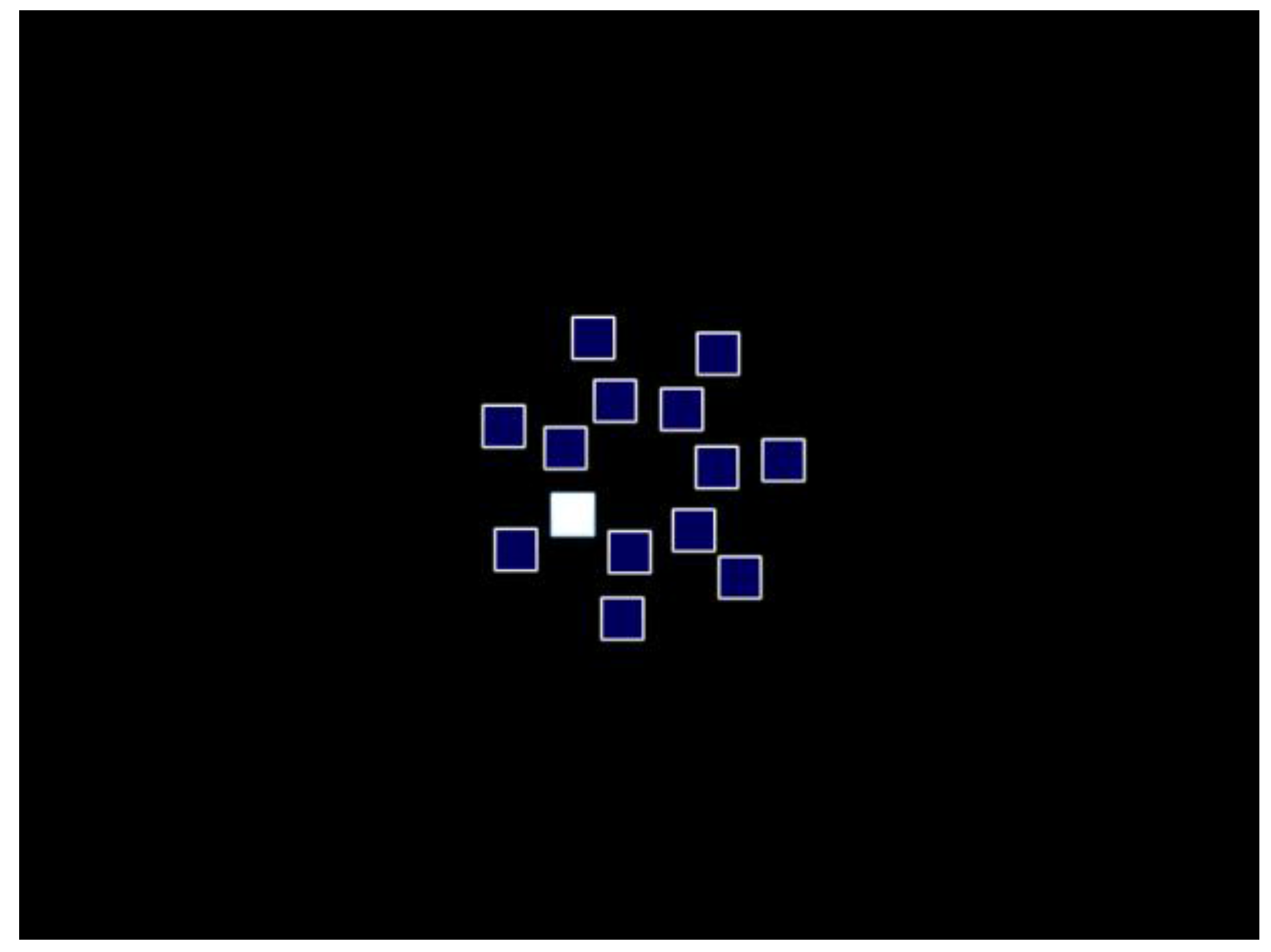

- Upon activation of the red button, fourteen buttons were displayed in a circle around the center of the screen. One button, referred to as the target, was colored white so that it stood out; the rest, referred to as distractors, were colored blue (Figure 21).

- The participant distinguished the target from the distractors, manipulated the cursor using the provided input modality (TDS or eye gaze) to reach the target, and then selected the target using the Slew Button on the HOTAS. The time from the activation of the red button to the selection of the target button was measured and logged as the pointing and selection time.

- The participant then shifted his gaze back to the flight simulator to continue with the primary task.
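The intervals defined in the secondary-task flow above can be derived from timestamps logged per trial. This minimal sketch assumes a hypothetical per-trial record holding timestamps (in seconds) under the illustrative keys `cue`, `gaze_on_mfd`, `red_button`, and `select`, plus a flag for whether the selected button was the target. It computes the head-and-gaze shift time, the pointing and selection time, the overall response time, and the error percentage used as a secondary-task measure.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Trial:
    events: dict[str, float]   # hypothetical event name -> timestamp (s)
    correct: bool              # True if the selected button was the target


def trial_times(t: Trial) -> dict[str, float]:
    e = t.events
    return {
        # Auditory cue until the eye tracker detects gaze on the red button.
        "gaze_shift": e["gaze_on_mfd"] - e["cue"],
        # Red-button activation until target selection.
        "pointing_selection": e["select"] - e["red_button"],
        # Cue until selection: reaction, task switch, pointing and selection.
        "response": e["select"] - e["cue"],
    }


def error_percent(trials: Sequence[Trial]) -> float:
    """Wrong selections as a percentage of all selections."""
    if not trials:
        return 0.0
    return 100.0 * sum(not t.correct for t in trials) / len(trials)
```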

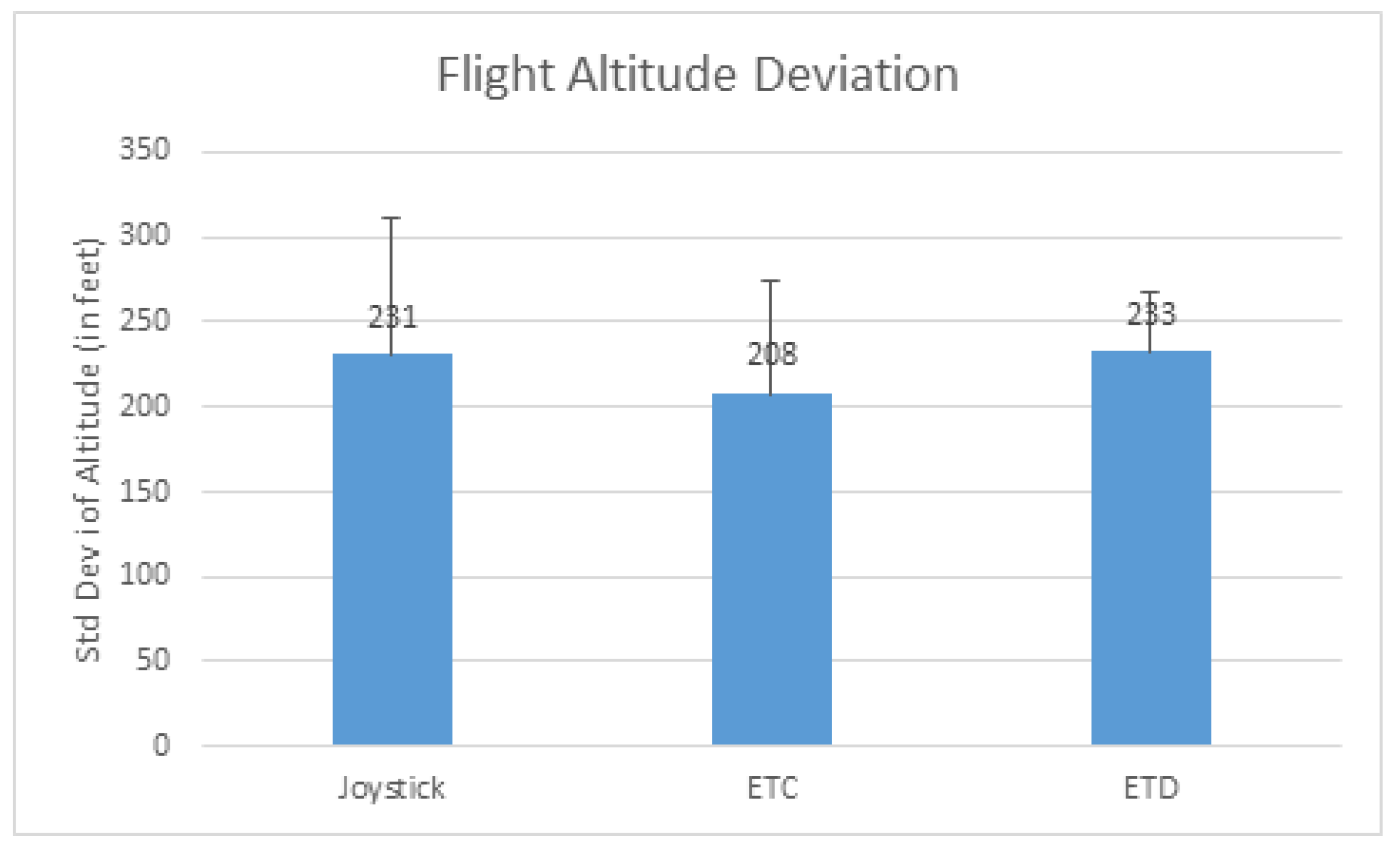

- Flying performance was measured by:

- Deviation from the straight-line flying path;

- Deviation in altitude outside the specified envelope of 1000 ft to 2000 ft;

- Total distance flown.
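The three flying measures can be sketched from a logged trajectory. This minimal sketch assumes the simulator logs horizontal position (x, y) and altitude, all in feet, at equally spaced samples, and that the intended straight path is the line through the first logged position along a known heading. The text does not state how the straight line was defined or how altitude deviations were aggregated, so both choices here (mean perpendicular distance; mean excursion beyond the 1000 ft to 2000 ft envelope) and the function name are assumptions.

```python
import math
from typing import Sequence

LOW_FT, HIGH_FT = 1000.0, 2000.0   # altitude envelope from the task instructions


def flying_metrics(xs: Sequence[float], ys: Sequence[float],
                   alts: Sequence[float], heading_rad: float) -> dict[str, float]:
    """Simplified flying measures from equally spaced simulator samples."""
    # Unit vector of the intended straight path through the first sample.
    ux, uy = math.cos(heading_rad), math.sin(heading_rad)
    x0, y0 = xs[0], ys[0]
    # Perpendicular distance of each sample from that line (2-D cross product).
    lateral = [abs((x - x0) * uy - (y - y0) * ux) for x, y in zip(xs, ys)]
    # Amount by which each altitude sample lies outside the envelope (0 inside).
    excursion = [max(LOW_FT - a, 0.0, a - HIGH_FT) for a in alts]
    # Total distance flown as the summed length of the horizontal track.
    distance = sum(math.dist(p, q) for p, q in zip(zip(xs, ys), zip(xs[1:], ys[1:])))
    return {
        "mean_path_deviation": sum(lateral) / len(lateral),
        "mean_altitude_excursion": sum(excursion) / len(excursion),
        "distance_flown": distance,
    }
```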

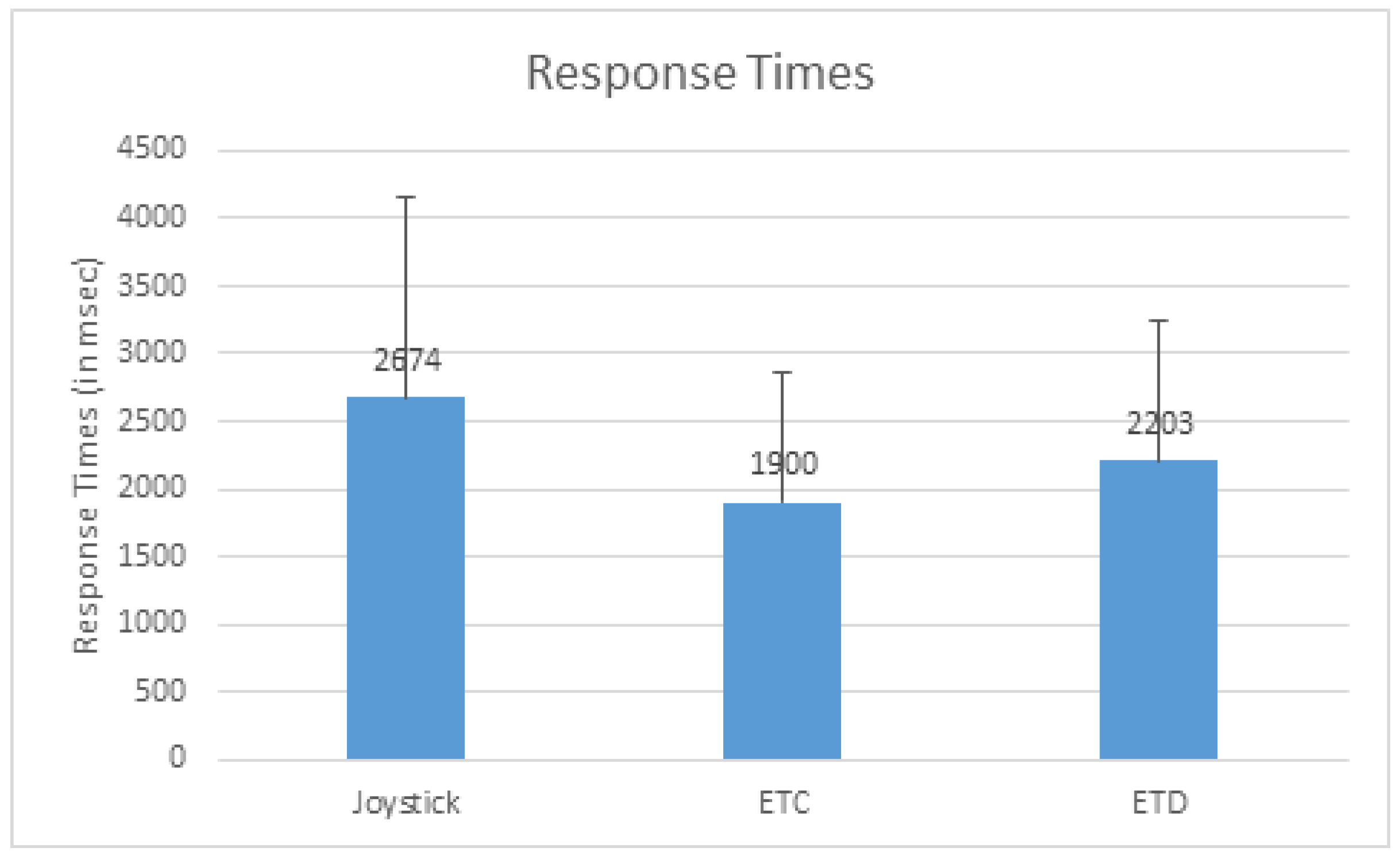

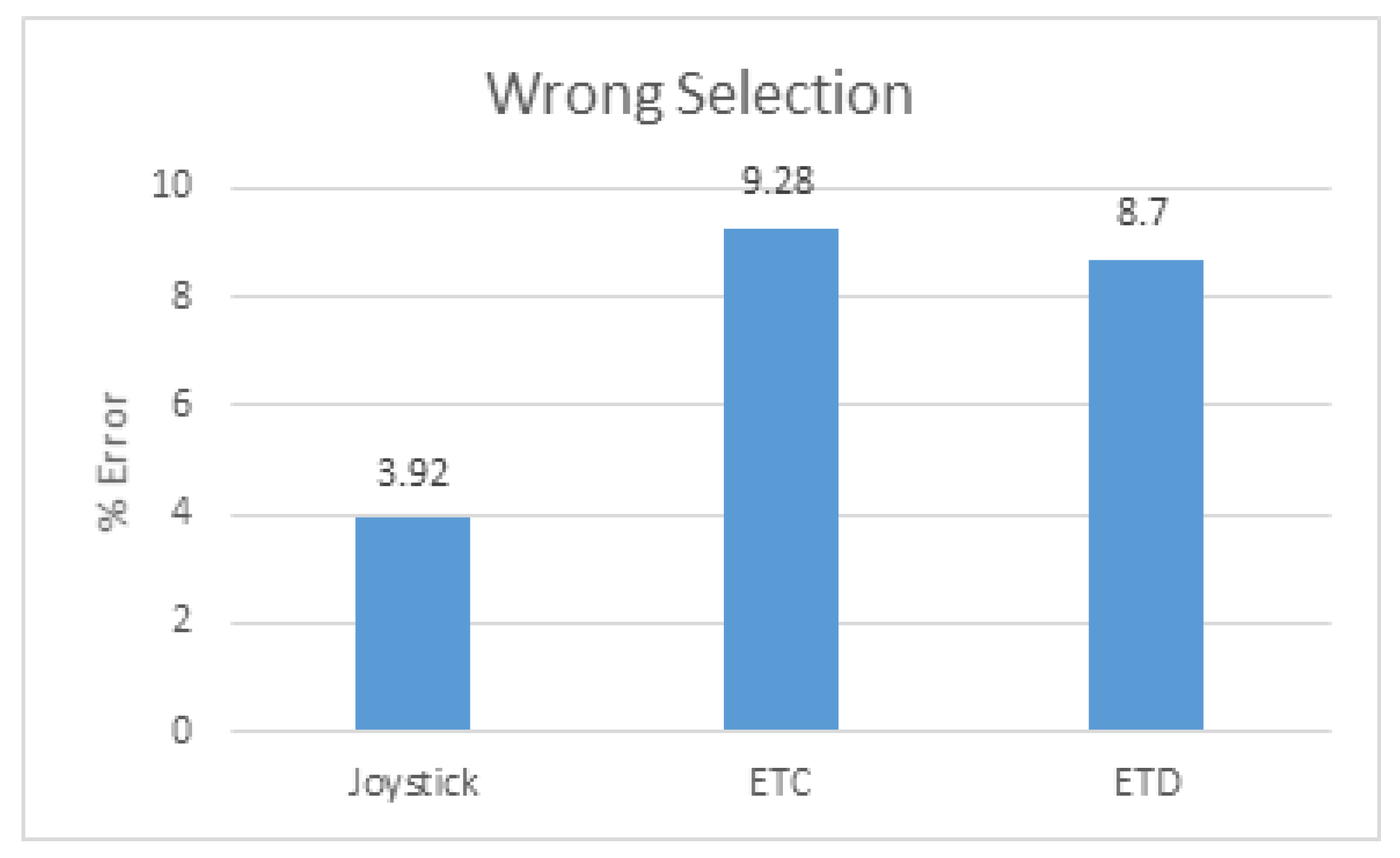

- Pointing and clicking performance was measured as:

- Error in the secondary task, as the number of wrong buttons selected, reported as a percentage of the total number of button selections;

- Response time, as the time difference between the auditory cue and the instant the target button was selected. This duration adds up the time to react to the auditory cue, the time to switch from the primary to the secondary task, and the pointing and selection time in the secondary task;

- Cognitive load, measured as the NASA Task Load Index (TLX) score;

- Subjective preference, measured as the System Usability Scale (SUS) score.
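Both studies report NASA TLX and SUS scores. The sketch below applies the standard SUS scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. For the TLX it assumes the unweighted "raw TLX" variant, the mean of the six subscale ratings, because the text does not say whether the weighted variant with pairwise-comparison weights was used. Function names are illustrative.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS scoring: ten items rated 1-5, result on a 0-100 scale."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses in the range 1..5")
    total = 0
    for i, r in enumerate(responses, start=1):
        # Odd-numbered (positively worded) items: r - 1; even-numbered items: 5 - r.
        total += (r - 1) if i % 2 == 1 else (5 - r)
    return total * 2.5


def raw_tlx(ratings: Sequence[float]) -> float:
    """Unweighted ("raw") NASA TLX: mean of the six subscale ratings (0-100).

    Assumption: the weighted TLX variant would also need the
    pairwise-comparison weights as input.
    """
    if len(ratings) != 6:
        raise ValueError("NASA TLX has six subscales")
    return sum(ratings) / 6
```

For example, answering 5 to every odd item and 1 to every even item gives the maximum SUS score of 100, while a neutral 3 on every item gives 50.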

4.2. Confirmatory Studies

- Using HOTAS joystick,

- Using eye gaze tracking with clear visor (ETC),

- Using eye gaze tracking with dark visor (ETD).

5. Discussion

6. Conclusions

Author Contributions

Conflicts of Interest

References

- Huey, E. The Psychology and Pedagogy of Reading (Reprint); MIT Press: Cambridge, MA, USA, 1968.

- Duchowski, A.T. Eye Tracking Methodology; Springer: London, UK, 2007.

- Testing Procedure of Tobii Tracker. Available online: https://www.tobiipro.com/about/quality/ (accessed on 17 October 2017).

- Voronka, N.; Jacobus, C.J. Low-Cost Non-Imaging Eye Tracker System for Computer Control. U.S. Patent No. 6299308 B1, 9 October 2001.

- Milekic, S. Using Gaze Actions to Interact with a Display. U.S. Patent No. 7561143 B1, 14 July 2009.

- Jacob, M.; Hurwitz, B.; Kamhi, G. Eye Tracking Based Selective Accentuation of Portions of a Display. WO Patent No. 2013169237 A1, 14 November 2013.

- Farrell, S.; Zhai, S. System and Method for Selectively Expanding or Contracting a Portion of a Display Using Eye-Gaze Tracking. U.S. Patent No. 20050047629 A1, 3 March 2005.

- Martins, F.C.M. Passive Gaze-Driven Browsing. U.S. Patent No. 6608615 B1, 19 August 2003.

- Zhai, S.; Morimoto, C.; Ihde, S. Manual and Gaze Input Cascaded (MAGIC) Pointing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI), Pittsburgh, PA, USA, 15–20 May 1999; ACM: New York, NY, USA, 1999.

- Ashdown, M.; Oka, K.; Sato, Y. Combining Head Tracking and Mouse Input for a GUI on Multiple Monitors. In Proceedings of the CHI Late Breaking Results, Portland, OR, USA, 2–7 April 2005.

- Dostal, J.; Kristensson, P.O.; Quigley, A. Subtle Gaze-Dependent Techniques for Visualising Display Changes in Multi-Display Environments. In Proceedings of the ACM International Conference on Intelligent User Interfaces (IUI), Santa Monica, CA, USA, 19–22 March 2013.

- Zhang, Y.; Bulling, A.; Gellersen, H. SideWays: A Gaze Interface for Spontaneous Interaction with Situated Displays. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), Paris, France, 27 April–2 May 2013.

- Fu, Y.; Huang, T.S. hMouse: Head Tracking Driven Virtual Computer Mouse. In Proceedings of the IEEE Workshop on Applications of Computer Vision, Austin, TX, USA, 21–22 February 2007.

- CameraMouse. Available online: http://www.cameramouse.com (accessed on 22 September 2013).

- Fejtova, M.; Figueiredo, L.; Novák, P. Hands-free interaction with a computer and other technologies. Univers. Access Inf. Soc. 2009, 8, 277–295.

- Fitts, P.M. The Information Capacity of the Human Motor System in Controlling the Amplitude of Movement. J. Exp. Psychol. 1954, 47, 381–391.

- Bates, R. Multimodal Eye-Based Interaction for Zoomed Target Selection on a Standard Graphical User Interface. In Proceedings of the INTERACT, Edinburgh, Scotland, 30 August–3 September 1999.

- Zander, T.O.; Gaertner, M.; Kothe, C.; Vilimek, R. Combining Eye Gaze Input with a Brain–Computer Interface for Touchless Human–Computer Interaction. Int. J. Hum. Comput. Interact. 2010, 27, 38–51.

- Penkar, A.M.; Lutteroth, C.; Weber, G. Designing for the Eye—Design Parameters for Dwell in Gaze Interaction. In Proceedings of the 24th Australian Computer-Human Interaction Conference (OZCHI 2012), Melbourne, Australia, 26–30 November 2012.

- Grandt, M.; Pfendler, C.; Mooshage, O. Empirical Comparison of Five Input Devices for Anti-Air Warfare Operators. Available online: http://dodccrp.org/events/8th_ICCRTS/pdf/035.pdf (accessed on 30 November 2013).

- Lutteroth, C.; Penkar, M.; Weber, G. Gaze vs. Mouse: A Fast and Accurate Gaze-Only Click Alternative. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 11–15 November 2015.

- Pfeuffer, K.; Gellersen, H. Gaze and Touch Interaction on Tablets. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; ACM: New York, NY, USA, 2016; pp. 301–311.

- Voelker, S.; Matviienko, A.; Schöning, J.; Borchers, J. Combining Direct and Indirect Touch Input for Interactive Desktop Workspaces using Gaze Input. In Proceedings of the 3rd ACM Symposium on Spatial User Interaction, Los Angeles, CA, USA, 8–9 August 2015.

- Jagacinski, R.J.; Monk, D.L. Fitts’ Law in Two Dimensions with Hand and Head Movements. J. Mot. Behav. 1985, 17, 77–95.

- Kaber, D.B.; Riley, J.M.; Tan, K.W. Improved usability of aviation automation through direct manipulation and graphical user interface design. Int. J. Aviat. Psychol. 2002, 12, 153–178.

- Baty, D.L.; Watkins, M.L. An Advanced Cockpit Instrumentation System: The Co-Ordinated Cockpit Displays; NASA Technical Memorandum 78559; NASA Scientific and Technical Information Office: Washington, DC, USA, 1979.

- Sokol, T.C.; Stekler, H.O. Technological change in the military aircraft cockpit industry. Technol. Forecast. Soc. Chang. 1988, 33, 55–62.

- Furness, T.A. The super cockpit and its human factors challenges. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Dayton, OH, USA, 29 September–3 October 1986; Volume 30, p. 48.

- Thomas, P.; Biswas, P.; Langdon, P. State-of-the-Art and Future Concepts for Interaction in Aircraft Cockpits. In Proceedings of the HCI International, Los Angeles, CA, USA, 2–7 August 2015.

- MacKenzie, I.S.; Sellen, A.; Buxton, W. A comparison of input devices in elemental pointing and dragging tasks. In Proceedings of the CHI’91 Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 27 April–2 May 1991; ACM: New York, NY, USA, 1991; pp. 161–166.

- Thomas, P. Performance, characteristics, and error rates of cursor control devices for aircraft cockpit interaction. Int. J. Hum. Comput. Stud. 2018, 109, 41–53.

- Biswas, P.; Langdon, P. Multimodal Intelligent Eye-Gaze Tracking System. Int. J. Hum. Comput. Interact. 2015, 31, 277–294.

- Chang, W.; Hwang, W.; Ji, Y.G. Haptic Seat Interfaces for Driver Information and Warning Systems. Int. J. Hum. Comput. Interact. 2011, 27, 1119–1132.

- Miniotas, D. Application of Fitts’ Law to Eye Gaze Interaction. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), The Hague, The Netherlands, 1–6 April 2001; pp. 339–340.

- Ohn-Bar, E.; Trivedi, M. Hand Gesture Recognition in Real-Time for Automotive Interfaces: A Multimodal Vision-based Approach and Evaluations. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2368–2377.

- Feld, M.; Meixner, G.; Mahr, A.; Seissler, M.; Kalyanasundaram, B. Generating a Personalized UI for the Car: A User-Adaptive Rendering Architecture. In Lecture Notes in Computer Science, Proceedings of the International Conference on User Modeling, Adaptation, and Personalization (UMAP), Rome, Italy, 10–14 June 2013; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7899, pp. 344–346.

- Normark, C.J. Design and Evaluation of a Touch-Based Personalizable In-vehicle User Interface. Int. J. Hum. Comput. Interact. 2015, 31, 731–745.

- Poitschke, T.; Laquai, F.; Stamboliev, S.; Rigoll, G. Gaze-based interaction on multiple displays in an automotive environment. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Anchorage, AK, USA, 9–12 October 2011; pp. 543–548.

- Duarte, R.S.; Oliveira, B.F. Comparing the Glance Time Required to Operate Standard Interface Devices and Touchscreen Devices in Automobiles. In Proceedings of the ACM Interacción, Puerto de la Cruz, Tenerife, Spain, 10–12 September 2014.

- May, K.R.; Walker, B.N.; Gable, T.M. A Multimodal Air Gesture Interface for In Vehicle Menu Navigation. In Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (Automotive UI 2014), Seattle, WA, USA, 17–19 September 2014; ACM: New York, NY, USA, 2014.

- Weinberg, G.; Knowles, A.; Langer, P. BullsEye: An Automotive Touch Interface that’s always on Target. In Proceedings of the 4th International Conference on Automotive UI, Portsmouth, NH, USA, 17–19 October 2014.

- Mondragon, C.K.; Bleacher, B. Eye Tracking Control of Vehicle Entertainment Systems. U.S. Patent No. WO2013036632, 14 March 2013.

- Ruiz, J.; Lank, E. Speeding pointing in tiled widgets: Understanding the effects of target expansion and misprediction. In Proceedings of the 15th International Conference on Intelligent User Interfaces (IUI’10), Hong Kong, China, 7–10 February 2010; ACM: New York, NY, USA, 2010; pp. 229–238.

- Seder, T.A.; Szczerba, J.F.; Cui, D. Virtual Cursor for Road Scene Object Selection on Full Windshield Head-up Display. U.S. Patent No. US20120174004, 16 June 2015.

- Vertegaal, R. A Fitts’ Law Comparison of Eye Tracking and Manual Input in the Selection of Visual Targets. In Proceedings of the International Conference of Multimodal Interaction, Chania, Crete, Greece, 20–22 October 2008; pp. 241–248.

- System Usability Scale. Available online: http://en.wikipedia.org/wiki/System_usability_scale (accessed on 12 July 2014).

- Kim, J.; Lim, J.; Jo, C.; Kim, K. Utilization of Visual Information Perception Characteristics to Improve Classification Accuracy of Driver’s Visual Search Intention for Intelligent Vehicle. Int. J. Hum. Comput. Interact. 2015, 31, 717–729.

- Flight Manual for the Package Eurofighter Typhoon Professional. Available online: https://www.afs-design.de/pdf/AFS_EFpro_English.pdf (accessed on 3 October 2017).

- Biswas, P.; Langdon, P. Eye-Gaze Tracking based Interaction in India. Procedia Comput. Sci. 2014, 39, 59–66.

- Ware, C.; Mikaelian, H.M. An Evaluation of an Eye Tracker as a Device for Computer Input. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), Toronto, ON, Canada, 5–9 April 1987; pp. 183–187.

- Murata, A. Improvement of Pointing Time by Predicting Targets in Pointing with a PC Mouse. Int. J. Hum. Comput. Interact. 1998, 10, 23–32.

- Pasqual, P.; Wobbrock, J. Mouse pointing end-point prediction using kinematic template matching. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’14), Toronto, ON, Canada, 26 April–1 May 2014; pp. 743–752.

- Biswas, P. Exploring the Use of Eye Gaze Controlled Interfaces in Automotive Environments; Springer: Cham, Switzerland, 2016; ISBN 978-3-319-40708-1.

- Tobii X3 Eye Tracker. Available online: https://www.tobiipro.com/product-listing/tobii-pro-x3-120/ (accessed on 31 August 2016).

- Treisman, A.; Gelade, G. A Feature Integration Theory of Attention. Cogn. Psychol. 1980, 12, 97–136.

- HOTAS. Available online: http://www.thrustmaster.com/products/hotas-warthog (accessed on 12 July 2016).

- Large, D.; Burnett, G.; Crundall, E.; Lawson, G.; Skrypchuk, L. Twist It, Touch It, Push It, Swipe It: Evaluating Secondary Input Devices for Use with an Automotive Touchscreen HMI. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (ACM AutoUI), Ann Arbor, MI, USA, 24–26 October 2016.

- Ward, D.J.; Blackwell, A.F.; MacKay, D.J.C. Dasher—A Data Entry Interface Using Continuous Gestures and Language Models. In Proceedings of the 13th Annual ACM Symposium on User Interface Software and Technology (UIST), San Diego, CA, USA, 6–8 November 2000.

- Kern, D.; Mahr, A.; Castronovo, S.; Schmidt, A.; Müller, C. Making Use of Drivers’ Glances onto the Screen for Explicit Gaze-Based Interaction. In Proceedings of the Second International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Pittsburgh, PA, USA, 11–12 November 2010.

- Zhai, S.; Hunter, M.; Smith, B.A. The Metropolis Keyboard—An Exploration of Quantitative Techniques for Virtual Keyboard Design. In Proceedings of the ACM Symposium on User Interface Software and Technology (UIST 2000), San Diego, CA, USA, 5–8 November 2000.

- Biswas, P.; Twist, S.; Godsill, S. Intelligent Finger Movement Controlled Interface for Automotive Environment. In Proceedings of the British HCI Conference, Sunderland, UK, 3–6 July 2017.

| Parameter | Primary Display | Secondary Display |
|---|---|---|
| Projector model | Dell 1220 | Acer X1185G |
| Resolution (pixels) | 1024 × 768 | 1024 × 768 |
| Size of projection (mm) | 1200 × 900 | 380 × 295 |
| Distance from eye (mm) | 2100 | 285 |

Width of Target

| Pixels | Size (mm) | Arc Angle |
|---|---|---|
| 40 | 14.6 | 3° |
| 45 | 18.7 | 3.4° |
| 55 | 20.4 | 4.1° |
| 60 | 22.3 | 4.5° |

Distance of Target from the Centre of Screen

| Pixels | Distance (mm) | Arc Angle |
|---|---|---|
| 65 | 24.2 | 4.8° |
| 120 | 44.6 | 9.0° |
| 200 | 74.2 | 14.8° |
| 315 | 116.8 | 23.2° |
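The millimetre and arc-angle columns of the target table can be reproduced from the pixel values. This minimal sketch assumes the targets were shown on the secondary display (380 mm across 1024 pixels, viewed from 285 mm) and that the arc angle is the full visual angle θ = 2·arctan(s / 2d) for an extent s at viewing distance d. Under these assumptions every computed angle agrees with the tabulated one to within 0.1°; for the 45-pixel width, the conversion gives about 16.7 mm rather than the tabulated 18.7 mm, although the computed angle still matches the tabulated 3.4°. The sketch also computes the Shannon formulation of Fitts' index of difficulty, ID = log2(D/W + 1), used in ISO 9241-9. Because the cursor starts at the screen centre, it treats the distance from the centre as the movement amplitude D. Constant and function names are illustrative.

```python
import math

MM_PER_PX = 380 / 1024      # assumed: secondary display width / horizontal resolution
EYE_DIST_MM = 285           # secondary display distance from the eye

WIDTHS_PX = [40, 45, 55, 60]
DISTANCES_PX = [65, 120, 200, 315]


def px_to_mm(px: float) -> float:
    return px * MM_PER_PX


def visual_angle_deg(px: float, eye_dist_mm: float = EYE_DIST_MM) -> float:
    """Full visual angle subtended by an extent of `px` pixels."""
    return math.degrees(2 * math.atan(px_to_mm(px) / (2 * eye_dist_mm)))


def index_of_difficulty(distance_px: float, width_px: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance_px / width_px + 1)


if __name__ == "__main__":
    for px in WIDTHS_PX + DISTANCES_PX:
        print(f"{px:4d} px  {px_to_mm(px):6.1f} mm  {visual_angle_deg(px):5.1f} deg")
    for d in DISTANCES_PX:
        print(d, [round(index_of_difficulty(d, w), 2) for w in WIDTHS_PX])
```

With these values the 16 width and distance combinations span an index of difficulty from about 1.1 bits (65 px, 60 px) to about 3.1 bits (315 px, 40 px).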

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Prabhakar, G.; Biswas, P. Eye Gaze Controlled Projected Display in Automotive and Military Aviation Environments. Multimodal Technol. Interact. 2018, 2, 1. https://doi.org/10.3390/mti2010001
