Comparing Map Learning between Touchscreen-Based Visual and Haptic Displays: A Behavioral Evaluation with Blind and Sighted Users

Abstract

1. Introduction

2. Related Work

3. Contributions

4. Materials and Methods

4.1. Experiment 1: Evaluation with BVI Users

4.1.1. Participants

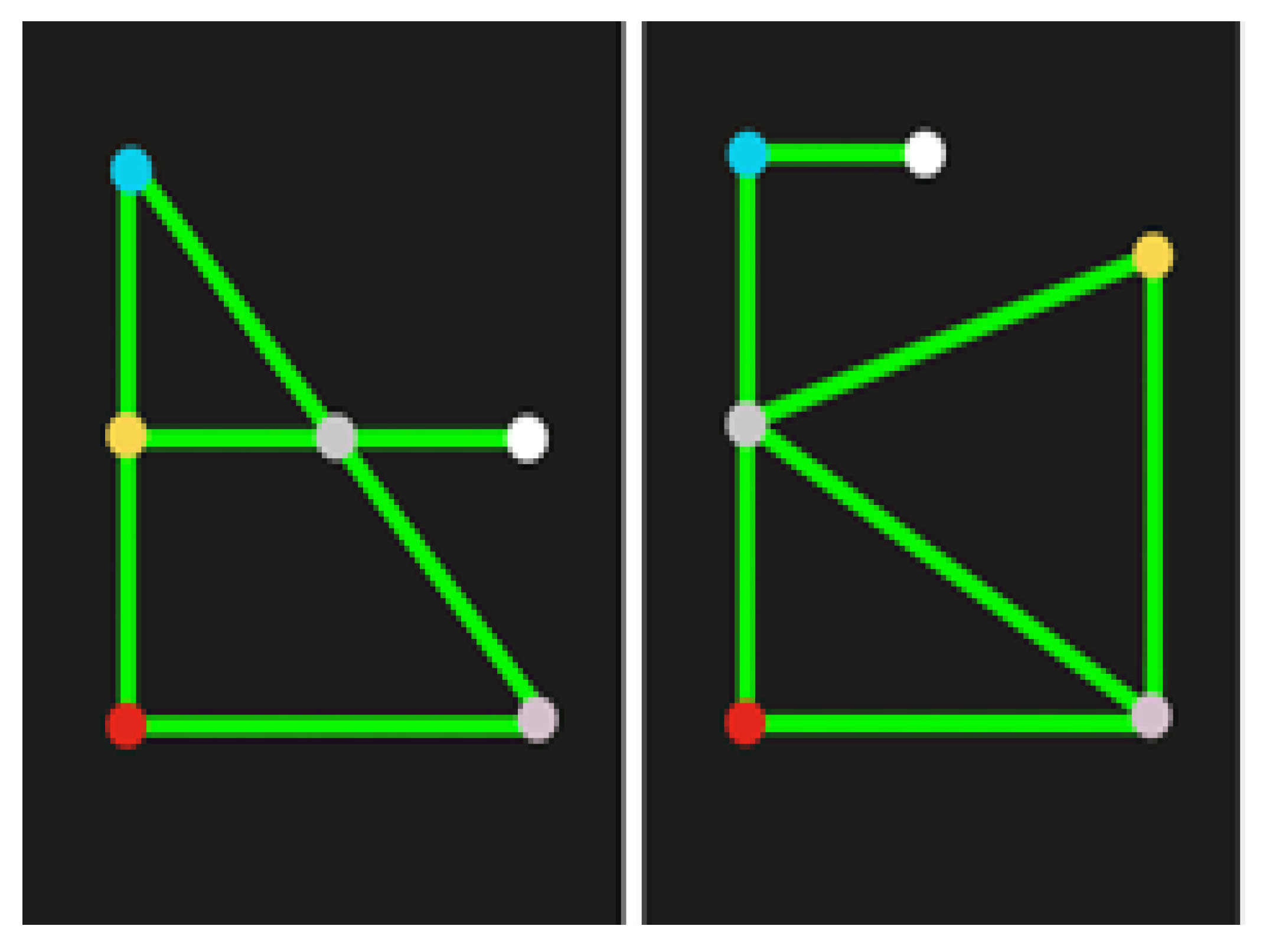

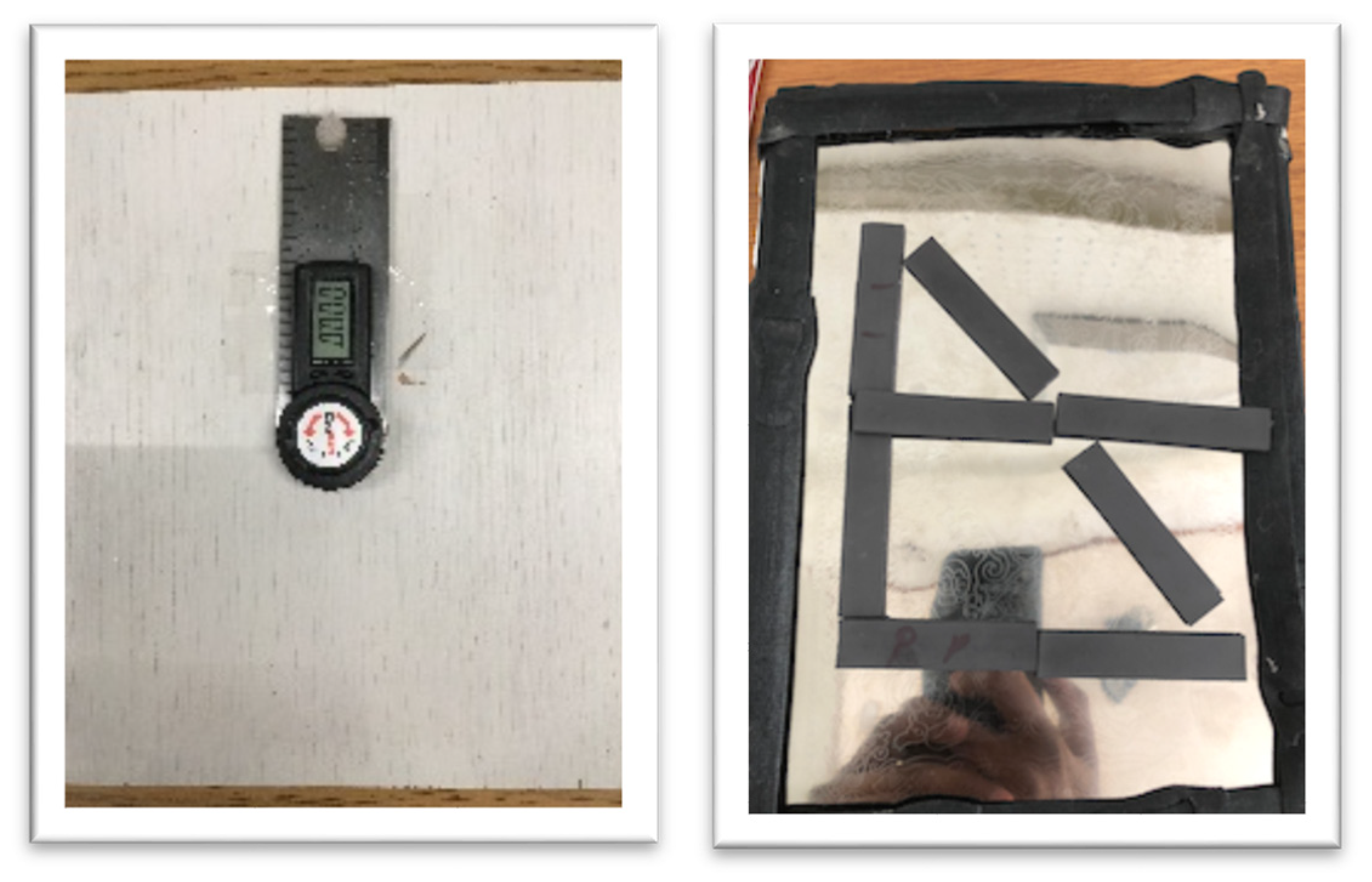

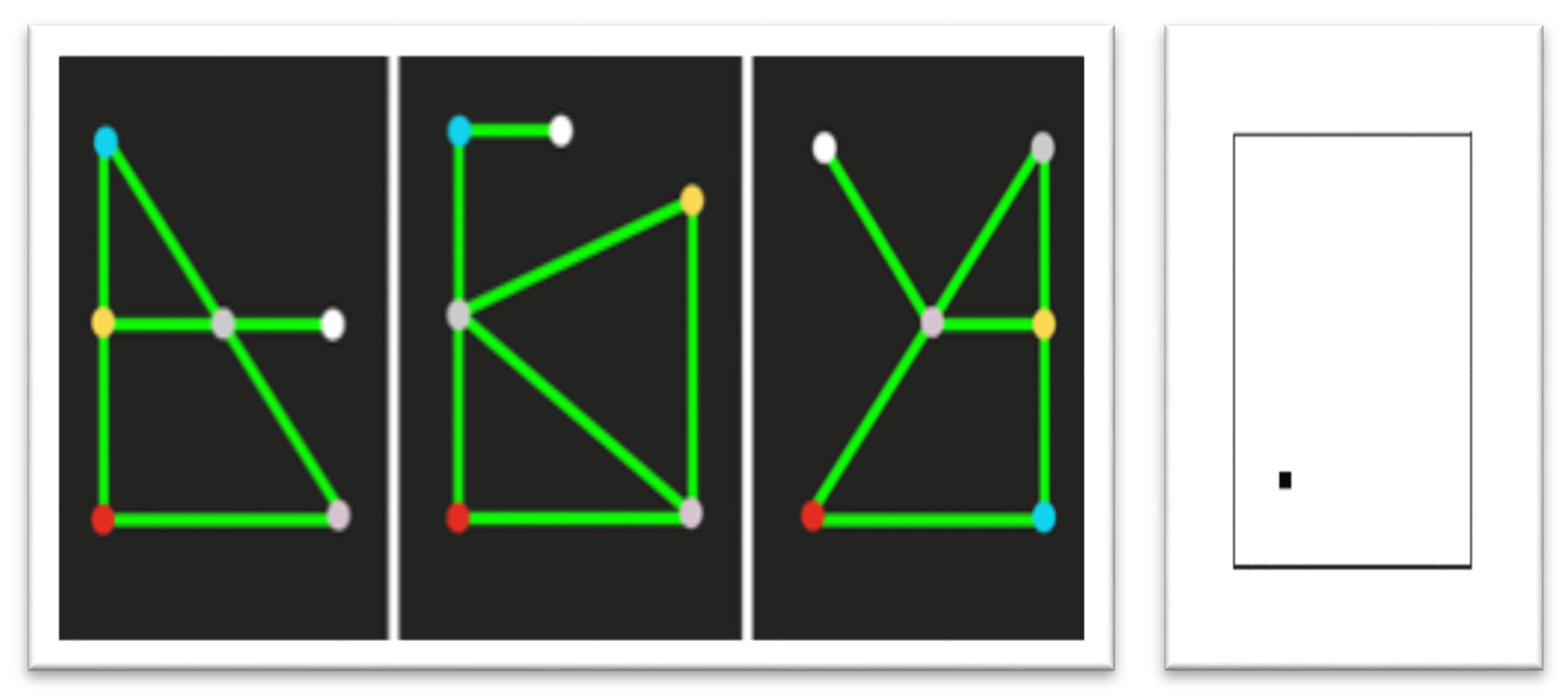

4.1.2. Conditions

4.1.3. Stimulus and Apparatus

4.1.4. Procedure

4.2. Experiment 2: Evaluation with Sighted Users

4.2.1. Participants

4.2.2. Conditions, Stimulus, and Procedure

5. Results

5.1. Results from Experiment 1: BVI Users

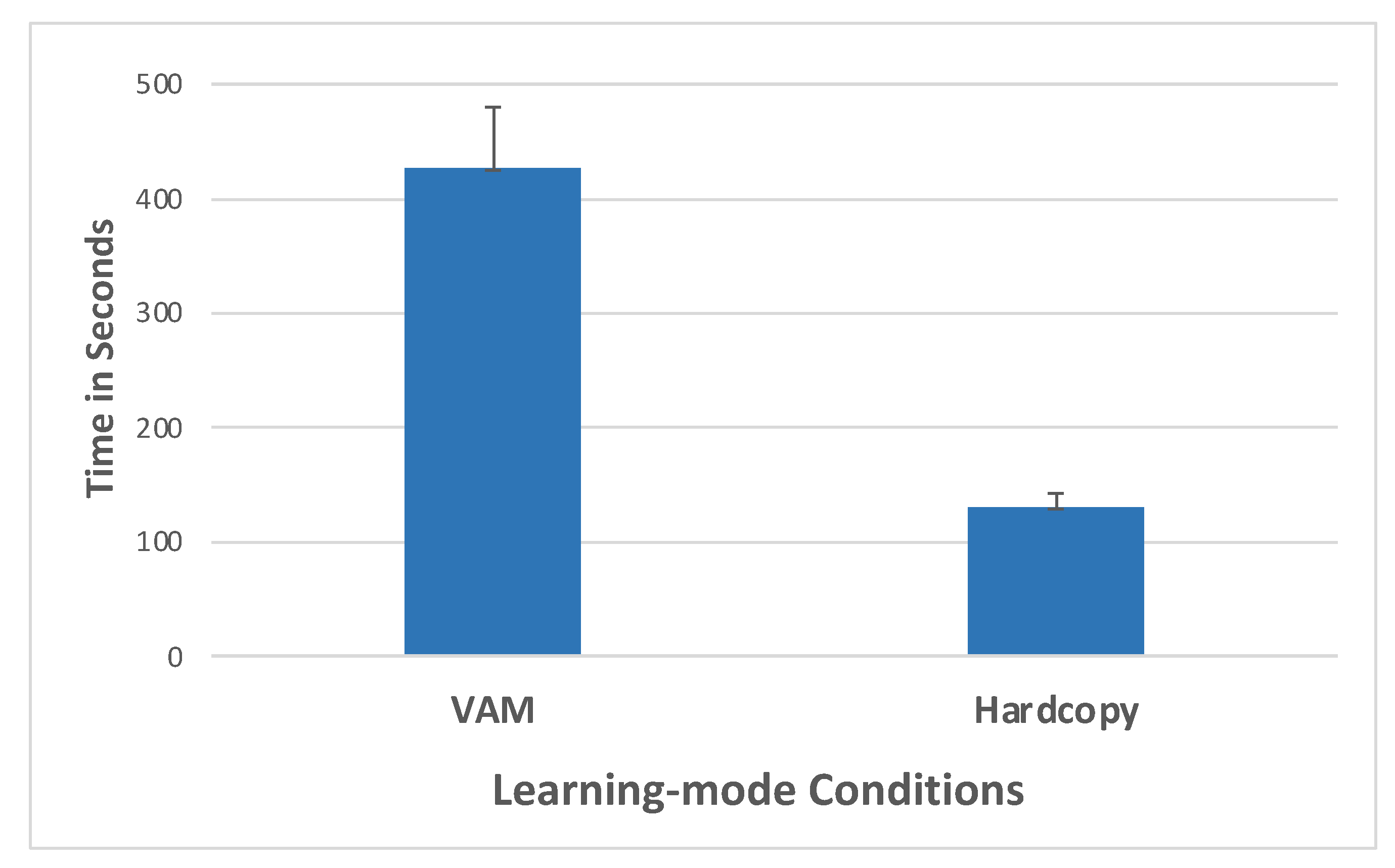

5.1.1. Learning Time

5.1.2. Wayfinding Accuracy

5.1.3. Wayfinding Sequence

5.1.4. Relative Directional Accuracy

5.1.5. Reconstruction Accuracy

5.2. Results from Experiment 2: Blindfolded Sighted Users

5.3. Comparison between Participant Groups

6. Discussion and Future Work

- Evidence that incorporating our previously established perceptual parameters and design guidelines yields significant performance improvements in learning and spatial behaviors. For example, the pointing errors with the VAM were significantly smaller than the average ~18° pointing errors reported in an earlier study using a touchscreen-based haptic interface not optimized with the current parameters [13]. Although learning with the VAM took longer than learning with traditional hardcopy tactile maps, these temporal differences were narrowed in the current studies, where learning with the VAM was notably faster than has been found in previous research. For instance, average learning time was ~6.5 min in the current studies, whereas participants in previous work evaluating touchscreen-based vibration and auditory cues not optimized with the parameters took an average of ~15 min to learn maps of similar complexity [11,12,70,71]. Taken together, these findings suggest that the previously established perceptual parameters and design guidelines for use on touchscreen-based non-visual interfaces (e.g., our prototype vibro-audio map) have positively influenced user behavior, both in terms of temporal performance and spatial accuracy.

- Results provide compelling evidence for the similarity of spatio-behavioral performance across all test measures when using the VAM vs. traditional hardcopy tactile maps. This outcome not only supports the efficacy of the VAM (and touchscreen-based haptic feedback more generally) as a viable new solution for conveying graphical information, but it also suggests that it can be used as effectively as traditional non-visual maps. The similar (or better) behavioral performance observed across testing measures and experiments for the VAM suggests that the cognitive maps built up from VAM learning were at least as accurate as those formed by learning with the hardcopy tactile maps. Beyond supporting the VAM as a viable new interface, this lack of reliable difference is of theoretical interest because the similarity of performance between the two tactile (haptic) conditions speaks to the ability of both channels to support cognitive map development, despite employing information extraction and pick-up from different sensory receptors (pressure-activated mechanoreceptors versus vibration-sensitive Pacinian corpuscles) and feedback mechanisms (intrinsic perceptual feedback as opposed to extrinsic vibratory feedback).

- Results provide compelling evidence for the similarity of spatio-behavioral performance when using the VAM between BVI participants and blindfolded sighted participants during haptic map learning. The lack of reliable statistical differences observed between Experiments 1 and 2 suggests that non-visual map learning and subsequent spatio-behavioral task performance based on the ensuing cognitive map are not dependent on the presence or absence of vision. We interpret these functionally similar findings between sighted and BVI participants as: (1) Providing support against the conventional view that BVI spatial performance is impoverished with respect to their sighted peers (for reviews, see [36,42,69]). Indeed, the current findings are congruent with a growing body of evidence showing highly similar performance on spatial tasks between these groups when sufficient information is available through non-visual spatial supports [56,72,73]. (2) Showing that sighted users stand to greatly benefit from haptic-based interfaces and increased research interest, especially in eyes-free scenarios. (3) Demonstrating that valid data are possible from blindfolded sighted participants in non-visual studies when sufficient training is provided.

- The results provide compelling evidence that visual map learning and haptic map learning are functionally equivalent for developing accurate cognitive maps and supporting spatial behaviors when matched for information content. The statistically indistinguishable test performance observed here after haptic and visual map learning in Experiment 2 is consistent with the view that spatial learning from different sensory inputs, when matched for information content as we did here, leads to the development and use of sensory-independent, amodal representations of space in memory [55,74]. The similarity observed between blind and sighted participants across experiments, as discussed in the previous point, provides additional evidence for the notion of developing and accessing a sensory-independent spatial representation that functions equivalently in the service of action. This interpretation is consistent with a growing corpus of data from other studies comparing performance by blindfolded sighted and BVI users on the same tasks after visual and tactile learning, e.g., of simple route maps [56], bar graphs and shapes [6], indoor floor maps [13], and spatial path patterns [65].
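As a worked check on the ~6.5 min learning time quoted in the first point, the sketch below averages the mean VAM learning times reported in the results tables (426.75 s for BVI users, 360.00 s for blindfolded sighted users). Taking a simple unweighted mean of the two group means is an assumption made here for illustration; the text does not state how the figure was derived, and the variable names are hypothetical.

```python
# Mean VAM learning times (seconds) as reported in the results tables.
vam_learning_time_s = {
    "BVI users (Experiment 1)": 426.75,
    "Blindfolded sighted users (Experiment 2)": 360.00,
}

# Assumption: an unweighted average of the two group means.
mean_seconds = sum(vam_learning_time_s.values()) / len(vam_learning_time_s)
mean_minutes = mean_seconds / 60

print(f"Average VAM learning time: {mean_seconds:.1f} s ({mean_minutes:.2f} min)")
```

Under this assumption the average is about 6.56 min, in line with the ~6.5 min cited above and well below the ~15 min reported for non-optimized interfaces.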

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. World Health Organization. Visual Impairment and Blindness Fact Sheet; World Health Organization: Geneva, Switzerland, 2018.

2. Bourne, R.R.; Adelson, J.; Flaxman, S.; Briant, P.; Bottone, M.; Vos, T.; Naidoo, K.; Braithwaite, T.; Cicinelli, M.; Jonas, J. Global Prevalence of Blindness and Distance and Near Vision Impairment in 2020: Progress towards the Vision 2020 targets and what the future holds. Investig. Ophthalmol. Vis. Sci. 2020, 61, 2317.

3. Apple. Apple VoiceOver. Available online: www.apple.com/accessibility/voiceover/ (accessed on 10 March 2019).

4. JAWS. Available online: www.freedomscientific.com (accessed on 10 March 2019).

5. WebAim. WebAim: Screen Reader User Survey #5 Results. Available online: http://webaim.org/projects/screenreadersurvey5/ (accessed on 8 January 2018).

6. Giudice, N.A.; Palani, H.; Brenner, E.; Kramer, K.M. Learning Non-Visual Graphical Information using a Touch-Based Vibro-Audio Interface. In Proceedings of the 14th International ACM SIGACCESS Conference on Computers and Accessibility (Assets’12), Boulder, CO, USA, 22–24 October 2012; ACM: New York, NY, USA, 2012; pp. 103–110.

7. Klatzky, R.L.; Giudice, N.A.; Bennett, C.R.; Loomis, J.M. Touch-Screen Technology for the Dynamic Display of 2D Spatial Information Without Vision: Promise and progress. Multisens. Res. 2014, 27, 359–378.

8. Tennison, J.L.; Gorlewicz, J.L. Non-visual Perception of Lines on a Multimodal Touchscreen Tablet. ACM Trans. Appl. Percept. 2019, 16, 1–19.

9. Tennison, J.L.; Carril, Z.S.; Giudice, N.A.; Gorlewicz, J.L. Comparing graphical pattern matching on tablets and phones: Large screens are not necessarily better. Optom. Vis. Sci. 2018, 95, 720–726.

10. Tennison, J.L.; Gorlewicz, J.L. Toward Non-visual Graphics Representations on Vibratory Touchscreens: Shape Exploration and Identification. In Proceedings of the 10th International EuroHaptics Conference, London, UK, 4–7 July 2016; pp. 384–395.

11. Poppinga, B.; Magnusson, C.; Pielot, M.; Rassmus-Gröhn, K. TouchOver map: Audio-tactile exploration of interactive maps. In Proceedings of the 12th International Conference on Human Computer Interaction with Mobile Devices, Lisbon, Portugal, 7–10 September 2010; ACM: Stockholm, Sweden, 2011; pp. 545–550.

12. Palani, H.P.; Giudice, U.; Giudice, N.A. Evaluation of Non-visual Zooming Operations on Touchscreen Devices. In Proceedings of the 10th International Conference of Universal Access in Human-Computer Interaction (UAHCI), Part of HCI International 2016, Toronto, ON, Canada, 17–22 July 2016; Antona, M., Stephanidis, C., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 162–174.

13. Palani, H.P.; Giudice, N.A. Principles for Designing Large-Format Refreshable Haptic Graphics Using Touchscreen Devices: An Evaluation of Nonvisual Panning methods. ACM Trans. Access. Comput. (TACCESS) 2017, 9, 1–25.

14. Giudice, N.A.; Guenther, B.A.; Jensen, N.A.; Haase, K.N. Cognitive mapping without vision: Comparing wayfinding performance after learning from digital touchscreen-based multimodal maps vs. embossed tactile overlays. Front. Hum. Neurosci. 2020, 14, 87.

15. Grussenmeyer, W.; Garcia, J.; Jiang, F. Feasibility of using haptic directions through maps with a tablet and smart watch for people who are blind and visually impaired. In MobileHCI’16; ACM: Florence, Italy, 2016; pp. 83–89.

16. Braille Authority of North America. Guidelines and Standards for Tactile Graphics; The Braille Authority of North America: Sandra Ruconich, UT, USA, 2010.

17. Rowell, J.; Ungar, S. The world of touch: An international survey of tactile maps. Part 1: Production. Br. J. Vis. Impair. 2003, 21, 98–104.

18. Rowell, J.; Ungar, S. The world of touch: An international survey of tactile maps. Part 2: Design. Br. J. Vis. Impair. 2003, 21, 105–110.

19. Johnson, K.O.; Phillips, J.R. Tactile spatial resolution. I. Two-point discrimination, gap detection, grating resolution, and letter recognition. J. Neurophysiol. 1981, 46, 1177–1191.

20. Loomis, J.M.; Lederman, S.J. Tactual perception. In Handbook of Perception and Human Performance; Boff, K., Kaufman, L., Thomas, J., Eds.; Wiley: New York, NY, USA, 1986; Volume 2, p. 31.

21. Jones, L.A.; Sarter, N.B. Tactile displays: Guidance for their design and application. Hum. Factors 2008, 50, 90–111.

22. Loomis, J.M. Tactile pattern perception. Perception 1981, 10, 5–27.

23. Lederman, S.J.; Klatzky, R.L.; Barber, P.O. Spatial and movement-based heuristics for encoding pattern information through touch. J. Exp. Psychol. Gen. 1985, 114, 33–49.

24. O’Modhrain, S.; Giudice, N.A.; Gardner, J.A.; Legge, G.E. Designing media for visually-impaired users of refreshable touch displays: Possibilities and pitfalls. IEEE Trans. Haptics 2015, 8, 248–257.

25. Palani, H. Making Graphical Information Accessible Without Vision Using Touch-Based Devices; University of Maine: Orono, ME, USA, 2013.

26. Raja, M.K. The Development and Validation of a New Smartphone Based Non-Visual Spatial Interface for Learning Indoor Layouts; University of Maine: Orono, ME, USA, 2011.

27. Lederman, S.; Klatzky, R. Haptic perception: A tutorial. Atten. Percept. Psychophys. 2009, 71, 1439–1459.

28. Gorlewicz, J.L.; Tennison, J.L.; Palani, H.P.; Giudice, N.A. The Graphical Access Challenge for People with Visual Impairments: Positions and Pathways Forward. In Interactive Multimedia; IntechOpen: London, UK, 2018; pp. 1–18. Available online: https://www.researchgate.net/publication/330940759_The_Graphical_Access_Challenge_for_People_with_Visual_Impairments_Positions_and_Pathways_Forward (accessed on 7 December 2021).

29. Tennison, J.L.; Uesbeck, P.M.; Giudice, N.A.; Stefik, A.; Smith, D.W.; Gorlewicz, J.L. Establishing Vibration-based Tactile Line Profiles for Use in Multimodal Graphics. ACM Trans. Appl. Percept. 2020, 17, 1–14.

30. Palani, H.P.; Fink, P.D.S.; Giudice, N.A. Design Guidelines for Schematizing and Rendering Haptically Perceivable Graphical Elements on Touchscreen Devices. Int. J. Hum. Comput. Interact. 2020, 36, 1393–1414.

31. Gorlewicz, J.L.; Tennison, J.L.; Uesbeck, P.M.; Richard, M.E.; Palani, H.P.; Stefik, A.; Smith, D.W.; Giudice, N.A. Design Guidelines and Recommendations for Multimodal, Touchscreen-based Graphics. ACM Trans. Access. Comput. (TACCESS) 2020, 13, 1–30.

32. Bentzen, B.L. Orientation maps for visually impaired persons. J. Vis. Impair. Blind. 1977, 71, 193–196.

33. Andrews, S.K. Spatial cognition through tactual maps. In Proceedings of the 1st International Symposium on Maps and Graphics for the Visually Handicapped, Washington, DC, USA, 10–12 March 1983; Wiedel, J., Ed.; Association of American Geographers: Washington, DC, USA, 1983; pp. 30–40.

34. Golledge, R.G. Tactual strip maps as navigational aids. J. Vis. Impair. Blind. 1991, 85, 296–301.

35. Thinus-Blanc, C.; Gaunet, F. Representation of space in blind persons: Vision as a spatial sense? Psychol. Bull. 1997, 121, 20–42.

36. Schinazi, V.R.; Thrash, T.; Chebat, D.R. Spatial navigation by congenitally blind individuals. Wiley Interdiscip. Rev. Cogn. Sci. 2016, 7, 37–58.

37. Giudice, N.A.; Long, R.G. Establishing and Maintaining Orientation: Tools, Techniques, and Technologies. In Foundations of Orientation and Mobility, 4th ed.; APH Press: Louisville, KY, USA, 2010; Volume 1, pp. 45–62.

38. Montello, D.R. Navigation. In The Cambridge Handbook of Visuospatial Thinking; Shah, P., Miyake, A., Eds.; Cambridge University Press: Cambridge, UK, 2005; pp. 257–294.

39. Golledge, R.G. Human wayfinding and cognitive maps. In Wayfinding Behavior: Cognitive Mapping and Other Spatial Processes; Johns Hopkins University Press: Baltimore, MD, USA, 1999; pp. 5–45.

40. O’Keefe, J.; Nadel, L. The Hippocampus as a Cognitive Map; Oxford University Press: London, UK, 1978.

41. Golledge, R.G.; Klatzky, R.L.; Loomis, J.M. Cognitive mapping and wayfinding by adults without vision. In The Construction of Cognitive Maps; Portugali, J., Ed.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1996; pp. 215–246.

42. Millar, S. Understanding and Representing Space: Theory and Evidence from Studies with Blind and Sighted Children; Clarendon Press: Oxford, UK, 1994.

43. Edman, P.K. Tactile Graphics; American Foundation for the Blind: New York, NY, USA, 1992.

44. Blades, M.; Ungar, S.; Spencer, C. Map using by adults with visual impairments. Prof. Geogr. 1999, 51, 539–553.

45. Ungar, S. Cognitive mapping without visual experience. In Cognitive Mapping. Past, Present, and Future; Kitchin, R., Freundschuh, S., Eds.; Routledge: London, UK, 2000; pp. 221–248.

46. Parkes, D. “NOMAD”: An audio-tactile tool for the acquisition, use and management of spatially distributed information by partially sighted and blind persons. In Proceedings of the Second International Symposium on Maps and Graphics for Visually Handicapped People, London, UK, 20–22 April 1988; pp. 54–64.

47. Ducasse, J.; Brock, A.; Jouffrais, C. Accessible Interactive Maps for Visually Impaired Users. In Mobility of Visually Impaired People; Springer: Berlin/Heidelberg, Germany, 2018; pp. 537–584.

48. Brock, A.; Jouffrais, C. Interactive audio-tactile maps for visually impaired people. ACM SIGACCESS Access. Comput. 2015, 113, 3–12.

49. Holmes, E.; Jansson, G.; Jansson, A. Exploring auditorily enhanced tactile maps for travel in new environments. New Technol. Educ. Vis. Handicap. 1996, 237, 191–196.

50. Kane, S.K.; Morris, M.R.; Wobbrock, J.O. Touchplates: Low-cost tactile overlays for visually impaired touch screen users. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, Bellevue, WA, USA, 21–23 October 2013; ACM: New York, NY, USA, 2013; pp. 1–8.

51. Simonnet, M.; Vieilledent, S.; Tisseau, J.; Jacobson, D. Comparing Tactile Maps and Haptic Digital Representations of a Maritime Environment. J. Vis. Impair. Blind. 2011, 105, 222–234.

52. Kaklanis, N.; Votis, K.; Tzovaras, D. Open Touch/Sound Maps: A system to convey street data through haptic and auditory feedback. Comput. Geosci. 2013, 57, 59–67.

53. Zeng, L.; Weber, G.H. Exploration of Location-Aware You-Are-Here Maps on a Pin-Matrix Display. IEEE Trans. Hum. Mach. Syst. 2016, 46, 88–100.

54. Brayda, L.; Leo, F.; Baccelliere, C.; Ferrari, E.; Vigini, C. Updated Tactile Feedback with a Pin Array Matrix Helps Blind People to Reduce Self-Location Errors. Micromachines 2018, 9, 351.

55. Loomis, J.M.; Klatzky, R.L.; Giudice, N.A. Representing 3D space in working memory: Spatial images from vision, touch, hearing, and language. In Multisensory Imagery: Theory & Applications; Lacey, S., Lawson, R., Eds.; Springer: New York, NY, USA, 2013; pp. 131–156.

56. Giudice, N.A.; Betty, M.R.; Loomis, J.M. Functional equivalence of spatial images from touch and vision: Evidence from spatial updating in blind and sighted individuals. J. Exp. Psychol. Learn. Mem. Cogn. 2011, 37, 621–634.

57. The Eye Diseases Prevalence Research Group. Causes and prevalence of visual impairment among adults in the United States. Arch. Ophthalmol. 2004, 122, 477–485.

58. Rosenbaum, P.; Stewart, D. International Classification of Functioning, Disability and Health (ICIDH-2); The World Health Organization: Geneva, Switzerland, 2004; Volume 11, pp. 5–10.

59. Sears, A.; Hanson, V. Representing users in accessibility research. ACM Trans. Access. Comput. 2012, 4, 1–6.

60. Shneiderman, B.; Plaisant, C.; Cohen, M.; Jacobs, S. Designing the User Interface: Strategies for Effective Human-Computer Interaction, 5th ed.; Addison Wesley: Boston, MA, USA, 2009.

61. Rieser, J.J.; Lockman, J.J.; Pick, H.L., Jr. The role of visual experience in knowledge of spatial layout. Percept. Psychophys. 1980, 28, 185–190.

62. Passini, R.; Proulx, G. Wayfinding without vision: An experiment with congenitally, totally blind people. Environ. Behav. 1988, 20, 227–252.

63. Sadalla, E.K.; Burroughs, W.J.; Staplin, L.J. Reference points in spatial cognition. J. Exp. Psychol. 1980, 6, 516–528.

64. Palani, H.P.; Tennison, J.L.; Giudice, G.B.; Giudice, N.A. Touchscreen-based haptic information access for assisting blind and visually-impaired users: Perceptual parameters and design guidelines. In Advances in Usability, User Experience and Assistive Technology, Part of the International Conference on Applied Human Factors and Ergonomics (AHFE’18); Springer: Cham, Switzerland, 2018; Volume 798, pp. 837–847.

65. Palani, H.P.; Giudice, G.B.; Giudice, N.A. Haptic Information Access on Touchscreen devices: Guidelines for accurate perception and judgment of line orientation. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; Springer: Cham, Switzerland, 2018; pp. 243–255.

66. Tobler, W.R. Bidimensional regression. Geogr. Anal. 1994, 26, 187–212.

67. Friedman, A.; Kohler, B. Bidimensional regression: Assessing the configural similarity and accuracy of cognitive maps and other two-dimensional data sets. Psychol. Methods 2003, 8, 468–491.

68. Schinazi, V.R.; Epstein, R.A. Neural correlates of real-world route learning. NeuroImage 2010, 53, 725–735.

69. Giudice, N.A. Navigating without Vision: Principles of Blind Spatial Cognition. In Handbook of Behavioral and Cognitive Geography; Montello, D.R., Ed.; Edward Elgar Publishing: Cheltenham, UK; Northampton, MA, USA, 2018; pp. 260–288.

70. Palani, H.P.; Giudice, N.A. Evaluation of non-visual panning operations using touch-screen devices. In Proceedings of the 16th International ACM SIGACCESS Conference on Computers & Accessibility (ASSETS’14), Rochester, NY, USA, 21–22 October 2014; ACM: New York, NY, USA, 2014; pp. 293–294.

71. Su, J.; Rosenzweig, A.; Goel, A.; de Lara, D.; Truong, K.N. Timbremap: Enabling the visually-impaired to use maps on touch-enabled devices. In Proceedings of the 12th International Conference on Human Computer Interaction with Mobile Devices, Lisboa, Portugal, 7–10 September 2010; pp. 17–26. Available online: https://www.eecg.utoronto.ca/~ashvin/publications/timbermap.pdf (accessed on 7 December 2021).

72. Loomis, J.M.; Lippa, Y.; Golledge, R.G.; Klatzky, R.L. Spatial updating of locations specified by 3-d sound and spatial language. J. Exp. Psychol. Learn. Mem. Cogn. 2002, 28, 335–345.

73. Kalia, A.; Legge, G.E.; Giudice, N.A. Learning building layouts with non-geometric visual information: The effects of visual impairment and age. Perception 2008, 37, 1677–1699.

74. Bryant, K.J. Representing space in language and perception. Mind Lang. 1997, 12, 239–264.

| Parameter | Guideline |
|---|---|
| Vibrotactile Line Detection | On-screen lines must be rendered at a minimum width of 1 mm for supporting accurate detection via haptic feedback. |
| Vibrotactile Gap Detection | An interline gap width of 4 mm bounded by lines rendered at a width of 4 mm is recommended for discriminating parallel lines. |
| Discriminating Oriented Vibrotactile Lines | A minimum angular separation (i.e., chord length) of 4 mm is recommended for supporting discrimination of oriented lines. Angular elements should be schematized by calculating the minimum perceivable angle (using the formula: θ = 2 arcsin (chord length/2r)). |
| Vibrotactile Line Tracing and Orientation Judgments | A minimum line width of 4 mm is necessary for supporting tasks that require line tracing (path following), judging line orientation, and learning of complex spatial path patterns. |
| Building Mental Representations from Spatial Patterns | When rendered at a width of 4 mm, users can accurately judge vibrotactile line orientation to an angular interval of 7°. |
| Feedback Mechanism for Vibrotactile Perception | Users prefer vibrotactile feedback as a guiding cue (i.e., used to identify/follow lines) as opposed to a warning cue. This interaction style also leads to better performance. |
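The chord-length formula in the guideline for oriented lines, θ = 2 arcsin(chord length/2r), can be evaluated directly. The sketch below is illustrative only: the table does not define r, so it is treated here as the radius of the circle on which the chord lies (that is, the distance from the vertex at which two lines are compared), and the function name and sample radii are hypothetical.

```python
import math


def min_perceivable_angle_deg(chord_mm: float, radius_mm: float) -> float:
    """Angle (degrees) subtended by a chord of length chord_mm at radius radius_mm.

    Implements theta = 2 * arcsin(chord / (2 * r)) from the guideline table.
    """
    ratio = chord_mm / (2.0 * radius_mm)
    if not 0.0 < ratio <= 1.0:
        raise ValueError("chord must be positive and no longer than the diameter (2 * r)")
    return math.degrees(2.0 * math.asin(ratio))


# Recommended 4 mm chord evaluated at a few hypothetical radii (in mm).
for radius_mm in (4.0, 10.0, 20.0):
    angle = min_perceivable_angle_deg(4.0, radius_mm)
    print(f"r = {radius_mm:4.1f} mm -> minimum angle = {angle:.1f} degrees")
```

With the recommended 4 mm chord, the minimum separable angle shrinks as r grows, which is why the formula takes the radius as an input rather than fixing a single angular threshold.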

| Sex | Etiology of Blindness | Residual Vision | Age | Onset | Years (Stable) |
|---|---|---|---|---|---|
| M | Retinopathy of Prematurity | Light/dark perception | 44 | Birth | 44 |
| M | Retinopathy of Prematurity | None | 28 | Birth | 28 |
| M | Leber’s Congenital Amaurosis | Light perception | 40 | Birth | 40 |
| F | Retinitis Pigmentosa | Light/dark perception | 63 | Age 11 | 52 |
| F | Retinitis Pigmentosa | Light/dark perception | 38 | Birth | 38 |
| F | Unknown | Light/dark perception | 33 | Age 17 | 16 |
| M | Retinitis Pigmentosa | Light/dark perception | 48 | Age 25 | 13 |
| F | Retinitis Pigmentosa | Light/dark perception | 61 | Age 11 | 50 |
| M | Retinal Detachment | None | 61 | Birth | 61 |
| F | Retinopathy of Prematurity | None | 57 | Age 20 | 37 |
| F | Retinopathy of Prematurity | Light perception | 43 | Birth | 43 |
| M | Retinopathy of Prematurity | None | 48 | Birth | 48 |

| Measures | VAM (Mean) | VAM (SD) | Hardcopy (Mean) | Hardcopy (SD) |
|---|---|---|---|---|
| Learning time (in seconds) | 426.75 | 186.05 | 130 | 43.22 |
| Wayfinding accuracy (in percent) | 91 | 28.3 | 95 | 21.5 |
| Wayfinding sequence (in percent) | 72 | 45.1 | 54 | 50.1 |
| Relative directional error (in degrees) | 6.5 | 8.84 | 9.78 | 11.95 |
| Reconstruction accuracy (in percent) | 83 | 38.9 | 83 | 38.9 |
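The identical reconstruction-accuracy values for the two conditions (mean 83, SD 38.9) are what one would expect if each participant's reconstruction were scored simply as correct or incorrect. The sketch below is a consistency check under that assumption. The binary scoring and the split of 10 correct out of 12 participants are inferred from the reported values, not stated in the table, and the variable names are hypothetical.

```python
from statistics import mean, stdev

# Hypothetical per-participant scores: 1 = map reconstructed correctly, 0 = not.
# Assumes 12 participants, 10 of them correct (inferred, not reported).
scores = [1] * 10 + [0] * 2

mean_pct = 100 * mean(scores)
sd_pct = 100 * stdev(scores)  # sample SD, i.e., n - 1 in the denominator

print(f"mean = {mean_pct:.0f}%, SD = {sd_pct:.1f}")  # prints: mean = 83%, SD = 38.9
```

Because a binary score with the same number of successes always yields the same mean and SD, two conditions with matching values need not have been passed by the same participants.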

| Measures | df (Hypothesis) | df (Error) | F | Sig. |
|---|---|---|---|---|
| Learning time | 1 | 22 | 28.96 | <0.001 |
| Wayfinding accuracy | 1 | 94 | 1.09 | >0.05 |
| Wayfinding sequence accuracy | 1 | 94 | 3.14 | >0.05 |
| Relative directional accuracy | 1 | 94 | 4.50 | <0.05 |
| Reconstruction accuracy | 1 | 22 | 0.00 | >0.05 |

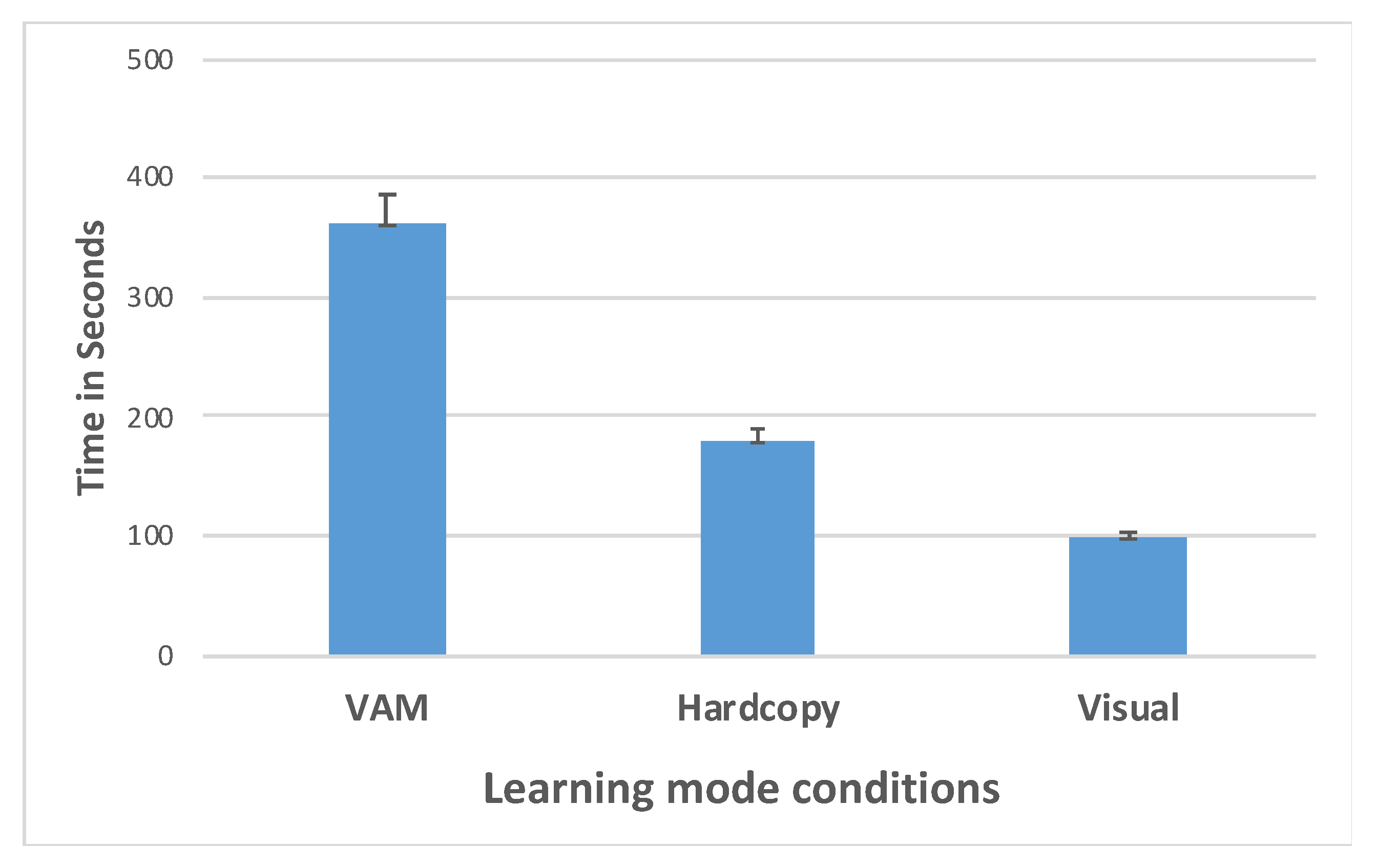

| Measures | VAM (Mean) | VAM (SD) | Hardcopy (Mean) | Hardcopy (SD) | Visual (Mean) | Visual (SD) |
|---|---|---|---|---|---|---|
| Learning time (in seconds) | 360.00 | 93.64 | 178.93 | 49.07 | 97.86 | 23.35 |
| Wayfinding accuracy (in percent) | 95.00 | 22.70 | 96.00 | 18.70 | 98.00 | 13.40 |
| Wayfinding sequence (in percent) | 68.00 | 47.00 | 48.00 | 50.00 | 66.00 | 47.00 |
| Relative directional error (in degrees) | 5.89 | 8.37 | 7.77 | 10.74 | 5.80 | 8.20 |
| Reconstruction accuracy (in percent) | 71.00 | 46.90 | 86.00 | 36.30 | 86.00 | 36.30 |
| Scale (in percent) | 88.06 | 9.47 | 86.70 | 8.15 | 90.33 | 8.73 |
| Theta (in degrees) | 3.01 | 5.48 | 0.68 | 3.10 | 1.06 | 2.77 |
| Distortion Index | 14.95 | 1.41 | 14.74 | 1.63 | 15.25 | 1.57 |
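The Scale, Theta, and Distortion Index rows are the kind of parameters produced by bidimensional regression [66,67], which compares a reconstructed point configuration against the reference map. The following is a minimal sketch of the Euclidean variant using complex arithmetic, not the authors' analysis code. The function name and sample coordinates are hypothetical, the distortion index is taken here as 100·sqrt(1 − r²), and scale is returned as a ratio rather than the percentage shown in the table.

```python
import cmath
import math


def bidimensional_regression(reference, reconstructed):
    """Euclidean bidimensional regression of reconstructed points on reference points.

    Both arguments are equal-length sequences of (x, y) pairs.
    """
    z = [complex(x, y) for x, y in reference]
    w = [complex(x, y) for x, y in reconstructed]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    zc = [p - z_mean for p in z]  # centering removes the translation
    wc = [p - w_mean for p in w]

    # Least-squares complex slope: modulus is the scale, argument is the rotation.
    beta = sum(a.conjugate() * b for a, b in zip(zc, wc)) / sum(abs(a) ** 2 for a in zc)

    residual = sum(abs(b - beta * a) ** 2 for a, b in zip(zc, wc))
    total = sum(abs(b) ** 2 for b in wc)
    return {
        "scale": abs(beta),
        "theta_deg": math.degrees(cmath.phase(beta)),
        # residual / total equals 1 - r^2 for the fitted transformation
        "distortion_index": 100.0 * math.sqrt(residual / total),
    }


# Hypothetical example: a unit square redrawn at twice the size, rotated 90 degrees,
# and shifted. A perfect similarity transform should show zero distortion.
reference = [(0, 0), (1, 0), (1, 1), (0, 1)]
reconstructed = [(3, 1), (3, 3), (1, 3), (1, 1)]
print(bidimensional_regression(reference, reconstructed))
```

In this formulation a reconstruction that differs from the reference only by translation, rotation, and uniform scaling has a distortion index of 0, so nonzero values reflect configural error that a rigid rescaling cannot explain.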

| Measures | df (Hypothesis) | df (Error) | F | Sig. |
|---|---|---|---|---|
| Learning time | 2 | 39 | 64.53 | <0.001 |
| Wayfinding accuracy | 2 | 165 | 0.512 | >0.05 |
| Wayfinding sequence accuracy | 2 | 165 | 2.813 | >0.05 |
| Relative directional accuracy | 2 | 165 | 0.816 | >0.05 |
| Reconstruction accuracy | 2 | 39 | 0.591 | >0.05 |
| Scale | 2 | 39 | 0.608 | >0.05 |
| Theta | 2 | 39 | 1.387 | >0.05 |
| Distortion Index | 2 | 39 | 0.381 | >0.05 |

| Measures | df (Hypothesis) | df (Error) | VAM (F) | VAM (Sig.) | Hardcopy (F) | Hardcopy (Sig.) |
|---|---|---|---|---|---|---|
| Learning time | 1 | 24 | 1.39 | >0.05 | 7.1 | <0.05 |
| Wayfinding accuracy | 1 | 102 | 1.66 | >0.05 | 0.39 | >0.05 |
| Wayfinding sequence accuracy | 1 | 102 | 1.08 | >0.05 | 1.542 | >0.05 |
| Relative directional accuracy | 1 | 102 | 0.57 | >0.05 | 3.516 | >0.05 |
| Reconstruction accuracy | 1 | 24 | 0.48 | >0.05 | 0.026 | >0.05 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Palani, H.P.; Fink, P.D.S.; Giudice, N.A. Comparing Map Learning between Touchscreen-Based Visual and Haptic Displays: A Behavioral Evaluation with Blind and Sighted Users. Multimodal Technol. Interact. 2022, 6, 1. https://doi.org/10.3390/mti6010001
