A Deep Learning Model of Perception in Color-Letter Synesthesia

Abstract

1. Introduction

1.1. Background

1.2. Deep Learning Models

1.3. Contribution of Present Study

2. Methods

2.1. Handwritten Letters Database

2.2. Synesthesia Color-Letter Pairs

2.3. Numerical Implementation

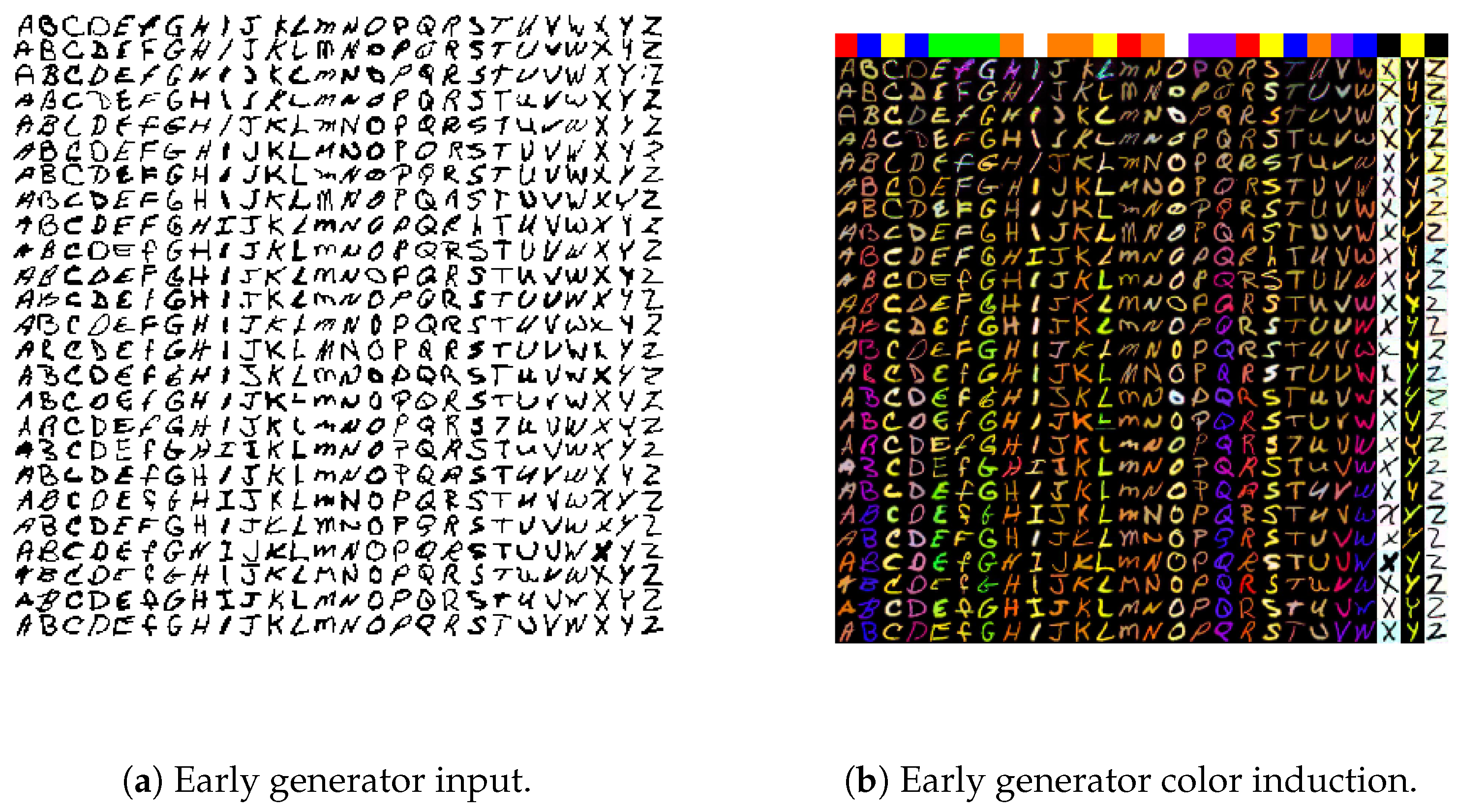

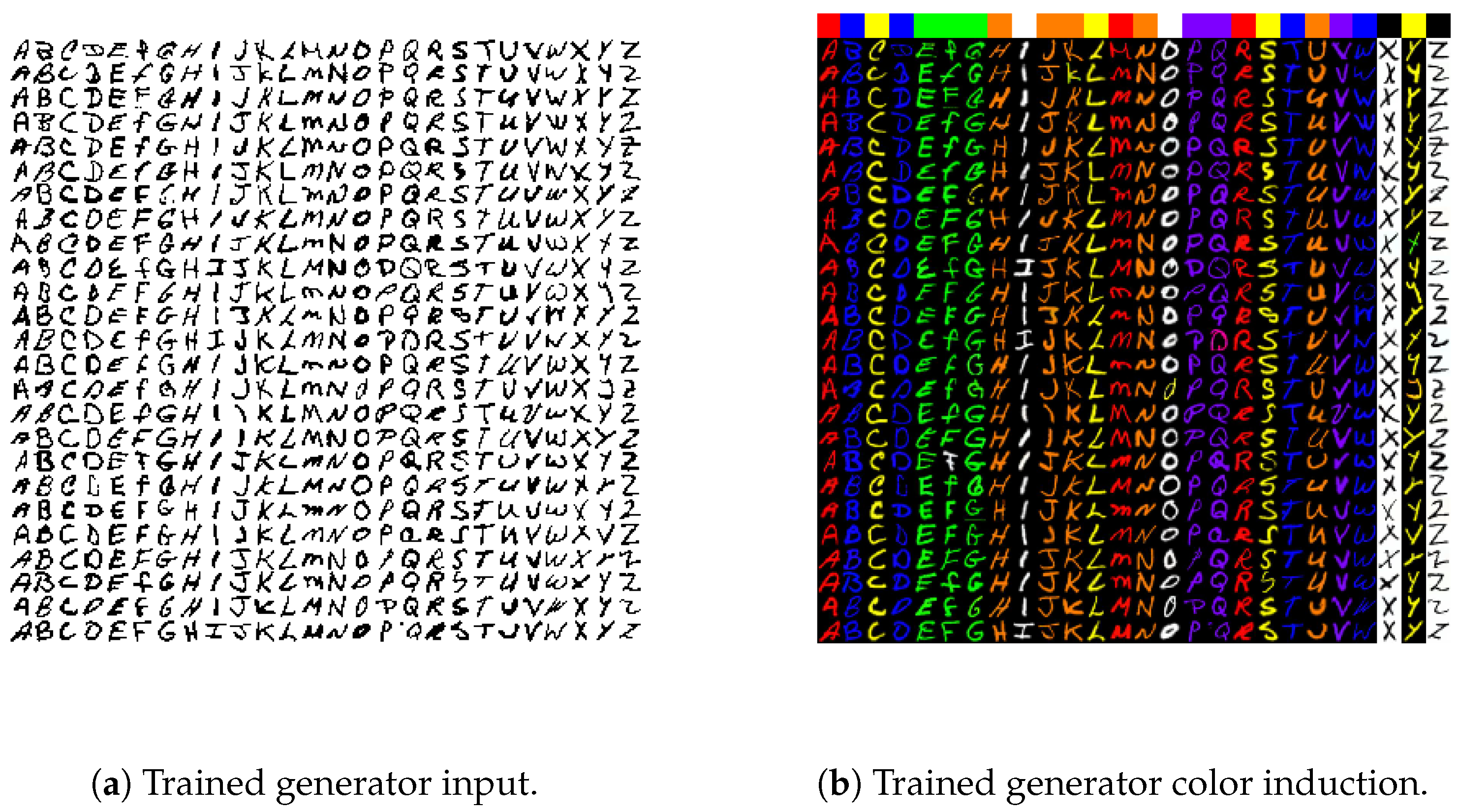

3. Results

4. Discussion

- Functional brain imaging. Imaging studies have aimed to identify and localize the structures and functions involved in the synesthetic experience [5]. Experimental data from functional magnetic resonance imaging or other modalities could be used to build a complementary deep learning model that maps layers to physiologic modular components more explicitly than the current study does. Such a model could provide additional insights to refine the competing hypotheses of cross-activation (increased linkage between proximal regions) [7] and disinhibited feedback from higher-level cortical areas [8] in synesthesia. The generative network used here comprised fully connected and convolutional layers; different architectural designs (e.g., direct cross-wiring or recurrent connections) could be developed to directly simulate these competing theories and compare their relative accuracy and plausibility. Such comparisons are beyond the scope of the current investigation.

- Language learning and memory. Studying synesthesia may advance our understanding of human perception and information arrangement [6]. One theory proposed in [3] holds that grapheme–color synesthesia develops in part as a way for children to learn category structures; a fundamental task in literacy development is to recognize and discriminate between letters. On this view, synesthesia might arise as an aid to memory. More generally, the ability to discern statistical regularities of printed letters or to learn complex rules for letter combinations would assist learning at subsequent stages of literacy development [3]. In [28], small-sample experiments suggested that the additional sensory dimensions in synesthesia aid performance in memory tasks relative to controls. We submit that the generative modeling approach of the current work may be useful for developing and testing hypotheses in studies of language acquisition, memory, and semantics.

- Consciousness studies. Synesthesia can give insight into the neural correlates of consciousness through the interaction between sensory inputs and their mediation by semantics in the induction of phenomenal subjective experience [4]. Connecting neural activations with subjective aspects of consciousness (perception of the shape, color, and movement of an object) is potentially achievable by following a systematic experimental approach [29]. In deep learning, understanding the representations within deep layers is relatively easy at the layer level; at the level of individual neurons, such understanding is much more difficult [30]. Extensions of the deep learning model reported here may help to advance toward these formidable objectives.

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAN | Generative adversarial network |
| GPU | Graphics processing unit |
| CPU | Central processing unit |
| CNN | Convolutional neural network |
| fMRI | Functional magnetic resonance imaging |

References

1. Hubbard, E.M.; Ramachandran, V. Neurocognitive Mechanisms of Synesthesia. Neuron 2005, 48, 509–520.

2. Mattingley, J. Attention, Automaticity, and Awareness in Synesthesia. Ann. N. Y. Acad. Sci. 2009, 1156, 141–167.

3. Watson, M.; Akins, K.; Spiker, C.; Crawford, L.; Enns, J. Synesthesia and learning: a critical review and novel theory. Front. Hum. Neurosci. 2014, 8, 98.

4. Van Leeuwen, T.M.; Singer, W.; Nikolić, D. The Merit of Synesthesia for Consciousness Research. Front. Psychol. 2015, 6, 1850.

5. Rouw, R.; Scholte, H.; Colizoli, O. Brain Areas Involved in Synaesthesia: A Review. J. Neuropsychol. 2011, 5, 214–242.

6. Rich, A.; Mattingley, J. Anomalous perception in synaesthesia: A cognitive neuroscience perspective. Nat. Rev. Neurosci. 2002, 3, 43–52.

7. Ramachandran, V.; Hubbard, E. Psychophysical investigations into the neural basis of synaesthesia. Proc. R. Soc. Lond. B Biol. Sci. 2001, 268, 979–983.

8. Grossenbacher, P.; Lovelace, C. Mechanisms of synesthesia: Cognitive and physiological constraints. Trends Cogn. Sci. 2001, 5, 36–41.

9. Jäncke, L.; Beeli, G.; Eulig, C.; Hänggi, J. The neuroanatomy of grapheme-color synesthesia. Eur. J. Neurosci. 2009, 29, 1287–1293.

10. Brang, D.; Hubbard, E.; Coulson, S.; Huang, M.; Ramachandran, V. Magnetoencephalography reveals early activation of V4 in grapheme-color synesthesia. NeuroImage 2010, 53, 268–274.

11. Bengio, Y. Learning deep architectures for AI. Found. Trends Mach. Learn. 2009, 2, 1–127.

12. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

13. Hinton, G.E.; Osindero, S.; Teh, Y.W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

14. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Advances in Neural Information Processing Systems 27; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; pp. 2672–2680.

15. Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. arXiv, 2014; arXiv:1411.1784.

16. Gauthier, J. Conditional Generative Adversarial Networks for Convolutional Face Generation; Technical Report; Stanford University: Stanford, CA, USA, 2015.

17. Cohen, G.; Afshar, S.; Tapson, J.; van Schaik, A. EMNIST: An extension of MNIST to handwritten letters. arXiv, 2017; arXiv:1702.05373.

18. Witthoft, N.; Winawer, J.; Eagleman, D.M. Prevalence of Learned Grapheme-Color Pairings in a Large Online Sample of Synesthetes. PLoS ONE 2015, 10, e0118996.

19. Dixon, M.J.; Smilek, D.; Cudahy, C.; Merikle, P.M. Five plus two equals yellow. Nature 2000, 406, 365.

20. Cytowic, R. Synesthesia and mapping of subjective sensory dimensions. Neurology 1989, 39, 849–850.

21. Colizoli, O.; Murre, J.; Rouw, R. Pseudo-Synesthesia through Reading Books with Colored Letters. PLoS ONE 2012, 7, e39799.

22. Bor, D.; Rothen, N.; Schwartzman, D.; Clayton, S.; Seth, A. Adults Can Be Trained to Acquire Synesthetic Experiences. Sci. Rep. 2014, 4, 7089.

23. Sejnowski, T.; Churchland, P.; Movshon, J.A. Putting big data to good use in neuroscience. Nat. Neurosci. 2014, 17, 1440–1441.

24. Agrawal, M.; Sawhney, K. Exploring Convolutional Neural Networks for Automatic Image Colorization; Technical Report; Stanford University: Stanford, CA, USA, 2017.

25. Shwartz-Ziv, R.; Tishby, N. Opening the Black Box of Deep Neural Networks via Information. arXiv, 2017; arXiv:1703.00810.

26. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. arXiv, 2016; arXiv:1605.08695.

27. Stroop, J.R. Studies of interference in serial verbal reactions. J. Exp. Psychol. 1935, 18, 643–662.

28. Mills, C.; Innis, J.; Westendorf, T.; Owsianiecki, L.; McDonald, A. Effect of a Synesthete’s Photisms on Name Recall. Cortex 2006, 42, 155–163.

29. Crick, F.; Koch, C. A Framework for Consciousness. Nat. Neurosci. 2003, 6, 119–126.

30. Hinton, G. Learning multiple layers of representation. Trends Cogn. Sci. 2007, 11, 428–434.

| Color | Letters |
|---|---|
| Red | A, M, R |
| Blue | B, D, T, W |
| Green | E, F, G |
| Yellow | C, L, S, Y |
| Orange | H, J, K, N, U |
| Purple | P, Q, V |
| White | I, O |
| Black | X, Z |
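The color-letter pairs in the table can be written as a simple lookup structure. The following is a minimal sketch in Python, not the code used in the study: the names `COLOR_TO_LETTERS`, `LETTER_TO_COLOR`, `color_for`, and `covers_alphabet` are hypothetical and introduced only for illustration. Only the pairings themselves come from the table.

```python
import string

# Color-letter pairs transcribed from the table above.
# The variable and function names are illustrative assumptions.
COLOR_TO_LETTERS = {
    "Red": "AMR",
    "Blue": "BDTW",
    "Green": "EFG",
    "Yellow": "CLSY",
    "Orange": "HJKNU",
    "Purple": "PQV",
    "White": "IO",
    "Black": "XZ",
}

# Invert the table so that each letter maps to its single paired color.
LETTER_TO_COLOR = {
    letter: color
    for color, letters in COLOR_TO_LETTERS.items()
    for letter in letters
}


def color_for(letter: str) -> str:
    """Return the color name paired with a letter (case-insensitive)."""
    return LETTER_TO_COLOR[letter.upper()]


def covers_alphabet() -> bool:
    """Check that every letter A-Z is assigned to exactly one color."""
    total_assigned = sum(len(letters) for letters in COLOR_TO_LETTERS.values())
    return (
        total_assigned == len(LETTER_TO_COLOR) == 26
        and sorted(LETTER_TO_COLOR) == list(string.ascii_uppercase)
    )


if __name__ == "__main__":
    print([color_for(ch) for ch in "SYN"])
    print(covers_alphabet())
```

A lookup of this kind could serve, for example, to attach a color label to each handwritten letter class before training; how the labels were actually encoded in the study is not specified in this section.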

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bock, J.R. A Deep Learning Model of Perception in Color-Letter Synesthesia. Big Data Cogn. Comput. 2018, 2, 8. https://doi.org/10.3390/bdcc2010008
