AI-Assisted Multi-Operator RAN Sharing for Energy-Efficient Networks

Abstract

1. Introduction

1.1. Tower Companies

1.1.1. RAN-Sharing Types and Operator Differentiation

1.1.2. ClusterRAN

1.1.3. RAN-Sharing Types

1.1.4. Sharing RAN Resources and Responsibilities

1.2. Multitenant Energy Management by TowerCo

Enabling Energy-Saving Features

2. Related Works

3. Problem Description and Contributions

- How can RAN sharing improve the energy savings for an operator as compared to using independent hardware?

- What could be the impact of independent resource optimization by the operators with different KPIs on the energy savings obtained?

1. Through simulations, we evaluate the benefits, complexity, and challenges of the RANaaS RAN-sharing scenario from the TowerCo perspective, specifically on the energy savings obtained. We analyze the benefits of providing independence in the sharing process by allowing each operator to control their share of resources and other cell-level parameters, thus enabling service differentiation.

2. We conduct a benefit analysis of using AI for energy-efficient network optimization from an operator’s perspective.

3. We propose an approach to distribute the energy cost among the participating MNOs in the RAN-sharing scenario of interest.

4. We discuss the implications and challenges of independent resource optimization by MNOs in the RANaaS RAN-sharing scenario by highlighting the potential benefits and drawbacks.

4. System Model

4.1. Base Station’s Activity Factor

4.2. Base Station’s Power Model

4.3. Base Station’s Sleep Modes

4.4. Base Station’s Energy Consumption

4.5. Energy Cost Distribution

- Shared power amplifiers: the power amplifiers used by two or more MNOs.

- Independent power amplifiers: the power amplifiers used by only one of the MNOs.

Algorithm 1. TowerCo’s energy cost distribution algorithm
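The distinction between shared and independent power amplifiers suggests a simple accounting rule, which can be sketched as follows. This is an illustration only and not the TowerCo's algorithm itself: it assumes that the energy of an independent amplifier is charged entirely to the single MNO using it, and that the energy of a shared amplifier is split in proportion to the bandwidth each MNO uses on it. The proportional rule, the function name, and the data layout are all assumptions made for this example.

```python
from collections import defaultdict


def distribute_energy_cost(amplifiers):
    """Split per-amplifier energy among MNOs.

    `amplifiers` is a list of (energy_joules, {mno: bandwidth_mhz}) tuples.
    Assumed rule (hypothetical): each amplifier's energy is split in
    proportion to the bandwidth every MNO uses on that amplifier.
    """
    cost = defaultdict(float)
    for energy, usage in amplifiers:
        total_bw = sum(usage.values())
        if total_bw == 0:
            continue  # unused amplifier: nothing is attributed in this sketch
        for mno, bw in usage.items():
            cost[mno] += energy * bw / total_bw
    return dict(cost)


example = [
    (90.0, {"MNO1": 10, "MNO2": 10, "MNO3": 10}),  # shared amplifier
    (40.0, {"MNO1": 20}),                          # independent amplifier
]
costs = distribute_energy_cost(example)
```

With this rule, an amplifier used by a single MNO is a special case of the shared one, so no separate code path is needed for independent amplifiers.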

5. Energy-Saving Features and AI-Assisted Radio Resource Management

5.1. Energy-Saving Features

1. Carrier adaptation: This energy-saving feature turns capacity layers or bands on or off at each site based on traffic demand, user distribution, and other network conditions. It takes longer than antenna or bandwidth adaptation because connected users must be re-associated to the same or a different site and/or band, which can take a variable amount of time depending on the network scenario and conditions. Additionally, this process must be executed in a way that does not degrade the quality of service when traffic demand increases.

2. Antenna and bandwidth adaptation: Antenna adaptation changes the number of active antennas at the base station, and bandwidth adaptation dynamically varies the instantaneous bandwidth allocated to each user. These adaptations operate on a much shorter time scale than carrier adaptation. Both lead to a change in the instantaneous throughput experienced by the user, as seen in Equation (2) and described in Section 4. The change in the user throughput in turn affects the base station’s activity factor (see Equation (8)) and its energy consumption (see Equation (17)).

5.2. Hierarchical Reinforcement Learning (HRL)

5.3. Network Optimization as an HRL Problem

Algorithm 2. Single-operator network optimization algorithm

Reinforcement Learning Algorithm

5.4. Q-Learning Definitions

- State: The state is a representation of the environment that an agent finds itself in, and it influences the agent’s actions. In this work, we considered a diverse set of heuristically determined input features for each agent, listed in Table 3. Each agent observes a subset or the complete set of features depending on its role and position in the hierarchy. In addition to the features listed in Table 3, each agent receives features that are more specific to its role. For instance, the local configuration adapter agent takes as input only those features that are relevant at the site of interest. The site selector for RRA, a centralized agent, receives information from all sites along with the user distribution statistics at each site. The carrier adapter, on the other hand, receives as input the carriers that could be activated or deactivated at each site.

- Action space: The action space corresponds to the decisions that an agent takes at each decision point. Each agent has a different action set closely tied to its objective. Table 2 describes the agents’ actions.

- Reward: The reward signal is common to all the agents and is a function of the two optimization KPIs: network energy consumption and throughput. It is computed from the percentage change in the energy consumption and the percentage change in the throughput, each with its own importance factor. The importance factors indicate the relative importance of the two KPIs in the optimization process. The reward also includes a fixed reward that is assigned based on the direction of change in the two KPIs.
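To make the reward definition above more concrete, the sketch below shows one possible form such a reward could take. It is not the expression used in this work, which is not reproduced here: the weighted-sum structure, the sign conventions, the default importance factors, and the size of the fixed term are all assumptions chosen for illustration.

```python
def reward(delta_energy_pct, delta_throughput_pct,
           w_energy=0.5, w_throughput=0.5, fixed_reward=1.0):
    """Hypothetical reward combining two KPI changes.

    delta_energy_pct: percentage change in energy consumption
        (negative means energy was saved).
    delta_throughput_pct: percentage change in throughput
        (negative means throughput degraded).
    w_energy, w_throughput: importance factors (assumed values).
    fixed_reward: magnitude of the direction-based term (assumed value).
    """
    # Weighted KPI term: saving energy and gaining throughput both add reward.
    weighted = -w_energy * delta_energy_pct + w_throughput * delta_throughput_pct

    # Direction-based fixed term: bonus when both KPIs move the right way,
    # penalty when both move the wrong way, nothing otherwise.
    if delta_energy_pct < 0 and delta_throughput_pct >= 0:
        fixed = fixed_reward
    elif delta_energy_pct > 0 and delta_throughput_pct < 0:
        fixed = -fixed_reward
    else:
        fixed = 0.0
    return weighted + fixed


r_good = reward(-10.0, 0.0)     # energy saved, throughput unchanged
r_bad = reward(10.0, -10.0)     # more energy used, throughput degraded
r_mixed = reward(-10.0, -10.0)  # energy saved at the cost of throughput
```

Raising `w_energy` relative to `w_throughput` in such a scheme would make the agents favor energy savings even when some throughput is lost, which is the kind of trade-off the importance factors are meant to control.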

5.5. Implementation Flow

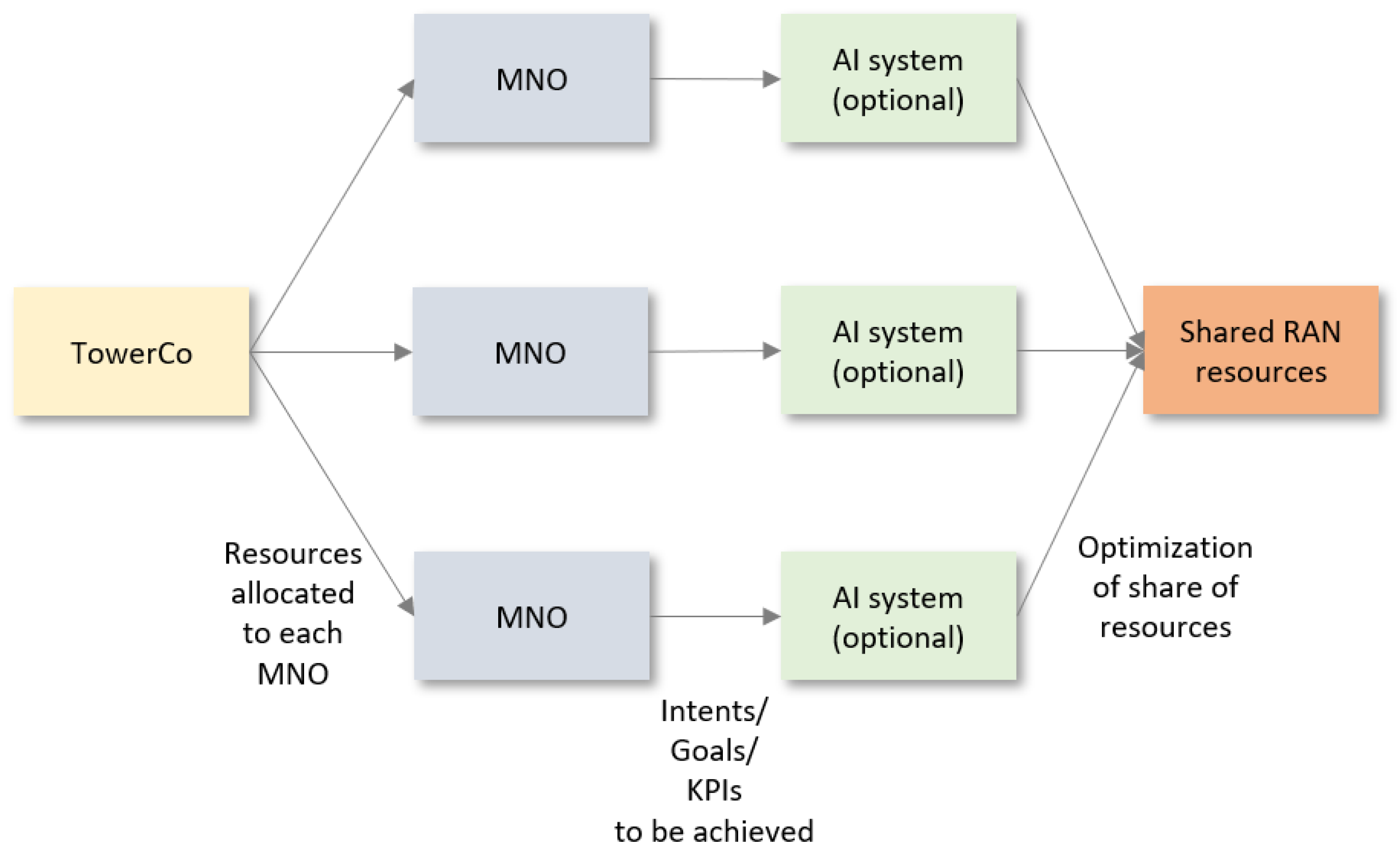

- TowerCo’s controller and energy manager: The TowerCo’s controller is responsible for allocating the share of resources to different MNOs, including the maximum bandwidth and antenna configuration that can be used on each band (step 1). We assumed equal and non-overlapping spectral resources to be available to all the MNOs, as outlined in Table 4. Furthermore, the TowerCo’s controller receives the energy cost per MNO from the TowerCo’s energy manager (step 7). The latter calculates the energy cost per MNO based on the resources each MNO uses at different sites in the network, as described in Section 4.5 (step 5).

- MNO: The operator controls its share of resources and the KPIs it would like to optimize or improve. In this case, we assumed all operators would like to improve on both the energy efficiency and the network throughput. The only difference was the maximum throughput degradation that each operator could permit. To make this decision, they could consider the average traffic demands at different sites and the requirements of the connected users. Moreover, we assumed that the operators used the AI system described in Section 5 for managing their share of resources. Doing so required the operators to send their AI and KPI requirements to the AI manager (step 2).

- AI manager: The role of the AI manager is to select an AI configuration based on the needs and requirements of the operator. In this case, the configuration could depend on the desired energy-saving features, the KPIs to optimize, and the throughput degradation limits set by the operator. In this work, we assumed each operator to have its own AI system, as seen in Figure 15. The AI system/functionality shown in Figure 13 assists the operator in optimizing its share of resources. Figure 16 shows it as separate AI agents connected to and controlled by each operator.
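The equal, non-overlapping spectrum allocation performed by the TowerCo's controller in step 1 can be sketched as below. The per-band TowerCo bandwidths are the values from the band table (Table 4); the function name, the use of offsets within each band rather than absolute frequencies, and the MNO labels are assumptions made for this example.

```python
# TowerCo bandwidth per band in MHz, keyed by band frequency in MHz (Table 4).
TOWERCO_BANDWIDTH_MHZ = {700: 30, 2100: 60, 3500: 300}
MNOS = ["MNO1", "MNO2", "MNO3"]  # hypothetical labels for three operators


def allocate_equal_shares(towerco_bandwidth_mhz, mnos):
    """Return {band: {mno: (start_offset_mhz, end_offset_mhz)}}.

    Every MNO gets an equally wide slice of each band, and the slices
    of different MNOs do not overlap.
    """
    allocation = {}
    for band, total in towerco_bandwidth_mhz.items():
        share = total / len(mnos)
        allocation[band] = {
            mno: (i * share, (i + 1) * share) for i, mno in enumerate(mnos)
        }
    return allocation


allocation = allocate_equal_shares(TOWERCO_BANDWIDTH_MHZ, MNOS)
```

With three operators, the resulting slice widths are 10, 20, and 100 MHz on the three bands, which matches the per-MNO bandwidths listed in the same table.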

6. Implementation, Results, and Analysis

6.1. System Setup, Simulation Parameters, and Assumptions

6.1.1. MNO

6.1.2. TowerCo

6.1.3. Baseline

6.2. Result Categories

6.3. TowerCo’s Energy Performance Analysis

6.3.1. Energy Savings

6.3.2. Impact of Operator Requirements on the Outcome Probabilities

- A dynamic allocation of resources to MNOs based on their traffic demand and user distribution;

- Allowing the TowerCo to optimize the network for all the MNOs in a centralized manner.

6.3.3. Impact of Operator Requirements on Energy-Saving Feature Activation and Savings

1. The limit on how often bands could be turned on or off during an observation period. We set this limitation factor to 10, i.e., carrier adaptation took ten times longer than adapting the bandwidth or antenna configuration. In other words, in an episode of 20 steps, a band’s state could be changed at most twice.

2. The partial reassociation of connected users. Turning off a band required moving its connected users to a different site and/or band. However, in a stationary environment where all bands are uniformly loaded, it could be difficult to find a band at the same or a different site that could accommodate additional users without degrading the KPIs of interest. In such a scenario, the carrier shutdown action is not executed fully, which leaves the network more unbalanced and therefore more likely to degrade on the KPIs of interest.
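The arithmetic behind the first limitation can be checked with a short sketch. The limitation factor of 10 and the 20-step episode come from the text; the function names and the assumption that a change completes a full `limitation_factor` steps after the previous one are illustrative.

```python
def max_band_state_changes(episode_steps, limitation_factor=10):
    """Upper bound on state changes of one band within an episode."""
    return episode_steps // limitation_factor


def count_accepted_changes(episode_steps, limitation_factor=10):
    """Simulate an agent that requests a band change at every step.

    A request is accepted only if at least `limitation_factor` steps have
    passed since the previous accepted change (assumed timing model).
    """
    accepted = 0
    last_change_step = 0
    for step in range(1, episode_steps + 1):
        if step - last_change_step >= limitation_factor:
            accepted += 1
            last_change_step = step
    return accepted


upper_bound = max_band_state_changes(20)  # 20 // 10
simulated = count_accepted_changes(20)    # changes accepted at steps 10 and 20
```

Both values come out as two, which agrees with the statement that a band's state could be changed at most twice in a 20-step episode.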

6.3.4. Overlapping Actions from a TowerCo’s Perspective

6.3.5. Energy Savings from a TowerCo’s Perspective

6.4. Comparison to Other Works

7. Conclusions and Future Work

1. The operator’s preferences and needs;

2. The level of autonomy and control desired by the operators over their resources;

3. The data shared with TowerCo service providers;

4. How often data should be exchanged in the case of RAN services for achieving reasonable gains from a centralized network optimization;

5. Whether or not there is any pricing difference for both RAN-sharing options.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| AAU | Active antenna unit |

| AI | Artificial intelligence |

| AR | Augmented reality |

| BB | Baseband |

| CAPEX | Capital expenditure |

| CIC | Cluster intelligent controller |

| CLRC | ClusterRAN controller |

| CLRI | ClusterRAN infrastructure |

| DDQN | Double deep Q-Network |

| DNN | Deep neural networks |

| EC | Energy consumption |

| EE | Energy efficiency |

| GHG | Greenhouse gas |

| ICT | Information and communication technology |

| KPI | Key performance indicators |

| LCM | Life-cycle management |

| MNO | Mobile network operator |

| NF | Network function |

| NPN | Nonpublic network |

| OPEX | Operational expenditure |

| RAN | Radio access networks |

| RANaaS | Radio access network as a service |

| RL | Reinforcement learning |

| RRA | Radio resource adaptation |

| RRU | Remote radio unit |

| SGD | Stochastic gradient descent |

| SIP | Service infrastructure provider |

| SLA | Service level agreement |

| TDL | Throughput degradation limit |

| VR | Virtual reality |

Appendix A

List of Variables and Parameters

| Variable | Symbol | Unit | Min Value | Max Value |
|---|---|---|---|---|
| Number of interfering BSs | | | 3 | 6 |
| Number of users in each cell | N | | | |
| Observation time | T | ms | 0 | 160 |
| Cell radius | | m | 0 | 400 |
| Average requirement per user k | | MB | | |
| Traffic demand | | Mbps/km² | | |
| Share of active subscribers | | % | 2 | 16 |
| Achievable rate per user | | | | |
| Signal power | | | | |
| Interference power | | | | |
| Activity factor of BS i | | | 0 | 1 |
| Bandwidth per user k or the bandwidth used at a site | B | MHz | 0 | 100 |
| Number of spatial multiplexing layers | | | 1 | 2 |
| Number of users served simultaneously | | | | |
| Power per power amplifier | p | W | | |
| Efficiency of power amplifier | | % | 0 | 25 |
| Power amplifier constant | | | | |
| Active antennas at BS i | | | 0 | 64 |
| Distance of a user from a BS | D | m | 0 | 800 |
| Path loss exponent | | | 1 | ∞ |
| Antenna gain | c | | | |
| Precoding matrix | | | | |
| Weights matrix | | | | |
| Local oscillator power consumption | | W | | |
| Number of sectors | | | 0 | 3 |
| BS’s no-load power consumption | | W | | |
| BS’s fixed power consumption | | W | | |
| BS’s total power consumption | | W | | |
| Energy consumption of a BS | E | J | | |
| Sleep delta/mode | | | 1 | 3 |
| Idle time | | ms | 0 | 160 |
| Sleep level transition times | | ms | 0.0005 | 15 |
| Total deployment area | | km² | | |
| Base station density | | | | |

References

1. Andrae, A. New perspectives on internet electricity use in 2030. Eng. Appl. Sci. Lett. 2020, 3, 19–31.

2. Andrae, A.; Edler, T. On Global Electricity Usage of Communication Technology: Trends to 2030. Challenges 2015, 6, 117–157.

3. ITU: Smart Energy Saving of 5G Base Stations: Traffic Forecasting and Strategy Optimization of 5G Wireless Network Energy Consumption Based on Artificial Intelligence and Other Emerging Technologies. 2021. Available online: https://www.itu.int/rec/T-REC-L.Sup43-202105-I (accessed on 1 June 2022).

4. Huawei White Paper: Green 5G. 2022. Available online: http://www-file.huawei.com/-/media/corp2020/pdf/tech-insights/1/green_5g_white_paper_en_v2.pdf (accessed on 1 June 2022).

5. Auer, G.; Giannini, V.; Desset, C.; Godor, I.; Skillermark, P.; Olsson, M.; Imran, M.A.; Sabella, D.; Gonzalez, M.J.; Blume, O.; et al. How much energy is needed to run a wireless network? IEEE Wirel. Commun. 2011, 18, 40–49.

6. Salem, F.E.; Altman, Z.; Gati, A.; Chahed, T.; Altman, E. Reinforcement learning approach for Advanced Sleep Modes management in 5G networks. In Proceedings of the VTC-FALL 2018: 88th Vehicular Technology Conference, Chicago, IL, USA, 27–30 August 2018; IEEE Computer Society: Chicago, IL, USA, 2018; pp. 1–5.

7. Salem, F.E.; Chahed, T.; Altman, E.; Gati, A.; Altman, Z. Optimal Policies of Advanced Sleep Modes for Energy-Efficient 5G networks. In Proceedings of the 2019 IEEE 18th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 26–28 September 2019; pp. 1–7.

8. Salem, F.E.; Gati, A.; Altman, Z.; Chahed, T. Advanced Sleep Modes and Their Impact on. In Proceedings of the 2017 IEEE 86th Vehicular Technology Conference (VTC-Fall), Toronto, ON, Canada, 24–27 September 2017; pp. 1–7.

9. Salem, F.E.; Chahed, T.; Altman, Z.; Gati, A. Traffic-aware Advanced Sleep Modes management in 5G networks. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference (WCNC), Marrakesh, Morocco, 15–18 April 2019; pp. 1–6.

10. Masoudi, M.; Khafagy, M.; Soroush, E.; Giacomelli, D.; Morosi, S.; Cavdar, C. Reinforcement Learning for Traffic-Adaptive Sleep Mode Management in 5G Networks. In Proceedings of the 2020 IEEE 31st Annual International Symposium on Personal, Indoor and Mobile Radio Communications, IEEE PIMRC, London, UK, 1 August–3 September 2020.

11. European Commission: Mergers: Joint Control of INWIT by Telecom Italia and Vodafone. 2022. Available online: https://ec.europa.eu/commission/presscorner/detail/en/ip_20_414 (accessed on 15 June 2022).

12. Rost, P.; Bernardos, C.J.; Domenico, A.D.; Girolamo, M.D.; Lalam, M.; Maeder, A.; Sabella, D.; Wübben, D. Cloud technologies for flexible 5G radio access networks. IEEE Commun. Mag. 2014, 52, 68–76.

13. Sabella, D.; de Domenico, A.; Katranaras, E.; Imran, M.A.; di Girolamo, M.; Salim, U.; Lalam, M.; Samdanis, K.; Maeder, A. Energy Efficiency Benefits of RAN-as-a-Service Concept for a Cloud-Based 5G Mobile Network Infrastructure. IEEE Access 2014, 2, 1586–1597.

14. Kassis, M.; Costanzo, S.; Yassin, M. Flexible Multi-Operator RAN Sharing: Experimentation and Validation Using Open Source 4G/5G Prototype. In Proceedings of the 2021 Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Porto, Portugal, 8–11 June 2021; pp. 205–210.

15. Hossain, M.M.A.; Cavdar, C.; Björnson, E.; Jäntti, R. Energy Saving Game for Massive MIMO: Coping with Daily Load Variation. IEEE Trans. Veh. Technol. 2018, 67, 2301–2313.

16. Chavarria-Reyes, E.; Akyildiz, I.F.; Fadel, E. Energy-Efficient Multi-Stream Carrier Aggregation for Heterogeneous Networks in 5G Wireless Systems. IEEE Trans. Wirel. Commun. 2016, 15, 7432–7443.

17. Oh, E.; Son, K.; Krishnamachari, B. Dynamic Base Station Switching-On/Off Strategies for Green Cellular Networks. IEEE Trans. Wirel. Commun. 2013, 12, 2126–2136.

18. Liu, J.; Krishnamachari, B.; Zhou, S.; Niu, Z. DeepNap: Data-Driven Base Station Sleeping Operations Through Deep Reinforcement Learning. IEEE Internet Things J. 2018, 5, 4273–4282.

19. Miao, Y.; Yu, N.; Huang, H.; Du, H.; Jia, X. Minimizing Energy Cost of Base Stations with Consideration of Switching ON/OFF Cost. In Proceedings of the 2016 International Conference on Advanced Cloud and Big Data (CBD), Chengdu, China, 13–16 August 2016; pp. 310–315.

20. Peesapati, S.K.G.; Olsson, M.; Masoudi, M.; Andersson, S.; Cavdar, C. Q-learning based Radio Resource Adaptation for Improved Energy Performance of 5G Base Stations. In Proceedings of the 2021 IEEE 32nd Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Helsinki, Finland, 13–16 September 2021; pp. 979–984.

21. Peesapati, S.K.G.; Olsson, M.; Masoudi, M.; Andersson, S.; Cavdar, C. An Analytical Energy Performance Evaluation Methodology for 5G Base Stations. In Proceedings of the 2021 17th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob), Bologna, Italy, 11–13 October 2021; pp. 169–174.

22. Peesapati, S.K.G.; Olsson, M.; Andersson, S. A Multi-Strategy Multi-Objective Hierarchical Approach for Energy Management in 5G Networks. In Proceedings of the 2022 IEEE Global Communications Conference: Green Communication Systems and Networks (Globecom 2022 GCSN), Rio de Janeiro, Brazil, 4–8 December 2022.

23. Nachum, O.; Tang, H.; Lu, X.; Gu, S.; Lee, H.; Levine, S. Why Does Hierarchy (Sometimes) Work So Well in Reinforcement Learning? arXiv 2019, arXiv:cs.LG/1909.10618.

24. Pateria, S.; Subagdja, B.; Tan, A.H.; Quek, C. Hierarchical Reinforcement Learning: A Comprehensive Survey. ACM Comput. Surv. 2021, 54, 1–35.

25. Do, Q.; Koo, I. Deep Reinforcement Learning Based Dynamic Spectrum Competition in Green Cognitive Virtualized Networks. IEEE Access 2021, 9, 1.

26. Hossain, M.M.A.; Cavdar, C.; Björnson, E.; Jantti, R. Energy-Efficient Load-Adaptive Massive MIMO. In Proceedings of the 2015 IEEE Globecom Workshops (GC Wkshps), San Diego, CA, USA, 6–10 December 2015; pp. 1–6.

27. Andersson, G.; Vastberg, A.; Devlic, A.; Cavdar, C. Energy efficient heterogeneous network deployment with cell DTX. In Proceedings of the 2016 IEEE International Conference on Communications (ICC), Kuala Lumpur, Malaysia, 22–27 May 2016; pp. 1–6.

28. Andersen, J.B. Array gain and capacity for known random channels with multiple element arrays at both ends. IEEE J. Sel. Areas Commun. 2000, 18, 2172–2178.

29. 3GPP-TS 38.331-NR-Radio Resource Control (RRC); Protocol specification. Available online: https://www.3gpp.org/dynareport/38331.htm (accessed on 15 March 2022).

30. Debaillie, B.; Desset, C.; Louagie, F. A Flexible and Future-Proof Power Model for Cellular Base Stations. In Proceedings of the 2015 IEEE 81st Vehicular Technology Conference (VTC Spring), Glasgow, UK, 11–14 May 2015; pp. 1–7.

31. Hengst, B. Hierarchical Reinforcement Learning. In Encyclopedia of Machine Learning; Sammut, C., Webb, G.I., Eds.; Springer US: Boston, MA, USA, 2010; pp. 495–502.

32. Dethlefs, N.; Cuayáhuitl, D.H. Combining Hierarchical Reinforcement Learning and Bayesian Networks for Natural Language Generation in Situated Dialogue. In Proceedings of the 13th European Workshop on Natural Language Generation (ENLG), Nancy, France, 28–30 September 2011.

33. Yan, Q.; Liu, Q.; Hu, D. A hierarchical reinforcement learning algorithm based on heuristic reward function. In Proceedings of the 2010 2nd International Conference on Advanced Computer Control, Shenyang, China, 27–29 March 2010; Volume 3, pp. 371–376.

34. Kawano, H. Hierarchical sub-task decomposition for reinforcement learning of multi-robot delivery mission. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 828–835.

35. Vezhnevets, A.S.; Osindero, S.; Schaul, T.; Heess, N.; Jaderberg, M.; Silver, D.; Kavukcuoglu, K. FeUdal Networks for Hierarchical Reinforcement Learning. arXiv 2017, arXiv:cs.AI/1703.01161.

| Year | Papers | Area/Key Points |
|---|---|---|
| 2020 | [1,10] | |
| 2021 | [3,14,20,21,24,25] | |
| 2022 | [4,22] | |

| Agent | No. of Features | No. of Actions | Type of Agent | Description/Actions |

|---|---|---|---|---|

| A1: Strategy selector | 152 | 3 | Network-level | Identifies the optimal selection sequence of different optimization strategies for a given network scenario. Actions include calls to lower-level agents that perform user–cell association, carrier shutdown, or site-level RRA. |

| A2: Carrier adapter | 66 | 15 | Network-level | Switches on or off the capacity layers at different sites depending on the network scenario. |

| A3: Site selector for RRA | 72 | 8 | Network-level | Identifies the sites where RRA could lead to energy savings without negatively impacting the user throughput. |

| A4: Site-level RRA or local configuration adapter | 22 | 13 | Site-level | Performs antenna and bandwidth adaptation at the site selected by A3 depending on the network scenario, the number of connected users, and other site-level metrics. |

| Feature | Input to Agent |

|---|---|

| Overall activity factor | 1–4, 5 * |

| Channel conditions | (1–4), 5 * |

| Number of connected users | 1–4, 5 * |

| Number of users per band at each site | 1–4, 5 * |

| Average SINR | 1–4, 5 * |

| Carrier state at each site | 1–4, 5 * |

| Antenna configuration | 1–4, 5 * |

| Bandwidth utilized | 1–4, 5 * |

| Statistics of the user with lowest SINR | 1, 2 |

| Permissible throughput degradation | 1–5 |

| Current throughput degradation | 1–5 |

| Network energy consumption | 1–5 |

| Band | Frequency (MHz) | MNO Bandwidth (MHz) | TowerCo Bandwidth (MHz) | Type of Band | Possible Antenna Configurations | Possible Sleep Levels | Sleep Deltas |

|---|---|---|---|---|---|---|---|

| 1 | 700 | 10 | 30 | Coverage | 4, 2 | SM1, SM2, SM3 | 0.84, 0.69, 0.50 |

| 2 | 2100 | 20 | 60 | Capacity | 4, 2 | SM1, SM2, SM3 | 0.84, 0.69, 0.50 |

| 3 | 3500 | 100 | 300 | Capacity | 64, 32, 16 | SM1, SM2, SM3 | 0.84, 0.69, 0.50 |

| Parameter | Value |

|---|---|

| Replay memory size | 100,000 |

| Minibatch size | 64 |

| Learning rate | 0.001 |

| Discount factor (γ) | 0.9 |

| Optimizer | Stochastic gradient descent |

| Activation function | tanh/softmax |

| Loss function | Mean squared error |
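To show how two of the hyperparameters above enter training, the sketch below computes a standard Q-learning temporal-difference target with the listed discount factor of 0.9 and evaluates a mean squared error loss over a small batch. It is a generic textbook illustration in plain Python rather than the training code used in this work; the function names and the example numbers are assumptions.

```python
GAMMA = 0.9  # discount factor from the hyperparameter table


def td_target(reward, next_q_values, done, gamma=GAMMA):
    """Q-learning target: r + gamma * max_a' Q(s', a'), or r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def mse_loss(predictions, targets):
    """Mean squared error between predicted Q-values and their targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical minibatch of two transitions: (reward, Q(s', .), done).
batch = [(1.0, [0.5, 2.0], False), (0.5, [3.0, 1.0], True)]
targets = [td_target(r, q_next, done) for r, q_next, done in batch]
predicted_q = [2.0, 0.5]  # hypothetical current estimates Q(s, a)
loss = mse_loss(predicted_q, targets)
```

In a replay-based setup, such a batch would be sampled from the replay memory and the loss minimized with the listed optimizer and learning rate.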

| Category | Both KPIs Met? | All Operators? | Description |

|---|---|---|---|

| 1 | Yes | Yes | Energy savings are obtained and throughput change is within permissible limits for all the operators |

| 2 | Yes | No | Both the KPIs are fulfilled for a few of the operators |

| 2 | No | Yes | Only one of the KPIs has been fulfilled for all the operators |

| 3 | No | No | Neither KPIs are fulfilled for all the operators |

| Category | Energy Savings? | Throughput Change within Permissible Limits? | Description |

|---|---|---|---|

| 1 | Yes | Yes | Obtained energy savings and the throughput change is within permissible limits |

| 2 | Yes | No | Obtained energy savings at the cost of throughput degradation beyond permissible limits |

| 3 | No | Yes | No energy savings obtained but throughput change is within limits |

| 4 | No | No | No energy savings obtained and throughput degradation is beyond limits |

| 5 | * | * | A special case where the agent does not take any action resulting in no change in the network energy consumption and throughput |
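The five operator-level outcome categories in the table above can be expressed as a small classification function. The category numbers follow the table; the sign conventions (a negative energy change means savings, a negative throughput change means degradation) and the function name are assumptions made for this sketch.

```python
def operator_outcome_category(energy_change_pct, throughput_change_pct,
                              degradation_limit_pct, action_taken=True):
    """Map one simulation outcome to categories 1-5 of the table.

    energy_change_pct: percentage change in energy (negative = savings).
    throughput_change_pct: percentage change in throughput
        (negative = degradation).
    degradation_limit_pct: permissible throughput degradation, e.g. 0 or 20.
    action_taken: False when the agent took no action (category 5).
    """
    if not action_taken:
        return 5
    energy_saved = energy_change_pct < 0
    within_limit = throughput_change_pct >= -degradation_limit_pct
    if energy_saved and within_limit:
        return 1
    if energy_saved:
        return 2
    if within_limit:
        return 3
    return 4


# The same outcome lands in different categories under different limits.
relaxed = operator_outcome_category(-5.0, -10.0, degradation_limit_pct=20)
strict = operator_outcome_category(-5.0, -10.0, degradation_limit_pct=0)
```

The example illustrates why a less restrictive degradation limit can move outcomes from category 2 to category 1: the energy savings are identical, and only the permissible throughput loss differs.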

| Parameter | MNO | Scenario 1 (S1) | Scenario 2 (S2) | Scenario 3 (S3) |
|---|---|---|---|---|
| Throughput degradation limit, % | 1 | 0 | 20 | 0 |
| | 2 | 0 | 20 | 0 |
| | 3 | 0 | 20 | 20 |

| S.No | Parameter | Unit | S1 | S2 | S3 |
|---|---|---|---|---|---|
| 1 | Total simulation instances | | 1000 | 1000 | 1000 |
| 2 | Total simulation outcomes (from an operator’s perspective) falling under | | | | |
| | => Category 1 | % | 77.7 | 94.59 | 83.35 |
| | => Category 2 | % | 19.10 | 1.76 | 12.80 |
| | => Category 3 | % | 0.36 | 1.76 | 1.23 |
| | => Category 4 | % | 2.84 | 1.86 | 2.60 |
| 3 | Total simulation outcomes (TowerCo’s perspective) falling under | | | | |
| | => Category 1 | % | 39.0 | 84.8 | 64.2 |
| | => Category 2 | % | 60.9 | 15.2 | 35.6 |
| | => Category 3 | % | 0.1 | 0 | 0.2 |

| S.No | Parameter | Unit | S1 | S2 | S3 |
|---|---|---|---|---|---|
| 1 | Total simulation instances | | 1000 | 1000 | 1000 |
| 2 | Total simulation instances when carrier adaptation was invoked | % | 26.5 | 26.8 | 26.9 |
| 3 | Total simulation instances when carrier adaptation was invoked and had a positive contribution towards energy savings | % | 82.6 | 85.0 | 76.2 |
| 4 | Total simulation instances when carrier adaptation was invoked and simulation outcome category was | % | 57.6 | 94.3 | 69.8 |
| 5 | Probability of turning off | | | | |
| | => 1 band | % | 84.91 | 83.52 | 83.98 |
| | => 2 bands | % | 13.48 | 14.86 | 15.10 |
| | => 3 bands | % | 1.35 | 1.45 | 0.92 |
| | => 4 bands | % | 0 | 0.16 | 0 |
| | => 5 bands | % | 0.27 | 0 | 0 |
| 6 | Average energy savings by turning off | | | | |
| | => 1 band | % | 3.9 | 4.9 | 3.9 |
| | => 2 bands | % | 8.5 | 5.5 | 5.4 |
| | => 3 bands | % | 10.0 | 9.9 | 3.7 |
| | => 4 bands | % | 0 | 12.0 | 0 |
| | => 5 bands | % | 3.1 | 0 | 0 |

| S.No | Parameter | Unit | S1 | S2 | S3 |
|---|---|---|---|---|---|
| 1 | Total simulation instances | | 1000 | 1000 | 1000 |
| 2 | Instances when carrier adaptation has been invoked by one or more MNOs (overlapping and non-overlapping actions) | % | 60.1 | 60.7 | 60.7 |
| 3 | Action overlapping instances of the total invocation instances | % | 30.0 | 27.6 | 28.8 |
| 4 | Non-overlapping action instances of the total invocation instances | % | 70.0 | 72.4 | 71.2 |
| 5 | Overlapping actions that resulted in a | | | | |
| | - positive outcome for all MNOs | % | 40.0 | 73.8 | 42.2 |
| | - positive outcome for one or two MNOs | % | 47.2 | 24.4 | 49.7 |
| | - negative outcome for all MNOs | % | 12.8 | 1.7 | 8.0 |
| 6 | Instances when “X” MNOs were involved in a common action | | | | |
| | => X = 2 MNOs | % | 92.2 | 88.1 | 89.7 |
| | => X = 3 MNOs | % | 7.8 | 11.9 | 10.2 |
| 7 | Total number of overlapping and non-overlapping actions | | 865 | 851 | 835 |
| 8 | Percentage of actions that resulted in | | | | |
| | - positive outcome for all MNOs | % | 45.0 | 74.0 | 54.0 |
| | - positive outcome for one or two MNOs | % | 10.0 | 5.0 | 10.0 |
| | - negative outcome for all MNOs | % | 45.0 | 22.0 | 35.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peesapati, S.K.G.; Olsson, M.; Andersson, S.; Qvarfordt, C.; Dahlen, A. AI-Assisted Multi-Operator RAN Sharing for Energy-Efficient Networks. Telecom 2023, 4, 334-368. https://doi.org/10.3390/telecom4020020
