Exploring the Role of ChatGPT in Oncology: Providing Information and Support for Cancer Patients

Abstract

1. Introduction

Large Language Models

2. Methods

3. Results and Discussion

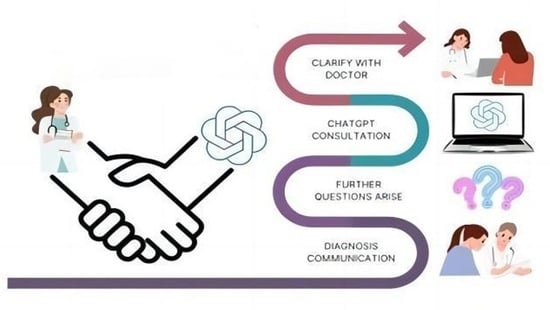

3.1. Potential Advantages of ChatGPT and Other LLMs

3.1.1. Accessibility and Inclusivity

3.1.2. Information Provision and Informed Decision-Making

3.1.3. Emotional Support and Patient Empowerment

3.1.4. Supportive Care

3.1.5. For Healthcare Practitioners and Medical Students

3.2. Appraisal of Literature on Different Types of Cancer

3.2.1. Head and Neck Cancer

3.2.2. Prostate Cancer

3.2.3. Hepatocarcinoma

3.2.4. Breast Cancer

3.2.5. Lung Cancer

3.2.6. Colon Cancer

3.2.7. Pancreatic Cancer

3.2.8. Cervical Cancer

3.2.9. Radiotherapy

4. Limitations and Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest


| Advantages | Limitations |
|---|---|
| Accessibility and inclusiveness (remote access to essential health information for disadvantaged communities, safeguards against harmful or offensive responses, and reduced stigma surrounding sensitive topics) | Limited Internet access and low digital literacy; some languages are not supported. |
| Timely and accurate access to medical information (suitable for general purposes and basic medical knowledge) | Limited consideration of the patient's medical history: these models work only with the inputs provided and do not proactively ask follow-up questions (moderate accuracy for complex cases). |
| Patient-friendly explanations of medical terms, treatment options, and potential side effects, improving patient understanding and informed decision-making | Oversimplification. Patients may treat the tool as a substitute for medical consultation, displacing the doctor–patient relationship. |
| Emotional support and patient empowerment | Underestimation of disease severity. |
| Ethical and legal support | May not comply with local medical regulations and standards, or account for the patient's cultural background. |
| Handling routine, repetitive tasks such as writing medical reports | Reduced clinical oversight when clinicians routinely rely on these tools. |
| Retrieval of medical literature | May not provide references, and references it does provide are not always accurate. Limited to information available up to the knowledge cutoff date, with no real-time updates. May include biased or outdated information. Limited consideration of the specific clinical setting. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cè, M.; Chiarpenello, V.; Bubba, A.; Felisaz, P.F.; Oliva, G.; Irmici, G.; Cellina, M. Exploring the Role of ChatGPT in Oncology: Providing Information and Support for Cancer Patients. BioMedInformatics 2024, 4, 877-888. https://doi.org/10.3390/biomedinformatics4020049


Chicago/Turabian style: Cè, Maurizio, Vittoria Chiarpenello, Alessandra Bubba, Paolo Florent Felisaz, Giancarlo Oliva, Giovanni Irmici, and Michaela Cellina. 2024. "Exploring the Role of ChatGPT in Oncology: Providing Information and Support for Cancer Patients." BioMedInformatics 4, no. 2: 877-888. https://doi.org/10.3390/biomedinformatics4020049