Most Significant Impact on Consumer Engagement: An Analytical Framework for the Multimodal Content of Short Video Advertisements

Abstract

1. Introduction

2. Related Work

2.1. Short Video Advertising

2.2. Consumer Engagement Behaviors

2.3. Multimodal Content Analysis

2.4. Multimodal Features and Variable Screening Methods

3. Materials and Methods

3.1. Data Collection and Pre-Processing

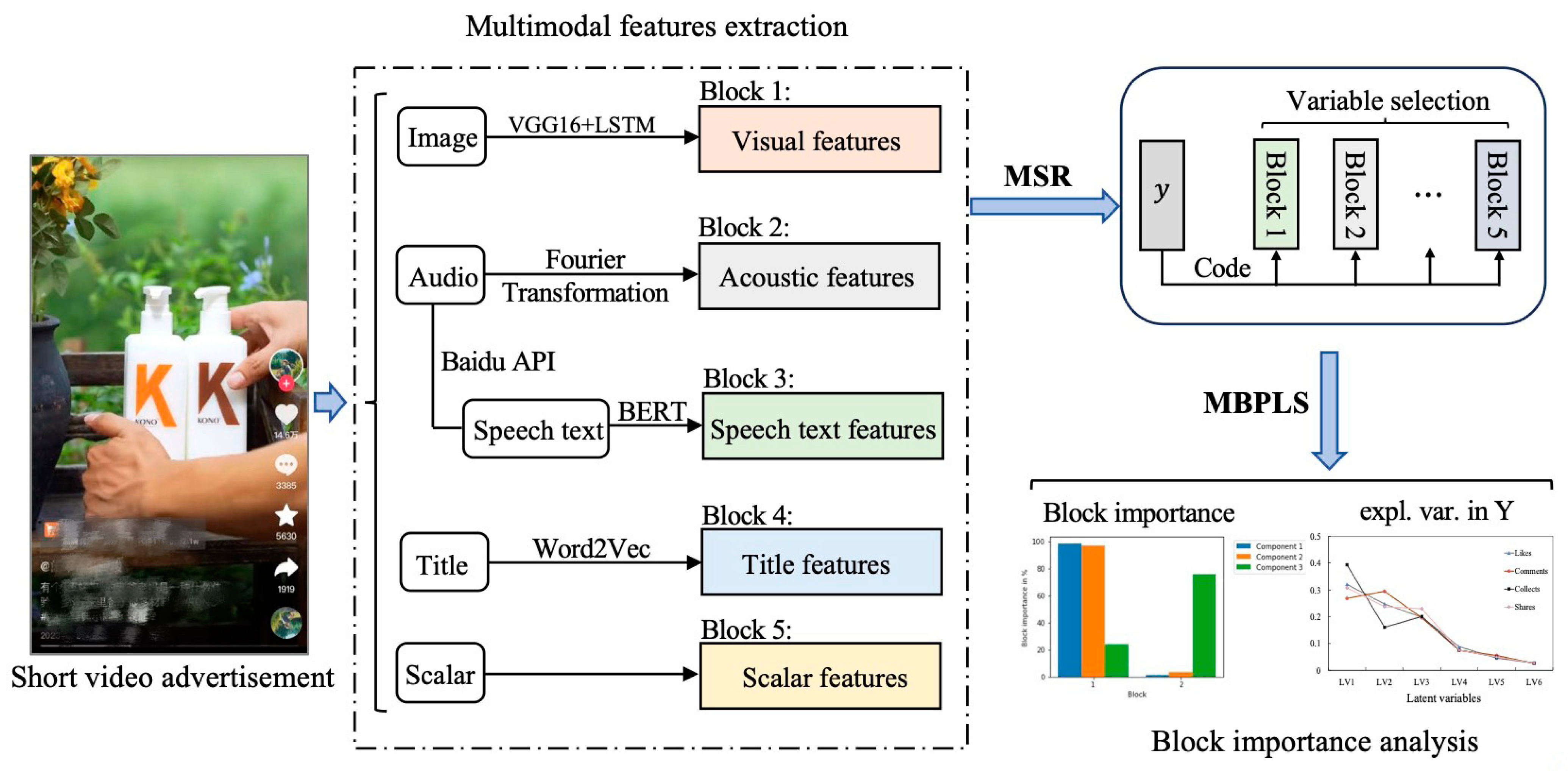

3.2. Feature Extraction

3.3. Method

3.3.1. Mixed-Regularization Sparse Representation-Based Method

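| Algorithm 1. Coordinate descent method |
|---|
| Input: a starting point $\beta^{(0)}$ |
| Repeat: for $k = 1, 2, \ldots$ do; for $j = 1, 2, \ldots, N$ do $\beta_j^{(k)} \leftarrow \arg\min_{\beta_j} F(\beta_1^{(k)}, \ldots, \beta_{j-1}^{(k)}, \beta_j, \beta_{j+1}^{(k-1)}, \ldots, \beta_N^{(k-1)})$; end; end; until convergence |
| Output: $\beta^{(k)}$ at convergence |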
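Algorithm 1 gives only the loop structure of coordinate descent. The sketch below fills it in for one concrete case, assuming (the text does not state this) that the mixed regularization is an L1 plus squared-L2 penalty on a least-squares loss, $F(\beta) = \frac{1}{2n}\lVert y - X\beta\rVert_2^2 + \lambda_1\lVert\beta\rVert_1 + \frac{\lambda_2}{2}\lVert\beta\rVert_2^2$. Under that assumption each coordinate update has a closed-form soft-threshold solution. The function names and the parameters `lam1`, `lam2`, `max_iter`, and `tol` are hypothetical.

```python
import numpy as np


def soft_threshold(z: float, gamma: float) -> float:
    """Proximal operator of gamma * |.|: shrink z toward zero by gamma."""
    return float(np.sign(z) * max(abs(z) - gamma, 0.0))


def coordinate_descent(X, y, lam1=0.1, lam2=0.1, max_iter=1000, tol=1e-8):
    """Cyclic coordinate descent for the ASSUMED mixed L1 + L2 objective
        F(b) = 1/(2n) ||y - X b||^2 + lam1 ||b||_1 + lam2/2 ||b||^2.
    """
    n, p = X.shape
    beta = np.zeros(p)                     # starting point beta^(0)
    col_sq = (X ** 2).sum(axis=0) / n      # (1/n) ||x_j||^2 for every column j
    residual = y - X @ beta                # r = y - X beta, kept in sync below
    for _ in range(max_iter):              # outer loop over k
        max_change = 0.0
        for j in range(p):                 # inner loop over coordinates j = 1..N
            old = beta[j]
            # Correlation of column j with the partial residual (beta_j added back).
            rho = X[:, j] @ residual / n + col_sq[j] * old
            new = soft_threshold(rho, lam1) / (col_sq[j] + lam2)
            if new != old:
                residual -= X[:, j] * (new - old)
                beta[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:               # "until convergence"
            break
    return beta
```

Updating the residual after every coordinate change keeps one full sweep at O(np) instead of recomputing $X\beta$ from scratch. With `lam2 = 0` an all-zero column would cause a division by zero, which is one practical reason to keep a small positive `lam2`.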

3.3.2. Multiblock Partial Least Squares
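Multiblock partial least squares (MBPLS) treats each modality (visual, acoustic, speech text, title, scalar) as its own block of predictors and links the blocks through a common super score. The sketch below shows one common single-response variant: blocks are centred and scaled to unit total sum of squares, block weights give block scores, super weights combine the block scores, and all blocks are deflated with the super score. The block scaling, the deflation choice, the function name `mbpls`, and reading squared super weights as block importance are assumptions for illustration, not necessarily the authors' configuration. With a single response the usual iterative inner loop converges in one pass, so it is omitted.

```python
import numpy as np


def mbpls(blocks, y, n_components=1):
    """Single-response multiblock PLS (one common variant; see lead-in).

    blocks: list of (n_samples, n_features_b) arrays; y: (n_samples,) array.
    Returns an (n_components, n_blocks) array of super weights.
    """
    # Centre each block and scale it to unit total sum of squares so that a
    # block with many columns (e.g. 1280 visual features) does not dominate.
    Xs = []
    for X in blocks:
        Xc = X - X.mean(axis=0)
        Xs.append(Xc / np.linalg.norm(Xc))
    u = y - y.mean()
    super_weights = []
    for _ in range(n_components):
        # Block level: block weights and block scores.
        scores = []
        for X in Xs:
            w_b = X.T @ u
            w_b = w_b / np.linalg.norm(w_b)
            scores.append(X @ w_b)
        T = np.column_stack(scores)            # (n_samples, n_blocks)
        # Super level: super weights combine the block scores into one score.
        w_T = T.T @ u
        w_T = w_T / np.linalg.norm(w_T)
        t = T @ w_T
        super_weights.append(w_T)
        # Deflate every block and the response with the super score t.
        tt = t @ t
        Xs = [X - np.outer(t, X.T @ t / tt) for X in Xs]
        u = u - t * (u @ t / tt)
    return np.array(super_weights)
```

Because each super weight is a unit vector, `mbpls(blocks, y)[0] ** 2` sums to 1 and can be read as the share each block contributes to the first component.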

4. Results

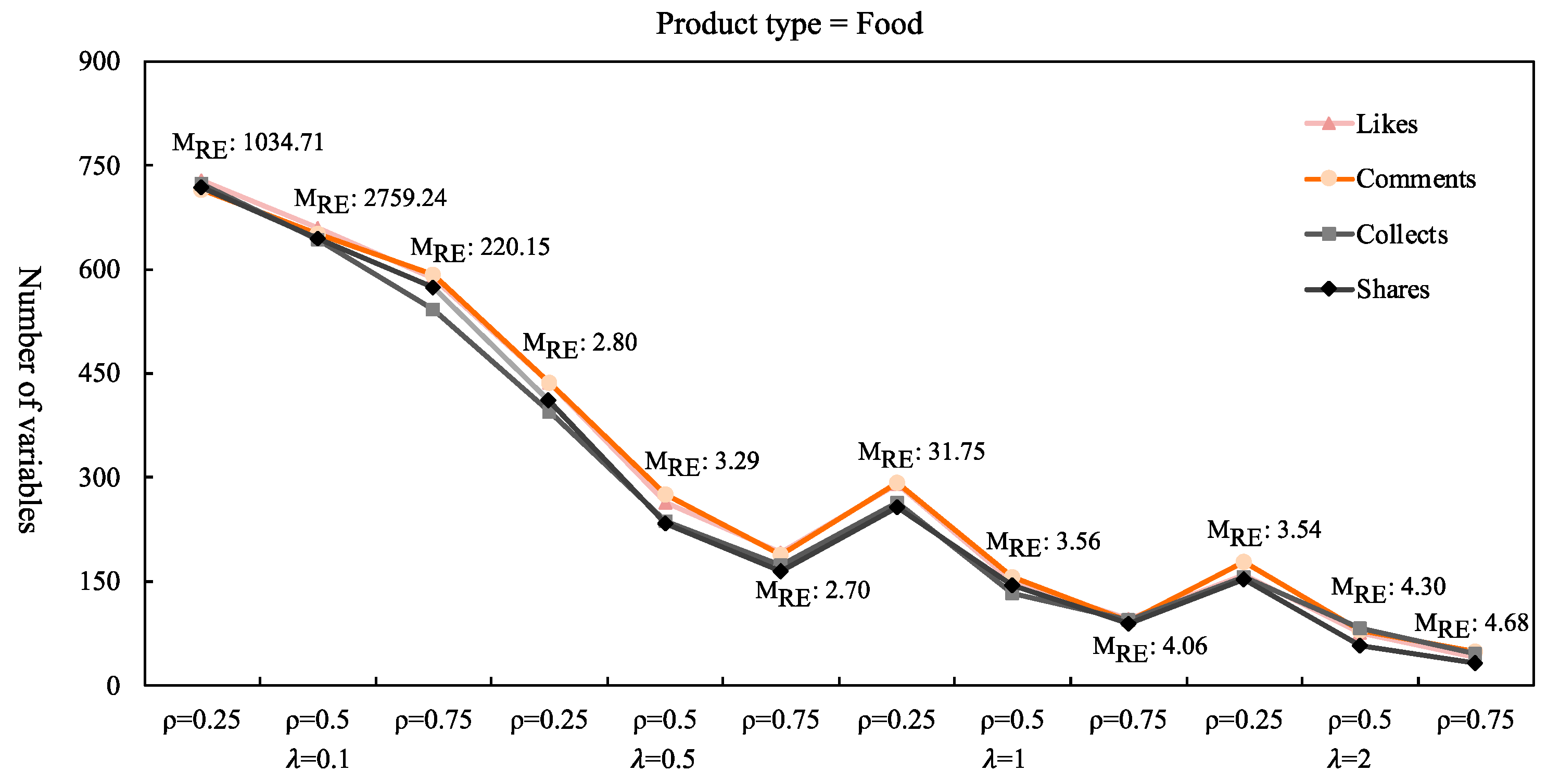

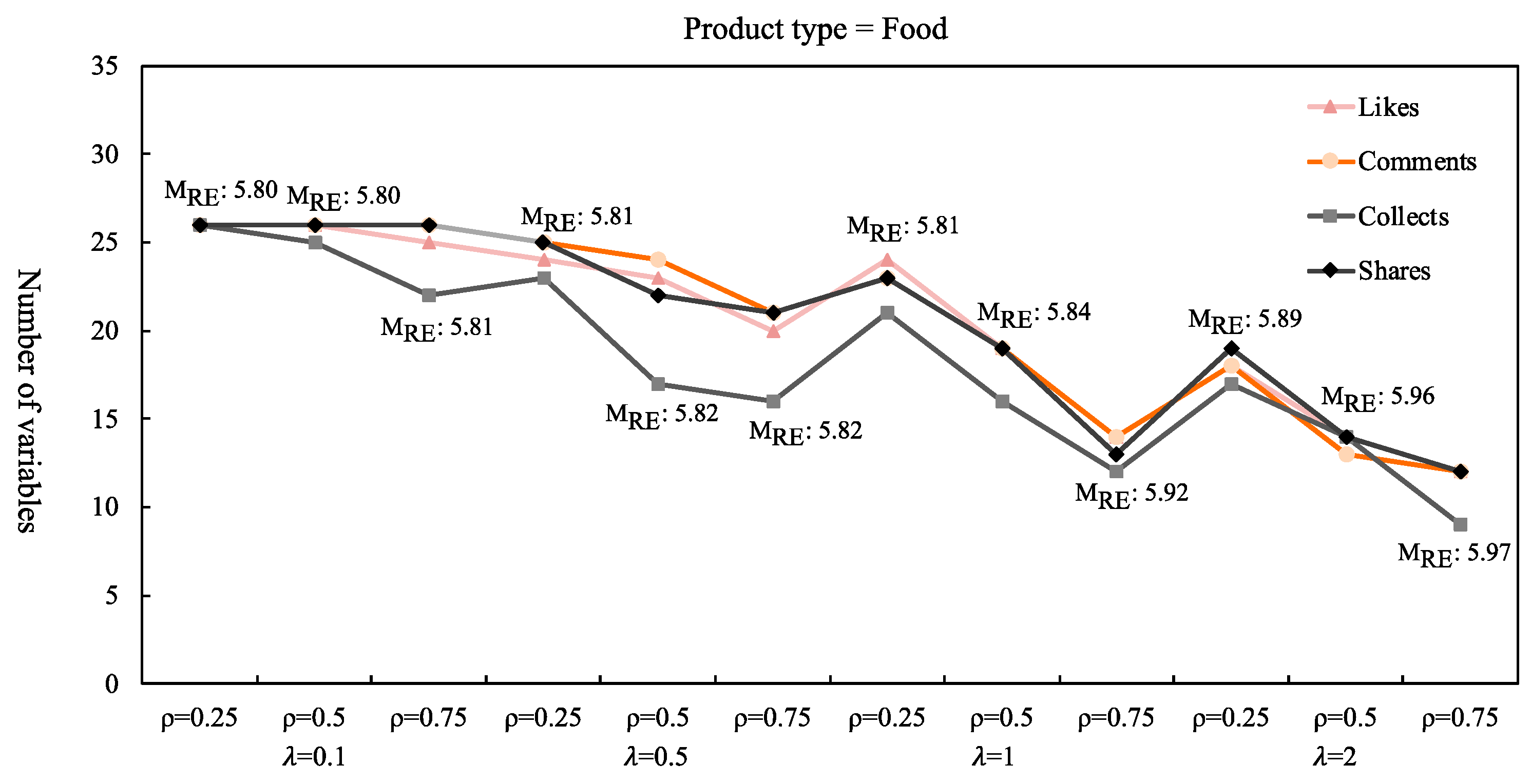

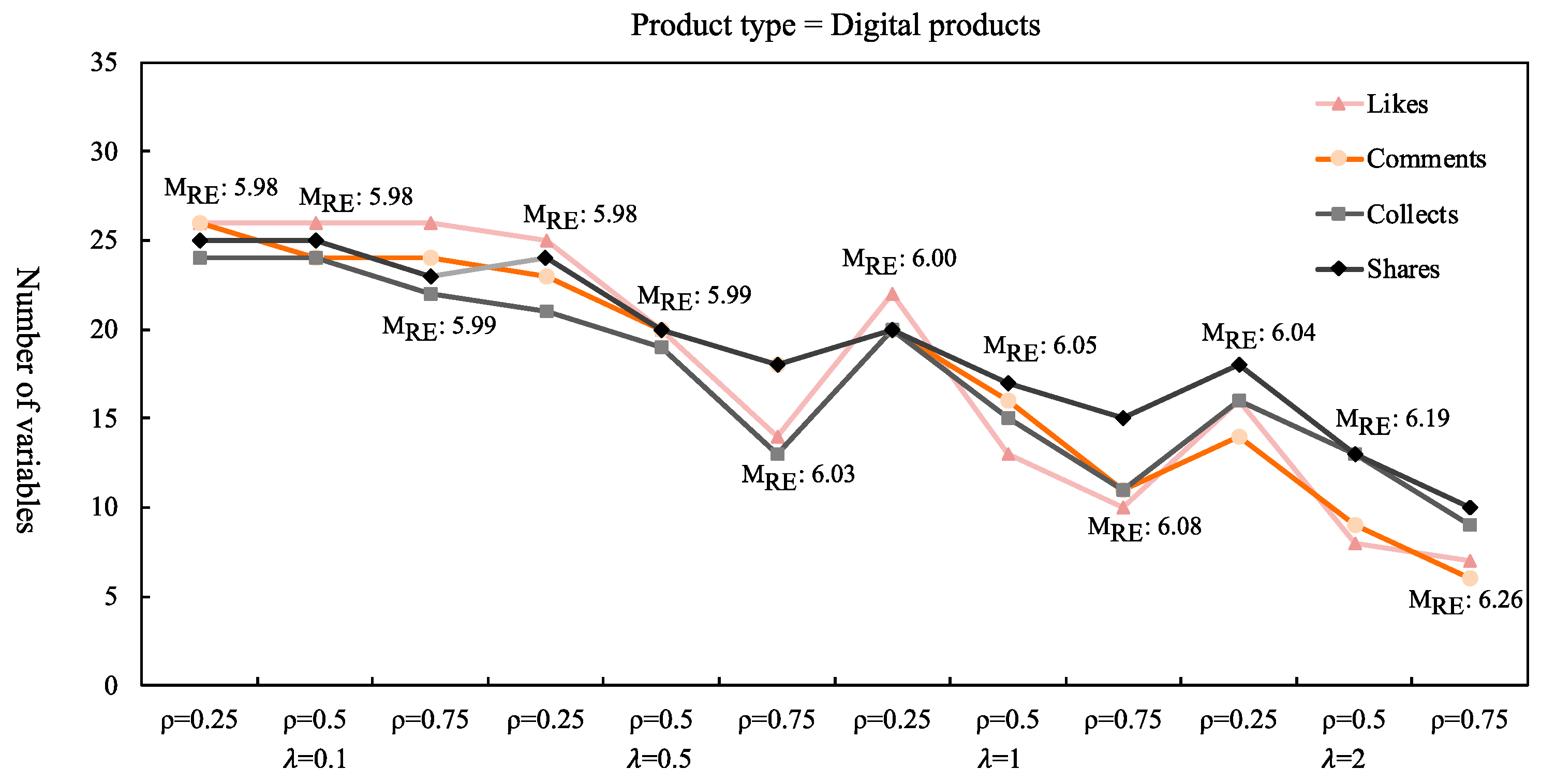

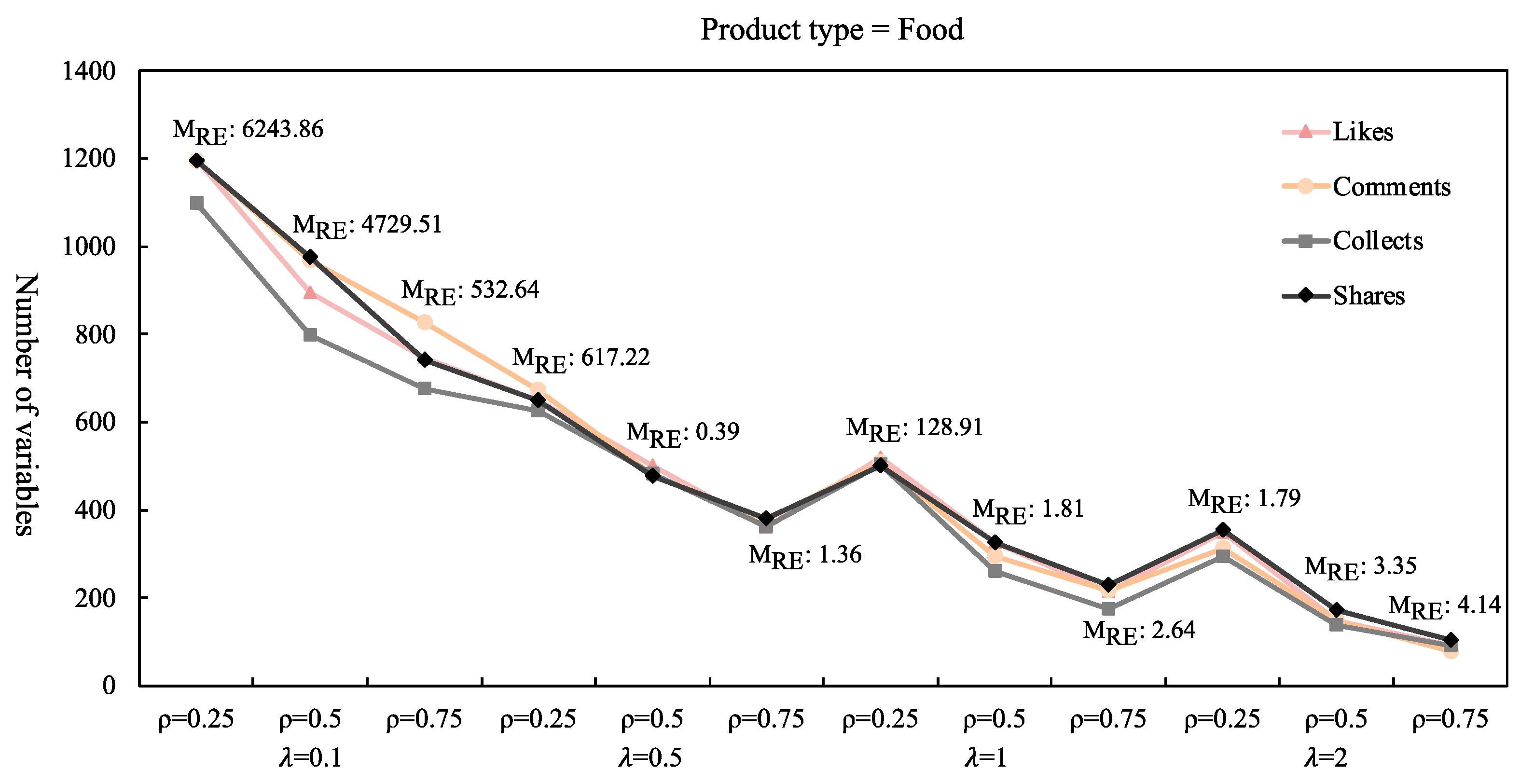

4.1. The Result of MSR

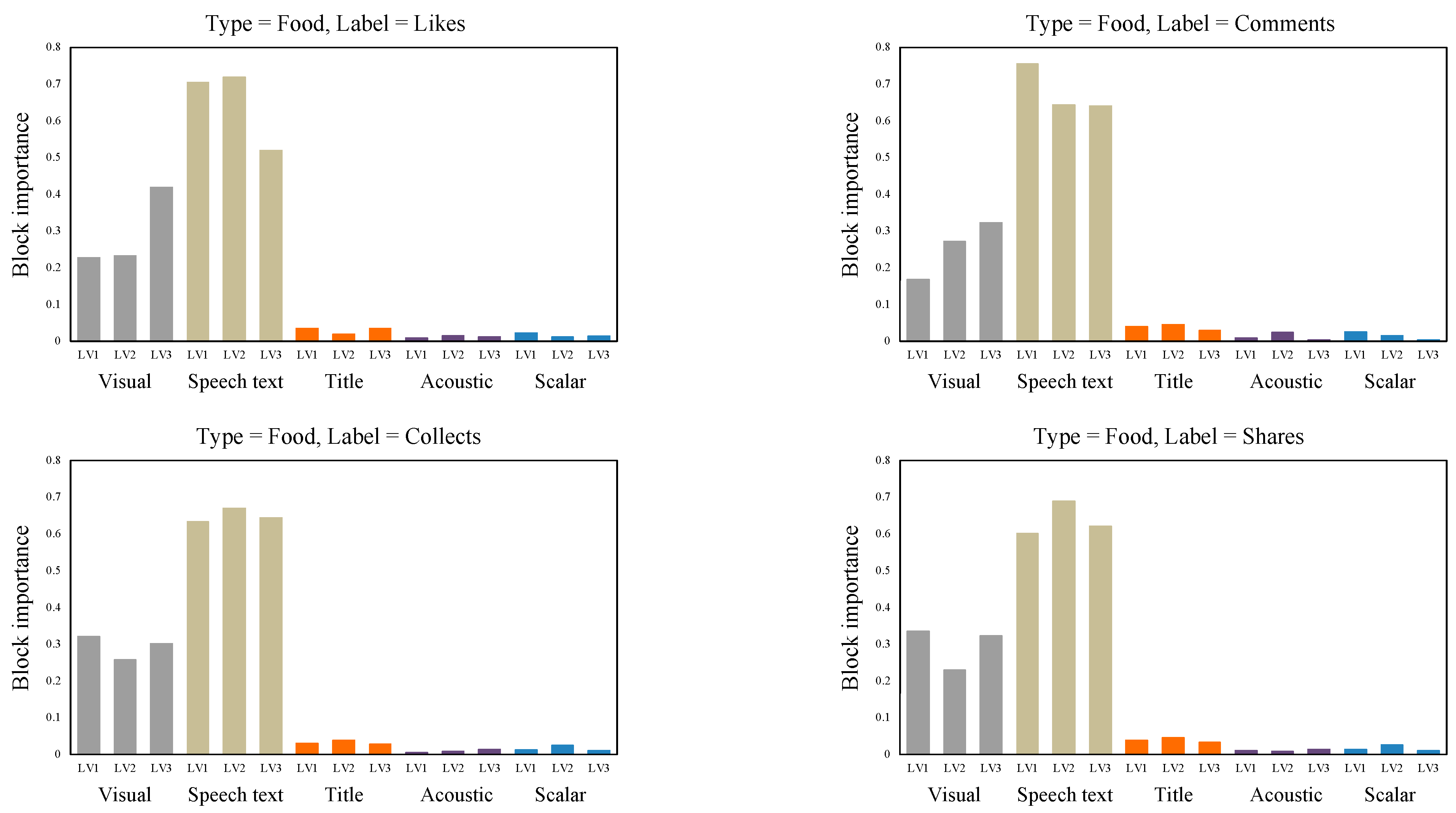

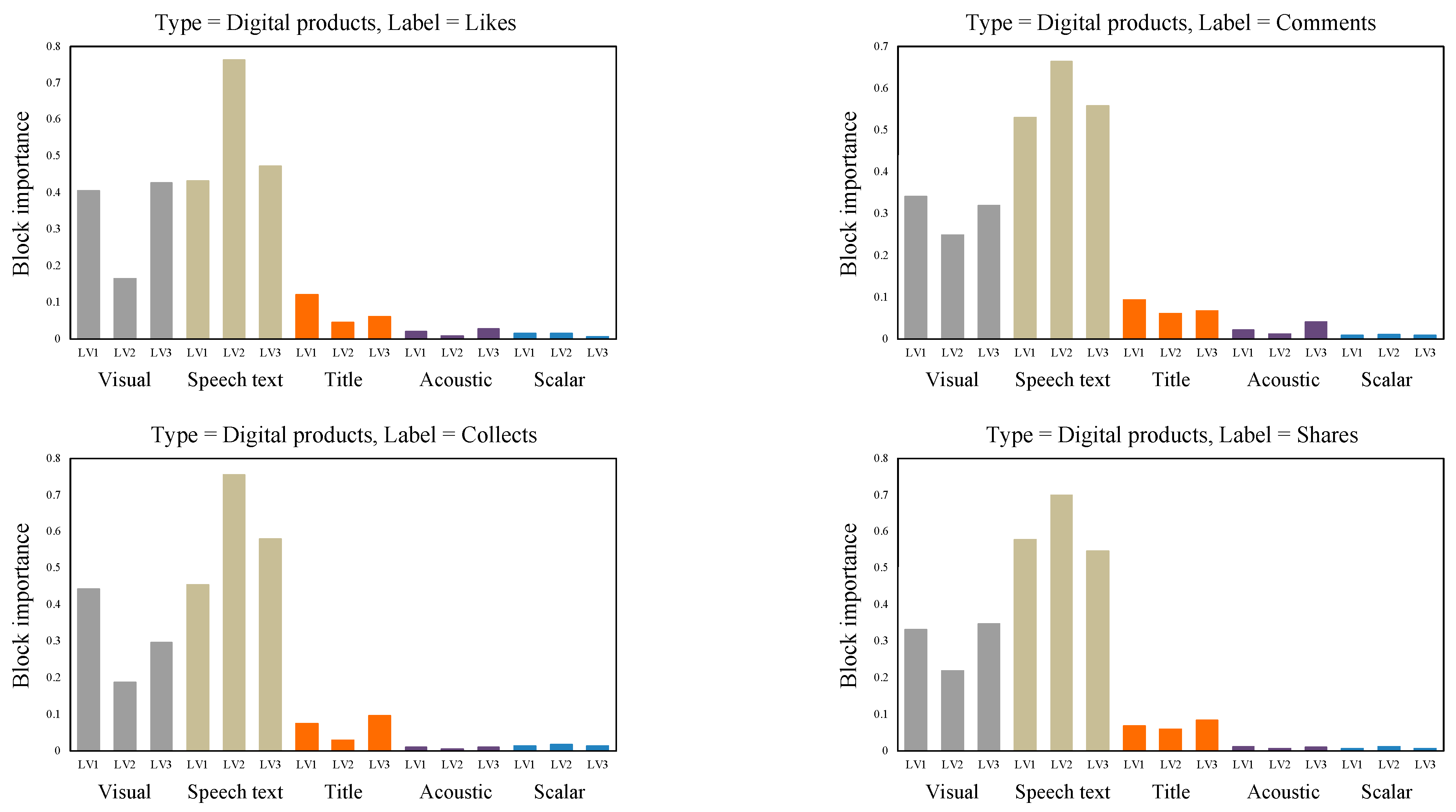

4.2. The Result of MBPLS

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Modality Blocks | Features | Item | Description | Dimension |
|---|---|---|---|---|
| Block 1 | Visual | Beginning image | Features of frames from the first 10 s of the short video | 1280 |
| Block 2 | Acoustic | Zero-crossing rate | The rate at which the sign of the acoustic signal changes | 1 |
| | | Spectral centroid | The center of mass (magnitude-weighted mean frequency) of the spectrum | 1 |
| | | Spectrum roll-off | A measure of spectral shape: the frequency below which a set share of the spectral energy lies | 1 |
| | | Chromaticity frequency | Energy distribution over the 12 semitones (pitch classes) of music | 1 |
| | | Spectral bandwidth | The effective range of the frequency spectrum | 1 |
| | | RMS energy | The energy or intensity level of the sound signal within the selected time period | 1 |
| | | MFCC | Features reflecting the nonlinear perception of sound frequencies by the human ear | 20 |
| Block 3 | Speech text | Author’s description | Speech text of the short video’s author | 768 |
| Block 4 | Title | Video title | The average of the word vectors of the title | 100 |
| Block 5 | Scalar | Duration | Length of the short video advertisement in seconds | 1 |
| | | Speed | The speed of voice playback | 1 |
| | | Title length | Word count of the title | 1 |
| | | Time gap | Number of days since the short video was released | 1 |
| | | Product price | The price of the product | 1 |
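Several acoustic items in the table, and the title item, can be approximated with plain NumPy as sketched below. The sketch assumes that frame-level descriptors are averaged over frames to give the single value listed under Dimension, a 2048-sample frame with a 512-sample hop, a Hann window, and an 85% roll-off threshold; these settings, the function names, and the `embeddings` lookup (any mapping from token to a 100-dimensional vector) are illustrative assumptions rather than the authors' pipeline. Chroma and MFCC are omitted for brevity.

```python
import numpy as np


def frame_signal(x, frame_len=2048, hop=512):
    """Split a 1-D signal (len(x) >= frame_len) into overlapping frames (rows)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    return np.stack([x[i * hop:i * hop + frame_len] for i in range(n_frames)])


def acoustic_scalars(x, sr, frame_len=2048, hop=512, rolloff_pct=0.85):
    """Frame-averaged scalar descriptors of a mono signal x sampled at sr Hz."""
    frames = frame_signal(x, frame_len, hop)
    eps = 1e-12  # guards against division by zero on silent frames
    # Zero-crossing rate: share of adjacent sample pairs whose sign differs.
    zcr = np.mean(np.signbit(frames[:, 1:]) != np.signbit(frames[:, :-1]), axis=1)
    # RMS energy of each frame.
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    # Magnitude spectrum of Hann-windowed frames.
    mag = np.abs(np.fft.rfft(frames * np.hanning(frame_len), axis=1))
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sr)
    total = mag.sum(axis=1) + eps
    # Spectral centroid: magnitude-weighted mean frequency.
    centroid = mag @ freqs / total
    # Spectral bandwidth: magnitude-weighted spread around the centroid.
    bandwidth = np.sqrt(np.sum(mag * (freqs - centroid[:, None]) ** 2, axis=1) / total)
    # Roll-off: lowest frequency below which rolloff_pct of the magnitude lies.
    cum = np.cumsum(mag, axis=1)
    rolloff = freqs[np.argmax(cum >= rolloff_pct * cum[:, -1:], axis=1)]
    per_frame = {"zcr": zcr, "rms": rms, "centroid": centroid,
                 "bandwidth": bandwidth, "rolloff": rolloff}
    # Average over frames to get one value per video.
    return {name: float(np.mean(v)) for name, v in per_frame.items()}


def title_vector(tokens, embeddings, dim=100):
    """Mean of the word vectors of known title tokens (zeros if none are known)."""
    vecs = [embeddings[t] for t in tokens if t in embeddings]
    return np.mean(vecs, axis=0) if vecs else np.zeros(dim)
```

For a pure 440 Hz tone the zero-crossing rate comes out close to 2 × 440 / sr and the centroid close to 440 Hz, which is a quick sanity check on the frame and window settings.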

| Statistic | Likes | Comments | Collects | Shares |
|---|---|---|---|---|
| Skewness | 6.12 | 22.24 | 5.74 | 6.87 |
| Kurtosis | 46.14 | 542.57 | 40.19 | 67.81 |
| Max | 1,069,000 | 690,000 | 266,000 | 302,000 |
| Min | 175 | 15 | 8 | 47 |
| Mean | 44,581.63 | 3229.13 | 11,023.74 | 8164.47 |
| Std | 96,193.25 | 26,367.08 | 26,193.67 | 20,462.20 |
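The skewness and kurtosis rows summarize how heavy-tailed the engagement counts are. A minimal sketch of the moment-based definitions follows. The table does not say whether kurtosis is reported in excess (Fisher) form, so returning excess kurtosis here is an assumption; these definitions match the defaults of `scipy.stats.skew` and `scipy.stats.kurtosis`. The function name is hypothetical.

```python
import numpy as np


def skew_kurt(x):
    """Moment-based sample skewness and excess kurtosis (biased estimators)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    m2 = np.mean(d ** 2)   # second central moment
    m3 = np.mean(d ** 3)   # third central moment
    m4 = np.mean(d ** 4)   # fourth central moment
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0
```

A value such as 22.24 for comments signals a long right tail: a few videos with very large counts (a maximum of 690,000 against a mean of 3229.13) dominate the higher moments.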

| Feature Type | Parameter (Food) | | Parameter (Digital Products) | |
|---|---|---|---|---|
| visual | 0.5 | 0.5 | 0.5 | 0.75 |
| speech text | 0.5 | 0.25 | 0.5 | 0.5 |
| title | 0.5 | 0.75 | 0.5 | 0.5 |
| acoustic | 1 | 0.75 | 0.5 | 0.75 |

| Feature Type | Food: Likes | Food: Comments | Food: Collects | Food: Shares | Digital Products: Likes | Digital Products: Comments | Digital Products: Collects | Digital Products: Shares |
|---|---|---|---|---|---|---|---|---|
| visual | 500 | 479 | 482 | 477 | 301 | 270 | 262 | 310 |
| speech text | 436 | 436 | 395 | 410 | 240 | 228 | 208 | 233 |
| title | 48 | 50 | 53 | 58 | 58 | 57 | 48 | 59 |
| acoustic | 14 | 14 | 12 | 13 | 14 | 18 | 13 | 18 |
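Assuming the counts above are the numbers of nonzero coefficients that the sparse model keeps within each modality block, they can be tallied as sketched below. The sketch further assumes the features are concatenated in the order visual, acoustic, speech text, title, with block sizes taken from the feature table (the acoustic block is six single-valued descriptors plus 20 MFCCs, i.e., 26). The concatenation order, the zero threshold `tol`, and the names `BLOCK_DIMS` and `selected_per_block` are hypothetical.

```python
import numpy as np

# Block sizes from the feature table; the order of concatenation is ASSUMED.
BLOCK_DIMS = {"visual": 1280, "acoustic": 26, "speech text": 768, "title": 100}


def selected_per_block(beta, block_dims=BLOCK_DIMS, tol=0.0):
    """Count coefficients with |beta_j| > tol inside each consecutive block."""
    counts, start = {}, 0
    for name, dim in block_dims.items():
        counts[name] = int(np.sum(np.abs(beta[start:start + dim]) > tol))
        start += dim
    if start != len(beta):
        raise ValueError("coefficient vector length does not match block sizes")
    return counts
```

Under these assumptions every count in the table stays below its block size (for example, at most 500 of 1280 visual features and at most 18 of 26 acoustic features).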

| Method | Index | Food: Likes | Food: Comments | Food: Collects | Food: Shares | Digital Products: Likes | Digital Products: Comments | Digital Products: Collects | Digital Products: Shares |
|---|---|---|---|---|---|---|---|---|---|
| MSR | R | 0.685 | 0.616 | 0.740 | 0.712 | 0.718 | 0.615 | 0.654 | 0.684 |
| | MAE | 0.112 | 0.087 | 0.065 | 0.104 | 0.094 | 0.105 | 0.101 | 0.100 |
| | MSE | 0.019 | 0.012 | 0.007 | 0.017 | 0.014 | 0.017 | 0.017 | 0.017 |
| RFR | R | 0.593 | 0.504 | 0.622 | 0.657 | 0.459 | 0.383 | 0.540 | 0.532 |
| | MAE | 0.087 | 0.093 | 0.073 | 0.089 | 0.098 | 0.082 | 0.076 | 0.085 |
| | MSE | 0.012 | 0.014 | 0.009 | 0.013 | 0.015 | 0.011 | 0.009 | 0.012 |
| t-SNE | R | 0.652 | 0.595 | 0.611 | 0.628 | 0.454 | 0.361 | 0.513 | 0.531 |
| | MAE | 0.085 | 0.089 | 0.073 | 0.092 | 0.103 | 0.089 | 0.088 | 0.089 |
| | MSE | 0.011 | 0.013 | 0.010 | 0.012 | 0.015 | 0.013 | 0.012 | 0.013 |
| PCA | R | 0.542 | 0.460 | 0.559 | 0.554 | 0.286 | 0.227 | 0.374 | 0.351 |
| | MAE | 0.090 | 0.094 | 0.078 | 0.102 | 0.105 | 0.079 | 0.087 | 0.097 |
| | MSE | 0.013 | 0.015 | 0.010 | 0.015 | 0.017 | 0.011 | 0.011 | 0.015 |
| CA | R | 0.560 | 0.507 | 0.592 | 0.626 | 0.049 | 0.464 | 0.554 | 0.518 |
| | MAE | 0.092 | 0.090 | 0.075 | 0.093 | 0.094 | 0.075 | 0.074 | 0.087 |
| | MSE | 0.013 | 0.014 | 0.009 | 0.014 | 0.014 | 0.009 | 0.009 | 0.013 |
| All | R | 0.416 | 0.337 | 0.529 | 0.589 | 0.362 | 0.297 | 0.272 | 0.340 |
| | MAE | 0.148 | 0.113 | 0.082 | 0.118 | 0.131 | 0.127 | 0.137 | 0.131 |
| | MSE | 0.033 | 0.020 | 0.011 | 0.022 | 0.027 | 0.028 | 0.030 | 0.028 |
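The three indices in the table can be computed as sketched below, assuming R is the Pearson correlation between observed and predicted engagement values (the text does not specify this) and that MAE and MSE are taken on the same scale as the predictions. The function name is hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Pearson correlation R, mean absolute error and mean squared error."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    r = np.corrcoef(y_true, y_pred)[0, 1]
    return {"R": float(r),
            "MAE": float(np.mean(np.abs(err))),
            "MSE": float(np.mean(err ** 2))}
```

Because R ignores constant shifts and positive rescaling of the predictions, a method can rank first on R while having larger MAE and MSE. In the Food/Likes column, for example, MSR has R = 0.685 and MAE = 0.112, whereas t-SNE has R = 0.652 and MAE = 0.085.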

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; Zhang, L. Most Significant Impact on Consumer Engagement: An Analytical Framework for the Multimodal Content of Short Video Advertisements. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 54. https://doi.org/10.3390/jtaer20020054

Zhang, Zhipeng, and Liyi Zhang. 2025. "Most Significant Impact on Consumer Engagement: An Analytical Framework for the Multimodal Content of Short Video Advertisements" Journal of Theoretical and Applied Electronic Commerce Research 20, no. 2: 54. https://doi.org/10.3390/jtaer20020054

APA StyleZhang, Z., & Zhang, L. (2025). Most Significant Impact on Consumer Engagement: An Analytical Framework for the Multimodal Content of Short Video Advertisements. Journal of Theoretical and Applied Electronic Commerce Research, 20(2), 54. https://doi.org/10.3390/jtaer20020054