Harmonizing Sight and Sound: The Impact of Auditory Emotional Arousal, Visual Variation, and Their Congruence on Consumer Engagement in Short Video Marketing

Abstract

1. Introduction

2. Literature Review

2.1. Influencer Marketing in Short Video

2.2. Auditory Emotional Arousal

2.3. Visual Variation

2.4. Multiple Resource Theory

3. Hypotheses and Research Model

3.1. Auditory Emotional Arousal and Consumer Engagement

3.2. Visual Variation and Consumer Engagement

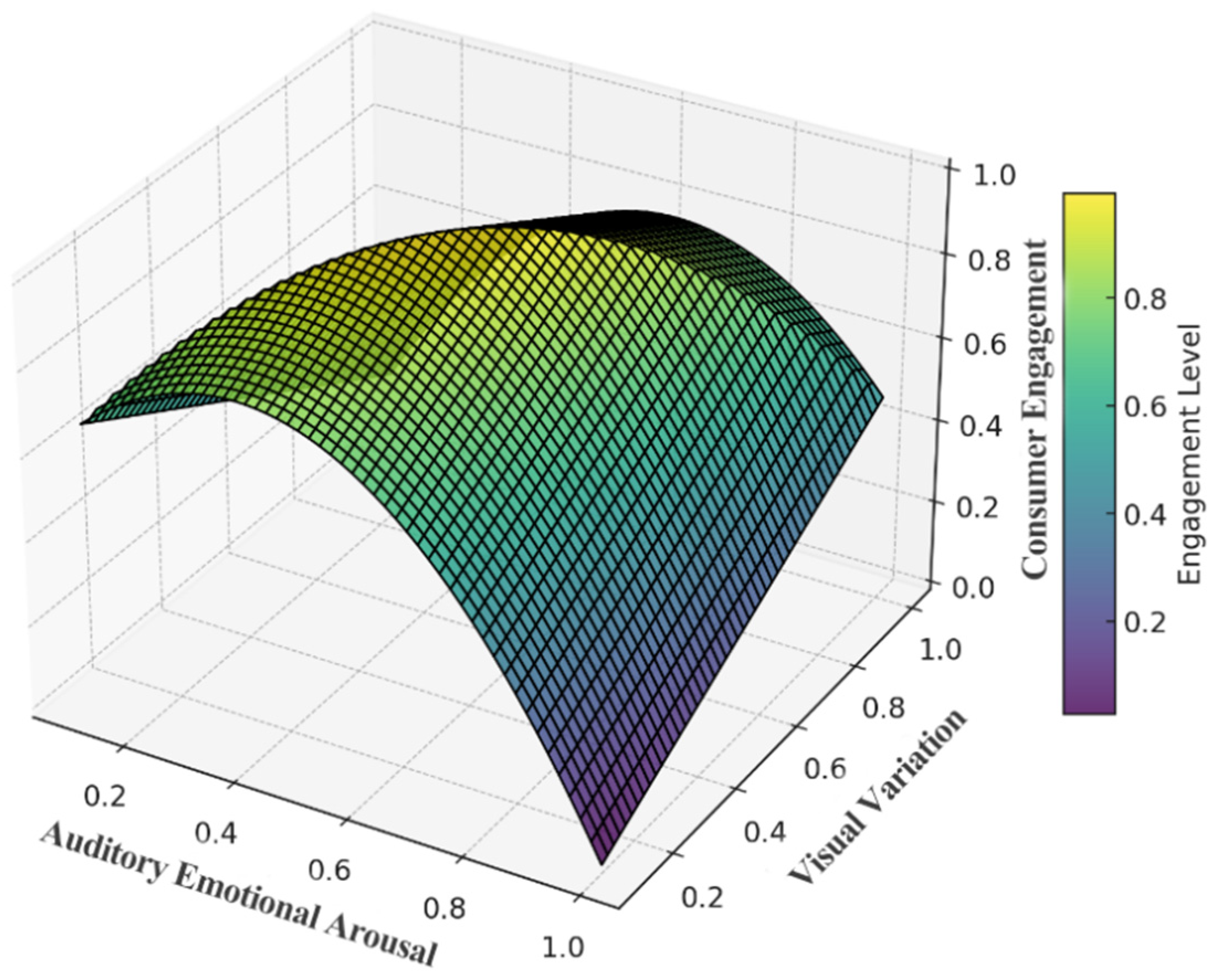

3.3. Auditory Emotional Arousal, Visual Variation, and Consumer Engagement

4. Method

4.1. Data Source

4.2. Data Preprocessing

4.3. Variable Measurement

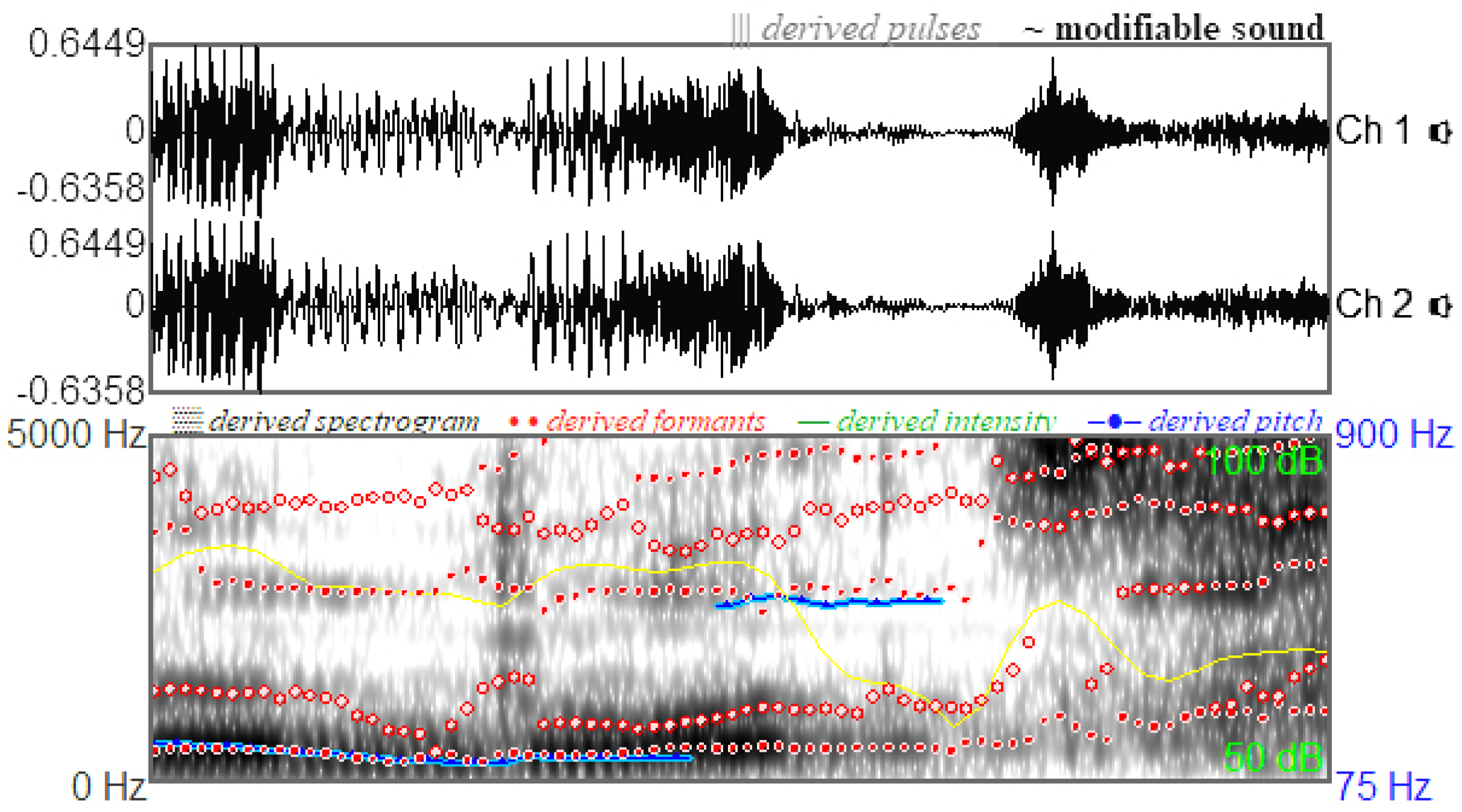

4.3.1. Independent Variables

4.3.2. Dependent Variable

4.3.3. Control Variables

4.3.4. Testing Methods

4.4. Results

4.5. Robustness Test

5. Conclusions

5.1. Study Summary

5.2. Theoretical Implications

5.3. Practical Implications

5.4. Study Limitations and Future Study Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Mean | SD | Min. | Max. |
|---|---|---|---|---|
| Like | 601.99 | 1727.35 | 0 | 104,378 |
| Share | 343.84 | 1047.45 | 0 | 12,234 |
| Bookmarks | 175.96 | 316.66 | 0 | 8582 |
| Comment | 237.51 | 692.82 | 0 | 11,563 |
| Emotional arousal | 0.52 | 0.16 | 0.18 | 0.89 |
| Visual variation | 7.49 | 3.52 | 1 | 34.40 |
| Speech rate | 4.97 | 3.02 | 1.74 | 8.75 |
| Pitch | 341.72 | 62.77 | 124.52 | 878.92 |
| Loudness | 55.62 | 20.72 | 31.52 | 119.78 |
| Emotional valence | 0.59 | 0.14 | 0.18 | 0.78 |
| Number of followers | 532,981.32 | 9389.95 | 11,152 | 1,382,657 |
| Functional words ratio | 0.22 | 0.81 | 0.04 | 0.31 |
| Cognitive words ratio | 0.15 | 0.22 | 0.07 | 0.38 |
| Emotional words ratio | 0.13 | 0.12 | 0.04 | 0.35 |
| Social process words ratio | 0.17 | 0.14 | 0.08 | 0.25 |
| Readability | 0.71 | 0.25 | 0.24 | 0.92 |
| Music rhythm | 110.85 | 25.52 | 65.82 | 179.23 |
| Image quality | 0.87 | 0.15 | 0.63 | 0.92 |

| Variables | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 |
|---|---|---|---|---|---|
| | β | β | β | β | β |
| Emotional arousal | 0.24 *** | 0.21 *** | 0.22 *** | | |
| Emotional arousal² | –0.12 ** | –0.10 ** | –0.12 ** | | |
| Visual variation | 0.27 *** | 0.25 *** | 0.21 *** | | |
| Visual variation² | 0.002 | | | | |
| Emotional arousal × Visual variation | 0.18 *** | | | | |
| Matching degree | 0.22 *** | | | | |
| Control variables | | | | | |
| Number of influencer followers | 0.12 ** | 0.10 ** | 0.11 ** | 0.09 ** | 0.08 * |
| Influencer gender | –0.05 | –0.04 | –0.03 | –0.03 | –0.02 |
| Speech rate | 0.08 | 0.07 | 0.06 | 0.06 | 0.05 |
| Pitch | 0.03 | 0.02 | 0.03 | 0.02 | 0.01 |
| Loudness | 0.07 | 0.06 | 0.07 | 0.05 | 0.04 |
| Emotional valence | 0.1 | 0.09 | 0.07 | 0.08 | 0.07 |
| Functional words ratio | 0.04 | 0.03 | 0.02 | 0.02 | 0.02 |
| Cognitive words ratio | 0.02 | 0.01 | 0.05 | 0.01 | 0.01 |
| Emotional words ratio | 0.06 | 0.05 | 0.02 | 0.04 | 0.03 |
| Social process words ratio | 0.03 | 0.02 | 0.01 | 0.02 | 0.01 |
| Readability | 0.05 | 0.04 | 0.04 | 0.03 | 0.03 |
| Music rhythm | 0.09 | 0.08 | 0.06 | 0.07 | 0.06 |
| Image quality | 0.07 | 0.06 | 0.06 | 0.06 | 0.05 |
| R² | 0.18 ** | 0.21 ** | 0.21 ** | 0.25 ** | 0.24 ** |
| Max VIF | 1.6 | 3.5 | 2.7 | 3.5 | 3.4 |
| AIC | 2245.8 | 2132.6 | 2153.4 | 2024.2 | 2085.4 |
| N | 12,842 | 12,842 | 12,842 | 12,842 | 12,842 |

| | Robustness of Data | | | Robustness of the Dependent Variable | | |
|---|---|---|---|---|---|---|
| | Model 6 | Model 7 | Model 8 | Model 9 | Model 10 | Model 11 |
| Emotional arousal | 0.13 *** | 0.11 *** | 0.11 *** | 0.27 *** | 0.21 *** | 0.24 *** |
| Emotional arousal² | –0.08 *** | –0.05 *** | –0.07 *** | –0.14 ** | –0.11 ** | –0.13 ** |
| Visual variation | 0.12 *** | 0.21 *** | | | | |
| Emotional arousal × Visual variation | 0.07 *** | 0.15 ** | | | | |
| Matching degree | 0.15 *** | 0.25 *** | | | | |
| Controls | Y | Y | Y | Y | Y | Y |
| AIC | 5428.2 | 5246.8 | 5275.2 | 1782.6 | 1525.5 | 1522.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, Q.; Wang, Y.; Wang, Q.; Jiang, Y.; Li, J. Harmonizing Sight and Sound: The Impact of Auditory Emotional Arousal, Visual Variation, and Their Congruence on Consumer Engagement in Short Video Marketing. J. Theor. Appl. Electron. Commer. Res. 2025, 20, 69. https://doi.org/10.3390/jtaer20020069