Abstract

Evolutionary theory should be a fundamental guide for neuroscientists. This would seem a trivial statement, but I believe that taking it seriously is more complicated than it appears to be, as I argue in this article. Elsewhere, I proposed the notion of “bounded functionality” as a way to describe the constraints that should be considered when trying to understand the evolution of the brain. There are two bounded-functionality constraints that are essential to any evolution-minded approach to cognitive neuroscience. The first constraint, the bricoleur constraint, describes the evolutionary pressure for any adaptive solution to re-use any relevant resources available to the system before the selection situation appeared. The second constraint, the satisficing constraint, describes the fact that a trait only needs to behave more advantageously than its competitors in order to be selected. In this paper I describe how bounded functionality can inform an evolution-minded approach to cognitive neuroscience. In order to do so, I resort to Nikolaas Tinbergen’s four questions about how to understand behavior, namely: function, causation, development and evolution. The bottom line of assuming Tinbergen’s questions is that any approach to cognitive neuroscience is intrinsically tentative, slow, and messy.

1. Introduction

In the same way as Theodosius Dobzhansky said that “nothing in biology makes sense except in the light of evolution” [1], evolutionary theory should always be present in the practice of cognitive neuroscientists, and neuroscientists in general. This would seem a trivial statement, but I believe that taking it seriously is more complicated than it appears to be, as I argue here.

Let me begin with the simple evolutionary assumption that we must treat the brain as an adaptation. A generally accepted view in evolutionary thought is that adaptations are innovations that make a difference between alternative biological designs embodied in different individuals. Such designs are selected among available variations based on how well they perform in a given environment. An efficient adaptation increases survival and/or reproduction (e.g., by detecting predators, improving foraging, winning resource competition, securing parental care, gaining dominance and status, etc.) better than its alternatives. Therefore, the phenotype of an organism should be understood as a collection of adaptations that have been added and maintained (i.e., copied over generations) in the design features of a given species, because these adaptations had the consequence of solving problems that promoted, in some way or other, survival and reproduction.

Unfortunately, the view I have just presented about adaptations is an idealization. There is no widely accepted set of criteria to decide whether some trait is an adaptation or not. In principle, one assumes that a trait has an effect, and that such an effect has a selective advantage, which is what makes the trait an adaptation. Traits normally have numerous effects, and it is often very difficult to discern how many of them have had some impact on the fitness of the organism and on the prevalence of the trait. Some traits are indeed adaptations, that is, traits that have appeared over evolutionary time and have been selected because they contributed to the survival and reproduction of individuals. There are also exaptations, i.e., traits that were selected for some adaptive function and later acquired some other adaptive function (such as feathers that were selected for temperature regulation and then maintained for flight), and spandrels, i.e., traits that do not have any adaptive function, being a byproduct of the evolution of some other trait rather than a direct product of adaptive selection [2]. In short, not all traits serve an adaptive function, nor does the way a trait is used now explain its design or emergence. Hence, it is risky to assume that any trait we identify is an adaptation. The default assumption must be that it is not; before treating a trait as an adaptation, we need to provide evidence for it, and such evidence is not easy to obtain, even less so when we deal with the brain.

2. The Complexities in Characterizing the Evolution of Brain Function

The way to approach the evolutionary conundrum of identifying adaptations is to identify those features that have some adaptive effect, and then suggest the possible traits that could be responsible for them. The brain as a cognitive adaptation presents, nevertheless, certain challenges for the researcher to identify traits [3]. In trying to explain the brain as a cognitive adaptation, one can identify an adaptive behavior that is supposed to have been produced, but the problem is to identify the actual trait itself, because the cognitive component that is responsible for producing the adaptive behavior is not as self-evident as, say, an eye, a liver, or a wing [4].

One illustrative example is the hand. The hand does not produce one but many effects, some of which have surely contributed to the selective advantage of humans. Should we treat the hand as a single adaptation or as many? The answer is not easy. In addition, it will probably be very difficult to identify which fingers have been necessary and which irrelevant to carry out the hand’s adaptive effects. Finally, some other parts of the body might have also contributed to such effects, and it will also be very difficult to distinguish the differential contributions of the hand-body interactions in the fitness of humans. The brain is in this sense very similar to the case of the hand. The different components of the brain are highly integrated in such a way that it will be very difficult to distinguish discrete and specific functions.

A second important problem for an evolution-minded approach to the brain as a cognitive organ is that the evolutionary treatment of behaviors is still in its infancy. At the moment, there are two different ways in which behavior is recognized as an evolutionary factor. On the one hand, behavior can lead evolution. For example, the first cetaceans fed in the water even before having body specializations for an aquatic lifestyle. However, once the behavior produced selective advantages, it began to induce modifications of their morphology. In contrast, the effects of behavior can sometimes delay, instead of drive, adaptations. When populations of Homo sapiens emerged from Africa and arrived at a very cold Europe, they used furs from animals to protect themselves from the cold. This behavior constrained human pelt evolution. In short, behavioral innovations can inhibit as well as drive evolution.

There is another way in which behavior is recognized as an evolutionary process, which is known as the Baldwin Effect [5]. This effect consists of the fact that an innovation initially driven by a learned behavior can later be integrated during development, saving the species the time and cost of learning. The language faculty would be an example of such a process. A widely held hypothesis is that an ancestor species of Homo sapiens could have created some sort of proto-language as a cultural product. Such a proto-language could have afforded the species such a strong adaptive advantage that any genetic mutation leading to a neural innovation with increased linguistic capacity, reducing the time and the resources needed to manifest the faculty, would have been rapidly selected.

Development introduces an additional complication in the evolutionary approach to the brain. A developmental trait implies that the selective advantage could be located at any moment of the maturational process. In this sense, the adaptation could be a trait in the initial stage of development because it could potentially be developed into different phenotypes, or it could be only one specific developmental stage in which the trait embodies a phenotype that is adaptive but only during that specific developmental stage.

Finally, there is a question of time. Evolution is an extremely slow process. The shaping of the brain occurs over very long periods. Brains are not designed to solve current challenges, but instead were shaped in numerous and very different environments to solve problems that have long since disappeared. There are many examples of evolutionary vestiges: our difficulty in learning to fear modern threats, such as cars, compared with our near effortless learning to fear more ancient threats, such as snakes and spiders; or our preference for sugar and fat, which was once adaptive and is now maladaptive.

3. Current Brain-Cognition Correlations Are Useful but Insufficient to Understand Brain Function

In a very simplified way, cognitive neuroscience can be said to study the relation between brain function and cognition. Generally, researchers assume that current correlations between brain and cognition provide the necessary and sufficient evidence to understand how the brain works and relates to cognition. The main point of this paper is to emphasize that such evidence might be necessary, but it is certainly not sufficient; evolution is also crucially necessary. A specific brain trait is the product of an aggregation of past traits that have been selected in each species’ evolutionary history; in short, a brain trait is a composite of adaptations that appeared and were maintained until now [3]. Each trait was selected in a particular environment due to its adaptedness, but its present manifestation is the result of continuous modifications responding to subsequent environments in which it might have helped to address problems different from the ones for which the adaptation was originally selected. Therefore, the way a trait works carries with it the evolutionary history of its modifications, rather than being the optimal design for the problems for which it is currently used.

A way to understand how the evolutionary history of the brain is relevant to understanding its current function is via the notion of “bounded functionality” [6], which is based on the notion of bounded rationality [7]. Bounded rationality was developed by Herbert Simon to characterize the dynamics of economic agents when dealing with insoluble problems. The notion of bounded functionality borrows the principles of bounded rationality to describe the constraints that should be taken into account when trying to understand the evolution of the brain.

There are two bounded-functionality constraints that are essential to any evolution-minded approach to the brain as a cognitive adaptation. The first constraint, the bricoleur constraint, describes the evolutionary pressure for any adaptive solution to re-use any relevant resources available to the system before the selection situation appeared. The second constraint, which I called the satisficing constraint, describes the fact that a trait only needs to behave more advantageously than its competitors in order to be selected [6]. In other words, to be selected, an adaptation need not show an optimal (in an engineering sense) behavior to solve the adaptive problem. Let me explain why these constraints are relevant to understanding the importance of evolutionary history in characterizing current brain function.

4. The Bricoleur Constraint

The bricoleur constraint could be summarized by the idea that evolution favors adaptations based on previous materials and processes. The term bricoleur is taken from François Jacob’s metaphor [8] on how evolution tinkers with available forms and functions. The fact is that evolution does not design new organisms; instead, new organisms emerge out of the genetic variation inherent in the gene pool. It is a well-worn truism that an engineer would not design us by starting from where nature did, for example by designing an animal to walk on two legs by tinkering with the blueprint meant for building one with four legs. Indeed, natural selection provides no mechanism for advance planning. Selection can only tinker with the available genetic variation, rather than “having a purpose”. Thus, whatever the adaptive requirements of the organism, the way evolution shapes brains must be constrained by factors such as the “materials” available to solve its adaptive problems. Note that this constraint does not completely prevent new traits from appearing in evolution. It just states the fact that many adaptations must be understood as the product of re-using and evolving existing traits for a different purpose. I will now present an illustrative example of the bricoleur constraint.

Some years ago, M.A Anderson [9] presented a hypothesis, the massive redeployment hypothesis (MRH), about the cortical organization of the brain that exemplifies the bricoleur constraint. MRH is a theory about the functional architecture of the human brain that uses the analogy of component reuse in software engineering. Anderson suggested that the brain is a set of exaptations originally selected to serve some specific purpose, but later used for new purposes and combined to support new capacities, without disrupting their participation in existing functions. Anderson used MRH to make some specific empirical predictions regarding the resulting functional organization of the brain.

Firstly, he presented evidence regarding how every brain region processes various mental faculties in different functional domains (for example, Broca’s area subserves both language and arithmetic operations). Secondly, he suggested that older phylogenetic areas are more likely to participate in many different functions, provided that they have been re-used more. In support of this idea, he presented evidence of a strong association between the phylogenetic age of different brain regions and the use that diverse mental functions make of it. Finally, Anderson provided evidence that there is an association between the phylogenetic age of a cognitive function and the degree of brain localization. Specifically, more recent functions seem to be using more, and more dispersed, brain regions than evolutionarily older functions, because the more recent a function is, the more likely it is that it will not have localized and specialized brain regions, and therefore exapts neural circuits in different parts of the brain that can be useful.

The MRH approach is a very good example of how the bricoleur constraint has shaped the brain during evolution. However, mainstream neuroscience is far from addressing the architecture of the brain with anything similar to MRH. For one thing, neuroscience still strongly supports the idea of a modular brain, with specialized areas underlying nearly all mental processes.

5. The Satisficing Constraint

The satisficing constraint could be summarized by the idea that evolution favors adaptations that maximize the cost/benefit tradeoff of traits in a selection situation. In his original proposal for cognitive systems, Herbert Simon [7] indicated that human intelligent solutions are not optimal but satisficing. By “satisficing” he meant that problem-solving and decision-making strategies are undertaken until a viable alternative is found that satisfies the goal criterion (the aspiration level) established beforehand, and that alternative is then selected. Simon originally used an analogy with chess to illustrate this aspect of satisficing. Chess players are constrained in their choice of moves by their limited ability to conceive all possible combinations of their own and their opponent’s moves. The player necessarily generates and examines a small number of possible moves, making a choice as soon as one is discovered that is satisfactory. The choice is satisficing because information processing constraints prevent the player from making the optimal choice (which, in a chess game, could potentially involve processing some 10^120 combinations).
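The difference between a satisficing and an optimizing search can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the candidate moves, their values, and the aspiration level are hypothetical, and the function names `satisfice` and `optimize` are mine, not Simon's.

```python
from typing import Callable, Iterable, Optional, Tuple


def satisfice(options: Iterable[str],
              evaluate: Callable[[str], float],
              aspiration: float) -> Tuple[Optional[str], int]:
    """Return the first option that meets the aspiration level,
    together with the number of options examined."""
    examined = 0
    for option in options:
        examined += 1
        if evaluate(option) >= aspiration:
            return option, examined  # stop searching: good enough
    return None, examined  # nothing reached the aspiration level


def optimize(options: Iterable[str],
             evaluate: Callable[[str], float]) -> Tuple[str, int]:
    """Return the best option, which requires examining all of them."""
    candidates = list(options)
    return max(candidates, key=evaluate), len(candidates)


# Hypothetical values for four candidate chess moves (assumption).
move_values = {"a": 0.2, "b": 0.7, "c": 0.9, "d": 0.4}

good_enough, cost_satisficing = satisfice(move_values, move_values.get, aspiration=0.6)
best, cost_optimizing = optimize(move_values, move_values.get)
```

In this toy case the satisficer settles on move `b` after examining two options, whereas the optimizer finds move `c` only after examining all four. With a search space on the order of the one in chess, only the first strategy is feasible.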

In evolutionary theory, satisficing can be understood as a viable alternative that satisfies the adaptive goal, i.e., the solution to an adaptive problem lies where the adaptive value is maximized [10]. On this account, adaptive solutions only require meeting some minimum requirement, so as to allow a given organism to survive and reproduce more advantageously than its competitors. Thus, there is no need for an adaptation to be optimally designed, in engineering terms, to solve the problem concerned. The satisficing constraint invites us to detach engineering constraints from adaptation, by characterizing solutions that are merely “selectively efficient”. Biological adaptations are “good enough” (better than the alternatives), not “the best possible” under the circumstances. Let me present an example of the satisficing constraint.

The area of human decision-making is one of the most explored areas in relation to the idea of satisficing solutions. In everyday decisions, rationality has usually been seen to be bounded by incomplete knowledge of the relevant data and constraints of resources or time. Evidence has shown that humans address these limitations with the use of heuristic strategies that simplify decision-making problems by prioritizing some sources of information while ignoring others.

The notion of cognitive bias is an example of this. Cognitive biases have been defined as systematic patterns of interpretation of stimuli or situations that deviate from what an objective or rational analysis would deduce. Examples of cognitive biases in humans are the tendency to interpret information in a way that confirms our own preconceptions, the propensity to favor people like ourselves, or overconfidence in our own capabilities to make the correct decision.

Even if the bulk of research on cognitive biases has been carried out on humans, it seems that they also exist in most animals. The evolutionary rationale behind the usefulness of cognitive biases seems to lie in situations where a decision would require more information, time, or resources than those available. Since the optimal decision would not be possible to achieve under such circumstances, evolution has come up with adaptive patterns of analysis that provide a decision shortcut.

Obviously, each species has different types of biases that work better in its environment although, surprisingly, some biases are shared by species as different as flies and humans, such as those known as optimistic and pessimistic biases. Bateson [11] defines optimism bias as a behavioral decision that is consistent with the individual having either a higher expectation of reward, or a lower expectation of punishment, than the same individual in a different state. A pessimism bias would be the inverse of an optimism bias. The optimism/pessimism biases are applied by animals in, for example, situations of stimulus ambiguity, which require a certain degree of conjecturing in order to make a decision. The optimism bias would remove the ambiguity by favoring a positive categorization of the stimuli, whereas the pessimism bias would do the opposite.

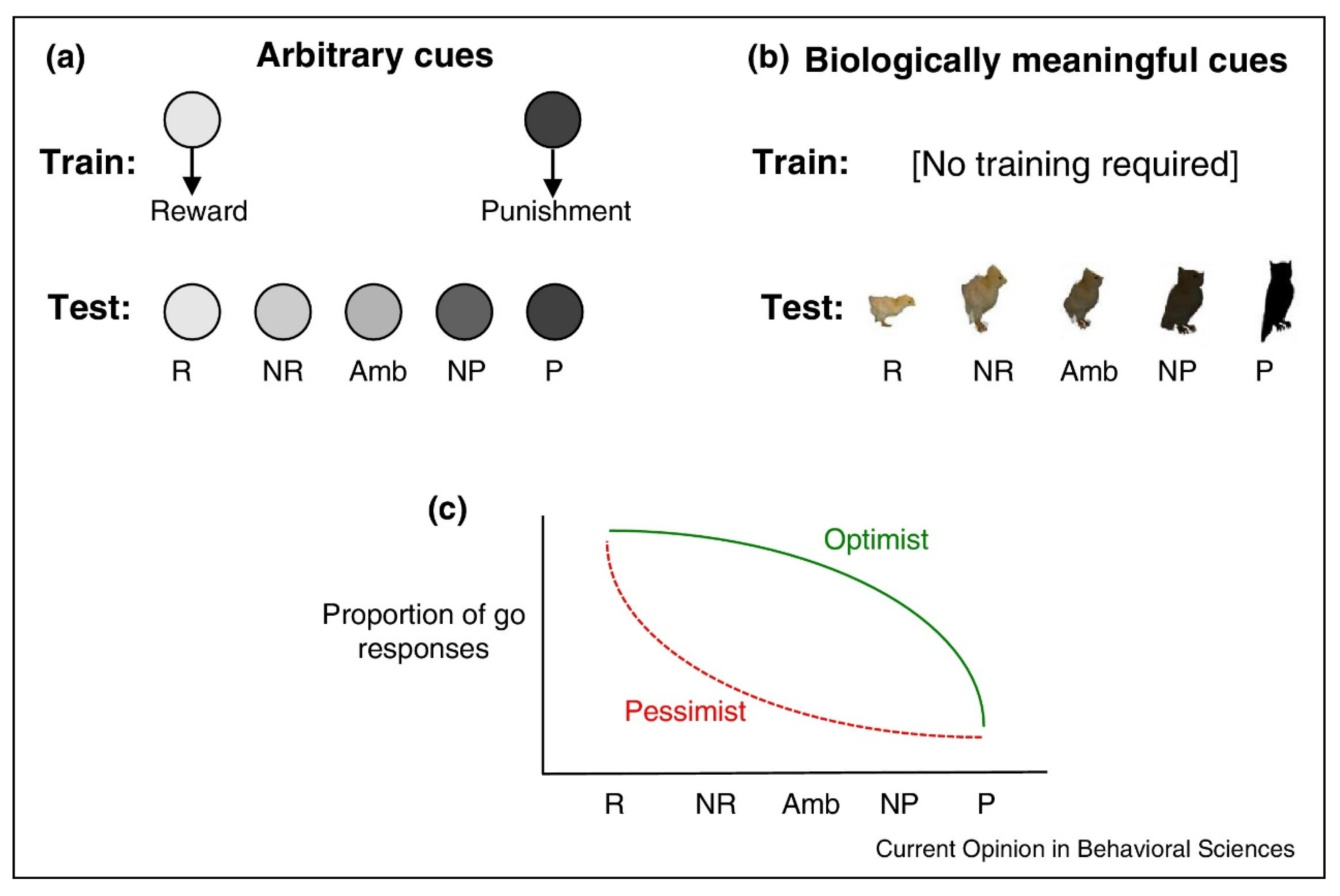

Studies about optimism and pessimism biases in animals have used what are known as judgment bias tasks, that is, tasks probing the response to ambiguous stimuli. In the most common form, individuals are required to respond to ambiguous cues that lie on a dimensional continuum between two clear-cut cues. The two opposing clear-cut cues usually differ in the valence of an associated outcome, one being positive and the other negative, normally with some biological meaning (such as one being the prey and the other the predator), so that they do not require prior training. In other paradigms, the cues require previous learning. In either case, the experimenters create a set of ambiguous stimuli along a dimensional continuum (modifying their form, color, or other features) between the positive and negative cues to which the subjects must respond. The smooth variation between the cues allows the measurement of optimistic and pessimistic decisions in response to ambiguous stimuli.

Figure 1 presents a summary of the experimental protocols used in standard judgment bias tasks with animals. In paradigms like (a), the task is based on an arbitrary association between the cues used and the associated outcomes. In such paradigms, subjects must learn the association between the cues and the reward (R) or punishment (P) involved (NR standing for near reward, Amb for maximally ambiguous and NP for near punishment). In paradigms like (b), the cues have biological meaning to the individuals. (c) shows how the behavioral responses vary (in this case a “go” behavior) depending on which bias (optimistic or pessimistic) is applied.

Figure 1.

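Methodology used in judgment bias tasks (from [11]). (a) Paradigms based on an arbitrary, learned association between cues and outcomes; (b) paradigms in which the cues have biological meaning to the individuals; (c) variation of the “go” response depending on whether an optimistic or a pessimistic bias is applied.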

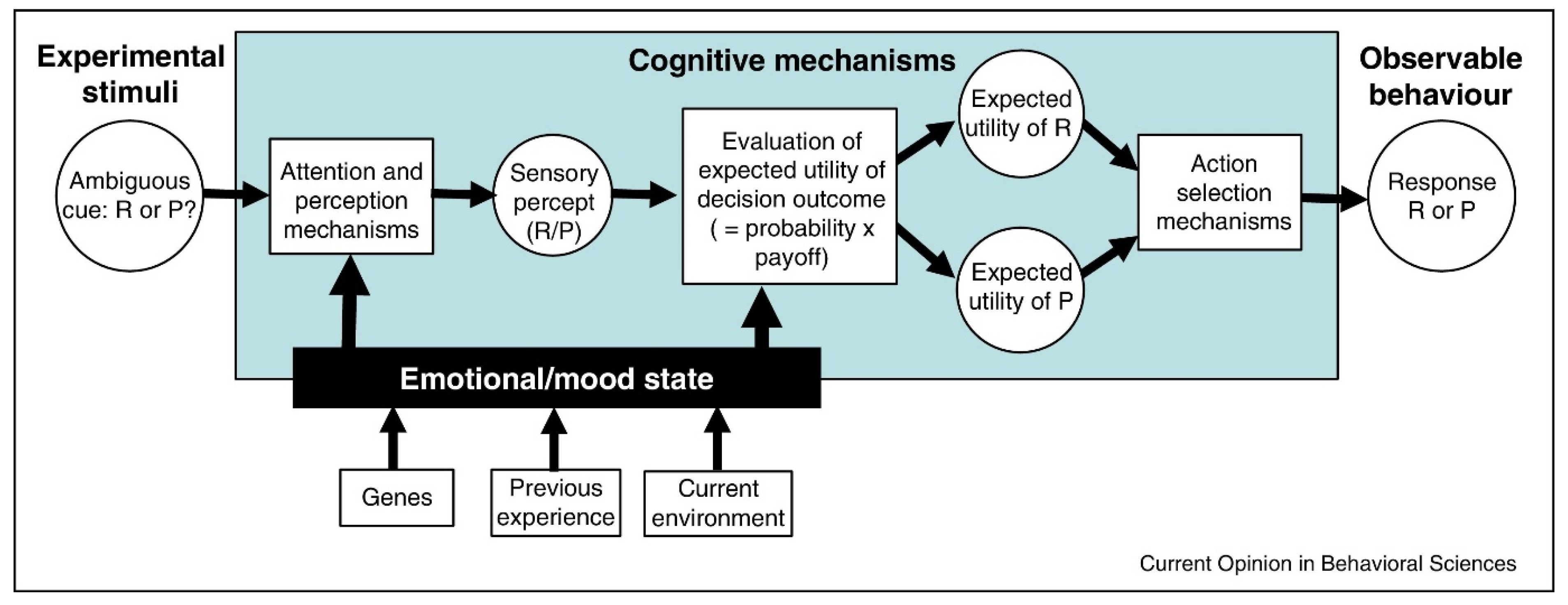

The different factors that influence an optimistic or pessimistic bias are described in Figure 2. A specific behavioral output can be understood as the product of different cognitive and emotional mechanisms that participate in decision-making processes.

Figure 2.

The cognitive mechanisms involved when an individual responds to an ambiguous cue in a judgment bias task. Emotional states or moods may modulate attention to cues (from [11]).

Theoretical and empirical studies have tried to assess and quantify the adaptive value of cognitive biases. These studies try to assess how animals behave when faced with situations involving risk and uncertainty. The majority of theoretical studies assess judgment bias tasks using an area of Bayesian decision theory known as signal detection theory (SDT). SDT is based on the assumption that an optimal decision depends, firstly, on the strength of the evidence provided by the cue as being one way or another; secondly, on the animal’s estimates of the prior probabilities that reward and punishment will occur and, finally, on the payoffs for the animal for each possible outcome. Under this model, a pessimist corresponds to the individual with a tendency towards the no-go response, whereas an optimist tends towards the go response. These theoretical models have been backed by empirical studies using judgement bias paradigms [11].
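The three ingredients of the SDT account (strength of evidence, prior probabilities, and payoffs) can be combined in a minimal Python sketch. It rests on assumptions that are mine and not taken from [11]: the cue is a single number whose likelihood under reward and under punishment is Gaussian, the no-go response has a payoff of zero, and optimism and pessimism are modeled as a higher or lower prior probability of reward. All parameter values are hypothetical.

```python
import math


def normal_pdf(x: float, mean: float, sd: float) -> float:
    """Density of a normal distribution at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def decide(cue: float,
           p_reward: float,
           gain: float = 1.0,       # payoff of "go" when the outcome is reward
           loss: float = 1.0,       # cost of "go" when the outcome is punishment
           mean_reward: float = 1.0,
           mean_punish: float = -1.0,
           sd: float = 1.0) -> str:
    """Return "go" if the expected payoff of going exceeds that of not going (0)."""
    # 1. Strength of the evidence provided by the cue.
    like_reward = normal_pdf(cue, mean_reward, sd)
    like_punish = normal_pdf(cue, mean_punish, sd)
    # 2. Combine the evidence with the prior (Bayes' rule).
    posterior_reward = (p_reward * like_reward) / (
        p_reward * like_reward + (1.0 - p_reward) * like_punish)
    # 3. Weigh the posterior by the payoffs of each outcome.
    expected_go = posterior_reward * gain - (1.0 - posterior_reward) * loss
    return "go" if expected_go > 0.0 else "no-go"


# Maximally ambiguous cue, halfway between the two clear-cut cues.
optimist_choice = decide(cue=0.0, p_reward=0.7)
pessimist_choice = decide(cue=0.0, p_reward=0.3)
```

With these values the optimist goes and the pessimist does not when the cue is maximally ambiguous, whereas both agents give the same response to the clear-cut cues (`cue=1.0` or `cue=-1.0`). The bias therefore only decides the outcome where the evidence is weak, which is the situation that judgment bias tasks are designed to probe.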

My take is that cognitive biases in general, and the optimism/pessimism ones in particular, are excellent examples of satisficing solutions (good enough solutions among those available) to problems that would require more time, resources or information to obtain an optimal outcome (the best solution under the circumstances). Cognitive biases perform well enough (better than the available alternatives) to have been selected and maintained in many species. The optimism bias is useful under certain circumstances, while the pessimism bias is useful under others. On balance, even if certain optimistic or pessimistic decisions fail, the species benefits from the fact that the global outcome of gains and losses when applying both biases is better than when applying only one of them or none at all.

Generally, the importance of evolution in psychology and neuroscience is widely recognized by researchers. However, regardless of its popularity, the fact is that evolutionary thought is seldom applied in mainstream research in psychology and neuroscience. There are different reasons for this but, in my opinion, the most important one is that evolution-minded neuroscientific research is tentative, slow, and messy. These are the worst enemies in academia, where the pressure for productivity pushes researchers to publish as much, as clear-cut and as fast as possible. Yet, evolution is an unavoidable constraint for understanding the brain. If we do not assume that it is necessary to address the brain as a product of evolution, we will waste our time. Neuroscience can only be tentative, slow, and messy, because the original adaptive contribution of specific mental components to the survival and reproduction of the species is hidden in our evolutionary history. Every mental component has a particular evolutionary history of its own, grounded in a selective situation that, for the most part, has long passed. Furthermore, every mental component is also the product of previous versions that have also had an evolution of their own. Hence its slow, tentative, and messy nature. Be that as it may, and however difficult it might seem, the study of the brain can only be addressed via evolutionary means that consider all the complexities of any sound evolutionary explanation.

6. There Are Methods Available to Characterize the Evolutionary History of Brain Function with Current Data

Even if the task to identify the original adaptive value of a particular neural trait is extremely difficult, it is nevertheless possible. There are different methods available, including comparative phylogenetics, evolutionary morphology, behavioral and evolutionary ecology, molecular and evolutionary genomics [12,13,14,15,16], that can be used to provide evidence of the origins of a neural or behavioral trait and its evolution. These new approaches open the possibility for a better understanding of the causal links between brain and behavior, as well as brain and cognitive function.

Let me present some examples that provide evidence of the evolution of a behavioral trait from an adaptation to an exaptation. The first example is a study [17] that combined genomic, morphological and behavioral methods to characterize autotomy, that is, the sacrifice of body parts that some animals show to escape predation. Autotomy has independently evolved multiple times in many different species. The behavior has usually been considered an antipredatory trait, but there are additional survival benefits associated with autotomy, such as reducing the cost of injury and escaping nonpredatory entrapment.

The authors studied the evolution of autotomy in a group of insects, the leaf-footed bugs, by focusing on the speed at which autotomy is exerted. This factor differs for each possible adaptive advantage of autotomy. Antipredatory behavior must be exerted quickly (i.e., in the range of seconds), otherwise it would not serve its purpose, while reducing the cost of injury or escaping nonpredatory entrapment can be carried out more slowly. The authors hypothesized that if they assessed autotomy speed along the phylogenetic tree of the leaf-footed bug taxa, they could identify the original adaptive value of the behavior, as well as its evolutionary history.

Emberts and colleagues carried out their investigation by collecting 62 leaf-footed bug species from the wild. These species represent 2% of all extant leaf-footed bug taxa. They then performed a phylogenetic reconstruction using the maximum likelihood method, which allowed them to build a phylogenetic tree of the leaf-footed bug taxa. In addition, they reconstructed the evolution of the behavior by conducting a series of ancestral state reconstructions.

The analyses showed that the leaf-footed bug ancestors autotomized their hind limbs slowly. Ancestral state reconstructions estimated that 42.7% of the ancestral leaf-footed bug population took more than an hour to autotomize their hind limbs. In addition, they found that from these slow ancestral rates, fast autotomy (less than two minutes) arose multiple times in the evolution of the taxa. These results indicate that sacrificing a limb was a slow behavior at the beginning. At this rate, the authors conclude that the leaf-footed bug ancestors used slow autotomy to reduce the cost of injury or to escape nonpredatory entrapment but could not use autotomy to escape predation. Moreover, they suggest that autotomy to escape predation is a coopted benefit (i.e., exaptation), revealing one way that sacrificing a limb to escape predation may arise.
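The logic of an ancestral state reconstruction can be illustrated with a toy example in Python. Note that this sketch swaps in a simpler technique than the one used in the study: it applies Fitch parsimony to a discrete slow/fast character, whereas the authors relied on likelihood-based methods. The tree, the species names, and the tip states are hypothetical and are not data from [17].

```python
from typing import Dict, Set, Tuple, Union

# A tree is either a tip name or a pair of subtrees.
Tree = Union[str, Tuple["Tree", "Tree"]]


def fitch(tree: Tree, tip_states: Dict[str, str]) -> Tuple[Set[str], int]:
    """Bottom-up pass of Fitch parsimony.

    Returns the set of most parsimonious states at the root of `tree`
    and the minimum number of state changes required below it.
    """
    if isinstance(tree, str):  # a tip: its state is observed
        return {tip_states[tree]}, 0
    left, right = tree
    left_set, left_changes = fitch(left, tip_states)
    right_set, right_changes = fitch(right, tip_states)
    shared = left_set & right_set
    if shared:  # the two children agree: no change is needed here
        return shared, left_changes + right_changes
    # The children disagree: keep both candidates and count one change.
    return left_set | right_set, left_changes + right_changes + 1


# Hypothetical tree and autotomy speeds (assumption, for illustration only).
toy_tree: Tree = ((("sp_A", "sp_B"), "sp_C"), (("sp_D", "sp_E"), "sp_F"))
toy_states = {"sp_A": "fast", "sp_B": "slow", "sp_C": "slow",
              "sp_D": "slow", "sp_E": "fast", "sp_F": "slow"}

root_states, n_changes = fitch(toy_tree, toy_states)
```

In this toy tree the reconstructed root state is `slow` and two changes are required, one in each major clade. That is the qualitative pattern described above: a slow ancestor from which fast autotomy arises more than once.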

Another strategy to identify possible exaptations is via the study of the evolution of morphological features and developmental constraints, and one good concrete example is the evolution of body plans. Body plans illustrate how selective challenges constrain genetic programs to provide effective morphological changes. Among other things, body plans must be flexible enough to provide adaptive behavioral innovations. Therefore, understanding the evolution of a body plan can be a source of evolutionary evidence for exaptations. Let me present an example.

Lires and colleagues [18] studied the evolution of the body plan in the anuran amphibians, that is, in frogs and toads. The anuran set of postcranial features has been considered an adaptation to jumping locomotion since its evolutionary origin, although the authors note that this interpretation lacks evolutionary evidence. The fact is that the anuran body plan and its locomotor repertoire differ from those of ancestral tetrapods. These ancestors had a locomotor pattern similar to that seen today in salamanders, which move with lateral bending of the spine and asymmetrical movements of their short limbs.

The authors explored the transition from the tetrapod body plan to the anuran one, by assessing the locomotor mode of the salientian Triadobatrachus massinoti, which is one of the few examples of the transition from ancient tetrapods to anuran amphibians. The extinct taxon T. massinoti shows a set of morphological features that have been considered an intermediate stage in the evolution of the anuran body plan.

Lires et al. compared morphometric traits of T. massinoti with locomotion in amphibians, using multivariate statistics and comparative phylogenetic methods. The authors used the limb proportions of extant amphibians to infer the locomotor abilities in T. massinoti. The results show that the limb proportions of T. massinoti are more like those of salamanders than of frogs, indicating that the locomotor skill repertoire of this taxon is similar to the salamander-like asynchronous lateral undulatory movements. Furthermore, the findings suggest that T. massinoti would have been unable to leap or jump like living frogs. Therefore, the authors advance the hypothesis that the postcranial features that T. massinoti shares with frogs, like iliac morphology, would not have originally been linked to a saltatory locomotion and, thus, they might have been coopted as exaptations for jumping in anurans.

A final example illustrates the advances in evolutionary analyses based on genomic and morphological methods, which are being used to understand complex brain functions, including high level cognitive functions. For instance, comparative transcriptomics analysis is currently being applied to characterize the role of evolutionary genomic innovations in the development of neural modifications that have cognitive implications.

In one study [19], the authors showed how the rapid evolutionary cortical expansion of the default mode network (DMN) is associated with high expression of human-accelerated (HAR) genes in humans in comparison with chimpanzees and macaques. The DMN is a multimodal associative cortical area, which has been associated with a cortical pattern in today’s human population that has higher variability than in other primates. Such a feature is thought to correlate with the high inter-subject variation in cognitive abilities among humans. Accordingly, the finding of high expression of HAR genes in these brain areas suggests that genes linked to hominization may have a role in the process of developing higher cognitive abilities.

The authors also found that the HAR genes highly expressed in the DMN contain genetic variants that have been related to human intellectual ability and sociability in studies correlating IQ and DMN morphology. This supports the idea that the expansion of multimodal associative areas has been an important driving force of human brain evolution.

Interestingly, the authors suggest that this evolutionary innovation would have had the adverse effect of an increased risk of brain dysfunction, in terms of psychiatric conditions. The authors suggest that the pattern of cortical expression of some HAR genes shows overlap with genes involved in mental disorders, particularly in schizophrenia and autism, which, by the way, present disturbed DMN functional connectivity.

The bottom line of the previous studies is that there are already a number of methods available that allow researchers to study the evolutionary history of brain morphology and functioning, including high-level cognitive abilities. Is it nevertheless possible to falsify any of the hypotheses suggested in these types of studies? The answer is yes. Hypotheses about an exaptation can be falsified in the same way that any other evolutionary hypothesis can be falsified. Take the case of the suggestion that the iliac morphology of T. massinoti cannot be linked to saltatory locomotion. To falsify such a hypothesis, you only need to provide a biomechanical model of such an iliac morphology that shows that jumping is its natural locomotion mode. The same applies to any other hypothesis about exaptations; you only need to provide evidence that contradicts the evidence supporting the exaptation hypothesis.

7. The Timely Tinbergen’s Questions

I will now present how the previous methodologies can be implemented in an evolutionary-minded approach to address complex cognitive questions. For illustrative purposes, I will resort to Niko Tinbergen’s famous four questions. In 1963, Tinbergen [21] suggested that understanding behavior requires answering four different questions: a) function (understood as the adaptive value of the behavior), namely, how the behavior impacts on the animal’s chances of survival and reproduction; b) causation (proximate mechanisms), i.e., what molecular, physiological, neuro-ethological, cognitive and social mechanisms account for the behavior; c) development, that is, how the behavior changes with age, and what experiences and interactions with the environment are necessary for the behavior to be produced; and d) evolution, i.e., how the behavior compares with similar behaviors in related species, and how it might have arisen through the process of phylogeny.

In my opinion, these questions are still the best piece of advice that any researcher in neuroscience could follow. Nevertheless, the last 60 years of research show a strong bias towards answering the causation question and, with less emphasis, the developmental one, leaving the evolutionary and functional questions at a testimonial level. It is true that there is a trend towards so-called evolutionary psychology, but most (although not all) evolutionary psychology does not really honor its evolutionary label.

The bottom line of assuming Tinbergen’s advice is that it is not enough to address the evolutionary and the functional questions in parallel with the other questions; rather, it makes no sense to try to answer any one of the questions without considering the others. Any approach to the brain should be multidisciplinary, integrating knowledge from different fields of research to answer such questions.

I will now present some examples that I find particularly illustrative of how to address each one of these questions with an eye on the other questions. There is no special weight to the order of my presentation; the idea is that an answer to any of these questions should consider the other questions. I will place more emphasis, though, on the evolutionary data because it has been more neglected in neuroscience.

8. Function

By function of a trait, Tinbergen pointed to the specific contribution of the trait to the fitness of the individual, that is, its contribution to survival and reproductive success. It is important to note that function can refer both to the current adaptive value and to the original adaptive value that served to select the trait in the first place. Evolutionary theory now accepts that many (most?) traits have current adaptive values different from those that were originally selected, namely, they are exaptations rather than adaptations. In general, we can only try to determine, by experimental methods, their current function, because we lack all the necessary environmental elements of the original situation to assess the original adaptive value of the trait. Be that as it may, any approach can be suitable if it makes explicit which function, current or original, it is trying to assess. Let me present an example of an evolution-minded approach to answering the current functional question.

One standard approach that has been thoroughly applied by evolutionary biologists, and which can also be applied in neuroscience, is to build mathematical models of how a trait maximizes fitness in a particular environment. The objective of a fitness-maximization model is to provide a measurable characterization of the trade-offs between the costs and the benefits of a set of alternatives. Such models take into account: a) the nature of the adaptive problem itself, b) the analysis of the system’s resources, c) the environment and the interaction with competitors, as well as d) the way in which all these elements interact. Applied to the study of the brain, a fitness-maximization model can help assess different behavioral alternatives of how to solve a given adaptive problem in a specific environment.
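To make the cost-benefit logic of such models concrete, the following is a minimal sketch in Python. It is not taken from any of the studies cited here: the single "effort" dimension, the saturating benefit curve, the linear cost, and all parameter values are hypothetical assumptions chosen only for illustration.

```python
import math

def net_fitness(effort, max_benefit=10.0, saturation=2.0, cost_per_unit=1.5):
    """Net payoff of one behavioral alternative (all parameters hypothetical).

    The benefit saturates with effort (diminishing returns), while the cost
    grows linearly, so the net payoff has a single interior maximum.
    """
    benefit = max_benefit * (1.0 - math.exp(-effort / saturation))
    cost = cost_per_unit * effort
    return benefit - cost

def best_alternative(alternatives, **environment):
    """Pick the alternative with the highest net payoff in a given environment."""
    return max(alternatives, key=lambda e: net_fitness(e, **environment))

# A discrete set of behavioral alternatives: effort levels 0.0, 0.1, ..., 10.0.
efforts = [i * 0.1 for i in range(101)]

# The same trait evaluated in two hypothetical environments.
optimum = best_alternative(efforts)
optimum_harsh = best_alternative(efforts, cost_per_unit=3.0)

print(f"mild environment:  best effort = {optimum:.1f}")
print(f"harsh environment: best effort = {optimum_harsh:.1f}")
```

Under these assumptions the predicted optimum shifts downward when costs rise, which is the kind of measurable prediction that can then be compared with field observations.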

There have been many attempts to provide fitness-maximization models of human behavior. However, models do not always fit observations in the field. On average, fitness-maximization models only explain a small part of the behavioral variation in populations. There are, nevertheless, recent approaches that balance the insights that a fitness-maximization model offers with the complexity and variability of human behavior. One of these approaches, known as “error management theory” (EMT) [20], which I would call a satisficing approach, characterizes decision-making not as an optimal system, but as a system that assumes a degree of error to ensure an efficient adaptation to the environment. EMT acknowledges the role of decision-making biases in the fitness and reproductive success of individuals. In the years since its introduction, EMT has produced studies in many different domains.
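The core intuition behind error management can be sketched with a small expected-cost calculation. The example below is only an illustration in Python under stated assumptions: a single binary decision (respond to a possible threat or ignore it), hypothetical costs for the two kinds of error, and a hypothetical set of cues. It is not the formal model of [20].

```python
def cost_minimizing_threshold(cost_false_alarm, cost_miss):
    """Threat probability above which responding has the lower expected cost."""
    return cost_false_alarm / (cost_false_alarm + cost_miss)

def evaluate(threshold, threat_probabilities, cost_false_alarm, cost_miss):
    """Return (expected number of errors, expected total cost) for the policy
    'respond whenever the estimated threat probability reaches the threshold'."""
    errors = 0.0
    cost = 0.0
    for p in threat_probabilities:
        if p >= threshold:
            # Responding is an error only when there is no threat.
            errors += 1.0 - p
            cost += (1.0 - p) * cost_false_alarm
        else:
            # Ignoring is an error only when the threat is real.
            errors += p
            cost += p * cost_miss
    return errors, cost

# Hypothetical cues with threat probabilities 0.00, 0.05, ..., 1.00.
cues = [i / 20 for i in range(21)]
# Hypothetical asymmetry: a missed threat costs 20 times a false alarm.
COST_FALSE_ALARM, COST_MISS = 1.0, 20.0

unbiased_errors, unbiased_cost = evaluate(0.5, cues, COST_FALSE_ALARM, COST_MISS)
biased_threshold = cost_minimizing_threshold(COST_FALSE_ALARM, COST_MISS)
biased_errors, biased_cost = evaluate(biased_threshold, cues,
                                      COST_FALSE_ALARM, COST_MISS)

print(f"unbiased rule: errors = {unbiased_errors:.2f}, cost = {unbiased_cost:.2f}")
print(f"biased rule:   errors = {biased_errors:.2f}, cost = {biased_cost:.2f}")
```

With these made-up numbers, the biased rule commits more errors than the accuracy-oriented rule but incurs a much lower total cost, which is the sense in which a systematic bias can be adaptive when the costs of the two errors are asymmetric.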

9. Causation

The study of the causal mechanisms related to behavior, with an evolution-minded perspective, has been the least controversial and most developed area of inquiry. There is, however, an important aspect of Tinbergen’s approach that has been neglected. In his original paper, Tinbergen supported a multilevel approach to understand the mechanisms producing behavior, “ranging from the behavior of the individual and even of supra-individual societies all the way down to Molecular Biology” [21].

The multilevel strategy has not been widely implemented by researchers due to its complexity and lack of specific methodologies. However, recently, there have been attempts to link molecular, neurobiological, and hormonal behavior data to the physiology of metabolism and behavior. A major challenge facing scientists in this area is to combine properties that were isolated for the purposes of an experiment, but that were interconnected in ecological environments. Let me present an illustrative example.

Making a decision is a fundamental behavior of all animals, because in ecological situations it may mean the difference between life and death. When a decision is processed, the different alternatives must be weighed as quickly and as precisely as possible by integrating multiple mental processes in a context of uncertainty. Few other mental processes are as complex and challenging.

Behavioral ecologists have developed elaborate models assessing the behavioral strategies that are expected to maximize life-time fitness under specific constraints. These mathematical models have been of great value in behavioral and evolutionary ecology, where the techniques of game theory and optimization have been used to predict the endpoints of natural selection. This approach has revealed some important general principles of how organisms (including humans) should choose between different options, from food items to potential mates.

However, most evolutionary models of decision-making consider a highly simplified environment focused on a single problem or context that is controllable and lacks ambiguity. The models have usually focused on decision rules that maximize fitness in specific situations, leaving many critical factors unspecified. In contrast, natural environments are complex and continuously changing. Most of the stimuli that a brain receives are irrelevant and distracting, while relevant sensory input is often partial, ambiguous, and sometimes in conflict with previous interpretations of the situation. In this context, the weighting of different behavioral options changes very quickly over time.

As I already indicated in the previous section, observational studies show that patterns of decision-making in humans reveal some striking deviations from rational expectations. These include distorted beliefs about external events, inconsistent preferences that are modulated by experience and current context, and apparent violations of the axioms of rational choice theory. These observations seem difficult to reconcile with the fundamental biological concept of natural selection as an optimizing process. Why would evolution produce such apparently irrational behavior?

A developing trend in the neuroscience of decision-making relies upon working with more natural ecological contexts, as well as establishing connections with other relevant issues, such as psychological mechanisms or evolutionary data. One example of this new trend is presented in a study (Fawcett et al., 2014), which suggests that deviations from fitness-maximization optimality may be the result of the rise of cognitive bias as an adaptation to deal with complex and unpredictable environments.

Indeed, the evolutionary rationale behind the usefulness of cognitive biases seems to be the ambiguity and unpredictability of natural environments, which require a certain degree of flexibility at the moment of adopting a particular behavior. Moreover, fluctuations in time and space of the environmental conditions are so strong and fast that these decisions must be readily flexible at any moment. Biases produce satisficing decisions, but on average, they perform well under all the situations individuals usually encounter.

10. Development

The most recent version of Darwinism, the Modern Synthesis, stated that transmission genetics was solely responsible for inheritance. However, over the last fifty years, the Modern Synthesis has been enriched with evidence supporting the role of development in the inheritance of phenotypes, which can be materialized throughout the lifetime of an organism. Evidence includes phenomena such as cytoplasmic effects, genomic imprinting, and ecological legacies. There are authors who also include culture in the new inheritance toolbox. The fact is that there are social and ecological factors that influence individual development, which have been seen to accumulate in successive generations. For instance, the transmission of behaviors (such as diet choice or foraging skills) in social animals is now known to be present in many species, including mammals, birds, reptiles, fishes, insects, and cephalopods.

In short, we now understand heredity as occurring not only due to DNA transmission, but also because parents transfer a variety of developmental resources that enable the reconstruction of developmental paths. These findings have changed our idea of heredity from genetic transmission at conception to a complex process that shapes development throughout the lifespan.

The brain is an extremely illustrative example of the importance of the developmental question, because it relies on maturation and learning. The brain is shaped by time and interactions with the environment. A certain neural component can take years to acquire a state in which it shows its adaptive value. This means that if we want to identify “what” was selected by evolution, we must assess the role of development in the selection process: Is it the final developed trait that was selected? Or was the trait in its potential form at the beginning of development? Take stereopsis, which is a typical developmental faculty. Could stereopsis have been selected for? Or was it a certain potential system that can be developed into stereopsis (and possibly other ones) that was selected for?

In addition, the brain provides another development factor, since it offers the possibility of learning. Learning depends on development to be implemented, but at the same time surpasses it by including a new set of potential traits that cannot be predicted by the initial state of the neural system. Has learning been selected because of its open-ended potential capabilities? Or has it been selected for a specific final learning ability?

Finally, the interactions with the environment add complexity and unpredictability to developmental assessments. It is difficult to assess the adaptive function of a fully developed trait in a modern environment, because the environment in which the trait was supposedly selected, and in connection with which its developmental trajectory and adaptive value would have to be examined, has already disappeared. Sometimes the relevant aspects of the modern environment are very similar, if not identical, to the environment in which a trait evolved. If so, one would expect that the trait’s features match the modern environment very well if it is an adaptation. If the modern environment is different in some critical way from the proposed evolutionary environment, the prediction is that the trait will fit better with the original evolutionary environment than the modern one.

The bottom line is that the complexity of development for studying the brain presents many challenges for researchers. There are, nevertheless, certain strategies that try to deal with it. A way of assessing the role of evolution in development is by focusing on the differential maturational trajectories among behaviors. This requires detailing the maturational stages of each faculty and how it depends on the interactions with the environment. Such information can provide us with certain clues. For example, a fixed maturational path of a behavior may indicate that it is the final stage that has been selected for. In addition, in the case of one potential mental system that develops into different behaviors, the researcher can identify the best candidate selected by identifying the one that is more reliably developed and/or shows better proficiency.

A common example of this sort of approach to developmental specificity is the language faculty. The general view now is that the ease with which children learn new words and the ways in which they generate syntactic structure indicate a biological preparedness and, hence, adaptation for language learning. However, one must be very careful about mental mechanisms justified with developmental evidence, even in such strong cases as with language.

In the case of language, we could face, for instance, a case of an exaptation that co-opts various neural mechanisms, already present, selected for other functions. Hence, even if the language faculty seems to be specifically and reliably developed, and it is highly proficient, it might be the case that it is not the product of a new mechanism. In this sense, as the mosaic theory of language evolution suggests, the language faculty could very well be an assemblage of mechanisms that shows an apparent singular developmental trajectory, which is in fact the sum of different developmental trajectories [22]. For the moment, even if the most widely held assumption about language is to consider it a perfect developmental specificity, there are alternative approaches that support these other possibilities.

11. Evolution

Over the last 60 years we have notably increased our understanding of the evolution of behavior. Many studies have been published providing evidence for many different behaviors that seem to be driving evolution in many animal species. The most suitable methodology (and probably the only one for the moment) that has been used in these studies to characterize the evolutionary place of a particular behavior in a species is the comparative method. The aim of comparative studies is to understand a present component or behavior in light of its phylogenetic history. The comparative method has been likened to a biologist’s time machine. It consists of studying different but related species to assess the evolutionary history and adaptive function of a particular trait. The comparative methodology is based on the fact that in phylogenetic history, each individual species can be seen at the same time sharing many adaptations with related species, as well as showing specific adaptations linked to their particular environments and adaptive problems. Hence, the comparison between different but related species can provide relevant clues to the origin and function of similar traits.

Classically, evolutionary biologists have applied the comparative method to two types of traits. The first deals with homologous traits, namely, traits that are related by descent from common ancestral species. Mammary glands are a typical homologous trait that all mammals share, while color vision is a homologous trait in primates. Evolutionary biologists use such traits to deduce the common ancestor of that clade. Note, though, that behavior is not a criterion for homology. A whale’s flipper and a human hand are homologous despite their radically different uses.

Analogous traits are the second type of traits used in the comparative method. An analogous trait is one that evolved independently in two separate lineages. For example, an analogous trait is color vision in primates and honeybees, or wings in flies, birds, and bats. Biologists use the term convergent evolution to refer to such similar, but at the same time independent, evolution and they use it to assess evolutionary hypotheses about function. Color vision, which has evolved independently in different species, can provide insights about its function and adaptive value.
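The distinction between homologous and analogous traits can be illustrated with a parsimony count on a phylogeny, a standard procedure in comparative biology that is not specific to the studies discussed in this article. The Python sketch below is a deliberately simplified illustration: the six-species tree and the presence/absence coding of the traits are toy assumptions, and real analyses use far larger trees and explicit statistical models.

```python
def parsimony(tree, trait):
    """Small-parsimony pass over a binary tree.

    `tree` is either a species name (leaf) or a pair (left, right).
    Returns (set of most parsimonious states at this node,
             minimum number of state changes below this node).
    """
    if isinstance(tree, str):
        return {trait[tree]}, 0
    left_states, left_changes = parsimony(tree[0], trait)
    right_states, right_changes = parsimony(tree[1], trait)
    changes = left_changes + right_changes
    shared = left_states & right_states
    if shared:
        # Both subtrees agree on at least one state: no extra change needed.
        return shared, changes
    # The subtrees disagree: one additional change is required.
    return left_states | right_states, changes + 1

# Toy phylogeny (simplified): mammals on one side, reptiles and birds on the other.
mammals = ("bat", ("mouse", "human"))
sauropsids = (("bird", "crocodile"), "lizard")
tree = (mammals, sauropsids)

# 1 = trait present, 0 = trait absent (illustrative coding).
wings = {"bat": 1, "mouse": 0, "human": 0, "bird": 1, "crocodile": 0, "lizard": 0}
mammary_glands = {"bat": 1, "mouse": 1, "human": 1,
                  "bird": 0, "crocodile": 0, "lizard": 0}

wing_root, wing_changes = parsimony(tree, wings)
gland_root, gland_changes = parsimony(tree, mammary_glands)

print("wings:          root states", wing_root, "changes", wing_changes)
print("mammary glands: root states", gland_root, "changes", gland_changes)
```

On this toy tree, wings require two changes from a wingless root, which is the signature of independent (analogous) origins in bats and birds, whereas mammary glands require a single change, consistent with one origin shared by descent among the mammals.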

In neuroscience, the idea is to use the phylogenetic relatedness between species to assess the origins and evolution of a particular behavior or mental component. The researcher can, for example, assess the homology or analogy between morphological (e.g., amygdala) or functional (e.g., fear) traits underlying specific behaviors or mental components to describe a possible phylogenetic history. Let us see one illustrative study of this approach.

Tecumseh Fitch [22] published a particularly interesting hypothesis about the evolution of the neural components of language processing. The author focused on the evolution of language traits in humans and applied the comparative approach by looking at the similarities and differences between human brains and brains of other primates. Fitch analyzed how the particular characteristics of Broca’s area arose in the human lineage after it separated from the last common ancestor with other primates. The aim of his study was to present evidence supporting the idea that language processing is, in my terms, a bricoleur and a satisficing adaptation, rather than a new trait designed for language.

The first type of evidence that Fitch presents has to do with the neuroanatomy of speech motor control. He begins by describing the differences between the human vocal tract and that of other primates. Over the course of evolution, the human larynx has lowered its position, leaving a much larger pharyngeal cavity than that found in other primates. In 1969, Phillip Lieberman used this evolutionary fact to advance the laryngeal descent theory of vowel production, by which he suggested that the full human vowel inventory required the lowering of the larynx. The theory explained why non-human primates could not talk, and it also explained the association between laryngeal descent and complex social behavior via the appearance of language. However, and according to new evidence, the laryngeal descent theory seems to be wrong; primates can indeed vocalize.

In contrast to the laryngeal descent theory, Fitch suggests that what distinguishes humans from other primates is laryngeal control. Direct cortico-spinal connections to the motor neurons that innervate the larynx are, according to Fitch, the innovation that allows humans direct control over the larynx, affording speech its versatility. The cortico-spinal tract exists in other primates and mammals, but their larynx control is exerted through indirect multi-synaptic connections, which is much more inefficient.

Fitch suggests that the non-human primate cortico-spinal tract could be seen as a pre-adaptation, which was later modified in the hominin phylogenetic line to become a direct control of the larynx. Therefore, the adaptation for larynx control would be just a modification of an already present trait, rather than a completely new one, thus supporting the assumption of a bricoleur constraint.

The second piece of evidence that Fitch presents concerns another neuroanatomical adaptation that has been linked to human speech. The arcuate fasciculus is an intracortical white matter bundle connecting Wernicke’s and Broca’s areas. According to Fitch, the human arcuate fasciculus could also be a pre-adaptation for vocal imitation. New neuroimaging techniques, such as DTI, have provided evidence that there are significant differences between humans and other primates regarding this intracortical white-matter bundle but, at the same time, confirm that it is not a new trait, but a brain structure that could have been modified to serve linguistic functions.

The final piece of evidence that Fitch introduces involves the evolution of Broca’s area. Broca’s region has usually been presented as a neural circuitry specialized for syntax. Indeed, more than a century of neuroscientific research has made it extremely clear that Broca’s area plays a critical role in language, and more specifically, in syntax. However, what is not so clear at this moment, in light of some recent studies, is whether the function of Broca’s area is syntax, or if syntax is a by-product of a different Broca’s function.

Fitch echoes the evidence showing that Broca’s area is involved in a variety of behaviors, such as, among others, tool use, action understanding and recognition, gestural communication, intention understanding, and imitation. Furthermore, brain imaging studies and lesion cases show that Broca’s region is involved both in language production and in the management of action sequences. Comparative approaches have also shown that homologous areas in monkeys manage sequences of motor actions.

The involvement of Broca’s area in such a large set of activities seems to indicate that the syntax specialization hypothesis should be revised. Indeed, recent findings suggest that this region is involved in what some have called the syntax of action/motor sequences [23]. In this sense, some types of hierarchical processing behaviors, such as linguistic forms and motor actions, would rely on the same computations carried out by Broca’s area.

In support of this revision, Fitch indicates that there is an intrinsic complexity of the brain region that has been largely overlooked. A variety of studies have shown that Broca’s area can be broken down into multiple sub-regions, distinguishable on the basis of cytoarchitecture, receptor distributions, and connectivity. The differential motor planning and control activities exerted by each of these sub-regions could explain the differential computations of human language syntax. More specifically, according to Fitch, the premotor functions of the subregion Brodmann area 6 and the deep frontal operculum could represent the evolution towards linguistic functions through a “granularization” of gray matter, while at the same time strengthening pre-existing links to other regions of the cortex involved in linguistic processes. In contrast, Brodmann areas 44–45 could represent a domain dealing with the management of sequences.

Similarly, as in the case of the cortico-spinal hypothesis, Fitch suggests that Broca’s area could be seen as a modification of a pre-adaptation for other functions than syntax. The idea would be that the current Broca’s area works as a computational mechanism that integrates pre-existing functional units (motor actions, vocalizations, or visual objects) to freely create a discrete infinity of modality-independent cognitive structures such as human language requires.

The evolutionary implication of this hypothesis would be that the sequential management provided by Broca’s area was “exaptated” by all these different functions, including language. The fact that all these functions did not emerge simultaneously in evolution points to Broca’s area as having been reused to serve each of the new cognitive faculties. In summary, Broca’s area seems to represent an excellent bricoleur version of the neural basis of language.

12. Conclusions

Generally, the importance of evolution in psychology and neuroscience is widely recognized by researchers. However, regardless of its popularity, the fact is that evolutionary methods are difficult to apply in mainstream research in psychology and neuroscience. There are different reasons for this but, in my opinion, the most important one is that evolution-minded neuroscientific research is tentative, slow, and messy. These are the worst enemies in academia, where the pressure for productivity constrains researchers to publish as much, as clear-cut, and as fast as possible. Yet, evolution is an unavoidable constraint for understanding the brain. If we do not assume it necessary to address the brain as a product of evolution, we will waste our time. Neuroscience can only be tentative, slow, and messy, because the original adaptive contribution of specific mental components to the survival and reproduction of the species is hidden in our evolutionary history. Every mental component has a particular evolutionary history of its own, grounded in a selective situation that, for the most part, has long passed. Furthermore, every mental component is also the product of previous versions that have also had an evolution of their own. Hence its slow, tentative, and messy nature. Be that as it may, and however difficult it might seem, the study of the brain can only be addressed via evolutionary means that consider all the complexities of any sound evolutionary explanation.

Funding

This project has received funding from “la Caixa” Foundation (project code LCF/PR/HR19/52160001), and from FEDER/Ministerio de Ciencia e Innovación—Agencia Estatal de Investigación (RTI2018-093952-B-I00).

Acknowledgments

I would like to thank Eva Juarros and Ricardo Tellez for their useful comments on an early version of this manuscript and Helena Kruyer for her help in obtaining the final version.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Dobzhansky, T. Nothing in Biology Makes Sense except in the Light of Evolution. Am. Biol. Teach. 1973, 35, 125–129.

2. Gould, S.J.; Vrba, E.S. Exaptation—A Missing Term in the Science of Form. Paleobiology 1982, 8, 4–15.

3. Croston, R.; Branch, C.L.; Kozlovsky, D.Y.; Dukas, R.; Pravosudov, V.V. Heritability and the evolution of cognitive traits. Behav. Ecol. 2015, 26, 1447–1459.

4. Lloyd, E.A. Adaptationism and the Logic of Research Questions: How to Think Clearly About Evolutionary Causes. Biol. Theory 2015, 10, 343–362.

5. Heyes, C.; Chater, N.; Dwyer, D.M. Sinking in: The Peripheral Baldwinisation of Human Cognition. Trends Cogn. Sci. 2020, 24, 884–899.

6. Vilarroya, O. From functional ‘mess’ to bounded functionality. Minds Mach. 2001, 11, 239–256.

7. Simon, H.A. The Sciences of the Artificial, 2nd ed.; MIT Press: Cambridge, MA, USA, 1981.

8. Jacob, F. Evolution and tinkering. Science 1977, 196, 1161–1166.

9. Anderson, M.L. Neural reuse: A fundamental organizational principle of the brain. Behav. Brain Sci. 2010, 33, 245–266.

10. Mouden, C.E.; Burton-Chellew, M.; Gardner, A.; West, S.A. What do humans maximize? In Evolution and Rationality: Decisions, Co-Operation and Strategic Behaviour; Cambridge University Press: Cambridge, UK, 2012.

11. Bateson, M. Optimistic and pessimistic biases: A primer for behavioural ecologists. Curr. Opin. Behav. Sci. 2016, 12, 115–121.

12. Fisher, S.E. Evolution of language: Lessons from the genome. Psychon. Bull. Rev. 2017, 24, 34–40.

13. Franchini, L.F.; Pollard, K.S. Genomic approaches to studying human-specific developmental traits. Development 2015, 142, 3100–3112.

14. Sousa, A.M.M.; Meyer, K.A.; Santpere, G.; Gulden, F.O.; Sestan, N. Evolution of the Human Nervous System Function, Structure, and Development. Cell 2017, 170, 226–247.

15. Wagner, A. Information Theory Can Help Quantify the Potential of New Phenotypes to Originate as Exaptations. Front. Ecol. Evol. 2020, 8, 457.

16. Watt, W.B. Specific-gene studies of evolutionary mechanisms in an age of genome-wide surveying. Ann. N. Y. Acad. Sci. 2013, 1289, 1–17.

17. Emberts, Z.; Mary, C.M.S.; Howard, C.C.; Forthman, M.; Bateman, P.W.; Somjee, U.; Hwang, W.S.; Li, D.; Kimball, R.T.; Miller, C.W. The evolution of autotomy in leaf-footed bugs. Evolution 2020, 74, 897–910.

18. Lires, A.I.; Soto, I.M.; Gómez, R.O. Walk before you jump: New insights on early frog locomotion from the oldest known salientian. Paleobiology 2016, 42, 612–623.

19. Wei, Y.; de Lange, S.C.; Scholtens, L.H.; Watanabe, K.; Ardesch, D.J.; Jansen, P.R.; Savage, J.E.; Li, L.; Preuss, T.M.; Rilling, J.K.; et al. Genetic mapping and evolutionary analysis of human-expanded cognitive networks. Nat. Commun. 2019, 10, 4839.

20. Johnson, D.D.P.; Blumstein, D.T.; Fowler, J.H.; Haselton, M.G. The evolution of error: Error management, cognitive constraints, and adaptive decision-making biases. Trends Ecol. Evol. 2013, 28, 474–481.

21. Tinbergen, N. On aims and methods of Ethology. Z. Tierpsychol. 1963, 20, 410–433.

22. Fitch, W.T. The evolution of syntax: An exaptationist perspective. Front. Evol. Neurosci. 2011, 3, 9.

23. Ellis, R. Bodies and Other Objects: The Sensorimotor Foundations of Cognition; Cambridge University Press: Cambridge, UK, 2018.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).